Overview of Computer Science

advertisement

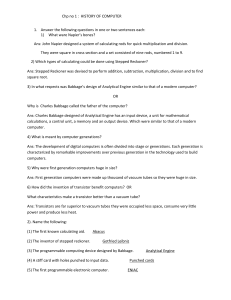

CSC 101, Summer 2011
History of Computing Overview
Computer Science: The Big Picture
Lecture 2, July 7, 2011

Announcements
• The later slides in this lecture are meant to complement the video “Giant Brains,” which we will watch in class today
• Writing Assignment #1 due Monday
  – The assignment can be found on the course webpage
  – Print out the assignment and hand it in to me before class
  – Bring an electronic copy with you to Lab 1 on Tuesday

Objectives
• Video on the History of Computing
• What is “information”?
  – What is “digital information”?
• Layers of abstraction in computing systems
• Understanding Moore’s Law
• Reading:
  – PotS: Preface (VII–XI)
  – CSI: 2–17 / 24–27
• http://www.thocp.net/timeline/timeline.htm

Information
• How does “information” differ from “data”?
  – Data:
    • Basic values or facts
    • No special meaning
  – Information:
    • Knowledge derived from study, experience, or instruction
    • Facts with significance or meaning
    • What is known beyond chance predictions
    • Data that has been organized or processed in a way that makes it useful
    • Communication that has value because it informs, helping to answer questions and solve problems
• Information is useless unless it can be communicated
  – Vocalizations
  – Symbols
  – Spoken and written languages
  – Analog and digital technologies

Information
• Some ways to carry and exchange information
  – Books and newspapers; paper documents
  – Numbers and numerical data
  – Images, music, and video
  – Artistic expressions
  – Live broadcasts
    • Radio, television, etc.
  – Direct communications
    • Face-to-face, telephone, postal mail, email, IM, etc.
• Collectively often referred to as “media”

Digital Information
• Many different kinds of information; many different types of media
• All can be represented “digitally”
  – A pattern of simple numbers (digits)
    • Could be any set of digits
  – “0” and “1” are particularly simple and easy for a machine
    • “binary”
  – Binary data is the universal language of today’s computers
• The process of converting information into a numeric (digital) representation is called digitization

Digital Information
• Once information has been converted into digits, it’s just a bunch of numbers.
• What can we do with a file full of numbers? Since it’s just numbers, it can be:
  – Easily stored (no matter what kind of information)
  – Easily copied (at full quality)
  – Easily combined or modified (edited)
  – Easily transmitted or shared
  – Easily taken (copied) without permission

Layers of Abstraction
• An abstraction is a kind of simplification
  – A way to think of something without worrying about specific details
  – Consider an automobile: you can drive one without knowing how its engine works
• Abstractions are an important part of computing
  – When you send an email, you don’t need to think about all the details of how it actually gets sent
• This course will look at many different levels of abstraction

Layers of Abstraction
• Computing systems can be considered at many different levels, like the layers of an onion
[Figure: nested layers, from outermost to innermost: Communications, Applications, Operating Systems, Programming, Hardware, Information]

Computer Science
• A computer is any device that processes information based on some instructions
  – Information goes in (input)
  – That information is processed
  – Different information comes out (output)
• What do we mean by “computer science”?

  “Computer science is no more about computers than astronomy is about telescopes.”
  (Edsger Dijkstra, 1930–2002)
  – It’s not just about writing or using programs
  – It’s not just about the Web or the Internet or email or word processing
  – It’s not just about the latest Xbox

Computer Science vs. Information Technology
• Computer Science (CS) is the discipline that studies the theoretical foundations of how information is described and transformed
• Information Technology (IT) involves the development and implementation of processes and tools (both hardware and software) for describing and transforming information

Computer Science vs. Information Technology
• Many aspects of “computer science” and “information technology”
  – Programming concepts and languages; software engineering
  – Computer hardware engineering (architecture)
  – Computation theory; algorithms and data structures
  – Information management (informatics)
  – E-commerce and information protection; security and privacy
  – Etc., etc., etc.
• Through the implementations of information technologies, computer science has a direct impact on almost every aspect of our daily lives
  – We all, then, benefit from an understanding of the field

Electric vs. Electronic
• The very first computing devices used mechanical levers and dials
• After the advent of electricity, electrical relays (invented in 1835) came into use
  – A great improvement over mechanical levers and gears
  – Slow, heavy, prone to fouling
• In the 1940s, electronic vacuum tubes began to be used
  – Faster
  – Very hot and prone to failure

Electric vs. Electronic
• The transistor, invented in 1947, began replacing vacuum tubes in the 1950s
  – “Solid state”
    • No vacuum tubes
    • No mechanical devices (relays)
  – Smaller, faster, more reliable, and cheaper than vacuum tubes
• Integrated circuits (first built in 1958–59) came into use in the 1960s
  – Large numbers of transistors on a single chip of silicon
• The Intel 4004 CPU had 2,250 transistors (1971)

Electric vs. Electronic
• VLSI (very large scale integration) circuits are now common
  – Over 1,000,000,000 transistors on a single, tiny chip
[Figure: chip photo with scale marks of ~1 inch and ~0.25 inch]

Price–Performance Over Time

| Year | Name | Performance (adds/sec) | Memory (KB) | Price (2010 $)* | Performance/Price (vs. UNIVAC) |
|------|------|------------------------|-------------|-----------------|--------------------------------|
| 1951 | UNIVAC I | 1,900 | 48 | 8,400,000 | 1 |
| 1964 | IBM S/360 (mainframe) | 500,000 | 64 | 7,030,000 | 314 |
| 1966 | PDP-8 (minicomputer) | 33,000 | 4 | 111,000 | 1,300 |
| 1976 | Cray-1 (supercomputer) | 166,000,000 | 32,768 | 15,300,000 | 48,000 |
| 1981 | IBM PC (original PC) | 240,000 | 256 | 7,200 | 147,000 |
| 1991 | HP9000/750 (workstation) | 50,000,000 | 16,384 | 11,800 | 18,700,000 |
| 2010 | Apple iPhone 4 | 1,000,000,000 | 524,000 | 700 | 6,320,000,000 |
| 2010 | Lenovo T410 ThinkPad (WFU std issue) | 2,400,000,000 | 3,146,000 | 1,300 | 8,200,000,000 |

* Adjusted for inflation to 2010 based on the consumer price index

Moore’s Law
• Gordon Moore, co-founder of Intel
• Predicted in 1965 that the number of transistors on a chip (and, roughly, its speed) would double about every year
• Actual: the number of transistors doubles about every 2 years
• Performance/price (previous slide) doubles about every 1.7 years
http://www.intel.com/technology/mooreslaw/; http://en.wikipedia.org/wiki/Moore's_law

Extrapolating Moore’s Law
• How long can this continue?
  – Transistors need to be smaller to fit more on a chip
  – What happens if they need to be smaller than an atom?
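The Performance/Price column and the doubling-time figures are simple arithmetic on the Price–Performance table, so they can be checked directly. The Python sketch below takes the table's numbers as given. The helper names (`perf_per_price_vs_univac`, `doubling_time_years`, `moore_projection`) are hypothetical. The 2-year doubling period and the 2,250-transistor Intel 4004 starting point come from the slides, not from any Intel data set.

```python
import math

# (year, name, adds per second, price in 2010 dollars), copied from the table
MACHINES = [
    (1951, "UNIVAC I", 1_900, 8_400_000),
    (1964, "IBM S/360", 500_000, 7_030_000),
    (1966, "PDP-8", 33_000, 111_000),
    (1976, "Cray-1", 166_000_000, 15_300_000),
    (1981, "IBM PC", 240_000, 7_200),
    (1991, "HP9000/750", 50_000_000, 11_800),
    (2010, "Apple iPhone 4", 1_000_000_000, 700),
    (2010, "Lenovo T410 ThinkPad", 2_400_000_000, 1_300),
]


def perf_per_price_vs_univac(adds_per_sec, price):
    """Performance/price of a machine, normalized so that UNIVAC I == 1."""
    _, _, base_perf, base_price = MACHINES[0]
    return (adds_per_sec / price) / (base_perf / base_price)


def doubling_time_years(ratio, years):
    """Years per doubling, if a quantity grew by `ratio` over `years` at a steady rate."""
    return years / math.log2(ratio)


def moore_projection(start_count, start_year, end_year, doubling_years=2.0):
    """Transistor count projected forward, doubling every `doubling_years`."""
    return start_count * 2 ** ((end_year - start_year) / doubling_years)


if __name__ == "__main__":
    # Recompute the table's last column (the table rounds these values)
    for year, name, perf, price in MACHINES:
        print(f"{year} {name:<22} {perf_per_price_vs_univac(perf, price):>16,.0f}")

    # Implied doubling time of performance/price, UNIVAC I (1951) to ThinkPad (2010)
    ratio = perf_per_price_vs_univac(2_400_000_000, 1_300)
    print(f"doubling time: {doubling_time_years(ratio, 2010 - 1951):.2f} years")

    # Intel 4004 (2,250 transistors, 1971) projected 40 years ahead
    print(f"2011 projection: {moore_projection(2_250, 1971, 2011):,.0f} transistors")
```

Running the script reproduces the table's rounded column, for example about 314 for the IBM S/360. It implies a performance/price doubling time of roughly 1.8 years between UNIVAC I and the 2010 ThinkPad, which is in the same range as the slide's 1.7-year figure. Doubling the 4004's 2,250 transistors every 2 years for 40 years gives about 2.36 billion, which is consistent with "over 1,000,000,000 transistors on a single, tiny chip."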
A Brief Taxonomy of Computers
• Servers
  – Computers designed to provide software and other resources to other computers over a network
• Workstations
  – High-end computers with extensive power for specialized applications by individual users
• Personal computers
  – General-purpose computers for single users

A Brief Taxonomy of Computers
• Portable computers
  – Devices that can easily be moved
    • Laptop (notebook) computers
    • Handheld computers
• Embedded computers
  – Special-purpose devices with non-standard interfaces
    • In-car navigation systems
    • Home security systems
    • Building management systems
    • Set-top cable TV boxes
    • Game consoles
    • Etc., etc.

Clusters and Grids
• Many inexpensive computers working together to solve large problems
  – Cluster: all in one location
  – Grid (“distributed computing”): many locations, but working together

Clusters and Grids
• The “Gravity Grid” at UMass Dartmouth is a cluster of PS3 boxes for simulating black hole dynamics
  http://gravity.phy.umassd.edu/ps3.html

Computer Technology Overview
• The history of computer technology can be divided into five generations:
  – Early: abacus, mechanically driven / programmed, Turing machine
  – 1st: Vacuum tubes used to store information
  – 2nd: Advent of the transistor / immediate-access memory
  – 3rd: Integrated circuits / Moore’s Law / terminals
  – 4th: Large-scale integration / personal computers
• A recurring theme: need (often military) pushing innovation
  – WWII pushed the creation of the Harvard Mark I and ENIAC
• The rest of the slides in this lecture are meant to complement the video we watched in class today.
• You might find some of the information useful as a review

Origins of Digital Computers
• The first digital computer: our fingers (“digits”)
• But fingers aren’t sufficient for big problems

Origins of Digital Computers
• The earliest computing devices were designed to aid numeric computations
• The abacus was first developed in Babylonia over 3,000 years ago
• Decimal number system: 11th century
• Algebraic symbols: 16th century

Early Calculating Machines
• Blaise Pascal (France, 1623–1662)
  – Pascaline: addition and subtraction only

Early Calculating Machines
• Gottfried Wilhelm von Leibniz (Germany, 1646–1716)
  – Stepped Reckoner
  – All four arithmetic functions (+ – × ÷)

Charles Babbage
• England, 1791–1871
• First true pioneer of modern digital computing machines
• Worked on two prototype calculating machines
  – Difference Engine
  – Analytical Engine

Babbage’s Difference Engine
• A special-purpose machine (designed to calculate and print navigational tables)
• Used the “method of differences” to evaluate polynomials and tabulate their values
• The manufacturing technology of the day was insufficient, so the Difference Engine was never completed in Babbage’s lifetime

Babbage’s Analytical Engine
• A general-purpose (programmable) machine
• Based on the idea of the Jacquard loom
  – Fabric pattern stored on punch cards
• Had the basic parts of every modern computer
  – Input and output
  – “Mill” (processor)
  – “Store” (memory)

Babbage’s Legacy
• Babbage’s Analytical Engine was perhaps the first design for a general-purpose, digital computing device
• But his ideas and achievements were forgotten for many years

Konrad Zuse
• Germany, 1910–1995
• Between 1936 and 1943, designed a number of general-purpose computing machines
• Used electromechanical relay switches
  – (Not electronic yet)
  – No longer based on levers and gears
• Offered his technology to the German government to help crack Allied codes, but was turned down
  – The Nazi military felt their superior air power was all they needed to win WWII

Howard Aiken and Grace Hopper
• Aiken (1900–1973) designed, and Hopper (1906–1992) programmed, the Harvard Mark I (1944)
  – Used by the Navy for ballistic and gunnery table calculations
  – Electromechanical relays
• Hopper’s group found the first actual computer bug: a moth that had caused the Harvard Mark II to fail (1947)
• Hopper also developed one of the first compilers (more on compilers later)

The Harvard Mark I
[Figure: photograph of the Harvard Mark I]

John V. Atanasoff
• US, 1903–1995
• With grad student Clifford Berry, built the ABC machine (1939)
• First electronic digital computing machine (vacuum tubes instead of relays: smaller and faster)
• A special-purpose machine (for solving simultaneous equations)
• Among the first machines to use binary numbers

John Mauchly and J. Presper Eckert
• Mauchly (1907–1980) and Eckert (1919–1995)
• Headed the ENIAC team at U. Penn
• ENIAC (Electronic Numerical Integrator and Computer) was the first electronic, general-purpose, digital computer
• Manually programmed
• Commissioned by the US Army during WWII for computing ballistic firing tables

ENIAC
• Completed in 1946 (after the end of the war)
• Massive scale (30 tons) and redundant design
• Data encoded internally in decimal
• Detailed histories of ENIAC and other early computing devices can be found at http://ei.cs.vt.edu/~history/ENIAC.Richey.HTML and http://ftp.arl.mil/~mike/comphist/

ENIAC
• Manually programmed using boards, switches, and “digit trays”
• Programmed by a group of female mathematicians working for the Army as “clerks”
  – The first six were inducted into the Women in Technology International Hall of Fame half a century later (1997)

John von Neumann
• Hungary, 1903–1957 (moved to the US in 1930)
• Pronounced “NOI-mahn”
• Developed the stored-program concept
  – No more manual programming!
  – Published his ideas in 1945
• Helped Mauchly and Eckert design EDVAC (Electronic Discrete Variable Automatic Computer) at U. Penn (1949)
• He later conceived of the Cold War principle of Mutual Assured Destruction (MAD)

John von Neumann
• Designed the IAS machine (1951) at Princeton’s Institute for Advanced Study (IAS)
  – Implemented a new model for computer organization: “the von Neumann architecture”
• We’ll cover the von Neumann architecture in detail later

Alan Turing
• Great Britain, 1912–1954
• Developed a simple, abstract, universal machine model for determining computability

Alan Turing
• During WWII, led Hut 8 at Bletchley Park, which broke German naval Enigma messages (intelligence codenamed ULTRA)
• Colossus (1943), designed by Tommy Flowers, was built at Bletchley Park to break the German Lorenz cipher (not Enigma)
  – Details remained classified for decades
  – About 2,400 vacuum tubes (Mark 2)
• Often considered a major breakthrough towards ending WWII

Commercial Computers
• In the first half of the 20th century, advances in computational technology came mostly from research projects
• Large investments in these research projects came from wartime needs during WWII
• After the war, computational technologies began to be developed into commercial products

Commercial Computers
• UNIVAC I (1951)
  – Mauchly & Eckert
  – One of the first commercial, general-purpose computer systems (the first in the US)
  – Vacuum tubes
  – Liquid mercury memory tanks

Commercial Computers
• IBM System/360 (1964)
  – Solid-state circuits (transistors and integrated circuits)
  – Family of compatible models
  – Defined the idea of “mainframe” for decades

Commercial Computers
• DEC PDP series (1960s–1970s)
  – “Minicomputers”
  – Mainframe performance at a fraction of the cost
• Cray-1 (1976)
  – “Supercomputers”
  – High-performance systems for “number crunching”
  – Advanced designs
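The stored-program concept credited to von Neumann means that a program is just numbers kept in the same memory as its data. The machine can therefore fetch, decode, and execute instructions without any rewiring. The Python sketch below shows this with a toy accumulator machine. Its seven opcodes and the encoding `opcode * 100 + address` are hypothetical, chosen for readability, and are not the EDVAC or IAS instruction set. The function names `run` and `multiply_program` are likewise illustrative.

```python
# Hypothetical opcodes for a toy machine: instruction = opcode * 100 + address
HALT, LOAD, ADD, SUB, STORE, JUMP, JUMPZ = range(7)


def run(memory, max_steps=10_000):
    """Fetch-decode-execute loop over a single memory holding code and data."""
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    for _ in range(max_steps):
        instruction = memory[pc]                 # fetch
        opcode, addr = divmod(instruction, 100)  # decode
        pc += 1
        if opcode == HALT:                       # execute
            return memory
        elif opcode == LOAD:
            acc = memory[addr]
        elif opcode == ADD:
            acc += memory[addr]
        elif opcode == SUB:
            acc -= memory[addr]
        elif opcode == STORE:
            memory[addr] = acc
        elif opcode == JUMP:
            pc = addr
        elif opcode == JUMPZ:
            if acc == 0:
                pc = addr
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")
    raise RuntimeError("step limit reached; program may not halt")


def multiply_program(a, b):
    """Memory image that computes a * b by repeated addition (b must be >= 0)."""
    memory = [0] * 24
    code = [
        LOAD * 100 + 21,   # 0: acc = count
        JUMPZ * 100 + 8,   # 1: if count == 0, go to HALT
        SUB * 100 + 23,    # 2: acc = count - 1
        STORE * 100 + 21,  # 3: count = acc
        LOAD * 100 + 22,   # 4: acc = result
        ADD * 100 + 20,    # 5: acc = result + a
        STORE * 100 + 22,  # 6: result = acc
        JUMP * 100 + 0,    # 7: back to the loop test
        HALT * 100,        # 8: stop
    ]
    memory[: len(code)] = code
    # Data lives in the same memory: a, count (= b), result, constant 1
    memory[20], memory[21], memory[22], memory[23] = a, b, 0, 1
    return memory


if __name__ == "__main__":
    print(run(multiply_program(6, 7))[22])  # 42
```

The instructions at addresses 0–8 are ordinary integers in the same list as the operands at addresses 20–23. Changing the program therefore means changing memory contents rather than replugging cables, as was required on ENIAC.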