B029 A PRACTICAL, GRADIENT-BASED APPROACH FOR PARAMETER SELECTION IN HISTORY MATCHING

advertisement

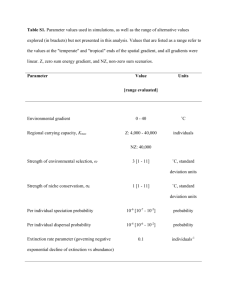

1 B029 A PRACTICAL, GRADIENT-BASED APPROACH FOR PARAMETER SELECTION IN HISTORY MATCHING Alberto Cominelli, Fabrizio Ferdinandi ENI Exploration and Production Division Abstract Reservoir management is based on the prediction of reservoir performance by means of numerical simulation models. Reliable predictions require that the numerical model mimics the known production history of the field. Then, the numerical model is iteratively modified to match the production data with the simulation. This process is termed history matching (HM). Mathematically, history matching can be seen as an optimisation problem where the target is to minimize an objective function quantifying the misfit between observed and simulated production data. One of the main problems in history matching is the choice of an effective parameterization: a set of reservoir properties that can be plausibly altered to get an history matched model. This issue is known as parameter identification problem. In this paper we propose a practical implementation of a multi-scale approach to identify effective parameterizations in real-life HM problems. The approach is based on the availability of gradient simulators, capable of providing the user with derivatives of the objective function with respect to the parameters at hand. Those derivatives can then be used in a multi-scale setting to define a sequence of richer and richer parameterisations. At each step of the sequence the matching of 9th the production data is improved. The methodology has been applied to history match the simulation model of a North-Sea oil reservoir. The proposed methodology can be considered a practical solution for parameter identification problems in many real cases. This until sound methodologies, primarily adaptive multi scale estimation of parameters, will become available in commercial software programs. Introduction Predictions of the reservoir behaviour require the definition of subsurface properties at the scale of the simulation block. At this scale, a reliable description of the porous media require to build a reservoir model by integrating of all the available source of data. By their nature, we can categorise the data as prior and production data. The former type of data can be defined as the set of information on the porous media that can be considered as “direct” measure of the reservoir properties. The latter type of data includes dynamic data collected at wells, e.g. water cut, shut-in pressure and time-lapse data. Prior data can be incorporated directly in the set-up of the reservoir model and well established reservoir characterisation workflows are widely used to capture in this procedure the inherent heterogeneity. Production data can be incorporated in the definition of the model only by HM the European Conference on the Mathematics of Oil Recovery — Cannes, France, 30 August - 2 September 2004 2 simulation model. The agreement between simulation and observations can be quantified by using a weighted leastsquares type objective function J, J= 1 T −1 R C R, 2 (1) where R is the residual vector which i-th entry is the difference between the observed (o) and simulated (c) production datum for the i-th measure, Ri = oi − ci , (2) while C is the correlation matrix, accounting for both measurement and modeling error. The matrix C is symmetric and positive definite. Notably, in many practical circumstances the matrix C is assumed to be diagonal. HM can then be seen as the minimization of the function J by perturbing the model parameters x: min l ≤ x ≤u J ( x) = min l ≤ x ≤u 1 R( x)T C −1 R( x) (3) 2 where l and u are lower and upper bounds for the parameters vector x. The problem in equation (3) is as an inverse problem, where the target is to recovery some model parameters by matching the production data. As inverse problem, HM is ill-posed: errors affect both the model and measurement process, the production data do not include enough information to condition all the possible model parameters down to the simulator cell scale. Ill-posedness can impact the reliability of the production forecast because models very similar from an HM point of view may differ significantly as far as the future of the reservoir is concerned. Setting-up the problem in a Bayesian framework, then adding a quadratic term which accounts for the prior information on the model, can provide the required regularization to turn the problem into a well-posed one. However in real studies a prior term can hardly be defined and the choice is to restrict the set of possible parameters by means of some kind of zonation, i.e. a division of reservoir cells into zone of equal permeability or porosity. Most of the time that is done with some sensitivity simulation usually supported by the physical knowledge of the field at hand. Then minimization methods for leastsquares problems are often far from being effective in practical cases. Moreover, when an acceptable result is achieved, the trade-off is often an over-parameterization of the model, with large uncertainty in the estimated parameters. Hence, there is a widely recognized need of practical, cost effective tools and methodology for parameter identification. The methodology to identify HM parameters applied in this work belongs to the family of multiscale parameter estimation (MPE) techniques. MPE has become really attracting as way to estimate parameters in history-matching type problems. It usually provides with a connection between the solution of the inverse problem and the scale lengths associated to the production data. In this work we implement the idea proposed by Chavent et al [6] to use fine-scale derivatives of the objective function J as an engine to define a richer and cost-effective parameterization in a multiscale process. The paper proceeds as follows: after a review of multi-scale parameter estimations, the gradient based methodology is presented, an application on a real case is described and, finally, conclusions are drawn on the basis of the result obtained. Multiscale Parameter Estimation Multiscale estimation aims to avoid overparameterization by adding parameters in a hierarchical way. A multiscale estimation process starts from a rather poor coarse scale parameterization, typically one parameter for the whole reservoir. Then, ordinary multiscale estimation (OME) 3 proceeds by adding at a given level of the hierarchical evaluation all the possible parameters[1][2]. In this way at the n-th stage of the estimation process the optimization problem has 2dn degrees of freedom in a ddimensional case, see Figure 1. This approach can be effective as regards to the solution of the minimization problem but it does not ensure that overparameterization does not occur. Moreover, in real 3D problems the dimension of the optimization problems that has to be solved at each stage of OME increases rapidly. a) c) b) d) Figure 1 - Ordinary Multiscale Estimation: in a 32x32 cells model (a) 1-4-16 parameters (b to d) are added in three steps of the estimation sequence. With adaptive multi-scale estimation[4][6] (AME) the parameters space is not refined uniformly. Rather, new parameters are added only if they are warranted by the production data. Notably, an analysis of the dependency between non linearity and scale of parameterization for similar but simpler parabolic-type problems has shown that fine-scale permeability features are responsible for highly non-linearity in the model response, while coarse scale updates are associated with linear behavior of the model response. The AME algorithm is terminated when production data are matched or any richer parameterization is unreliable. AME has proven to be a cost-efficient strategy for parameter estimation in 2D 9th case, both for simple and for complex problems[4][6], field-like cases[5]. However an implementation of this methodology in a real HM study, including the need to work with 3D geometry, is not available. This motivated the application of a similar but simpler multi-scale parameter estimation, where, following Chavent et al. [6], the engine used to define new possible parameters is based on gradients of the objective function computed for a reference fine-scale parameterization. Gradient Driven Multi-Scale Estimation In multi-scale parameter estimation the definition of richer parameterisation at a given step of the process is based on geometrical considerations. In OME each parameter has to be refined (see Figure 1) by regular cuts of the domain. In AME the refinement of the parameterisation is selective, nonetheless also in AME geometrical considerations play a major role. Gradient Based Multiscale Estimation (GBME) uses the gradient of the objective function to guide the refinement of the parameterisation. To introduce the methodology, let us assume as reference problem to estimate the permeability of a 2D model consisting of N=8x8 cells cartesian grid. In a multiscale setting a uniform permeability distribution can be estimated by minimising the objective function J with respect to on a single parameter x0. An optimal value J(x0*) can then be found and the corresponding permeability grid k is a uniform grid with N=64 entries equal to x0*. Next, the gradient ∇J0* of the objective function with respect to the permeability values of the 64 model cells can be computed: (∇J ) = (∂J / ∂k ) T 0* i i k i = x 0* , i = 1,..,N , (4) Following Chavent et al. [6], the gradient ∇J* can be mapped on the computational grid, see Figure 2-a. European Conference on the Mathematics of Oil Recovery — Cannes, France, 30 August - 2 September 2004 4 Based on such map, a new zonation can be defined by including cells with positive derivative in one zone (red cells in Figure 2-b) and cells with strictly negative derivative in another one (light blue cells in Figure 2-b). Two parameters, x11 and x12, can then be defined to characterize the permeability in the two regions. lumping the cells in each zone according to the sign of the corresponding gradient. The cells in the light-blue region in Figure 2-b are so split (Figure 2-c) in two regions, light-blue and dark-blue. Similarly, the red zone (Figure 2-b) can be partitioned in two zones, red and yellow (Figure 2-c). By definition, the bound-constrained minimization of the objective function J with respect to x0 equals to the minimization of J under the constraint α = x − x , α = 0. 1 1 1 2 (5) Then, the proposed parameterization can be seen as a relaxation of the constraint (5) and the corresponding Lagrange multiplier λ, λ = (∂J 0* / ∂x11 ) = −(∂J 0* / ∂x12 ) , (6) can be interpreted as a measure of the impact on the objective function of the relaxation of constraint (5), λ = (dJ 0* / dα )α =0 , (7) b) thus Ben-Ameur et al.[3] named λ a refinement indicator. By construction the refinement of the coarse parameterization on the basis of the gradients sign is likely to give the best cost-effective parameterization in terms of reduction of J. The new parameterisation can be used in the second step of the GBME to improve the match, then getting two calibrated parameters, 1* T 1* 1* x = ( x1 , x 2 ) , and a value for the 1* objective function J 1* = J ( x ) . Notably, by definition J 1* ≤ J 0* . Although the new value J 1* represents and improvement of the HM, it is quite likely that a further improvement is required. Then, at this point the calibrated parameters x1* can be used to compute the gradient of the objective function ∇J1* with respect to the 64 permeability values on the fine scale. The parameterization shown in Figure 2-b can be refined by c) Figure 2 –Gradient map after the first step of the GBME (a), the related 2 parameter zonation (b) and the 4 parameter zonation (c). By associating a uniform permeability value to each of the four sub-zones, the new parameterisation consisting of 4 degree of freedom, x2T=(x12, x22, x32, x42), can be defined. The couple (x12, x22) comes from the refinement of the parameter x11, while the couple (x32, x42) comes from the refinement of the parameter x21. This parameterization can be calibrated in the next step of the GBME to get an objective 2* J 2* = J ( x ) , with function J 2* ≤ J 1* ≤ J 0* . 5 More generally, at the n-th step of the GDME 2n calibrated parameters can be refined by means of the corresponding fine scale gradient ∇Jn*. The refined parameterisation consists of 2n+1 degree of freedom to be calibrated in the n+1 step. The n-th step parameterization can be recovered from the n+1 by adding n equality constraints: α = x − x , α = 0, i = 1,.., n . i n+1 N +1 2 i −1 2i i (8) Before the n+1 step regression. the effectiveness of the proposed richer parameterization can be evaluated by computing the n refinement indicators associated to the corresponding equality constraints: λi = (∂J *n / ∂x2ni+−11 ) = −(∂J *n / ∂x2ni+1 ) , (9) With respect to OME, GBME is more efficient because the number of parameters increase as a power of 2 at each step, while in OME this happens only for 1D problems. AME is more efficient because the parameters are added selectively. The selection criterion is based on the estimation of the potential uncertainty associated with a proposed parameterization. This usually prevents the risk of over-parameterization. In the current GBME the refinement indicators λi can provide with a guess on the improvement in the parameterization associated with the refinement of the i-th set of parameters. However, the refinement indicators do not represent an estimate of the reliability of the n new couples of parameters. In this work we have dealt with the over-parameterization issue only a posteriori by means of a L-curve type analysis[4][10], where the uncertainty in the n* calibrated parameterization x is based on the maximum eigenvalue of the posterior parameters covariance matrix ~ T −1 C = (∇R C∇R ) . From a computational viewpoint the bottleneck in GDME is the computation of the fine scale array of derivatives, which 9th entails the computation of a large number of HM parameters. The ideal choice, one parameter for each cell, can be selected only if the gradient ∇J is computed by means of Adjoint formulation. In this work we used a commercial simulator[8] which computes HM parameters by means of the so-called forward gradient methods. This approach is quite effective, because it directly provides with the sensitivity matrix ∇R. This allows to compute the GaussNewton approximation to the Hessian matrix, ∇RT C −1∇R , hence cost-efficient methods like Levenberg-Marquardt can be implemented. However, this way of computing gradients is time consuming[8][9], because the cpu-time scales linearly with the number of parameters. Hence, in real or even realistic cases it is prohibitive to define a parameter for each cell. In this work we introduced the concept of finer allowed parameterization (FAP), which means the finer parameterization that can be used for a detailed gradient computation at each step of GBME. The FAP constraints are case dependent and their definition is tightly related to the cpu-time required to simulate the model. A 2D problem to highlight the concept of FAP is shown in Figure 3-a: a 2D reservoir with an highly permeable channel up to 1000 mD, embedded in a low permeable matrix. Synthetic history data have been created by simulating a water flooding with one producer, located in the channel, and two injectors. Then, using as baseline permeability a constant 80mD value, a GBME was carried out, using as FAP the 8x8 parameterization shown in Figure 3-b. The parameterizations calibrated in the second and third step of the sequence are shown in Figure 3-c and in Figure 3-d, respectively. Although the FAP is relatively coarse (2x2 boxes), nonetheless the first FAP gradient evaluation gives a rough but reasonable European Conference on the Mathematics of Oil Recovery — Cannes, France, 30 August - 2 September 2004 6 estimate of the permeability trend (see the green area in Figure 3-c). WCT measurement error of 0.05 was chosen. From a practical point of view we assumed that a single GDME would run in a day, with the cpu time-consuming FAP gradient simulation running overnight. Figure 3 – 16x16 cells permeability distribution with an high permeable channel along the main diagonal(a), a FAP consisting of 64 2x2 cells boxes (b), stage 2 parameter zonation (c) and the stage 3 parameter zonation (d). Application to Camelot History Match The Camelot field is a Paleocene undersaturated oil reservoir located in the UK Continental Shelf of the North Sea. The field has brought onstream for production since 11/1986 by means of 14 production wells. For pressure maintenance purposes water was injected till 1998. After 1998 the surrounding aquifer still provided with enough energy to support reservoir pressure largely above the bubble point. Since 11/1986, only water cut (WCT) data have been systematically collected. To predict field production the reservoir has been simulated by means of a dead oil model. Grid model consists of 46x150x39 cells, with only 55394 active. Layer 1 to 9 correspond to the main oil bearing sand – F – while the sequences 11-25 and 26-35 correspond to E and D sands. In this work we implemented the GDME to reconcile the baseline model with the WCT data collected in a history time-window going from 1986 to 2002. Field production was simulated by running the model with liquid rate boundary conditions. GDME has been implemented to calibrate the model by selecting as HM parameters horizontal transmissibility multipliers (HMTRNXY). Constant, uncorrelated Figure 4 – FAP defined for the Camelot field: view from the top In addition, to preserve the main geological layering, it was required that the fine scale zones associated to FAP did not mix cells belonging to different oil bearing sands. To fulfill both aforequoted constraints a FAP consisting of 645 regions were been defined. The regions are columns of cells spanning vertically an entire oil-bearing sand, with base box of 3x3 cells. In Figure 4, a view from the top of the FAP regions can be seen. On a Pc with Xeon 3.2 GHtz processors the cpu time to compute the FAP gradients was about 8 hours, with 11 minutes required to simulate the production time-window. GBME has been run until the value of the root means square (RMS) production misfit, defined as RMS = R T C −1 R , is lower than 1. This is the default stopping criterion in a commercial gradient based HM package[9]. 7 At each step of GBME, the parameters were optimized by using a commercial of Levenbergimplementation[11] Marquardt method in the framework of a research HM package. 8000 Objective Function 7000 Numerical Results 6000 8 Parameters 5000 4000 32 Parameters 3000 2000 1000 A sequence of 6 GDME steps were run until the stopping criterion was fulfilled with a final parameterization consisting of 32 regions. The sequence of the optimal values J* is plotted in Figure 5 vs the number of parameters, alongside with the values of the RMS. 10000 0 0 2.5 2 6000 5000 1.5 4000 RMS Objective Function 7000 8 10 12 14 By means of this analysis, see Figure 6, we noticed that the uncertainty in the parameterization is fairly constant up to the 4th stage of the GBME sequence (8 parameters space), while a rapid increase in the uncertainty could be seen in the two subsequent step 1 3000 2000 1 0.5 Well PA2 0.9 1000 0.8 0 0 10 15 20 25 30 0.7 35 Number of HM parameters Figure 5 - objective function and RMS values versus the number of parameters. The behavior of the objective function J* shows some typical feature of multiscale type history matching: larger improvement are achieved in the early steps of the process, where the linearity associated to large scale features in the –unknown – property trend dominates. Finer parameterization generated at later stage is associated with small scale feature and leads to more and more moderated improvements as the sequential estimation proceeds. We expected then to notice some trade-off between accuracy in the matching and reliability of the parameter estimate. Following [4] we have measured the uncertainty in the parameterization by means of the maximum eigenvalue of the ~ parameter covariance matrix C . Then, the tradeoff between accuracy and reliability can be visualized by means of a L-curve plot, where the objective function J* is represented vs the maximum eigenvalue of ~ the corresponding C matrix. 0.6 WCT 5 0.5 0.4 0.3 0.2 0.1 0 0 1000 2000 3000 4000 5000 6000 2000 2500 3000 time (days) 1 Well PA-B2 0.9 0.8 0.7 0.6 WCT 0 9th 6 Figure 6 – L-type curve: optimal objective function vs. the maximum eigenvalue of the posterior covariance matrix. 3 Objective Function RMS 4 Maximum eigenvalue 9000 8000 2 0.5 0.4 0.3 0.2 0.1 0 0 500 1000 1500 time (days) Figure 7 – WCT vs time for well PA2(top) and PA-B2: history (black), baseline model (blue), 8 (green) and 32 (red) parameters GBME models results. Thus, the price for improvement in the RMS value from stage 4 (RMS=1.17) to stage 6 (RMS=0.87) is a fairly large increase of the uncertainty in the parameters. In most of the wells the difference between 8-parameters and 32 – European Conference on the Mathematics of Oil Recovery — Cannes, France, 30 August - 2 September 2004 8 parameters simulation models is fairly small, see for instance well PA-2 results in Figure 7. Nonetheless, in the case of well PA-B2 (see Figure 7) the less uncertain 8parameters model falls short of matching the history data . References [1] Yoon, S., Malallah, A. H., Datta-Gupta, A.,Vasco, D. W, Beherens, R. A., A Multiscale approach to Production Data Integration Using Streamline Models, SPE 56653, SPE ATCE, 1999. [2] Lee, S.H., Malallah, A., Datta-Gupta, A., Higdon, D.: “Multiscale Data Integration Using Markov Random Fields,” paper SPE 63066, SPE ATCE, 2000. [3] Ben Ameur, H., Chavent, G., Jaffré, J.: “Refinement and Coarsening of Parameterization for the Estimation of Hydraulic Transmissivity,” in Proceedings of the 3rd Int. Conference on Inverse Problems in Engineering: Theory and Practice, Port Ludlow, WA, USA, June 13-18 1999. [4] Grimstad, A.A., Mannseth, T., Nævdal, G., Urkedal, H., “Scale Splitting Approach to Reservoir Characterization”, SPE 66394, SPE Reservoir Simulation , 2001. [5] Grimstad A, Mannseth , T., Aanonsen, S. A., Aavatsmark, I., Cominelli, A., Mantica, S., “Identification of Unknown Permeability Trends from History Matching of Production Data”, SPE 77485, SPE ATCE 2002. [6] A.-A. Grimstad, T. Mannseth, G. Nævdal, and H. Urkedal. “Adaptive multiscale permeability estimation”, Computational Geosciences, 7: 1-25, [7] 2003.Chavent, G., Bissel, R., “Indicator for the Refinement of Parameterisation”, International Symposium on Inverse Problem in Engineering. Nagano, 1998. [8] Eclipse 2003A Technical Description, 2003, Schlumberger. Abingdon. [9] Simopt 2003A User Schlumberger, Abingdon. [10] Hansen, P.C.: “Analysis of discrete ill-posed problems by means of the L-curve,” SIAM Review, 34(4), 561–580, 1992. [11] IMSL FORTRAN Numerical BCLSJ routine documentation. Conclusions In this work an implementation of the methodology to select parameterization in a multiscale setting by means of gradients[6] has been presented. The application was performed on a real case by exploiting the gradient capabilities of a commercial simulator, together with a HM gradient-based package. In terms of reduction of the objective function J, the implementation has achieved the goal. However, the GBME methodology is still subject to the risk of over-parameterization, as shown by means of a L-curve type analysis. The methodology then has to be improved by means of the addition of selective parameterization criterion. One possibility is to use the refinement indicators, see equation (9), to select new possible Although of easy parameters[3]. implementation, this solution does not provide with any guess on the reliability of the proposed parameterization. On the other side, the use of sensitivity matrices[6] to guess the potential improvement in the objective function and associated standard deviation is much more promising. Besides the risk of over-parameterization, a real limitation of this approach is the computation of FAP to define new parameters. Currently the forward sensitivity simulation is time-consuming and in many real cases the dimension of the FAP space may become unrealistically small because of computational constraints. In this case, the extension of AME type methodologies [4][5][6] to 3D problems could provide with a sound and cost effective alternative. Guide, 2003, Libraries,