Computer - Aided Diagnosis: Concepts and Applications

advertisement

Outline

ComputerComputer-Aided Diagnosis:

Concepts and Applications

• Concepts of CAD

• Current CAD applications

• Computer vision techniques used in CAD

• Issues involved in development of CAD

systems

Heang-Ping Chan, Ph.D.

Berkman Sahiner, Ph.D.

Lubomir Hadjiiski, Ph.D.

• Future directions

Department of Radiology

University of Michigan

Why CAD?

• Interpretation of medical images is

never 100% accurate

ComputerComputer-Aided Diagnosis –

> Detection error

Concepts

> Characterization error

> IntraIntra-reader variability

> InterInter-reader variability

Page 1

ComputerComputer-Aided Diagnosis

CAD

Medical image

• Provide second opinion to radiologist in

the detection and diagnostic processes

Detection of

lesion by radiologist

Computer

detection of lesion

• Radiologist is the primary reader and

decision maker

+

Classification of

lesion by radiologist

Computer

classification of lesion

+

Radiologist’s decision

CAD Applications

• Breast Cancer

> Mammography

CAD Current Applications

> Ultrasound

> MR breast imaging

> MultiMulti-modality

Page 2

CAD Applications

CAD Applications

• Colon Cancer

• Lung Cancer

> CT virtual colonography

> Chest radiography

> Thoracic CT

• Pulmonary embolism

> CT angiography

• Interstitial disease

> Chest radiography

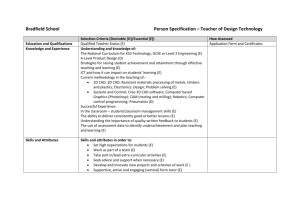

CAD Algorithm Development Process

Algorithm design

Algorithm

evaluation

Development of CAD System

Comparison of

CAD algorithms

Effects of CAD

in laboratory

Performance in

patient population

Effects of CAD in

clinical practice

Page 3

CAD System

General Scheme of Detection System

Preprocessing

• Detection

Segmentation of Lesion Candidates

• Characterization

• Combined detection and characterization

Extraction of Features

Feature Selection

Feature Classification

Detected Lesions

General Scheme of

Characterization System

General Scheme of Combined System

Preprocessing

Preprocessing

Segmentation of Lesion Candidates

Segmentation of Lesion Candidates

Extraction of Features

Extraction of Features

Feature Selection

Feature Selection

Feature Classification

Feature Classification

Likelihood of Malignancy

Detected Lesions &

Likelihood of Malignancy

Page 4

Preprocessing

Image Segmentation

• Trim off regions not of interest

• Identify objects of interest

> Contrast

• Suppress structural background, and

enhance signalsignal-toto-noise ratio

> SignalSignal-toto-noise ratio

> Linear spatial filtering

> Gradient field convergence

> NonNon-linear enhancement

> Line convergence – spiculated lesion

> MultiMulti-scale wavelet transform

> etc

> etc

Object Segmentation

Feature Extraction

• Descriptors of object characteristics

• Extract object from image background

> Morphological features

> Gray level thresholding

Shape, size, edge, surface roughness,

spiculation,

spiculation, …

> Region growing

> Gray level features

> Edge detection

Contrast, density, …

> Active contour model

> Texture features

> Level set

Patterns, homogeneity, gradient, …

> etc

> Wavelet features

> etc

Page 5

Feature Selection

Feature Selection

• Select effective features for the

classification task

• Exhaustive search

• Stepwise feature selection

• Reduce the dimensionality of feature

space

• Genetic algorithm

• Simplex optimization

• Reduce the requirement of training

sample size

• etc.

Feature Classification

Feature Classifiers

• Linear classifiers

• Detection

• NonNon-linear classifiers

> Differentiate true lesions from normal

anatomical structures

> Quadratic classifier

• Characterization

> Bayesian classifier

> Differentiate malignant from benign lesions

> Neural networks

• MultiMulti-class classification

> Support vector machine

> Differentiate normal tissue, malignant, and

benign lesions

> etc.

Page 6

Feature Classifiers

CAD Algorithm Design

• Other classifiers

• Application specific

> K-means clustering

• Designed with training samples

> N nearest neighbors

> etc.

CAD Algorithm Design

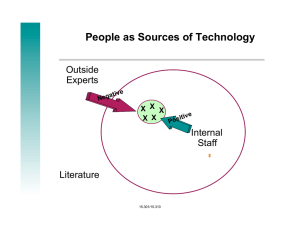

Database for CAD Algorithm Design

Database Collection:

• Database

• Quality -

> Training set

> Adequate representation of various types of

normals and abnormals (of the disease of

interest) in patient population

> Validation set

> Independent test set

• Quantity > Bias and variance of classifier performance

depend on design sample size

> larger data set

Page 7

better training

Sample Size Effect on

Bias of Classifier Performance

Sample Size Effect

•

Bias of a classifier performance index

estimated from a set of design

samples, relative to the estimate at

infinite sample size:

E { f ( N train )}

f( )

• Monte Carlo simulation study

• Classifier performance index: Area under

the ROC curve, Az

1

+ higherhigher-order terms

N train

• Dependence of Az on design sample size

• Comparison of classifiers of different

complexity

Ref. Fukunaga K, Introduction to Statistical Pattern

Recognition.

Recognition. 2nd Ed. (Academic Press, NY) 1990.

Classifiers of Different Complexity

Class Distributions in Feature Space

•

Examples:

TwoTwo-class classification:

> Multivariate normal distributions

Equal covariance matrices

• Linear discriminant classifier

• Quadratic classifier

Optimal classifier - Linear discriminant

• Artificial neural network (ANN)

> Theoretical optimum at

Az = 0.890

Page 8

N train

Class Distributions in Feature Space

Comparison of Classifiers

(Equal Covariance Matrices)

X2

1.0

k-D

9D

Training

0.9

Az

Class A

Linear

ANN-921

0.8

X1

Testing

Equal Cov. Matrices

Class B

0.7

0.00

0.01

0.02

0.03

Quadratic

0.04

0.05

0.06

1/No. of Training Samples per Class

Class Distributions in Feature Space

Class Distributions in Feature Space

(Unequal Covariance Matrices)

X2

• TwoTwo-class classification:

k-D

> Multivariate normal distributions

Unequal covariance matrices

Class A

Optimal classifier - Quadratic discriminant

> Theoretical optimum at

Az = 0.890

X1

N train

Class B

Page 9

Comparison of Classifiers

Class Distributions in Feature Space

• TwoTwo-class classification:

1.0

Training

> Multivariate normal distributions

Unequal covariance matrices

Equal mean

0.8

Linear

Quadratic

0.7

Optimal classifier - Quadratic discriminant

Testing

ANN-991

0.6

0.00

0.01

0.02

0.03

> Theoretical optimum at

Az = 0.890

9D

Unequal Cov. Matrices

0.04

0.05

0.06

N train

1/No. of Training Samples per Class

Class Distributions in Feature Space

Comparison of Classifiers

(Equal Mean)

X2

1.0

k-D

9D

0.9

Class A

Training

0.8

Az

Az

0.9

X1

Class B

0.7

Quadratic

0.6

ANN 9-30-1

0.5

Linear

Equal Mean

0.4

0.00

0.01

0.02

0.03

0.04

0.05

1/No. of Training Samples per Class

Page 10

0.06

Testing

Summary - Sample Size Effects

Summary - Sample Size Effects

• A classifier trained with a larger sample set

has better generalization to unknown

samples.

• Classification in certain feature spaces

requires more complex classifiers such as

neural networks or quadratic discriminant.

• If design sample set is small, a simpler

classifier will provide better generalization.

• When design sample set is small, overovertraining of neural network will degrade

generalizability.

generalizability.

Ref. Chan HP, Sahiner B, Wagner RF, Petrick N. Classifier design for

computer-aided diagnosis: Effects of finite sample size on the

mean performance of classical and neural network classifiers.

Med Phys 1999; 26: 2654-2668.

CAD Algorithm Design

Resampling Methods:

Evaluation of Classifier Performance

with a Limited Data Set

• Classifier design is straightforward if

available samples with verified truth are

unlimited

• Unfortunately, in the real world, especially

in medical imaging, collecting data with

ground truth is time consuming and costly

• Database is never large enough!

Page 11

Maximize Trainers

Resampling Methods for CAD Design

• Resampling methods for training and testing

CAD algorithm with a limited data set:

• The larger the number of cases for training,

the more accurate the classifier

> Resubstitution

> Ideally, use all available cases x1, …, xN for

training

> Hold out

> Cross validation

> How to evaluate the performance without

collecting new cases from the population?

> LeaveLeave-oneone-out

> Bootstrap

> etc.

Resampling Scheme 2 - Hold Out

Resampling Scheme 1 - Resubstitution

• Training set = Test set

• Partition data set into M trainers and NN-M testers

> Resubstitution estimate (Consistency Test)

> Training set independent of test set

> Optimistically bias test results

> Training size substantially smaller than N

> Pessimistically bias test results

Train

N cases

M

Classifier parameters

Train

N cases

“Test”

Classifier parameters

N-M

Page 12

Test

Resampling Scheme 3 - Cross Validation

• Use leaveleave-oneone-out estimate

• K-fold cross validation

> Train on NN-1 cases

> Divide into k subsets of size: S = N / k

> Train on (k(k-1) subsets, test on the leftleft-out subset

> Average results of all k test groups

N-S

Less pessimistic than HoldHold-Out

> Test on leftleft-out case, accumulate N test cases

> Variance?

Train

N cases

Classifier parameters

S

Resampling Scheme 4: LeaveLeave-OneOne-Out

N-1

Train

1

Test

N cases

Test

Resampling Scheme 5 - Bootstrap

Classifier parameters

Bootstrap Estimate of Resubstitution Bias

• Bootstrap

• x = (x1,…,xN), N cases from the population

• Randomly draw a bootstrap sample x*b

> Resampling with replacement from

available samples

• In the bootstrap world:

> Estimate and remove resubstitution

bias by using bootstrap samples

b

b

b

b

> “Resubstitution”

Resubstitution” Az value: Az(x* , x* )

b

• Ordinary bootstrap

b

> “Test”

Test” Az value: Az(x* , x)

• 0.632 bootstrap

> Bias of resubstitution:

resubstitution:

w b = Az(x*b, x*b) - Az(x*b, x)

• Other variants

Page 13

Resampling Methods

Bootstrap Estimate of Resubstitution Bias

• Bias:

• Repeat for B bootstrap samples

> LeaveLeave-oneone-out: Pessimistic

• Average the bias w b over B replications

> Bootstrap: Optimistic

• Variance:

• Remove bias from true resubstitution Az

1 B

b

b

b

Az(x, x) [A (x* , x* )- Az(x* , x)]

B b =1 z

> In general, bootstrap has lower variance

than leaveleave-oneone-out

• Depend on feature space

Evaluation of CAD Algorithm Performance

• CAD algorithm performance depends on

> How well it was trained

Evaluation of CAD System Performance

and Comparison of CAD Systems

Page 14

> How it was tested

Testing

Testing

• True test performance requires

independent test set

• Comparison of CAD algorithms > use large random samples from patient

population

• CAD performance depends on properties

of test cases

> Relative performance – use common test

set

> Test set should be statistically similar to

patient population

Validation vs. True Test

• Important issue:

Even an “independent”

independent” test set will turn into

a part of the training set if algorithm is

optimized based on the test results

Effects of CAD on Radiologists’

Performance – Laboratory Studies

> Optimistic estimate of algorithm

performance

• True “test”

test” comparison has to be based on

test set unknown to all algorithms

Page 15

Effects of CAD

Effects of CAD

• Laboratory observer performance studies

• Variance component analysis

> ROC methodology

> BeidenBeiden-WagnerWagner-Campbell method

• DorfmanDorfman-BerbaumBerbaum-Metz method

> Power estimate

> ROC with localization

> Sizing of larger trial from pilot study

• LROC - Swensson

• XFROC, JAFROC - Chakraborty (under

development)

Effect of CAD

• Findings of laboratory ROC studies

> CAD improves radiologists’

radiologists’ performance in

detection and characterization of

CAD in Clinical Practice

• Breast lesions

• Lung nodules

Page 16

Commercial CAD Systems in Mammography

• R2

Prospective Study of CAD

in Screening Mammography

• Freer et al.

> FDA approval in 1998

> 19.5% increase in cancer detection

• CADX (Qualia

(Qualia))

> 18% increase in callcall-back rate

> FDA approval in Jan. 2002

• Bandodkar et al.

• ISSI

> 12% increase in cancer detection

> FDA approval in Jan. 2002

> 12% increase in callcall-back rate

• Kodak

> FDA approvable letter in Nov. 2003

Prospective Study of CAD

in Screening Mammography

Prospective Study of CAD

in Screening Mammography

• Cupples et al.

• Gur et al.

> 13% increase in cancer detection

> No statistically significant increase in

cancer detection

• Helvie et al.

> No statistically significant increase in

callcall-back rate

> 10% increase in cancer detection

> 10% increase in callcall-back rate

Page 17

Effects of CAD in Clinical Practice

Effects of CAD in Clinical Practice

• CAD system does not need to be more

accurate than radiologists to provide

useful second opinion

• CAD system designed as an aid to

radiologists should not be misused as

a primary reader

> Complementary to radiologists is more

important

> Loss of advantage of doubledouble-reading

Clinical Trial

• Outcome of CAD clinical trial can be affected

by

> Radiologists’

Radiologists’ vigilance in reading

Future Directions

> Radiologists’

Radiologists’ interpretation of CAD marks

> Study design

• Continued effort to study the effects of CAD in

clinical settings needed to resolve differences

in outcomes

Page 18

Future Directions

Future Directions

• Breast Cancer

• Similar development of CAD for other

diseases

> Combined mammography/Ultrasound

> Tomosynthesis

> Breast CT

• CAD in other image analysis tasks

> DualDual-energy imaging

> Dynamic MR breast imaging

> Risk prediction

> Optical imaging

> Molecular imaging

> etc

> Prognosis estimation

> MultiMulti-modality CAD

> …

Future Directions

Computerized

Image Analysis

• Beyond the role of second reader

Computer Aid

> Provide quantitative or other info to

radiologists to assist in detection and/or

diagnostic decision

Detection

> As a “physician assistant”

assistant” to screen out

negative cases ??

Monitoring

Diagnosis

Treatment

Planning

Page 19

Surgery,

Intervention