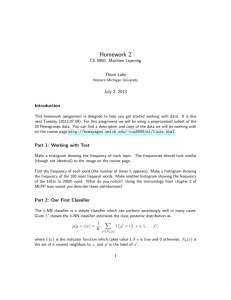

Pattern recognition

Classify objects on the basis of measured properties

physical object → measuring device (e.g. camera) → measurements → properties/features

Features: characteristic, discriminating properties

e.g. {roundness, redness, weight}

tomato: {0.9, 0.8, 50g}

pear: {0.1, 0.3, 100g}

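Feature space

Record images (sensor)

segment objects (segmentation)

measure features (analysis)

F: {redness, roundness}

Each object becomes a vector of numbers: $x^1 = (x_1^1, x_2^1)$, $x^2 = (x_1^2, x_2^2)$

[Figure: objects of class A (tomato) and class B (pear) in feature space, axes $x_1$ (roundness) and $x_2$ (redness); plotted points include {0.9,0.6}, {0.6,0.6}, {0.4,0.3}, {0.1,0.2}, {0.2,0.1}]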

Class

Similar objects (pears or tomatoes)

In feature space: cloud of points (intra-class variation)

measurement = inherent variation (e.g. biological) + measuring noise (e.g. digitization)

[Figure: feature space (roundness vs. redness) with cloud A (tomato) around {0.8,0.9}, {0.7,0.9}, {0.7,0.8} and cloud B (pear) around {0.3,0.4}, {0.4,0.2}]

Pattern recognition

Compare objects on the basis of their features

[Figure: feature space (roundness vs. redness) with clouds A (tomato) and B (pear) and unlabeled objects marked "?"]

Pattern recognition

By statistical modeling (Bayesian decision making):

Define/find probability model

Classify on basis of probability for classes

By decision boundaries:

Define/find decision boundaries D(x)

Classify on basis of (sign of) D(x)

By prototypes:

Define/find prototypical examples of classes (Θ)

Classify on basis of similarity with prototypes

Prototypes

Compare to prototypes of class

Classify an unknown object to the label of the nearest learning object

[Figure: feature space (roundness vs. redness) with learning objects labeled A and B]

Decision boundary

Classes: clouds in feature space separable by decision boundaries

Pattern recognition: Finding decision boundaries from a set of example objects (learning)

[Figure: feature space (roundness vs. redness) with objects labeled A and B separated by a decision boundary]

Optimizing decision boundary

Decision boundary → Minimizing error

[Figure: candidate decision boundaries $D_1(x)$, $D_2(x)$ and $D_3(x)$ in the feature space $x_1$ (roundness) vs. $x_2$ (redness); the panels are annotated with error counts of 6, 3 and 2]

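Bayesian decision making

If class A is more probable given the measured values, then decide for class A

Decision rule:

$$P(A \mid x) > P(B \mid x) \;\Rightarrow\; x \to A, \quad \text{else } x \to B$$

[Figure: feature space (roundness vs. redness) with objects labeled A and B and the posteriors $P(A \mid x)$ and $P(B \mid x)$]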


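Statistical analysis

Feature vector: k-dimensional stochastic vector

$$x = (x_1, x_2, \ldots, x_k)$$

Feature vectors spread according to the class-conditional probability densities $f_X(x \mid A)$ and $f_X(x \mid B)$, written $f_A(x)$ and $f_B(x)$ for short

[Figure: densities $f_X(x \mid A)$ for A (tomato) and $f_X(x \mid B)$ for B (pear) over the feature space $x_1$ (roundness) vs. $x_2$ (redness)]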

Bayesian decision making

Desired decision rule:

$$P(A \mid x) > P(B \mid x) \;\Rightarrow\; x \to A, \quad \text{else } x \to B$$

Bayes’ Theorem:

$$P(A \mid x) = \frac{f_A(x) P_A}{f(x)} = \frac{f_A(x) P_A}{f_A(x) P_A + f_B(x) P_B}$$

Decision rule becomes (in known terms!):

$$P_A f_A(x) > P_B f_B(x) \;\Rightarrow\; x \to A, \quad \text{else } x \to B$$

If class A is more probable given the measured values, then decide for class A
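The decision rule in known terms can be tried out on a single feature. The sketch below assumes normal class-conditional densities for roundness; the means, standard deviations and priors are hypothetical values chosen for illustration, not taken from the slides.

```python
import math

def normal_pdf(x, mean, std):
    """Univariate normal density."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def bayes_classify(x, prior_a, pdf_a, prior_b, pdf_b):
    """Return 'A' if P_A f_A(x) > P_B f_B(x), else 'B'."""
    return "A" if prior_a * pdf_a(x) > prior_b * pdf_b(x) else "B"

def posterior_a(x, prior_a, pdf_a, prior_b, pdf_b):
    """P(A | x) from Bayes' theorem."""
    weighted_a = prior_a * pdf_a(x)
    return weighted_a / (weighted_a + prior_b * pdf_b(x))

# Hypothetical roundness densities: tomatoes (A) are rounder than pears (B).
f_a = lambda x: normal_pdf(x, 0.8, 0.1)
f_b = lambda x: normal_pdf(x, 0.3, 0.1)

for roundness in (0.4, 0.55, 0.7):
    label = bayes_classify(roundness, 0.5, f_a, 0.5, f_b)
    print(roundness, label, round(posterior_a(roundness, 0.5, f_a, 0.5, f_b), 3))
```

Because $f(x)$ is the same for both classes, comparing $P_A f_A(x)$ with $P_B f_B(x)$ gives the same decision as comparing the posteriors.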

Optimality Bayes classifier

Bayes classifier constructs decision boundary

$$D(x) = f_A(x) P_A - f_B(x) P_B$$

Sign of the decision function determines classification: $D(x) \ge 0 \Rightarrow x \to A$, $D(x) < 0 \Rightarrow x \to B$

[Figure: densities $f_A(x)$ for A (tomato) and $f_B(x)$ for B (pear) over $x_1$ (roundness) vs. $x_2$ (redness), separated by the decision boundary]

Decision boundary: D(x)

Classification

$D(x) \ge 0 \Rightarrow x \to A$

$D(x) < 0 \Rightarrow x \to B$

Decision boundary: $D(x) = 0$

[Figure: tomato and pear clouds (roundness vs. redness) with the regions $D(x) \ge 0$ and $D(x) < 0$ on either side of $D(x) = 0$]

Classification error

Classification error:

$$\varepsilon = P(D(x) \ge 0, x \in B) + P(D(x) < 0, x \in A)$$

(the first term is the probability that an object with label B is classified as having label A)

Bayes rule

$$\varepsilon = P(D(x) \ge 0 \mid x \in B) P_B + P(D(x) < 0 \mid x \in A) P_A$$

Substitution of pdf’s:

$$\varepsilon = P_B \int_{D(x) \ge 0} f_B(x)\,dx + P_A \int_{D(x) < 0} f_A(x)\,dx$$
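For one feature and a single threshold $t$ (objects with $x \ge t$ go to A), both integrals in the error expression become normal tail probabilities. The following sketch assumes normal densities with hypothetical parameters and evaluates ε for a few thresholds.

```python
import math

def normal_cdf(x, mean, std):
    """Cumulative distribution function of a univariate normal."""
    return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))

def error_for_threshold(t, prior_a, mean_a, std_a, prior_b, mean_b, std_b):
    """eps = P_B * P(x >= t | B) + P_A * P(x < t | A); region x >= t is assigned to A."""
    eps_b = prior_b * (1.0 - normal_cdf(t, mean_b, std_b))  # B objects labelled A
    eps_a = prior_a * normal_cdf(t, mean_a, std_a)          # A objects labelled B
    return eps_a + eps_b

# Hypothetical class models (equal priors, equal spread).
params = dict(prior_a=0.5, mean_a=0.8, std_a=0.1,
              prior_b=0.5, mean_b=0.3, std_b=0.1)

for t in (0.45, 0.55, 0.65):
    print(t, round(error_for_threshold(t, **params), 5))
```

With these assumed parameters the threshold halfway between the means gives the smallest error, and moving it in either direction increases ε.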

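Illustration (simplified)

Projection onto $x_1$ (roundness) reduces the example to a 1-dimensional problem with densities $f_A(x_1)$ and $f_B(x_1)$

[Figure: densities $f_A(x)$ for A (tomato) and $f_B(x)$ for B (pear) in feature space, and their projections $f_A(x_1)$ and $f_B(x_1)$ on the $x_1$ (roundness) axis]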

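Illustration Bayes error

$$\varepsilon_A = P(x \to B, x \in A) = P(D(x) < 0 \mid A) P_A = P_A \int_{D(x) < 0} f_A(x)\,dx$$

[Figure: weighted densities $f_A(x) P_A$ and $f_B(x) P_B$ with the boundary $D(x) = 0$, the regions $D(x) \ge 0$ (A) and $D(x) < 0$ (B), and the error area $\varepsilon_B$]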

Classification error (cont’d)

Rewrite

$$\varepsilon = P_B \int_{D(x) \ge 0} f_B(x)\,dx + P_A \int_{D(x) < 0} f_A(x)\,dx$$

Add and subtract same term

$$\varepsilon = P_B \int_{D(x) \ge 0} f_B(x)\,dx + P_A \int_{D(x) < 0} f_A(x)\,dx + P_A \int_{D(x) \ge 0} f_A(x)\,dx - P_A \int_{D(x) \ge 0} f_A(x)\,dx$$

Integrate complete interval: $\int f(x)\,dx = 1$

$$\varepsilon = P_A - \int_{D(x) \ge 0} \left( P_A f_A(x) - P_B f_B(x) \right) dx$$

Bayes decision boundary

Minimize classification error ε

$$\varepsilon = P_A - \int_{D(x) \ge 0} \left( P_A f_A(x) - P_B f_B(x) \right) dx$$

Minimizing ε: maximizing 2nd term

Thus, choose D(x) such that only positive terms are integrated:

$D(x) \ge 0$ if and only if $P_A f_A(x) - P_B f_B(x) \ge 0$

Optimal decision boundary

Optimal decision boundary (→ *)

Decision boundary:

$$D^*(x) = P_A f_A(x) - P_B f_B(x) \quad \begin{cases} \ge 0 & x \to A \\ < 0 & x \to B \end{cases}$$

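Bayes boundary

Bayes decision boundary

$$\varepsilon = \varepsilon_A + \varepsilon_B = \varepsilon^*$$

Bayes error: shifting D(x) increases ε

[Figure: weighted densities $f_A(x) P_A$ and $f_B(x) P_B$ with the boundary $D(x) = 0$; $x \to A$ where $D(x) \ge 0$, $x \to B$ where $D(x) < 0$; error areas $\varepsilon_A$ and $\varepsilon_B$]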

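Bayes boundary (cont’d)

$D^*(x)$ defines areas: $D^*(x) \ge 0$ and $D^*(x) < 0$

$D^*(x) = 0$ not necessarily one point

[Figure: weighted densities $f_A(x) P_A$ and $f_B(x) P_B$ that cross more than once, giving several points with $D^*(x) = 0$ and the error area $\varepsilon^*$]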

Bayes boundary (cont’d)

Two classes with the same mean: classification still possible

Thus, $D^*(x)$ not necessarily a linear decision boundary

[Figure: weighted densities $f_A(x) P_A$ and $f_B(x) P_B$ with equal means and two boundary points marked $S^*(x) = 0$]

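Multiple classes

Classification according to

$$x \to i \quad \text{with} \quad i = \arg\max_i \{ P_i f_i(x) \}$$

Example: $x \to A$ because $P_A f_A(x)$ largest

[Figure: weighted densities $P_A f_A(x)$, $P_B f_B(x)$ and $P_C f_C(x)$ of classes A, B and C along x]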

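Bayes boundary revisited

Class clouds can have any shape → shape of D(x) varies

Overlap exists → a perfect D(x) (100% separation) often does not exist

Multiple classes → D(x) describes multiple decisions

[Figure: example class clouds (A and B with different shapes, A and B overlapping, and A, B, C together) with their decision boundaries D]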

Bayes decision making

Bayes classifier

$$D(x) = P_A f_A(x) - P_B f_B(x) \quad \begin{cases} \ge 0 & x \to A \\ < 0 & x \to B \end{cases}$$

parametric

→ known distribution model

→ estimate parameters from learning set

→ restricting shape of pdf

non-parametric

→ no knowledge about underlying distribution

→ not restricting the pdf

Parametric estimator

Normal distribution $f_A(x)$, $f_B(x)$

$$f_A(x) = \frac{1}{(2\pi)^{k/2} |\Sigma_A|^{1/2}} \exp\left\{ -\tfrac{1}{2} (x - \mu_A)^T \Sigma_A^{-1} (x - \mu_A) \right\}$$

Substituting $f_A(x)$, $f_B(x)$ in D(x)

$$D(x) = P_A f_A(x) - P_B f_B(x)$$

↓ Monotonic transformation

$$R(x) = \ln\{P_A f_A(x)\} - \ln\{P_B f_B(x)\}$$

Zero-crossings R(x) same as D(x)

What shape?

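Normal-based classifier

After substitution of $f_A(x)$, $f_B(x)$ (and multiplying by 2, which leaves the zero-crossings unchanged)

$$R(x) = (x - \mu_B)^T \Sigma_B^{-1} (x - \mu_B) - (x - \mu_A)^T \Sigma_A^{-1} (x - \mu_A) + 2\ln\left\{\frac{P_A}{P_B}\right\} + \ln\left\{\frac{|\Sigma_B|}{|\Sigma_A|}\right\}$$

Decision boundary found by setting R(x) = 0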
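A small sketch of a normal-based classifier for two features follows. It evaluates $2(\ln\{P_A f_A(x)\} - \ln\{P_B f_B(x)\})$ directly from the log of the normal density, so the determinant term enters as $\ln(|\Sigma_B| / |\Sigma_A|)$. The means, covariances and priors are hypothetical, and the code is restricted to 2 × 2 covariance matrices to stay dependency-free.

```python
import math

def inv2(m):
    """Inverse and determinant of a 2x2 matrix ((a, b), (c, d))."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return ((d / det, -b / det), (-c / det, a / det)), det

def mahalanobis_sq(x, mean, cov_inv):
    """(x - mean)^T cov_inv (x - mean) for 2-D vectors."""
    d0, d1 = x[0] - mean[0], x[1] - mean[1]
    return (d0 * (cov_inv[0][0] * d0 + cov_inv[0][1] * d1)
            + d1 * (cov_inv[1][0] * d0 + cov_inv[1][1] * d1))

def r_value(x, prior_a, mean_a, cov_a, prior_b, mean_b, cov_b):
    """2 * (ln{P_A f_A(x)} - ln{P_B f_B(x)}) for normal densities."""
    inv_a, det_a = inv2(cov_a)
    inv_b, det_b = inv2(cov_b)
    return (mahalanobis_sq(x, mean_b, inv_b) - mahalanobis_sq(x, mean_a, inv_a)
            + 2.0 * math.log(prior_a / prior_b) + math.log(det_b / det_a))

def classify(x, *model):
    """Assign A when R(x) >= 0, otherwise B."""
    return "A" if r_value(x, *model) >= 0 else "B"

# Hypothetical model: (P_A, mu_A, Sigma_A, P_B, mu_B, Sigma_B).
model = (0.5, (0.8, 0.8), ((0.01, 0.0), (0.0, 0.01)),
         0.5, (0.3, 0.3), ((0.04, 0.0), (0.0, 0.02)))

print(classify((0.75, 0.80), *model))
print(classify((0.30, 0.35), *model))
```

Because the two assumed covariance matrices differ, the quadratic terms do not cancel and the boundary R(x) = 0 is curved.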

Normal-based classifier (cont’d)

Quadratic term:

$$x^T \left( \Sigma_B^{-1} - \Sigma_A^{-1} \right) x$$

Can take any quadratic shape (ellipse/circle, hyperbola, parabola); exact shape depends on the covariance ratios

$\Sigma_A = \Sigma_B$: linear

Normal-based classifier (cont’d)

[Figure: scatter plots of two-class data, one panel with a linear decision boundary and panels with quadratic decision boundaries]

But, if the data are not normally distributed, the decision boundaries are wrong → non-parametric classifier


Histogram

Divide sample space in bins (volume V)

Estimate probability density by counting samples of learning set in bin (n)

$$\hat{f}(x) = \frac{n}{N V}$$

Easy and effective, but

Number of bins grows exponentially (with the number of features)

Need large learning set (large N)

[Figure: feature space ($x_1$, $x_2$) divided into bins, with objects of class A]
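The counting estimate can be sketched for one feature as below. The learning samples, the range [0, 1) and the number of bins are hypothetical choices for illustration.

```python
def bin_index(value, lo, hi, n_bins):
    """Index of the equal-width bin on [lo, hi) that contains value, or None if outside."""
    if not lo <= value < hi:
        return None
    return min(int((value - lo) / (hi - lo) * n_bins), n_bins - 1)

def histogram_density(samples, x, lo, hi, n_bins):
    """Estimate f(x) = n / (N V): n samples in the bin of x, N samples, bin volume V."""
    target = bin_index(x, lo, hi, n_bins)
    if target is None:
        return 0.0
    volume = (hi - lo) / n_bins
    n = sum(1 for s in samples if bin_index(s, lo, hi, n_bins) == target)
    return n / (len(samples) * volume)

# Hypothetical 1-D learning set (e.g. roundness values).
samples = [0.05, 0.15, 0.18, 0.32, 0.35, 0.38, 0.61, 0.95]

for x in (0.1, 0.33, 0.5):
    print(x, histogram_density(samples, x, 0.0, 1.0, 5))
```

With k features and the same number of bins per axis, the number of bins is `n_bins ** k`, which is why the learning set has to grow quickly with the dimension.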

Parzen estimator

Estimation $\hat{f}(x)$:

Object y close to x contributes more to $\hat{f}(x)$ than an object z further away from x

Weigh contribution of object y with kernel $K_h(x, y)$

$K_h(x, y) = 1$ for $x = y$

$K_h(x, y) \approx 0$ for $d(x, y)$ very large

$$\hat{f}(x) = \frac{1}{N} \sum_{\forall y} K_h(x, y) \qquad \text{(sum over all learning objects } y\text{)}$$

Parzen estimator (cont’d)

Estimation is sum of kernels placed at objects

$$\hat{f}(x) = \frac{1}{N} \sum_{\forall y} K_h(x, y)$$

Kernel width h: influences the smoothness of $\hat{f}(x)$

[Figure: kernels $K_h(x, y)$ placed at the objects $x_1, \ldots, x_8$ along the x axis, and their sum]
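A minimal Parzen sketch for one feature is given below. It assumes a Gaussian kernel that is normalised to unit area (rather than peak value 1) so that the estimate integrates to one; the samples and kernel widths are hypothetical.

```python
import math

def gaussian_kernel(x, y, h):
    """Unit-area 1-D Gaussian kernel of width h centred on object y."""
    z = (x - y) / h
    return math.exp(-0.5 * z * z) / (h * math.sqrt(2.0 * math.pi))

def parzen_density(x, samples, h):
    """f_hat(x) = (1/N) * sum over all learning objects y of K_h(x, y)."""
    return sum(gaussian_kernel(x, y, h) for y in samples) / len(samples)

# Hypothetical 1-D learning set with two groups of objects.
samples = [0.28, 0.30, 0.35, 0.41, 0.78, 0.80, 0.83]

for h in (0.02, 0.1, 0.5):
    curve = [round(parzen_density(i / 10, samples, h), 2) for i in range(11)]
    print(h, curve)
```

Printing the curve for several widths shows the effect of h: a small width gives a spiky estimate that follows individual objects, a large width smooths the two groups into one broad bump.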

D(x) of Parzen estimator

Parzen estimator

$$\hat{f}(x) = \frac{1}{N} \sum_{\forall y} K_h(x, y)$$

PDF can have any shape

Shape decision boundary: can be wild; depends on what?

[Figure: classes A and B in the ($x_1$, $x_2$) plane with an irregular boundary $D(x) = 0$]

D(x) of Parzen estimator (2)

Wildness:

Number of data points: more points: can use smaller kernels; fewer points: wilder shape

Smoothing parameter (h, kernel width): larger h: increasing insensitivity to the number of points

Extremes?

D(x) of Parzen estimator (3)

Two extremes:

h ↓ 0: Class of nearest object (learning set); D(x) equal to Nearest Neighbor rule

h → ∞: Class of nearest mean; D(x) linear decision boundary

[Figure: an object x assigned to B because it is closer to an object of class B (h ↓ 0), and an object x assigned to A because it is closer to $\mu_A$ (h → ∞)]

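Extremes Parzen D(x) (2)

Varying h

[Figure: error ε as a function of h, from the nearest neighbor classifier (small h) to the nearest mean (Euclidean distance) classifier (large h), with the optimal smoothing parameter in between]

Lots of articles published on how to select h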

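Naïve Bayes Classifier

Assuming independent features

$$f_A(x) = \prod_{j=1}^{d} f_A(x_j)$$

Naïve Bayes classifier

$$P_A \prod_{j=1}^{d} f_A(x_j) > P_B \prod_{j=1}^{d} f_B(x_j) \;\Rightarrow\; x \to A, \quad \text{else } x \to B$$

[Figure: feature space ($x_1$, $x_2$) with the per-feature densities]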
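As an illustration of the product rule, the sketch below models every feature with its own univariate normal density. That choice of per-feature density, and all parameter values, are assumptions made for the example. The product is evaluated in log space to avoid underflow with many features.

```python
import math

def normal_pdf(x, mean, std):
    """Univariate normal density."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def naive_bayes_score(x, prior, means, stds):
    """ln(P * prod_j f(x_j)) = ln P + sum_j ln f(x_j)."""
    return math.log(prior) + sum(
        math.log(normal_pdf(xj, m, s)) for xj, m, s in zip(x, means, stds))

def naive_bayes_classify(x, model_a, model_b):
    """Assign A when P_A prod f_A(x_j) > P_B prod f_B(x_j), otherwise B."""
    return "A" if naive_bayes_score(x, *model_a) > naive_bayes_score(x, *model_b) else "B"

# Hypothetical models: (prior, per-feature means, per-feature standard deviations)
# for the features (roundness, redness).
model_a = (0.5, (0.8, 0.8), (0.10, 0.10))   # tomato
model_b = (0.5, (0.3, 0.3), (0.10, 0.15))   # pear

print(naive_bayes_classify((0.7, 0.9), model_a, model_b))
print(naive_bayes_classify((0.35, 0.2), model_a, model_b))
```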


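Normal-based classifier

When is the shape of R(x) linear?

Equal covariance ($\Sigma_A = \Sigma_B$)

Different means ($\mu_A \ne \mu_B$)

Holds also if $\hat{\Sigma} = P_A \hat{\Sigma}_A + P_B \hat{\Sigma}_B$

[Figure: classes A and B with means $\mu_A$ and $\mu_B$, separated by the linear boundary $R(x) = 0$]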

Use of prior knowledge

Classes linearly separable

Force that $\Sigma_A = \Sigma_B$ during estimation of $f_A(x)$ and $f_B(x)$

Why?

1) Fewer coefficients to estimate (less computational load)

Rewrite as

$$R(x) = (\mu_A - \mu_B)^T \hat{\Sigma}^{-1} x + \text{Const.}$$

$$R(x) = Wx + w_0$$

Need only to estimate k coefficients instead of a k × k matrix

2) When expecting that $\Sigma_A = \Sigma_B$, use this information, since due to noise the estimates often show $\Sigma_A \ne \Sigma_B$

Hence, enforcing $\Sigma_A = \Sigma_B$ makes the decision boundary more accurate

Linear decision boundary

Assume linear decision boundary

$$D(x) = Wx + w_0 \;\to\; D(x) = Wx \text{ with } x_0 = 1$$

Find W by minimizing classification error

Each object $x^i$ has class label $L(x^i) \in \{-1, 1\}$

i.e. $D(x^i)$ should equal $L(x^i)$ for all $x^i$

Minimize total square error:

$$E = \sum_{i=1}^{N} \left( D(x^i) - L(x^i) \right)^2$$

Linear decision boundary (cont’d)

Substitute $D(x^i)$

$$E = \sum_{i=1}^{N} \left( W x^i - L(x^i) \right)^2 \;\to\; E = \| D - L \|^2 = \| (WX)^T - L \|^2$$

Optimize with respect to W

$$\frac{\partial E}{\partial W} = \sum_{i=1}^{N} 2 \left( W x^i - L(x^i) \right) x^i = 2 X \left( X^T W^T - L \right) = 0$$

Gives

$$W^T = (X X^T)^{-1} X L \;\to\; W = L^T X^T (X X^T)^{-1}$$
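The closed-form solution can be checked on a handful of objects. The sketch below solves the normal equations $(X X^T) W^T = X L$ with a small Gauss-Jordan routine instead of forming the inverse explicitly. The helper names are hypothetical, and the assignment of +1 to tomatoes and −1 to pears is an assumption.

```python
def solve(a, b):
    """Solve the linear system a w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit_least_squares(objects, labels):
    """Return W minimising sum_i (W x^i - L(x^i))^2, with x_0 = 1 prepended to each object."""
    xs = [[1.0] + list(x) for x in objects]
    k = len(xs[0])
    xxt = [[sum(x[r] * x[c] for x in xs) for c in range(k)] for r in range(k)]  # X X^T
    xl = [sum(x[r] * l for x, l in zip(xs, labels)) for r in range(k)]          # X L
    return solve(xxt, xl)

def decision(w, x):
    """D(x) = W x with x_0 = 1."""
    return sum(wj * xj for wj, xj in zip(w, [1.0] + list(x)))

# (roundness, redness) objects; labels +1 for tomato, -1 for pear (assumed convention).
objects = [(0.8, 0.9), (0.7, 0.9), (0.7, 0.8), (0.3, 0.4), (0.4, 0.2)]
labels = [1, 1, 1, -1, -1]

w = fit_least_squares(objects, labels)
for x, l in zip(objects, labels):
    print(x, l, round(decision(w, x), 3))
```

The fitted D(x) does not reproduce the labels exactly, but its sign agrees with the label for every object in this small set.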

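Linear decision boundary (cont’d)

Decision function becomes

$$D(x) = Wx = L^T X^T (X X^T)^{-1} x$$

Covariance and mean definitions (labels +1 for class A and −1 for class B; for zero-mean data $X X^T$ equals the covariance up to a scale factor)

$$X X^T \propto \Sigma, \qquad (XL)^T = (N_A \mu_A - N_B \mu_B)^T$$

Substitute covariance and mean

$$D(x) = Wx \propto (N_A \mu_A - N_B \mu_B)^T \Sigma^{-1} x$$

Resembling linear normal-based classifier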

Non-linear decision

Linear decision function

$$D(x) = Wx = \sum_{j=1}^{d} w_j x_j$$

Apply non-linear activation (desirable properties)

$$D(x) = f\left( \sum_{j=1}^{d} w_j x_j \right), \qquad f(y, T) = \tanh\left( \frac{y}{T} \right)$$

[Figure: plot of $\tanh(y/T)$, running from −1 to 1]
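A single unit with the tanh activation can be sketched as follows. The weights and inputs are hypothetical; the first input component plays the role of $x_0 = 1$.

```python
import math

def perceptron_output(x, weights, temperature=1.0):
    """D(x) = tanh((sum_j w_j x_j) / T)."""
    y = sum(w * xj for w, xj in zip(weights, x))
    return math.tanh(y / temperature)

# Hypothetical weights (bias, roundness, redness) and two example inputs.
weights = (-2.4, 2.6, 1.7)
tomato_like = (1.0, 0.8, 0.9)
pear_like = (1.0, 0.3, 0.4)

for temperature in (5.0, 1.0, 0.5):
    print(temperature,
          round(perceptron_output(tomato_like, weights, temperature), 3),
          round(perceptron_output(pear_like, weights, temperature), 3))
```

The output stays between −1 and 1, its sign matches the sign of the linear function, and a smaller T pushes it closer to a hard ±1 decision.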

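Perceptron

Perceptron: Non-linear decision function

[Figure: inputs $x_1^i, x_2^i, \ldots, x_d^i$ feeding a single unit with output $D(x^i)$]

Neural Net: Layered perceptrons

[Figure: inputs $x_1^i, x_2^i, \ldots, x_d^i$ feeding several layers of perceptrons]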

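Fisher Linear Discriminant

When are classes well separable?

1) Means far apart: $\max\{ (\mu_A - \mu_B)^2 \}$

2) But, with respect to the spread of the classes

Fisher criterion

$$\max\left\{ \frac{(\mu_A - \mu_B)^2}{\sigma_A^2 + \sigma_B^2} \right\}$$

[Figure: densities $f_A(x)$ and $f_B(x)$ with means $\mu_A$, $\mu_B$, spreads $\sigma_A$, $\sigma_B$ (and a wider $\sigma_B'$), and the boundary $S(x) = 0$]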

Fisher’s criterion

Maximize between versus within scatter

$$\frac{(\mu_A - \mu_B)^2}{\sigma_A^2 + \sigma_B^2} = \frac{\text{between scatter}}{\text{within scatter}}$$

Fisher direction: direction in which the Fisher criterion is maximal

[Figure: classes A and B in the ($x_1$, $x_2$) plane with means $\mu_A$ and $\mu_B$, the boundary $S_f(x)$ belonging to the Fisher direction, and an alternative boundary $S'(x)$]

Fisher’s decision boundary

Maximizing Fisher criterion:

$$D_f(x) = (\mu_A - \mu_B)^T \Sigma^{-1} x + \text{Const.}$$

$$D_f(x) = Wx + w_0$$

Note! Exactly same direction as the linear Bayes classifier

Difference with linear Bayes classifier:

Fisher: No assumption on normal densities (just class separability)

Fisher: No error minimization but maximization of the Fisher criterion
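The Fisher direction can be computed from sample means and scatter matrices. The sketch below does this for two features, using the sum of the two class scatter matrices as the within-class scatter in place of Σ (the two differ only by a scale factor, which does not change the direction). The data are hypothetical.

```python
def mean2(points):
    """Mean of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def scatter2(points, mean):
    """Sum of outer products (x - mean)(x - mean)^T as a 2x2 nested list."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = (p[0] - mean[0], p[1] - mean[1])
        for r in range(2):
            for c in range(2):
                s[r][c] += d[r] * d[c]
    return s

def fisher_direction(class_a, class_b):
    """W = S_w^{-1} (mu_A - mu_B), with S_w the within-class scatter."""
    mu_a, mu_b = mean2(class_a), mean2(class_b)
    sa, sb = scatter2(class_a, mu_a), scatter2(class_b, mu_b)
    sw = [[sa[r][c] + sb[r][c] for c in range(2)] for r in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    d = (mu_a[0] - mu_b[0], mu_a[1] - mu_b[1])
    return ((sw[1][1] * d[0] - sw[0][1] * d[1]) / det,
            (-sw[1][0] * d[0] + sw[0][0] * d[1]) / det)

def project(w, x):
    """Projection W x of an object onto the Fisher direction."""
    return w[0] * x[0] + w[1] * x[1]

# Hypothetical (roundness, redness) learning sets.
class_a = [(0.8, 0.9), (0.7, 0.9), (0.7, 0.8), (0.9, 0.7)]
class_b = [(0.3, 0.4), (0.4, 0.2), (0.2, 0.3), (0.1, 0.2)]

w = fisher_direction(class_a, class_b)
print([round(project(w, x), 2) for x in class_a])
print([round(project(w, x), 2) for x in class_b])
```

A threshold $w_0$ between the two groups of projected values then gives the decision function $D_f(x) = Wx + w_0$.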

Linear classifier

Derived from:

Bayes classifier + assumption of normal-based densities + equal covariance matrices

Perceptron

Fisher classifier


Nearest neighbor classifier

Classify an unknown object to the label of the nearest learning object

[Figure: feature space (roundness vs. redness) with learning objects labeled A and B]

NN classifier (cont’d)

Shape decision boundary NN classifier:

Piecewise linear

Can have any shape

[Figure: objects of class A and class B in the ($x_1$, $x_2$) plane separated by a piecewise linear contour]

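NN classifier (cont’d)

Outliers have a lot of influence!

Solution?

[Figure: ($x_1$, $x_2$) plane with regions $\lambda_A$ and $\lambda_B$; an outlier creates a separate $\lambda_B$ region]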

k-NN classifier

Consider k nearest neighbors instead of one (1-NN)

Classify using majority rule of k neighboring classes

The larger k, the smaller the probability that outliers influence the decision

[Figure: objects of class A and class B in the ($x_1$, $x_2$) plane with the resulting regions $\lambda_A$ and $\lambda_B$]
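The majority rule can be sketched in a few lines. The learning set below is hypothetical and contains one pear-labelled outlier inside the tomato cloud, to show how k changes the outcome; squared Euclidean distance is assumed as the distance measure.

```python
from collections import Counter

def knn_classify(x, learning_set, k):
    """Majority vote among the k learning objects nearest to x.

    learning_set is a list of (feature_vector, label) pairs.
    """
    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    nearest = sorted(learning_set, key=lambda item: sq_dist(x, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical (roundness, redness) learning set; the last object is an outlier.
learning_set = [
    ((0.8, 0.9), "A"), ((0.7, 0.9), "A"), ((0.7, 0.8), "A"),
    ((0.3, 0.4), "B"), ((0.4, 0.2), "B"), ((0.2, 0.3), "B"),
    ((0.75, 0.85), "B"),
]

unknown = (0.76, 0.86)
print(knn_classify(unknown, learning_set, k=1))
print(knn_classify(unknown, learning_set, k=3))
```

With k = 1 the outlier decides the label; with k = 3 the two neighboring tomatoes outvote it.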

k-NN : Error analysis

K=1: 1-NN becomes proportional classifier

K=N: Choose class with highest probability

$$\lim_{k \to N} \varepsilon_{k\text{-NN}} = \min(P_A, P_B)$$

K→∞, N→∞ with k/N → 0: Choose class with highest density

Error approaches Bayes error

$$\lim_{k \to \infty,\; N \to \infty,\; k/N \to 0} \varepsilon_{k\text{-NN}} = \varepsilon^*$$

Proportional classifier

Bayes classifier

Assign to class A OR B (0/1)

But, $f_A(x)$ and $f_B(x)$ may both be greater than zero

Densities of A and B AFTER classification different than in learning set

Proportional classifier

Class assignment based on random experiment

Probabilities in the same ratio as the local densities $f_A(x)$ and $f_B(x)$

Proportional classifier (cont’d)

Not largest but random experiment!

$x \to A$ with probability $P(A \mid x) = q_A(x)$

$x \to B$ with probability $P(B \mid x) = q_B(x)$

$$P(A \mid x) = q_A(x) = \frac{P_A f_A(x)}{f(x)}$$

Probability density function after classification similar as before classification

[Figure: $q_A(x)$ and $q_B(x)$ for classes A and B; where the curves cross, as many objects are assigned to class B as to class A]

Error proportional classifier

Definition

$$\varepsilon(x) = P(x \to A, x \in B) + P(x \to B, x \in A)$$

Assignment by random experiment: $q_A$, $q_B$

Note! No relation with assignment (random experiment): assignment independent from true label

$$\varepsilon(x) = P(x \to A) P(x \in B) + P(x \to B) P(x \in A)$$

Classifier designed such that:

$$P(x \in A \mid x) = P(x \to A \mid x) = q_A$$

$$\varepsilon(x) = q_A(x) q_B(x) + q_B(x) q_A(x) = 2 q_A(x) q_B(x)$$

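Bayes error revisited

Bayes error (integrating over lowest probability density):

$$\varepsilon^* = \int \min\{ P_A f_A(x), P_B f_B(x) \}\,dx$$

Express proportional classifier in Bayes error

$$\varepsilon^* = \int \frac{\min\{ P_A f_A(x), P_B f_B(x) \}}{f(x)}\, f(x)\,dx$$

[Figure: weighted densities $f_A(x) P_A$ and $f_B(x) P_B$ with the boundaries $S^*(x)$ and $S(x)$ and the area $\varepsilon^*$]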

Bayes error revisited (2)

$$\varepsilon^* = \int \min\{ P_A f_A(x), P_B f_B(x) \}\,dx = \int \frac{\min\{ P_A f_A(x), P_B f_B(x) \}}{f(x)}\, f(x)\,dx$$

With $q_A(x) = \dfrac{P_A f_A(x)}{f(x)}$:

$$\varepsilon^* = \int \min\{ q_A(x), q_B(x) \}\, f(x)\,dx$$

$\varepsilon^*(x)$: smallest error that is being made for a given x

$$\varepsilon^* = \int \varepsilon^*(x) f(x)\,dx = E[\varepsilon^*(x)]$$

Error proportional classifier

Expected error in x

$$\varepsilon(x) = 2 q_A(x) q_B(x) = 2 \min\{ q_A(x), q_B(x) \} \max\{ q_A(x), q_B(x) \}$$

With $\varepsilon^*(x)$ defined as $\min\{ q_A(x), q_B(x) \}$ and $\max\{\ldots\} = 1 - \min\{\ldots\}$:

$$\varepsilon(x) = 2 \varepsilon^*(x) (1 - \varepsilon^*(x)) = 2 \varepsilon^*(x) - 2 \{\varepsilon^*(x)\}^2$$

$$\varepsilon_{prop} = E[\varepsilon(x)] = 2 E[\varepsilon^*(x)] - 2 E[\varepsilon^*(x)^2]$$

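Error proportional classifier

$$\varepsilon_{prop} = E[\varepsilon(x)] = 2 E[\varepsilon^*(x)] - 2 E[\varepsilon^*(x)^2]$$

The first term is twice the Bayes error: $2 E[\varepsilon^*(x)] = 2\varepsilon^*$

$$\mathrm{var}(y) = E[y^2] - E[y]^2 \ge 0 \;\Rightarrow\; E[y^2] \ge E[y]^2$$

$$E[\varepsilon^*(x)^2] \ge E[\varepsilon^*(x)]^2 = \varepsilon^{*2}$$

$$\varepsilon_{prop} \le 2\varepsilon^* - 2\varepsilon^{*2} = 2\varepsilon^* (1 - \varepsilon^*)$$

$$\varepsilon_{prop} = E[\varepsilon(x)] \le 2\varepsilon^*$$

Positive! (something is subtracted from $2\varepsilon^*$)

Worst case: proportional classifier 2 times as bad as Bayes classifier (never smaller than $\varepsilon^*$!)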
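The relation between the Bayes error and the error of the proportional classifier can be checked numerically. The sketch below assumes two univariate normal classes with hypothetical parameters and integrates $\min\{P_A f_A, P_B f_B\}$ and $2 q_A q_B f$ with the midpoint rule.

```python
import math

def normal_pdf(x, mean, std):
    """Univariate normal density."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def errors(prior_a, mean_a, std_a, prior_b, mean_b, std_b,
           lo=-10.0, hi=10.0, steps=20000):
    """Return (Bayes error, proportional-classifier error) by midpoint integration."""
    dx = (hi - lo) / steps
    bayes = prop = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * dx
        wa = prior_a * normal_pdf(x, mean_a, std_a)   # P_A f_A(x)
        wb = prior_b * normal_pdf(x, mean_b, std_b)   # P_B f_B(x)
        f = wa + wb
        if f == 0.0:
            continue
        bayes += min(wa, wb) * dx                     # integral of min{P_A f_A, P_B f_B}
        prop += 2.0 * (wa / f) * (wb / f) * f * dx    # E[2 q_A(x) q_B(x)]
    return bayes, prop

# Hypothetical classes: unit-variance normals centred at +1 and -1, equal priors.
bayes, prop = errors(0.5, 1.0, 1.0, 0.5, -1.0, 1.0)
print(round(bayes, 4), round(prop, 4), round(2 * bayes * (1 - bayes), 4))
```

For these assumed densities the proportional error lies between $\varepsilon^*$ and $2\varepsilon^*(1 - \varepsilon^*)$, as the derivation predicts.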

1-NN : Error analysis

1-NN: Assignment to class of nearest object

Probability of being assigned to a certain class depends on the number of samples

In limiting case equal to proportional classifier

1-NN → proportional classifier for n → ∞

[Figure: weighted densities $P_A f_A(x)$ and $P_B f_B(x)$ for classes A and B, with the region assigned to class A]

1-NN : Error analysis (cont’d)

For n → ∞:

$$\varepsilon^* \le \varepsilon_{NN} \le 2\varepsilon^* - 2\varepsilon^{*2}$$

If n < ∞, what can we say about $\varepsilon_{NN}$?

Nothing! Namely, if there are 2 samples:

Each sample can lie exactly on its class center ⇒ perfect decision boundary

or it can lead to the worst decision boundary (opposite D(x)), giving the worst error:

$$\varepsilon^* \le \varepsilon_{NN} \le 1 - \varepsilon^*$$

On average (2 samples are only one draw), however, the rule is good

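k-NN : Error analysis

Choice of k

k → 1: NN character of proportional classifier ($\varepsilon_{1\text{-NN}} \to 2\varepsilon^*$)

k → N: classification according to ratio of the classes’ a priori probabilities: $\lim_{k \to N} \varepsilon_{k\text{-NN}} = \min(P_A, P_B)$

$k \approx \sqrt{N}$: appears to be a good rule of thumb

[Figure: $\varepsilon_{k\text{-NN}}$ as a function of k from 1 to N, starting near $2\varepsilon^*$, passing through an optimum, and ending at $\min(P_A, P_B)$ (classification according to a priori probabilities)]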

k-NN : Error analysis (cont’d)

Practice

Locally noisy → increasing k by one can have a large influence on ε

[Figure: measured $\varepsilon_{k\text{-NN}}$ as a function of k, starting at k = 1]

k-NN : Adaptations (cont’d)

Alternative:

Weight k nearest neighbors w.r.t. the distance

Leads to Parzen density estimator (kernels)

[Figure: objects of class A and class B in the ($x_1$, $x_2$) plane with regions $\lambda_A$ and $\lambda_B$]

k-NN : Adaptations (cont’d)

Disadvantages:

Outliers: cause segments → edit learning set (Editing: decreasing ε)

Computationally expensive (during classification): calculate distance to ALL learning samples

But, not necessary: remember only those samples that are in the vicinity of the decision boundary (Condensing: decreasing computational load)

k-NN: Editing

Procedure

1) Divide learning set into subsets

2) Select a learning and test set

3) Remove falsely classified samples (test set) from learning set (don’t use them anymore)

4) Repeat until all objects are classified correctly

No guarantees; only for a large learning set does it approach the Bayes decision boundary

k-NN: Condensing

Procedure

1) Choose arbitrary learning sample

2) Classify sample according to the other learning samples

3) If sample is falsely classified then add to new learning set

4) Repeat until no errors appear
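One way to turn the condensing procedure into code is sketched below. It is an interpretation, not the slides' exact algorithm: each sample is classified with the 1-NN rule against the new (condensed) learning set built so far, in the style of Hart's condensed nearest neighbor rule, and the first sample is used as the arbitrary starting point. The data and function names are hypothetical.

```python
def nearest_label(x, store):
    """Label of the object in store nearest to x (1-NN rule, squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return min(store, key=lambda item: sq_dist(x, item[0]))[1]

def condense(learning_set):
    """Build a new learning set from the samples that the set so far misclassifies."""
    store = [learning_set[0]]          # arbitrary starting sample
    changed = True
    while changed:                     # repeat until a full pass adds nothing
        changed = False
        for sample in learning_set:
            if sample in store:
                continue
            if nearest_label(sample[0], store) != sample[1]:
                store.append(sample)   # falsely classified: add to new learning set
                changed = True
    return store

# Hypothetical (roundness, redness) learning set.
learning_set = [
    ((0.8, 0.9), "A"), ((0.7, 0.9), "A"), ((0.7, 0.8), "A"), ((0.9, 0.7), "A"),
    ((0.3, 0.4), "B"), ((0.4, 0.2), "B"), ((0.2, 0.3), "B"), ((0.1, 0.2), "B"),
]

condensed = condense(learning_set)
print(len(learning_set), "->", len(condensed))
print(condensed)
```

The condensed set still classifies every original learning sample correctly with the 1-NN rule, while fewer distances have to be computed at classification time.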

Example Condensing

[Figure: learning set, original and after editing and condensing]

Summary

By statistical modeling (Bayesian decision making):

Optimal Bayes classifier

Parameterized densities: Normal-based classifier

Non-parameterized densities: Histogram, Parzen, Naïve Bayes

By decision boundaries:

Normal-based classifier (equal covariance)

Linear decision boundary, perceptron

Fisher linear classifier

By prototypes:

K-nearest neighbor classifier