Does Cumulative Advantage Increase Inequality in the Distribution of

advertisement

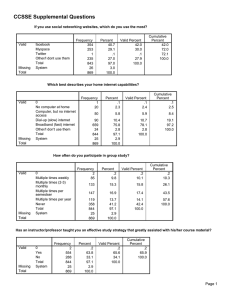

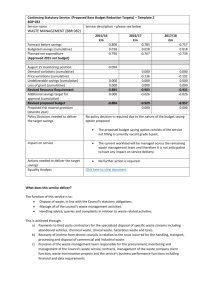

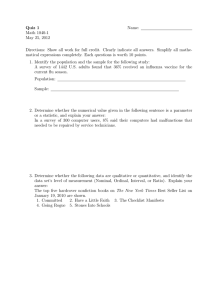

Does Cumulative Advantage Increase Inequality in the Distribution of Success? Evidence from a Crowdfunding Experiment ∗ Rembrand Koning Stanford GSB Jacob Model Stanford GSB September 29, 2015 Abstract The diffusion of online marketplaces has increased our access to “social information” – records of past behavior and opinions of consumers. One concern with this development is that social information may create cumulative advantage dynamics that distort marketplaces by increasing inequality in the distribution of success. Critically, this argument assumes that products that are likely to succeed disproportionately benefit from social information. We challenge this assumption and argue that cumulative advantage processes can aggregate to have nearly any effect on distribution of success, even decreasing the level of inequality in some cases. We assess these claims using archival data and a field experiment in a crowdfunding marketplace. Consistent with prior work, randomized changes to social information generate cumulative advantage. However, our treatments did not change the distribution of success. Products benefited equally from our treatments regardless of their predicted likelihood of success. Our treatments still affected marketplace dynamics by weakening the relationship between predicted and achieved success. ∗ Both authors contributed equally, order is alphabetical. Special thanks to DonorsChoose for making this research possible. This work has benefited from feedback provided by participants at CAOSS, AOM, and ASA. Generous funding was provided by Stanford’s Center on Philanthropy and Civil Society and Center for Social Innovation. 1 1 Introduction One of the seminal insights of social science is that social information, past behavior and opinions of others, powerfully shapes our attitudes, beliefs and actions. The rise of online platforms has made social information in the form of popularity, ratings and reviews increasingly accessible. However, concomitant with this profusion of social information, there is a growing controversy as to whether it facilitates or distorts marketplaces (Zuckerman, 2012; Muchnik, Aral and Taylor, 2013). On the one hand, such information may provide informative signals about difficult to observe aspects of products and services (Zhang and Liu, 2012). On the other hand, the use of social information is subject to many well-documented biases that may undermine or even pervert its potential signaling value (Cialdini, 1993; Simonsohn and Ariely, 2008). Small and arbitrary initial differences in social information may lead to large differences in success due to self-reinforcing “cumulative advantage” dynamics (Banerjee, 1992; DiPrete and Eirich, 2006). Indeed, a large body of archival (Simonsohn and Ariely, 2008; Chen, Wang and Xie, 2011; Burtch, Ghose and Wattal, 2013) and experimental (Salganik, Dodds and Watts, 2006; Tucker and Zhang, 2011; Muchnik, Aral and Taylor, 2013; van de Rijt et al., 2014) research has found evidence that changes in social information lead to cumulative advantage processes. Scholars have used this evidence to argue that social information in marketplaces inherently magnifies inequality in the distribution of success (Salganik, Dodds and Watts, 2006; Tucker and Zhang, 2011; Muchnik, Aral and Taylor, 2013; van de Rijt et al., 2014). Prior to any differences in social information, products in a marketplace already differ in their expected success. For instance, we take for granted that some products auctioned on eBay are more or less likely to reach their reserve price based on factors such as appearance and the reputation of the seller. Past research has largely sidestepped these incoming differences by employing sophisticated statistical controls or using randomization to account for pre-existing differences (cf. Salganik, Dodds and 2 Watts, 2006). However, in order to understand how social information changes the distribution of success in marketplaces, we need to know how incoming differences in expected success interact with changes in social information. Consider the case of two new books being sold in an online marketplace such as Amazon. One that is from a first-time author and one that is from a well-known author. A positive change in social information to both books, such as a good review, will create a wider gap in sales if the well-known author benefits more from the review. Alternatively, the same good review may plausibly benefit the first-time author more than a well-known author as the latter already has some established reputation and audience (Kovács and Sharkey, 2014). In this case, the change in social information will reduce the gap in success between these two books. This example illustrates how the direction of the effect of cumulative advantage on inequality is not obvious (Allison, Long and Krauze, 1982). Without an understanding of how cumulative advantage processes interact with a product’s expected success, we can say little about the effects of social information on inequality of success in a marketplace. In this paper, we investigate how changes to social information interact with a product’s expected success and explore the implications of these interactions for the distribution of success in online marketplaces. Specifically, we couple archival data from a online fundraising marketplace with an field experiment to examine the interaction between a product’s expected success and cumulative advantage processes. The marketplace, DonorsChoose, is a two-sided marketplace that helps public school teachers find donors willing to fund school supplies. DonorsChoose facilitates this by enabling teachers to post fundraising appeals (called “projects”) and by providing modern search tools to donors to find projects that appeal to them. One significant challenge is that the expected success of a product is typically not directly observable in field settings. We build on the approach taken in Salganik, Dodds and Watts’s (2006) seminal study of online culture markets in which Salganik 3 and colleagues created multiple online markets with the identical set of products. The authors experimentally manipulated the presence or absence of social information (in their context, popularity) and tracked the success of products in the marketplace. In their design, the success of products in marketplaces without social information served as a direct measure of expected success in the absence of social information. Although we cannot observe the same DonorsChoose project in multiple conditions, we take a conceptually similar approach to Salganik and colleagues by creating a counterfactual measure of expected success for projects that received our experimental treatments. We estimate expected success by generating predictions for each project using a Random Forest algorithm trained on the characteristics and fundraising outcomes of over 400 thousand past projects. With this measure of expected success, we then randomly contributed $5 or $40 to 320 projects and tracked their progress towards their fundraising goals. This randomization enables us to test whether exogenous changes in social information lead to cumulative advantage dynamics. Critically, we test whether these dynamics disproportionately benefit projects that were (un)likely to succeed by interacting our randomized treatments with our measure of expected success. This approach enables us to assess how arbitrary changes to social information change the distribution of funding outcomes. Consistent with prior work on social information in online marketplaces, we find that our randomly assigned contributions result in cumulative advantages that increase the probability that a project reaches its funding goal. Surprisingly, this effect is remarkable homogeneous across projects with different levels of predicted success; projects with characteristics that made them very likely to succeed benefited just as much as projects with characteristics that made them unlikely to succeed. In this way, our intervention introduced more noise into the fundraising process by reducing the correlation between a project’s expected and realized success. The utility of this noise depends on the goals of the marketplace. Increasing the unpredictability of success 4 reduces the chance that projects with the most popular characteristics succeed. However, a little noise also creates greater opportunities for novel, innovative and risky projects to reach their funding goals. 2 Social Influence, Cumulative Advantage and Inequality Cumulative advantage processes are considered a key driver of inequalities in society and markets (DiPrete and Eirich, 2006). Initial advantages become magnified through self-reinforcing dynamics resulting in a distribution of success that is highly unequal. Scholars have suggested two mechanisms that produce cumulative advantage processes: resource accumulation and information cascades. In the first case, small initial differences in the distribution of resources become magnified as those with initial resource advantages can channel these into additional resources and skills (Merton, 1968). An example of this is Merton’s pioneering work on cumulative advantage in academic research. This work takes a broad view of resources to include status garnered through awards. Award winners leverage their increased status to get additional funding for their work and so attract more talented students and collaborators (Merton, 1968). In this sense, Merton’s mechanism is one of resource accumulation —award winners can use their initial advantage to improve the quality of their work. Even if the initial allocation of the award were arbitrary, the emergent performance differences are merited. An alternative mechanism is information cascades (Banerjee, 1992). In these models, the assumption is that the quality of a product or service is difficult to observe directly. Consumers must rely on other observable signals such as social information that have some correlation, however weak, with quality (Podolny, 2005). For products and services, common examples of social information include information on popular- 5 ity, endorsements or reviews. This information tends to be self-reinforcing; a marginal increase in the popularity or number of endorsements a product receives makes it more attractive to subsequent consumers and reviewers. The core difference between Merton’s cumulative advantage mechanism and the information cascade model is that in the latter, success can become decoupled from a product’s underlying quality. The primary concern regarding cumulative advantage in Merton’s example of resource accumulation is that arbitrary and negligible initial differences allow certain scientists to receive a disproportionate amount of success. This resulting inequality reflects real differences in quality. That said, the arbitrary processes that lead to the inequality may be deemed unfair. The concern of information cascades scholars is quite different; their concern is that the resulting inequality in success may be largely uncorrelated with the underlying features and quality of a product or service. The expanding role of social information in our everyday lives has increased concerns over the decoupling of social information from underlying quality (Muchnik, Aral and Taylor, 2013; Colombo, Franzoni and Rossi-Lamastra, 2014). For example, business owners can manipulate their online reputations by purchasing and then reviewing their own products, or more nefariously, by paying others to give perfect reviews. This is a commonplace activity; one scholar estimated that upwards of one-third of online reviews are fake.1 Even if they are eventually identified and removed, fake reviews or ratings may influence other consumers, leading them to alter their ratings, reviewing or purchasing behavior. Once a process of cumulative advantage begins, it may be difficult to correct. Even beyond outright fraud, natural variation in marketplace activity may generate cumulative advantage processes. For example, differences in the timing of initial endorsements or purchases, which may be effectively random, could lead to cumulative advantages. These sources of advantage may be even more difficult to address than outright fraud. Ideally, outcomes in marketplaces would be relatively 1 http://www.nytimes.com/2012/08/26/business/book-reviewers-for-hire-meet-a-demand-for-onlineraves.html 6 robust to these perturbations. However, research suggests that some marketplaces may not be especially robust to these effects. In their pioneering studies of online culture markets, Salganik and colleagues examined how such arbitrary differences in social information change a product’s success in a simplified online marketplace created by the researchers (Salganik, Dodds and Watts, 2006; Salganik and Watts, 2008). They randomly assigned participants into marketplaces that either displayed the popularity of a product —in their setting, the number of downloads of a song— or suppressed this information. Product success, as measured by the number of downloads of a song, was more variable in marketplaces with popularity information than those without it. In the popularity conditions, the songs that arbitrarily received a few initial downloads achieved greater market share than the same song in a marketplace without social information. This study demonstrates that markets with social information create cumulative advantages through endogenous processes that disproportionately benefit the most appealing songs. In turn, this increases inequality within the marketplace. In a follow-up study, Salganik and colleagues also show that these endogenous processes are not destiny. In the same marketplace, they directly manipulated the popularity of songs by reversing the endogenous ranking of songs (Salganik and Watts, 2008). The impact of this manipulation of social information varied across products within the same marketplace, as measured by their success in marketplaces without social information. It strongly benefited the songs with the lowest levels of expected success. In contrast, songs with the highest levels of expected success did regain position in the download rankings but only after hundreds of subsequent downloads. The combination of these effects implies that in this second experiment, the social influence manipulation (i.e., the ranking inversion) likely reduced the inequality in popularity. In sum, the results of Salgnik and colleagues’ studies suggest that social information can generate cumulative advantage processes that have the potential to magnify or 7 reduce inequality in success, even within the same marketplace. A flourishing body of research has extended this work by examining whether changes to social information produce cumulative advantage effects in field settings. For example, Zhang and Liu (2012) found evidence in an observational study of a peer-to-peer lending platforms that changes in social information lead to cumulative advantage effects. However, it is difficult to casually identify social influence effects with observation data. Changes to social information are usually endogenous to a product’s expected success; people tend to consume, review or rate products that are likely to be appealing and succeed in the marketplace. The combination of the difficulty of measuring a product’s appeal coupled with selection processes makes it extremely challenging to determine how much success is due to changes in social information. Several recent studies have adopted a field experiment approach and to address these concerns by randomizing changes to social information. This research stream has largely reached similar conclusions (cf. Burtch, Ghose and Wattal, 2013) with studies finding that changes to social information through exogenous positive endorsements or contributions leads to cumulative advantage in a wide variety of settings including wedding services marketplaces (Tucker and Zhang, 2011), online social news aggregators (Muchnik, Aral and Taylor, 2013), online petitions, online reviews, and crowdfunding platforms (van de Rijt et al., 2014). Therefore, we expect as our baseline hypothesis: Hypothesis 1: Exogenous endorsements of or contributions to products produce cumulative advantage effects If changes to social information produce cumulative advantage, what are the implications for the distribution of success in markets? Scholars have tended to erroneously equate evidence of cumulative advantage with evidence for increased inequality of success (van de Rijt et al., 2014). However, the relationship is not deterministic because changes in the inequality of success implies that success of products relative to one 8 another—not just the absolute levels of success of a given product—change. The research design of randomly assigning changes to social information in a market only allows a researcher to estimate changes to absolute levels of success. It does not allow a researcher to draw conclusions on how these effects shape the distribution of success across products. Consider the simplest case where the cumulative advantage effects are identical across all products (e.g., a 10% increase in success rates). This produces a general shift in absolute success rates but relative success is unchanged. However, when cumulative advantage effects systematically vary across different types of products, the implications are more complex. If products with high levels of expected success benefit more from changes to social information than products with low levels of expected success, this would increase the inequality of success. Conversely, inequality in the distribution of success may decrease if products with low levels of expected success benefit more from changes to social information than products with high levels of expected success. Of course more complex relationships are possible, such as products with high and low levels of expected success benefiting more than those with average levels of expected success. Overall, these cases illustrate how cumulative advantage effects may aggregate to have nearly any effect—or no effect at all—on the level of inequality in the distribution of success. To illustrate this more concretely, imagine two musicians who are trying to raise similar amounts of money to produce a new album. These musicians decide to list their fundraising efforts on a crowdfunding platform, a popular type of online marketplace. These platforms aggregate individual contributions towards a publicly stated fundraising goal. They also commonly feature social information, typically the number and size of prior contributions. The first musician is an established one and is likely to reach her fundraising goal because she can rely on her established fan base for support. The second musician is an inexperienced musician and is less likely to reach his goal. 9 Imagine that each one of them randomly receives an initial contribution of $50. The question for scholars of cumulative advantage is which album benefits more from this contribution? One possibility suggested by the cumulative advantage literature is a “successbreeds-success” dynamic where the experienced musician disproportionately benefits. Potential contributors may prefer--perhaps because they are risk averse—to fund an album that they perceive as very likely to succeed. In this case, the first contribution will lead to stronger cumulative advantage effects for the established musician that, in turn, will create a wider gap in fundraising success than one would expect without this contribution. An alternative possibility suggested by the research on status and market uncertainty is that the inexperienced musician may disproportionately benefit from the contribution. The rationale is that it is likely that potential contributors have more uncertainty regarding the success of the inexperienced musician relative to the established musician (Podolny, 2005; Azoulay, Stuart and Wang, 2013). If the contribution decreases the level of uncertainty regarding the quality of the inexperienced musician’s fundraising appeal more than the experienced musician, then the contribution would disproportionately help the inexperienced musician. This mechanism would suggest that the first contribution would reduce the gap in realized success relative to expected success. These mechanisms are meant to be illustrative rather than exhaustive. Either one or even a combination of each, is plausible. Moreover, the functional form of the interaction of expected success and the $50 contribution may change the implications for inequality as well. For instance, there could be ceiling or floor effects. If an extremely well-known musician like Paul McCartney were to try to fundraise for an album on a crowdfunding platform, an initial contribution would likely have little effect on the distribution of success. Understanding these considerations is important, but the focus of our study is not to quantify the size or form of these mechanisms. Instead, 10 we are concerned with how the effects produced by changes in social information, regardless of the mechanism, aggregate to affect the distribution of success. While we shown that cumulative advantage may affect the distribution of success in myriad of ways, prior work has suggested that cumulative advantage processes tend to increase inequality in the distribution of success (Salganik, Dodds and Watts, 2006; Tucker and Zhang, 2011; Muchnik, Aral and Taylor, 2013; van de Rijt et al., 2014). Therefore, we expect: Hypothesis 2: Exogenous endorsements of or contributions to products benefit products with high levels of expected success more than products with low levels of expected success We explicitly examine whether cumulative advantage leads to increased inequality by testing if social information has differential benefits across products and services with different levels of expected success. In order to map differential benefits from cumulative advantage to changes in inequality in success, one needs a counterfactual estimate of expected success. Following Salganik and colleagues, we propose that a product’s expected level of success without an exogenous change to social information provides a reasonable way to create a counterfactual (Salganik, Dodds and Watts, 2006). This counterfactual enables us overcome two measurement issues. First, it provides a basis for measuring cumulative advantage. Deviation from expected success can be used to quantify cumulative advantage effects. Second, it enables one to locate products on the distribution of expected success prior to being affected by social information. This placement enables us to draw conclusions on how a change in social information may affect the shape of the distribution of success. In the following section, we describe the philanthropic marketplace we use in order to demonstrate our methodology. 11 3 Study Context Our study takes place on one of the largest and oldest crowdfunding platforms: www.donorschoose.org (referred to as DonorsChoose henceforth). Since its founding in 2003, DonorsChoose has raised over $300 million dollars for classrooms by acting as an online marketplace that connects teachers in need of school supplies with donors interested in supporting public education. Teachers list a project in the marketplace by completing a standardized template which includes the supplies they want for their classroom, the total cost of the supplies and a brief description of how the supplies will be used. The projects range from basic classroom supplies such as textbooks to more novel requests such as educational technology. The majority of funding requests range from a few hundred up to one thousand dollars. Potential donors can search through tens of thousands of projects using standard internet searching features such as keywords and facets. The barriers to entry for support are extremely low: one can contribute little as one dollar. Figure 1 presents a screenshot taken at the time of our experiment of a randomly selected project’s website. DonorsChoose provides very detailed information, most of which they verify through third-party sources. This information includes time-invariant details like the shipping costs, the level of poverty of the school and a text description of the project created by a teacher. It also includes dynamically updated social information such as the number of previous donations, the total amount raised so far and text messages of support from prior donors. When making a donation, donors can either reveal their identity or make an anonymous donation. They are also prompted to write a message for other potential donors to view, though this step is optional. Immediately after a donation, the project page is updated to reflect the donated amount, number of past donors and any new messages of support written by the donor. DonorsChoose operates in a similar manner to other prominent crowdfunding sites such as Kickstarter or IndieGoGo. Projects are successful if they achieve their funding 12 goal. When a project reaches its goal, DonorsChoose ships the supplies directly to the school to be used. Projects cannot receive donations above their fundraising goal and are immediately closed to donations once it reaches its goal. If the project fails to meet its funding goal after five months of listing, DonorsChoose removes the project from the website and donors are refunded the amount they donated to use towards other projects in the marketplace. This marketplace, which over 200,000 teachers and two million donors have participated in, is a prototypical example of a philanthropic crowdfunding platform (Agrawal, Catalini and Goldfarb, 2011; Burtch, Ghose and Wattal, 2013; Meer, 2014). We selected a philanthropic crowdfunding platform for several reasons. First, there are clear metrics of success on the platform: whether a product is funded and how quickly it reaches its fundraising goal. Second, there is variation in outcomes and expected levels of success. Roughly two-thirds of projects are funded in the marketplace and this varies greatly based on geography, subject matter and other project characteristics. Third, social information is very prominent in this marketplace. Information on the number of donors and a progress bar, which displays how much money has already been contributed, is displayed at the top of a project’s website (see Figure 1). Donors to a project also have the option of writing short messages that are listed on the project page. Overall, the platform is designed to communicate social information to inform the decisions of potential donors. Fourth, philanthropic crowdfunding is a place where social information should matter because “quality” of charitable causes is often difficult to directly observe (Hansmann, 1987). In particular, on DonorsChoose the beneficiaries of the donations, the teacher and the students, are typically distinct groups from the donors.2 This makes it difficult to assess the potential use of the pedagogical resources in the classroom 2 DonorsChoose tracks donations made by teachers who posted the projects These are rare events. Through our qualitative examination of donor comments and conversations with DonorsChoose, it is clear that the majority of donors are direct beneficiaries 13 and, once acquired, to monitor their use. The lack of direct observability creates the necessary preconditions for social information to lead to cumulative advantage effects; it is possible for social information to influence evaluations such that there is a decoupling of evaluations from a product’s underlying features. Indeed, a large literature in behavioral economics on matching and seed donations show that initial or matching contributions lead to increased rates of subsequent donations (e.g., List and Reiley, 2002; Karlan and List, 2012). Based on this work and research on crowdfunding platforms (van de Rijt et al., 2014), we expect that cumulative advantage operates in this setting. This is critical to our study because in order for social information to affect the distribution of funding outcomes, it needs to be able to produce cumulative advantage effects. Beyond theoretical considerations, understanding cumulative advantage in the philanthropic domain is important in its own right. Individual philanthropy is economically significant as individuals are estimated to have donated over $250B to charities in 2014.3 According to Gallup surveys, the vast majority of Americans —over 80 % overall and over 95 % of Americans with incomes above 75 thousand dollars— are estimated to donate to charity in a given year.4 Online giving, in particular, is becoming increasingly important. Online donations grew at an estimated 8.9% in 2014 compared to the 2.1% increase of donations overall.5 As online donations and crowdfunding platforms become an increasingly important way for charities to fundraise, it is critical to understand how social information shapes these decisions and affects the fundraising process. In addition, our particular setting is well-suited for our approach. DonorsChoose has collected extremely detailed data on all the donation activity on its website since its inception. They have the exact time (up to the nearest second), amount and source for 3 Giving USA 2015 http://www.gallup.com/poll/166250/americans-practice-charitable-giving-volunteerism.aspx 5 Blackbaud Charitable Giving Report: How Nonprofit Fundraising Performed in 2014 4 14 every donation on the website. This data enables us to construct a linked longitudinal set of donations for every project and every donation on DonorsChoose. We leverage this rich historical data to construct accurate predictions on the expected success of a project based on its time-invariant features when it is first listed on the marketplace. While attractive for theoretical reason and empirical reasons, there is a reasonable concern that studying donation decisions may not be generalizable to choice decisions in other marketplaces, such as consumer purchasing decisions. Scholars have suggested that donations are motivated by factors such as social signaling (Bénabou and Tirole, 2006; Karlan and McConnell, 2014) and the intrinsic utility or “warm glow” of the act of donating rather than aspects of the cause (Andreoni, 1990). While these factors certainly affect some donations on this platform, there are several reasons to believe that a large subset of donors on DonorsChoose are basing their decisions on more technical aspects of a project and are making decisions which are more analogous to traditional models of choice. First, one of the main attractions of DonorsChoose as a resource to donors is the ability to search and compare projects through keyword search and faceting (hence the name of the platform). Donors also have many alternatives to support public education such as school fundraisers, parent-teacher organizations, scholarship funds and a plethora of education nonprofits. If a donor wanted to support public eduction for warm glow or social signaling reasons, these are widely available options that do not involve the costs of searching through the platform. Thus, we would expect some degree of sorting amongst donors. Second, empirical analyses of DonorsChoose suggest donors are sensitive to aspects of the projects. For instance, Meer (2014) and our own analysis of DonorsChoose have found that donors are less likely to fund projects that have high levels of so-called “overhead costs” such as sales tax and shipping costs. In addition, Meer (2014) found that projects with higher levels of competition from similar projects are less likely to be funded, suggesting that donors are choosing between projects. These results 15 are consistent with recent research on donations more generally, which suggests that some donors are concerned with how their charitable dollars are being used (Gneezy, Keenan and Gneezy, 2014). In sum, the empirical evidence suggests that donors on DonorsChoose are making decisions using criteria, such as competition and price, that we would expect would affect choice behavior in other marketplaces. 4 Data and Methods Identifying the effect of cumulative advantage is difficult even with the detailed data that DonorsChoose collects. Without some form of randomization, naturally occurring or induced by a third-party, one cannot confidently separate social influence effects from unobserved heterogeneity (Manski, 1993; Shalizi and Thomas, 2011). For example, one project in our data very quickly attracted numerous donors from across the country and was funded withing hours of being listed, far less time than a typical project. It was a request for a set of books from the very popular book series, The Hunger Games. The timing of the project also set it up for success as a popular movie based on the book had been released shortly before the project was listed. As a result, fans of this series from around the country were searching the marketplace for The Hunger Games-related projects to support. Based on our reading of the messages left by the donors, it is likely that most were making their decisions to support the project based on their interest in the series and not based on the activities of prior donors. However, this relationship would be difficult to discern statistically as the flurry of donations would induce a correlation between early donations and eventual fundraising success. This is certainly an extreme example, but it illustrates how difficult it is for an analyst to differentiate social influence from difficult to observe aspects of products. We follow recent studies of social influence and sidestep the problem of unobserved heterogeneity by taking an experimental approach. Specifically, we donated $7,200 16 in $5 and $40 increments to 320 randomly selected projects on DonorsChoose over a 30-day period. We randomized which projects received our anonymous donations to isolate the causal effects of an initial contribution on funding outcomes. We also account for the fact that in this marketplace our donation simultaneously increases the probability of a project being funded by reducing the amount a project needs to reach its funding goal by either five or forty dollars. We control for this “mechanical” reduction by using a non-parametric modeling strategy to isolate the social influence effect. Our experimental approach allows us to test if social influence leads to cumulative advantages. This alone does not inform us on how cumulative advantage process affect the inequality of outcomes. This is because we cannot say if projects with a greater chance of being funded disproportionately benefit from changes to social information. Randomization has washed away such differences. To overcome this hurdle, we construct a counterfactual measure of a project’s expected success in the absence of our randomized donation. The interaction between this predicted level of success and the randomized contribution enables us to assess if the change in unpredictability varies across projects in different parts of the distribution of success. If projects likely to succeed disproportionately benefit then we have evidence for success-breeds-success inequality dynamics. We take advantage of the fine-grained historical data available on DonorsChoose to build a measure of expected success. Specifically, we use data on the characteristics and outcomes of hundreds of thousands of past projects to create an estimate of the probability a project would be funded without our intervention. If our exogenous changes to social information through randomized donations lead to success-breedssuccess dynamics, then projects with a higher predicted probability of success should benefit more than other projects. Conversely, if projects with lower predicted probabilities of success disproportionately benefit, then our randomized donations may actually 17 be reducing inequality in outcomes. The next section describes how we generate these estimates. 4.1 Predicting Success We used data on all projects posted between January 1st, 2005 and August 21st, 2012 to train a prediction algorithm that estimates a newly posted project’s chance of success. We excluded data from before 2005 as the website was significantly smaller and the information concerning each project is much less detailed. This window leaves us with 426,790 projects after excluding a handful of outlier projects that requested either zero or more than ten-thousand dollars. These 426,790 projects comprise our training set. This data provides a large number of project characteristics with which we can predict funding success. For each project, we know the timing of when a project comes online, the sales tax amount, fulfillment costs, vendor shipping charges, number of students who benefit, resource type, subject matter and if the project is eligible for matching funds. For each teacher, we know the teacher’s salutation (a proxy for gender), the grade-level they teach, if the teacher is part of Teach for America, and if the teacher is a New York Teaching Fellow. For each school, we know the location of the school, the school poverty level, if it is a charter school, a year-round school, a magnet school, an New Leaders for New Schools school, and if the school is part of the KIPP system. Each of these variables may independently affect the probability that a project will reach its funding goal. In addition, it is likely that complex functions and combinations of these variables matter as well. For example, on average projects that request iPads may be less successful than projects that request that request textbooks. However, this difference may be even greater for low poverty schools than high-poverty schools. Donors may see technology at wealthier schools as especially unappealing. One might 18 also imagine that teacher characteristics have important interactions. Perhaps gender stereotypes matter and male teachers are more successful at fundraising for sports equipment projects than their female counterparts. Overall, we want to use a prediction method that is able to account for potentially complex interactions between the project, teacher and school characteristics. We account for these potential interactions in a computationally tractable manner using a widely used machine learning algorithm, Random Forests (Breiman, 2001; Friedman, Hastie and Tibshirani, 2008). Random Forests are an extension of Classification and Regression Trees (CART). Unlike standard regression analysis, the CART algorithm automatically determines which covariates to include and how to include them. It does this by scanning over the set of variables and picking the single variable that best predicts the outcome of interest when split into two groups. Within each of these groups, the algorithm again selects the single variable that best subdivides these groups when split. It iterates in this manner until it there are less than a pre-specified number of observations in each group. The tree allows for the modeling of complex interactions since each of the subgroups may split on different variables. For example, the tree may first split projects into high-poverty schools that are likely to be funded and low-poverty schools that are unlikely to be funded. Then the algorithm would try to split each of these groups. For the low-poverty group, the split may be on the fundraising goal, indicating that donors are price sensitive. For the high-poverty group, the next split may be on vendor shipping charges, indicating that donors to this group are averse to funding overhead costs. Despite the theoretical and interpretative clarity of CARTs, they often have poor predictive performance and are unstable. The Random Forests algorithm addresses this issue by averaging the predictions of many CARTs trained on different bootstrapped samples of the data and by considering a randomly selected set of variables at each 19 split in each tree. This second step decorrelates the trees, which results in less variability when making predictions using the entire forest of trees. Random Forests have performed extremely well in benchmark tests of machine learning prediction techniques (Friedman, Hastie and Tibshirani, 2008; James et al., 2013). We use the “randomForest” package in R to build a predictive model of project success and treat the 426,790 projects described above as our training set. In the training sample, 65.5% of projects reach their funding goal. Therefore, a naive algorithm that always predicts every project ends up funded would yield a classification error rate of 34.5%. We improve on this error rate by fitting a Random Forest using 28 variables (see Figure 2 for the complete list). Instead of including cities or zip codes for each school, we include the longitude and latitude of the school, allowing the Random Forest to inductively partition geographical variation. After an initial exploratory analysis, we found that building a forest with 250 trees and considering five randomly selected variables at each split yielded the greatest predictive performance. The performance of the model is evaluated using the out-of-bag error rate, a procedure conceptually similar to cross-validation. The final error rate is 24.2%, a 30% decrease from naively guessing that every project is funded. The model exhibits few false negatives. The Random Forest incorrectly classifies projects that are funded as unfunded only 8.7% of the time. In contrast, for projects that do not reach their funding goal, the algorithm is only correct 53.6% of the time. In sum, the Random Forest greatly improves upon the naive prediction and does an especially good job at predicting which projects are likely to be winners. We also assessed the performance of the algorithm against our qualitative understanding of what factors matter on the platform. We first examined which variables the algorithm determined were most important when predicting whether a project would be funded. Figure 2 shows the importance of each variable in fitting the Random Forest, from most important at the top to least important at the bottom. Intuitively, this 20 graphs shows which variables lead to the greatest gains in classification accuracy. While variable importance tends to be biased towards variables that are continuous or have more categories, the plot nonetheless provides a way to check for which variables matters for project success. Consistent with our expectations, the amount requested is the most important variable, followed by date posted, geography, and then the project’s subject. This accords with other analyses of this platform (Meer, 2014) and more generally, with analyses of descriptive statistics of crowdfunding platforms (Mollick, 2014). To further unpack what the algorithm is doing, we randomly selected projects from each decile of predicted funding probability and present the differences and similarities in Table 1. A cursory glance at this table would suggest that larger projects are less likely to get funded. But this relationship is not deterministic. For example, the project in the 5th row only requests $194.02 but the predicted probability of funding is 27.6%. However, the project in the 20th row is the most likely to get funded (95.6%) and requests an additional $201.84 for a total of $395.86. That said, reducing the amount requested for the project in the 1st row from $816.54 to $250 would increase the predicted probability of funding from 5.6% to 48.28%. Moving the date of posting for the project in the 15th row from March to June reduces the funding probability from 77.2% to 66.4%. This difference suggests that it may be harder to raise money in the summer months when most schools are not in session. For the project in the 16th row, removing the secondary focus subject reduces the predicted probability of funding from 97.6% to 66.8%, suggesting that having a secondary focus subject may be beneficial. In contrast, for the project in the 18th row adding a secondary focus subject lowers the predicted probability of funding from 88.8% to 82.4%. It may be that secondary focus subject interacts with other project characteristics in ways that would likely be difficult to capture without the aid of the Random Forest algorithm. 21 4.2 Experimental Design Our experiment made contributions to 320 randomly selected DonorsChoose projects as soon as they were listed in the marketplace: 160 five dollar donations and 160 forty dollar donations. These are the 10th percentile and 65th percentile of donation amounts on the site, respectively. We worked with the Chief Technology Officer of DonorsChoose to create a customized data feed for all newly listed projects in a given day. We only include projects that have not received any prior contribution in the few hours since they went live. Over 99% of projects were included in our feed. We also restricted our sample to projects with a primary or secondary subject as “Literacy,” “Literature & Writing,” or “ESL”. This second selection criterion reduces the natural variation that occurs across categories and increases our statistical power (Gerber and Green, 2012). The selection procedure worked as follows. For each of the twenty days on which we made contributions, we first generated a list of all projects that fit the criteria described above. We avoid altering macro-level market dynamics by only donating to a small number of the listed projects. Donating to a large number of projects in one day might change the probability of funding for both the treated and untreated projects. Therefore, we restrict the number of projects that we contribute to on any given day in order to ensure that we can meet the stable unit treatment value assumption (SUTVA). This assumption would be violated if we dramatically changed the dynamics of the marketplace (Morgan and Winship, 2007). Specifically, we limit the number of projects we donate to at most 16 on any given day, a small fraction of active projects. We donated to projects on twenty days from August 22nd to September 18th, 2012. To maintain strict comparability between our treatment and control groups, we only analyze projects listed on days in which we made a donation. Figure 3 shows the number of projects we donated to, and the total number of projects at risk at receiving our treatment. Our donations were anonymous and all contributions appear visually 22 identical to potential donors to minimize potential donor identity effects (Karlan and List, 2012). We worked with DonorsChoose to ensure that our initial donation did not immediately alter the project’s search rank.6 (Ghose and Yang, 2009; Ghose, Ipeirotis and Li, 2014). We construct a control group out of the 2,651 projects that were at risk for being selected into treatment but were randomly excluded. We cannot include the entire sample of non-treated projects in our models because the number of projects and funding probability vary greatly across days. From our prediction modeling, we know that timing effects are important and thus including the entire sample may lead us to bias our estimates by improperly weighting some days more than others. To address this issue, one can include fixed effects for each day to control for inter-day variation in funding probability or sample projects proportional to the number of projects treated on each day. We chose to randomly sample projects in proportion to the number treated on that day.7 Specifically, we sample 5 control projects per 1 treatment project to maximize our power. We have to drop two days where there are too few potential control projects to sample. This procedure leaves us with 144 projects that receive the $5 treatment, 144 the $40 treatment, and 1,440 randomly selected projects serving as our control group. 5 Results and Analysis We begin our analysis by comparing our treatment and control groups. Table 2 presents summary statistics for the control, $5 treatment, and $40 treatment groups. The vari6 By the design of the search algorithm, the amount contributed does not alter the search rank until the project is nearing its 5 month time frame for funding Even at this point, the primary characteristics used to rank search are the school characteristics, time remaining and amount outstanding to raise. Checking the search order qualitatively during our experiment revealed no difference between our treatment and control groups. Finally, the effects observed from our treatments occur before the algorithm meaningfully alters search results. 7 Results are qualitatively unchanged using the fixed-effect approach. 23 ables “Total Project Amount”, “Number of Students Reached”, and the “Random Forest Predicted Probability of Funding” are time invariant and are measured prior to our treatment donations. In Table 2 the italicized variables “Days to Funding” and “Project is Funded” are measured after our treatment. Correlations between these variables are presented in Table 3. The pre-treatment variables show no statistically significant differences between groups and provide first-order evidence that our randomization procedure was successful. More formally, Table 4 regresses the three pre-treatment variables on our treatment conditions. If our randomizations are unrelated to project characteristics, then it should be the case that the coefficients on our treatment variables should be very close to zero. Indeed, the coefficients are small, and all insignificant. This gives us confidence that our procedure resulted in randomized treatment assignments. The effects of our intervention on the post-treatment variables are presented in Table 2. We find that our randomized donations alter the time to funding and the probability that a project is funded. Projects in the $40 treatment group have a funding rate of 84%, 10% higher than the control group rate of 73%. They also reach their funding goal on average 13 days faster than the 70 days it takes the control group. Unexpectedly, projects in the $5 treatment arm appear to perform somewhat worse than the control being funded at a 72% rate as compared to the control rate of 74%. Moreover, projects that received the $5 donations take on average 9 days longer to be funded than projects in the control group. We examine the hazard of funding by fitting separate Kaplan-Meier survival curves by experimental condition in order to better understand how our treatments altered funding dynamics. This approach also enables us to account for the right-censoring that occurs when DonorsChoose removes projects that have been on the site for 5 months (approximately 150 days). Given enough time, some (or perhaps all) of these projects would be fully funded. Analyzing funding rates allows us to account for this censoring. 24 Figure 4 plots the probability of funding by experimental condition. The x-axis is the number of days since posting. Consistent with Table 2, the $40 treatment appears to have a greater hazard rate of funding than the control group and this difference appears to grow larger with time. This increase is consistent with models of social influence leading to cumulative advantages. The funding probability for projects that received the $5 treatment appears slightly lower, but this decrease does not appear to change with time. While the $40 Kaplan-Meier curve is suggestive of cumulative advantage, it does not take into account that our donation reduces the amount of outstanding money a teacher has to raise to reach their goal, which we refer to as the “amount outstanding”. For instance, projects in the $40 treatment arm have $40 less to raise. Therefore, even if donors were unaffected by our treatment, projects in the $40 treatment arm should still be more successful at reaching their funding goals, ceteras paribus. We account for the reduction in the amount outstanding by controlling for it in a non-parametric way. Specifically, we fit Generalized Additive Models with coefficients for each of our treatments and with a penalized regression spline in the amount outstanding. We set the number and location of knots for the spline by minimizing the error rate using cross-validation. Table 5 present linear probability models of funding and Table 6 presents hazard models of funding. Model 1 and Model 5 replicate the summary statistic and KaplanMeier analysis presented above in regression form. The results are consistent with the visual evidence in Figure 4. The probability of funding for the projects in the $40 treatment condition increases by 9.7 percent (SE = 0.038) and the hazard of funding by 0.29 (SE = 0.096). While the $5 treatment is negative, the coefficient size is small and statistically insignificant. Model 2 and Model 6 control for the mechanical reduction by incorporating a spline in the amount outstanding. Model 6, the Cox model, also 25 includes strata for each quintile of the amount outstanding.8 We find some evidence for cumulative advantage in Models 2 and 6. Once we account for the mechanical reduction in the amount outstanding, the coefficient for the $40 treatment drops from 0.097 to 0.069 and is only significant at the 10% level. In Model 6, the hazard drops from 0.291 to 0.217 but remains significant at the 5% level. Taking these results together, it appears that roughly one-third of our effect occurs because of the mechanical reduction in the amount outstanding and the other two-thirds by changing the hazard rate of future donations. The $5 donations do not appear to have any meaningful effect on outcomes. Overall, Hypothesis 1 is largely supported for our $40 treatment. Next, we investigate Hypothesis 2 by testing whether these cumulative advantage effects change the distribution of outcomes. We estimate these changes by interacting our treatments with our measure of predicted success. We begin by first including normalized predicted funding probability in Models 3 and 7 in Tables 5 and 6. The predicted funding probability coefficient is highly significant in the linear probability and Cox models. To get a sense of the effect size, it is useful to compare the coefficient in our regression to the overall variability of our measure. A one standard deviation increase corresponds to a 15% increase in predicted probability. In the linear probability model, the coefficient on our normalized measure is 14.5%. This implies that a one standard deviation increase in the predicted probability of funding (15% higher) leads to a 14.5% increase in the actual probability of funding in our data. This provides strong evidence that our predicted probability measure is capturing differences in the likelihood of project success. Moreover, we increase our power by capturing a substantial amount of project heterogeneity with the inclusion of the predicted funding 8 One concern with fitting Cox models is meeting the proportional hazards assumption. In unreported analyses, we find that larger projects are less likely to get funded in the early days than projects that request a smaller amount, though this difference dissipates over time. To account for this, we stratify models 6-8 on quintiles of project size. Testing the model with these strata reveals that we meet the proportional hazards assumption. 26 probability variable in Models 3 and 7. The magnitude and statistical significance of our $40 treatment increases in both models. This provides further evidence that our $40 treatment leads to cumulative advantage. Models 4 and 8 in Tables 5 and 6 interact our treatment indicators with the predicted probability of funding. We find little evidence that projects that have higher predicted probabilities of funding benefit more from a randomized $40 treatment. The coefficient on the interaction term is small and statistically insignificant in both models. The main effect of the $40 donation does not change in magnitude nor significance. Thus, we find little evidence for cumulative advantage distorting the baseline chance of funding success. All projects appear to benefit equally from arbitrary and exogenous variation in social information. A potential concern with our analysis is that the functional form of the interaction effect may be non-linear. Our assumption of linearity may be masking underlying effects that at the tails of the distribution. We account for these possibilities by again using Generalized Additive Models with a flexible spline specification. Specifically, we fit models in which we interact our $40 treatment with a spline of predicted funding probability. This allows us to see if our treatments have larger or small effects at different points in the predicted funding probability distribution. Since the $5 treatment has no effect thus far, we drop these observations from this analysis to ease interpretation. As above, we determine the location and number of knots using cross-validation and minimization of the the out-of-fold error rate. Interpreting the implications of these non-linear interactions using coefficient estimates directly is extremely difficult. In lieu of regression tables, we present the results of these non-linear interactions by plotting the marginal effects in Figure 5. The xaxis in both plots is the standardized predicted funding probability. The black lines represent changes in funding probability for projects in the control group. The blue line changes in the funding probability for the the projects in the $40 treatment group. 27 The light shaded areas are the 95 percent confidence intervals. While the spline in the linear probability model is slightly non-linear at the tails, the Cox hazard model is perfectly linear. For both groups and in both models, the realized funding probability increases linearly with the predicted funding probability. We find no evidence for non-linear interaction effects. In short, we find no evidence for Hypothesis 2. Our treatments are constant no matter a project’s expected level of success; the unlikely to succeed and the very likely to succeed appear to equally benefit. In summary, we find that our $40 treatment leads to cumulative advantage. However, we find little evidence that our treatment varies across projects with different predicted levels of success. These results taken together suggest that cumulative advantage operates in our setting but that random variation in social information plays no direct role creating wider differences in success than one would expect. Instead, it seems that exogenous changes in social information induced by our treatments benefits projects across the distribution of expected success in a relatively equally way. This suggests that exogenous changes in social information may simply lead to more unpredictable outcomes, even if the distribution of success in expectation is relatively similar. 6 Discussion Our study has shown that the existence of cumulative advantage induced by exogenous changes does not necessarily increase inequality of success. This existence proof is an important corrective to the assumption made by many scholars that cumulative advantage, often created by social influence processes, inherently increases the inequality of success in marketplaces (Salganik, Dodds and Watts, 2006; Muchnik, Aral and Taylor, 2013; van de Rijt et al., 2014). Rather than assume a direct mapping between cumulative advantage and changes in the inequality of success, we provide a replicable 28 methodology for assessing the existence and strength of this link. Quantifying these effects is necessary in order to design marketplaces that balance the benefits of social information with the potential costs of the distortions such information may introduce. Though we did not find evidence that exogenous changes to social information produce differential cumulative advantage effects, there are other mechanisms through which cumulative advantage processes may exacerbate inequalities. For example, products that have an innate appeal may be more likely to receive an initial review, endorsement or contribution. Receiving earlier support could provide an initial advantage for these products over comparable products. In addition, some products in certain marketplaces may be more likely to receive large contributions, which prior research (along with our results) suggests may be more likely to attract subsequent customers (List and Reiley, 2002). Future research should investigate the role of these endogenous processes. However, our analysis greatly reduces concerns that arbitrary and exogenous early differences in social information deterministically lead to increases in the inequality of success. In our setting, products that are unlikely to succeed and that are likely to succeed equally benefit from changes to social information that is uncorrelated with underlying features of a product. By focusing on the inequality of success, our approach also sidesteps the contentious debate over whether the “wisdom of crowds” exists when social information in a marketplace leads to potentially interdependent rather than independent judgments (Zhang and Liu, 2012). This is an important debate to have, but it ignores the fact that marketplaces are often designed in ways that shepherd crowds towards particular goals. For example, crowdfunding platforms like Kickstarter and IndieGoGo curate and promote products that would otherwise be difficult to discover. DonorsChoose explicitly highlights projects that serve high-poverty public schools precisely because one of its goals as a philanthropic marketplace is to help direct capital to needy students. In practice, these platforms tend to reduce the inequality of success by promoting products that 29 are aligned with the goals of the marketplace designers. An important limitation to our study is the generalizablilty of its context. While we argue that the behavior of donors on DonorsChoose is similar to what we would expect in other marketplaces, we cannot be certain without replication in other contexts. For instance, it is possible that our interventions affect only aspects of evaluation that are particular to a philanthropic context, such as social signaling or warm glow, rather than more general perceptions of project’s features. Although we cannot rule out these explanations with our current study, future research should attempt to study these possibilities. Moreover, understanding both commercial and philanthropic motivations is particularly timely as new products and services are increasingly combining the two (Battilana and Lee, 2014). For example, consider the case of so-called “rewards-based” crowdfunding platforms such as Kickstarter and IndieGoGo. While some contributors use these platforms to buy a product or service (i.e., the contributor’s reward is the actual product or service being developed), many contributors support the overall endeavor and do not receive the actual products and services created. Instead, they typically receive recognition or trinkets as their rewards, which is very similar to what donors normally receive in exchange for charitable contributions. 7 Conclusion One of the key findings of our study is that social information may increase unpredictability of success in marketplaces. But when would unpredictability be desirable and when might it be detrimental? Our view is that the value of unpredictability is that it is one way to promote diversity of success in a marketplace. Diversity is not always a good thing. For instance, unpredictability is especially harmful in marketplaces where consumers have common goals, a consensus on what constitutes “quality” and a consensus on how to measure it. One example of this type of marketplace would 30 be peer-to-peer lending. Lenders have similar goals; they are looking for the best risk adjusted rate of return they can get. A situation in which social influence increases the funding of poor performing loans is bad for the consumers of these marketplaces and, in the long run, may jeopardize the viability of these platforms if lenders lose money. It is also likely bad for people who are taking the loan, as failing to repay a loan may hurt their credit rating or ability to get future loans. Alternatively, many marketplaces have consumers with diverse motivations and where assessments of quality are more varied (Zuckerman, 2012). These marketplaces may benefit from unpredictability as it leads to diversity in the distribution of success. The chance of an unpredicted success may encourage risk-taking, innovation, and exploratory strategies (March, 1991). The diversity of success may be an explicit goal for marketplaces seeking to foster innovation, a goal of many crowdfunding platforms. Concerns about decoupling success from the underlying appeal of a product may be muted because the single ideal or metric of “quality” may not exist. In sum, the greater levels of unpredictability created through social information may, on average, help products with less inherently popular characteristics. But it may also enable less appealing but more innovative products to succeed. 31 References Agrawal, Ajay K., Christian Catalini and Avi Goldfarb. 2011. “The geography of crowdfunding.” National Bureau of Economic Research . Allison, Paul D, J Scott Long and Tad K Krauze. 1982. “Cumulative advantage and inequality in science.” American Sociological Review pp. 615–625. Andreoni, James. 1990. “Impure altruism and donations to public goods: a theory of warm-glow giving.” The economic journal pp. 464–477. Azoulay, Pierre, Toby Stuart and Yanbo Wang. 2013. “Matthew: Effect or fable?” Management Science 60(1):92–109. Banerjee, Abhijit V. 1992. “A simple model of herd behavior.” The Quarterly Journal of Economics pp. 797–817. Battilana, Julie and Matthew Lee. 2014. “Advancing research on hybrid organizing– Insights from the study of social enterprises.” The Academy of Management Annals 8(1):397–441. Bénabou, Roland and Jean Tirole. 2006. “Incentives and Prosocial Behavior.” American Economic Review 96(5):1652–1678. Breiman, Leo. 2001. “Random forests.” Machine Learning 45(1):5–32. Burtch, Gordon, Anindya Ghose and Sunil Wattal. 2013. “An empirical examination of the antecedents and consequences of contribution patterns in crowd-funded markets.” Information Systems Research 24(3):499–519. Chen, Yubo, Qi Wang and Jinhong Xie. 2011. “Online social interactions: A natural experiment on word of mouth versus observational learning.” Journal of Marketing Research 48(2):238–254. Cialdini, Robert B. 1993. Influence: The psychology of persuasion. Colombo, Massimo G, Chiara Franzoni and Cristina Rossi-Lamastra. 2014. “Internal Social Capital and the Attraction of Early Contributions in Crowdfunding.” Entrepreneurship Theory and Practice 39(1):75–100. DiPrete, Thomas A and Gregory M Eirich. 2006. “Cumulative Advantage as a Mechanism for Inequality: A Review of Theoretical and Empirical Developments.” Annual Review of Sociology 32(1):271–297. Friedman, Jerome, Trevor Hastie and Robert Tibshirani. 2008. The Elements of Statistical Learning. Springer series in statistics Springer, Berlin. Gerber, Alan S and Donald P Green. 2012. Field experiments: Design, analysis, and interpretation. WW Norton. 32 Ghose, Anindya, Panagiotis G Ipeirotis and Beibei Li. 2014. “Examining the Impact of Ranking on Consumer Behavior and Search Engine Revenue.” Management Science . Ghose, Anindya and Sha Yang. 2009. “An empirical analysis of search engine advertising: Sponsored search in electronic markets.” Management Science 55(10):1605– 1622. Gneezy, Uri, Elizabeth A Keenan and Ayelet Gneezy. 2014. “Avoiding overhead aversion in charity.” Science 346(6209):632–635. Hansmann, Henry. 1987. “Economic theories of nonprofit organization.” The nonprofit sector: A research handbook 1:27–42. James, Gareth, Daniela Witten, Trevor Hastie and Robert Tibshirani. 2013. An introduction to statistical learning. Springer. Karlan, Dean and John A. List. 2012. “How Can Bill and Melinda Gates Increase Other Peoples Donations to Fund Public Goods?” National Bureau of Economic Research . Karlan, Dean and Margaret A McConnell. 2014. “Hey look at me: The effect of giving circles on giving.” Journal of Economic Behavior & Organization 106:402–412. Kovács, Balázs and Amanda J Sharkey. 2014. “The Paradox of Publicity How Awards Can Negatively Affect the Evaluation of Quality.” Administrative Science Quarterly 59(1):1–33. List, John and David Reiley. 2002. “The Effects of Seed Money and Refunds on Charitable Giving: Experimental Evidence from a University Capital Campaign.” Journal of Political Economy 110(1):215–233. Manski, Charles F. 1993. “Identification of endogenous social effects: The reflection problem.” The Review of Economic Studies 60(3):531–542. March, James G. 1991. “Exploration and Exploitation in Organizational Learning.” Organization Science 2(1):71–87. Meer, Jonathan. 2014. “Effects of the price of charitable giving: Evidence from an online crowdfunding platform.” Journal of Economic Behavior & Organization 103:113–124. Merton, Robert K. 1968. “The Matthew Effect in Science.” Science 159:56–63. Mollick, Ethan. 2014. “The dynamics of crowdfunding: An exploratory study.” Journal of Business Venturing 29(1):1–16. Morgan, Stephen L. and Christopher Winship. 2007. Counterfactuals and causal inference: Methods and principles for social research. Cambridge University Press. 33 Muchnik, Lev, Sinan Aral and Sean J. Taylor. 2013. “Social influence bias: A randomized experiment.” Science 341(6146):647–651. Podolny, Joel M. 2005. Status Signals. A Sociological Study of Market Competition Princeton Univ Pr. Salganik, Matthew J. and Duncan J. Watts. 2008. “Leading the herd astray: An experimental study of self-fulfilling prophecies in an artificial cultural market.” Social Psychology Quarterly 71(4):338–355. Salganik, Matthew J, Peter Sheridan Dodds and Duncan J Watts. 2006. “Experimental Study of Inequality and Unpredictability in an Artificial Cultural Market.” Science 311:854–856. Shalizi, Cosma Rohilla and Andrew C Thomas. 2011. “Homophily and contagion are generically confounded in observational social network studies.” Sociological Methods & Research 40(2):211–239. Simonsohn, Uri and Dan Ariely. 2008. “When Rational Sellers Face Nonrational Buyers: Evidence from Herding on eBay.” Management Science 54(9):1624–1637. Tucker, Catherine and Juanjuan Zhang. 2011. “How does popularity information affect choices? A field experiment.” Management Science 57(5):828–842. van de Rijt, Arnout, Soong Moon Kang, Michael Restivo and Akshay Patil. 2014. “Field experiments of success-breeds-success dynamics.” Proceedings of the National Academy of Sciences 111(19):6934–6939. Zhang, Juanjuan and Peng Liu. 2012. “Rational herding in microloan markets.” Management Science 58(5):892–912. Zuckerman, Ezra W. 2012. “Construction, concentration, and (dis) continuities in social valuations.” Annual Review of Sociology 38:223–245. 34 8 Figures and Tables Figure 1: Example of the DonorsChoose website at the time the experiment took place. 35 Variable Importance Tota Project Amount ● Day in Year ● School Longitude ● School Latitude ● Secondary Focus Subject ● Primary Focus Subject ● Vendor Shipping Charges ● Number Students Reached ● Day of Week ● Sales Tax ● Year ● Secondary Focus Area ● Resource Type ● Grade Level ● Fulfillment Costs ● Primary Focus Area ● Double Impact Match Eligible ● Teacher Mr, Mrs, or Ms ● School Poverty Level ● Almost Home Match Eligible ● TFA Teacher ● Charter School ● Year Round School ● Magnet School ● NLNS School ● NYTF Teacher ● Promise School ● KIPP School ● 0 5000 36 10000 15000 20000 MeanDecreaseGini Figure 2: Random Forest variable importance. Variables at the top of the list are more important in predicting a project’s probability of success than variables at the bottom of the list. Table 1: Two Randomly Selected Projects from each Decile of Predicted Funding Probability PID 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 P(Funding) Project Title Date Project Amt Primary Subject 0.056 05-12 816.54 Literature & Writing A New Projector Would Make Presentations-Kids Brighter! 0.068 04-16 377.72 Literature & Writing Continuing To Expand Our Electronic Library 0.136 05-06 1, 899.96 Mathematics Teaching Through Technology 0.156 03-15 412.50 Mathematics Becoming Mathematicians With Technology 0.276 02-18 194.02 Special Needs Create And Learn 0.292 08-09 661.49 Environmental Science Geologists In The Making 0.316 07-21 210.02 Literature & Writing Stay Gold, Ponyboy 0.320 03-30 379.85 Literature & Writing Learning and Growing through Music 0.404 06-16 440.60 Literature & Writing Help Us Make Writing Wondrous! 0.452 03-14 252.47 Literacy iCan Learn with iPods! 0.536 05-10 735.32 Music Keyboards to Keep Kids Learning Part 2! 0.572 06-15 434.45 Literacy We Are Greatly in Need of General School Supplies 0.656 02-23 777.27 Literature & Writing New Technology for Our Classroom 2 0.696 06-12 142.32 Literacy Keeping Our Classroom Colorful in the New School Year! 0.772 03-07 598.26 Special Needs Technology for Special Needs Students 0.796 02-19 296.83 Literacy Where Are the Books? 0.816 05-25 339.35 Music Help Us Learn Guitar! 0.888 03-11 612.59 Literacy Empowering First Graders 0.928 03-16 250.84 Special Needs Read and Explore 0.956 08-11 395.86 Literacy Fill Our bookshelves! 37 Secondary Subject Shipping Students Literacy 12 175 Literacy 12 27 Other 158 24 31.29 100 Mathematics 0 30 Applied Sciences 0 70 0 24 Mathematics 12 18 Literacy 0 31 Mathematics 12 21 0 120 0 30 12 75 0 21 47.21 9 0 18 0 10 0 27 12 13 0 26 ESL Literature & Writing Special Needs Early Development Literacy Table 2: Summary Statistics by Experimental Condition Variable N Mean St. Dev. Min Median Max Control Projects Total Project Amount Number Students Reached Predicted Probability Days to Funding Project is Funded 1,440 1,440 1,440 1,440 1,440 534.80 68.1 0.66 69.9 0.74 393.55 111.4 0.15 56.1 0.44 133.47 5 0.22 1 0 434.77 30 0.67 49 1 4,512.88 999 0.96 150 1 $5 Treated Projects Total Project Amount Number Students Reached Predicted Probability Days to Funding Project is Funded 144 144 144 144 144 495.18 61.8 0.66 78.7 0.72 297.88 96.8 0.15 56.2 0.45 131.72 7 0.27 2 0 435.63 30 0.65 58 1 2,216.21 999 0.94 150 1 $40 Treated Projects Total Project Amount Number Students Reached Predicted Probability Days to Funding Project is Funded 144 144 144 144 144 491.74 74.5 0.67 56.7 0.84 391.95 145.1 0.15 52.2 0.37 134.95 12 0.29 1 0 418.36 29.5 0.69 39 1 3,297.28 999 0.92 150 1 Training Set Projects Italicized variables are measured post treament. Table 3: Correlations (1) (2) (3) (4) (5) (6) (7) (8) Total Project Amount Number Students Reached Predicted Probability Number of Natural Donors Days to Funding Reached Funding Goal Forty Dollar Treatment Five Dollar Treatment (1) (2) (3) (4) (5) (6) (7) 0.08 -0.63 -0.09 0.18 -0.14 -0.03 -0.03 -0.07 -0.02 -0.01 0.02 0.02 -0.02 0.09 -0.32 0.28 0.02 -0.02 -0.26 0.38 -0.01 -0.02 -0.78 -0.07 0.05 0.06 -0.02 -0.09 38 (8) Table 4: Balance Tests Dependent variable: PFP Log(Project Amount) Log(Num Students Reached) (1) (2) (3) $5 Treatment −0.008 (0.013) −0.046 (0.051) 0.004 (0.075) $40 Treatment 0.010 (0.013) −0.088 (0.051) 0.013 (0.075) Constant 0.663∗∗ (0.004) 6.098∗∗ (0.015) 3.705∗∗ (0.023) 1,728 3 −843.173 −0.001 1,728 3 −1,513.84 0.001 1,728 3 −2,195.23 −0.001 Observations Model D.F. Log Likelihood Adjusted R2 ∗ p<0.05; ∗∗ p<0.01 Linear Regression Models. Predicted Funding Probability (PFP). Note: 39 Table 5: Linear Probability Models Is the project funded? (1) (2) (3) (4) $5 Treatment −0.028 (0.038) −0.033 (0.037) −0.020 (0.036) −0.019 (0.036) $40 Treatment 0.097∗ (0.038) 0.069 (0.037) 0.087∗ (0.036) 0.084∗ (0.037) 0.145∗∗ (0.015) 0.141∗∗ (0.015) Predicted Funding Probability (PFP) $5 Treatment × PFP 0.022 (0.036) $40 Treatment × PFP 0.027 (0.036) Constant Project Amount Splines Observations Estimated Model D.F. Log Likelihood Adjusted R2 Note: 0.744∗∗ (0.011) 0.746∗∗ (0.011) 0.744∗∗ (0.011) 0.744∗∗ (0.011) No Yes Yes Yes 1,728 3 −1,007.198 0.003 1,728 6.87 −974.833 0.042 1,728 6.17 −934.405 0.085 1,728 8.12 −935.990 0.085 ∗ p<0.05; ∗∗ p<0.01; Linear probability models with penalized splines. All continuous variables standardized. Predicted Funding Probability (PFP). 40 Table 6: Cox-Proportional Hazard Models Days till project is funded (5) (6) (7) (8) $5 Treatment −0.138 (0.103) −0.186 (0.103) −0.145 (0.104) −0.149 (0.105) $40 Treatment 0.291∗∗ (0.096) 0.217∗ (0.097) 0.270∗ (0.097) 0.239∗ (0.102) 0.388∗∗ (0.045) 0.374∗∗ (0.047) Predicted Funding Probability (PFP) $5 Treatment × PFP 0.032 (0.106) $40 Treatment × PFP 0.160 (0.107) Project Amount Quintile Strata Project Amount Splines Observations Estimated Model D.F. Log Likelihood Note: No No Yes Yes Yes Yes Yes Yes 1,728 2 -8,936.613 1,728 3.17 -6,813.302 1,728 5.01 -6,773.990 1,728 7.91 -6,772.463 ∗ p<0.05; ∗∗ p<0.01; Cox-proportional hazard models with penalized splines. All continuous variables standardized. Predicted Funding Probability (PFP). 41 Count of new projects 300 Condition 200 40 5 0 100 0 9−18 9−17 9−16 9−15 9−14 9−13 9−12 9−11 9−10 9−09 9−08 9−07 9−06 9−05 9−04 9−03 9−02 9−01 8−31 8−30 8−29 8−28 8−27 8−26 8−25 8−24 8−23 8−22 Date Figure 3: Number of projects per day posted during experimental intervention period by condition. 42 1 0.8 0.6 0.4 Exp Condition 0 0.2 Control $5 $40 0 50 100 150 Days since project posted Figure 4: Kaplan-Meier Curves showing the probability after x days that a project is fully funded by condition. 43 Cox-Proportional Hazard 0 Change in Hazard of Funding -1 0.2 0.0 -0.2 -0.4 -2 -0.6 Change in Probability of Funding 1 0.4 0.6 Linear Probability Model -3 -2 -1 0 1 2 -3 Standardized Predicted Funding Probability -2 -1 0 1 2 Standardized Predicted Funding Probability Figure 5: Testing the Linearity of the Interaction Effect using Generalized Addative Models. 44