Tangible User Interfaces Introduction

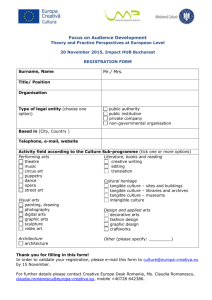
