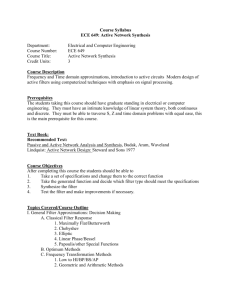

Chapter 9: Optimum Linear Systems


9-1 Introduction

9-2 Criteria of Optimality

9-3 Restrictions on the Optimum System

9-4 Optimization by Parameter Adjustment

9-5 Systems That Maximize Signal-to-Noise Ratio

9-6 Systems That Minimize Mean-Square Error

Concepts:

System Performance Criteria

Criteria of Optimality

Restrictions on the Optimum System

Optimization by Parameter Adjustment (Variation of Parameters)

Matched Filtering

Systems That Maximize Signal-to-Noise Ratio

Systems That Minimize Mean-Square Error

Wiener Filters

Notes and figures are based on or taken from materials in the course textbook: Probabilistic Methods of Signal and System Analysis (3rd ed.) by George R. Cooper and Clare D. McGillem; Oxford Press, 1999. ISBN: 0-19-512354-9.

B.J. Bazuin, Spring 2015

1 of 16

ECE 3800


9-2

Criteria of Optimality

1. The criterion must have physical significance and not lead to a trivial result.

2. The criterion must lead to a unique solution.

3. The criterion should result in a mathematical form that can be solved.

Be careful: “optimality” is defined by whoever sets the criterion.

What is optimal for one person or application may not be optimal for another.

Common optimality criteria in ECE:

maximize the output signal-to-noise ratio (SNR) – Sec. 9-5

minimum mean-squared error estimate (MMSE) – Sec. 9-6

maximum likelihood estimate (MLE)

maximum a-posteriori estimate (MAP)

minimize a defined cost function

9-3

Restrictions on the Optimum System

Realizability – the system can actually be built, not just described in theory

Causality – inputs cause outputs, no “crystal balls” looking into the future


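9-5

Systems That Maximize Signal-to-Noise Ratio

SNR is defined as

$$\text{SNR} = \frac{P_{Signal}}{P_{Noise}} = \frac{E\left[s(t)^2\right]}{N_0 B_{EQ}}$$

Define an input signal as $s(t) + n(t)$ and a filtered output signal as $s_o(t) + n_o(t)$. For a linear system, we have:

$$s_o(t) + n_o(t) = \int_0^\infty h(\lambda)\left[s(t-\lambda) + n(t-\lambda)\right]d\lambda$$

The input SNR can be described as

$$\text{SNR}_{in} = \frac{P_{Signal}}{P_{Noise}} = \frac{E\left[s(t)^2\right]}{E\left[n(t)^2\right]} = \frac{E\left[s(t)^2\right]}{N_0 B_{EQ}}$$

The output SNR can be described as

$$\text{SNR}_{out} = \frac{P_{Signal}}{P_{Noise}} = \frac{E\left[s_o(t)^2\right]}{E\left[n_o(t)^2\right]}$$

or, substituting the convolution and taking the input noise to be white with two-sided spectral density $N_0/2$ (so that $E\left[n_o(t)^2\right] = \frac{N_0}{2}\int_0^\infty h(t)^2\,dt$),

$$\text{SNR}_{out} = \frac{E\left[\left(\int_0^\infty h(\lambda)\,s(t-\lambda)\,d\lambda\right)^2\right]}{\frac{N_0}{2}\int_0^\infty h(t)^2\,dt}$$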

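Using Schwarz’s inequality: the square of the integral of a product is less than or equal to the product of the integrals of the squares. Mathematically (in continuous time):

(http://en.wikipedia.org/wiki/Cauchy%E2%80%93Schwarz_inequality )

$$\left|\int_0^\infty h(\lambda)\,s(t-\lambda)\,d\lambda\right|^2 \le \int_0^\infty \left|h(\lambda)\right|^2 d\lambda \int_0^\infty \left|s(t-\lambda)\right|^2 d\lambda$$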


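Applying this inequality to the SNR out equation

$$\text{SNR}_{out} \le \frac{\int_0^\infty h(\lambda)^2\,d\lambda \; E\left[\int_0^\infty s(t-\lambda)^2\,d\lambda\right]}{\frac{N_0}{2}\int_0^\infty h(t)^2\,dt}$$

or

$$\text{SNR}_{out} \le \frac{2}{N_0}\,E\left[\int_0^\infty s(t-\lambda)^2\,d\lambda\right]$$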

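To achieve the maximum SNR, the equality condition of Schwarz’s inequality must hold:

$$\left|\int_0^\infty h(\lambda)\,s(t-\lambda)\,d\lambda\right|^2 = \int_0^\infty h(\lambda)^2\,d\lambda \int_0^\infty s(t-\lambda)^2\,d\lambda$$

For the filtering operation, the condition can be met for

$$h(\lambda) = K\,s(t-\lambda)\,u(\lambda)$$

where K is an arbitrary gain constant.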

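The optimal impulse response is simply the signal waveform reversed in time and shifted by t, where t is a fixed observation instant chosen for optimality. If this is done (with K = 1), the filter energy equals the signal energy received up to time t,

$$\int_0^\infty h(\lambda)^2\,d\lambda = \int_0^\infty s(t-\lambda)^2\,d\lambda = \int_{-\infty}^{t} s(\lambda)^2\,d\lambda$$

and the maximum output SNR is

$$\max \text{SNR}_{out} = \frac{2}{N_0}\int_{-\infty}^{t} s(\lambda)^2\,d\lambda$$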

This filter concept is called a matched filter.
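As a numerical sanity check of this bound, the sketch below approximates the integrals with the midpoint rule for an assumed half-sine pulse $s(t) = \sin(\pi t/T)$ on $[0, T]$, observed at $t = T$, with assumed values $T = 1$ and $N_0 = 0.1$ (none of these come from the notes). It compares the matched filter $h(\lambda) = s(T-\lambda)$ against a plain rectangular impulse response of the same length.

```python
import math

# Hypothetical example (not from the notes): half-sine pulse on [0, T],
# observed at t = T, white noise with two-sided PSD N0/2.
T = 1.0          # pulse length and observation instant (assumed)
N0 = 0.1         # assumed noise level
M = 2000         # midpoint-rule points on [0, T]
dl = T / M
grid = [(i + 0.5) * dl for i in range(M)]     # lambda sample points


def s(t):
    """Deterministic pulse s(t) = sin(pi t / T) for 0 <= t <= T, else 0."""
    return math.sin(math.pi * t / T) if 0.0 <= t <= T else 0.0


def snr_out(h, t_obs=T):
    """SNR_out = s_o(t_obs)^2 / ((N0/2) * integral of h^2), h supported on [0, T]."""
    s_o = sum(h(u) * s(t_obs - u) for u in grid) * dl
    noise_power = (N0 / 2) * sum(h(u) ** 2 for u in grid) * dl
    return s_o ** 2 / noise_power


def h_matched(u):
    return s(T - u)          # h(lambda) = K s(t - lambda) u(lambda) with K = 1


def h_rect(u):
    return 1.0               # a non-matched comparison filter on [0, T]


bound = (2 / N0) * sum(s(u) ** 2 for u in grid) * dl   # (2/N0) * signal energy
snr_matched = snr_out(h_matched)
snr_rect = snr_out(h_rect)
print(f"bound {bound:.4f}  matched {snr_matched:.4f}  rect {snr_rect:.4f}")
```

Under these assumptions the matched filter reaches the bound $(2/N_0)\int s^2 = 10$, while the rectangular filter reaches only $8/\pi^2 \approx 81\%$ of it.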


Matched Filter Pulse or Radar Detection

If you want to detect a transmitted burst waveform with the maximum received SNR, the receiving filter should be the time inverse of the transmitted signal!

For

$$s(t) = \begin{cases} \text{burst}(t), & 0 \le t \le T \\ 0, & T < t \end{cases}$$

use

$$h(t) = \begin{cases} K\,\text{burst}(T-t), & 0 \le t \le T \\ 0, & T < t \end{cases}$$

We expect to receive a transmitted burst, delayed and in noise, so the matched filter output is

$$y(t) = \left[A\,s(t - T_{delay}) + n(t)\right] * h(t)$$

Note and caution: when using such a filter, the maximum output SNR occurs when the received signal and the filter response fully overlap in the convolution (the peak of the auto- or cross-correlation). This happens when the “complete” burst has been received by the system. If you are measuring the time-of-flight of the burst, the peak occurs exactly one filter length (T) later than the time-of-flight. (Think about where the leading edge of the signal-of-interest is when transmitted, when first received, and when fully present in the filter.)

This is a form of correlation detection. Convolving the received signal with a filter that is the time-reversed copy of the waveform being searched for is equivalent to correlating the received signal with that waveform. The output is the sum of a delayed, gain-scaled autocorrelation of the signal and the cross-correlation of the noise with the signal. In general, the more “unlike noise” the signal is, the better the output will be.

The strangest implementation uses a signal that is a pseudo-random sequence. As long as the input noise and the pseudo-random sequence are uncorrelated, it works great … as in the Global Positioning System (GPS).

This is also the basis for “Direct Sequence Spread Spectrum” (DSSS) communications.
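A minimal discrete-time sketch of this correlation detection, assuming a 31-chip ±1 pseudo-random burst produced by a 5-stage shift-register recurrence. The recurrence, delay, amplitude, noise level, and seed are illustrative choices, not values from the notes. The receive filter is the time-reversed burst, so its output should peak when the whole burst is inside the filter, at sample delay + N − 1, one filter length after the time-of-flight.

```python
import random


def m_sequence_31():
    """31-chip +/-1 sequence from a[n+5] = a[n+3] XOR a[n]; the characteristic
    polynomial x^5 + x^3 + 1 is primitive, so the period is 31 (maximal length)."""
    bits = [1, 0, 0, 0, 0]
    while len(bits) < 31:
        bits.append(bits[-2] ^ bits[-5])
    return [1.0 if b else -1.0 for b in bits]


def convolve(x, h):
    """Full linear convolution y[n] = sum_k h[k] x[n - k]."""
    y = [0.0] * (len(x) + len(h) - 1)
    for n in range(len(x)):
        for k in range(len(h)):
            y[n + k] += h[k] * x[n]
    return y


pn = m_sequence_31()
N = len(pn)
delay, A, sigma, L = 60, 1.0, 0.5, 200      # assumed scenario parameters

rng = random.Random(3800)                   # fixed seed for repeatability
received = [rng.gauss(0.0, sigma) for _ in range(L)]
for i, chip in enumerate(pn):               # A * s(n - delay) + noise
    received[delay + i] += A * chip

h = pn[::-1]                                # matched filter: time-reversed burst
z = convolve(received, h)
peak = max(range(len(z)), key=lambda n: z[n])
print("peak at sample", peak, "expected", delay + N - 1)
```

The peak value is about A·N = 31, while the noise contributes a correlation with standard deviation of only about σ√N ≈ 2.8; the sequence's periodic autocorrelation of −1 at every nonzero shift is what makes it so “unlike noise”.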


Matched Filters

Classic examples: Radar or sonar pulses.

See: http://en.wikipedia.org/wiki/Matched_filter

The discrete implementation can be quite interesting ….

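Define an input signal as $s(n) + v(n)$ and a filtered output signal as $s_o(n) + v_o(n)$. For a discrete-time linear system with an N-tap filter, we have:

$$s_o(n) + v_o(n) = \sum_{k=0}^{N-1} h(k)\left[s(n-k) + v(n-k)\right]$$

$$s_o(n) + v_o(n) = \sum_{k=0}^{N-1} h(k)\,s(n-k) + \sum_{k=0}^{N-1} h(k)\,v(n-k) = \mathbf{h}^H\mathbf{s} + \mathbf{h}^H\mathbf{v}$$

Using a notational shortcut (vector math concepts, detection and estimation theory): for real-valued taps, $\mathbf{h} = [h(0), \ldots, h(N-1)]^T$, $\mathbf{s} = [s(n), \ldots, s(n-N+1)]^T$, and $\mathbf{v}$ is formed like $\mathbf{s}$. Then

$$\text{SNR}_{out} = \frac{P_{Signal}}{P_{Noise}} = \frac{E\left[|s_o(n)|^2\right]}{E\left[|v_o(n)|^2\right]} = \frac{\mathbf{h}^H\mathbf{s}\left(\mathbf{h}^H\mathbf{s}\right)^H}{E\left[\mathbf{h}^H\mathbf{v}\left(\mathbf{h}^H\mathbf{v}\right)^H\right]} = \frac{\mathbf{h}^H\mathbf{s}\,\mathbf{s}^H\mathbf{h}}{E\left[\mathbf{h}^H\mathbf{v}\,\mathbf{v}^H\mathbf{h}\right]} = \frac{\mathbf{h}^H\mathbf{s}\,\mathbf{s}^H\mathbf{h}}{\mathbf{h}^H R_{vv}\,\mathbf{h}}$$

If the noise samples are independent, zero mean, and share a common variance $\sigma_v^2$, then $R_{vv} = \sigma_v^2 I$ and

$$\text{SNR}_{out} = \frac{\mathbf{h}^H\mathbf{s}\,\mathbf{s}^H\mathbf{h}}{\mathbf{h}^H\sigma_v^2 I\,\mathbf{h}} = \frac{1}{\sigma_v^2}\,\frac{\mathbf{h}^H\mathbf{s}\,\mathbf{s}^H\mathbf{h}}{\mathbf{h}^H\mathbf{h}}$$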

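Using Schwarz’s inequality

(http://en.wikipedia.org/wiki/Cauchy%E2%80%93Schwarz_inequality )

$$\left|\mathbf{h}^H\mathbf{s}\right|^2 = \mathbf{h}^H\mathbf{s}\,\mathbf{s}^H\mathbf{h} \le \left(\mathbf{h}^H\mathbf{h}\right)\left(\mathbf{s}^H\mathbf{s}\right)$$

$$\text{SNR}_{out} \le \frac{1}{\sigma_v^2}\,\frac{\left(\mathbf{h}^H\mathbf{h}\right)\left(\mathbf{s}^H\mathbf{s}\right)}{\mathbf{h}^H\mathbf{h}} = \frac{\mathbf{s}^H\mathbf{s}}{\sigma_v^2}$$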

To achieve the maximum SNR, the equality condition of Schwarz’s inequality must hold:

$$\mathbf{h}^H\mathbf{s}\,\mathbf{s}^H\mathbf{h} = \left(\mathbf{h}^H\mathbf{h}\right)\left(\mathbf{s}^H\mathbf{s}\right)$$


This condition can be met for

$$\mathbf{h} = K\,\mathbf{s}$$

or, in terms of time samples,

$$h(k) = K\,s(n-k)\,u(k), \quad k = 0, 1, \ldots, N-1$$

where K is an arbitrary gain constant.
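A small numeric check of the white-noise result, using a hypothetical 5-sample real signal vector and an assumed noise variance: any $\mathbf{h} = K\mathbf{s}$ reaches $\mathbf{s}^H\mathbf{s}/\sigma_v^2$ regardless of K, while randomly drawn filters stay below it, as Schwarz’s inequality requires.

```python
import random


def snr_white(h, s, var_v):
    """SNR_out = (h^T s)^2 / (var_v * h^T h) for real vectors and white noise."""
    hs = sum(hk * sk for hk, sk in zip(h, s))
    hh = sum(hk * hk for hk in h)
    return hs * hs / (var_v * hh)


s = [0.5, 1.0, -0.7, 0.2, 0.9]     # hypothetical signal vector s(n-k), k = 0..4
var_v = 0.25                       # assumed noise variance sigma_v^2
bound = sum(x * x for x in s) / var_v    # s^H s / sigma_v^2

# h = K s reaches the bound for any nonzero gain K
matched_snrs = [snr_white([K * x for x in s], s, var_v) for K in (1.0, -3.0, 0.01)]

# Randomly drawn filters never exceed the bound (Schwarz's inequality)
rng = random.Random(1)
best_random = max(
    snr_white([rng.uniform(-1.0, 1.0) for _ in s], s, var_v) for _ in range(1000)
)
print("bound:", bound)
print("matched, K = 1, -3, 0.01:", matched_snrs)
print("best of 1000 random filters:", best_random)
```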

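An alternate computation plays some different “tricks”. Starting again from

$$\text{SNR}_{out} = \frac{\mathbf{h}^H\mathbf{s}\,\mathbf{s}^H\mathbf{h}}{E\left[\mathbf{h}^H\mathbf{v}\,\mathbf{v}^H\mathbf{h}\right]} = \frac{\mathbf{h}^H\mathbf{s}\,\mathbf{s}^H\mathbf{h}}{\mathbf{h}^H R_{vv}\,\mathbf{h}}$$

factor the noise correlation matrix with its Hermitian square root, $R_{vv} = R_{vv}^{1/2}R_{vv}^{1/2}$, and insert $I = R_{vv}^{1/2}R_{vv}^{-1/2}$ next to $\mathbf{s}$:

$$\text{SNR}_{out} = \frac{\left|\mathbf{h}^H R_{vv}^{1/2}\,R_{vv}^{-1/2}\mathbf{s}\right|^2}{\mathbf{h}^H R_{vv}^{1/2}R_{vv}^{1/2}\mathbf{h}} = \frac{\left|\left(R_{vv}^{1/2}\mathbf{h}\right)^H\left(R_{vv}^{-1/2}\mathbf{s}\right)\right|^2}{\left(R_{vv}^{1/2}\mathbf{h}\right)^H\left(R_{vv}^{1/2}\mathbf{h}\right)}$$

Again using Schwarz’s inequality

(http://en.wikipedia.org/wiki/Cauchy%E2%80%93Schwarz_inequality )

$$\left|\left(R_{vv}^{1/2}\mathbf{h}\right)^H\left(R_{vv}^{-1/2}\mathbf{s}\right)\right|^2 \le \left(R_{vv}^{1/2}\mathbf{h}\right)^H\left(R_{vv}^{1/2}\mathbf{h}\right)\left(R_{vv}^{-1/2}\mathbf{s}\right)^H\left(R_{vv}^{-1/2}\mathbf{s}\right)$$

so that

$$\text{SNR}_{out} \le \left(R_{vv}^{-1/2}\mathbf{s}\right)^H\left(R_{vv}^{-1/2}\mathbf{s}\right) = \mathbf{s}^H R_{vv}^{-1}\mathbf{s}$$

Notice that this sets an upper bound on the SNR regardless of the filter used!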

Equality occurs when

$$R_{vv}^{1/2}\mathbf{h} = K\,R_{vv}^{-1/2}\mathbf{s} \quad \text{or} \quad \mathbf{h} = K\,R_{vv}^{-1}\mathbf{s}$$

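This result can be “normalized” if we let

$$K = \frac{1}{\mathbf{s}^H R_{vv}^{-1}\mathbf{s}}, \quad \text{resulting in} \quad \mathbf{h} = \frac{R_{vv}^{-1}\mathbf{s}}{\mathbf{s}^H R_{vv}^{-1}\mathbf{s}}$$

With this choice $\mathbf{h}^H\mathbf{s} = 1$, so the filter passes the signal with unit gain.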

If we again assume “iid” noise samples, $R_{vv} = \sigma_v^2 I$ and we get a matched filter:

$$\mathbf{h} = \frac{K}{\sigma_v^2}\,I\,\mathbf{s} = \frac{K}{\sigma_v^2}\,\mathbf{s}$$

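Looking at the maximum SNR,

$$\text{SNR}_{out,max} = \frac{1}{\sigma_v^2}\,\mathbf{s}^H\mathbf{s} = N\,\frac{S^2}{\sigma_v^2}$$

where $S^2$ is the average power of the N signal samples. This is N times the ratio of the signal-sample power to the noise-sample power! (DSSS!)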
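The sketch below compares the normalized $\mathbf{h} = R_{vv}^{-1}\mathbf{s}/(\mathbf{s}^H R_{vv}^{-1}\mathbf{s})$ with the white-noise matched filter $\mathbf{h} = \mathbf{s}$ when the noise is colored. It assumes real-valued data, an AR(1)-style noise correlation $R_{vv}[i][j] = \rho^{|i-j|}$ with $\rho = 0.8$, and an alternating ±1 signal; all three are illustrative choices. A small Gaussian-elimination solver stands in for the matrix inverse so the block needs only the standard library.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(A, x):
    return [dot(row, x) for row in A]


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]      # augmented matrix
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def snr(h, s, R):
    """SNR_out = (h^T s)^2 / (h^T R_vv h) for real vectors."""
    return dot(h, s) ** 2 / dot(h, matvec(R, h))


# Hypothetical colored noise: R_vv[i][j] = rho^|i-j| (unit variance), assumed.
N, rho = 8, 0.8
R = [[rho ** abs(i - j) for j in range(N)] for i in range(N)]
s = [1.0 if k % 2 == 0 else -1.0 for k in range(N)]    # hypothetical signal

Rinv_s = solve(R, s)
bound = dot(s, Rinv_s)                 # s^H R_vv^{-1} s
h_opt = [x / bound for x in Rinv_s]    # normalized h = R^{-1} s / (s^H R^{-1} s)
snr_opt = snr(h_opt, s, R)
snr_mf = snr(s, s, R)                  # white-noise matched filter h = s
gain = dot(h_opt, s)                   # h^H s, should be 1
print(f"bound {bound:.3f}  optimum {snr_opt:.3f}  matched {snr_mf:.3f}  gain {gain:.3f}")
```

For this case the bound works out to 64 (the inverse of this correlation matrix is tridiagonal), the normalized optimum reaches it with unit signal gain, and the plain matched filter falls short (about 49) because it ignores the noise correlation. Setting `rho = 0` makes $R_{vv} = I$, and both filters then reach $\mathbf{s}^H\mathbf{s} = N$.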


9-6 Another optimal solution: Systems that Minimize the Mean-Square Error between the desired output and the actual output

The error function is

$$Err(t) = X(t) - Y(t)$$

where

$$Y(t) = \int_0^\infty h(\lambda)\left[X(t-\lambda) + N(t-\lambda)\right]d\lambda$$

Performed in the Laplace domain:

$$F_E(s) = F_X(s) - F_Y(s) = F_X(s) - H(s)\left[F_X(s) + F_N(s)\right]$$

$$F_E(s) = F_X(s)\left[1 - H(s)\right] - H(s)\,F_N(s)$$

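Computing the error power (with the signal and noise uncorrelated, so no cross-spectral terms appear):

$$E\left[Err^2\right] = \frac{1}{2\pi j}\int_{-j\infty}^{j\infty}\left\{S_{XX}(s)\left[1-H(s)\right]\left[1-H(-s)\right] + S_{NN}(s)\,H(s)H(-s)\right\}ds$$

$$E\left[Err^2\right] = \frac{1}{2\pi j}\int_{-j\infty}^{j\infty}\left\{\left[S_{XX}(s)+S_{NN}(s)\right]H(s)H(-s) - S_{XX}(s)H(s) - S_{XX}(s)H(-s) + S_{XX}(s)\right\}ds$$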

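Defining

$$F_C(s)\,F_C(-s) = S_{XX}(s) + S_{NN}(s)$$

the error power can be rewritten by completing the square:

$$E\left[Err^2\right] = \frac{1}{2\pi j}\int_{-j\infty}^{j\infty}\left\{\left[F_C(s)H(s) - \frac{S_{XX}(s)}{F_C(-s)}\right]\left[F_C(-s)H(-s) - \frac{S_{XX}(s)}{F_C(s)}\right] + \frac{S_{XX}(s)\,S_{NN}(s)}{F_C(s)\,F_C(-s)}\right\}ds$$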

We cannot do much about the last term, but we can minimize the terms containing H(s). Therefore, we focus on making

$$F_C(s)H(s) - \frac{S_{XX}(s)}{F_C(-s)} = 0$$

or

$$H(s) = \frac{S_{XX}(s)}{F_C(s)\,F_C(-s)} = \frac{S_{XX}(s)}{S_{XX}(s) + S_{NN}(s)}$$

A nice idea, but this H(s) is symmetric in the s-plane (its poles appear in mirror-image pairs in both the left and right half-planes), and therefore it does not represent a causal system!

So what can we do …
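To see what the unconstrained (non-causal) solution achieves, the sketch evaluates the error power on the $j\omega$ axis, where $s = j\omega$ turns $\frac{1}{2\pi j}\int ds$ into $\frac{1}{2\pi}\int d\omega$. It assumes hypothetical spectra $S_{XX}(\omega) = 2/(\omega^2+1)$ (unit signal power) and white noise $S_{NN}(\omega) = 1$. For these, $H = S_{XX}/(S_{XX}+S_{NN}) = 2/(\omega^2+3)$ and the remaining term gives $\frac{1}{2\pi}\int \frac{2}{\omega^2+3}\,d\omega = 1/\sqrt{3}$. Scaling H up or down should only increase the error.

```python
import math


# Hypothetical spectra (not from the notes): S_XX(w) = 2/(w^2 + 1), i.e.
# S_XX(s) = 2/(1 - s^2) with unit signal power, and white noise S_NN(w) = 1.
def S_xx(w):
    return 2.0 / (w * w + 1.0)


def S_nn(w):
    return 1.0


def mse(H, n=20000):
    """(1/2pi) * integral over all w of S_XX|1-H|^2 + S_NN|H|^2.
    Maps w = tan(theta) onto (-pi/2, pi/2) and applies the midpoint rule."""
    d = math.pi / n
    total = 0.0
    for i in range(n):
        th = -math.pi / 2 + (i + 0.5) * d
        w = math.tan(th)
        Hw = H(w)
        f = S_xx(w) * abs(1 - Hw) ** 2 + S_nn(w) * abs(Hw) ** 2
        total += f * d / math.cos(th) ** 2        # dw = sec^2(theta) d(theta)
    return total / (2 * math.pi)


def H_opt(w):
    """Non-causal optimum H = S_XX/(S_XX + S_NN), which is 2/(w^2 + 3) here."""
    return S_xx(w) / (S_xx(w) + S_nn(w))


e_opt = mse(H_opt)
e_low = mse(lambda w: 0.8 * H_opt(w))
e_high = mse(lambda w: 1.2 * H_opt(w))
print(f"optimum {e_opt:.4f} (1/sqrt(3) = {1 / math.sqrt(3):.4f})  "
      f"x0.8 {e_low:.4f}  x1.2 {e_high:.4f}")
```

The substitution $\omega = \tan\theta$ is just a convenient way to integrate over the whole frequency axis with a finite grid; any accurate quadrature would do.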


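Let’s look at some interpretations. First, choose $F_C(s)$ to contain the poles and zeros of $S_{XX}(s) + S_{NN}(s)$ that lie in the left half-plane, so that $F_C(-s)$ contains those in the right half-plane.

Step 1: Let

$$H_1(s) = \frac{1}{F_C(s)}$$

which should then be causal. Note that for this filter

$$\left[S_{XX}(s) + S_{NN}(s)\right]H_1(s)\,H_1(-s) = \frac{F_C(s)\,F_C(-s)}{F_C(s)\,F_C(-s)} = 1$$

This is called a whitening filter, as it forces the signal-plus-noise PSD to unity (white noise).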
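A quick check of the factorization and the whitening property for one hypothetical case, $S_{XX}(s) = 2/(1-s^2)$ and $S_{NN}(s) = 1$ (assumed, not from the notes). Then $S_{XX}+S_{NN} = (3-s^2)/(1-s^2)$, which splits into $F_C(s) = (s+\sqrt3)/(s+1)$, with its pole at −1 and zero at $-\sqrt3$ both in the left half-plane, times $F_C(-s)$. The sketch confirms on the $j\omega$ axis that $F_C(s)F_C(-s)$ reproduces the spectrum and that $H_1 = 1/F_C$ flattens it to 1.

```python
import math

# Hypothetical spectra (not from the notes): S_XX(s) = 2/(1 - s^2), S_NN(s) = 1.
# S_XX + S_NN = (3 - s^2)/(1 - s^2) = F_C(s) F_C(-s), F_C(s) = (s + sqrt(3))/(s + 1).
R3 = math.sqrt(3.0)


def F_C(s):
    return (s + R3) / (s + 1)


def S_sum(s):
    """S_XX(s) + S_NN(s) for the assumed spectra."""
    return 2.0 / (1 - s * s) + 1.0


def H1(s):
    """Whitening filter H1(s) = 1/F_C(s) = (s + 1)/(s + sqrt(3))."""
    return 1.0 / F_C(s)


for w in (0.0, 0.5, 2.0, 10.0):
    s = 1j * w                                   # evaluate on the jw axis
    factored = F_C(s) * F_C(-s)                  # should equal S_XX + S_NN
    whitened = abs(H1(s)) ** 2 * S_sum(s).real   # PSD after H1, should be 1
    print(f"w = {w:4.1f}: F_C(s)F_C(-s) = {factored.real:.6f}, "
          f"S_XX + S_NN = {S_sum(s).real:.6f}, whitened PSD = {whitened:.6f}")
```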

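Step 2: Letting

$$H(s) = H_1(s)\,H_2(s) = \frac{H_2(s)}{F_C(s)}$$

the error power becomes

$$E\left[Err^2\right] = \frac{1}{2\pi j}\int_{-j\infty}^{j\infty}\left\{\left[F_C(s)\frac{H_2(s)}{F_C(s)} - \frac{S_{XX}(s)}{F_C(-s)}\right]\left[F_C(-s)\frac{H_2(-s)}{F_C(-s)} - \frac{S_{XX}(s)}{F_C(s)}\right] + \frac{S_{XX}(s)\,S_{NN}(s)}{F_C(s)\,F_C(-s)}\right\}ds$$

$$E\left[Err^2\right] = \frac{1}{2\pi j}\int_{-j\infty}^{j\infty}\left\{\left[H_2(s) - \frac{S_{XX}(s)}{F_C(-s)}\right]\left[H_2(-s) - \frac{S_{XX}(s)}{F_C(s)}\right] + \frac{S_{XX}(s)\,S_{NN}(s)}{F_C(s)\,F_C(-s)}\right\}ds$$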

Minimizing the terms containing $H_2(s)$, we must now focus on

$$H_2(s) = \frac{S_{XX}(s)}{F_C(-s)} \quad \text{and} \quad H_2(-s) = \frac{S_{XX}(s)}{F_C(s)}$$

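Since $H_2(s)$ must be causal, define it using only the appropriate left- or right-half-plane poles: expand $S_{XX}(s)/F_C(-s)$ in partial fractions and keep the terms with left-half-plane (LHP) poles. Let

$$H_2(s) = \left[\frac{S_{XX}(s)}{F_C(-s)}\right]_{LHP} \quad \text{and} \quad H_2(-s) = \left[\frac{S_{XX}(s)}{F_C(s)}\right]_{RHP}$$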

The composite filter is then

$$H(s) = H_1(s)\,H_2(s) = \frac{1}{F_C(s)}\left[\frac{S_{XX}(s)}{F_C(-s)}\right]_{LHP}$$

This solution is often called a Wiener filter and is widely applied when the signal and noise statistics are known a priori!
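Working one hypothetical example through the causal solution, with assumed spectra $S_{XX}(s) = 2/(1-s^2)$, $S_{NN}(s) = 1$, and $F_C(s) = (s+\sqrt3)/(s+1)$:

$$\frac{S_{XX}(s)}{F_C(-s)} = \frac{2}{(s+1)(\sqrt3 - s)} = \frac{\sqrt3-1}{s+1} + \frac{\sqrt3-1}{\sqrt3-s}$$

so the LHP part is $(\sqrt3-1)/(s+1)$ and $H(s) = \frac{\sqrt3-1}{s+\sqrt3}$. The sketch checks numerically, on the $j\omega$ axis, that this causal filter’s mean-square error is $\sqrt3 - 1 \approx 0.732$. That is larger than the non-causal optimum $1/\sqrt3 \approx 0.577$ (the price of causality) and smaller than the error of nearby first-order causal filters.

```python
import math

# Hypothetical example (not from the notes): S_XX(w) = 2/(w^2 + 1), S_NN(w) = 1.
r3 = math.sqrt(3.0)


def S_xx(w):
    return 2.0 / (w * w + 1.0)


def mse(H, n=20000):
    """(1/2pi) * integral over all w of S_XX|1-H|^2 + S_NN|H|^2 with S_NN = 1,
    using w = tan(theta) and the midpoint rule on (-pi/2, pi/2)."""
    d = math.pi / n
    total = 0.0
    for i in range(n):
        th = -math.pi / 2 + (i + 0.5) * d
        w = math.tan(th)
        Hw = H(w)
        f = S_xx(w) * abs(1 - Hw) ** 2 + abs(Hw) ** 2
        total += f * d / math.cos(th) ** 2        # dw = sec^2(theta) d(theta)
    return total / (2 * math.pi)


def first_order(c, a):
    """Causal H(s) = c/(s + a), returned as a function of w with s = jw."""
    return lambda w: c / (1j * w + a)


e_causal = mse(first_order(r3 - 1, r3))            # causal Wiener filter
e_noncausal = mse(lambda w: 2.0 / (w * w + 3.0))   # non-causal optimum
e_perturbed = [mse(first_order(0.6, r3)), mse(first_order(r3 - 1, 1.5))]
print(f"causal {e_causal:.4f} (sqrt(3) - 1 = {r3 - 1:.4f})")
print(f"non-causal {e_noncausal:.4f}  perturbed {[round(e, 4) for e in e_perturbed]}")
```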


Eigenvalue Based Filters

We can continue a derivation started in the previous class discussion about time-sample filters,

matrices and eigenvalues.

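$$y(k) = \mathbf{w}(k)\,\mathbf{x}(k), \qquad \mathbf{x}(k) = \mathbf{s}(k) + \mathbf{n}(k)$$

where $\mathbf{w}(k)$ is a row vector of filter weights and $\mathbf{x}(k)$ is a column vector of input samples.

The expected value

$$E\left[y(k)\,y(k)^H\right] = E\left[\mathbf{w}(k)\mathbf{x}(k)\left(\mathbf{w}(k)\mathbf{x}(k)\right)^H\right] = E\left[\mathbf{w}(k)\,\mathbf{x}(k)\mathbf{x}(k)^H\,\mathbf{w}(k)^H\right]$$

$$E\left[y(k)\,y(k)^H\right] = \mathbf{w}(k)\,E\left[\mathbf{x}(k)\mathbf{x}(k)^H\right]\mathbf{w}(k)^H = \mathbf{w}(k)\,R_{XX}(k)\,\mathbf{w}(k)^H$$

For a WSS input

$$E\left[y(k)\,y(k)^H\right] = \mathbf{w}(k)\,R_{XX}\,\mathbf{w}(k)^H$$

If the signal and noise are zero mean and uncorrelated, this becomes

$$E\left[y(k)\,y(k)^H\right] = \mathbf{w}(k)\left(R_{SS} + R_{NN}\right)\mathbf{w}(k)^H$$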

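How do we maximize the output SNR?

$$\frac{P_{Signal}}{P_{Noise}} = \frac{\mathbf{w}(k)\,R_{SS}\,\mathbf{w}(k)^H}{\mathbf{w}(k)\,R_{NN}\,\mathbf{w}(k)^H}$$

If we assume that the noise is white,

$$R_{NN} = \begin{bmatrix} \sigma_N^2 & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & \sigma_N^2 \end{bmatrix} = \sigma_N^2 I$$

$$\frac{P_{Signal}}{P_{Noise}} = \frac{\mathbf{w}(k)\,R_{SS}\,\mathbf{w}(k)^H}{\sigma_N^2\,\mathbf{w}(k)\,I\,\mathbf{w}(k)^H} = \frac{1}{\sigma_N^2}\,\frac{\mathbf{w}(k)\,R_{SS}\,\mathbf{w}(k)^H}{\mathbf{w}(k)\,\mathbf{w}(k)^H}$$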


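Factoring the signal autocorrelation matrix as $R_{SS} = R_S R_S^H$ generates the following, where $R_S = R_{SS}^{1/2}$ is taken as the Hermitian square root. (A Cholesky factor also satisfies $R_{SS} = R_S R_S^H$, but it is triangular, so its eigenvalues are just its diagonal entries and in general are not the square roots of the eigenvalues of $R_{SS}$; the eigenvalue argument below needs the Hermitian square root.) Here, the numerator should suggest that an eigenvalue computation could provide a degree of simplification.

$$\frac{P_{Signal}}{P_{Noise}} = \frac{1}{\sigma_N^2}\,\frac{\mathbf{w}(k)\,R_S R_S^H\,\mathbf{w}(k)^H}{\mathbf{w}(k)\,\mathbf{w}(k)^H}$$

Once formed, the eigenvalue equation to solve is

$$\mathbf{w}(k)\,R_S = \lambda\,\mathbf{w}(k)$$

which results in solutions for the eigenvalues and eigenvectors of the form

$$\frac{P_{Signal}}{P_{Noise}} = \frac{1}{\sigma_N^2}\,\frac{\lambda^2\,\mathbf{w}(k)\,\mathbf{w}(k)^H}{\mathbf{w}(k)\,\mathbf{w}(k)^H} = \frac{\lambda^2}{\sigma_N^2}$$

Selecting the maximum eigenvalue and its eigenvector as the filter weights maximizes the SNR!

$$\left(\frac{P_{Signal}}{P_{Noise}}\right)_{max} = \frac{\lambda_{max}^2}{\sigma_N^2}$$

Equivalently, $\lambda_{max}^2$ is the largest eigenvalue of $R_{SS}$ itself.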

Note: If the original noise is not white, you can provide a whitening filter prior to forming the

signal autocorrelation and performing an eigenvector solution.
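A minimal sketch for real-valued data, assuming a hypothetical 2-tap signal autocorrelation matrix with correlation 0.9 and $\sigma_N^2 = 0.1$. Power iteration finds the largest eigenvalue of $R_{SS}$ and its eigenvector, which give the maximum SNR $\lambda_{max}(R_{SS})/\sigma_N^2$ and the weights. For contrast, it also prints the squared diagonal of a Cholesky factor of $R_{SS}$ (the squared eigenvalues of that triangular factor), which do not reproduce the largest eigenvalue of $R_{SS}$.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(A, x):
    return [dot(row, x) for row in A]


def power_iteration(R, iters=200):
    """Largest eigenvalue and unit eigenvector of a symmetric matrix with
    positive entries (power iteration from an all-ones start vector)."""
    w = [1.0] * len(R)
    for _ in range(iters):
        v = matvec(R, w)
        norm = math.sqrt(dot(v, v))
        w = [x / norm for x in v]
    return dot(w, matvec(R, w)), w      # Rayleigh quotient with ||w|| = 1


def cholesky(R):
    """Lower-triangular L with R = L L^T (R symmetric positive definite)."""
    n = len(R)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            acc = R[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(acc) if i == j else acc / L[j][j]
    return L


# Hypothetical 2-tap example (assumed values): correlated signal samples,
# white noise with sigma_N^2 = 0.1.
R_ss = [[1.0, 0.9], [0.9, 1.0]]
sigma2 = 0.1

lam_max, w_opt = power_iteration(R_ss)
L = cholesky(R_ss)
chol_diag_sq = [L[i][i] ** 2 for i in range(len(L))]

print("largest eigenvalue of R_SS:", lam_max, "-> max SNR:", lam_max / sigma2)
print("optimal weights:", w_opt)
print("squared Cholesky diagonal:", chol_diag_sq)
```

Here the largest eigenvalue is 1.9 (weights proportional to [1, 1]), giving a maximum SNR of 19, while the squared Cholesky diagonal is [1.0, 0.19]. The all-ones start vector is a convenience that works for this positive matrix; a general implementation would start from a random vector.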

For much more, take a state-space systems course and then a course in estimation theory. These are usually taken at the graduate (Master’s degree) level.


Adaptive Filter

If a desired signal reference is available, we may wish to adapt a system to minimize the difference between the desired signal and the filter output.

Interference Cancellation Example

Cancellation of unknown interference that is present along with a desired signal of interest.

Two sensors: one receives signal + interference, the other receives just the interference.

The reference signal (interference) is used to cancel the interference in the primary signal (signal + interference).

Classic examples: fetal heart tone monitors, spatial beamforming, noise-cancelling headphones.

From: S. Haykin, Adaptive Filter Theory, 5th ed., Prentice-Hall, 2014

The “reference signal” contains the unwanted interference. The goal of the adaptive filter is to match the reference signal to the “interference” in the “primary signal” and force the output “difference error” to be minimized in power. Since the reference contains only the “interference”, the filter can only cancel interference; assuming the desired signal is uncorrelated with the reference, the “power minimum” solution is the one in which the interference is completely removed!

These techniques are based on the Wiener filter solution. Although the signal and interference statistics are not known a priori (before the filter gets started), they can be estimated after a number of input samples and used to form the filter coefficients. Then, as time continues, the estimates should improve until the adaptive coefficients approach those that would be computed with a-priori information.

The advantage: an adaptive filter can still work when the statistics are slowly time varying!

Note: An application using an LMS adaptive filter is not too difficult for a senior project!

(noise cancelling headphones, remove 60 cycle hum, etc.)


An example of cancelling an interfering waveform is in your textbook, pp. 407-411.

[Figure: Figure 1, upper panel “original” (signal plus 60 Hz interference) and lower panel “Filtered” (canceller output), both versus Time (sec); Figure 2, “Adaptive Weights in Time”, showing weights a1 to a4 versus Time (sec).]


Matlab

%
% Text Adaptive Filter Example (LMS interference canceller)
%
clear
close all
t=0:1/200:1;                          % 201 samples at fs = 200 Hz
% Interfering signal - 60 Hz
n=10*sin(2*pi*60*t+pi/4);
% Signal of interest and S + I (primary input)
x1=1.1*sin(2*pi*11*t);
x2=x1+n;
% Reference signal to excise (correlated with the interference only)
r=cos(2*pi*60*t);
m=0.15;                               % LMS step size
a=zeros(1,4);                         % 4 adaptive weights
z=zeros(1,201);                       % canceller output (error signal)
z(1:4)=x2(1:4);                       % first 4 outputs are the raw primary input
w=zeros(201,4);                       % weight history, one row per sample
% Adaptive weight computation and application
for k=4:200
    % LMS update: weight(i) += 2*m*error*(reference tap i)
    a(1)=a(1)+2*m*z(k)*r(k);
    a(2)=a(2)+2*m*z(k)*r(k-1);
    a(3)=a(3)+2*m*z(k)*r(k-2);
    a(4)=a(4)+2*m*z(k)*r(k-3);
    % Next output = primary input minus filtered reference
    z(k+1)=x2(k+1)-a(1)*r(k+1)-a(2)*r(k)-a(3)*r(k-1)-a(4)*r(k-2);
    w(k+1,:)=a;
end
figure(1)
subplot(2,1,1);
plot(t,x2,'k')
ylabel('original')
subplot(2,1,2)
plot(t,z,'k');grid;
ylabel('Filtered');
xlabel('Time (sec)');
figure(2)
plot(t,w);grid;
title('Adaptive Weights in Time')
xlabel('Time (sec)')
ylabel('Weights')
legend('a1','a2','a3','a4');


Future Considerations

Detection and Estimation Theory

Estimation theory is a branch of statistics that deals with estimating the values of parameters

based on measured/empirical data that has a random component. The parameters describe an

underlying physical setting in such a way that their value affects the distribution of the measured

data. An estimator attempts to approximate the unknown parameters using the measurements.

See: http://en.wikipedia.org/wiki/Estimation_theory

There are three types of estimation:

(from: S. Haykin, Adaptive Filter Theory, 5th ed., Prentice-Hall, 2014)

Filtering (causal)

Smoothing (non-causal)

Prediction (causal, predicting the future)


Topics encountered in estimation theory include:

Linear Optimal Filters

Requires a priori statistical/probabilistic information about the signal and environment.

Matched filters, Wiener filters and Kalman filters

Adaptive filters

Self-designing filters that “internalize” the statistical/probabilistic information using recursive algorithms that, when well designed, approach the performance of the linear optimal filter.

Applied when complete knowledge of the environment is not available a priori

Example course notes:

ECE6950 Adaptive Systems

Section 2

Section 3

Section 5
