Journal of Systems Architecture 58 (2012) 112–125

Contents lists available at SciVerse ScienceDirect

Journal of Systems Architecture

journal homepage: www.elsevier.com/locate/sysarc

Instruction set architectural guidelines for embedded packet-processing engines

Mostafa E. Salehi ⇑, Sied Mehdi Fakhraie, Amir Yazdanbakhsh

Nano Electronics Center of Excellence, School of Electrical and Computer Engineering, Faculty of Engineering, University of Tehran, Tehran 14395-515, Iran

a r t i c l e

i n f o

Article history:

Received 7 September 2009

Received in revised form 16 January 2012

Accepted 25 February 2012

Available online 5 March 2012

Keywords:

Packet-processing engine

Application-specific processor

Benchmark profiling

Architectural guideline

a b s t r a c t

This paper presents instruction set architectural guidelines for improving general-purpose embedded

processors to optimally accommodate packet-processing applications. Similar to other embedded processors such as media processors, packet-processing engines are deployed in embedded applications, where

cost and power are as important as performance. In this domain, the growing demands for higher bandwidth and performance besides the ongoing development of new networking protocols and applications

call for flexible power- and performance-optimized engines.

The instruction set architectural guidelines are extracted from an exhaustive simulation-based profiledriven quantitative analysis of different packet-processing workloads on 32-bit versions of two wellknown general-purpose processors, ARM and MIPS. This extensive study has revealed the main performance challenges and tradeoffs in development of evolution path for survival of such general-purpose

processors with optimum accommodation of packet-processing functions for future switching-intensive

applications. Architectural guidelines include types of instructions, branch offset size, displacement and

immediate addressing modes for memory access along with the effective size of these fields, data types of

memory operations, and also new branch instructions.

The effectiveness of the proposed guidelines is evaluated with the development of a retargetable compilation and simulation framework. Developing the HDL model of the optimized base processor for networking applications and using a logic synthesis tool, we show that enhanced area, power, delay, and

power per watt measures are achieved.

Ó 2012 Elsevier B.V. All rights reserved.

1. Introduction

High-performance and flexible network processors are expected

to comply the user demands for improved networking services and

packet-processing tasks at different line speeds. According to the

ever-increasing demand for higher bandwidth, the performance

bottleneck of networks has been transferred to the processing elements and consequently there has been a tremendous effort in

speeding these modules. Traditional PEs are either based on custom hardware blocks or general-purpose processors (GPPs). Custom ASIC designs have better performance, higher manufacturing

costs, and lower flexibility; however, GPPs are more flexible but

are not speed-power-area optimized for networking applications.

According to various performance requirements of network workloads, there is a plenty of work on the design of network processor

architecture and instruction set. Some designs exploit the flexibility of GPPs and use as many GPPs as required to satisfy the performance requirements. For example, Niemann et al. [1] exploit a

massive parallel-processing structure of simple processing

⇑ Corresponding author.

E-mail addresses: mersali@ut.ac.ir, mostafa.salehi@gmail.com (M.E. Salehi),

fakhraie@ut.ac.ir (S.M. Fakhraie), a.yazdanbakhsh@ece.ut.ac.ir (A. Yazdanbakhsh).

1383-7621/$ - see front matter Ó 2012 Elsevier B.V. All rights reserved.

doi:10.1016/j.sysarc.2012.02.004

elements, and due to its regularity, the architecture can be scaled

to accommodate various performance and throughput requirements. As an alternative to employing large number of simple

GPPs, Vlachos et al. [2] introduce a high performance packet-processing engine (PPE) with a three-stage pipeline consisting of three

special purpose processors (SPPs). The proposed SPPs are microprogrammed processors optimized for header field extraction,

header field modification, packet verification, bit and byte processing, and leave only some generic software execution to the central

processing core.

SPPs are also used in many of the commercial network processors

(NPs) to improve packet processing performance. Sixteen programmable processing units are used in Motorola C-5 [3] in a parallel

configuration. IBM PowerNP [4] introduce a multi-processor NP

architecture with embedded processor complex (EPC). The EPC has

a PowerPC core and 16 programmable protocol processors. Intel

IXP1250 [5] uses six micro-engines (MEs). Each ME is a 32-bit RISC

processor that do the majority of the network processing tasks such

as packet header inspection and modification, classification, routing,

metering, etc. The ME instruction set is a mix of conventional RISC

instructions with additional features specifically tailored for network processing. Another NP called FlexPathNP [6,7] exploits the

diverse processing requirements of packet flows and sends the

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

packets with relatively simple processing requirements directly to

the traffic manager unit. By this technique the CPU cluster computing capacity will be used optimally and the processing performance

is increased. A cache-based network processor architecture is proposed in [8] that has special process-learning cache mechanism to

memorize every packet-processing activity with all table lookup results of those packets that have the same information such as the

same pair of source and destination addresses. The memorized results are then applied to subsequent packets that have the same

information in their headers.

Most of the previously introduced architectures and instruction

sets are based on a refined version of a well-known architecture

and instruction set. To have a reproducible analysis, we focus on

typical NP workloads and benchmarks and exploit a powerful simulator and profiler [9] to obtain some useful details of network processing benchmarks on two well-known GPPs. The results indicate

performance bottlenecks of representative packet-processing

applications when GPPs are used as the sole processing engine.

Then we use these results in accordance with quantitative study

of different network applications to extract helpful architectural

guidelines for designing optimized instruction set for packet-processing engines.

To keep up with the demands of increasing performance and

evolving network applications, the programmable network-specific PEs should support application changes and meet their heavy

processing workloads. Therefore considering flexibility for short

time to market, it is necessary to build on the existing application

development environments and users’ background on using general purpose processors (GPPs). On the other hand, means should

be provided for catching up with the increasing demand of higher

performance at affordable power and area. In this paper, we provide a solution for finding the minimum required instructions of

two most-frequently-used GPPs for packet-processing applications. In addition, a retargetable compilation and simulation

framework is developed based upon which the proposed instruction set architectural guidelines are evaluated and compared to

the base architectures.

The proposed guidelines provide a wide variety of design alternatives available to the instruction set architects including: memory addressing, addressing modes, type and size of operands,

operands for packet processing, operations for packet processing,

control flow instructions, and also propose special-purpose

instructions for packet-processing applications. These guidelines

demonstrate what future general-purpose processors need to

power-speed optimally respond to the growing number of embedded applications with switching demands. Based on the introduced

architectural guidelines, an embedded packet-processing engine

useful for a wide range of packet-processing applications can be

developed, and be used in massively parallel processing architectures for cost-sensitive demanding embedded network applications. The proposed guidelines would also be applicable to the

processing nodes of embedded applications that are responsible

for packet-processing tasks among others.

2. Analysis of packet-processing applications

Hennessy and Patterson [10] present a quantitative analysis of

instruction set architectures aimed at processors for desktops,

servers and also embedded media processors and introduce a wide

variety of design alternatives available to the instruction set architects. In this paper we present comparative results for development of embedded engines customized for packet-processing

tasks in different network applications. According to the IETF

(Internet Engineering Task Force), operations of network applications can be functionally categorized into data-plane and control-

113

plane functions [11]. The data-plane performs packet-processing

tasks such as packet fragmentation, encapsulation, editing, classification, forwarding, lookup, and encryption. While the controlplane performs congestion control, flow management, signaling,

handling higher-level protocols, and other control tasks. There

are a large variety of NP applications that contain a wide range

of different data-plane and control-plane processing tasks. To

properly evaluate network-specific processing tasks, it is necessary

to specify a workload that is typical of that environment.

CommBench [12] is composed of eight data-plane programs

that are categorized into four packet-header processing and four

packet-payload processing tasks. In a similar work NetBench [13]

contains nine applications that are representative of commercial

applications for network processors and cover small low-level code

fragments as well as large application-level programs. CommBench

and NetBench both introduce data-plane applications. NpBench

[14] targets towards both control-plane and data-plane workloads.

A tool called PacketBench is presented in [15], which provides a

framework for implementing network-processing applications

and extracting workload characteristics. For statistics collection,

PacketBench presents a number of micro-architectural and networking related metrics for four different networking applications

ranging from simple packet forwarding to complex packet payload

encryption. The profiling results of PacketBench are obtained from

ARM-based SimpleScalar [16]. Embedded Microprocessor Benchmarking Consortium (EEMBC) [17] has also developed a networking benchmark suite to reflect the performance of client and

server systems (TCPmark), and also functions mostly carried out

in infrastructure equipment (IPmark). The IPmark is intended for

developers of infrastructure equipment, while the TCPmark, which

includes the TCP benchmark, focuses on client- and server-based

network hardware.

Considering representative benchmark applications for header

and payload processing for IPv4 protocol, we have presented a simulation-based profile-driven quantitative analysis of packet-processing applications. The selected applications are IPv4-radix and

IPv4-trie as look-up and forwarding algorithms, a packet-classification algorithm called Flow-Class, Internet Protocol Security (IPSec)

and Message-Digest algorithm 5 (MD5) as payload-processing

applications. To develop efficient network processing engines, it

is important to have a detailed understanding of the workloads

associated with this processing. PacketBench provides a framework for developing network applications and extracting a set of

workload characteristics on ARM-based SimpleScalar [16] simulator. To have an architecture-independent analysis, we have identified and then modified the PacketBench profiling capabilities that

are added to ARM-based SimpleScalar and also developed a compound simulation and profiling environment for MIPS-based

SimpleScalar, yielding a MIPS-based profiling platform. MIPSbased SimpleScalar which is augmented with PacketBench profiling capabilities, reproduce the PacketBench profiling observations

on MIPS processor. The measurements which are indicative of network applications reveal the performance challenges of different

programs. The presented measurements are also dynamic, in

which, the frequency of a measured event is weighed by the number of times that the event occurs during execution of the

application.

We present the experimental results of profiling representative

network applications on 32-bit versions of ARM and MIPS processors using ARM9 and MIPSR3000 examples. ARM and MIPS family

of processors are two widely-used processors in the network-processor products. Intel IXP series of network processors use StrongARM processors that are based on ARM architecture [18], and

Broadcom BCM products [19] have used MIPS processors in communication-processor products. The comparative results of both

ARM and MIPS platforms then yield architectural guidelines for

114

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

developing application-specific processing engines for network

applications. To have a realistic analysis, we use packet traces of

real networks. An excellent repository of traces collected at several

monitoring points is maintained by the National Laboratory for Applied Network Research (NLANR/PMA) [20]. We have selected

many traces of this trace repository as our input packet traces

and the average value of the results are presented here. For each

application, the extracted properties are the frequencies of load,

store, and branch instruction, instruction distribution, instruction

patterns, frequent instruction sequences, offset size of branches,

rate of the taken branches, execution cycles, and performance

bottlenecks.

2.1. Execution time analysis

We use the execution time analysis to evaluate and compare

the performance of MIPS and ARM processors when running each

of the selected applications. To present comparative results, we ensure the reproducibility principle in reporting performance measurements, such that another researcher would duplicate the

results in different platforms. The execution time of an application

is calculated according to the following formula [21] in terms of

Instruction Count (IC), Clock Per Instruction (CPI), and Clock Period

(CP).

Execution time of application ¼ IC CPI CP

In our previous work [22], we employ this reproducible analysis

for packet-processing applications as the number of required cycles for processing a packet (IC CPI) and consequently involve

the processor architectural dependencies. IC CPI is a part of the

presented formula and the remaining parameter is CP, therefore,

having known the frequency of different processors, the reported

number of the required clock cycles of an application simply leads

to calculation of the application execution time. This then makes

different architectures universally comparable when running the

target applications. To find the instruction count and clock count

of packet-processing tasks and have a comparative analysis, we

have profiled benchmark applications on both of ARM- and

MIPS-based simulators. The results in Table 1 shows the number

of instructions and also the required clock cycles for processing a

packet in each of the specified applications using both of the MIPSand ARM-based SimpleScalar environments.

As shown in Table 1, the computational properties of the selected applications vary when they are executed with different

processors. Despite the simple instruction set of MIPS, ARM has a

more powerful instruction set. In ARM processor, each operation

can be performed conditionally according to the results of the previous instructions [23]. Furthermore, ARM supports complex

instructions that perform shift as well as arithmetic or logic operation in a single instruction. These instructions can lead to lower

instruction count in loops and also in codes with complicated logic/arithmetic operations. However, these complex instructions

complicate the pipeline architecture and may cause the reduction

of ARM clock frequency that consequently may lead to more execution time and lower performance. A smart compiler can take

advantage of complex ARM instructions and produce more optimized codes in terms of lower number of instructions. Throughout

an application compilation with ARM compiler, when the complex

instructions are not applicable, general instructions are used and

number of instructions in the generated code is expected to be less

than or equal to when the code is compiled with MIPS compiler.

According to the results of compiling selected applications with

ARM and MIPS compilers, payload processing applications have

about 18% lower instructions when compiled with ARM compiler.

However, in header processing applications ARM results are worse

than MIPS. Both of the selected cross compilers are based on

gcc2.95.2. With the same cross compiler, instruction counts of

the header processing applications in ARM are 10–80% higher than

instruction counts of these applications in MIPS (Table 1). When

multiplying the ‘‘number of clock cycles of running an application’’

with a processor, with the ‘‘clock period’’, of a specific implementation of that processor, the total execution or elapsed time is

achieved, that would make the results universally comparable

among different implementations of various processors.

2.2. The role of compiler

As shown in Table 1, the instructions count of IPV4-radix, is 80%

higher when compiled with ARM cross compiler. The instruction

count of the IPV4-radix based on its containing functions is summarized in Table 2. As shown in this table the instruction count of validate_packet and inet_ntoa functions are similar but the instruction

count of the lookup_tree in ARM is about six times more than in

MIPS.

Table 3 shows the instruction count of the sub-functions of the

lookup_tree. The instruction count of the inet_aton function in ARM

is about 10 times more than MIPS. This is because of the strtoul

function generated with ARM compiler which is optimized properly with MIPS cross compiler. This observation shows the effect

of cross compiler on the number of generated instructions when

the same gcc compiler is used.

To reveal the effect of compiler version on instruction count of

the compiled application codes and to compare different compilers

together, we obtain the results with another version of gcc for ARM

cross compiler and the instruction count of the representative

applications on both 2.95.2 and 3.4.3 versions of ARM cross compiler are calculated. According to the results (Table 4), despite

the 80% difference in IPV4-radix results in ARM and MIPS 2.95.2

cross compilers, compiling IPV4-radix with MIPS gcc2.95.2 and

ARM gcc3.4.3 cross compilers yield almost equal instruction counts

and for the other applications different versions of MIPS and ARM

cross compilers have negligible effects on instruction count of the

compiled application codes. Therefore, from now on we use the

optimum compiler results of each processor for further comparisons in this paper.

2.3. Instruction set operations

The supported operations by most instruction set architectures

are categorized in Table 5 [10]. All processors must have some

instruction support for basic system functions and generally provide a full set of the first three categories. The amount of support

for the last categories may vary from none to an extensive set of

special instructions. Floating-point instructions will be provided

Table 1

Computational complexity of the packet-processing applications based on TSH [20] traces.

ARM [22]

# of instructions

# of clock cycles

MIPS

IPV4-radix

IPV4-trie

Flow-Class

IPSec

MD5

IPV4-radix

IPV4-trie

Flow-Class

IPSec

MD5

4205

5092

206

494

152

340

100998

113108

8911

14202

2376

3630

186

398

113

274

123394

227330

11043

17570

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

Table 2

The number of instructions for different functions of the IPV4-radix when compiled

with ARM and MIPS gcc2.95 cross compilers.

Function

ARM

MIPS

validate_packet

inet_ntoa

lookup_tree

Total

115

1510

2354

4096

96

1630

397

2160

Table 3

Instructions count of lookup_treefunction based on gcc2.95.2 ARM compiler.

Function

ARM

MIPS

Bzero

inet_aton

rn_match

22

2168

137

18

213

144

Table 4

Instruction count comparison of the representative applications with ARM gcc.2.95.2

and gcc.3.4.3.

Application

IPV4-radix

Processor

ARM

gcc version

# of instructions

# of clock cycles

2.95

4164

5044

MIPS

3.4

2358

2927

2.95

2376

3630

Table 5

Categories of instruction operators [10].

Operation type

Examples

Arithmetic and

logical

Data transfer

Integer arithmetic and logical operations: add, subtract,

and, or, multiply, divide

Loads/stores (move instructions on computers with

memory addressing)

Branch, jump, procedure call and return, traps

Operating system call, virtual memory management

instructions

Floating point operations: add multiply, divide, compare

Decimal add, decimal multiply, decimal-to-character

conversions

String move, string compare, string search

Pixel and vertex operations, compression/decompression

operations

Control

System

Floating point

Decimal

String

Graphics

in any processor that is intended to be used in an application that

makes much use of floating point. Decimal and string instructions

are sometimes primitives, or may be synthesized by the compiler

from simpler instructions. Based on five SPECint92 integer programs, it is shown in [24] that the most widely-executed instructions are some simple operations of an instruction set such as

‘‘load’’, ‘‘store’’, ‘‘add’’, ‘‘subtract’’, ‘‘and’’, register-register ‘‘move’’,

and ‘‘shift’’ that account for 96% of instructions executed on the

popular Intel 8086. Hence, the architect should be sure to make

these common cases fast. Multiplies and multiply-accumulates

are added to this simple set of primitives for DSP applications.

Usage patterns of the top 15 frequently-used ARM instructions

in packet processing applications are presented in Table 6. According to this table the most frequent instructions reside in the category of primitive instructions including the operations of the first

three categories in Table 5. Thus, we have divided the instruction

set to three main categories including memory and logic/arithmetic instructions, control flow, and special purpose instructions, and

compare the profiling results of representative sample codes for

both ARM and MIPS processors in the following sections.

115

3. Quantitative analysis of network-specific instructions

To analyze the results and extract architectural guidelines for

instruction set of an optimized packet-processing engine, we have

divided the instruction set into three main categories and compared the profiling results of some representative sample examples

for both ARM and MIPS processors. We have also considered the effects of the compiler on the generated codes. The first category is

memory instructions. Before analyzing memory instructions, we

must define how memory addresses are interpreted and how they

are specified. Addressing modes have the ability to significantly reduce instruction counts of an application; they also add to the

complexity of hardware and may increase the average CPI of processor. Therefore, the usage of different addressing modes is quite

important in helping the architect choose what to support. The old

VAX architecture has the richest set of addressing modes including

10 different addressing modes leading to fewest restrictions on

memory addressing. Ref. [10] presents the results of measuring

addressing-mode-usage patterns in three benchmark programs

on the VAX architecture and concludes that immediate and displacement dominate memory-addressing-mode usage. As network

applications migrate towards larger programs and hence rely on

compilers, so addressing modes must match the ability of the compilers developed for embedded processors. As packet-processing

applications head towards relying on compiled code, we expect

increasing emphasis on simpler addressing modes. Therefore, dislike Ref. [10] we do not profile network applications on VAX and

select displacement and immediate addressing modes for network

applications. Other addressing modes such as register-indirect, indexed, direct, memory-indirect, and scaled can be easily synthesized with displacement mode.

3.1. Memory and logic/arithmetic instructions

To show the effect of different memory and logic/arithmetic

instructions on the instruction count of the generated codes, Table

7 presents two sample codes that are compiled with both ARM and

MIPS cross compilers. The selected codes are representative codes

for two distinguished categories: high memory access and excessive logic/arithmetic operations. According to the results, the former has less instruction count in MIPS (eight instructions

compared to 13 instructions in ARM) and the latter is optimized

when compiled with ARM (eight instructions compared to 10

instructions in MIPS). The reason is that MIPS supports byte

(‘‘lb’’, ‘‘sb’’), 2-byte (‘‘lh’’, ‘‘sh’’), and 4-byte (‘‘lw’’, ‘‘sw’’) loads and

stores. Therefore 8-bit, 16-bit, and 32-bit data types are read from

or write to memory with a single instruction. However, ARM only

supports byte (‘‘ldrb’’, ‘‘strb’’) and 4-byte (‘‘ldr’’, ‘‘str’’) memory

accesses. Therefore, 2-byte load stores should be simulated with

a sequence of ‘‘ldrb’’, ‘‘strb’’, arithmetic, and logic instructions in

ARM, as shown in the assembly code of the Fibonacci. This is why

this code has more instructions when compiled with ARM cross

compiler. Besides, the conditional and combined arithmetic/logic

instructions of ARM lead to less instruction counts for shift-andadd multiplier code which needs more logic/arithmetic instructions when compared to the Fibonacci code. In this case as seen,

MIPS code has more instructions.

According to this observation and the fact that some variables

such as checksum, IP packet type, and source/destination port

numbers are all 16-bit values, the 2-byte loads/stores can considerably affect the instruction count of packet-processing applications. Distribution of the 2-byte loads/stores, logic, and

arithmetic instructions for the selected applications based on the

MIPS compiler are shown in Table 8. As shown in this table the

usage of 2-byte loads/stores in IPV4-lctrie and Flow-Class are higher

116

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

Table 6

Usage pattern of the top15 frequently-used instructions based on ARM.

Instruction

IPV4radix (%)

IPV4trie (%)

FlowClass (%)

IPSec

(%)

MD5

(%)

Average

(%)

Ldr

Add

Mov

Cmp

Orr

Ldrb

And

Sub

Str

Strb

Eor

Bne

Bcc

Beq

Bic

8.7

2.6

10.8

18.5

0.6

3.7

0.4

2.8

3.8

0.6

0.0

3.9

2.1

4.0

0.0

7.7

13.9

12.9

10.8

7.2

12.9

4.6

7.7

0.0

1.5

0.0

2.1

0.0

3.6

0.0

28.4

9.9

12.1

9.1

1.2

7.8

0.0

1.8

10.5

5.4

0.0

6.0

0.0

1.8

1.2

33.6

0.5

16.3

0.1

14.7

0.4

16.5

0.3

0.6

0.4

10.0

0.1

0.0

0.0

3.6

6.0

33.7

2.4

7.9

6.9

5.6

2.7

8.9

2.8

6.6

3.4

0.2

7.6

0.1

2.0

16.9

12.1

10.9

9.3

6.1

6.1

4.8

4.3

3.5

2.9

2.7

2.5

1.9

1.9

1.4

than other applications. Since 2-byte loads/stores are not compiled

as optimally as MIPS with ARM compiler, the instruction counts of

such memory-intensive applications are higher when compiled

with ARM. However, as shown in Table 8, the logic and shift operations are higher in MD5 and IPSec applications which are good

candidates to be annotated with ARM combined instructions and

produce codes with fewer instructions.

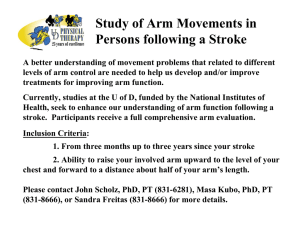

Another important measurement for designing instruction set is

size of the displacement field in memory instructions and also size

of the immediate value in instructions, in which, one of the operands is an immediate value. Since these sizes can affect the instruction length, a decision should be made to choose the optimized size

for these fields. Based on the representative network benchmark

measurements, we expect the size of the displacement field to be

at least 9 bits. As shown in Fig. 1, this size captures about 88%

and 95% of the displacements in benchmark programs in MIPS

and ARM, respectively.

Fig. 2 presents usage patterns of the immediate sizes used in

different instructions based on the profiling results of network

benchmarks on ARM and MIPS. According to these results we suggest 9-bit for size of the immediate value which covers about 88%

of the immediate values in ARM and MIPS.

3.2. Control flow instructions

The instruction that changes the flow of control in a program is

called either transfer, branch, or jump. Throughout this paper we

will use jump for unconditional and branch for conditional changes

in control flow. There are four common types for control flow

instructions: conditional branches, jumps, subroutine calls, and returns from subroutines. According to the frequencies of these control-flow instructions extracted from running packet-processing

benchmarks on ARM and MIPS profiling environments, conditional

branches are dominant. There are three common implementations

for conditional branches in recent processors. One of them implements the conditional branch with a single instruction which performs the comparison as well as the decision in a single

instruction, for example, ‘‘beq’’ and ‘‘bne’’ instructions in the MIPS

instruction set [21]. The other methods need two instructions for

conditional branches, the first one performs the comparison and

the second one makes a decision based on the comparison results.

The comparison result is saved in a register, (such as the ‘slt’

instruction in MIPS), or will modify the processor status flags (such

as the ‘cmp’ instruction in ARM instruction set [23]).

We have developed some simple C codes to represent a wide

variety of conditional codes including ‘‘case’’, ‘‘if’’ and ‘‘else’’ conditional statements and have compiled them with both ARM and

MIPS cross compilers. According to the results, the conditional

assignments of ARM are implemented with a sequence of compare,

branch, and assignments in MIPS. These complex instructions may

yield less instructions in the codes generated with ARM cross compiler. Besides, ‘‘beq’’ and ‘‘bne’’ instructions in MIPS compare two

registers and jump to the branch target in a single instruction which

can be done with at least two instructions in ARM, one for compare

and another for branch. The extracted results represent the effectiveness of each of the indicated advantages of ARM and MIPS. To

compare the profiling results of MIPS and ARM together in a practical example, we have extracted the conditional statement of packet-validation function which is used in all of the benchmark

applications. The conditional statement of this function is a combination of simple if statements that are combined with logical ‘‘or’’ or

logical ‘‘and’’. According to the presented results in Table 9, the code

is compiled to equal number of instructions in both of ARM and

MIPS processors. It means that although simple conditional codes

may lead to different instruction counts when compiled with MIPS

or ARM, compiling practical conditional codes with simple instructions of MIPS has same instruction counts comparable to when it is

compiled with more powerful instructions of ARM.

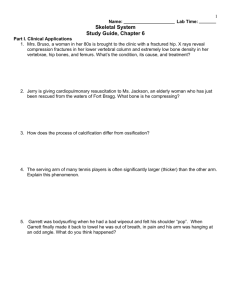

The most common way to specify the destination of a branch is

to supply an immediate value called offset that is added to the program counter (PC). Size of branch-offset field would affect the

encoding density of instructions and therefore, restrict the operand

variety in terms of number of the operands as well as the operand

size. Another important measurement for instruction set is branch

offset size. According to [10], short offset fields often suffice for

branches and offset size of the most frequent branches in the integer programs can be encoded in 4–8 bits. Fig. 3shows the usage

pattern for branch offset sizes of both conditional and unconditional branches that are used in the selected packet-processing

applications. According to the results, 95% of conditional branches

need offset sizes of 5–10 bits and offset size of 91% of unconditional branches range from 5 to 13 bits.

3.3. Special-purpose instructions

Packet header and payload are read from a non-cachable memory that is located on the system bus. We use the PacketBench

expression for this component that is called packet memory [15].

The other local memories are also called non-packet memories.

According to the profiling results of the layer two switching [25],

packet-memory accesses are about 2% of the total instructions

and since the packet memory is on the system bus, each access

to the packet memory takes about 15 processor clock cycles [25].

It is also observed that packet-memory accesses consume 26% of

the total execution time [25]. The percent of packet-memory

accesses of applications are summarized in Table 10. According

to this table, packet memory accesses range from 3.7% to 45.2%

and IPV4-trie and Flow-Class have the highest packet memory

accesses among the other applications.

A good solution for reducing the latency of packet-memory

accesses is to reduce the bus-access overhead with burst load

and store instructions. Also, an IO controller can exploit a directmemory access (DMA) device and transfer the packet data to the

local memory of the processor and reduce the bus access overhead

[2]. By reducing bus latencies the maximum achievable improvement with the burst memory instructions is evaluated in Table

11. According to these results, proper use of the burst memory

instructions in a code can significantly improve the execution time

of the application. The results show the maximum achievable performance improvements, however the DMA transfer or burst memory transfers are inserted to code manually. For automatic burst

insertions an algorithm such as the one proposed by Biswas [26]

can be used.

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

117

Table 7

The compiled code for MIPS and ARM, (a) Fibonacci series, where hundred numbers are generated and written to memory (b)

computationally-intensive shift-and-add multiplier.

(a) Fibonacci series (main function C code)

short int A[100]; int i;

A[0]=1; A[1]=1;

for (i=2;i<100;i++)

A[i]=A[i-1]+A[i-2];

Compiled code for ARM

Compiled code for MIPS

00008554<main+0x28>ldrb r0, [ip, #-201]

00008558<main+0x2c>ldrb r1, [ip, #-203]

0000855c<main+0x30>ldrbr2, [ip, #-202]

00008560<main+0x34>ldrb r3, [ip, #-204]

00008564<main+0x38>orr r2, r2, r0, lsl #8

00008568<main+0x3c>orrr3, r3, r1, lsl #8

0000856c<main+0x40>addr0, r2, r3

00008570<main+0x44>movr1, r0, asr #8

00008574<main+0x48>subslr, lr, #1; 0x1

00008578<main+0x4c>strbr1, [ip, #-199]

0000857c<main+0x50>strbr0, [ip, #-200]

00008580<main+0x54>addip, ip, #2; 0x2

00008584<main+0x58>bpl00008554

(b) Multiply with add and shift (main function C code)

p=0;p |= q;

for (i=0;i<8;i++) {

if (p & 0x1) p=((p >> 8)+m) << 8;

p = (p & 0x8000) | (p>>1);}

Compiled code for ARM

020001cc<main+0x14>tstr3, #1

020001d0<main+0x18>addne r3, r0, r3, asr #8

020001d4<main+0x1c>movne r3, r3, lsl #8

020001d8<main+0x20>andr2, r3, #32768

020001dc<main+0x24>movr3, r3, asr #1

020001e0<main+0x28>orrr3, r2, r3

020001e4<main+0x2c>subs r1, r1, #1

020001e8<main+0x30>bpl020001 cc

Table 8

Distribution of 2-byte load/ store, arithmetic, and logic instructions for the selected

applications when compiled with MIPS gcc2.95.2 cross compiler.

IPV4-radix

IPV4-trie

Flow-Class

IPSec

MD5

2-byte store (%)

2-byte load (%)

Arithmetic (%)

Logic (%)

0.1

0.5

0.5

0.0

0.0

1.6

7.0

6.6

0.0

0.1

32.7

33.1

27.0

19.9

33.2

16.3

29.4

13.3

57.6

38.3

4. Proposed instruction set for embedded packet-processing

engines

In the field of network processor design, some packet-processing-specific instructions are proposed in [27–30]. There is also a

lot of research in synthesizing instruction set for embedded application-specific processors which propose complex instructions for

high performance extensible processors [31–38]. All of these researches start with primitive instructions and refine them to boost

target performance. In this section, we propose the optimized primitive instruction sets for flexible and low-power packet-processing

engines. The proposed general-purpose instructions are used to

give flexibility for any further changes in packet-processing flow

and special-purpose instructions can be used to boost the execution

of the packet-processing tasks and therefore, increase performance.

As network applications migrate towards larger programs and

hence become more attracted to compilers, they have been trying

to use the compiler technology developed for desktop and embedded computers. Traditional compilers have difficulty in taking

00400230<main+0x40>lhu $2,-2($4)

00400238<main+0x48>lhu $3,-4($4)

00400240<main+0x50>addiu $5,$5,1

00400248<main+0x58>addu $2,$2,$3

00400250<main+0x60>sh $2,0($4)

00400258<main+0x68>addiu $4,$4,2

00400260<main+0x70>slti $2,$5100

00400268<main+0x78>bne $2,$0,00400230

Compiled code for MIPS

00400240<main+0x50>andi $2,$4,1

00400248<main+0x58>beq $2,$0,00400268

00400250<main+0x60>sra $2,$4,0x8

00400258<main+0x68>addu $2,$2,$6

00400260<main+0x70>sll $4,$2,0x8

00400268<main+0x78>andi $3,$4,32768

00400270<main+0x80>sra $2,$4,0x1

00400278<main+0x88>or $4,$3,$2

00400280<main+0x90>addiu $5,$5,-1

00400288<main+0x98>bgez $5,00400240

high-level language code and producing special-purpose instructions. However, new retargetable compiler technology deployed

for extensible processors (i.e. Tensilica [39] and CoWare [40], along

with the CoSy compiler [41]) might be used for optimum code

generations using special-purpose instructions.

The proposed instruction set is designed based on the requirements of different packet-processing applications, quantified in

terms of the required micro-operations and their frequencies. We

propose the general-purpose instructions according to the distribution of different instructions in the representative benchmark

applications including both header- and payload-processing algorithms. Table 12 presents the instruction distribution of the packet-processing applications in ARM and MIPS processors. The results

are sorted according to the maximum values of the instruction

occurrences among the selected applications. The top 25 instructions of the applications are listed in Table 12. The required basic

general-purpose instructions can be extracted from this table.

The reduced general-purpose instruction set trades performance

for power. We select the minimum number of instructions to have

the lowest power consumption and also convince an acceptable

performance. Therefore, all of the instructions that have a high

occurrence in the selected applications on both ARM and MIPS processors should contribute in the proposed list. However, we skip

the instructions that are rarely used and can be synthesized with

other instructions. This leads to the lowest required number of

instruction count.

As shown in Table 12, the selected applications have almost a

similar arithmetic, logic, memory, and branch instruction distribution with both of ARM and MIPS processors. As shown, the frequent

arithmetic instructions are ‘‘Add’’ and ‘‘Sub’’ with both register and

118

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

Fig. 1. Usage patterns of the displacement size in memory instructions based on the profiling results of network benchmark on (a) MIPS and (b) ARM processors.

Fig. 2. Usage patterns of immediate sizes of instructions based on the profiling results of network benchmark on (a) MIPS and (b) ARM processors.

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

119

Table 9

Representative code for condition checking. The code is extracted from the validate-packet function of the IPV4 lookup

applications.

if ((ll_length>MIN_IP_DATAGRAM) &&

(in_checksum&&

(ip_v == 4) &&

(ip_hl>= MIN_IP_DATAGRAM/4) &&

(ip_len>= ip_hl))

return 1;

else return 0;

Compiled code for ARM (gcc3.4)

Compiled code for MIPS (gcc. 2.95)

0000866c<validate_packet>cmp r1, #0

00008670<validate_packet+0x4>cmpne r0, #20

00008674<validate_packet+0x8>movr1, r3

00008678<validate_packet+0xc>ble00008684

0000867c < validate_packet+0x10>cmp r2, #4

00008680<validate_packet+0x14>beq 0000868c

00008684<validate_packet+0x18>mov r0, #0

00008688<validate_packet+0x1c>mov pc, lr

0000868c<validate_packet+0x20>ldrr3, [sp]

00008690<validate_packet+0x24>cmp r3, r1

00008694<validate_packet+0x28>cmpge r1, #4

00008698<validate_packet+0x2c>mov r0, #1

0000869c<validate_packet+0x30>movgt pc, lr

000086a0<validate_packet+0x34>b00008684

00400400<validate_packet>addu $2,$0,$0

00400408<validate_packet+0x8>lw $8,16($29)

00400410<validate_packet+0x10>addiu $3,$0,20

00400418<validate_packet+0x18>slt $3,$3,$4

00400420<validate_packet+0x20>beq $3,$0,00400468

00400428<validate_packet+0x28>beq $5,$0,00400468

00400430<validate_packet+0x30>addiu $3,$0,4

00400438<validate_packet+0x38>bne $6,$3,00400468

00400440<validate_packet+0x40>slti $3,$7,5

00400448<validate_packet+0x48>bne $3,$0,00400468

00400450<validate_packet+0x50>slt $3,$8,$7

00400458<validate_packet+0x58>bne $3,$0,00400468

00400460<validate_packet+0x60>addiu $2,$0,1

00400468<validate_packet+0x68>jr $31

immediate operands. The ‘‘Slt’’ instructions of MIPS which are used

for LESS THAN or LESS THAN OR EQUAL comparisons can be omitted and substituted with ‘‘Bl’’ and ‘‘Ble’’ instructions. MIPS and

ARM follow different approaches for implementing the branch.

MIPS compares two registers and based on the comparison results

jumps to the target address in a single instruction called ‘‘Beq’’ or

‘‘Bne’’. ARM implements the branch with two separate instructions; the first one does the comparison and the second checks

the flags of the processor and jumps to the target address if the

branch condition is satisfied. Since branch instructions have a high

contribution in the selected applications, we propose the singleinstruction comparison-and-jump approach. Therefore, the ‘‘Slt’’

and ‘‘Cmp’’ instructions are not included in arithmetic instructions

and ‘‘Beq’’, ‘‘Bne’’, ‘‘Bl’’, and ‘‘Ble’’ are proposed for branch instructions. The other branch instructions can be implemented with

these instructions.

The frequent logic instructions are ‘‘And’’, ‘‘Or’’, and ‘‘Xor’’.

‘‘Nor’’ and the immediate modes of logic instructions can be synthesized with the corresponding register modes. Table 8 shows

that the 2-byte loads/stores can considerably affect the instruction

count of an application. Since 2-byte load/stores are not compiled

optimally with ARM compiler, the instruction counts of IPV4-trie

and Flow_class applications are higher when compiled with ARM.

Therefore, the proposed memory access instructions support 8bit, 16-bit, and 32-bit loads and stores. The frequent shift instructions are ‘‘Sll’’, ‘‘Srl’’, and ‘‘Sra’’. As shown in Table 8, the logic and

shift operations are higher in MD5 and IPSec applications which are

good candidates to be synthesized with ARM complex instructions

to produce codes with fewer instructions. Usage patterns of ARM

complex instructions in the selected benchmark applications are

summarized in Table 13.

According to Table 13, 4.7% and 3.6% of IPSec and MD5 instructions are of these types, respectively. Each of ARM complex instructions would be synthesized with at least two MIPS instructions.

Over all, as shown sum of the average values of complex instruction usages for all representative applications is about 10%. Therefore, properly employing these instructions would improve the

instruction count about 10%. However, because of the complexity

of these instructions, they would complicate the pipeline design

and hence, might elongate the overall clock period of the processor.

Therefore, one should decide on using such instructions considering compiler potentials and also architecture design issues.

IPSec and MD5 are two large applications that contain about

100,000 and 9000 instruction in ARM. According to the results,

the instruction counts of these applications are about 23% higher

when compiled for MIPS. One reason for this difference in instruction counts is the usage of ARM complex instructions (Table 13). As

another source for this difference, we have observed the effect of

burst load and store instructions in ARM (‘‘stdmb’’ and ‘‘ldmdb’’)

which perform a block transfer to/from memory. These instructions are widely used in function calls and returns for saving and

restoring the function parameters to the stack. These instructions

can also be used for accessing memory for some registers which

should be ‘‘push’’ to or ‘‘pop’’ from stack. Since the investigated

payload processing applications are composed of too many small

functions, these instructions improve the instruction counts in

function calls and returns. According to our profiling results, the

burst memory access instructions of ARM improve the instruction

count of IPSec and MD5 about 3.5% and 9%, respectively. Some of

the widely-used instructions of MIPS and ARM (according to the

profiling results), and also the proposed optimum instruction set

are summarized in Table 14.

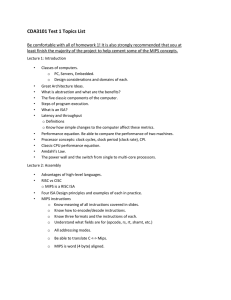

5. Retargetable instruction set compilation and simulation

framework

To evaluate the proposed instruction set, we have customized

the gcc compiler [42] and developed a retargetable compilation

framework for exploring the instruction set space for packet-processing applications. The GNU compiler collection (usually shortened to gcc) is a Linux-based compiler produced by the GNU

project which supports a variety of programming languages. GCC

has two distinct sections called machine dependent and machine

independent parts. The machine dependent part is responsible

for the final compilation process. In this part, the machine-independent intermediate output is compiled to the target machine

code using the machine definition (MD) file. The general structure

of the GCC compiler is shown in Fig. 4 (based on [42]).

The MD codes state all the microarchitectural specifications

such as: number of registers, supported instructions, instruction

set architecture, and execution flow of each instruction. The machine dependent codes consist of two basic files, the MD file that

contains the instruction patterns of the target processors and a C

file containing some macro definitions. The MD file defines the

120

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

Fig. 3. Usage patterns of offset sizes for conditional and unconditional branches in network benchmarks for ARM and MIPS processors.

patterns of target processor instructions by using a register transfer language (RTL) which is an intermediate representation similar

to the final assembly code.

Our proposed retargetable compilation and simulation framework is shown in Fig. 5. The exploration starts with the required

modifications to the MD or C files to specify the instruction set

121

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

Table 10

Percentage of packet and non-packet memory accesses from total instructions in the

selected applications.

IPV4-radix

IPV4-trie

Flow-Class

MD5

IPSec

Packet memory (%)

Non-packet memory (%)

5.0

45.2

43.1

27.6

3.7

29.8

6.9

23.7

15.4

21.5

Table 13

Usage patterns of ARM complex instructions in the selected benchmark applications.

Table 11

Maximum achieved performance improvement by reducing the bus overhead for

packet-memory accesses.

IPV4-radix

IPV4-trie

Flow-Class

MD5

IPSec

Clock count

New clock count

Improvement (%)

3630

398

274

17570

227330

3470

238

169

13257

219897

4.4

40.2

38.4

24.5

3.3

and microarchitecture of the target processor. After that, the modified GCC codes are compiled for generating the target compiler.

With the help of the generated compiler, the application source

codes are compiled for the new processor. The SimpleScalar is also

used as a retargetable simulator. The machine definition file (DEF)

of the SimpleScalar is modified according to the architecture of the

target processor for supporting the simulation of the generated

binary codes. The binary codes are then executed by SimpleScalar

to get the application profiling information (cycle count and

instruction count). This flow can iteratively explore all the proposed modifications to the instruction set and compiler and investigate the effects of these modifications on the performance of the

processor.

Instruction

IPV4radix (%)

IPV4trie (%)

FlowClass (%)

IPSec

(%)

MD5(%)

Average

(%)

subcs

Bic

orrcs

movcc

cmpcc

Mvn

Subs

movne

addcs

movs

Tsts

moveq

movnes

ldreq

cmpne

ldrne

10.4

0.0

5.5

4.7

3.1

0.6

1.4

2.1

1.6

0.0

1.6

1.5

1.4

0.5

0.4

0.3

0.0

0.0

0.0

0.0

0.0

1.0

0.0

0.0

0.5

2.1

0.0

0.0

0.0

0.0

0.0

0.0

0.0

1.2

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

3.6

0.0

0.0

0.0

0.0

1.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.1

0.0

2.0

0.0

0.0

0.0

1.3

0.2

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

2.1

1.4

1.1

0.9

0.6

0.6

0.5

0.4

0.4

0.4

0.3

0.3

0.3

0.1

0.1

0.1

6. Experimental results

In previous sections we have quantitatively compared the effectiveness of MIPS and ARM instruction sets for the networking

application benchmarks and proposed some architectural guidelines for instruction set of an optimized packet-processing engine.

In this Section we use our developed compilation and simulation

framework to obtain post compilation quantitative comparisons.

We evaluate the effectiveness of the proposed instruction set by

comparing its performance for packet-processing benchmarks

with that of the MIPS instruction set. Interested reader can generalized these comparisons to ARM using the mentioned results in previous sections.

Table 12

Distribution of different instructions in the representative applications.

MIPS

ARM

Category

Instruction

IPV4radix (%)

IPV4trie (%)

FlowClass (%)

IPSec

(%)

MD5

(%)

MAX

(%)

Instruction

Memory

Lw

Sw

Lhu

Sb

Lbu

Lb

Addu

Addiu

Slti

Sltu

Lui

Subu

Or

Andi

Srl

Xor

Sll

And

Srav

Srlv

Nor

Ori

Beq

Bne

J

7.6

5.3

1.0

1.7

5.1

2.3

15.7

13.8

0.7

2.5

1.1

1.8

1.4

3.1

1.1

0.0

2.4

0.6

0.0

0.7

0.0

0.6

10.9

7.5

2.4

7.8

1.6

7.1

0.5

0.5

0.0

13.6

18.0

7.1

0.5

1.1

1.3

2.2

5.5

3.8

0.0

3.7

1.1

0.0

1.3

0.5

1.6

9.0

6.3

1.1

25.5

11.9

5.0

1.9

5.0

0.0

11.4

11.3

1.5

0.0

0.0

1.5

0.0

1.5

0.0

0.0

0.8

0.8

3.0

3.0

0.0

0.8

2.7

8.8

1.4

17.6

1.0

0.0

0.3

0.5

0.0

13.9

6.0

0.8

0.0

1.7

0.0

13.9

13.5

13.0

8.2

6.4

0.8

0.0

0.0

0.0

0.8

0.1

0.9

0.1

3.6

3.0

0.1

5.6

4.7

0.0

24.0

8.9

0.0

6.4

3.6

0.2

8.8

0.2

3.7

2.6

7.2

3.6

0.0

0.0

2.5

3.6

0.3

6.6

0.1

25.5

11.9

7.1

5.6

5.1

2.3

24.0

18.0

7.1

6.4

3.6

1.8

13.9

13.5

13.0

8.2

7.2

3.6

3.0

3.0

2.5

3.6

10.9

8.8

2.4

Ldr

Ldrb

Str

Strb

Arithmetic

Logic

Branch

IPV4radix (%)

IPV4trie (%)

FlowClass (%)

IPSec

(%)

MD5

(%)

MAX

(%)

8.7

3.7

3.8

0.6

7.7

12.9

0.0

1.5

28.4

7.8

10.5

5.4

33.6

0.4

0.6

0.4

6.0

5.6

2.8

6.6

33.6

12.9

10.5

6.6

Add

Cmp

Sub

Subcs

Cmpcc

2.6

18.5

2.8

6.4

1.8

13.9

10.8

7.7

0.0

0.0

9.9

9.1

1.8

0.0

0.0

0.5

0.1

0.3

0.0

0.0

33.7

7.9

8.9

0.0

0.0

33.7

18.5

8.9

6.4

1.8

And

Mov

Orr

Eor

Orrcs

Movcc

Movs

Movne

Movnes

Bic

Bcc

Bne

Bgt

Beq

Bl

Ble

0.4

10.8

0.6

0.0

5.6

3.9

0.1

1.8

1.6

0.0

2.1

3.9

0.4

4.0

1.1

0.9

4.6

12.9

7.2

0.0

0.0

0.0

2.1

0.0

0.0

0.0

0.0

2.1

5.2

3.6

1.5

1.5

0.0

12.1

1.2

0.0

0.0

0.0

0.0

0.0

0.0

1.2

0.0

6.0

0.0

1.8

1.2

1.2

16.5

16.3

14.7

10.0

0.0

0.0

0.0

0.0

0.0

3.6

0.0

0.1

0.0

0.0

0.2

0.0

2.7

2.4

6.9

3.4

0.0

0.0

0.0

0.0

0.0

2.0

7.6

0.2

0.0

0.1

0.4

0.0

16.5

16.3

14.7

10.0

5.6

3.9

2.1

1.8

1.6

3.6

7.6

6.0

5.2

4.0

1.5

1.5

122

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

Table 14

Proposed instruction set for embedded packet-processing engines.

Arithmetic

Logic

Memory

Branch

MIPS

ARM

Proposed instructions

Addu

Addiu

Slti

Sltu

Subu

Or

Ori

And

Andi

Xor

Nor

Srl

Sll

Srav

Srlv

Lw

Lhu

Lbu

Lb

Sw

Sb

Add

Sub

Subcs

Cmp

Cmpcc

Or

Orcs

And

Eor

Mov

Movcc

Movs

Movne

Movnes

Bic

Ldr

Ldrb

Str

Strb

Ldm

Stm

Add: add two registers

Addi: add register and immediate

Sub: Sub two registers

Subi: Sub immediate from register

Beq

Bne

JJal

Beq

Bne

Bgt

Bl

Ble

Bcc

And, Andi: and two operands

Or, Ori: or two operand

Xor, Xori: xor two operands

Sll, Sllv: shift left logical

Srl, Srlv: shift right logical

Sra, Srav: shift right arithmetic

Bic: bit clear

Ldw: load 32-bit from memory

Ldh: load 16-bit from memory

Ldb: load 8-bit from memory

Stw: store 32-bit to memory

Sth: store 16-bit to memory

Stb: store 8-bit to memory

Ldm: load a block from memory

Stm: store a block to memory

Beq: branch if registers are equal

Bne: branch if registers are not equal

Blt: branch if a register is less than a register

Ble: branch if a register is less than ro equal

to a register

B: unconditional branch

Br: branch to the address in the register

Bal: branch and link

Fig. 4. General structure of the GCC.

Exploiting the proposed exploration framework, we have

started with the MIPS instruction set [21] as the starting point

and have modified it to converge to the optimized instruction

set. We have evaluated the effectiveness of each modification to

the instruction set in terms of execution cycles and instruction

count for each of the representative benchmark applications.

According to the results, there are some instructions that are

least-frequently or never used when compiling the selected applications. We have found multiply and divide among these instructions. We have excluded these instructions from the instruction

list with negligible performance degradation. Some of the least-frequent-used instructions of MIPS in the selected benchmark

applications are shown in Table 15.

Fig. 5. Our retargetable compilation and simulation framework.

Table 15

Some of the least-frequently used instructions of MIPS in selected benchmark

applications.

Instruction

IPV4radix (%)

IPV4trie (%)

FlowClass (%)

IPSec

(%)

MD5

(%)

Average

(%)

mult

mfhi

divu

blez

bgtz

bgez

bltz

xori

slt

dsw

dlw

0.73

0.59

0.59

0.30

0.29

0.29

0.25

0.17

0.15

0.15

0.15

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.00

0.01

0.00

0.00

0.00

0.00

0.02

0.00

0.00

0.15

0.12

0.12

0.06

0.06

0.06

0.05

0.03

0.03

0.03

0.03

Decisions on whether excluding or including instructions from/

to the instruction set is made based on the profiling results of the

exploration framework. Some examples of the effect of excluding

(e.g. immediate logical and shift instructions) or including (e.g.

branch instructions, and byte- and half-word-loads and stores)

new instructions on the performance and code size for the selected

applications are shown in Fig. 6. As shown, excluding the immediate logical instructions (i.e. ‘‘andi’’, ‘‘ori’’, and ‘‘xori’’) and immediate shifts (i.e. ‘‘sll’’, ‘‘srl’’, and ‘‘sra’’) increases the execution

cycles of representative applications about 7% and 11% on average,

respectively. Therefore, it is not recommended to exclude these

instructions from the MIPS instruction set. Including the proposed

branch instructions (i.e. ‘‘blt’’ and ‘‘ble’’) reduces the execution cycles of the selected applications by 8% on average. Excluding the

half word loads/stores (i.e. ‘‘lh’’, ‘‘lhu’’/‘‘sh’’) increases the execution

cycles about 3/1% on average, respectively. In addition excluding

byte loads/stores (i.e. ‘‘lb’’, ‘‘lbu’’/‘‘sb’’) reduces the performance

about 5.4/6.9% on average, respectively. Furthermore, by excluding

all half-word- and byte-loads and stores, the maximum degradation in performance is 23% for MD5 and 27% for Flow-Class applications. Comparing to the instruction sets that only support 32-bit

loads/stores, the proposed instruction set can provide considerable

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

123

Fig. 6. Peformance comparison of the proposed architecture with MIPS: effect of omitting immediate logical instructions, and some types of load/stores, and also adding new

branch instructions on performance and code size for the selected applications, (a) improved performance, (b) code size.

Table 16

Area, power, and delay improvements on MIPS for the proposed instruction set.

Total cell area

Power (mW)

Delay (ns)

MIPS

Proposed

Improvement (%)

96425.9

8.7

3.4

60270.2

7.4

2.8

37

15

17

performance improvements. Because when the compiler supports

only 32-bit loads/stores, the 8-bit and 16-bit loads/stores should

be synthesized with a ‘‘lw’’/‘‘sw’’ followed by a sequence of logical

and shift operations for extracting/modifying the required part of

the 32-bit value.

We have also modeled the MIPS processor in synthesizable Verilog HDL. This development has been verified against the PISA

model utilized in SimpleScalar [9,16]. The modeled processor has

a 5-stage single issue pipeline architecture considering the data

hazards and resolving them by forwarding and interlock techniques. The Verilog model of the proposed processor is developed

based on the developed instructions and is consistent with the

machine definition files in compiler and simulator as well. The Verilog model is synthesized with a digital logic synthesizer using a

CMOS 90 nm standard cell library and the effects of the proposed

instruction additions and omissions on area, frequency, and power

consumption of the implied processor is evaluated. Since the clock

period and also the clock cycles are both improved, therefore the

performance is enhanced with our proposed instruction set. Furthermore, the power consumption of the proposed processor is

also reduced, thus the performance per watt is improved. Based

on the results of Table 16, and 8% performance improvement of

new branch instructions (Fig. 6), about 48% improvements in performance per watt is achieved in our approach (normalized execution time divided by normalized power, i.e. 1.081.17/0.85 = 1.48).

7. Conclusion

We have presented a quantitative analysis of networking

benchmarks to extract architectural guidelines for designing optimized embedded packet-processing engines. Exhaustive quantitative analysis of MIPS and ARM instruction sets for selected

124

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125

benchmarks has been made. SimpleScalar simulation and profiling

environments are deployed to obtain comparative results, based

upon which instruction set architectural guidelines are developed.

The reproducible profile-driven results are based on representative

header- and payload-processing tasks. The experiments recommend load-store architecture with displacement and immediate

addressing modes supporting 8-, 16-, and 32-bit memory operations for packet-processing tasks. We also recommend new compare-and-branch instructions for conditional branches which

support registers as operands.

To validate the proposed instruction set guidelines by considering the mutual interaction of architecture and compiler in an integrated environment, a retargetable compilation and simulation

framework has been developed. This framework utilizes GCC and

SimpleScalar machine definition capabilities in the aforementioned

development. It is shown that the proposed basic set of networking

instructions provides low-power and cost-sensitive operation for

embedded packet-processing engines. These optimized engines

can be employed in massively parallel NP architectures or embedded processors customized for packet processing in the future packetized world. Furthermore, the proposed instructions can also be

used as the base set for accommodating application-specific custom

instructions for more-complex processors.

References

[1] J.C. Niemann, C. Puttmann, et al., Resource efficiency of the GigaNetIC chip

multiprocessor architecture, Journal of Systems Architecture 53 (2007) 285–

299.

[2] K. Vlachos, T. Orphanoudakis, et al., Design and performance evaluation of a

programmable packet processing engine (PPE) suitable for high-speed network

processors units, Microprocessors & Microsystems 31 (3) (2007) 188–199.

[3] Patrick Crowley, Mark A. Franklin, Haldun Hadimioglu, Peter Z. Onufryk,

Network Processor Design, Issues and Practices, The Morgan Kaufmann Series

in Computer Architecture and Design, vol. 1, Elsevier Inc., 2005.

[4] J. Allen, B. Bass, et al., IBM PowerNP network processor: hardware, software,

and applications, IBM Journal of Research and Development 47 (2/3) (2003)

177–194.

[5] Panos C. Lekkas, Network Processors: Architectures, Protocols, and Platforms,

McGraw-Hill Professional Publishing, 2003.

[6] R. Ohlendorf, A. Herkersdorf, T. Wild, FlexPath NP – a network processor

concept with application-driven flexible processing paths, in: third IEEE/ACM/

IFIP International Conference on Hardware/Software Codesign and System,

Synthesis, (CODES+ISSS), September 2005, pp. 279–284.

[7] R. Ohlendorf, T. Wild, M. Meitinger, H. Rauchfuss, A. Herkersdorf, Simulated

and measured performance evaluation of RISC-based SoC platforms in network

processing applications, Journal of Systems Architecture 53 (2007) 703–718.

[8] M. Okuno, S. Nishimura, S. Ishida, H. Nishi, Cache-based network processor

architecture: evaluation with real network traffic, IEICE Transaction on

Electron, E89–C (11) (2006) 1620–1628.

[9] D. Burger, T. Austin, The SimpleScalar tool set version 2.0, Computer

Architecture News 25 (3) (1997) 13–25.

[10] J.L. Hennessy, D.A. Patterson, Computer architecture: a quantitative approach,

fourth ed., The Morgan Kaufmann Series in Computer Architecture and Design,

Elsevier Inc., 2007.

[11] IETF RFCs, available from: <http://www.ietf.org/>.

[12] T. Wolf, M.A. Franklin, CommBench – a telecommunications benchmark for

network processors, in: Proc. of IEEE International Symposium on Performance

Analysis of Systems and Software (ISPASS), April 2000, pp. 154–162.

[13] G. Memik, W.H. Mangione-Smith, W. Hu, Net-Bench: a benchmarking suite for

network processors, in: Proc. of IEEE/ACM International Conference on,

Computer-Aided Design, November 2001, pp. 39–42.

[14] B.K. Lee, L.K. John, NpBench: a benchmark suite for control plane and data

plane applications for network processors, in: Proc. of IEEE International

Conference on Computer Design (ICCD 03), October 2003, pp. 226–233.

[15] R. Ramaswamy, T. Wolf, PacketBench: a tool for workload characterization of

network processing, in: Proc. of IEEE International Workshop on Workload

Characterization, October 2003, pp. 42–50.

[16] SimpleScalar LLC, available from: <http://www.simplescalar.com>.

[17] EEMBC, The Embedded Microprocessor Benchmark Consortium, Available

from: <http://www.eembc.org/home.php>.

[18] IntelÒ IXP4XX Product Line of Network Processors, Available from: <http://

www.intel.com/design/network/products/npfamily/ixp4xx.htm>.

[19] Broadcom corporation, Communications Processors, available from: <http://

www.broadcom.com/products/Data-Telecom-Networks/CommunicationsProcessors#tab=products-tab>.

[20] National Laboratory for Applied Network Research – Passive Measurement and

Analysis. Passive Measurement and Analysis, available from: <http://

pma.nlanr.net/PMA/>.

[21] J.L. Hennessy, D.A. Patterson, Computer organization and design: the

hardware/software interface, third ed., The Morgan Kaufmann Series in

Computer Architecture and Design, Elsevier Inc., 2005.

[22] M.E. Salehi, S.M. Fakhraie, Quantitative analysis of packet-processing

applications regarding architectural guidelines for network-processingengine development, Journal of Systems Architecture 55 (2009) 373–386.

[23] ARM Processor Instruction Set Architecture, available from: <http://

www.arm.com/products/CPUs/architecture.html>.

[24] Jurij Silc, Borut Robic, Th. Ungerer, Processor Architecture: From Dataflow to

Superscalar and Beyond, Springer-Verlag, 1999.

[25] M.E. Salehi, R. Rafati, F. Baharvand, S.M. Fakhraie, A quantitative study on

layer-2 packet processing on a general purpose processor. in: Proc. of

International Conference on Microelectronic (ICM06), December 2006, pp.

218–221.

[26] Partha Biswas, Kubilay Atasu, Vinay Choudhary, Laura Pozzi, Nikil Dutt, Paolo

Ienne, Introduction of local memory elements in instruction set extensions. in:

Proc. of the 41st Design Automation Conference, San Diego, CA, June 2004, pp.

729–734.

[27] H. Mohammadi, N. Yazdani, A genetic-driven instruction set for high speed

network processors, in: Proc. of IEEE International Conference on Computer

Systems and Applications (ICCSA 06), March 2006, pp. 1066–1073.

[28] Gary Jones, Elias Stipidis, Architecture and instruction set design of an ATM

network processor, Microprocessors and Microsystems 27 (2003) 367–379.

[29] N.T. Clark, H. Zhong, S.A. Mahlke, Automated custom instruction generation for

domain-specific processor acceleration, IEEE Transaction on Computers 54

(10) (2005) 1258–1270.

[30] M. Grünewald, D. Khoi Le, et al. Network application driven instruction set

extensions for embedded processing clusters, in: Proc. International

Conference on Parallel Computing in, Electrical Engineering, September

2004, pp. 209–214.

[31] Muhammad Omer Cheema, Omar Hammami, Application-specific SIMD

synthesis

for

reconfigurable

architectures,

Microprocessors

and

Microsystems 30 (2006) 398–412.

[32] Pan Yu, Tulika Mitra, Scalable custom instructions identification for instruction

set extensible processors, in: Proc. of International Conference on Compilers,

Architecture and Synthesis for Embedded Systems, September 2004, pp. 69–

78.

[33] Jason Cong, Yiping Fan, Guoling Han, Zhiru Zhang, Application-specific

instruction generation for configurable processor architectures, in: Proc. of

the ACM/SIGDA international symposium on Field programmable gate arrays,

2004, pp. 183–189.

[34] S.K. Lam, T. Srikanthan, Rapid design of area-efficient custom instructions for

reconfigurable embedded processing, Journal of System Architecture 55 (2009)

1–14.

[35] K. Atasu, C. Ozturan, G. Dundar, O. Mencer, W. Luk, CHIPS: custom hardware

instruction processor synthesis, IEEE Transactions on Computer Aided Design

of Integrated Circuits and Systems 27 (2008) 528–541.

[36] L. Pozzi, K. Atasu, P. Ienne, Exact and approximate algorithms for the extension

of embedded processor instruction sets, IEEE Transaction on Computer-Aided

Design of Integrated Circuits and Systems 25 (2006) 1209–1229.

[37] F. Sun, S. Ravi, A. Raghunathan, N.K. Jha, A synthesis methodology for hybrid

custom instruction and co-processor generation for extensible processors, IEEE

Transactions on Computer-Aided Design 26 (11) (2007) 2035–2045.

[38] Philip Brisk, Adam Kaplan, Majid Sarrafzadeh, Area-efficient instruction set

synthesis for reconfigurable system-on-chip designs, in: Proc. of the 41st

annual Design Automation Conference (DAC 04), 2004, pp. 395–400.

[39] Tensilica: Customizable Processor Cores for the Dataplane, available from:

<http://www.tensilica.com/>.

[40] Karl Van Rompaey, DiederikVerkest, Ivo Bolsens, Hugo De Man, ‘‘CoWare – a

design environment for heterogeneous hardware/software systems’’, in: Proc.

of European Design Automation Conference, 1996, pp. 252–257.

[41] ACE CoSy compiler development system, available from: <http://www.ace.nl/

compiler/cosy.html>.

[42] GCC, the GNU Compiler Collection, available from: <http://gcc.gnu.org/>.

Mostafa Ersali Salehi Nasab was born in Kerman, Iran,

in 1978. He received the B.Sc. degree in computer

engineering from University of Tehran, Tehran, Iran, and

the M.Sc. degree in computer architecture from University of Amirkabir, Tehran, Iran, in 2001 and 2003,

respectively. He has received his Ph.D. degree in school

of Electrical and Computer Engineering, University of

Tehran, Tehran, Iran in 2010. He is now an Assistant

Professor in University of Tehran. From 2004 to 2008, he

was a senior digital designer working on ASIC design

projects with SINA Microelectronics Inc., Technology

Park of University of Tehran, Tehran, Iran. His research

interests include novel techniques for high-speed digital design, low-power logic

design, and system integration of networking devices.

M.E. Salehi et al. / Journal of Systems Architecture 58 (2012) 112–125