CSC242: Intro to AI Lecture 23

advertisement

CSC242: Intro to AI

Lecture 23

Learning: The Big

Picture So Far

Function Learning

Linear Regression

Linear Classifiers

Neural Networks

Learning Probabilistic

Models

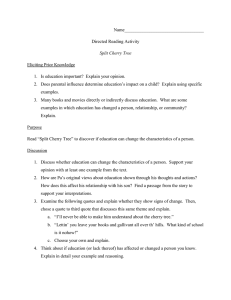

100% cherry

75% cherry

25% lime

50% cherry

50% lime

25% cherry

75% lime

100% lime

D1=

D2=

Bags

Agent, process, disease, ...

Candies

Actions, effects, symptoms,

results of tests, ...

Observations

D3=

Goal

Predict next Predict agent’s next move

candy

Predict next output of process

Predict disease given symptoms

and tests

Learning and Bayesian

Networks

Learning and Bayesian

Networks

• The distribution defined by the network is

parameterized by the entries in the CPTs

associated with the nodes

• A BN defines a space of distributions

corresponding to the parameter space

Learning and Bayesian

Networks

• If we have a BN that we believe represents

the causality (conditional independence) in

our problem

• In order to find (estimate) the true

distribution...

• We need to learn the parameters of the

model from the training data

Burglary

JohnCalls

P(B)

.001

Earthquake

Alarm

B

t

t

f

f

A P(J)

t

f

.90

.05

E

t

f

t

f

P(E)

.002

P(A)

.95

.94

.29

.001

MaryCalls

A P(M)

t

f

.70

.01

hΘ

P(F=cherry)

Θ

Flavor

hΘ

P(F=cherry)

Θ

Flavor

N

c

l

Independent Identically

Distributed (i.i.d.)

• Probability of a sample is independent of

any previous samples

P(Di |Di

1 , Di 2 , . . .)

= P(Di )

• Probability distribution doesn’t change

among samples

P(Di ) = P(Di

1)

= P(Di

2)

= ···

P(F=cherry)

hΘ

Θ

Flavor

N

P (d | h⇥ ) =

=

c

l

Y

j

c

P (dj | h⇥ )

· (1

)l

Maximum Likelihood

Hypothesis

argmax P (d | h⇥ )

⇥

Maximum Likelihood

Hypothesis

argmax P (d | h⇥ )

⇥

Log Likelihood

P (d | h⇥ ) =

Y

=

c

L(d | h⇥ ) = log P (d | h⇥ ) =

j

P (dj | h⇥ )

· (1

X

j

)l

log P (dj | h⇥ )

= c log

+ l log(1

)

Maximum Likelihood

Hypothesis

L(d | h⇥ ) = c log

+ l log(1

c

c

argmax L(d | h⇥ ) =

=

c+l

N

⇥

)

Flavor

Wrapper

P(F=cherry)

Θ

Flavor

Wrapper

F P(W=red|F)

cherry

Θ1

lime

Θ2

h⇥,⇥1 ,⇥2

P(F=cherry)

Θ

Flavor

Wrapper

F P(W=red|F)

cherry

Θ1

lime

Θ2

P(F=cherry)

h⇥,⇥1 ,⇥2

Θ

Flavor

Wrapper

F P(W=red|F)

cherry

Θ1

lime

Θ2

P (F = f, W = w | h⇥,⇥1 ,⇥2 ) =

P (F = f | h⇥,⇥1 ,⇥2 ) · P (W = w | W = f, h⇥,⇥1 ,⇥2 )

P (F = c, W = g | h

,

1,

2

)=

· (1

1)

F

W

P(F=f,W=w| hΘ,Θ1,Θ2)

cherry

red

Θ Θ1

cherry

green

Θ (1-Θ1)

lime

red

(1-Θ) Θ2

lime

green

(1-Θ) (1-Θ2)

N

c

rc

l

gc

rl

gl

F

W

P

N=c+l

cherry

red

Θ Θ1

rc

cherry

green

Θ (1-Θ1)

gc

lime

red

(1-Θ) Θ2

rl

lime

green

(1-Θ) (1-Θ2)

gl

F

cherry

cherry

W

red

green

P

Θ Θ1

Θ (1-Θ1)

N=c+l P (d | h⇥,⇥1 ,⇥2 )

rc

gc

(

1)

( (1

rc

1 ))

lime

red

(1-Θ) Θ2

rl

((1

)

lime

green

(1-Θ) (1-Θ2)

rl

((1

)(1

gc

2)

rl

gl

))

2

P (d | h⇥,⇥1 ,⇥2 ) =

(

1)

=

c

rc

(1

· ( (1

l

) ·

1 ))

gc

rc

1 (1

L(d | h⇥,⇥1 ,⇥2 ) = c log

· ((1

1)

gc

)

·

2)

rl

rl

2 (1

+ l log(1

· ((1

gl

)

2

)+

[rc log

1

+ gc log(1

1 )]+

[rl log

2

+ gl log(1

2 )]

)(1

2 ))

gl

c

c

=

=

c+l

N

1

rc

rc

=

=

rc + g c

c

2

rl

rl

=

=

rl + g l

l

h⇥,⇥1 ,⇥2

P(F=cherry)

Θ

Flavor

Wrapper

F P(W=red|F)

cherry

Θ1

lime

Θ2

c

c

=

=

c+l

N

1

rc

rc

=

=

rc + g c

c

2

rl

rl

=

=

rl + g l

l

argmax L(d | h⇥,⇥1 ,⇥2 ) = argmax P (d | h⇥,⇥1 ,⇥2 )

⇥,⇥1 ,⇥2

⇥,⇥1 ,⇥2

Naive Bayes Models

Class

Attr1

Attr2

Attr3

...

Naive Bayes Models

{ mammal, reptile, fish, ... }

Class

Furry

Warm

Blooded

Size

...

Naive Bayes Models

Class

Attr1

Attr2

Attr3

...

Naive Bayes Models

{ mammal, reptile, fish, ... }

Class

Furry

Warm

Blooded

Size

...

Naive Bayes Models

{ terrorist, tourist }

Class

Arrival

Mode

One-way

Ticket

Furtive

Manner

...

Naive Bayes Models

Disease

Test1

Test2

Test3

...

Learning Naive Bayes

Models

• Naive Bayes model with n Boolean

attributes requires 2n+1 parameters

• Maximum likelihood hypothesis can be

found with no search

• Probabilities are observed frequencies

• Scales to large problems

• Robust to noisy or missing data

Learning with

Complete Data

• Can learn the CPTs for a Bayes Net from

observations that include values for all

variables

• Finding maximum likelihood parameters

decomposes into separate problems, one

for each parameter

• Parameter values for a variable given its

parents are the observed frequencies

{ terrorist, tourist }

Class

Arrival

Mode

One-way

Ticket

Furtive

Manner

...

Arrival One-Way Furtive

...

Class

taxi

yes

very

...

terrorist

car

no

none

...

tourist

car

yes

very

...

terrorist

car

yes

some

...

tourist

walk

yes

none

...

student

bus

no

some

...

tourist

Disease

Test1

Test2

Test3

...

Test

Test2

Test3

...

Disease

T

F

T

...

?

T

F

F

...

?

F

F

T

...

?

T

T

T

...

?

F

T

F

...

?

T

F

T

...

?

2

Smoking

2

Diet

2

Exercise

2

Smoking

2

Diet

2

Exercise

54 HeartDisease

6

Symptom 1

6

Symptom 2

6

Symptom 3

(a)

78 parameters

54

Symptom 1

162

Symptom 2

486

Symptom 3

(b)

708 parameters

Hidden (Latent)

Variables

• Can dramatically reduce the number of

parameters required to specify a Bayes net

• Reduces amount of data required to

learn the parameters

• Values of hidden variables not present in

training data (observations)

• “Complicates” the learning problem

EM

Expectation-Maximization

• Repeat

• Expectation: “Pretend” we know the

parameters and compute (or estimate)

likelihood of data given model

• Maximization: Recompute parameters

using expected values as if they were

observed values

• Until convergence

Learning: The Big

Picture for 242

Function Learning

Linear Regression

Linear Classifiers

Neural Networks

Learning Probabilistic Models (Bayes Nets)

Tue 23 Apr & Thu 25 Apr

Posters!

Get there early...