DSA Assessment Plan

advertisement

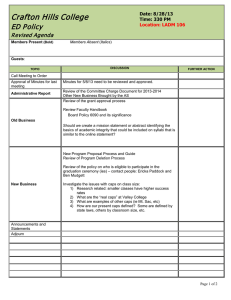

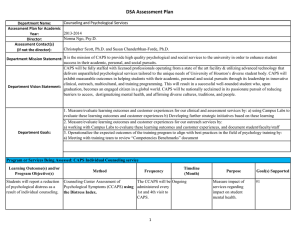

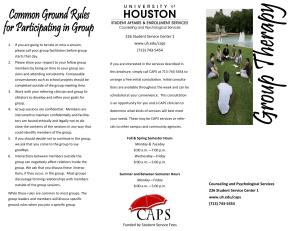

Department Name: Counseling and Psychological Services
Assessment Plan for Academic Year: 2013-2014
Director: Norma Ngo, Psy.D.
Assessment Contact(s) (if not the director): Christopher Scott, Ph.D. and Susan Chanderbhan-Forde, Ph.D.

Department Mission Statement: It is the mission of CAPS to provide high quality psychological and social services to the university in order to enhance student success in their academic, personal, and social pursuits.

Department Vision Statement: CAPS will be fully staffed with licensed professionals operating from a state of the art facility and utilizing advanced technology that delivers unparalleled psychological services tailored to the unique needs of University of Houston’s diverse student body. CAPS will exhibit measurable outcomes in helping students with their academic, personal and social pursuits through its leadership in innovative clinical, outreach, multicultural, and training programming. This will result in a successful well-rounded student who, upon graduation, becomes an engaged citizen in a global world. CAPS will be nationally acclaimed in its passionate pursuit of reducing barriers to access, destigmatizing mental health, and affirming diverse cultures, traditions, and people.

Department Goals:
1. Measure/evaluate learning outcomes and customer experiences for our clinical and assessment services by:
   a) Using Campus Labs to evaluate these learning outcomes and customer experiences
   b) Developing further strategic initiatives based on these learning outcomes and customer experiences
2. Measure/evaluate learning outcomes and customer experiences for our outreach services by:
   a) Working with Campus Labs to evaluate these learning outcomes and customer experiences, and document student/faculty/staff
3.
Operationalize the expected outcomes of the training program to align with best practices in the field of psychology training by:
   a) Meeting with training team to review “Competencies Benchmarks” document

Program or Services Being Assessed: CAPS Individual Counseling service

Learning Outcome(s) and/or Program Objective(s): Students will report a reduction of psychological distress as a result of individual counseling.
Method: Counseling Center Assessment of Psychological Symptoms (CCAPS) using the Distress Index.
Frequency: The CCAPS will be administered every 1st and 4th visit to CAPS.
Timeline (Month): Ongoing
Purpose: Measure the impact of services on student mental health.
Goal(s) Supported: #1

Results:

Purpose of Assessment: Measure the impact of services on student mental health.

Time Frame of the Assessment: Ongoing. Reports were calculated from 9/1/13 to 8/1/14.

Describe the methodology and frequency of the Assessment activities: A psychological outcome measure called the Counseling Center Assessment of Psychological Symptoms (CCAPS) was used and administered every 1st and 4th visit to CAPS. The overall Distress Index was compared between initial and subsequent visits to CAPS.

Is the Learning or Program Outcome achieved? Yes

How do you know? 46% of clients met the reliable change index (RCI) criterion for improvement and also had their latest scores below the cut point used to generate the report. These two criteria, combined, provide the most stringent criteria currently used in psychotherapy research to indicate Reliably and Clinically Significant Improvement. As such, this is the percent of people for whom it may be confidently said that treatment has had a profound and meaningful effect. (from the Center for Collegiate Mental Health (CCMH) Counseling Center Assessment of Psychological Symptoms (CCAPS) Center-Wide Change Manual, 2012).
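
The two criteria described above (a reliable change in score plus a latest score below the cut point) can be illustrated with a short sketch. This is not the CCMH procedure: the threshold formula is one common textbook formulation of a reliable change criterion, and the standard deviation, reliability, cut point, and client scores are all hypothetical values chosen for illustration. The actual CCAPS manual may define its criterion differently.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class Client:
    first_score: float   # score at the first administration
    latest_score: float  # score at the most recent administration


def reliable_change_threshold(sd: float, reliability: float, z: float = 1.96) -> float:
    """Smallest score change that exceeds measurement error at the given z level.

    z = 1.96 corresponds to a two-sided .05 level (an assumption here).
    """
    standard_error = sd * sqrt(1.0 - reliability)
    return z * sqrt(2.0) * standard_error


def improved(client: Client, threshold: float, cut_point: float) -> bool:
    """True when the drop in score is reliable AND the latest score is below the cut point."""
    drop = client.first_score - client.latest_score
    return drop >= threshold and client.latest_score < cut_point


def percent_improved(clients: list[Client], threshold: float, cut_point: float) -> float:
    """Percent of clients starting at or above the cut point who meet both criteria."""
    eligible = [c for c in clients if c.first_score >= cut_point]
    if not eligible:
        return 0.0
    met = sum(improved(c, threshold, cut_point) for c in eligible)
    return 100.0 * met / len(eligible)


if __name__ == "__main__":
    # Hypothetical scale properties and scores, not CAPS data.
    threshold = reliable_change_threshold(sd=0.8, reliability=0.9)
    sample = [
        Client(2.8, 1.5),  # reliable drop, ends below cut point
        Client(2.5, 2.2),  # drop too small to be reliable
        Client(3.0, 2.1),  # reliable drop, but still above cut point
        Client(2.4, 1.6),  # reliable drop, ends below cut point
        Client(1.0, 0.5),  # started below the cut point, so not eligible
    ]
    print(percent_improved(sample, threshold, cut_point=2.0))
```

Requiring both conditions is what makes the combined criterion stringent: a client with a large drop who is still above the cut point, or a client below the cut point whose change is within measurement error, is not counted.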
Share specific data points from the assessment: Out of 161 clients who met the cut-off score for significant distress at the time they sought services, 46% demonstrated reliable improvement in overall distress (at a .05 significance level) from 9/1/13 to 8/1/14.

Action:

Any changes to the program/service content? No changes are currently planned. These results can serve as baseline data for future assessment projects.

Any changes to the method in which the program/service is provided? No plans for FY 2015.

Any changes in the funding/personnel dedicated to the program/service? CAPS plans to request funding for two additional clinicians for FY 2015-2016.

Program or Services Being Assessed: CAPS Individual Counseling service

Learning Outcome(s) and/or Program Objective(s): Students will experience an improvement in academic functioning as a result of individual counseling.
Method: Counseling Center Assessment of Psychological Symptoms (CCAPS) using the Academic Distress Scale.
Frequency: The CCAPS will be administered every 1st and 4th visit to CAPS.
Timeline (Month): Ongoing
Purpose: Measure impact of services in reducing academic distress.
Goal(s) Supported: #1

Results:

Purpose of Assessment: Measure impact of services in reducing academic distress.

Time Frame of the Assessment: Ongoing. Reports were run from 9/1/13 to 8/1/14.

Describe the methodology and frequency of the Assessment activities: Counseling Center Assessment of Psychological Symptoms (CCAPS) using the Academic Distress Scale was administered every 1st and 4th visit to CAPS.

Is the Learning or Program Outcome achieved? Yes

How do you know? 26% of clients met the reliable change index (RCI) criterion for improvement and their latest scores were below the cut point used to generate the report. These two criteria, combined, provide the most stringent criteria currently used in psychotherapy research to indicate Reliably and Clinically Significant Improvement.
As such, this is the percent of people for whom it may be confidently said that treatment has had a profound and meaningful effect. (from the Center for Collegiate Mental Health (CCMH) Counseling Center Assessment of Psychological Symptoms (CCAPS) Center-Wide Change Manual, 2012).

Share specific data points from the assessment: Out of the 160 clients who met the high cutoff for significant academic distress during this time period, 26% indicated a statistically significant (.05 level) reliable reduction of academic distress.

Action:

Any changes to the program/service content? No current plans.

Any changes to the method in which the program/service is provided? No current plans.

Any changes in the funding/personnel dedicated to the program/service? CAPS plans to request funding for 2 additional clinicians for 2015-2016.

Program or Services Being Assessed: CAPS customer service (questions cover interactions with both clinical and clerical staff)

Learning Outcome(s) and/or Program Objective(s): Assess key areas of customer satisfaction for students.
Method:
1. Brief (5-10 question) Campus Labs survey to be administered via tablet.
2. Paper free response form asking clients to write about their customer service experiences at CAPS.
Frequency: 3x per year.
Timeline (Month): Administration in November, April, July
Purpose: Evaluate key customer service interactions clients have with clinicians and front office staff.
Goal(s) Supported: #1

Results:

Purpose of Assessment: Evaluate key customer service interactions with clients.

Time Frame of the Assessment: Administration during a 1-2 week period to all clients who check in for appointments in November, April, and July.

Describe the methodology and frequency of the Assessment activity:
1. Brief (7 question) survey to be administered by paper form and entered into Campus Labs.
2. Paper free response form asking clients to write about their customer service experiences at CAPS.
Is the Learning or Program Outcome achieved? Yes.

How do you know? We were able to determine baseline levels of customer satisfaction. We were also able to determine changes in satisfaction between periods of high and low utilization.

Share specific data points from the assessment: The following are the questions on the assessment and a summary of the quantitative results from each of the three semesters the evaluation was administered (percent of clients who Agreed/Strongly Agreed):
• Counseling helped me identify at least one change I can make to help me meet my goals: 92% in the fall; 84% in the spring; 89% in the summer.
• CAPS fees are reasonable: 94% in the fall; 90% in the spring; 95% in the summer.
• My clinician showed respect and concern for my problems: 96% in the fall; 95% in the spring; 100% in the summer.
• Counseling has helped me learn ways to better cope with my feelings: 76% in the fall; 76% in the spring; 82% in the summer.
• Counseling helped me become a more successful student: 53% in the fall; 55% in the spring; 55% in the summer.
• I would be comfortable recommending CAPS to a friend: 92% in the fall; 91% in the spring; 94% in the summer.
• My individual counseling sessions are frequent enough to meet my needs: 71% in the fall; 65% in the spring; 88% in the summer.

Action:

Any changes to the program/service content?
• Fall and spring results are similar; however, summer results differed in several ways. During the summer sessions we are able to see ongoing clients more frequently (often 1 session per week) given that we have substantially less demand during that time period. These two questions revealed greater satisfaction and reportedly better outcomes during the summer:
   • My individual counseling sessions are frequent enough to meet my needs.
   • Counseling helped me learn ways to better cope with my feelings.

Any changes to the method in which the program/service is provided?
o We will revise practice recommendations to encourage more frequent individual counseling visits for ongoing clients during the fall and spring semesters. Service agreements for clinicians have been modified to equally incentivize seeing ongoing clients more frequently rather than just rewarding taking on more clients.

Any changes in the funding/personnel dedicated to the program/service?
o We plan to request funding for two new clinicians for 2015-2016. That will help us to offer more frequent individual counseling visits during busy periods in the spring and fall semesters.

Program or Services Being Assessed: Group Therapy Program

Learning Outcome(s) and/or Program Objective(s): Students will be able to decrease their social anxiety and depression and increase their interpersonal effectiveness as a result of participating in group therapy this semester.
Method: The assessment measure will be the CCAPS, looking specifically at the social anxiety and depression measures to see if clients' symptoms decrease over time. Group evaluations will also be administered at the end of every group.
Frequency: CCAPS and other outcome measures will be administered pre and post group. Group evaluations will be completed during last group session.
Timeline (Month): Statistics will be analyzed at the end of every semester, so December 2013/January 2014 for fall semester and June 2014 for spring semester.
Purpose: To determine the effectiveness of group therapy on clients' reported symptoms and to help shed light on how the group program might be more effective.
Goal(s) Supported: #1

Results:

Purpose of Assessment: To assess the effectiveness of the CAPS Group Program with respect to our learning outcomes for this academic year.

Time Frame of the Assessment: 8/2013-8/2014. Some of the data for the summer groups could not be included since some of the groups are still in session and have not yet completed the evaluations or their final CCAPS. The CCAPS data included runs through 8/4/2014.

Describe the methodology and frequency of the Assessment activities: The methodology was as follows: I utilized the measures on the CCAPS (already administered regularly at CAPS) to measure outcomes of the group therapy program and whether clients’ symptoms were reduced. I focused primarily on the measures of social anxiety and depression, as these are the ones targeted in my assessment plan. The CCAPS was given to every student who participated in group therapy this academic year, pre and post group participation. I also utilized the data from the group evaluation that all participating students complete at the end of their group experience. I focused on the self-report, Likert scale items regarding interpersonal effectiveness: “Group helped me improve my ability to communicate and interact with others”; “I was able to consistently work on the group goals I created for myself”; “I felt connected with my other group members”; “At the end of group, my overall well-being had improved”.

Is the Learning or Program Outcome achieved? Yes

How do you know?
• The data show that the learning outcome was achieved, as evidenced by the CCAPS reports. The data showed that 33% of clients reliably improved (% of students that improved on this subscale, on CCAPS) on the social anxiety subscale.
Social anxiety is a main presenting concern for clients who participate in our group therapy program, so this is a good indicator of effectiveness. The data also showed that 47% of clients reliably improved on the depression subscale.
• The data from the group evaluations show evidence that group clients increased their interpersonal effectiveness. The vast majority agreed or strongly agreed with the following statements (the percentages are included in the parentheses):
   o “Group helped me improve my ability to communicate and interact with others” (96% fall; 90% spring)
   o “I was able to consistently work on the group goals I created for myself” (65% fall; 82% spring)
   o “I felt connected with my other group members” (88% fall; 88% spring)
   o “At the end of group, my overall well-being had improved” (81% fall; 88% spring)

Share specific data points from the assessment. *Included above

Action:

Any changes to the program/service content? In order to focus more on the learning outcome of reducing social anxiety, we plan to make our Navigating Social Waters group more structured and focused on skill building than in semesters past. A new staff member will be co-leading this group, which will bring a new viewpoint and approach to this group for students who struggle with significant social anxiety. We consult on an ongoing basis about how to make our groups more effective during our biweekly Group Case Conference.

Any changes to the method in which the program/service is provided? The group program will be changing to a more ongoing and long term model of group therapy instead of our more time-limited model in years past. Our process and themed groups will now be held the same day, same time, and have one of the same co-leaders for both the fall and spring semester.
This will allow students to participate in the same group during both semesters and increase their ability to reduce their symptoms and work on their group goals in a more consistent and long term way. By having the groups at consistent times, CAPS will enable students to schedule their classes around their desired group if warranted and increase their ability to participate in the group that best fits their needs. We are also hoping that allowing students to “rollover” into the same group will reduce the time/energy spent starting the groups up each semester, thereby increasing the number of weeks our groups run and maximizing impact. Our psychoeducational groups will remain time-limited (run for one semester at a time). Also, we reduced the number of groups offered to allow all groups to be co-led (have two group facilitators). We believe this will increase the quality of the group experience for our students and positively impact their work on their treatment goals.

Any changes in the funding/personnel dedicated to the program/service? We will be adding two additional staff members this fall and they will both be heavily involved in our group program. We hope to incorporate their clinical experience and welcome their feedback regarding our group program, offerings, and content.

Program or Services Being Assessed: LD/ADHD Assessment

Learning Outcome(s) and/or Program Objective(s): Clients who complete ADHD/LD testing will be able to identify their academic strengths and weaknesses.
Method: 1) CAPS ADHD/LD assessment survey will be used. 2) Majority of respondents will report increased awareness of academic strengths & weaknesses.
Frequency: All assessment clients will be asked to complete survey at conclusion of assessment process.
Timeline (Month): Ongoing: Data will be analyzed at end of Fall, Spring, and Summer 2013 semesters.
Purpose: Assess utility of assessment process for improving students' knowledge of academic strengths and weaknesses and inform any needed changes. 1) Plan revised from AY 2011/2012 to clarify goals. 2) Will be comparing AY 2013/2014 results to baseline from AY 2012.
Goal(s) Supported: #1

Results:

Purpose of Assessment: Assess utility of assessment process for improving students' knowledge of academic strengths and weaknesses and inform any needed changes.

Time Frame of the Assessment: Data are at end of each Fall, Spring, and Summer semesters

Describe the methodology and frequency of the Assessment activities:
• Data are at end of each Fall, Spring, and Summer semesters
• Clients who completed LD/ADHD assessments were administered a survey to assess the Learning Goal established in the 2012-2013 CAPS assessment plan as well as other areas of the LD/ADHD assessment process. Data were then entered into Excel by the Assessment Coordinator.
• Tool: Paper evaluation; MS Excel

Is the Learning or Program Outcome achieved?
o Yes

How do you know?
o Data were analyzed using Excel.

Share specific data points from the assessment:

Ability to better identify academic strengths and weaknesses: 94% of clients who responded to the post-assessment survey indicated that they either agreed or strongly agreed that they were able to better identify academic strengths and weaknesses.

Ability to identify strategies which will improve academic success: 94% of clients who responded to the post-assessment survey indicated that they either agreed or strongly agreed that they had learned about strategies they can use to improve their academic performance.

A majority of students noted in their comments about the assessment process that they believe the process is very helpful and that it helped them understand their functioning better, particularly their academic strengths and weaknesses. Several students also commented that they perceived their assessment clinician as particularly sensitive, helpful, and understanding.

Past assessment surveys have generated overwhelmingly positive responses from clients about their assessment experience and overwhelming endorsement that learning goals are met. To gather information more useful for changes in program delivery, a qualitative question was added asking students what could have been done to improve their assessment experience at CAPS. One significant theme emerged from analysis of responses to this question: students expressed that giving them a clear overview of the assessment process helps them to be mentally and emotionally prepared for it. Three students commented that preparing them emotionally for the assessment process, as well as more clearly explaining each instrument to be administered at the beginning of the assessment process, would help students be mentally prepared at the beginning of the process.

Action:

Any changes to the program/service content?
• No.

Any changes to the method in which the program/service is provided?
• Yes. Clinicians providing assessments will increase time spent talking to clients at the beginning of the assessment process about the instruments to be administered and the possible emotional implications of undergoing an ADHD/LD assessment.

Any changes in the funding/personnel dedicated to the program/service?
• No.

Program or Services Being Assessed: LD/ADHD Assessment

Learning Outcome(s) and/or Program Objective(s): Clients who complete ADHD/LD testing will be able to identify strategies which will improve their academic success.
Method: 1) CAPS ADHD/LD assessment survey will be used. 2) Majority of respondents will report learning strategies to improve academic success.
Frequency: All assessment clients will be asked to complete survey at conclusion of assessment process.
Timeline (Month): Ongoing: Data will be analyzed at end of Fall, Spring, and Summer 2013 semesters.
Purpose: Assess utility of assessment process for improving students' knowledge of strategies to improve academic success and inform any needed changes. 1) Plan revised from AY 2011/2012 to clarify goals. 2) Will be comparing AY 2013/2014 results to baseline from AY 2012.
Goal(s) Supported: #1

Results:

Action:

Program or Services Being Assessed: Outreach

Learning Outcome(s) and/or Program Objective(s):
1) 70% of workshop attendees will demonstrate understanding of the Food For Thought Workshops (FFTW) by being able to identify one new skill that was learned during the FFTW.
2) 70% of workshop attendees that respond to a one-month follow-up will be able to demonstrate knowledge and application of the FFTW by stating he/she has used one skill.
3) 70% of Diversity Institute participants that respond to an electronic assessment will self-report improved understanding of the diversity issues that present on the campus.

Method:
1) Initial Evaluation
• Criteria: An attendee that attended at least 75% of the FFTW.
• Process: Distribute evaluations at the end of the FFTW. Enter data into campus labs at the end of the FFTW (to be completed by outreach coordinator).
• Tool: Paper evaluation; campus labs
2) One Month Follow-up
• Criteria: An individual that attended at least 75% of the FFTW.
• Process: An evaluation will be sent via e-mail at one month after the FFTW.
• Tool: An electronic assessment via campus labs
3) 2014 Diversity Institute Participants will be administered an electronic pre-assessment via campus labs, two weeks before the start date of the Diversity Institute. A Post-assessment will be administered via campus labs to all Diversity Institute Participants, two weeks after the date of the 2014 Diversity Institute.

Frequency:
1) At each FFTW workshop (weekly during Fall 2013 and Spring 2014). One month after an attendee attends the FFTW.
2) At each FFTW workshop (weekly during Fall 2013 and Spring 2014). One month after an attendee attends the FFTW.
3) Two administrations of an assessment. One before Diversity Institute and one after Diversity Institute.

Timeline (Month):
• FFTW occur on a weekly basis during Fall 2013 and Spring 2014.
• Data from each FFTW will be entered at the end of each FFTW.
• Data (from FFTW and one-month follow-up) will be interpreted at end of Fall 2013 semester and Spring 2014 semester.
• End of academic year data will be interpreted during Summer 2013.
• Diversity Institute 2014 is currently scheduled for March, 2014.

Purpose:
• Educate UH community on a variety of topics
• Enhance skills of individual (e.g. communication, emotional regulation)
• Effectiveness of weekly FFTW which are designed to serve as a supplement to therapy.
• Effectiveness of FFTW which utilizes one hour of staff clinical time per week.
• To raise awareness around diversity, to promote inter-cultural contact, and to increase multicultural understanding

Goal(s) Supported: #2

Results:

Learning or Program Outcome:
1) 70% of workshop attendees will demonstrate understanding of the Food For Thought Workshops (FFTW) by being able to identify one new skill that was learned during the FFTW.
2) 70% of workshop attendees that respond to a one-month follow-up will be able to demonstrate knowledge and application of the FFTW by stating he/she has used one skill.
3) 70% of Diversity Institute participants that respond to an electronic assessment will self-report improved understanding of the diversity issues that present on the campus.

Describe the methodology and frequency of the Assessment activities:
1) Evaluations were distributed at the end of Food for Thought Workshops. Data were entered into campus labs at the end of the FFTW.
2) One Month Follow-up for Food for Thought Workshop participants: An individual that attended at least 75% of the FFTW will be sent a Campus Labs evaluation via e-mail at one month after the FFTW.
3) 2014 Diversity Institute Participants were administered an electronic pre-assessment via campus labs, two weeks before the start date of the Diversity Institute. A Post-assessment will be administered via campus labs to all Diversity Institute Participants, two weeks after the date of the 2014 Diversity Institute.

Learning outcome number 1 (70% of FFTW attendees can identify one new skill) appears achieved.
Learning outcome number 2 (70% of workshop attendees that respond to a one-month follow-up had used one skill) was not achieved given the low response rate for the follow-up surveys.
Learning outcome number 3 (70% of Diversity Institute participants will self-report improved understanding of the diversity issues) appears achieved.

How do you know? Survey results indicate gains in Learning Outcomes 1 & 3.
Given that these surveys rely on self-reports, it is unknown whether participants can actually demonstrate greater knowledge or apply skills effectively. This will need to be explored in future assessment projects.

Share specific data points from the assessment.
o 89% of FFTW attendees indicated they had learned one new skill. A very small number of students (13) responded to the follow-up survey. Only 5 responded to the follow-up survey item asking “To what extent did you incorporate that skill into your lifestyle?” All 5 indicated that they had incorporated this new skill to some extent. 3 out of the 5 indicated that they had incorporated the skill either moderately or not very much, while only 2 indicated they had incorporated the skill “considerably”.
o 85% of Diversity Institute respondents agreed/strongly agreed that they were more knowledgeable about size as a diversity factor.
o 80% of Diversity Institute respondents agreed/strongly agreed that they were knowledgeable about spirituality and religion as a diversity factor.
o 73% of Diversity Institute attendees reported that they are likely to incorporate an identified multicultural related behavior into their lives as a result of their attendance.

Action:

Any changes to the program/service content? The plan is that the next Diversity Institute will include a panel discussion component.

Any changes to the method in which the program/service is provided? No

Any changes in the funding/personnel dedicated to the program/service? The plan is to increase participation from CAPS interns and practicum staff students in upcoming Diversity Institutes.
Program or Services Being Assessed: Training

Learning Outcome(s) and/or Program Objective(s): CAPS practicum trainees will demonstrate competence in the following core skills: individual therapy, sensitivity to diversity, ethical sensitivity and professionalism, and use of supervision/training.
Method: CAPS practicum trainees must attain an average score of 3 (“acceptable”) on the CAPS Trainee Evaluation form in each core skill by the end of the practicum.
Frequency: At least twice per training year
Timeline (Month): Written evaluation form is completed by the primary supervisor at the end of each semester (December, April). Informal feedback is also given at mid-semester (October, February).
Purpose: Both to provide feedback to trainees regarding their skill development, and to evaluate the effectiveness of the training provided in those skills. If aggregate trainee scores are routinely lower in a particular skill, the quantity, quality, and modality of training provided in that skill are then reassessed and improved.
Goal(s) Supported: #3

Results:

Method: CAPS practicum trainees must attain an average score of 3 (“acceptable”) on the CAPS Trainee Evaluation form in each core skill by the end of the practicum.

Frequency: At least twice per training year

Timeline: Written evaluation form is completed by the primary supervisor at the end of each semester (December, April). Informal feedback is also given at mid-semester (October, February).

Purpose: Both to provide feedback to trainees regarding their skill development, and to evaluate the effectiveness of the training provided in those skills. If aggregate trainee scores are routinely lower in a particular skill, the quantity, quality, and modality of training provided in that skill are then reassessed and improved.

Status: The Learning Outcome was achieved. In each core skill, our practicum trainees showed competence (minimum average score of 3) each semester.
Indeed, our trainees showed increased competence over the course of the training year, as evidenced by increased average scores each semester. For the two practicum trainees in AY2014, the aggregate scores for each period were as follows:

December 2013
Individual Therapy: 3.89
Sensitivity to Diversity: 3.38
Ethical Sensitivity and Professionalism: 4.66
Use of Supervision and Training: 4.10

April 2014
Individual Therapy: 4.43
Sensitivity to Diversity: 3.75
Ethical Sensitivity and Professionalism: 4.78
Use of Supervision and Training: 4.91

Overall 2013-2014 Scores
Individual Therapy: 4.16 (AY 13 comparison: 3.85)
Sensitivity to Diversity: 3.56 (AY 13 comparison: 3.94)
Ethical Sensitivity and Professionalism: 4.72 (AY 13 comparison: 4.37)
Use of Supervision and Training: 4.5 (AY 13 comparison: 4.2)

Action: Individual Therapy overall scores were the lowest of the four core skills last year, whereas Sensitivity to Diversity overall scores were the lowest this year. This was likely due to this year’s trainees having significantly more prior individual therapy experience (3 years vs. 0-1 years) compared to last year’s cohort. Indeed, this more experienced cohort achieved higher scores overall except in the area of Sensitivity to Diversity. It may be useful for the coming years to provide specific training around multicultural competence for our practicum trainees, beyond what is provided within individual and cohort supervision. In AY15, we will again have 2 practicum trainees, one advanced (3 years experience) and one beginner (0 years experience).
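
The training scores reported above can be cross-checked with a short sketch. It uses only the December 2013, April 2014, and overall figures from this report. The assumption that each overall score is the simple mean of the two semester scores is ours, not something the report states, and the function and variable names are illustrative.

```python
# Scores copied from the report; names below are illustrative only.
PASSING_SCORE = 3.0  # "acceptable" on the CAPS Trainee Evaluation form

DECEMBER_2013 = {
    "Individual Therapy": 3.89,
    "Sensitivity to Diversity": 3.38,
    "Ethical Sensitivity and Professionalism": 4.66,
    "Use of Supervision and Training": 4.10,
}
APRIL_2014 = {
    "Individual Therapy": 4.43,
    "Sensitivity to Diversity": 3.75,
    "Ethical Sensitivity and Professionalism": 4.78,
    "Use of Supervision and Training": 4.91,
}
REPORTED_OVERALL = {
    "Individual Therapy": 4.16,
    "Sensitivity to Diversity": 3.56,
    "Ethical Sensitivity and Professionalism": 4.72,
    "Use of Supervision and Training": 4.5,
}


def semester_mean(skill: str) -> float:
    """Mean of the two semester scores for one core skill (assumed aggregation)."""
    return (DECEMBER_2013[skill] + APRIL_2014[skill]) / 2


def lowest_skill(scores: dict[str, float]) -> str:
    """Name of the core skill with the lowest score."""
    return min(scores, key=scores.get)


def outcome_achieved() -> bool:
    """True when every core skill meets the passing score in both semesters."""
    return all(
        score >= PASSING_SCORE
        for period in (DECEMBER_2013, APRIL_2014)
        for score in period.values()
    )


if __name__ == "__main__":
    for skill, reported in REPORTED_OVERALL.items():
        print(f"{skill}: mean {semester_mean(skill):.3f}, reported {reported}")
    print("Lowest overall skill:", lowest_skill(REPORTED_OVERALL))
    print("Outcome achieved:", outcome_achieved())
```

Under that assumption the computed means agree with the reported overall scores to within rounding, and the lowest overall skill is Sensitivity to Diversity, consistent with the Action discussion.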