Estimation of Constant Gain Learning Models Eric Gaus Srikanth Ramamurthy

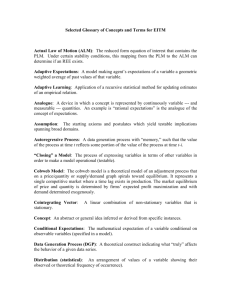

advertisement

Estimation of Constant Gain Learning

Models

Eric Gaus∗

Srikanth Ramamurthy†

April 2014

Abstract

Constant Gain Learning models present a unique set of challenges to the

econometrician compared to models based on Rational Expectations. This is

because of the differences in the equilibrium concept and stability conditions

between the two paradigms. This paper focuses on three key issues: stability

conditions, identification and derivation of the likelihood function. Several illustrative examples complement the general estimation methodology to demonstrate

the aforementioned issues in practice.

Keywords: Adaptive Learning, Constant Gain, E-Stability.

∗

Gaus: Ursinus College, 601 East Main St., Collegville, PA 19426-1000 (e-mail: egaus@ursinus.edu)

Ramamurthy: Sellinger School of Business, Loyola University Maryland, 4501 N. Charles Street,

Baltimore, MD 21210 (e-mail: sramamurthy@loyola.edu).

†

1

Introduction

Adaptive learning explores economic decision making within a bounded rationality

framework. In the field of macroeconomics, adaptive learning models have made a

resurgence primarily based on the foundations of Evans and Honkapohja (2001). While

much of the work in this area has remained within the confines of theory, there is a

small but growing literature that attempts to fit these models to real data. Both

the complexity of models and econometric techniques have varied tremendously. On

the frequentist side, Orphanides and Williams (2005) calibrate the learning parameters and then estimate the structural parameters of the model. Branch and Evans

(2006) demonstrate that a simple calibrated adaptive learning model provides the best

fit and forecast of the Survey of Professional Forecasters. Chevillon, Massmann and

Mavroeidis (2010) demonstrate a technique based on the Anderson-Rubin statistic for

improving estimates of the structural parameters.

With the exception of Chevillon et al. (2010), the central focus of the empirical

learning literature has been macroeconomic, concerned primarily with the relative fit

of various learning models to the data. Details of the estimation methodology, in particular, the unique set of issues involved in the estimation of these models, remain

elusive to many emerging researchers. This is particularly relevant in light of the fundamental differences in the equilibrium concept, stability conditions and the associated

parameter constraints between the learning and RE paradigms. The goal of this paper

is to fill this void. It describes the general methodology for estimating a broad category

of learning models, paying careful attention to the specific issues that the researcher is

likely to encounter in the process. Specifically, it focusses on the following three areas:

1. Stability conditions: Equilibrium in a learning model is defined by convergence

to some solution. Typically, though not always, the benchmark is the solution

under rational expectations. Evans and Honkapohja (2001) study this equilibrium in great depth and characterize its stability properties with the E-stability

principle. An important question that arises in this regard is the relation between

E-stability and determinacy of the RE solution. McCallum (2007) demonstrates

that under certain conditions determinacy is a sufficient condition for E-stability.

Likewise it is also possible that when a multiplicity of solutions exist, some of

those solutions may not be E-stable. It is important for the researcher to be

aware of these subtleties to ensure that the appropriate parameter constraints

are enforced in the estimation process. These issues are particularly relevant

when estimating a model with lagged endogenous variables. From a practical

standpoint, it is necessary to assume that agents include only t − 1 data in their

information set. In the case of lagged endogenous variables, this results in multiple equilibria under learning. Typically, E-stability conditions can help the

researcher select the appropriate equilibrium when taking a model to the data.

In the simplest case two solutions exist, one locally E-stable and the other locally

E-unstable. As shown in Marcet and Sargent (1989), in this case convergence

to the E-stable solution occurs only with the use of a projection facility. This

technique ensures that a series of bad shocks does not lead the system outside the

basin of attraction. An alternative approach is to penalize the likelihood function

1

whenever the underlying law of motion becomes explosive. Example 2 in Section

3 highlights these issues.

2. Idenification: Chevillon et al. (2010) show that, under certain conditions, the

structural parameters may not all be identified as the equilibrium under learning

converges to that of RE. Clearly this raises concerns about the reliability of the

estimates in models that assume E-stability. However, as we show in this paper

with the aid of a simple bivariate example, identification of the structural parameters can be improved as long as one of the variables is influenced by expectations

of other variables, but is itself not directly or indirectly part of the expectational

feedback loop. As it turns out, the interest rate, specified by the Taylor (1993)

rule in the NK-DSGE models, satisfies precisely this condition. Note that expectations of interest rate appear neither in the Taylor rule (direct), nor in the

IS or Phillips curve equations (indirect). Intuitively, the interest rate provides

an additional measurement that aids in the identifiability of the parameters by

shrinking the set of possible parameter values that give rise to the same data. It

is our belief that a more generalized version of this property plays an important

role in the identification of the structural parameters in learning based NK-DSGE

models.

3. Derivation of the Likelihood function: From an econometric standpoint, an interesting aspect of fitting these models concerns the evaluation of the likelihood.

Because the agents in the models have limited information - either about some

of the model parameters or the structure of the model itself (or possibly both) they update their beliefs about the model based on some form of least squares

(LS) estimation. Consequently, the evolution of the endogenous variables depends critically on these LS coefficients. We refer to these coefficients as the

learning parameters. Neither the learning parameters nor the covariates of the

LS model are observed by the econometrician. While these quantities may not be

of particular interest to the researcher, deriving the joint density of the data as a

function of only the model parameters (but marginalized of the learning parameters) becomes an issue. The approach taken in the literature is to recursively

calculate the learning parameters based on predicted values of the unobserved

covariates. A detailed discussion of this is presented in the following section of

the paper.

Because much of the empirical work in this area has focused mainly on learning

under constant gain (CGL), the goal here is to provide a self-contained guide that can

be readily implemented for estimating CGL models. To accomplish this goal, several

examples complement the general estimation methodology. The first two examples

illustrate the aforementioned issues with the aid of simulated data. These examples

are meant to be instructive with complete details of the estimation process. Since NewKeynesian (NK) Dynamic Stochastic General Equilibrium (DSGE) models serve as one

of the bulwarks of the empirical macroeconomics field, our third example is a standard

NK-DSGE model that is fit to real data. The handful of papers in this area continue

the tradition of fitting these models using Bayesian techniques. Milani (2007) shows

that incorporating learning in a simple linearized DSGE model results in a better fit

to the data compared to its RE counterpart. It also considers interesting extensions by

2

introducing learning in the underlying microfounded model along the lines of Evans,

Honkapohja and Mitra (2011) and Preston (2005). Continuing on those lines Eusepi

and Preston (2011) find that expectations, in general, and learning, specifically, may

explain business cycle fluctuations. More recently, Slobodyan and Wouters (2012) have

estimated a more complicated medium scale DSGE model. Their findings suggest that

the overall fit of the model hinges on the initialization of the agents’ beliefs. More

importantly, they conclude that limiting the information set of the agents leads to an

empirical fit that favors comparably to a structural VAR model. For a more in depth

survey of the literature on DSGE models under learning we refer the interested reader

to Milani (2012).

The rest of the paper is organized as follows. The next section describes the general

class of adaptive learning models and the estimation procedure. Section three provides

three examples from this class of models. Concluding remarks are provided in Section

four.

2

General Framework

Evans and Honkapohja (2001) provides a unifying theoretical framework for analyzing

the dynamic properties of adaptive learning models. In general, the methodology

for taking a learning model to the data involves some additional steps compared to

a model based on rational expectations. While some of these steps are founded in

theory, others are relevant from an empirical standpoint. This section describes the

model, the stability conditions, the state space form, and estimation strategy. For ease

of readability, the exposition here closely follows Chapter 10 in Evans and Honkapohja

(2001).

Consider the class of models given by

e

yt = K + N yt−1 + M0 yte + M1 yt+1

+ P wt + Qυt

wt = F wt−1 + et

(1)

(2)

Here yt is an n × 1 vector of endogenous variables, wt is a p × 1 vector of exogenous

variables that follows a stationary VAR process with et ∼ N (0, Σ1 ) and υt ∼ N (0, Σ2 )

is an additional q dimensional error that is independent of et ; n ≥ p + q. Capital letters

denote vectors or matrices of appropriate dimensions that comprise the model parameters θ. For future reference, we call the parameters in matrices K, N , M0 , and M1 the

structural parameters and those in P , Q, F , Σ1 and Σ2 the shock parameters. Finally,

the superscript e represents the mathematical expectation of y given the information

set I at time t − 1: yte := E(yt |It−1 ). One could also work with contemporaneous

expectations by defining the appropriate information set.1 Clearly, expectations of y

depend on what is and is not included in this information set. Rational expectation

(RE) assumes agents’ knowledge of the history of the realizations of both the endogenous and exogenous variables in the model up to and including period (t − 1), all of

the parameters in the model as well as the structure of the model itself. In contrast,

1

See Evans and Honkapohja (2001) for details as well as some examples of this case.

3

adaptive learning agents have a strict subset of this information. In particular, we

will suppose that (a) our agents do not know the structural parameters of the model,

and (b) their perception of the model is based on the Minimum State Variable (MSV)

representation of the RE solution (McCallum 1983). Alternative information sets have

been studied in Marcet and Sargent (1989) and Hommes and Sorger (1998). In typical

macroeconomic applications, this system is obtained by log-linearizing the underlying

microfounded general equilibrium model. As will be clear from the discussion below,

models with lagged endogenous variables present additional issues than ones without.

2.1

Solution and Stability Conditions

The MSV solution to (1)-(2) takes the form

yt = a + byt−1 + cwt−1 + P et + Qυt

(3)

where a, b and c are to be determined. Whereas the RE agent solves for these parameters by the method of undetermined coefficients, adaptive learners estimate them

using the data available in their information set. Accordingly, this reduced form is

referred to as the learning agents’ perceived law of motion (PLM). Stability analysis in

this case revolves around the “consistency” of the estimator in relation to some solution. The benchmark solution is usually the rational expectations equilibrium (REE).2

Consequently, the question of interest is whether the REE is learnable. Evans and

Honkapohja (2001, 2009) analyze the stability properties of the REE in terms of the

so called E-stability principle. The main results from the practitioner’s viewpoint are

summarized below.

e

= a + ab +

Calculating the conditional expectations yte = a + byt−1 + cwt−1 and yt+1

b yt−1 + bcwt−1 + cF wt−1 , and substituting into (1) yields

2

yt = K + (M0 + M1 (I + b))a + (N + M0 b + M1 b2 )yt−1 +

(P F + M0 c + M1 (bc + cF ))wt−1 + P et + Qυt .

(4)

This is the law of motion for yt that results from the expectations above and is referred

to as the actual law of motion (ALM). In practice, estimates of a, b and c would be

plugged into this expression. Hereafter we refer to these coefficients as the learning

parameters. Given the form of the PLM (3), these coefficients can be estimated by

0

0

the least squares algorithm with zt−1 = (1, yt−1

, wt−1

)0 as the regressors. However,

agents presumably assign a larger weight to more recent observations than the distant

past. A natural choice is therefore some form of discounted least squares. Denoting

φi = (ai , bi , ci )0 , where i refers to the ith equation in the system, and, bi and ci are the

i-th rows of b and c, these quantities can be estimated efficiently in real time by the

formula

φ̂i,t = φ̂i,t−1 + γRt−1 zt−1 (yi,t − φ̂0i,t−1 zt−1 )

0

Rt = Rt−1 + γ(zt−1 zt−1

− Rt−1 )

2

(5)

(6)

This need not be the case. However, it is the natural benchmark because agents effectively have

the rational expectations information set when the structural parameters are known in this model.

4

where Rt is the familiar moment matrix. It is important to note the timing of the

information set here. The assumption is that the estimate φ̂t is available only after

yt is observed. Thus, the ALM evolves in real time with φ̂t−1 replacing the learning

parameters in (4). Parameter γ ∈ (0, 1) dictates the extent to which past data is

discounted. A larger value of γ leads to heavier discounting. Note that γ is assumed

to be constant. Hence the terminology of constant gain least squares (CGLS). Setting

γ = 1/t above results in the familiar recursive lease squares (RLS) formula. For a brief

introduction to the RLS algorithm and CGLS, see pages 32-34 and 48-50, respectively,

in Evans and Honkapohja (2001). Lastly, notice the squared term b2 in the ALM. This

(matrix) quadratic arises because of the autoregressive component in the model. In

the absence of yt−1 , the solution, stability condition and estimation are all simpler. We

discuss this case separately later.

To calculate the REE we equate coefficients in (3) and (4). This gives rise to the

following set of matrix equations:

a = K + (M0 + M1 + M1 b)a

b = M1 b2 + M0 b + N

c = M0 c + M1 bc + M1 cF + P F

(7)

(8)

(9)

Without making further assumptions it is generally not possible to obtain a closed form

solution to this system of equations. The interesting point though is that the matrix

quadratic can result in multiple solutions for b. We illustrate this in the context of a

univariate example below for which the analytical solution is readily calculated. The

mapping from the PLM to the ALM, referred to as the T-map is given by

T (a, b, c) = (K + (M0 + M1 (I − b))a, M1 b2 + M0 b + N, M0 c + M1 bc + M1 cF + P F ) (10)

To determine the conditions for the local stability of the REE under learning one

follows the E-stability principle in Evans and Honkapohja (2001). Under decreasing

gain (γ = 1/t), the condition for E-stability is that, at the REE, the eigenvalues of the

Jacobian of vecT must have real parts less than 1. Denoting the REE (ā, b̄, c̄), this

translates to the condition that the following square matrix

I ⊗ (M0 + M1 (I − b̄))

ā0 ⊗ M1

0

0

I ⊗ M0 + b̄0 ⊗ M1

0

DT (ā, b̄, c̄) =

0

0

0

c̄ ⊗ M1

I ⊗ (M0 + M1 b̄) + F ⊗ M1

(11)

must have eigenvalues with real parts less than 1. For computational efficiency, it

suffices to calculate the eigenvalues of the three sub matrices on the diagonal of DT .

The analogous E-stability condition for constant gain learning is that these eigenvalues

lie within a circle of radius 1/γ with origin (1 − 1/γ, 0) (Evans and Honkapohja 2009).

The following points regarding the importance of constraining the parameter space

to the E-stable region are noteworthy to the practitioner:

1. Presumably, the main goal of working with a learning model is to analyze the

learnability of a REE. Learnability provides a practical justification for the existence of a REE. This point is also stressed in McCallum (2007). It is therefore

5

imperative for a practitioner to enforce the E-stability constraint, particularly

when conducting empirical analysis. Otherwise, the purpose of the analysis remains unclear as are the interpretations of the parameter estimates. In this

situation, one might as as well directly estimate the parameters under the said

REE.

2. An exception to the previous point is when the agents’ information set includes

the contemporaneous endogenous variables. As shown in McCallum (2007), in

this case, determinacy of a REE also ensures that it is E-stable. However, because the causality does not run in the other direction, a researcher that is only

interested in the determinate solution needs to exercise caution.

3. When a multiplicity of solutions exist, E-stability may not by itself suffice to

ensure convergence to the stationary solution. As shown in Marcet and Sargent

(1989), ensuring convergence in this situation requires the implementation of an

appropriate projection facility. There is no unique way to implement it however.

Consequently, parameter estimates depend on the rule chosen by the researcher.

An alternative and more direct, although less effective, approach is to penalize the

likelihood function when the T-map condition is violated for a particular estimate

of the learning coefficients. We discuss both alternatives more concretely in the

context of Example 2 in the following section.

As an illustration, consider the following univariate model

e

yt = α + δyt−1 + βyt+1

+ wt

wt = ρwt−1 + et

(12)

Comparing the model in (1)-(2), K = α, N = δ, M1 = β, P = 1 and F = ρ. For

reasons that will be clear in the following section, we have set M0 = Q = 0. Writing

the MSV solution as

yt = a + byt−1 + cwt−1 + et .

(13)

the conditional expectation of yt+1 given information up to (t − 1) is

Et−1 yt+1 = (1 + b)a + b2 yt−1 + c(b + p)wt−1 .

(14)

Substituting this back into the model (12) we get the ALM

yt = α + β(1 + b)a + (βb2 + δ)yt−1 + (βc(b + p) + ρ)wt−1 + et .

(15)

with the corresponding T-map

Ta (a, b, c) = α + β(1 + b)a

Tb (a, b, c) = βb2 + δ

Tc (a, b, c) = ρ + β(b + ρ)c

6

(16)

(17)

(18)

The RE solution to this system is given by

ā = α/(1 − β(1 + b̄))

p

b̄ = (1 ± 1 − 4βδ)/2β

c̄ = ρ/(1 − β(b̄ + ρ))

(19)

(20)

(21)

where it is assumed that the discriminant 1 − 4βδ is positive and that all the denominators are non-zero. Note that the squared term leads to two distinct solution for b.

We reiterate here that it is important to impose the following constraint: −1 < b̄ < 1.

This ensures that the ALM at the RE solution is stationary, but allows for temporary

non-stationarity based on a particular path of learning coefficient estimates.

The derivative of the T-map at the RE solution is

β(1 + b̄) βā

0

0

2β b̄

0

DT (ā, b̄, c̄) =

0

βc̄ β(b̄ + ρ)

(22)

Recall that, under constant gain learning, the REE solution is E-stable if the eigenvalues

of DT are contained in the circle with origin (1 − 1/γ, 0) and radius 1/γ. Because we

restrict the REE to be real, the eigenvalues of DT (which are simply its diagonal) are

also real. For a given γ it is then sufficient to check that 1 − 2/γ < diag(DT ) < 1.

Clearly, only one of the solutions for b̄ (the one with the negative square root) satisfies

this condition. The alternative solution, obtained from the positive square root of the

discriminant, is E-unstable.

We complete this section with a brief discussion of a special case of the general

model in (1). In the absence of the autoregressive component, the REE is unique and,

assuming the appropriate rank condition for the system matrices, has a closed form

solution. To see this, we write the MSV solution (as well as the PLM) as

yt = a + cwt−1 + P et + Qυt

(23)

Calculating the conditional expectations as before and and substituting into the model

results in the ALM

yt = K + (M0 + M1 )a + (M0 c + M1 cF + P F )wt−1 + P et + Qυt

(24)

Finally, equating terms in (23) and (24), it follows that the RE solution for a and c is

ā = (In − M0 − M1 )−1 K

vec(c̄) = (In×n − In ⊗ M0 − F 0 ⊗ M1 )−1 (F 0 ⊗ In )vec(P )

(25)

(26)

where In is the n-dimensional identity matrix and vec denotes vectorization by column.

The T-map now simplifies to

T (a, c) = (K + (M0 + M1 )a, M0 c + M1 cF + P F )

(27)

In this case, the E-stability condition for CGLS simplify to the condition that the

7

eigenvalues of the following matrices

DTa (ā) = M0 + M1

DTb (b̄) = In ⊗ M0 + F 0 ⊗ M1

(28)

(29)

lie within a circle of radius 1/γ with origin (1 − 1/γ, 0). With this background, we now

turn to the econometrician’s problem of estimation and inference.

2.2

Likelihood Function and Parameter Estimation

Our goal is to estimate the model parameters θ given the sample data on the endogenous

variables: YT = (y0 , . . . , yT ). One might also be interested in inferring the exogenous

variables WT = (w0 , . . . , wT ), as well as the learning parameters ΦT = (φ0 , . . . , φT ).

Parameter inference is based on the assumption that data is generated by the ALM

(4). Unlike in the RE framework, therefore, the model generating the data is different

for the agent and the researcher. We return to this point later in the section.

To estimate the unknowns in the model, we combine the ALM (4) and the exogenous

process (2) in the state space model (SSM)

st = µt−1 (θ, φ̂t−1 ) + Gt−1 (θ, φ̂t−1 )st−1 + H(θ)ut

yt = Bst

(30)

(31)

by defining

yt

et

K + (M0 + M1 (I + b̂t−1 ))ât−1

st =

, ut =

∼ N (0, Ω), µt−1 =

,

wt

υt

0

Gt−1 =

N + M0 b̂t−1 + M1 b̂2t−1 (P F + M0 ĉt−1 + M1 (b̂t−1 ĉt−1 + ĉt−1 F )

,

0

F

P Q

H=

, B = In 0

I 0

where 0 denotes vectors or matrices of appropriate dimensions. Note that the measurement equation (31) simply extracts yt from the state vector st . Consequently, only

part of the state vector that comprises wt is truly latent. In the context of SSMs, it is

important that wt remain an exogenous process.

The interesting part of the SSM here is the learning parameters φ̂t−1 in the system

matrices µt−1 and Gt−1 . Recall that these time varying parameters are calculated by

0

0

the formula (5)-(6) with zt−2 = (1, yt−2

, wt−2

)0 as the vector of regressors. Consequently,

the system matrices involve parameters that are non-linear functions of the lagged state

vector wt−2 as well as the past observations Yt−1 . Our SSM is therefore unconventional

and the usual technique for deriving the likelihood function as a by-product of the

Kalman filter is not fruitful here.

To state the problem explicitly, the likelihood function now depends on Φ̂T in addi8

tion to θ. Thus, the joint density of the data takes the form

f (y1 , . . . , yT |Y0 , θ, Φ̂T ) = f (yT |YT −1 , θ, φ̂T −1 ) . . . f (y1 |Y0 , θ, φ̂0 )

(32)

where, from the ALM we have exploited the fact that, for t = 1, . . . , T , given Yt−1 , the

density of yt depends only on θ and φ̂t−1 . For given values of Φ̂T and θ, this likelihood

function is well defined. From a frequentist perspective, therefore, one can at least

conceptually think of maximizing it with respect to both Φ̂T and θ, much the same

way as one would calculate the MLE of θ in a standard SSM. However, the impediment

here is that φ̂t is a function of the latent states wt−1 , which is itself a random variable.

While, estimates (or more precisely the conditional distribution) of the latent states

may be calculated based on the observed data, it is not equivalent to knowing the latent

states. In other words, only conditional on WT can Φ̂T be treated as a parameter in

the likelihood function. But then the likelihood function itself is a function of WT . It

is therefore not feasible to derive the joint density of the data only as a function of

the unknown parameters by means of the Kalman filter. To our knowledge there is

no legitimate method for calculating the likelihood function in this problem without

taking recourse to nonlinear techniques.

The approach taken in the literature is to approximate the likelihood value by calculating φ̂t , t = 1, . . . , T , conditional on estimates of WT . Conveniently then, the

Kalman filter delivers the latter in the form of E(wt |Yt , θ), t = 1, . . . , T . One therefore

iteratively updates φ̂t within the Kalman filter by substituting E(wt |Yt ) in place of

wt in (5)-(6). Of course, this procedure raises concerns about the optimality of the

Kalman filter as well as the properties of the MLE of θ. Clearly this approach leans

towards computational efficiency rather than accuracy. Finally, it is worth noting that

if ∀t, wt = 0, then the ALM is simply a vector autoregressive process that can be

cast as a standard linear Gaussian SSM. In that case the aforementioned complication

regarding the likelihood function is not relevant.

Having dealt with the calculation of φ̂t , t = 1, . . . , T , the only remaining concern is

the initialization of φ̂0 . The two main approaches in the literature to deal with this are

(a) estimate φ̂0 using extraneous information and treat it as a known quantity when

estimating θ and (b) treat φ̂0 as an additional parameter and estimate it alongside θ

using the sample data. While several variants of the former have been discussed in

the literature (see, for instance, Slobodyan and Wouters (2012)), we focus here on the

training sample approach. We then compare this to the case where φ0 is estimated

within the sample. Note that the aforementioned discussion applies equally well to R0 .

Without loss of generality, we only consider the estimation of φ0 in this paper. In our

examples, we initialize R0−1 as an arbitrary matrix with large diagonal elements.

We now provide the details for likelihood based estimation of θ in the case of

the training sample approach. We denote the available pre sample of length S by

Y = (y−S+1 , . . . , y0 ). Simultaneously let W = (w−S , . . . , w−1 ) be the collection of

the corresponding latent states. Then, in the frequentist setting, the procedure for

estimating θ can be summarized conceptually as follows:

max f YT |θ, φ̂0 (Y, θ)

θ

9

A Bayesian would combine the likelihood function with a prior on θ to calculate the

posterior distribution of θ. We discuss the specifics of this in the context of specific

examples in the following section. For now, our primary focus is on the derivation of

the likelihood function. Procedurally, it involves two steps.

Step 1: Estimate φ0 given θ and Y

1a. For the pre sample period, replace φ̂t in the SSM with the REE, φ̄, of the model

(computed either analytically or numerically)

1b. Run the Kalman filter and smoother to estimate E(W |Y, θ)

1c. Construct for t = −S, . . . , −1 the vector ẑt = (1, yt0 , ŵt0 )0

1d. Calculate the OLS estimate φ̂0 by regressing Y on Z = (ẑ−S , . . . , ẑ−1 )

With this initial value of φ̂, we now turn to the evaluation of the likelihood function. Let w0 |Y0 ∼ N (ω0 , Ω0 ) denote the prior distribution of w0 based on some initial

information Y0 . The hyperparameters of the normal distribution can be specified in

one of several ways. We present two alternatives here. Assuming that the latent

process is stationary, these quantities take on the steady state values of ω0 = 0 and

vec(Ω0 ) = (Ip×p − F ⊗ F )−1 vec(Σ1 ). Alternatively, we could include w0 in W and

extend step 2. above by one additional iteration to get ω0 and Ω0 conditioned on Y

and θ. Subsequently, to evaluate the likelihood at a given value of θ, one essentially implements the Kalman filter with an additional step to update the learning parameters.

Thus, the second step in the procedure is as follows:

Step 2: Calculate the likelihood value as a function of θ and φ̂0

2a. Initialize s0 |Y0 ∼ N (ψ0 , Ψ0 ) where

y0

ψ0 =

ω0

and Ψ0 =

0 0

0 Ω0

2b. For t = 1, . . . , T , calculate

(i)

Ψt|t−1 Ψt|t−1 B 0

st

ψt|t−1

|Yt−1 , θ, φ̂t−1 ∼ N

,

BΨ0t|t−1

Γt

yt

Bψt|t−1

where

ψt|t−1 = µt−1 +Gt−1 ψt−1 ,

Ψt|t−1 = Gt−1 Ψt−1 G0t−1 +HΩH 0 ,

(ii)

0

Rt = Rt−1 + γ(ẑt−1 ẑt−1

− Rt−1 )

φ̂i,t = φ̂i,t−1 + γRt−1 ẑt−1 (yit − φ̂0i,t−1 ẑt−1 )

0

0

where zt−1 = (1, yt−1

, ŵt−1

)0

10

Γt = BΨt|t−1 B 0

(iii)

st |Yt , θ, φ̂t−1 ∼ N (ψt , Ψt )

where

ψt = ψt|t−1 + Ψt|t−1 B 0 Γ−1

t (yt − Bψt|t−1 ),

2c. Return the log-likelihood as

T

P

0

Ψt = Ψt|t−1 − Ψt|t−1 B 0 Γ−1

t BΨt|t−1

log(yt |Yt−1 , θ, φ̂t−1 )

t=1

Embedding steps 1 and 2 in a numerical maximization routine, one can calculate the

MLE of θ. Likewise, a Bayesian would combine these steps to evaluate the likelihood

for a given value of θ.

The following points are worth noting about the above procedure:

• It is easy to verify that for all t = 1, 2, . . . , T , ψt and Ψt replicate the structure

of ψ0 and Ψ0 . That is,

0 0

yt

and Ψt =

ψt =

ωt

0 Ωt

where ωt = E(wt |Yt , θ, φ0 ) and Ωt = V ar(wt |Yt , θ, φ0 ).

• As mentioned earlier, if it were possible to calculate φ̂t (and Rt ) conditional

on wt−1 instead of ωt−1 , then the preceding three steps form a coherent cycle.

Further, in this non-standard SSM, there is also the concern as to whether ωt−1

is the optimal estimate of wt−1 . As such we are unaware of any solution to this

problem within the frequentist setting without resorting to nonlinear techniques.

Our main goal here is to highlight this issue and we leave the resolution to future

work.

We conclude this section with the following note. To estimate φ̂0 within the sample,

one simply skips Step 1 above and treats φ̂0 as an additional set of parameters. But

this means that the MLE problem is now one of

max f YT |θ, φ̂0

θ,φ̂0

Examples 1 and 3 below explore both alternatives. We estimate the initial values of

the learning parameters with a training sample and then separately with the actual

sample along with θ.

3

Examples

In this section we provide three illustrative examples. The first two stylized examples

are based on simulated data, whereas the third is a simple New Keynesian (NK) model

that we fit to real data. In the spirit of the framework discussed in the previous section,

all three examples involve exogenous processes that follow a stationary AR(1) process.

11

3.1

Simulated Data Example 1

Consider the following model

e

y1,t = α + βy1,t+1

+ κy2,t + wt

(33)

e

+ vt

y2,t = λy1,t

(34)

wt = ρwt−1 + et

(35)

where et and vt are independent zero mean i.i.d. sequences with standard deviations

σ1 and σ2 , respectively. Equation (34), which appears to be ad-hoc in this setup, is

motivated by the Taylor rule specification in monetary DSGE models (Taylor 1993).

It’s role is explained in the estimation section below. Because y2,t depends on expectations over y1,t and not itself, we suppress it in the following discussion. Substituting

(34) into (33) one obtains

e

e

y1,t = α + βy1,t+1

+ κλy1,t

+ wt + κvt

(36)

which is of the form (1). Accordingly, the MSV solution is

y1,t = a + cwt−1 + et + κvt

(37)

with the resulting law of motion for y1,t under RE being

y1,t =

α

ρ

+

wt−1 + et + κvt

1 − β − κλ 1 − ρβ − κλ

(38)

Equation (37) serves as the agents’ PLM who update φt = (at , ct )0 following (5).

The ALM then takes the form

y1,t = α + (β + κλ)at−1 + (ρ + (ρβ + κλ)ct−1 )wt−1 + et + κvt

(39)

with the T -map defined as

T (a, c) = (α + (β + κλ)a, ρ + (ρβ + κλ)c)

In this case, the convergence criteria (28)-(29) simplify to β + κλ < 1 and ρβ + κλ < 1.

One completes the state space setup by defining

y1,t

α + (β + κλ)at−1

0 0 ρ + (ρβ + κλ)ct−1

Gt−1 = 0 0

λat−1

λct−1

st = y2,t µt−1 =

wt

0

0 0

ρ

1 κ

e

y

1

0

0

t

1,t

H = 0 1 u t =

yt =

B=

vt

y2,t

0 1 0

1 0

where y2,t = λat−1 + λct−1 wt−1 + εi,t is obtained by substituting yte in (34).

With the SSM setup, we now turn to the problem of parameter estimation. As

noted by Chevillon et al. (2010), the structural parameters in this model suffer from

12

identification when γ is small. This might be immediately apparent to the reader

because not all parameters are identified under RE. In light of this issue, we consider

three alternative versions of this model when estimating the unknown parameters.

Model 1(a) λ = κ = 0

Model 1(b) λ 6= 0 and κ = 0

Model 1(c) λ, κ 6= 0

The intent here is to understand the extent to which the sample data provides information about the structural parameters when additional measurements in the form of

y2,t are available. In this regard, the additional information afforded in Model 1(b) is

expectations of y1,t . It is assumed, however, that these expectations do not influence

the evolution of y1,t directly. In contrast, Model 1(c) assumes that these expectations

feedback to y1,t .

Separately, we are also interested in whether estimating φ0 together with θ affects

the estimates of the latter. For this experiment, we follow the two alternative approaches discussed in the previous section for estimating φ0 . That is, we first estimate

φ0 alongside θ using the sample data. We then compare this to the case when φ0 is estimated using a pre-sample. For fair comparison of the results from the two approaches

we work with the sample length throughout.

We generated 240 observations of yt for each of the three versions of the model. The

actual sample was preceded by a burn-in period of 5000 ensured that any lingering effects of the initial values of the unknowns set in the DGP had worn off. Table 1 presents

the data generating values for the parameters θ = {α, β, κ, σ12 , γ, λ, ρ, σ22 , a0 , c0 }. The

initial values of a0 and c0 reported are the values immediately preceding the sample

after an initial burn-in of 1000 observations. Table 1 reports the MLE of θ and φ0 in

all three cases of Example 1.

Our results confirm the difficulty in identifying the structural parameters in case (a)

while providing the new result that the initial values of the learning parameters are not

well identified either. As we turn to case (b) we see that adding a measurement with

no feedback improves identification of the learning parameters, but not the structural

parameters. However, once we have the feedback in case (c) we find that all the

estimates are fairly well identified. Our intuition for the improved identification in

cases (b) and (c) is as follows. The fact that agents do not form expectations over

the additional measurement reduces the set of possible time paths of the learning

parameters, resulting in a more well defined likelihood function. As regards to the

second concern above, the second column of results in each case indicates that the

estimates of the initial values of the learning parameters have little impact on the

estimates of the structural parameters.

Turning to the Bayesian approach, we suppose that the parameters are apriori independent. The prior distribution on each element of θ is centered at values that are

distinctly different from the DGP. We also assume fairly large standard deviations. In

particular, the gain parameter γ is allowed to vary uniformly between 0 and 1. Also,

the autoregressive parameter ρ of the exogenous process is assumed to be uniformly

distributed between −1 and 1. Note that this enforces the stationary constraint on

13

wt . The priors on a0 and c0 are centered at the REE corresponding to the prior mean

of θ. To capture the uncertainty regarding these quantities we specify large standard

deviations. Table 2 summarizes our prior. In addition to the parameter constraints

implied by the prior distribution, we also enforce the E-stability constraints mentioned

in the previous section.

In the estimation procedure we initialized the parameters at their prior mean. We

then ran the Tailored Randomized Block Metropolis-Hastings (TaRB-MH) sampler

(Chib and Ramamurthy (2010)) for 11000 iterations and discarded the initial 1000

draws as burn-in. See Appendix A for a brief overview of the TaRB-MH sampler.

Table 2 summarizes the posterior distribution based on the sampled draws. The values

in the left panel correspond to the case where a0 and c0 are estimated from a training

sample. For this experiment, the training sample comprised of the first 40 observations

with the remaining 200 observations contributing to the likelihood value. The posterior

summary in the right panel includes a0 and b0 as additional parameters. For conformity of the data sample, these estimates are also based on observations 41 through

240. The results indicate that the estimated marginal posteriors are practically indistinguishable for the two cases. Also noteworthy is the closeness of the posterior

mean to the data generating values of the parameters. This is not surprising given

the maximum likelihood estimates - the data carried substantial information about the

parameters.

To conclude this section, we plot the marginal prior and (kernel smoothed) posterior

of each parameter in Figure 1. In this figure, the dashed line is the prior, the green line

is the posterior for the training sample that corresponds to the left posterior panel in

Table 2 and the blue line is the posterior without the training sample that corresponds

to the right panel in Table 2. As mentioned earlier, the similarity between the estimates

for the two approaches are more evident in the figure. We also plot the simulated at

and ct for the PLM (dashed line) and ALM (circled line) based on the posterior draws

in Figure 2. The shaded regions capture the 95% band. These plots were generated

by first simulating the PLM and ALM for each draw from the posterior.3 Then for

each t we calculated the .025, .5 and .975 quantiles. The horizontal lines denote the

corresponding quantiles for the REE.

3.2

Simulated Data Example 2

Consider the univariate example associated with equation (12) presented in Section 2.1

that we repeat here for the reader’s convenience.

e

yt = α + δyt−1 + βyt+1

+ wt

wt = ρwt−1 + et

The parameter values that we use to generate the data (see Table 3) imply the following

values of the rational expectations solution (equations 19-21): ā = 1.264 b̄ = 0.052, and

3

The procedure is identical to Step 2 in the likelihood calculation. Recall that, for a given value

of θ and φ0 , at and ct are updated within the Kalman filter based on the PLM. The corresponding

quantities for the ALM are simply the non-zero elements in the first row of µt−1 and Gt−1 .

14

c̄ = 2.514. One can verify that this is the E-stable solution. The alternative solution,

obtained from the positive square root of the discriminant, is E-unstable.

When generating the data, we initialize the learning parameters sufficiently close

to the RE solution. However, in the process of updating these quantities by the RLS

algorithm it is entirely possible that they wander off the stable region as discussed

by Marcet and Sargent (1989). This happens because a particular estimate (by the

agents) of b may push the eigenvalues of DT to the unstable region. The solution to

this problem is to invoke a projection facility which nudges the learning parameters

back to the stable region, which in the case of CGLS is the circle with origin (1 −

1/γ, 0) and radium 1/γ. A projection facility can come in many forms. The simplest

implementation is to recall the latest value of the learning parameters that resulted

in eigenvalues in the stable region. This approach clearly poses the danger of getting

stuck at those latest stable values of the learning parameters. Practically, however,

this may not be a source of major concern as long as the corresponding values of

the structural parameters are less likely to have generated the data. For alternative

implementations, Marcet and Sargent (1989) provide a general guide to restricting the

stable space. Even in our simple example, however, defining the stable space of the

learning parameters can result in several inequalities, turning this process into an ordeal

for the practitioner. As mentioned earlier, there is a easier third alternative - that of

penalizing the likelihood function for unstable values of the learning parameters. From

a practical viewpoint, this can always be a fallback option that can be implemented

along with the simple projection facility.

We now focus on our ability to estimate a model with lagged endogenous variables.

Table 3 displays the results from varying sample sizes ranging from 200 to 2000. As

the results demonstrate, even with a very large sample size, the estimation of this class

of models is tenuous at best. In particular, notice that estimates of γ get worse as the

sample size increases, which is troublesome since the chief objective is the story about

learning. Table 3 also supports the claim made in the previous example that estimating

the initial values of the learning parameters has little impact on the estimates of the

structural parameters as shown by the second column for each sample size.

3.3

Application to a Monetary DSGE Model

As an application to a DSGE model, consider the following canonical model derived

from Woodford (2003)

e

xt = xet+1 − ψ(it − πt+1

) + εx,t

e

πt = λxt + βπt+1 + επ,t

it = θx xet + θπ πte + εi,t

(40)

(41)

(42)

Here, the endogenous variables are output gap xt , inflation πt and the nominal interest

rate it . Underlying this linear system is a nonlinear microfounded model comprising

households and firms that are intertemporal optimizers. The central bank, on the other

hand, sets the interest rate following the Taylor rule. As mentioned earlier, this interest

rate rule (42) is an ad-hoc specification in the sense that the economic agents do not

15

incorporate expectations of it in their decisions. Finally, the structural shocks εx,t and

επ,t follow independent stationary AR(1) processes. For these processes, we denote the

autoregressive coefficients ρx and ρπ , and the variance of the white noise terms σx2 and

σπ2 , respectively. We also assume that εi,t ∼ N (0, σi2 ).

As in the previous example, substituting (42) into (40) leads to the familiar form

(1) with N = K = 0

e

+ P wt + Qεi,t

(43)

yt = M1 yte + M2 yt+1

where

xt

yt =

πt

εx,t

−ψθx −ψθπ

1

ψ

wt =

M0 =

M1 =

επ,t

−λψθx −λψθπ

λ β + λψ

1 0

−ψ

ρx 0

P =

Q=

F =

λ 1

−λψ

0 ρπ

Note that here K = 0. As before, we write the PLM as

yt = a + cwt−1 + P et + Qεt

where

ax

a=

aπ

c=

cxx cxπ

cπx cππ

Substituting the expectational terms yields the following ALM

yt = (M0 + M1 )a + (M0 c + M1 cF + P F )wt−1 + P w̃t + Qεi,t

(44)

The corresponding T-map for the learning parameters is then

T (a) = (M0 + M1 )a

T (c) = M0 c + M1 cF + P F

(45)

(46)

Finally, note that the RE solution for this model is aRE = 0 and

vec(cRE ) = (I4 − F 0 ⊗ M1 − I2 ⊗ M0 )−1 (F 0 ⊗ I2 )vec(P )

The SSM is completed as before by including the additional measurement it = Sa +

Scwt−1 + εi,t , where S = (θx , θπ ).

We fit this model to the sample period 1954:III – 2007:I with the vector of quarterly

measurements on output gap, inflation and the nominal interest rate. These quantities

were calculated from the data on quarterly GDP, GDP deflator and the Federal Funds

Rate published by the St. Louis Fed as follows. Output gap was obtained by taking

the log difference of real GDP from its potential (as calculated by the Congressional

Budget Office). The inflation and interest rates were taken to be the demeaned GDP

deflator and annualized federal funds rate.

Given the complexity of the model we favor using Bayesian techniques. Collecting

16

the parameters of interest in the vector

θ = (ψ, λ, β, θx , θπ , ρx , ρπ , σx2 , σπ2 , σi2 , γ)0

we suppose that the intertemporal elasticity of substitution ψ apriori follows a Gamma

distribution with mean 4.0 and standard deviation 2.0. For the Taylor rule coefficients

θx and θπ we assume a normal prior with mean 0.25 and 1.0, respectively, and unit

variance. As is well documented in the literature, the discount factor β ∈ (0, 1) that is

consistent with the data usually exceeds its upper limit of 1. For this reason it is not

uncommon to fix its value close to 1. In our estimation we fix β = 0.95. Similarly, λ is

also pinned down by the cost adjustment parameter in the underlying structural model.

Because in this paper we deal only with the reduced form, we also fix λ to a reasonable

0.05. For the remaining model parameters, the prior specification is identical to that in

the previous example. Finally, for the initial learning parameters φ0 , we suppose that

each element follows a normal distribution with mean calculated as in the previous

example and a large standard deviation. Table 4 summarizes this prior.

This table also presents the posterior summary based on 9000 iterations of the

TaRB-MH algorithm beyond a burn-in of 1000. The left posterior panel corresponds

to the case where φ0 was estimated using a training sample. The first forty observations

from 1954:III to 1964:II were reserved for this purpose. The effective sample therefore

runs from 1964:III to 2007:I. Results in the center panel are also based on this sample

but now include estimates of φ0 as well. Once again, the estimated marginal posteriors

are similar in both cases. This can also be viewed in Figure 3 that plots the kernel

smoothed marginal posterior densities along with the prior densities of the parameters.

In contrast, the results in the last panel are starkly different. These estimates are based

on the full sample 1954:III to 2007:I. While in this paper we would not draw attention

to the parameter estimates per se, it is worth noting the tight posterior interval for γ.

This is in sharp contrast to the flat prior and is particularly striking in the third panel.

Recall that the convergence condition enforced here is valid only for small enough γ.

Finally, we reiterate our stance that if one estimates a model of learning one presumably is interested in the potential story of agents expectations. Therefore, we include

graphs of the reduced form coefficients of the ALM and PLM, that is M0 c+M1 cF +P F

and c respectively, in Figure 4. While we would not consider these results conclusive,

the graphs are certainly insightful. Note that these graphs represent how a lagged

shock to output or inflation influences current output or inflation. The graphs show

that both the ALM and PLM of shocks to output influencing output stay quite close to

the RE solution and the same is true for inflation on inflation. In addition, the ALM

and the PLM are generally overlapping. However, looking at the cross terms, shocks

of output on inflation and shocks of inflation on output, we find that the learning

parameters drift form the RE solution, but also that perceptions do not always align

with reality. If one considers this in context of the traditional Phillips curve, that is

in the short run shocks to inflation should influence output this provides some insight

to how perceptions of this relationship have changed over time. Since the early 1980’s

perceptions suggest that shocks to inflation have a positive influence on output and

that has influenced the ALM. However, the opposite story is not true, perceptions of

shocks on output on inflation have at times implied a positive relationship and at other

17

a negative relationship.

4

Conclusion

We conclude by noting that models with learning present a challenging problem to

the econometrician. In practical applications, not only are the parameter restrictions

imposed by the solution stringent, but the structure implied by learning limits the

econometrician to a second-best estimate of the variables that the agents incorporate.

This paper highlights these issues and provides an accessible estimation guide that is

useful for the applied researcher. In particular, this is applicable to the growing body

of work on NK-DSGE models and suggests a promising future for the role of learning

in this area.

A

Appendix: TaRB-MH Algorithm

The two key components of the Tailored Randomized Block MH (TaRB-MH) algorithm

are randomized blocks and tailored proposal densities. In each MCMC iteration, one

begins by dividing θ into a random number of blocks, where each block comprises

random components of θ. For a given block, one then constructs a Student-t proposal

density (with low degrees of freedom, say, 15) centered at the posterior mode of the

block and variance calculated as the negative inverse of the Hessian at the mode. The

final step is to draw a value from this proposal density and accept it with the standard

MH probability.

To illustrate this prodecure for an MCMC iteration, suppose that θ is divided into B

blocks as (θ1 , . . . , θB ). Consider the update of the lth block, l = 1, . . . , B with current

value θl . Denoting the current value of the preceding blocks as θ−l = (θ1 , . . . , θl−1 ) and

the following blocks as θ+l = (θl+1 , . . . , θB )

1. Calculate

θl∗ = arg max log f (y|θ−l , θl , θ+l )π(θl )

θl

using a suitable numerical optimization procedure

2. Calculate the variance Vl∗ as the negative inverse of the Hessian evaluated at θl∗

3. Generate θlp ∼ t(θl∗ , Vl∗ , νl ) where νl denotes the degrees of freedom

4. Calculate the MH acceptance probability α as

f (y|θ−l , θlp , θ+l )π(θlp ) t(θl |θl∗ , Vl∗ , νl )

,1

α = min

f (y|θ−l , θl , θ+l )π(θl ) t(θlp |θl∗ , Vl∗ , νl )

5. Accept θlp if u ∼ U (0, 1) < α, else retain θl and repeat for the next block.

18

References

Branch, William A and George W Evans, “A simple recursive forecasting model,”

Economics Letters, 2006, 91 (2), 158–166.

Chevillon, G, M Massmann, and S Mavroeidis, “Inference in models with adaptive learning,” Journal of Monetary Economics, 2010, 57 (3), 341–351.

Chib, S and Srikanth Ramamurthy, “Tailored randomized-block MCMC methods

for analysis of DSGE models,” Journal of Econometrics, 2010, 155 (1), 19–38.

Eusepi, Stefano and Bruce Preston, “Expectations, Learning, and Business Cycle

Fluctuations,” American Economic Review, October 2011, 101 (6), 2844–2872.

Evans, George W and Seppo Honkapohja, Learning and expectations in macroeconomics, Princeton: Princeton University Press, January 2001.

and

, “Robust learning stability with operational monetary policy rules,” in

Karl Schmidt-Hebbel and Carl Walsh, eds., Monetary Policy under Uncertainty

and Learning, Santiago: Central Bank of Chile, 2009, pp. 145–170.

,

, and Kaushik Mitra, “Notes on Agents’ Behavioral Rules Under Adaptive Learning and Studies of Monetary Policy,” CDMA Working Paper Series,

2011.

Hommes, Cars H and Gerhard Sorger, “Consistent Expectations Equilibria,”

Macroeconomic Dynamics, July 1998, 2, 287–321.

Marcet, Albert and Thomas J Sargent, “Convergence of Least-Squares Learning

in Environments with Hidden State Variables and Private information,” Journal

of Political Economy, 1989, 97 (6), 1306–1322.

McCallum, Bennett T, “On Non-Uniqueness in Rational Expectations Models: An

Attempt at Perspective,” Journal of Monetary Economics, 1983, 11 (2), 139–168.

, “E-Stability vis-a-vis determinacy results for linear rational expectations models,” Journal of Economic Dynamics and Control, April 2007, 31 (4), 1376–1391.

Milani, Fabio, “Expectations, learning and macroeconomic persistence,” Journal of

Monetary Economics, 2007, 54 (7), 2065–2082.

, “The Modeling of Expectations in Empirical DSGE Models: a Survey,” Advance

in Econometrics, July 2012, 28, 3–38.

Orphanides, Athanasios and John C Williams, “The decline of activist stabilization policy: Natural rate misperceptions, learning, and expectations,” Journal of

Economic Dynamics and Control, 2005, 29 (11), 1927–1950.

Preston, Bruce, “Learning about monetary policy rules when long-horizon expectations matter,” International Journal Of Central Banking, 2005, 1 (2), 81–126.

19

Slobodyan, Sergey and Raf Wouters, “Estimating a medium-scale DSGE model

with expectations based on small forecasting models,” Journal of Economic Dynamics and Control, 2012, 36 (1), 26–46.

Taylor, John, “Discretion versus policy rules in practice,” Carnegie-Rochester conference series on public policy, 1993.

Woodford, Michael, Interest and prices: foundations of a theory of monetary policy,

Princeton: Princeton University Press, 2003.

20

λ

κ

β

param

α

0.3000

1.0000

0.5000

true

2.0000

0.6791

(0.0648)

1.0548

(0.1055)

model 1a

2.8051

2.6092

(0.5034)

(0.5155)

0.2592

0.2989

(0.1215)

(0.1231)

0.6722

(0.0706)

1.0517

(0.1053)

-289.6026

0.2249

(0.1389)

0.3191

(0.0923)

-4.0664

(6.5200)

2.0203

(3.4174)

-289.4089

0.2674

(0.0561)

0.6818

(0.0656)

0.8906

(0.0892)

0.4849

(0.0486)

0.0209

(0.0183)

4.4511

(1.2312)

1.5421

(0.5047)

-484.1486

-483.4043

0.2895

(0.0137)

0.6944

(0.0563)

0.8776

(0.0878)

0.4887

(0.0489)

0.031

(0.0340)

Table 1: MLE of parameters in Example 1

mle

model 1b

3.0149

3.0896

(0.4801)

(0.5238)

0.2197

0.2311

(0.1038)

(0.1198)

0.5000

0.6000

σ22

0.1000

ρ

γ

(1a)3.98; (1b)3.24; (1c)9.91

1.0000

a0

(1a)1.06; (1b)0.93; (1c)1.38

σ12

c0

log-likelihood

Note:

i. Model index: (a) λ = κ = 0, (b) λ 6= 0 and κ = 0, and (c) λ, κ 6= 0.

model 1c

2.25

2.2888

(0.4991) (0.5175)

0.4471

0.4534

(0.0521) (0.0550)

1.0452

1.0386

(0.0563) (0.0558)

0.3006

0.305

(0.0050) (0.0044)

0.655

0.6551

(0.0529) (0.0541)

1.1305

1.1298

(0.1133) (0.1131)

0.4482

0.4542

(0.0450) (0.0455)

0.1429

0.1192

(0.0543) (0.0577)

11.5107

(1.0887)

1.2684

(0.6009)

-500.2818 -501.678

ii. Results in the left column for each version of the model: Joint estimation of θ and φ0 using sample data 41:240. Results

in the right column for each version of the model: φ0 initialized using a training sample comprising observations 1:40 and

θ estimated using sample data 41:240.

21

22

-508.53

mean

2.5831

0.4107

1.0600

0.3045

0.6886

1.1496

0.4636

0.1420

n.s.e.

0.0170

0.0021

0.0013

0.0001

0.0021

0.0018

0.0007

0.0008

.025

1.7348

0.2965

0.9485

0.2931

0.5817

0.9455

0.3809

0.0550

posterior

.975

mean

3.4771

2.4788

0.5040

0.4145

1.1826

1.0698

0.3154

0.3002

0.8442

0.6754

1.3989

1.1473

0.5590

0.4646

0.2446

0.1619

11.4283

1.0916

-509.83

n.s.e.

0.0225

0.0019

0.0014

0.0002

0.0018

0.0018

0.0008

0.0011

0.0237

0.0125

.025

1.5849

0.3074

0.9535

0.2871

0.5684

0.9430

0.3779

0.0564

9.2875

-0.0455

.975

3.4452

0.5141

1.2000

0.3125

0.8054

1.3909

0.5725

0.2732

13.6527

2.1549

Note: .025 and .975 denote the quantiles. n.s.e. is the numerical standard error. Posterior summary in the left panel: φ0 initialized

using a training sample comprising observations 1:40 and θ estimated using sample data 41:240 Posterior summary in the right panel:

joint estimation of θ and φ0 using sample data 41:240.

prior

param

true

mean s.d.

dist.

α

2.00

1.00 2.00

N

0.50

1.00 2.50

N

β

κ

1.00

0.10 1.50

N

λ

0.30

1.00 1.50

N

ρ

0.60

0.00 0.58 U(-1,1)

σ12

1.00

0.75 2.00

IG

2

σ2

0.50

0.75 2.00

IG

γ

0.10

0.50 0.29 U(0,1)

a0

9.91

2.50 7.50

N

c0

1.38

0.00 1.50

N

posterior ordinate (unnormalized)

Table 2: Posterior Summary of Parameters in Example 1

1

−.5

.5

0

b0

−2.5

0

−1.5

5

1

−3

3

.5

17.5

1

0

10

−1

−.5

2.5

a0

−3

−1

−5

2

1

−12.5

1.5

1.5

3

2

3.5

1.5

−2

0

−1

.5

0

2

2

1

1

1.5

2

2

0

0

.25

.25

.5

.5

.75

.75

1

1

Figure 1: Marginal prior-posterior plots of the parameters. Red: prior, Green: posterior with training sample, Blue: posterior without training

sample.

23

Figure 2: Simulated REE, PLM and ALM plots for at (top) and ct (bottom) in Example 1.

Shaded regions show the 95% band (darker shade is for PLM).

24

δ

β

param

α

0.0500

0.8000

dgp

0.2000

0.0500

0.8000

γ

1.1627

ρ

a0

-0.0045

1.0000

b0

2.7191

σ2

c0

log-likelihood

-399.3193

n = 500

0.5493

0.5093

(0.3524)

(0.2868)

0.7903

0.8062

(0.0490)

(0.0292)

0.1031

0.0417

(0.0951)

(0.0434)

0.7925

0.8155

(0.0399)

(0.0291)

0.8645

0.9763

(0.2069)

(0.1578)

0.0150

0.0173

(0.0090)

(0.0112)

0.5666

(1.2949)

-0.1103

(0.1324)

3.4863

(1.0969)

-1012.0615 -1013.0774

Table 3: DGP and MLE of Parameters in Example 2

mle

200

n = 1000

0.4559

0.4082

0.2904

(0.4970)

(0.1832)

(0.1807)

0.8365

0.8297

0.8142

(0.0516)

(0.0248)

(0.0197)

0.0069

0.0121

0.0520

(0.0988)

(0.0455)

(0.0291)

0.8008

0.8280

0.8055

(0.0517)

(0.0208)

(0.0220)

1.0389

0.9971

0.9554

(0.2169)

(0.1582)

(0.1027)

0.0345

0.0149

0.0115

(0.0070)

(0.0050)

(0.0067)

0.5719

(1.5204)

-0.1567

(0.0724)

3.3489

(0.7073)

-2015.4323 -2016.0206

n=

0.6060

(0.6564)

0.8226

(0.0494)

0.0069

(0.0955)

0.8526

(0.0444)

0.8690

(0.3810)

0.0396

(0.0133)

0.6984

(1.9551)

-0.1629

(0.1334)

3.9071

(1.5364)

-399.9057

n = 2000

0.0985

0.1463

(0.0962)

(0.0859)

0.8124

0.8272

(0.0146)

(0.0135)

0.0532

0.0297

(0.0288)

(0.0232)

0.7973

0.8058

(0.0168)

(0.0156)

0.9494

0.9830

(0.1065)

(0.0862)

0.0105

0.0118

(0.0035)

(0.0036)

1.4647

(0.7033)

0.2283

(0.1944)

2.2857

(0.4052)

-3936.8494 -3936.7587

Note: For each sample size (n), the left column presents the mle of all parameters; the right column presents the mle of the model parameters.

In the latter case the learning parameters where set at their data generating values.

25

26

-966.48

mean

0.073

0.077

1.071

0.455

0.404

1.225

1.714

5.976

0.112

.025.

0.027

-0.060

0.936

0.408

0.328

0.991

1.386

4.830

0.092

.975

0.128

0.223

1.229

0.507

0.483

1.511

2.107

7.337

0.133

mean

0.072

0.111

1.030

0.489

0.369

1.137

1.647

6.133

0.124

1.410

0.125

1.000

-0.981

2.245

0.341

-964.69

posterior

.025

.975

0.027 0.131

-0.024 0.271

0.893 1.184

0.427 0.557

0.282 0.453

0.913 1.406

1.323 2.028

4.893 7.538

0.092 0.147

0.113 2.819

-1.108 1.424

-0.891 3.525

-2.099 0.192

0.800 3.906

-0.905 1.406

mean

0.201

0.291

1.272

0.786

0.518

0.082

1.954

4.612

0.069

0.308

-2.085

5.380

-0.216

0.269

-0.606

-1144.774

.025

0.134

0.134

1.111

0.708

0.457

0.048

1.601

3.728

0.062

-1.246

-2.649

3.928

-1.311

-0.335

-1.074

.975

0.265

0.443

1.439

0.874

0.581

0.130

2.370

5.675

0.075

2.309

-1.537

7.178

0.785

0.874

-0.138

Note: .025 and .975 denote the quantiles. Sample data for posterior summary: Left panel: 1964:III to 2007:I with initial estimates

of φ0 and R0 based on observations from 1954:III to 1964:II. Center panel: 1964:III to 2007:I. Right panel: 1954:III to 2007:I.

prior

param

mean s.d.

dist.

ψ

4.00 2.00

G

0.25 1.00

N

θx

θπ

1.00 1.00

N

–

–

U(-1,1)

ρx

ρπ

–

–

U(-1,1)

2

σx

0.75 2.00

IG

σπ2

0.75 2.00

IG

2

σi

0.75 2.00

IG

γ

–

–

U(0,1)

a0x

0.00 5.00

N

a0π

0.00 5.00

N

c0xx

0.00 5.00

N

c0πx

0.00 5.00

N

c0xπ

0.00 5.00

N

c0ππ

0.00 5.00

N

log-posterior ordinate (unnormalized)

Table 4: Prior and Posterior Summary of Parameters in the NK Model

−1

0

−.5

.5

0

x

2

0

a0x

1

−10

0

b0

0

−20

−2.5

1

−5

1

2

1.5

.75

.5

.25

0

6

x

−.5

4

−.25

−1

2

0

a0

−.75

0

−2.5

0

b0x2

1

3

−5

−2.5

.5

20

−5

2

i

10

5

2.5

2.5

2.5

0

.6

1

1.2

1.5

1.8

1

2

2.4

1.25

.5

.75

2

x

0

.5

1

.25

0

5

8

2.5

−2.5

2

0

b0x1

−5

5

0

b0

2.5

−1.25

1.25

−2.5

5

Figure 3: Marginal prior-posterior plots of the parameters in the NK model. Red: prior, Green: posterior with training sample, Blue: posterior

without training sample. Training sample: 1954:III to 1964:II. Estimation sample: 1964:III to 2007:I

27

Figure 4: Simulated REE, PLM and ALM plots for ct,xx and ct,πx in the NK model for

sample period 1964:III to 2007:I (center panel in Table 4). Shaded regions show the 95%

band (darker shade is for PLM).

28

Figure 4: (cont’d) Simulated REE, PLM and ALM plots for ct,xπ and ct,ππ in the NK model

for sample period 1964:III to 2007:I (center panel in Table 4). Shaded regions show the 95%

band (darker shade is for PLM).

29