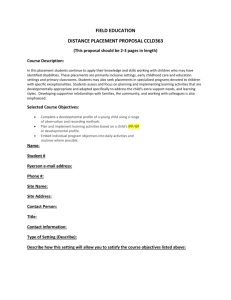

Improving Developmental Education Assessment and Placement:
