General-Purpose Code Acceleration with Limited-Precision Analog Computation

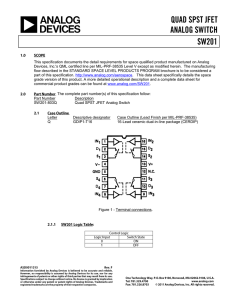
