STATEFUL-SWAPPING IN THE EMULAB NETWORK TESTBED

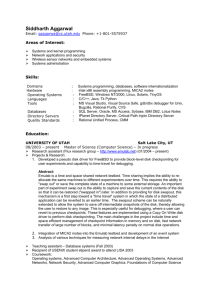
