
Bundle Adjustment — A Modern Synthesis

Bill Triggs¹, Philip F. McLauchlan², Richard I. Hartley³, and Andrew W. Fitzgibbon⁴

¹ INRIA Rhône-Alpes, 655 avenue de l’Europe, 38330 Montbonnot, France
Bill.Triggs@inrialpes.fr
http://www.inrialpes.fr/movi/people/Triggs

² School of Electrical Engineering, Information Technology & Mathematics, University of Surrey, Guildford, GU2 5XH, U.K.
P.McLauchlan@ee.surrey.ac.uk

³ General Electric CRD, Schenectady, NY, 12301
hartley@crd.ge.com

⁴ Dept of Engineering Science, University of Oxford, 19 Parks Road, OX1 3PJ, U.K.
awf@robots.ox.ac.uk

Abstract. This paper is a survey of the theory and methods of photogrammetric bundle adjustment, aimed at potential implementors in the computer vision community. Bundle adjustment is the problem of refining a visual reconstruction to produce jointly optimal structure and viewing parameter estimates. Topics covered include: the choice of cost function and robustness; numerical optimization including sparse Newton methods, linearly convergent approximations, updating and recursive methods; gauge (datum) invariance; and quality control. The theory is developed for general robust cost functions rather than restricting attention to traditional nonlinear least squares.

Keywords: Bundle Adjustment, Scene Reconstruction, Gauge Freedom, Sparse Matrices, Optimization.

1 Introduction

This paper is a survey of the theory and methods of bundle adjustment aimed at the computer vision community, and more especially at potential implementors who already know a little about bundle methods. Most of the results appeared long ago in the photogrammetry and geodesy literatures, but many seem to be little known in vision, where they are gradually being reinvented. By providing an accessible modern synthesis, we hope to forestall some of this duplication of effort, correct some common misconceptions, and speed progress in visual reconstruction by promoting interaction between the vision and photogrammetry communities.

Bundle adjustment is the problem of refining a visual reconstruction to produce jointly optimal 3D structure and viewing parameter (camera pose and/or calibration) estimates. Optimal means that the parameter estimates are found by minimizing some cost function that quantifies the model fitting error, and jointly that the solution is simultaneously optimal with respect to both structure and camera variations. The name refers to the ‘bundles’ of light rays leaving each 3D feature and converging on each camera centre, which are ‘adjusted’ optimally with respect to both feature and camera positions. Equivalently, unlike independent model methods, which merge partial reconstructions without updating their internal structure, all of the structure and camera parameters are adjusted together ‘in one bundle’.

This work was supported in part by the European Commission Esprit LTR project Cumuli (B. Triggs), the UK EPSRC project GR/L34099 (P. McLauchlan), and the Royal Society (A. Fitzgibbon). We would like to thank A. Zisserman, A. Grün and W. Förstner for valuable comments and references.

B. Triggs, A. Zisserman, R. Szeliski (Eds.): Vision Algorithms’99, LNCS 1883, pp. 298–372, 2000.
© Springer-Verlag Berlin Heidelberg 2000

Bundle adjustment is really just a large sparse geometric parameter estimation problem, the parameters being the combined 3D feature coordinates, camera poses and calibrations. Almost everything that we will say can be applied to many similar estimation problems in vision, photogrammetry, industrial metrology, surveying and geodesy. Adjustment computations are a major common theme throughout the measurement sciences, and once the basic theory and methods are understood, they are easy to adapt to a wide variety of problems. Adaptation is largely a matter of choosing a numerical optimization scheme that exploits the problem structure and sparsity. We will consider several such schemes below for bundle adjustment.

Classically, bundle adjustment and similar adjustment computations are formulated as nonlinear least squares problems [19, 46, 100, 21, 22, 69, 5, 73, 109]. The cost function is assumed to be quadratic in the feature reprojection errors, and robustness is provided by explicit outlier screening. Although it is already very flexible, this model is not really general enough. Modern systems often use non-quadratic M-estimator-like distributional models to handle outliers more integrally, and many include additional penalties related to overfitting, model selection and system performance (priors, MDL). For this reason, we will not assume a least squares / quadratic cost model. Instead, the cost will be modelled as a sum of opaque contributions from the independent information sources (individual observations, prior distributions, overfitting penalties …). The functional forms of these contributions and their dependence on fixed quantities such as observations will usually be left implicit. This allows many different types of robust and non-robust cost contributions to be incorporated, without unduly cluttering the notation or hiding essential model structure. It fits well with modern sparse optimization methods (cost contributions are usually sparse functions of the parameters) and object-centred software organization, and it avoids many tedious displays of chain-rule results. Implementors are assumed to be capable of choosing appropriate functions and calculating derivatives themselves.
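As a minimal sketch of this organization (in Python, with hypothetical helper names that do not come from any particular bundle package), each cost contribution can be an opaque callable of the full state, and the total cost is simply their sum. The three term types shown are illustrative assumptions: a non-robust squared error, a robust Cauchy-style term, and a prior.

```python
import math
from typing import Callable, Sequence

# A cost contribution is an opaque function of the whole state vector x.
# Its functional form and any fixed data it depends on (observations,
# prior means, scales) live inside the closure and are not exposed.
CostTerm = Callable[[Sequence[float]], float]


def total_cost(terms: Sequence[CostTerm], x: Sequence[float]) -> float:
    """f(x): the sum of independent cost contributions."""
    return sum(term(x) for term in terms)


def squared_error_term(index: int, observed: float, sigma: float) -> CostTerm:
    """Hypothetical non-robust term 0.5 * ((observed - x[index]) / sigma)^2."""
    def term(x: Sequence[float]) -> float:
        r = (observed - x[index]) / sigma
        return 0.5 * r * r
    return term


def cauchy_error_term(index: int, observed: float, scale: float) -> CostTerm:
    """Hypothetical robust term log(1 + ((observed - x[index]) / scale)^2)."""
    def term(x: Sequence[float]) -> float:
        r = (observed - x[index]) / scale
        return math.log1p(r * r)
    return term


def prior_term(index: int, mean: float, sigma: float) -> CostTerm:
    """Hypothetical prior pulling x[index] towards an expected value."""
    def term(x: Sequence[float]) -> float:
        r = (x[index] - mean) / sigma
        return 0.5 * r * r
    return term


terms = [squared_error_term(0, 1.0, 0.5), prior_term(0, 0.0, 1.0)]
f_at_one = total_cost(terms, [1.0])  # 0.0 from the observation + 0.5 from the prior
```

New kinds of information (a GPS reading, a calibration prior) are added by appending another term, without touching the optimizer; in a real system each term would also supply its own derivatives.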

One aim of this paper is to correct a number of misconceptions that seem to be common in the vision literature:

• “Optimization / bundle adjustment is slow”: Such statements often appear in papers introducing yet another heuristic Structure from Motion (SFM) iteration. The claimed slowness is almost always due to the unthinking use of a general-purpose optimization routine that completely ignores the problem structure and sparseness. Real bundle routines are much more efficient than this, and usually considerably more efficient and flexible than the newly suggested method (§6, 7). That is why bundle adjustment remains the dominant structure refinement technique for real applications, after 40 years of research.

• “Only linear algebra is required”: This is a recent variant of the above, presumably meant to imply that the new technique is especially simple. Virtually all iterative refinement techniques use only linear algebra, and bundle adjustment is simpler than many in that it only solves linear systems: it makes no use of eigen-decomposition or SVD, which are themselves complex iterative methods.


• “Any sequence can be used”: Many vision workers seem to be very resistant to the idea that reconstruction problems should be planned in advance (§11), and results checked afterwards to verify their reliability (§10). System builders should at least be aware of the basic techniques for this, even if application constraints make it difficult to use them. The extraordinary extent to which weak geometry and lack of redundancy can mask gross errors is too seldom appreciated, cf. [34, 50, 30, 33].

• “Point P is reconstructed accurately”: In reconstruction, just as there are no absolute references for position, there are none for uncertainty. The 3D coordinate frame is itself uncertain, as it can only be located relative to uncertain reconstructed features or cameras. All other feature and camera uncertainties are expressed relative to the frame and inherit its uncertainty, so statements about them are meaningless until the frame and its uncertainty are specified. Covariances can look completely different in different frames, particularly in object-centred versus camera-centred ones. See §9.

There is a tendency in vision to develop a profusion of ad hoc adjustment iterations. Why should you use bundle adjustment rather than one of these methods?

• Flexibility: Bundle adjustment gracefully handles a very wide variety of different 3D feature and camera types (points, lines, curves, surfaces, exotic cameras), scene types (including dynamic and articulated models, scene constraints), information sources (2D features, intensities, 3D information, priors) and error models (including robust ones). It has no problems with missing data.

• Accuracy: Bundle adjustment gives precise and easily interpreted results because it uses accurate statistical error models and supports a sound, well-developed quality control methodology.

• Efficiency: Mature bundle algorithms are comparatively efficient even on very large problems. They use economical and rapidly convergent numerical methods and make near-optimal use of problem sparseness.

In general, as computer vision reconstruction technology matures, we expect that bundle adjustment will predominate over alternative adjustment methods in much the same way as it has in photogrammetry. We see this as an inevitable consequence of a greater appreciation of optimization (notably, more effective use of problem structure and sparseness), and of systems issues such as quality control and network design.

Coverage: We will touch on a good many aspects of bundle methods. We start by considering the camera projection model and the parametrization of the bundle problem (§2), and the choice of error metric or cost function (§3). §4 gives a rapid sketch of the optimization theory we will use. §5 discusses the network structure (parameter interactions and characteristic sparseness) of the bundle problem. The following three sections consider three types of implementation strategies for adjustment computations: §6 covers second order Newton-like methods, which are still the most often used adjustment algorithms; §7 covers methods with only first order convergence (most of the ad hoc methods are in this class); and §8 discusses solution updating strategies and recursive filtering bundle methods. §9 returns to the theoretical issue of gauge freedom (datum deficiency), including the theory of inner constraints. §10 goes into some detail on quality control methods for monitoring the accuracy and reliability of the parameter estimates. §11 gives some brief hints on network design, i.e. how to place your shots to ensure accurate, reliable reconstruction. §12 completes the body of the paper by summarizing the main conclusions and giving some provisional recommendations for methods. There are also several appendices. §A gives a brief historical overview of the development of bundle methods, with literature references. §B gives some technical details of matrix factorization, updating and covariance calculation methods. §C gives some hints on designing bundle software, and pointers to useful resources on the Internet. The paper ends with a glossary and references.

General references: Cultural differences sometimes make it difficult for vision workers to read the photogrammetry literature. The collection edited by Atkinson [5] and the manual by Karara [69] are both relatively accessible introductions to close-range (rather than aerial) photogrammetry. Other accessible tutorial papers include [46, 21, 22]. Kraus [73] is probably the most widely used photogrammetry textbook. Brown’s early survey of bundle methods [19] is well worth reading. The often-cited manual edited by Slama [100] is now quite dated, although its presentation of bundle adjustment is still relevant. Wolf & Ghilani [109] is a text devoted to adjustment computations, with an emphasis on surveying. Hartley & Zisserman [62] is an excellent recent textbook covering vision geometry from a computer vision viewpoint. For nonlinear optimization, Fletcher [29] and Gill et al. [42] are the traditional texts, and Nocedal & Wright [93] is a good modern introduction. For linear least squares, Björck [11] is superlative, and Lawson & Hanson is a good older text. For more general numerical linear algebra, Golub & Van Loan [44] is the standard. Duff et al. [26] and George & Liu [40] are the standard texts on sparse matrix techniques. We will not discuss initialization methods for bundle adjustment in detail, but appropriate reconstruction methods are plentiful and well-known in the vision community. See, e.g., [62] for references.

Notation: The structure, cameras, etc., being estimated will be parametrized by a single large state vector $x$. In general the state belongs to a nonlinear manifold, but we linearize this locally and work with small linear state displacements denoted $\delta x$. Observations (e.g. measured image features) are denoted $\underline{z}$. The corresponding predicted values at parameter value $x$ are denoted $z = z(x)$, with residual prediction error $\triangle z(x) \equiv \underline{z} - z(x)$. However, observations and prediction errors usually only appear implicitly, through their influence on the cost function $f(x) = f(\mathrm{pred}\,z(x))$. The cost function’s gradient is $g \equiv \frac{df}{dx}$, and its Hessian is $H \equiv \frac{d^2 f}{dx^2}$. The observation–state Jacobian is $J \equiv \frac{dz}{dx}$. The dimensions of $\delta x$, $\delta z$ are $n_x$, $n_z$.
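For orientation only, here is how these quantities look in one special case that the paper deliberately does not assume in general: an unweighted nonlinear least squares cost $f(x) = \tfrac12 \triangle z(x)^\top \triangle z(x)$. Since $\triangle z = \underline{z} - z(x)$, we have $\frac{d\,\triangle z}{dx} = -J$, and the chain rule gives (writing $g$ as a column vector):

$$
g = -J^\top \triangle z, \qquad
H = J^\top J - \sum_{k=1}^{n_z} \triangle z_k \, \frac{d^2 z_k}{dx^2}.
$$

Dropping the second-derivative sum leaves the familiar Gauss-Newton approximation $H \approx J^\top J$, which is accurate when the residuals $\triangle z_k$ are small or the prediction model $z(x)$ is nearly linear.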

2 Projection Model and Problem Parametrization

2.1 The Projection Model

We begin the development of bundle adjustment by considering the basic image projection model and the issue of problem parametrization. Visual reconstruction attempts to recover a model of a 3D scene from multiple images. As part of this, it usually also recovers the poses (positions and orientations) of the cameras that took the images, and information about their internal parameters. A simple scene model might be a collection of isolated 3D features, e.g., points, lines, planes, curves, or surface patches. However, far more complicated scene models are possible, involving, e.g., complex objects linked by constraints or articulations, photometry as well as geometry, dynamics, etc. One of the great strengths of adjustment computations (and one reason for thinking that they have a considerable future in vision) is their ability to take such complex and heterogeneous models in their stride. Almost any predictive parametric model can be handled, i.e. any model that predicts the values of some known measurements or descriptors on the basis of some continuous parametric representation of the world, which is to be estimated from the measurements.


Similarly, many possible camera models exist. Perspective projection is the standard, but the affine and orthographic projections are sometimes useful for distant cameras, and more exotic models such as push-broom and rational polynomial cameras are needed for certain applications [56, 63]. In addition to pose (position and orientation), and simple internal parameters such as focal length and principal point, real cameras also require various types of additional parameters to model internal aberrations such as radial distortion [17–19, 100, 69, 5].

For simplicity, suppose that the scene is modelled by individual static 3D features $X_p$, $p = 1 \dots n$, imaged in $m$ shots with camera pose and internal calibration parameters $P_i$, $i = 1 \dots m$. There may also be further calibration parameters $C_c$, $c = 1 \dots k$, constant across several images (e.g., depending on which of several cameras was used). We are given uncertain measurements $\underline{x}_{ip}$ of some subset of the possible image features $x_{ip}$ (the true image of feature $X_p$ in image $i$). For each observation $\underline{x}_{ip}$, we assume that we have a predictive model $x_{ip} = x(C_c, P_i, X_p)$ based on the parameters, that can be used to derive a feature prediction error:

$$\triangle x_{ip}(C_c, P_i, X_p) \equiv \underline{x}_{ip} - x(C_c, P_i, X_p) \qquad (1)$$

In the case of image observations the predictive model is image projection, but other observation types such as 3D measurements can also be included.
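To make (1) concrete, the following Python sketch assumes a hypothetical minimal model: the shared calibration $C_c$ is just a focal length, the pose $P_i$ is a rotation matrix R and translation t mapping world to camera coordinates, and $X_p$ is an affine 3D point. Real cameras add a principal point, distortion and other parameters; the function names are illustrative only.

```python
from typing import Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def project(focal: float, R: Mat3, t: Vec3, X: Vec3) -> Vec2:
    """Hypothetical pinhole prediction x(C_c, P_i, X_p).

    C_c = focal length, P_i = (R, t) with camera coords Xc = R X + t,
    X_p = affine 3D point. Returns the predicted image point."""
    Xc = [sum(R[r][k] * X[k] for k in range(3)) + t[r] for r in range(3)]
    if Xc[2] == 0.0:
        raise ValueError("point lies in the camera's focal plane")
    return (focal * Xc[0] / Xc[2], focal * Xc[1] / Xc[2])


def prediction_error(observed: Vec2, focal: float, R: Mat3, t: Vec3,
                     X: Vec3) -> Vec2:
    """Feature prediction error (1): observed minus predicted image point."""
    u, v = project(focal, R, t, X)
    return (observed[0] - u, observed[1] - v)


I3: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
err = prediction_error((21.0, 39.0), 100.0, I3, (0.0, 0.0, 5.0), (1.0, 2.0, 0.0))
# err == (1.0, -1.0): the point projects to (20, 40) but was observed at (21, 39)
```

A bundle adjuster would evaluate something like prediction_error once per observed (i, p) pair and pass the results to the cost; only the relevant $C_c$, $P_i$ and $X_p$ enter each call.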

To estimate the unknown 3D feature and camera parameters from the observations, and hence reconstruct the scene, we minimize some measure (discussed in §3) of their total prediction error. Bundle adjustment is the model refinement part of this, starting from given initial parameter estimates (e.g., from some approximate reconstruction method). Hence, it is essentially a matter of optimizing a complicated nonlinear cost function (the total prediction error) over a large nonlinear parameter space (the scene and camera parameters).

We will not go into the analytical forms of the various possible feature and image projection models, as these do not affect the general structure of the adjustment network, and only tend to obscure its central simplicity. We simply stress that the bundle framework is flexible enough to handle almost any desired model. Indeed, there are so many different combinations of features, image projections and measurements, that it is best to regard them as black boxes, capable of giving measurement predictions based on their current parameters. (For optimization, first, and possibly second, derivatives with respect to the parameters are also needed.)

For much of the paper we will take quite an abstract view of this situation, collecting the scene and camera parameters to be estimated into a large state vector $x$, and representing the cost (total fitting error) as an abstract function $f(x)$. The cost is really a function of the feature prediction errors $\triangle x_{ip} = \underline{x}_{ip} - x(C_c, P_i, X_p)$. But as the observations $\underline{x}_{ip}$ are constants during an adjustment calculation, we leave the cost’s dependence on them and on the projection model $x(\cdot)$ implicit, and display only its dependence on the parameters $x$ actually being adjusted.
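One simple way to realize this abstraction is to concatenate all parameter blocks into one flat vector and keep index maps back to each entity. This Python sketch assumes, hypothetically, 6 pose parameters per camera and 3 coordinates per point (§2.2 below recommends homogeneous coordinates for distant points, which would make POINT_DIM 4); the layout, cameras first and then points, is an illustrative choice.

```python
from typing import List, Sequence

CAM_DIM = 6    # hypothetical: 3 rotation + 3 translation parameters per camera
POINT_DIM = 3  # hypothetical: (X, Y, Z) per point; 4 for homogeneous points


def pack_state(cameras: Sequence[Sequence[float]],
               points: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate all camera blocks, then all point blocks, into x."""
    x: List[float] = []
    for cam in cameras:
        if len(cam) != CAM_DIM:
            raise ValueError("camera block has the wrong size")
        x.extend(cam)
    for pt in points:
        if len(pt) != POINT_DIM:
            raise ValueError("point block has the wrong size")
        x.extend(pt)
    return x


def camera_block(i: int) -> slice:
    """Indices of camera i's parameters P_i within x."""
    return slice(i * CAM_DIM, (i + 1) * CAM_DIM)


def point_block(p: int, n_cameras: int) -> slice:
    """Indices of point p's parameters X_p within x (points follow cameras)."""
    start = n_cameras * CAM_DIM + p * POINT_DIM
    return slice(start, start + POINT_DIM)


x = pack_state([[0.0] * 6, [1.0] * 6], [[2.0, 3.0, 4.0]])
# len(x) == 15; x[point_block(0, 2)] == [2.0, 3.0, 4.0]
```

Because each image observation depends on only one camera block and one point block, the derivative of a single prediction error with respect to $x$ is zero outside those two slices; this is the sparsity that efficient bundle methods exploit.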

2.2 Bundle Parametrization

The bundle adjustment parameter space is generally a high-dimensional nonlinear manifold: a large Cartesian product of projective 3D feature, 3D rotation, and camera calibration manifolds, perhaps with nonlinear constraints, etc. The state x is not strictly speaking a vector, but rather a point in this space. Depending on how the entities that it contains are represented, x can be subject to various types of complications including singularities, internal constraints, and unwanted internal degrees of freedom. These arise because geometric entities like rotations, 3D lines, and even projective points and planes do not have simple global parametrizations. Their local parametrizations are nonlinear, with singularities that prevent them from covering the whole parameter space uniformly (e.g. the many variants on Euler angles for rotations, the singularity of affine point coordinates at infinity). And their global parametrizations either have constraints (e.g. quaternions with $\|q\|^2 = 1$), or unwanted internal degrees of freedom (e.g. homogeneous projective quantities have a scale factor freedom, two points defining a line can slide along the line). For more complicated compound entities such as matching tensors and assemblies of 3D features linked by coincidence, parallelism or orthogonality constraints, parametrization becomes even more delicate.

Fig. 1. Vision geometry and its error model are essentially projective. Affine parametrization introduces an artificial singularity at projective infinity, which may cause numerical problems for distant features.

Although they are in principle equivalent, different parametrizations often have profoundly different numerical behaviours which greatly affect the speed and reliability of the adjustment iteration. The most suitable parametrizations for optimization are as uniform, finite and well-behaved as possible near the current state estimate. Ideally, they should be locally close to linear in terms of their effect on the chosen error model, so that the cost function is locally nearly quadratic. Nonlinearity hinders convergence by reducing the accuracy of the second order cost model used to predict state updates (§6). Excessive correlations and parametrization singularities cause ill-conditioning and erratic numerical behaviour. Large or infinite parameter values can only be reached after excessively many finite adjustment steps.

Any given parametrization will usually only be well-behaved in this sense over a relatively small section of state space. So to guarantee uniformly good performance, however the state itself may be represented, state updates should be evaluated using a stable local parametrization based on increments from the current estimate. As examples we consider 3D points and rotations.

3D points: Even for calibrated cameras, vision geometry and visual reconstructions are intrinsically projective. If a 3D (X Y Z) parametrization (or equivalently a homogeneous affine (X Y Z 1) one) is used for very distant 3D points, large X, Y, Z displacements are needed to change the image significantly. I.e., in (X Y Z) space the cost function becomes very flat and steps needed for cost adjustment become very large for distant points. In comparison, with a homogeneous projective parametrization (X Y Z W), the behaviour near infinity is natural, finite and well-conditioned so long as the normalization keeps the homogeneous 4-vector finite at infinity (by sending $W \to 0$ there). In fact, there is no immediate visual distinction between the images of real points near infinity and virtual ones ‘beyond’ it (all camera geometries admit such virtual points as bona fide projective constructs). The optimal reconstruction of a real 3D point may even be virtual in this sense, if image noise happens to push it ‘across infinity’. Also, there is nothing to stop a reconstructed point wandering beyond infinity and back during the optimization. This sounds bizarre at first, but it is an inescapable consequence of the fact that the natural geometry and error model for visual reconstruction is projective rather than affine. Projectively, infinity is just like any other place. Affine parametrization (X Y Z 1) is acceptable for points near the origin with close-range convergent camera geometries, but it is disastrous for distant ones because it artificially cuts away half of the natural parameter space, and hides the fact by sending the resulting edge to infinite parameter values. Instead, you should use a homogeneous parametrization (X Y Z W) for distant points, e.g. with spherical normalization $\sum_i X_i^2 = 1$.
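The effect can be seen numerically. This Python sketch (helper names hypothetical) places a point at distance $10^6$ along the direction (1, 1, 1). Its affine coordinates are huge, while its spherically normalized homogeneous coordinates stay finite with W close to 0, and its image under the hypothetical camera P = [I | 0] at the origin is unchanged.

```python
import math
from typing import Tuple

Vec4 = Tuple[float, float, float, float]


def normalize_homogeneous(X: Vec4) -> Vec4:
    """Spherical normalization: rescale so that X1^2 + X2^2 + X3^2 + X4^2 = 1."""
    n = math.sqrt(sum(c * c for c in X))
    if n == 0.0:
        raise ValueError("the zero 4-vector is not a homogeneous point")
    return (X[0] / n, X[1] / n, X[2] / n, X[3] / n)


def project_identity_camera(X: Vec4) -> Tuple[float, float]:
    """Image under the hypothetical camera P = [I | 0]: P X = (X, Y, Z),
    so W does not enter and points at infinity (W = 0) project finitely."""
    return (X[0] / X[2], X[1] / X[2])


def affine_point(depth: float) -> Vec4:
    """Hypothetical point at `depth` along (1, 1, 1), in affine form (X Y Z 1)."""
    return (depth, depth, depth, 1.0)


far = normalize_homogeneous(affine_point(1e6))          # W component about 5.8e-7
at_infinity = normalize_homogeneous((1.0, 1.0, 1.0, 0.0))
beyond = normalize_homogeneous((1.0, 1.0, 1.0, -1e-6))  # just 'beyond infinity'
```

All three 4-vectors are unit length and project to the same image point (1, 1). Moving from `far` to `beyond` is a tiny change in W, whereas in affine coordinates the same move is a jump from about (10^6, 10^6, 10^6) to about (-10^6, -10^6, -10^6).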

Rotations: Similarly, experience suggests that quasi-global 3 parameter rotation parametrizations such as Euler angles cause numerical problems unless one can be certain to avoid their singularities and regions of uneven coverage. Rotations should be parametrized using either quaternions subject to $\|q\|^2 = 1$, or local perturbations $R\,\delta R$ or $\delta R\,R$ of an existing rotation $R$, where $\delta R$ can be any well-behaved 3 parameter small rotation approximation, e.g. $\delta R = (I + [\delta r]_\times)$, the Rodrigues formula, local Euler angles, etc.
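The difference between the first order approximation $I + [\delta r]_\times$ and the exact Rodrigues formula is easy to check. In this Python sketch (plain nested lists, helper names hypothetical), the first order update has an orthogonality error of order $\|\delta r\|^2$, while the Rodrigues rotation is orthogonal up to rounding; a local perturbation $R\,\delta R$ is then just a matrix product.

```python
import math
from typing import List, Sequence

Mat = List[List[float]]


def identity() -> Mat:
    return [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]


def skew(r: Sequence[float]) -> Mat:
    """Cross-product matrix [r]_x, so that [r]_x v equals the cross product r x v."""
    return [[0.0, -r[2], r[1]],
            [r[2], 0.0, -r[0]],
            [-r[1], r[0], 0.0]]


def matmul(A: Mat, B: Mat) -> Mat:
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def first_order_update(r: Sequence[float]) -> Mat:
    """dR = I + [r]_x: cheap, but a rotation only to first order in |r|."""
    I, S = identity(), skew(r)
    return [[I[i][j] + S[i][j] for j in range(3)] for i in range(3)]


def rodrigues(r: Sequence[float]) -> Mat:
    """Exact rotation by angle |r| about the axis r / |r| (Rodrigues formula)."""
    theta = math.sqrt(sum(c * c for c in r))
    if theta < 1e-12:
        return first_order_update(r)  # O(theta^2) error is below rounding here
    I, S = identity(), skew(r)
    S2 = matmul(S, S)
    a = math.sin(theta) / theta
    b = (1.0 - math.cos(theta)) / (theta * theta)
    return [[I[i][j] + a * S[i][j] + b * S2[i][j] for j in range(3)]
            for i in range(3)]


def orthogonality_error(R: Mat) -> float:
    """Largest entry of |R^T R - I|; zero for an exact rotation matrix."""
    RtR = [[sum(R[k][i] * R[k][j] for k in range(3)) for j in range(3)]
           for i in range(3)]
    I = identity()
    return max(abs(RtR[i][j] - I[i][j]) for i in range(3) for j in range(3))


# Local perturbation R -> R dR of an existing rotation R:
R = rodrigues([0.3, -0.2, 0.1])
R_new = matmul(R, rodrigues([0.01, 0.0, -0.02]))
```

For $\delta r = (0, 0, 0.1)$ the first order update gives $R^\top R - I$ with diagonal entries 0.01, which is why such updates must be re-orthogonalized or replaced by an exact formula before being accumulated into the state.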

State updates: Just as state vectors $x$ represent points in some nonlinear space, state updates $x \to x + \delta x$ represent displacements in this nonlinear space that often cannot be represented exactly by vector addition. Nevertheless, we assume that we can locally linearize the state manifold, locally resolving any internal constraints and freedoms that it may be subject to, to produce an unconstrained vector $\delta x$ parametrizing the possible local state displacements. We can then, e.g., use Taylor expansion in $\delta x$ to form a local cost model

$$f(x + \delta x) \approx f(x) + \frac{df}{dx}\,\delta x + \frac{1}{2}\,\delta x^\top \frac{d^2 f}{dx^2}\,\delta x,$$

from which we can estimate the state update $\delta x$ that optimizes this model (§4). The displacement $\delta x$ need not have the same structure or representation as $x$ (indeed, if a well-behaved local parametrization is used to represent $\delta x$, it generally will not), but we must at least be able to update the state with the displacement to produce a new state estimate. We write this operation as $x \to x + \delta x$, even though it may involve considerably more than vector addition. For example, apart from the change of representation, an updated quaternion $q \to q + dq$ will need to have its normalization $\|q\|^2 = 1$ corrected, and a small rotation update of the form $R \to R\,(I + [\,r\,]_\times)$ will not in general give an exact rotation matrix.
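Both kinds of update mentioned here can be sketched for quaternions in Python (helper names hypothetical; the Hamilton convention $q = (w, x, y, z)$ is an assumption). The first performs the additive update and then restores $\|q\|^2 = 1$; the second applies a 3 parameter local displacement $\delta r$ as $q \otimes \exp(\delta r / 2)$, the quaternion analogue of $R\,\delta R$, so that the displacement has a different size and representation from the state it updates.

```python
import math
from typing import Sequence, Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), Hamilton convention


def normalized(q: Sequence[float]) -> Quat:
    n = math.sqrt(sum(c * c for c in q))
    if n == 0.0:
        raise ValueError("the zero quaternion does not represent a rotation")
    return (q[0] / n, q[1] / n, q[2] / n, q[3] / n)


def additive_update(q: Quat, dq: Quat) -> Quat:
    """q -> q + dq, then re-impose the constraint ||q||^2 = 1."""
    return normalized([a + b for a, b in zip(q, dq)])


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def local_update(q: Quat, dr: Sequence[float]) -> Quat:
    """q -> q * exp(dr / 2): a 3-parameter rotation increment dr
    (axis dr / |dr|, angle |dr|) applied on the right, like R -> R dR."""
    theta = math.sqrt(sum(c * c for c in dr))
    if theta < 1e-12:
        dq = (1.0, 0.5 * dr[0], 0.5 * dr[1], 0.5 * dr[2])  # first order
    else:
        s = math.sin(0.5 * theta) / theta
        dq = (math.cos(0.5 * theta), s * dr[0], s * dr[1], s * dr[2])
    # Renormalize so rounding errors do not accumulate over many updates.
    return normalized(quat_mul(q, dq))


q1 = additive_update((1.0, 0.0, 0.0, 0.0), (0.0, 0.1, 0.0, 0.0))
q2 = local_update((1.0, 0.0, 0.0, 0.0), [0.0, 0.0, math.pi])  # half turn about z
```

Without the final normalization, the raw sum in `additive_update` would have norm $\sqrt{1.01}$ and would no longer represent a pure rotation.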

3 Error Modelling

We now turn to the choice of the cost function f(x), which quantifies the total prediction (image reprojection) error of the model parametrized by the combined scene and camera parameters x. Our main conclusion will be that robust statistically-based error metrics based on total (inlier + outlier) log likelihoods should be used, to correctly allow for the presence of outliers. We will argue this at some length as it seems to be poorly understood. The traditional treatments of adjustment methods consider only least squares (albeit with data trimming for robustness), and most discussions of robust statistics give the impression that the choice of robustifier or M-estimator is wholly a matter of personal whim rather than data statistics.
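A one-dimensional toy example shows why the error model matters. Assuming, for illustration only, four inliers near 0 and one gross outlier at 10, the ML location estimate under a Gaussian error model is dragged to the sample mean, while under a heavy-tailed Cauchy model (chosen here simply as one example of a distribution with outlier-like tails, not as a recommendation) it stays with the inliers. The grid search is a deliberately naive optimizer used only to avoid local minima in this tiny problem.

```python
import math
from typing import Callable, Sequence


def gaussian_nll(r: float, sigma: float = 1.0) -> float:
    """-log N(r; 0, sigma^2), up to an additive constant."""
    return 0.5 * (r / sigma) ** 2


def cauchy_nll(r: float, scale: float = 1.0) -> float:
    """-log Cauchy(r; 0, scale), up to an additive constant."""
    return math.log1p((r / scale) ** 2)


def ml_location(data: Sequence[float], nll: Callable[[float], float],
                lo: float, hi: float, steps: int = 20000) -> float:
    """Brute-force ML estimate of a location parameter mu on a grid.

    The 1-D Cauchy cost can have several local minima, so a grid is the
    simplest safe choice here; real bundle adjusters do not work this way."""
    best_mu, best_cost = lo, math.inf
    for k in range(steps + 1):
        mu = lo + (hi - lo) * k / steps
        cost = sum(nll(z - mu) for z in data)
        if cost < best_cost:
            best_mu, best_cost = mu, cost
    return best_mu


data = [0.1, -0.2, 0.0, 0.15, 10.0]           # hypothetical: last value is a blunder
mu_gauss = ml_location(data, gaussian_nll, -5.0, 15.0)   # close to the mean, 2.01
mu_cauchy = ml_location(data, cauchy_nll, -5.0, 15.0)    # stays near the inliers
```

Both estimates are ‘maximum likelihood’; they differ only in the assumed observation density, which is the point made in the text: the robustifier should reflect the actual inlier and outlier statistics.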

Bundle adjustment is essentially a parameter estimation problem. Any parameter estimation paradigm could be used, but we will consider only optimal point estimators, whose output is by definition the single parameter vector that minimizes a predefined cost function designed to measure how well the model fits the observations and background knowledge. This framework covers many practical estimators including maximum likelihood (ML) and maximum a posteriori (MAP), but not explicit Bayesian model averaging. Robustification, regularization and model selection terms are easily incorporated in the cost.


A typical ML cost function would be the summed negative log likelihoods of the prediction errors of all the observed image features. For Gaussian error distributions, this reduces to the sum of squared covariance-weighted prediction errors (§3.2). A MAP estimator would typically add cost terms giving certain structure or camera calibration parameters a bias towards their expected values.
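For a 2D image feature with an assumed Gaussian error covariance $\Sigma$, the negative log likelihood of the prediction error $r$ is $\tfrac12 r^\top \Sigma^{-1} r$ plus a constant that does not depend on the parameters, so summing over features gives the covariance-weighted squared error cost. A small Python check (function names hypothetical):

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]
Cov2 = Tuple[Tuple[float, float], Tuple[float, float]]


def inverse_2x2(S: Cov2) -> Cov2:
    (a, b), (c, d) = S
    det = a * d - b * c
    if det <= 0.0:  # basic sanity check only; a covariance must be positive definite
        raise ValueError("covariance must be positive definite")
    return ((d / det, -b / det), (-c / det, a / det))


def weighted_sq_error(r: Vec2, S: Cov2) -> float:
    """0.5 * r^T S^{-1} r: the covariance-weighted squared prediction error."""
    W = inverse_2x2(S)
    return 0.5 * (r[0] * (W[0][0] * r[0] + W[0][1] * r[1])
                  + r[1] * (W[1][0] * r[0] + W[1][1] * r[1]))


def gaussian_nll_2d(r: Vec2, S: Cov2) -> float:
    """Full negative log density -log N(r; 0, S) for a 2D residual."""
    (a, b), (c, d) = S
    det = a * d - b * c
    return weighted_sq_error(r, S) + 0.5 * math.log((2.0 * math.pi) ** 2 * det)


S = ((4.0, 0.0), (0.0, 1.0))  # hypothetical: sigma_u = 2 pixels, sigma_v = 1 pixel
r = (2.0, 1.0)
```

Here `weighted_sq_error(r, S)` is 1.0 and `gaussian_nll_2d(r, S)` is $1 + \log(4\pi)$. Because the constant term depends only on $\Sigma$, it can be dropped while the covariances are held fixed; it matters only when comparing models or estimating $\Sigma$ itself.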

The cost function is also a tool for statistical interpretation. To the extent that lower costs are uniformly ‘better’, it provides a natural model preference ordering, so that cost iso-surfaces above the minimum define natural confidence regions. Locally, these regions are nested ellipsoids centred on the cost minimum, with size and shape characterized by the dispersion matrix (the inverse of the cost function Hessian $H = \frac{d^2 f}{dx^2}$ at the minimum). Also, the residual cost at the minimum can be used as a test statistic for model validity (§10). E.g., for a negative log likelihood cost model with Gaussian error distributions, twice the residual is a $\chi^2$ variable.
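A minimal one-parameter illustration, assuming $n$ independent Gaussian measurements $z_i$ of a scalar $\mu$ with known standard deviation $\sigma$: the cost $f(\mu) = \tfrac12 \sum_i ((z_i - \mu)/\sigma)^2$ has Hessian $H = n/\sigma^2$, so the dispersion $H^{-1} = \sigma^2/n$ gives the familiar standard error $\sigma/\sqrt{n}$. Twice the residual cost can be compared with a $\chi^2$ distribution with $n_z - n_x = n - 1$ degrees of freedom. The data and $\sigma$ below are made up for illustration.

```python
from typing import Callable, Sequence


def cost(mu: float, data: Sequence[float], sigma: float) -> float:
    """Gaussian negative log likelihood with the constant term dropped."""
    return 0.5 * sum(((z - mu) / sigma) ** 2 for z in data)


def numerical_second_derivative(f: Callable[[float], float], mu: float,
                                h: float = 1e-3) -> float:
    """Central difference estimate of d^2 f / d mu^2."""
    return (f(mu + h) - 2.0 * f(mu) + f(mu - h)) / (h * h)


data = [1.0, 2.0, 3.0, 4.0]
sigma = 0.5


def f(mu: float) -> float:
    return cost(mu, data, sigma)


mu_hat = sum(data) / len(data)              # ML estimate: 2.5
H = numerical_second_derivative(f, mu_hat)  # analytic value n / sigma^2 = 16
dispersion = 1.0 / H                        # approximately sigma^2 / n = 0.0625
chi2_stat = 2.0 * f(mu_hat)                 # 20.0, with n - 1 = 3 degrees of freedom
```

Here $2f = 20$ is far above the value 3 expected on average for a $\chi^2$ variable with 3 degrees of freedom, which suggests that the assumed $\sigma = 0.5$ is too small for this data: exactly the kind of model validity check the residual cost supports.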

3.1 Desiderata for the Cost Function

In adjustment computations we go to considerable lengths to optimize a large nonlinear cost model, so it seems reasonable to require that the refinement should actually improve the estimates in some objective (albeit statistical) sense. Heuristically motivated cost functions cannot usually guarantee this. They almost always lead to biased parameter estimates, and often severely biased ones. A large body of statistical theory points to maximum likelihood (ML) and its Bayesian cousin maximum a posteriori (MAP) as the estimators of choice. ML simply selects the model for which the total probability of the observed data is highest, or saying the same thing in different words, for which the total posterior probability of the model given the observations is highest. MAP adds a prior term representing background information. ML could just as easily have included the prior as an additional ‘observation’: so far as estimation is concerned, the distinction between ML / MAP and prior / observation is purely terminological.

Information usually comes from many independent sources. In bundle adjustment these include: covariance-weighted reprojection errors of individual image features; other measurements such as 3D positions of control points, GPS or inertial sensor readings; predictions from uncertain dynamical models (for ‘Kalman filtering’ of dynamic cameras or scenes); prior knowledge expressed as soft constraints (e.g. on camera calibration or pose values); and supplementary sources such as overfitting, regularization or description length penalties. Note the variety. One of the great strengths of adjustment computations is their ability to combine information from disparate sources. Assuming that the sources are statistically independent of one another given the model, the total probability for the model given the combined data is the product of the probabilities from the individual sources. To get an additive cost function we take logs, so the total log likelihood for the model given the combined data is the sum of the individual source log likelihoods.
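A minimal sketch of this additivity, assuming one scalar parameter $\mu$ with a Gaussian prior $N(m_0, s_0^2)$ as one ‘source’ and independent Gaussian observations $z_i \sim N(\mu, \sigma^2)$ as the others: the total log likelihood is the sum of the per-source terms, and its maximizer is the precision-weighted mean. Names and numbers are hypothetical.

```python
import math
from typing import Sequence


def gaussian_logpdf(x: float, mean: float, sd: float) -> float:
    """log N(x; mean, sd^2)."""
    return -0.5 * ((x - mean) / sd) ** 2 - math.log(sd * math.sqrt(2.0 * math.pi))


def total_log_likelihood(mu: float, prior_mean: float, prior_sd: float,
                         data: Sequence[float], sd: float) -> float:
    """Independent sources: log of a product of probabilities = sum of logs."""
    return (gaussian_logpdf(mu, prior_mean, prior_sd)
            + sum(gaussian_logpdf(z, mu, sd) for z in data))


def map_estimate(prior_mean: float, prior_sd: float,
                 data: Sequence[float], sd: float) -> float:
    """Closed-form maximizer: precision-weighted mean of prior and data."""
    w0 = 1.0 / prior_sd ** 2
    w = 1.0 / sd ** 2
    return (w0 * prior_mean + w * sum(data)) / (w0 + w * len(data))


mu_star = map_estimate(0.0, 1.0, [2.0, 2.0], 1.0)  # (0 + 2 + 2) / 3 = 4/3
```

With equal standard deviations the prior here behaves exactly like a third observation $z = 0$, which illustrates the remark above that the prior / observation distinction is purely terminological.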

Properties of ML estimators: Apart from their obvious simplicity and intuitive appeal, ML and MAP estimators have strong statistical properties. Many of the most notable ones are asymptotic, i.e. they apply in the limit of a large number of independent measurements, or more precisely in the central limit where the posterior distribution becomes effectively Gaussian¹. In particular:

• Under mild regularity conditions on the observation distributions, the posterior distribution of the ML estimate converges asymptotically in probability to a Gaussian with covariance equal to the dispersion matrix.

• The ML estimate asymptotically has zero bias and the lowest variance that any unbiased estimator can have. So in this sense, ML estimation is at least as good as any other method².

¹ Cost is additive, so as measurements of the same type are added the entire cost surface grows in direct proportion to the amount of data $n_z$. This means that the relative sizes of the cost and all of its derivatives (and hence the size $r$ of the region around the minimum over which the second order Taylor terms dominate all higher order ones) remain roughly constant as $n_z$ increases. Within this region, the total cost is roughly quadratic, so if the cost function was taken to be the posterior log likelihood, the posterior distribution is roughly Gaussian. However the curvature of the quadratic (i.e. the inverse dispersion matrix) increases as data is added, so the posterior standard deviation shrinks as $O(\sigma/\sqrt{n_z - n_x})$, where $O(\sigma)$ characterizes the average standard deviation from a single observation. For $n_z - n_x \gg (\sigma/r)^2$, essentially the entire posterior probability mass lies inside the quadratic region, so the posterior distribution converges asymptotically in probability to a Gaussian. This happens at any proper isolated cost minimum at which second order Taylor expansion is locally valid. The approximation gets better with more data (stronger curvature) and smaller higher order Taylor terms.

² This result follows from the Cramér-Rao bound (e.g. [23]), which says that the covariance of any unbiased estimator is bounded below by the inverse of the Fisher information, i.e. of the mean curvature of the posterior log likelihood surface:
$$\langle (\hat{x} - \bar{x})(\hat{x} - \bar{x})^\top \rangle \succeq \left\langle -\frac{d^2 \log p}{dx^2} \right\rangle^{-1},$$
where $p$ is the posterior probability, $x$ the parameters being estimated, $\hat{x}$ the estimate given by any unbiased estimator, $\bar{x}$ the true underlying $x$ value, and $A \succeq B$ denotes positive semidefiniteness of $A - B$. Asymptotically, the posterior distribution becomes Gaussian and the Fisher information converges to the inverse dispersion (the curvature of the posterior log likelihood surface at the cost minimum), so the ML estimate attains the Cramér-Rao bound.

Non-asymptotically, the dispersion is not necessarily a good approximation for the covariance of the ML estimator. The asymptotic limit is usually assumed to be valid for well-designed highly-redundant photogrammetric measurement networks, but recent sampling-based empirical studies of posterior likelihood surfaces [35, 80, 68] suggest that the case is much less clear for small vision geometry problems and weaker networks. More work is needed on this.

The effect of incorrect error models: It is clear that incorrect modelling of the observation distributions is likely to disturb the ML estimate. Such mismodelling is to some extent inevitable because error distributions stand for influences that we cannot fully predict or control. To understand the distortions that unrealistic error models can cause, first realize that geometric fitting is really a special case of parametric probability density estimation. For each set of parameter values, the geometric image projection model and the assumed observation error models combine to predict a probability density for the observations. Maximizing the likelihood corresponds to fitting this predicted observation density to the observed data. The geometry and camera model only enter indirectly, via their influence on the predicted distributions.

Accurate noise modelling is just as critical to successful estimation as accurate geometric modelling. The most important mismodelling is failure to take account of the possibility of outliers (aberrant data values, caused e.g., by blunders such as incorrect feature correspondences). We stress that so long as the assumed error distributions model the behaviour of all of the data used in the fit (including both inliers and outliers), the above properties of ML estimation including asymptotic minimum variance remain valid in the presence of outliers. In other words, ML estimation is naturally robust: there is no

Bundle Adjustment — A Modern Synthesis

307

0.4

Gaussian PDF

Cauchy PDF

0.35

1000 Samples from a Cauchy and a Gaussian Distribution

0.3

100

0.25

Cauchy

Gaussian

0.2

80

0.15

0.1

0.05

0

-10

60

-5

0

5

10

8

7

Gaussian -log likelihood

Cauchy -log likelihood

40

6

5

20

4

3

2

0

0

1

0

-10

-5

0

5

100

200

300

400

500

600

700

800

900 1000

10

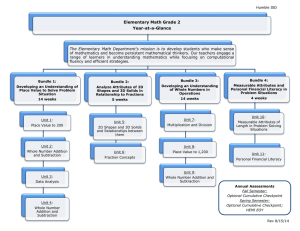

Fig. 2. Beware of treating any bell-shaped observation distribution as a Gaussian. Despite being

narrowerin the peak and broader in the tails, the probability density function of a Cauchy distribution,

−1

p(x) = π(1 + x2 ) , does not look so very different from that of a Gaussian (top left). But their

negative log likelihoods are very different (bottom left), and large deviations (“outliers”) are much

more probable for Cauchy variates than for Gaussian ones (right). In fact, the Cauchy distribution

has infinite covariance.

need to robustify it so long as realistic error distributions were used in the first place. A

distribution that models both inliers and outliers is called a total distribution. There is no

need to separate the two classes, as ML estimation does not care about the distinction. If the

total distribution happens to be an explicit mixture of an inlier and an outlier distribution

(e.g., a Gaussian with a locally uniform background of outliers), outliers can be labeled

after fitting using likelihood ratio tests, but this is in no way essential to the estimation

process.

It is also important to realize the extent to which superficially similar distributions can

differ from a Gaussian, or equivalently, how extraordinarily rapidly the tails of a Gaussian

distribution fall away compared to more realistic models of real observation errors. See

figure 2. In fact, unmodelled outliers typically have very severe effects on the fit. To see this,

suppose that the real observations are drawn from a fixed (but perhaps unknown) underlying

distribution $p_0(z)$. The law of large numbers says that their empirical distributions (the observed distribution of each set of samples) converge asymptotically in probability to $p_0(z)$. So for each model, the negative log likelihood cost sum $-\sum_i \log p_{\text{model}}(z_i \mid x)$ converges to $-n_z \int p_0(z) \log p_{\text{model}}(z \mid x)\, dz$. Up to a model-independent constant, this is $n_z$ times the relative entropy or Kullback-Leibler divergence $\int p_0(z) \log\big(p_0(z)/p_{\text{model}}(z \mid x)\big)\, dz$ of the model distribution w.r.t. the true one $p_0(z)$. Hence, even if the model family does not include $p_0$, the ML estimate converges asymptotically to the model whose predicted observation distribution has minimum relative entropy w.r.t. $p_0$ (see, e.g., [96, proposition 2.2]). It follows that ML estimates are typically very sensitive to unmodelled outliers, as regions which are relatively probable under $p_0$ but highly improbable under the model make large contributions to the relative entropy. In contrast, allowing for outliers where none actually occur causes relatively little distortion, as no region which is probable under $p_0$ will have large $-\log p_{\text{model}}$.
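To put rough numbers on how quickly Gaussian tails fall away compared with a long-tailed model such as the Cauchy of Fig. 2, the short sketch below evaluates each negative log likelihood and the two-sided tail probability $P(|x| > k)$. It is only an illustration and assumes unit-scale (standard) Gaussian and Cauchy densities; the function names are our own.

```python
import math

def gaussian_neg_log_pdf(x):
    """-log of the standard normal density."""
    return 0.5 * x * x + 0.5 * math.log(2.0 * math.pi)

def cauchy_neg_log_pdf(x):
    """-log of the standard Cauchy density p(x) = 1 / (pi * (1 + x^2))."""
    return math.log(math.pi * (1.0 + x * x))

def gaussian_tail(k):
    """P(|x| > k) for a standard normal variate."""
    return math.erfc(k / math.sqrt(2.0))

def cauchy_tail(k):
    """P(|x| > k) for a standard Cauchy variate."""
    return 1.0 - 2.0 * math.atan(k) / math.pi

for k in (1.0, 3.0, 5.0, 10.0):
    print(f"k={k:4.1f}  -log p: Gaussian {gaussian_neg_log_pdf(k):6.2f}, "
          f"Cauchy {cauchy_neg_log_pdf(k):5.2f}  |  "
          f"P(|x|>k): Gaussian {gaussian_tail(k):.1e}, Cauchy {cauchy_tail(k):.3f}")
```

The Gaussian $-\log p$ grows quadratically while the Cauchy one grows only logarithmically, which is why a single distant observation can dominate a Gaussian fit.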

In summary, if there is a possibility of outliers, non-robust distribution models such

as Gaussians should be replaced with more realistic long-tailed ones such as: mixtures of

a narrow ‘inlier’ and a wide ‘outlier’ density, Cauchy or α-densities, or densities defined

piecewise with a central peaked ‘inlier’ region surrounded by a constant ‘outlier’ region³.

We emphasize again that poor robustness is due entirely to unrealistic distributional assumptions: the maximum likelihood framework itself is naturally robust provided that the

total observation distribution including both inliers and outliers is modelled. In fact, real

observations can seldom be cleanly divided into inliers and outliers. There is a hard core

of outliers such as feature correspondence errors, but there is also a grey area of features

that for some reason (a specularity, a shadow, poor focus, motion blur . . . ) were not as

accurately located as other features, without clearly being outliers.
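As a small illustration of replacing a Gaussian with a long-tailed model, the sketch below estimates a 1D location from eight inliers plus two gross blunders, first under a Gaussian model (whose ML estimate is the sample mean) and then under a unit-scale Cauchy model. The data, the known Cauchy scale, and the use of iteratively reweighted least squares (IRLS) to find the Cauchy ML estimate are all our own assumptions for the example.

```python
import numpy as np

def gaussian_ml_location(z):
    """Under a Gaussian model the ML location is the sample mean."""
    return float(np.mean(z))

def cauchy_ml_location(z, scale=1.0, max_iter=200, tol=1e-12):
    """ML location under a Cauchy model with known scale, via IRLS from the median.
    The cost sum(log(1 + r_i^2)), r_i = (z_i - mu)/scale, is stationary when
    sum(w_i * (z_i - mu)) = 0 with weights w_i = 1 / (1 + r_i^2)."""
    mu = float(np.median(z))
    for _ in range(max_iter):
        r = (z - mu) / scale
        w = 1.0 / (1.0 + r * r)                 # distant points get tiny weights
        mu_new = float(np.sum(w * z) / np.sum(w))
        converged = abs(mu_new - mu) < tol
        mu = mu_new
        if converged:
            break
    return mu

inliers = np.array([0.1, -0.2, 0.05, 0.15, -0.1, 0.0, 0.2, -0.05])
blunders = np.array([50.0, 60.0])               # e.g. two correspondence errors
z = np.concatenate([inliers, blunders])

mu_gauss = gaussian_ml_location(z)
mu_cauchy = cauchy_ml_location(z)
print(f"Gaussian model: {mu_gauss:.3f}   Cauchy model: {mu_cauchy:.3f}")
```

The two blunders drag the Gaussian estimate far from the inlier cluster, while the Cauchy estimate stays close to it, without any explicit inlier / outlier labelling.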

3.2

Nonlinear Least Squares

One of the most basic parameter estimation methods is nonlinear least squares. Suppose

that we have vectors of observations $\underline{z}_i$ predicted by a model $z_i = z_i(x)$, where $x$ is a vector of model parameters. Then nonlinear least squares takes as estimates the parameter values that minimize the weighted Sum of Squared Error (SSE) cost function:

$$ f(x) \equiv \tfrac12 \sum_i \Delta z_i(x)^\top\, W_i\, \Delta z_i(x), \qquad \Delta z_i(x) \equiv \underline{z}_i - z_i(x) \qquad (2) $$

Here, $\Delta z_i(x)$ is the feature prediction error and $W_i$ is an arbitrary symmetric positive definite (SPD) weight matrix. Modulo normalization terms independent of $x$, the weighted SSE cost function coincides with the negative log likelihood for observations $\underline{z}_i$ perturbed by Gaussian noise of mean zero and covariance $W_i^{-1}$. So for least squares to have a useful statistical interpretation, the $W_i$ should be chosen to approximate the inverse measurement covariance of $\underline{z}_i$. Even for non-Gaussian noise with this mean and covariance, the Gauss-Markov theorem [37, 11] states that if the models $z_i(x)$ are linear, least squares gives the Best Linear Unbiased Estimator (BLUE), where ‘best’ means minimum variance⁴.

Any weighted least squares model can be converted to an unweighted one ($W_i = 1$) by pre-multiplying $\underline{z}_i$, $z_i$, $\Delta z_i$ by any $L_i$ satisfying $W_i = L_i^\top L_i$. Such an $L_i$ can be calculated efficiently from $W_i$ or $W_i^{-1}$ using Cholesky decomposition (§B.1). $\Delta\tilde{z}_i = L_i\, \Delta z_i$ is called a standardized residual, and the resulting unweighted least squares problem $\min_x \tfrac12 \sum_i \|\Delta\tilde{z}_i(x)\|^2$ is said to be in standard form. One advantage of this is that optimization methods based on linear least squares solvers can be used in place of ones based on linear (normal) equation solvers, which allows ill-conditioned problems to be handled more stably (§B.2).
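The conversion to standard form can be sketched in a few lines of NumPy. Note that `numpy.linalg.cholesky` returns a lower-triangular $C$ with $W = C C^\top$, so the factor with $W = L^\top L$ used above is $L = C^\top$. The particular weight matrix and residual are arbitrary example values.

```python
import numpy as np

def standardizing_factor(W):
    """Return L with W = L^T L, from the Cholesky factor W = C C^T (L = C^T)."""
    C = np.linalg.cholesky(W)
    return C.T

W = np.array([[4.0, 1.0],
              [1.0, 2.0]])        # an example SPD weight (inverse covariance) matrix
dz = np.array([0.5, -1.5])        # an example prediction error z_obs - z(x)

L = standardizing_factor(W)
dz_std = L @ dz                   # standardized residual

weighted = dz @ W @ dz            # dz^T W dz
unweighted = dz_std @ dz_std      # ||L dz||^2, the same number
print(weighted, unweighted)
```

Any factor with $L^\top L = W$ would do; Cholesky is simply the cheapest one to compute.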

³ The latter case corresponds to a hard inlier / outlier decision rule: for any observation in the ‘outlier’ region, the density is constant so the observation has no influence at all on the fit. Similarly, the mixture case corresponds to a softer inlier / outlier decision rule.

⁴ It may be possible (and even useful) to do better with either biased (towards the correct solution), or nonlinear estimators.

Another peculiarity of the SSE cost function is its indifference to the natural boundaries between the observations. If observations $\underline{z}_i$ from any sources are assembled into a compound observation vector $\underline{z} \equiv (\underline{z}_1, \ldots, \underline{z}_k)$, and their weight matrices $W_i$ are assembled into a compound block diagonal weight matrix $W \equiv \mathrm{diag}(W_1, \ldots, W_k)$, the weighted squared error $f(x) \equiv \tfrac12 \Delta z(x)^\top W\, \Delta z(x)$ is the same as the original SSE cost function, $\tfrac12 \sum_i \Delta z_i(x)^\top W_i\, \Delta z_i(x)$. The general quadratic form of the SSE cost is preserved under such compounding, and also under arbitrary linear transformations of $z$ that mix components from different observations. The only place that the underlying structure is visible is in the block structure of $W$. Such invariances do not hold for essentially any other cost function, but they simplify the formulation of least squares considerably.
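Both invariances can be checked numerically. The sketch below (random example data; the `block_diag` helper is our own, equivalent in effect to `scipy.linalg.block_diag`) compares the sum of per-observation costs with the compound block-diagonal cost, and then applies an invertible transformation $T$ that mixes components across observations, transforming the weight as $T^{-\top} W T^{-1}$.

```python
import numpy as np

def block_diag(*blocks):
    """Assemble square blocks into one block-diagonal matrix."""
    n = sum(b.shape[0] for b in blocks)
    out = np.zeros((n, n))
    i = 0
    for b in blocks:
        k = b.shape[0]
        out[i:i + k, i:i + k] = b
        i += k
    return out

rng = np.random.default_rng(0)
dims = [2, 3, 1]                                  # three observations of different sizes
dzs = [rng.standard_normal(d) for d in dims]
Ws = []
for d in dims:
    A = rng.standard_normal((d, d))
    Ws.append(A @ A.T + d * np.eye(d))            # SPD by construction

separate = 0.5 * sum(dz @ W @ dz for dz, W in zip(dzs, Ws))

dz_all = np.concatenate(dzs)
W_all = block_diag(*Ws)
compound = 0.5 * dz_all @ W_all @ dz_all

# Mix components from different observations; W is no longer block diagonal.
T = rng.standard_normal((6, 6)) + 6.0 * np.eye(6)
T_inv = np.linalg.inv(T)
W_mixed = T_inv.T @ W_all @ T_inv
mixed = 0.5 * (T @ dz_all) @ W_mixed @ (T @ dz_all)
print(separate, compound, mixed)
```

All three numbers agree; after mixing, the original grouping survives only implicitly, which is exactly why robust costs (below) cannot be compounded this freely.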

3.3

Robustified Least Squares

The main problem with least squares is its high sensitivity to outliers. This happens because

the Gaussian has extremely small tails compared to most real measurement error distributions. For robust estimates, we must choose a more realistic likelihood model (§3.1). The

exact functional form is less important than the general way in which the expected types

of outliers enter. A single blunder such as a correspondence error may affect one or a few

of the observations, but it will usually leave all of the others unchanged. This locality is

the whole basis of robustification. If we can decide which observations were affected, we

can down-weight or eliminate them and use the remaining observations for the parameter

estimates as usual. If all of the observations had been affected about equally (e.g. as by

an incorrect projection model), we might still know that something was wrong, but not be

able to fix it by simple data cleaning.

We will adopt a ‘single layer’ robustness model, in which the observations are partitioned into independent groups zi , each group being irreducible in the sense that it is

accepted, down-weighted or rejected as a whole, independently of all the other groups.

The partitions should reflect the types of blunders that occur. For example, if feature correspondence errors are the most common blunders, the two coordinates of a single image

point would naturally form a group as both would usually be invalidated by such a blunder,

while no other image point would be affected. Even if one of the coordinates appeared to

be correct, if the other were incorrect we would usually want to discard both for safety.

On the other hand, in stereo problems, the four coordinates of each pair of corresponding

image points might be a more natural grouping, as a point in one image is useless without

its correspondent in the other one.

Henceforth, when we say observation we mean irreducible group of observations

treated as a unit by the robustifying model. I.e., our observations need not be scalars, but

they must be units, probabilistically independent of one another irrespective of whether

they are inliers or outliers.

As usual, each independent observation zi contributes an independent term fi (x | zi ) to

the total cost function. This could have more or less any form, depending on the expected

total distribution of inliers and outliers for the observation. One very natural family are the

radial distributions, which have negative log likelihoods of the form:

$$ f_i(x) \equiv \tfrac12\, \rho_i\!\left( \Delta z_i(x)^\top W_i\, \Delta z_i(x) \right) \qquad (3) $$

Here, $\rho_i(s)$ can be any increasing function with $\rho_i(0) = 0$ and $\frac{d}{ds}\rho_i(0) = 1$. (These guarantee that at $\Delta z_i = 0$, $f_i$ vanishes and $\frac{d^2 f_i}{dz_i^2} = W_i$.) Weighted SSE has $\rho_i(s) = s$, while more robust variants have sublinear $\rho_i$, often tending to a constant at ∞ so that distant outliers are entirely ignored. The dispersion matrix $W_i^{-1}$ determines the spatial spread of $z_i$, and up to scale its covariance (if this is finite). The radial form is preserved under arbitrary

affine transformations of zi , so within a group, all of the observations are on an equal

footing in the same sense as in least squares. However, non-Gaussian radial distributions

are almost never separable: the observations in zi can neither be split into independent

subgroups, nor combined into larger groups, without destroying the radial form. Radial

cost models do not have the remarkable isotropy of non-robust SSE, but this is exactly

what we wanted, as it ensures that all observations in a group will be either left alone, or

down-weighted together.
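A minimal sketch of the radial cost (3) for a single 2D image point, comparing weighted SSE with the sublinear robustifier $\rho(s) = \log(1 + s)$. That choice is our own example: it is the same $\rho(z) = \ln(1 + z)$ that SciPy's `least_squares` offers as `loss='cauchy'`, and it satisfies $\rho(0) = 0$, $\rho'(0) = 1$, although it grows logarithmically rather than tending to a constant. The unit weight matrix and residuals are assumptions.

```python
import numpy as np

def rho_sse(s):
    """Weighted SSE: rho(s) = s."""
    return s

def rho_cauchy(s):
    """Sublinear robustifier rho(s) = log(1 + s)."""
    return np.log1p(s)

def radial_cost(dz, W, rho):
    """Eq. (3) for one irreducible group: f_i = 0.5 * rho(dz^T W dz)."""
    return 0.5 * rho(float(dz @ W @ dz))

W = np.eye(2)                      # assumed isotropic unit weight for an image point
inlier = np.array([0.1, -0.1])     # a typical small residual
blunder = np.array([30.0, 40.0])   # e.g. a correspondence error, ||dz|| = 50

for name, rho in (("SSE", rho_sse), ("Cauchy", rho_cauchy)):
    print(f"{name:6s} inlier {radial_cost(inlier, W, rho):.4f}  "
          f"blunder {radial_cost(blunder, W, rho):.2f}")
```

Because both coordinates enter through the single scalar $s$, they are down-weighted together, as the radial form intends.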

As an example of this, for image features polluted with occasional large outliers caused

by correspondence errors, we might model the error distribution as a Gaussian central peak

plus a uniform background of outliers.

This would give negative log likelihood contributions of the form $f(x) = -\log\big(\exp(-\tfrac12 \chi^2_{ip}) + \epsilon\big)$ instead of the non-robust weighted SSE model $f(x) = \tfrac12 \chi^2_{ip}$, where $\chi^2_{ip} = \Delta x_{ip}^\top W_{ip}\, \Delta x_{ip}$ is the squared weighted residual error (which is a $\chi^2$ variable for a correct model and Gaussian error distribution), and $\epsilon$ parametrizes the frequency of outliers.
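The Gaussian-plus-uniform-background model can be sketched directly. Besides the cost itself, the sketch computes its derivative with respect to $\tfrac12\chi^2$, which acts as a soft inlier weight: close to 1 near the peak and close to 0 far out, the ‘softer inlier / outlier decision rule’ of the mixture case. The value of $\epsilon$ is an arbitrary example.

```python
import math

def robust_nll(chi2, eps):
    """-log(exp(-chi2/2) + eps): Gaussian peak plus a uniform outlier floor."""
    return -math.log(math.exp(-0.5 * chi2) + eps)

def inlier_weight(chi2, eps):
    """d f / d(chi2/2) = exp(-chi2/2) / (exp(-chi2/2) + eps): the factor by which
    the observation's SSE influence is scaled."""
    g = math.exp(-0.5 * chi2)
    return g / (g + eps)

eps = 1e-3                        # example outlier-frequency parameter
for chi2 in (0.0, 4.0, 16.0, 64.0):
    print(f"chi2={chi2:5.1f}  SSE {0.5 * chi2:5.1f}  robust {robust_nll(chi2, eps):6.3f}  "
          f"weight {inlier_weight(chi2, eps):.2e}")
```

For large $\chi^2_{ip}$ the cost saturates at $-\log\epsilon$, so distant outliers stop pulling on the fit.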

[Figure: Gaussian −log likelihood vs. robustified −log likelihood.]

3.4

Intensity-Based Methods

The above models apply not only to geometric image features, but also to intensity-based

matching of image patches. In this case, the observables are image gray-scales or colors

I rather than feature coordinates u, and the error model is based on intensity residuals.

To get from a point projection model u = u(x) to an intensity based one, we simply

compose with the assumed local intensity model I = I(u) (e.g. obtained from an image

template or another image that we are matching against), premultiply point Jacobians by point-to-intensity Jacobians $\frac{dI}{du}$, etc. The full range of intensity models can be implemented

within this framework: pure translation, affine, quadratic or homographic patch deformation models, 3D model based intensity predictions, coupled affine or spline patches for

surface coverage, etc., [1, 52, 55, 9, 110, 94, 53, 97, 76, 104, 102]. The structure of intensity

based bundle problems is very similar to that of feature based ones, so all of the techniques

studied below can be applied.

We will not go into more detail on intensity matching, except to note that it is the

real basis of feature based methods. Feature detectors are optimized for detection not

localization. To localize a detected feature accurately we need to match (some function of) the image intensities in its region against either an idealized template or another image of the feature, using an appropriate geometric deformation model, etc. For example, suppose that the intensity matching model is $f(u) = \tfrac12 \int \rho(\|\delta I(u)\|^2)$, where the integration is over some image patch, $\delta I$ is the current intensity prediction error, $u$ parametrizes the local geometry (patch translation & warping), and $\rho(\cdot)$ is some intensity error robustifier. Then the cost gradient in terms of $u$ is $g_u = \frac{df}{du} = \int \rho'\, \delta I\, \frac{dI}{du}$. Similarly, the cost Hessian in $u$ in a Gauss-Newton approximation is $H_u = \frac{d^2 f}{du^2} \approx \int \rho' \left(\frac{dI}{du}\right)^{\!\top} \frac{dI}{du}$. In a feature based model, we express $u = u(x)$ as a function of the bundle parameters, so if $J_u = \frac{du}{dx}$ we have a corresponding cost gradient and Hessian contribution $g_x = g_u J_u$ and $H_x = J_u^\top H_u J_u$. In other words, the intensity matching model is locally equivalent to a quadratic feature matching one on the ‘features’ $u(x)$, with effective weight (inverse covariance) matrix $W_u = H_u$. All image feature error models in vision are ultimately based on such an underlying intensity matching model. As feature covariances are a function of intensity gradients $\int \rho' \left(\frac{dI}{du}\right)^{\!\top} \frac{dI}{du}$, they can be both highly variable between features (depending on how much local gradient there is), and highly anisotropic (depending on how directional the gradients are). E.g., for points along a 1D intensity edge, the uncertainty is large in the along-edge direction and small in the across-edge one.
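The anisotropy can be seen by computing the Gauss-Newton weight $W_u = H_u \approx \sum \rho' (dI/du)^\top (dI/du)$ over small synthetic patches. This sketch assumes a pure-translation model $u = (u_x, u_y)$, $\rho' = 1$ (non-robust), and finite-difference gradients from `numpy.gradient`; the two 9×9 test patches are made up for the example.

```python
import numpy as np

def gauss_newton_feature_weight(patch, rho_prime=None):
    """H_u = sum over pixels of rho' * (dI/du)^T (dI/du) for u = (u_x, u_y)."""
    gy, gx = np.gradient(patch.astype(float))       # d/d(row) = d/dy, d/d(col) = d/dx
    if rho_prime is None:
        rho_prime = np.ones_like(gx)                # non-robust: rho' = 1 everywhere
    G = np.stack([gx.ravel(), gy.ravel()], axis=1)  # each row is dI/du at one pixel
    return G.T @ (rho_prime.ravel()[:, None] * G)

edge = np.zeros((9, 9))
edge[:, 5:] = 1.0          # vertical step edge: intensity varies along x only
corner = np.zeros((9, 9))
corner[5:, 5:] = 1.0       # corner: intensity varies along both x and y

results = {}
for name, patch in (("edge", edge), ("corner", corner)):
    evals, evecs = np.linalg.eigh(gauss_newton_feature_weight(patch))
    results[name] = evals
    print(name, "eigenvalues of W_u:", evals,
          "least-constrained direction:", evecs[:, 0])
```

For the edge, one eigenvalue of $W_u$ is zero and its eigenvector points along the edge (the $y$ direction), i.e. the position along the edge is unconstrained; the corner patch constrains both directions.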

3.5

Implicit Models

Sometimes observations are most naturally expressed in terms of an implicit observation-constraining model $h(x, z) = 0$, rather than an explicit observation-predicting one $z = z(x)$. (The associated image error still has the form $f(\underline{z} - z)$.) For example, if the model

is a 3D curve and we observe points on it (the noisy images of 3D points that may lie

anywhere along the 3D curve), we can predict the whole image curve, but not the exact

position of each observation along it. We only have the constraint that the noiseless image

of the observed point would lie on the noiseless image of the curve, if we knew these. There

are basically two ways to handle implicit models: nuisance parameters and reduction.

Nuisance parameters: In this approach, the model is made explicit by adding additional

‘nuisance’ parameters representing something equivalent to model-consistent estimates

of the unknown noise free observations, i.e. to z with h(x, z) = 0. The most direct way

to do this is to include the entire parameter vector z as nuisance parameters, so that we

have to solve a constrained optimization problem on the extended parameter space (x, z),

minimizing $f(\underline{z} - z)$ over $(x, z)$ subject to $h(x, z) = 0$. This is a sparse constrained

problem, which can be solved efficiently using sparse matrix techniques (§6.3). In fact,

for image observations, the subproblems in $z$ (optimizing $f(\underline{z} - z)$ over $z$ for fixed $\underline{z}$ and $x$) are small and for typical $f$ rather simple. So in spite of the extra parameters $z$,

optimizing this model is not significantly more expensive than optimizing an explicit one

z = z(x) [14, 13, 105, 106]. For example, when estimating matching constraints between

image pairs or triplets [60, 62], instead of using an explicit 3D representation, pairs or

triplets of corresponding image points can be used as features zi , subject to the epipolar

or trifocal geometry contained in x [105, 106].

However, if a smaller nuisance parameter vector than z can be found, it is wise to use

it. In the case of a curve, it suffices to include just one nuisance parameter per observation,

saying where along the curve the corresponding noise free observation is predicted to

lie. This model exactly satisfies the constraints, so it converts the implicit model to an

unconstrained explicit one z = z(x, λ), where λ are the along-curve nuisance parameters.


The advantage of the nuisance parameter approach is that it gives the exact optimal

parameter estimate for x, and jointly, optimal x-consistent estimates for the noise free

observations z.

Reduction: Alternatively, we can regard $h(x, \underline{z})$ rather than $\underline{z}$ as the observation vector, and hence fit the parameters to the explicit log likelihood model for $h(x, \underline{z})$. To do this, we must transfer the underlying error model / distribution $f(\Delta z)$ on $\Delta z$ to one $f(h)$ on $h(x, \underline{z})$. In principle, this should be done by marginalization: the density for $h$ is given by integrating that for $\Delta z$ over all $\Delta z$ giving the same $h$. Within the point estimation framework, it can be approximated by replacing the integration with maximization. Neither calculation is easy in general, but in the asymptotic limit where the first order Taylor expansion $h(x, \underline{z}) = h(x, z + \Delta z) \approx 0 + \frac{dh}{dz}\,\Delta z$ is valid, the distribution of $h$ is a marginalization or maximization of that of $\Delta z$ over affine subspaces. This can be evaluated in closed form for some robust distributions. Also, standard covariance propagation gives (more precisely, this applies to the $h$ and $\Delta z$ dispersions):

$$ \langle h(x, \underline{z}) \rangle \approx 0, \qquad \langle h(x, \underline{z})\, h(x, \underline{z})^\top \rangle \approx \frac{dh}{dz} \langle \Delta z\, \Delta z^\top \rangle \frac{dh}{dz}^{\!\top} = \frac{dh}{dz}\, W^{-1} \frac{dh}{dz}^{\!\top} \qquad (4) $$

where $W^{-1}$ is the covariance of $\Delta z$. So at least for an outlier-free Gaussian model, the

reduced distribution remains Gaussian (albeit with x-dependent covariance).
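The covariance propagation in (4) can be checked by simulation. In this hypothetical example the implicit model says that an image point lies on a 2D line, $h(x, z) = n^\top z - d$ with $x = (n, d)$, so $dh/dz = n^\top$ and (4) predicts $\mathrm{Var}(h) = n^\top W^{-1} n$. Because $h$ is linear in $z$ here, the first-order prediction is exact and the Monte Carlo estimate should match it up to sampling error. The line, weight matrix and sample size are all example values.

```python
import numpy as np

def reduced_covariance(dh_dz, W):
    """First-order covariance of h from (4): (dh/dz) W^{-1} (dh/dz)^T."""
    return dh_dz @ np.linalg.solve(W, dh_dz.T)

theta, d = 0.3, 2.0
n = np.array([[np.cos(theta), np.sin(theta)]])   # 1x2 Jacobian dh/dz
W = np.array([[4.0, 0.0],
              [0.0, 1.0]])                       # inverse covariance of the image noise

z_true = d * n.ravel()                           # noise-free point exactly on the line
rng = np.random.default_rng(1)
samples = rng.multivariate_normal(z_true, np.linalg.inv(W), size=200_000)
h = samples @ n.ravel() - d                      # constraint residual per noisy observation

predicted = reduced_covariance(n, W)[0, 0]
empirical = h.var()
print(f"predicted Var(h) = {predicted:.4f}, Monte Carlo = {empirical:.4f}")
```

For a nonlinear $h$ the same code would only agree to first order, i.e. when the noise is small relative to the curvature of $h$.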

4

Basic Numerical Optimization

Having chosen a suitable model quality metric, we must optimize it. This section gives a

very rapid sketch of the basic local optimization methods for differentiable functions. See

[29, 93, 42] for more details. We need to minimize a cost function f(x) over parameters x,

starting from some given initial estimate x of the minimum, presumably supplied by some

approximate visual reconstruction method or prior knowledge of the approximate situation.

As in §2.2, the parameter space may be nonlinear, but we assume that local displacements

can be parametrized by a local coordinate system / vector of free parameters δx. We try

to find a displacement x → x + δx that locally minimizes or at least reduces the cost

function. Real cost functions are too complicated to minimize in closed form, so instead

we minimize an approximate local model for the function, e.g. based on Taylor expansion

or some other approximation at the current point x. Although this does not usually give the

exact minimum, with luck it will improve on the initial parameter estimate and allow us to

iterate to convergence. The art of reliable optimization is largely in the details that make

this happen even without luck: which local model, how to minimize it, how to ensure that

the estimate is improved, and how to decide when convergence has occurred. If you are not interested in such subjects, use a professionally designed package (§C.2): details are important here.

4.1

Second Order Methods

The reference for all local models is the quadratic Taylor series one:

$$ f(x + \delta x) \approx f(x) + g^\top \delta x + \tfrac12\, \delta x^\top H\, \delta x \qquad \text{(quadratic local model)} $$

$$ g \equiv \frac{df}{dx}(x) \quad \text{(gradient vector)}, \qquad H \equiv \frac{d^2 f}{dx^2}(x) \quad \text{(Hessian matrix)} \qquad (5) $$

For now, assume that the Hessian $H$ is positive definite (but see below and §9). The local model is then a simple quadratic with a unique global minimum, which can be found explicitly using linear algebra. Setting $\frac{df}{dx}(x + \delta x) \approx H\,\delta x + g$ to zero for the stationary point gives the Newton step:

$$ \delta x = -H^{-1} g \qquad (6) $$

The estimated new function value is $f(x + \delta x) \approx f(x) - \tfrac12\, \delta x^\top H\, \delta x = f(x) - \tfrac12\, g^\top H^{-1} g$.
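The quadratic convergence of the Newton step (6) is easy to see on a toy problem. The sketch below runs undamped Newton on $f(x) = \sum_i (x_i - \log x_i)$, a test function of our own choosing with its minimum at $x_i = 1$: here each Newton update maps $x \mapsto 2x - x^2$, so the error $e = 1 - x$ satisfies $e_{k+1} = e_k^2$ exactly, and the number of correct digits doubles per iteration.

```python
import numpy as np

def newton(grad, hess, x0, iters=6):
    """Plain (undamped) Newton iteration: solve H dx = -g at each step, eq. (6)."""
    x = np.asarray(x0, dtype=float)
    history = [x.copy()]
    for _ in range(iters):
        dx = np.linalg.solve(hess(x), -grad(x))   # Newton step, no step control
        x = x + dx
        history.append(x.copy())
    return x, history

def grad(x):
    return 1.0 - 1.0 / x                          # gradient of sum(x - log x)

def hess(x):
    return np.diag(1.0 / x ** 2)                  # diagonal Hessian

x, hist = newton(grad, hess, [0.5, 1.5], iters=6)
errors = [float(np.max(np.abs(xk - 1.0))) for xk in hist]
for k, e in enumerate(errors):
    print(k, f"{e:.1e}")
```

Started within the basin $0 < x_i < 2$, the errors go 0.5, 0.25, 0.0625, ... and reach double precision in about six iterations; outside it, undamped Newton diverges, which is what step control (§4.2) is for.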

Iterating the Newton step gives Newton’s method. This is the canonical optimization

method for smooth cost functions, owing to its exceptionally rapid theoretical and practical

convergence near the minimum. For quadratic functions it converges in one iteration, and

for more general analytic ones its asymptotic convergence is quadratic: as soon as the

estimate gets close enough to the solution for the second order Taylor expansion to be

reasonably accurate, the residual state error is approximately squared at each iteration.

This means that the number of significant digits in the estimate approximately doubles at

each iteration, so starting from any reasonable estimate, at most about $\log_2(16) + 1 \approx 5$–6

iterations are needed for full double precision (16 digit) accuracy. Methods that potentially

achieve such rapid asymptotic convergence are called second order methods. This is a

high accolade for a local optimization method, but it can only be achieved if the Newton step

is asymptotically well approximated. Despite their conceptual simplicity and asymptotic

performance, Newton-like methods have some disadvantages:

• To guarantee convergence, a suitable step control policy must be added (§4.2).

• Solving the n × n Newton step equations takes time $O(n^3)$ for a dense system (§B.1),

which can be prohibitive for large n. Although the cost can often be reduced (very

substantially for bundle adjustment) by exploiting sparseness in H, it remains true that

Newton-like methods tend to have a high cost per iteration, which increases relative to

that of other methods as the problem size increases. For this reason, it is sometimes

worthwhile to consider more approximate first order methods (§7), which are occasionally more efficient, and generally simpler to implement, than sparse Newton-like

methods.

• Calculating second derivatives H is by no means trivial for a complicated cost function, both computationally, and in terms of implementation effort. The Gauss-Newton method (§4.3) offers a simple analytic approximation to H for nonlinear least squares problems. Other methods in use build up approximations to H from the way the gradient g changes during the iteration (see §7.1, Krylov methods).

• The asymptotic convergence of Newton-like methods is sometimes felt to be an expensive luxury when far from the minimum, especially when damping (see below) is active.

However, it must be said that Newton-like methods generally do require significantly

fewer iterations than first order ones, even far from the minimum.

4.2

Step Control

Unfortunately, Newton’s method can fail in several ways. It may converge to a saddle

point rather than a minimum, and for large steps the second order cost prediction may be

inaccurate, so there is no guarantee that the true cost will actually decrease. To guarantee

convergence to a minimum, the step must follow a local descent direction (a direction

with a non-negligible component down the local cost gradient, or if the gradient is zero


near a saddle point, down a negative curvature direction of the Hessian), and it must make

reasonable progress in this direction (neither so little that the optimization runs slowly

or stalls, nor so much that it greatly overshoots the cost minimum along this direction).

It is also necessary to decide when the iteration has converged, and perhaps to limit any

over-large steps that are requested. Together, these topics form the delicate subject of step

control.

To choose a descent direction, one can take the Newton step direction if this descends

(it may not near a saddle point), or more generally some combination of the Newton and

gradient directions. Damped Newton methods solve a regularized system to find the step:

$$ (H + \lambda W)\, \delta x = -g \qquad (7) $$

Here, λ is some weighting factor and W is some positive definite weight matrix (often

the identity, so λ → ∞ becomes gradient descent δx ∝ −g). λ can be chosen to limit

the step to a dynamically chosen maximum size (trust region methods), or manipulated

more heuristically, to shorten the step if the prediction is poor (Levenberg-Marquardt

methods).
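A minimal sketch of a damped Newton iteration using (7) with $W = 1$ and a simple Levenberg-Marquardt-style heuristic: decrease $\lambda$ tenfold after a step that lowers the true cost, increase it tenfold and retry after one that does not. The factor of ten, the initial $\lambda$, and the Rosenbrock test function are our own assumptions, not the rule of any particular package.

```python
import numpy as np

def rosenbrock(x):
    return (1.0 - x[0]) ** 2 + 100.0 * (x[1] - x[0] ** 2) ** 2

def rosenbrock_grad(x):
    return np.array([-2.0 * (1.0 - x[0]) - 400.0 * x[0] * (x[1] - x[0] ** 2),
                     200.0 * (x[1] - x[0] ** 2)])

def rosenbrock_hess(x):
    return np.array([[2.0 - 400.0 * (x[1] - x[0] ** 2) + 800.0 * x[0] ** 2, -400.0 * x[0]],
                     [-400.0 * x[0], 200.0]])

def damped_newton(f, grad, hess, x0, lam=1e-3, max_iter=500, gtol=1e-10):
    """Solve (H + lam*I) dx = -g; accept the step and shrink lam if the true
    cost drops, otherwise reject it and grow lam (towards gradient descent)."""
    x = np.asarray(x0, dtype=float)
    fx = f(x)
    eye = np.eye(x.size)
    for _ in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < gtol:
            break
        dx = np.linalg.solve(hess(x) + lam * eye, -g)
        f_new = f(x + dx)
        if f_new < fx:                 # model prediction good enough: accept
            x, fx = x + dx, f_new
            lam = max(lam / 10.0, 1e-12)
        else:                          # cost rose (or nan): reject, damp more
            lam *= 10.0
            if lam > 1e16:             # no further progress possible at this precision
                break
    return x

x_min = damped_newton(rosenbrock, rosenbrock_grad, rosenbrock_hess, [-1.2, 1.0])
print(x_min)
```

A trust region method would instead choose $\lambda$ so that $\|\delta x\|$ matches a dynamically adjusted radius; the accept/reject logic is similar.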

Given a descent direction, progress along it is usually assured by a line search method,

of which there are many based on quadratic and cubic 1D cost models. If the suggested

(e.g. Newton) step is δx, line search finds the α that actually minimizes f along the line

x + α δx, rather than simply taking the estimate α = 1.
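As a sketch of a line search built on a quadratic 1D cost model, the function below works with $\phi(\alpha) = f(x + \alpha\,\delta x)$. If the trial $\alpha$ fails a sufficient-decrease (Armijo) test, it jumps to the minimizer of the quadratic through $\phi(0)$, $\phi'(0)$ and $\phi(\alpha)$, safeguarded to shrink $\alpha$ by a factor between 0.1 and 0.5. The Armijo constant and safeguard factors are conventional choices we assume here; production codes typically add cubic interpolation and curvature conditions.

```python
def quadratic_backtracking(phi, phi0, dphi0, alpha=1.0, c1=1e-4, max_tries=30):
    """Backtracking line search on phi(alpha) = f(x + alpha*dx).
    phi0 = phi(0); dphi0 = phi'(0) must be negative (descent direction)."""
    if dphi0 >= 0:
        raise ValueError("dx is not a descent direction")
    for _ in range(max_tries):
        phi_a = phi(alpha)
        if phi_a <= phi0 + c1 * alpha * dphi0:          # sufficient decrease: accept
            return alpha
        curvature = phi_a - phi0 - dphi0 * alpha         # > 0 whenever the test fails
        alpha_q = -dphi0 * alpha * alpha / (2.0 * curvature)  # quadratic-model minimizer
        alpha = min(max(alpha_q, 0.1 * alpha), 0.5 * alpha)   # safeguard the shrink factor
    return alpha

# Example: f(x) = x^2 at x = 1 with an overshooting suggested step dx = -3.
x, dx = 1.0, -3.0

def phi(a):
    return (x + a * dx) ** 2

alpha = quadratic_backtracking(phi, phi(0.0), dphi0=2.0 * x * dx)
print(alpha)   # the exact line minimizer here is alpha = 1/3
```

Since $f$ is itself quadratic along this line, one interpolation step lands on the exact minimizer; for general $f$ it takes a few.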

There is no space for further details on step control here (again, see [29, 93, 42]). However note that poor step control can make a huge difference in reliability and convergence

rates, especially for ill-conditioned problems. Unless you are familiar with these issues, it

is advisable to use professionally designed methods.

4.3

Gauss-Newton and Least Squares

Consider the nonlinear weighted SSE cost model $f(x) \equiv \tfrac12 \Delta z(x)^\top W\, \Delta z(x)$ (§3.2) with prediction error $\Delta z(x) = \underline{z} - z(x)$ and weight matrix $W$. Differentiation gives the gradient and Hessian in terms of the Jacobian or design matrix of the predictive model, $J \equiv \frac{dz}{dx}$:

$$ g \equiv \frac{df}{dx} = -J^\top W\, \Delta z, \qquad H \equiv \frac{d^2 f}{dx^2} = J^\top W J - \sum_i (W \Delta z)_i\, \frac{d^2 z_i}{dx^2} \qquad (8) $$

These formulae could be used directly in a damped Newton method, but the $\frac{d^2 z_i}{dx^2}$ term in $H$ is likely to be small in comparison to the corresponding components of $J^\top W J$ if either: (i) the prediction error $\Delta z(x)$ is small; or (ii) the model is nearly linear, $\frac{d^2 z_i}{dx^2} \approx 0$. Dropping the second term gives the Gauss-Newton approximation to the least squares Hessian, $H \approx J^\top W J$. With this approximation, the Newton step prediction equations become the Gauss-Newton or normal equations:

$$ (J^\top W J)\, \delta x = J^\top W\, \Delta z \qquad (9) $$

The Gauss-Newton approximation is extremely common in nonlinear least squares, and

practically all current bundle implementations use it. Its main advantage is simplicity: the

second derivatives of the projection model z(x) are complex and troublesome to implement.
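A minimal undamped Gauss-Newton loop might look as follows. It uses the conventions $\Delta z = \underline{z} - z(x)$ and $J = dz/dx$ (Jacobian of the prediction), under which each step solves $(J^\top W J)\,\delta x = J^\top W\,\Delta z$. The exponential toy model $z(t) = a\,e^{bt}$, its noise-free data and the starting point are hypothetical, and no step control is included.

```python
import numpy as np

def gauss_newton(predict, jacobian, z_obs, x0, W=None, max_iter=50, tol=1e-12):
    """Undamped Gauss-Newton for f(x) = 0.5 * dz^T W dz, dz = z_obs - predict(x)."""
    x = np.asarray(x0, dtype=float)
    W = np.eye(len(z_obs)) if W is None else W
    for _ in range(max_iter):
        dz = z_obs - predict(x)                            # prediction error
        J = jacobian(x)                                    # d(prediction)/dx
        dx = np.linalg.solve(J.T @ W @ J, J.T @ W @ dz)    # normal equations
        x = x + dx
        if np.linalg.norm(dx) < tol * (1.0 + np.linalg.norm(x)):
            break
    return x

t = np.linspace(0.0, 4.0, 5)

def predict(x):
    """Hypothetical toy model z(t) = a * exp(b * t), with x = (a, b)."""
    return x[0] * np.exp(x[1] * t)

def jacobian(x):
    e = np.exp(x[1] * t)
    return np.column_stack([e, x[0] * t * e])              # columns: dz/da, dz/db

z_obs = predict(np.array([2.0, -0.5]))                     # noise-free synthetic data
x_hat = gauss_newton(predict, jacobian, z_obs, x0=[1.8, -0.4])
print(x_hat)
```

Only first derivatives of the model are needed, which is the simplicity argument made above; with zero residuals, as here, the dropped second-order term vanishes at the solution and convergence is fast.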


In fact, the normal equations are just one of many methods of solving the weighted linear least squares problem⁵ $\min_{\delta x} \tfrac12 (J\,\delta x - \Delta z)^\top W\, (J\,\delta x - \Delta z)$. Another notable method is that based on QR decomposition (§B.2, [11, 44]), which is up to a factor of two slower than the normal equations, but much less sensitive to ill-conditioning in $J$⁶.
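The difference in conditioning can be illustrated by solving the same least squares problem via the normal equations and via QR. The sketch assumes standard form ($W = 1$) and builds a synthetic $J$ with condition number $10^6$ from random orthogonal factors; `numpy.linalg.qr` in its default reduced mode returns $J = QR$ with $R$ upper triangular.

```python
import numpy as np

def solve_normal_equations(J, dz):
    """Solve (J^T J) dx = J^T dz; conditioning is that of J squared."""
    return np.linalg.solve(J.T @ J, J.T @ dz)

def solve_qr(J, dz):
    """Solve R dx = Q^T dz from the reduced QR factorization J = Q R."""
    Q, R = np.linalg.qr(J)
    return np.linalg.solve(R, Q.T @ dz)

rng = np.random.default_rng(0)
m, n = 40, 6
U, _ = np.linalg.qr(rng.standard_normal((m, n)))     # orthonormal columns
V, _ = np.linalg.qr(rng.standard_normal((n, n)))     # orthogonal matrix
s = np.logspace(0, -6, n)                            # singular values: condition number 1e6
J = U @ np.diag(s) @ V.T
dx_true = np.ones(n)
dz = J @ dx_true                                     # consistent right-hand side

err_ne = np.linalg.norm(solve_normal_equations(J, dz) - dx_true)
err_qr = np.linalg.norm(solve_qr(J, dz) - dx_true)
print(f"cond(J) = {np.linalg.cond(J):.1e}, normal equations error {err_ne:.1e}, "
      f"QR error {err_qr:.1e}")
```

With machine precision near $10^{-16}$, the errors should come out roughly in line with the $O(C\epsilon)$ versus $O(C^2\epsilon)$ estimates of the footnote, though exact values depend on the random factors.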

Whichever solution method is used, the main disadvantage of the Gauss-Newton approximation is that when the discarded terms are not negligible, the convergence rate is

greatly reduced (§7.2). In our experience, such reductions are indeed common in highly

nonlinear problems with (at the current step) large residuals. For example, near a saddle

point the Gauss-Newton approximation is never accurate, as its predicted Hessian is always at least positive semidefinite. However, for well-parametrized (i.e. locally near linear,

§2.2) bundle problems under an outlier-free least squares cost model evaluated near the cost

minimum, the Gauss-Newton approximation is usually very accurate. Feature extraction

errors and hence $\Delta z$ and $W^{-1}$ have characteristic scales of at most a few pixels. In contrast,

the nonlinearities of z(x) are caused by nonlinear 3D feature-camera geometry (perspective effects) and nonlinear image projection (lens distortion). For typical geometries and

lenses, neither effect varies significantly on a scale of a few pixels. So the nonlinear corrections are usually small compared to the leading order linear terms, and bundle adjustment

behaves as a near-linear small residual problem.

However note that this does not extend to robust cost models. Robustification works

by introducing strong nonlinearity into the cost function at the scale of typical feature

reprojection errors. For accurate step prediction, the optimization routine must take account

of this. For radial cost functions (§3.3), a reasonable compromise is to take account of

the exact second order derivatives of the robustifiers ρi (·), while retaining only the first

order Gauss-Newton approximation for the predicted observations $z_i(x)$. If $\rho'_i$ and $\rho''_i$ are respectively the first and second derivatives of $\rho_i$ at the current evaluation point, we have a robustified Gauss-Newton approximation:

$$ g_i = -\rho'_i\, J_i^\top W_i\, \Delta z_i, \qquad H_i \approx J_i^\top \left( \rho'_i\, W_i + 2\rho''_i\, (W_i \Delta z_i)(W_i \Delta z_i)^\top \right) J_i \qquad (10) $$

⁵ Here, the dependence of $J$ on $x$ is ignored, which amounts to the same thing as ignoring the $\frac{d^2 z_i}{dx^2}$ term in $H$.

⁶ The QR method gives the solution to a relative error of about $O(C\epsilon)$, as compared to $O(C^2\epsilon)$ for the normal equations, where $C$ is the condition number (the ratio of the largest to the smallest singular value) of $J$, and $\epsilon$ is the machine precision (about $10^{-16}$ for double precision floating point).

⁷ The useful robustifiers $\rho_i$ are sublinear, with $\rho'_i < 1$ and $\rho''_i < 0$ in the outlier region.

⁸ Reweighting is also sometimes used in vision to handle projective homogeneous scale factors rather than error weighting. E.g., suppose that image points $(u/w, v/w)$ are generated by a homogeneous projection equation $(u, v, w)^\top = P\,(X, Y, Z, 1)^\top$, where $P$ is the 3 × 4 homogeneous image projection matrix. A scale factor reweighting scheme might take derivatives w.r.t. $u, v$ while treating the inverse weight $w$ as a constant within each iteration. Minimizing the resulting globally bilinear linear least squares error model over $P$ and $(X, Y, Z)$ does not give the true cost minimum: it zeros the gradient-ignoring-$w$-variations, not the true cost gradient. Such schemes should not be used for precise work as the bias can be substantial, especially for wide-angle lenses and close geometries.

So robustification has two effects: (i) it down-weights the entire observation (both $g_i$ and $H_i$) by $\rho'_i$; and (ii) it makes a rank-one reduction⁷ of the curvature $H_i$ in the radial ($\Delta z_i$) direction, to account for the way in which the weight changes with the residual. There are reweighting-based optimization methods that include only the first effect. They still find the true cost minimum $g = 0$ as the $g_i$ are evaluated exactly⁸, but convergence may be slowed owing to inaccuracy of $H$, especially for the mainly radial deviations produced

by non-robust initializers containing outliers. Hi has a direction of negative curvature if

ρi zi Wi zi < − 12 ρi , but if not we can even reduce the robustified Gauss-Newton

model to a local unweighted SSE one for which linear least squares methods can be used.

For simplicity suppose that Wi has already reduced to 1 by premultiplying zi and Ji by Li

where Li Li = Wi . Then minimizing the effective squared error 12 δzi − Ji δx2 gives

the correct second order robust state update, where α ≡ RootOf( 12 α2 −α−ρi /ρi zi 2 )

and:

ρi

zi zi

Ji

δzi ≡

Ji ≡ ρi 1 − α

(11)

zi (x)

1−α

zi 2

In practice, if ρi zi 2 − 12 ρi , we can use the same formulae but limit α ≤ 1 − for

some small . However, the full curvature correction is not applied in this case.
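Both effects of robustification, and the square-root form of eq. (11), can be checked numerically. The sketch below assumes a hypothetical Cauchy-type robustifier $\rho(s) = \log(1+s)$ on the squared residual $s = \Delta z_i^\top W_i \Delta z_i$ (the text does not prescribe any particular $\rho_i$), a single observation that is already whitened ($W_i = 1$), and made-up values for $J_i$ and $\Delta z_i$. It forms $g_i$ and $H_i$ from eq. (10), builds $\delta z_i$ and $\tilde J_i$ from eq. (11), and confirms that $\tilde J_i^\top \tilde J_i = H_i$ and $\tilde J_i^\top \delta z_i = g_i$, so the unweighted least squares subproblem carries the same second-order information.

```python
import numpy as np

# Hypothetical robustifier (Cauchy/Lorentzian, unit scale): rho(s) = log(1 + s),
# where s = dz^T W dz is the squared residual. Chosen for illustration only.
def rho_derivs(s):
    d1 = 1.0 / (1.0 + s)           # rho'(s): < 1 for s > 0 (down-weighting)
    d2 = -1.0 / (1.0 + s) ** 2     # rho''(s): < 0 (sublinear)
    return d1, d2

def robust_gauss_newton(J, W, dz):
    """Per-observation gradient g_i and approximate Hessian H_i of eq. (10)."""
    d1, d2 = rho_derivs(dz @ W @ dz)
    Wdz = W @ dz
    g = d1 * (J.T @ Wdz)
    H = J.T @ (d1 * W + 2.0 * d2 * np.outer(Wdz, Wdz)) @ J
    return g, H

def effective_lsq(J, dz):
    """Effective residual delta_z_i and Jacobian J~_i of eq. (11), assuming W_i = 1.
    Requires rho'' ||dz||^2 >= -rho'/2, i.e. no negative curvature along dz."""
    s = dz @ dz
    d1, d2 = rho_derivs(s)
    # Smaller root of alpha^2/2 - alpha - (rho''/rho') s = 0, so that alpha <= 1.
    alpha = 1.0 - np.sqrt(1.0 + 2.0 * (d2 / d1) * s)
    P = np.outer(dz, dz) / s                      # projector onto the radial direction
    dz_eff = np.sqrt(d1) / (1.0 - alpha) * dz
    J_eff = np.sqrt(d1) * (np.eye(len(dz)) - alpha * P) @ J
    return dz_eff, J_eff

# Made-up single observation: 2D residual, 3 parameters.
rng = np.random.default_rng(0)
J = rng.standard_normal((2, 3))
dz = np.array([0.3, -0.4])                        # s = 0.25: positive radial curvature here
g, H = robust_gauss_newton(J, np.eye(2), dz)
dz_eff, J_eff = effective_lsq(J, dz)

print(np.allclose(J_eff.T @ J_eff, H))            # True: same curvature
print(np.allclose(J_eff.T @ dz_eff, g))           # True: same gradient
```

For this particular $\rho$, the square root becomes imaginary once $s > 1$; that is the negative-curvature case, in which the text recommends clamping $\alpha \le 1 - \epsilon$ instead.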

4.4 Constrained Problems

More generally, we may want to minimize a function $f(x)$ subject to a set of constraints $c(x) = 0$ on $x$. These might be scene constraints, internal consistency constraints on the parametrization (§2.2), or constraints arising from an implicit observation model (§3.5). Given an initial estimate $x$ of the solution, we try to improve this by optimizing the quadratic local model for $f$ subject to a linear local model of the constraints $c$. This linearly constrained quadratic problem has an exact solution in linear algebra. Let $g$, $H$ be the gradient and Hessian of $f$ as before, and let the first-order expansion of the constraints be $c(x+\delta x) \approx c(x) + C\,\delta x$, where $C \equiv \frac{dc}{dx}$. Introduce a vector of Lagrange multipliers $\lambda$ for $c$. We seek the $x+\delta x$ that optimizes $f + c^\top\lambda$ subject to $c = 0$, i.e. $0 = \frac{d}{dx}(f + c^\top\lambda)(x+\delta x) \approx g + H\,\delta x + C^\top\lambda$ and $0 = c(x+\delta x) \approx c(x) + C\,\delta x$. Combining these gives the Sequential Quadratic Programming (SQP) step:

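$$
\begin{pmatrix} H & C^\top \\ C & 0 \end{pmatrix}
\begin{pmatrix} \delta x \\ \lambda \end{pmatrix}
= -\begin{pmatrix} g \\ c \end{pmatrix},
\qquad
f(x+\delta x) \approx f(x) - \frac{1}{2}
\begin{pmatrix} g \\ c \end{pmatrix}^{\!\top}
\begin{pmatrix} H & C^\top \\ C & 0 \end{pmatrix}^{-1}
\begin{pmatrix} g \\ c \end{pmatrix}
\tag{12}
$$

$$
\begin{pmatrix} H & C^\top \\ C & 0 \end{pmatrix}^{-1}
= \begin{pmatrix} H^{-1} - H^{-1} C^\top D^{-1} C H^{-1} & H^{-1} C^\top D^{-1} \\ D^{-1} C H^{-1} & -D^{-1} \end{pmatrix},
\qquad D \equiv C H^{-1} C^\top
\tag{13}
$$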

At the optimum, $\delta x$ and $c$ vanish, but $C^\top\lambda = -g$, which is generally non-zero.
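In code, the SQP step is normally obtained by solving the symmetric (KKT) system of eq. (12) directly, rather than by forming the inverse of eq. (13). The sketch below does this with a dense NumPy solve on small made-up data; a real bundle implementation would exploit sparsity. The block-inverse formula of eq. (13) is evaluated with explicit inverses only as a cross-check.

```python
import numpy as np

def sqp_step(H, g, C, c):
    """Solve [[H, C^T], [C, 0]] [dx; lam] = -[g; c]  (eq. 12)."""
    n, m = H.shape[0], C.shape[0]
    K = np.block([[H, C.T], [C, np.zeros((m, m))]])
    sol = np.linalg.solve(K, -np.concatenate([g, c]))
    return sol[:n], sol[n:]

# Made-up problem: 5 parameters, 2 linearized constraints.
rng = np.random.default_rng(1)
A = rng.standard_normal((5, 5))
H = A @ A.T + 5.0 * np.eye(5)          # symmetric positive definite
g = rng.standard_normal(5)
C = rng.standard_normal((2, 5))
c = rng.standard_normal(2)

dx, lam = sqp_step(H, g, C, c)
print(np.allclose(C @ dx + c, 0.0))              # True: linearized constraints hold
print(np.allclose(H @ dx + g + C.T @ lam, 0.0))  # True: stationarity of f + c^T lambda

# Cross-check with the block inverse of eq. (13); explicit inverses for verification only.
Hi = np.linalg.inv(H)
Di = np.linalg.inv(C @ Hi @ C.T)
dx13 = -((Hi - Hi @ C.T @ Di @ C @ Hi) @ g + Hi @ C.T @ Di @ c)
print(np.allclose(dx, dx13))                     # True

# Change of the quadratic model: equals -1/2 [g;c]^T K^{-1} [g;c] from eq. (12),
# because K^{-1} [g; c] = -[dx; lam].
df = g @ dx + 0.5 * dx @ H @ dx
print(np.isclose(df, 0.5 * (g @ dx + c @ lam)))  # True
```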

An alternative constrained approach uses the linearized constraints to eliminate some of the variables, then optimizes over the rest. Suppose that we can order the variables to give partitions $\delta x = (\delta x_1^\top\ \delta x_2^\top)^\top$ and $C = (C_1\ C_2)$, where $C_1$ is square and invertible. Then using $C_1\,\delta x_1 + C_2\,\delta x_2 = C\,\delta x = -c$, we can solve for $\delta x_1$ in terms of $\delta x_2$ and $c$: $\delta x_1 = -C_1^{-1}(C_2\,\delta x_2 + c)$. Substituting this into the quadratic cost model has the effect of eliminating $\delta x_1$, leaving a smaller unconstrained reduced problem $\bar{H}_{22}\,\delta x_2 = -\bar{g}_2$, where:

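$$
\bar{H}_{22} \equiv H_{22} - H_{21} C_1^{-1} C_2 - C_2^\top C_1^{-\top} H_{12} + C_2^\top C_1^{-\top} H_{11} C_1^{-1} C_2
\tag{14}
$$

$$
\bar{g}_2 \equiv g_2 - C_2^\top C_1^{-\top} g_1 - \left( H_{21} - C_2^\top C_1^{-\top} H_{11} \right) C_1^{-1} c
\tag{15}
$$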

(These matrices can be evaluated efficiently using simple matrix factorization schemes [11].) This method is stable provided that the chosen $C_1$ is well-conditioned. It works well for dense problems, but is not always suitable for sparse ones, because if $C$ is dense, the reduced Hessian $\bar{H}_{22}$ becomes dense too.
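A dense sketch of the elimination method of eqs. (14) and (15), assuming that the first $m$ variables (with $m$ the number of constraints) give a well-conditioned $C_1$. In the spirit of the remark above, it never forms $C_1^{-1}$: `np.linalg.solve` (an LU factorization) computes $C_1^{-1}C_2$ and $C_1^{-1}c$ directly. On generic made-up data the result should coincide with the SQP step, since both minimize the same quadratic model subject to the same linearized constraints.

```python
import numpy as np

def eliminate_and_solve(H, g, C, c):
    """Reduced-problem step of eqs. (14)-(15), eliminating dx1 via C1 = C[:, :m]."""
    m = C.shape[0]
    C1, C2 = C[:, :m], C[:, m:]
    H11, H12, H21, H22 = H[:m, :m], H[:m, m:], H[m:, :m], H[m:, m:]
    g1, g2 = g[:m], g[m:]
    A = np.linalg.solve(C1, C2)   # C1^{-1} C2, so A.T = C2^T C1^{-T}
    b = np.linalg.solve(C1, c)    # C1^{-1} c
    H22_bar = H22 - H21 @ A - A.T @ H12 + A.T @ H11 @ A     # eq. (14)
    g2_bar = g2 - A.T @ g1 - (H21 - A.T @ H11) @ b           # eq. (15)
    dx2 = np.linalg.solve(H22_bar, -g2_bar)
    dx1 = -(A @ dx2 + b)          # back-substitute dx1 = -C1^{-1}(C2 dx2 + c)
    return np.concatenate([dx1, dx2])

# Made-up data: 6 parameters, 2 constraints.
rng = np.random.default_rng(2)
B = rng.standard_normal((6, 6))
H = B @ B.T + 6.0 * np.eye(6)
g = rng.standard_normal(6)
C = rng.standard_normal((2, 6))
c = rng.standard_normal(2)

dx = eliminate_and_solve(H, g, C, c)

# Compare with the KKT (SQP) solution of the same linearly constrained quadratic model.
K = np.block([[H, C.T], [C, np.zeros((2, 2))]])
dx_kkt = np.linalg.solve(K, -np.concatenate([g, c]))[:6]
print(np.allclose(dx, dx_kkt))   # True
```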

For least squares cost models, constraints can also be handled within the linear least

squares framework, e.g. see [11].

4.5 General Implementation Issues

Before going into details, we mention a few points of good numerical practice for large-scale optimization problems such as bundle adjustment:

Exploit the problem structure: Large-scale problems are almost always highly structured

and bundle adjustment is no exception. In professional cartography and photogrammetric

site-modelling, bundle problems with thousands of images and many tens of thousands

of features are regularly solved. Such problems would simply be infeasible without a

thorough exploitation of the natural structure and sparsity of the bundle problem. We will

have much to say about sparsity below.

Use factorization effectively: Many of the above formulae contain matrix inverses. This is

a convenient short-hand for theoretical calculations, but numerically, matrix inversion is

almost never used. Instead, the matrix is decomposed into its Cholesky, LU, QR, etc.,

factors and these are used directly, e.g. linear systems are solved using forwards and

backwards substitution. This is much faster and numerically more accurate than explicit

use of the inverse, particularly for sparse matrices such as the bundle Hessian, whose

factors are still quite sparse, but whose inverse is always dense. Explicit inversion is

required only occasionally, e.g. for covariance estimates, and even then only a few of

the entries may be needed (e.g. diagonal blocks of the covariance). Factorization is the

heart of the optimization iteration, where most of the time is spent and where most can be

done to improve efficiency (by exploiting sparsity, symmetry and other problem structure)

and numerical stability (by pivoting and scaling). Similarly, certain matrices (subspace

projectors, Householder matrices) have (diagonal)+(low rank) forms which should not be

explicitly evaluated as they can be applied more efficiently in pieces.
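As a small illustration of this advice, the sketch below solves $H\,x = b$ through a Cholesky factorization $H = L L^\top$ followed by forward and backward substitution, without ever forming $H^{-1}$. The tridiagonal $H$ is a made-up stand-in for a sparse Hessian: its factor $L$ stays banded, while $H^{-1}$ is completely dense. The explicit loops are for clarity; production code would call an optimized triangular or sparse Cholesky solver instead.

```python
import numpy as np

def cholesky_solve(H, b):
    """Solve H x = b for symmetric positive definite H via H = L L^T."""
    L = np.linalg.cholesky(H)                 # lower-triangular factor
    n = len(b)
    y = np.zeros(n)
    for i in range(n):                        # forward substitution: L y = b
        y[i] = (b[i] - L[i, :i] @ y[:i]) / L[i, i]
    x = np.zeros(n)
    for i in reversed(range(n)):              # backward substitution: L^T x = y
        x[i] = (y[i] - L[i + 1:, i] @ x[i + 1:]) / L[i, i]
    return x, L

# Made-up sparse (tridiagonal) SPD matrix standing in for a structured Hessian.
n = 6
H = 4.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
b = np.arange(1.0, n + 1)

x, L = cholesky_solve(H, b)
print(np.allclose(H @ x, b))                                # True
print(np.count_nonzero(np.abs(L) > 1e-12))                  # 11: factor stays bidiagonal
print(np.count_nonzero(np.abs(np.linalg.inv(H)) > 1e-12))   # 36: inverse is fully dense
```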

Use stable local parametrizations: As discussed in §2.2, the parametrization used for

step prediction need not coincide with the global one used to store the state estimate. It is

more important that it should be finite, uniform and locally as nearly linear as possible.

If the global parametrization is in some way complex, highly nonlinear, or potentially

ill-conditioned, it is usually preferable to use a stable local parametrization based on

perturbations of the current state for step prediction.
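Rotations are a typical case. One common choice (an assumption here; this section does not prescribe it) stores the global estimate as a $3\times 3$ rotation matrix $R$ and predicts steps in a local 3-vector $\delta\omega$ about the current estimate, applying the update $R \leftarrow \exp([\delta\omega]_\times)\,R$ via Rodrigues' formula. The local parameters are zero at the current estimate and nearly linear for small steps, and the updated $R$ stays a valid rotation. The function names below are illustrative, not from any particular library.

```python
import numpy as np

def skew(w):
    """Cross-product matrix [w]_x, so that skew(w) @ v == np.cross(w, v)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def rodrigues(w):
    """Rotation matrix exp([w]_x) for a rotation vector w (Rodrigues' formula)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3) + skew(w)            # first-order form for tiny angles
    K = skew(w / theta)
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def apply_local_step(R, dw):
    """Hypothetical update rule: perturb the stored rotation R by a local step dw."""
    return rodrigues(np.asarray(dw, dtype=float)) @ R

R = rodrigues(np.array([0.1, 0.2, 0.3]))      # current global estimate
R_new = apply_local_step(R, [0.01, -0.02, 0.005])
print(np.allclose(R_new.T @ R_new, np.eye(3)), np.isclose(np.linalg.det(R_new), 1.0))  # True True
```

Left-multiplying by the increment is one convention; right-multiplication ($R \leftarrow R\,\exp([\delta\omega]_\times)$) works equally well as long as the Jacobians are computed consistently with it.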

Scaling and preconditioning: Another parametrization issue that has a profound and too rarely recognized influence on numerical performance is variable scaling (the choice of

‘units’ or reference scale to use for each parameter), and more generally preconditioning

(the choice of which linear combinations of parameters to use). These represent the linear

part of the general parametrization problem. The performance of gradient descent and most

other linearly convergent optimization methods is critically dependent on preconditioning,

to the extent that for large problems, they are seldom practically useful without it.
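The effect of variable scaling can be seen with a diagonal change of units $x \to T x$, under which $g \to T^{-\top} g$ and $H \to T^{-\top} H T^{-1}$. The sketch below, on made-up data, checks that the Newton step $-H^{-1}g$ transforms consistently ($\delta x \to T\,\delta x$), whereas the raw gradient-descent direction $-g$ transforms as $\delta x \to T^{-\top}\delta x$ and therefore depends on the arbitrary choice of units. This is one way to see why linearly convergent gradient methods need good preconditioning.

```python
import numpy as np

# Made-up SPD Hessian and gradient in the original coordinates x.
rng = np.random.default_rng(3)
A = rng.standard_normal((4, 4))
H = A @ A.T + np.eye(4)
g = np.array([1.0, -2.0, 0.5, 3.0])

# Change of units x -> T x (here a diagonal rescaling of parameters 0 and 2).
T = np.diag([10.0, 1.0, 0.1, 1.0])
Tinv = np.linalg.inv(T)
g_new = Tinv.T @ g                  # g -> T^{-T} g
H_new = Tinv.T @ H @ Tinv           # H -> T^{-T} H T^{-1}

# Newton step: the new-coordinate step is T times the old one (scale invariant).
newton_old = -np.linalg.solve(H, g)
newton_new = -np.linalg.solve(H_new, g_new)
print(np.allclose(newton_new, T @ newton_old))    # True

# Gradient-descent direction: not T times the old one, so it depends on the units.
grad_old, grad_new = -g, -g_new
print(np.allclose(grad_new, T @ grad_old))        # False
```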

One of the great advantages of the Newton-like methods is their theoretical independence of such scaling issues9 . But even for these, scaling makes itself felt indirectly in

9

Under a linear change of coordinates x → Tx we have g → T− g and H → T− H T−1, so the

Newton step δx = −H−1 g varies correctly as δx → T δx, whereas the gradient one δx ∼ g

318

B. Triggs et al.

Network

graph

A

1

C

2

Parameter

connection

graph

D

B

4

K1

2

A

3

3

12 34

K

K

12

AB C DE

A1

C3

D2

D3

D4

E3

E4

KK

12

C

B2

C1

K2

B

B1

B4

12 34

4

A

A2

J =

E

C

E

AB C DE

D

B

1

D

H =

E

1

2

3

4

K1

K2

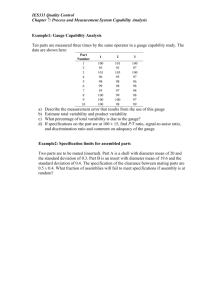

Fig. 3. The network graph, parameter connection graph, Jacobian structure and Hessian structure for

a toy bundle problem with five 3D features A–E, four images 1–4 and two camera calibrations K1

(shared by images 1,2) and K2 (shared by images 3,4). Feature A is seen in images 1,2; B in 1,2,4;

C in 1,3; D in 2–4; and E in 3,4.

several ways: (i) Step control strategies including convergence tests, maximum step size

limitations, and damping strategies (trust region, Levenberg-Marquardt) are usually all

based on some implicit norm δx2 , and hence change under linear transformations of x

(e.g., damping makes the step more like the non-invariant gradient descent one). (ii) Pivoting strategies for factoring H are highly dependent on variable scaling, as they choose

‘large’ elements on which to pivot. Here, ‘large’ should mean ‘in which little numerical

cancellation has occurred’ but with uneven scaling it becomes ‘with the largest scale’. (iii)

The choice of gauge (datum, §9) may depend on variable scaling, and this can significantly

influence convergence [82, 81].

For all of these reasons, it is important to choose variable scalings that relate meaningfully to the problem structure. This involves a judicious comparison of the relative

influence of, e.g., a unit of error on a nearby point, a unit of error on a very distant one,

a camera rotation error, a radial distortion error, etc. For this, it is advisable to use an

‘ideal’ Hessian or weight matrix rather than the observed one, otherwise the scaling might

break down if the Hessian happens to become ill-conditioned or non-positive during a few

iterations before settling down.

5

Network Structure

Adjustment networks have a rich structure, illustrated in figure 3 for a toy bundle problem.

The free parameters subdivide naturally into blocks corresponding to: 3D feature coordinates A, . . . , E; camera poses and unshared (single image) calibration parameters 1,

. . . , 4; and calibration parameters shared across several images K1 , K2 . Parameter blocks

varies incorrectly as δx → T− δx. The Newton and gradient descent steps agree only when

T T = H.

Bundle Adjustment — A Modern Synthesis

319

interact only via their joint influence on image features and other observations, i.e. via their

joint appearance in cost function contributions. The abstract structure of the measurement

network can be characterized graphically by the network graph (top left), which shows

which features are seen in which images, and the parameter connection graph (top right)

which details the sparse structure by showing which parameter blocks have direct interactions. Blocks are linked if and only if they jointly influence at least one observation. The

cost function Jacobian (bottom left) and Hessian (bottom right) reflect this sparse structure.
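The block sparsity patterns of figure 3 follow mechanically from the visibility lists in its caption. The sketch below assumes that each image observation depends on exactly three parameter blocks (its 3D feature, its image's pose block, and that image's shared calibration) and ignores block sizes, marking each block of $J$ and $H$ with a single 0/1 entry. Two blocks are linked in the parameter connection graph exactly when the corresponding block of $J^\top J$ is non-zero.

```python
import numpy as np

# Toy problem of figure 3: images in which each 3D feature is seen.
visibility = {"A": [1, 2], "B": [1, 2, 4], "C": [1, 3], "D": [2, 3, 4], "E": [3, 4]}
calib_of = {1: "K1", 2: "K1", 3: "K2", 4: "K2"}   # K1 shared by images 1,2; K2 by 3,4

blocks = ["A", "B", "C", "D", "E", 1, 2, 3, 4, "K1", "K2"]
col = {b: k for k, b in enumerate(blocks)}

# One Jacobian block-row per (feature, image) observation.
obs = [(f, im) for f, ims in visibility.items() for im in ims]
J = np.zeros((len(obs), len(blocks)), dtype=int)
for r, (f, im) in enumerate(obs):
    for b in (f, im, calib_of[im]):
        J[r, col[b]] = 1

# Blocks interact iff they appear together in at least one observation.
H_pattern = (J.T @ J) > 0

def linked(a, b):
    return bool(H_pattern[col[a], col[b]])

edges = [(a, b) for i, a in enumerate(blocks) for b in blocks[i + 1:] if linked(a, b)]
print(len(obs), len(edges))                                # 12 24
print(linked("A", "B"), linked(1, 2), linked("D", "K2"))   # False False True
```

The feature blocks A–E are never linked to one another, so the feature-feature part of $H$ is block diagonal, a property that sparse bundle solvers commonly exploit.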