Data Model of Echocardiogram Video for Content Based Retrieval


Aditi Roy¹, V. Pallavi², Avishek Saha², Shamik Sural¹, J. Mukherjee², and A.K. Majumdar²

¹School of IT, ²Department of Computer Science and Engineering

IIT, Kharagpur, India

E-mail: {aditi.roy, shamik}@sit.iitkgp.ernet.in, {pallavi, avishek, jay, akmj}@cse.iitkgp.ernet.in

ABSTRACT

In this work we propose a new approach for modeling and management of echocardiogram video data. We introduce a hierarchical state-based model for representing an echo video using the objects present and their dynamic behaviors. First, the echo video is segmented based on ‘view’, which describes the presence of objects. Object behavior is described by states and state transitions using a state transition diagram. This diagram is used to partition each view segment into states. We apply a novel technique for detecting the states using Sweep M-mode. For efficient retrieval of information, we propose indexes based on views and states of objects. The proposed model thus helps to store information about similar types of video data in a single database schema and supports content-based retrieval of video data.

KEY WORDS

Echocardiogram video, View, State, Sweep M-mode,

Hierarchical state-based modeling.

1. Introduction

Echocardiography is a popular sonic method to analyze

cardiac structures and function [1]. In the current paper,

we address the issue of analyzing the spatio-temporal

structure of echo videos for the purpose of temporal

segmentation, browsing and efficient content based

retrieval.

In the recent past, advances have been made in content-based retrieval of medical images. Research on echo video summarization, temporal segmentation for interpretation, storage and content-based retrieval of echo video based on views has been reported [2][3]. However, this method depends heavily on the available domain knowledge, such as the spatial structure of the echo video frames in terms of the ‘Region of Interest’ (ROI). On the other hand, an approach towards semantic content-based retrieval of video data using an object state transition model has been put forward in [4][5]. In that work, echo videos are segmented based on states of the heart object. Thus, view-based modeling and state-based modeling of echo video have so far been done separately. To the best of our knowledge, hierarchical state-based modeling that combines views and states, so that information about similar types of video data can be stored in a single database schema for efficient content-based retrieval, has not been addressed.

In our work, we do hierarchical segmentation of echo

video based on views and states of the heart object by

exploiting specific structures of the video. The advantage

of our approach is that it allows storage and indexing of

the echo video at different levels of abstraction based on

semantic features of video objects.

The rest of the paper is organized as follows. In Section 2,

we explain modeling of echo video based on views and

states. In Section 3, we discuss the proposed system

architecture, and we conclude in Section 4 of the paper.

2. Modeling Echocardiogram Video based on

Views and States

Modeling of video data is important for defining the object schema used in the database. In the case of echo video, our aim is to retrieve the dynamic behavior of the video objects.

To achieve this, a hierarchical state-based model has been

introduced. Due to the temporal nature of video data, the

visual information must be structured and broken down

into meaningful components.

2.1 Video Segmentation based on View

As mentioned above, the first step of video processing for

hierarchical state-based modeling is segmentation of the

input video into shots. A shot can be defined as a

sequence of interrelated frames captured from the same

camera location that represents a continuous action in

time and space. In echo video segmentation, the traditional definition of a shot is not applicable. Echocardiogram video is obtained by scanning the cardiac structure with an ultrasound device. Hence, depending on transducer placement, different views of echo video are obtained. In this paper the views considered are: Parasternal Long Axis View, Parasternal Short Axis View, Apical View, Color Doppler, and One-dimensional image.

Echocardiogram View Detection and Classification.

We explore two methods for detecting shot boundaries, namely, histogram-based comparison and edge change ratio. We combine them using majority voting to detect shots in echo video and obtain 98% accuracy [6].
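The two cues named above can be illustrated with a minimal Python sketch. It is not the implementation reported in [6]: the function names, the bin count, the thresholds and the gradient-based edge map are all assumptions chosen for illustration, and with only two voters the majority rule reduces to requiring that both cues agree.

```python
import numpy as np

def histogram_difference(prev, curr, bins=64):
    """Normalized L1 distance (0..1) between the grey-level histograms of two frames."""
    h1, _ = np.histogram(prev, bins=bins, range=(0, 256))
    h2, _ = np.histogram(curr, bins=bins, range=(0, 256))
    return np.abs(h1 - h2).sum() / (2.0 * prev.size)

def edge_map(frame, thresh=30.0):
    """Binary edge map from finite-difference gradients (assumed edge detector)."""
    gy, gx = np.gradient(frame.astype(float))
    return np.hypot(gx, gy) > thresh

def edge_change_ratio(prev, curr):
    """Larger of the fractions of edge pixels entering and exiting between two frames."""
    e1, e2 = edge_map(prev), edge_map(curr)
    entering = np.logical_and(e2, ~e1).sum() / max(e2.sum(), 1)
    exiting = np.logical_and(e1, ~e2).sum() / max(e1.sum(), 1)
    return max(entering, exiting)

def detect_boundaries(frames, hist_thresh=0.4, ecr_thresh=0.5):
    """Return indices i where a boundary is declared between frames i-1 and i."""
    boundaries = []
    for i in range(1, len(frames)):
        votes = [
            histogram_difference(frames[i - 1], frames[i]) > hist_thresh,
            edge_change_ratio(frames[i - 1], frames[i]) > ecr_thresh,
        ]
        # Strict majority of the available cues (assumed combination rule).
        if sum(votes) * 2 > len(votes):
            boundaries.append(i)
    return boundaries
```

In practice the thresholds would have to be tuned on annotated echo videos; the values above are placeholders.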

After detecting views, we classify them by considering the signal properties of different views. We use the fact that, for each view, a different set of cardiac chambers is visible. The number of chambers present, their orientation and the presence of heart muscles in each view give different histogram patterns. We identify the views based on these unique histogram patterns. We train a neural network to classify the views using the histogram of each frame. An overall precision of 95.34% [6] is obtained.

2.2 State-based Video Representation

A state transition diagram describes all the states that an object can have, the events or conditions under which an object changes state (transitions) and the activities undertaken during the life of an object (actions). Figure 1 shows the state transition diagram of the heart. In an echo video, the heart is in one of two states, namely, systole and diastole. When the ventricles start expanding, a transition occurs from the systole state to the diastole state. Similarly, a transition occurs from diastole to systole with ventricle contraction.

Fig. 1. State transition diagram of heart

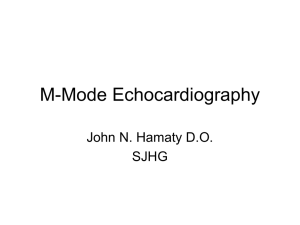
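The two-state diagram can be written down as a small transition table. The following Python sketch is only an illustration; the event names `ventricle_expansion` and `ventricle_contraction` and the helper `next_state` are hypothetical labels, not identifiers from the paper.

```python
from enum import Enum

class HeartState(Enum):
    SYSTOLE = "systole"
    DIASTOLE = "diastole"

# Transition table: (current state, event) -> next state.
# Event names are assumed labels for the transitions in Fig. 1.
TRANSITIONS = {
    (HeartState.SYSTOLE, "ventricle_expansion"): HeartState.DIASTOLE,
    (HeartState.DIASTOLE, "ventricle_contraction"): HeartState.SYSTOLE,
}

def next_state(state, event):
    """Return the state reached on `event`; stay in `state` if no transition applies."""
    return TRANSITIONS.get((state, event), state)
```

For example, `next_state(HeartState.SYSTOLE, "ventricle_expansion")` yields `HeartState.DIASTOLE`, while an event that does not apply to the current state leaves it unchanged.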

Identification of States using Sweep M-mode. In M-mode echocardiography, the signal is obtained from the time sequence of a one-dimensional signal locating anatomic structures from their echoes along a fixed axis of emission. We use a multiple M-mode generation method termed ‘Sweep M-mode’. It is so named because multiple M-modes are generated by scanning the intensity along a straight line while that line is continuously swept, at a specified interval, in a direction normal to a fixed axis. To obtain the sweep M-modes, the user draws a line perpendicular to the direction of left ventricular wall motion (see the vertical line in Figure 2). Scanning the intensity values along straight lines perpendicular to this vertical line creates the sweep M-modes, with the scan line taken as the Y-axis and the frame index of the video as the X-axis. The Sweep M-modes are generated along the horizontal broken straight lines shown in Figure 2. One such M-mode is shown in Figure 3(a).
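As a rough illustration of the sweep, the sketch below assumes the video is available as a NumPy array of shape (frames, height, width) and that the user-drawn line is exactly vertical, so each scan line is an image row. The function name `sweep_m_modes` and its parameters are hypothetical.

```python
import numpy as np

def sweep_m_modes(video, x_range, y_start, y_end, step):
    """Build one M-mode image per horizontal scan line.

    video   : greyscale array of shape (T, H, W)
    x_range : (x0, x1) horizontal extent of each scan line (assumed given by the user)
    y_start, y_end, step : rows swept along the user-drawn vertical line

    Returns a list of (row, m_mode) pairs; each m_mode has shape (x1 - x0, T),
    i.e. position along the scan line on the Y-axis and frame index on the X-axis.
    """
    x0, x1 = x_range
    modes = []
    for y in range(y_start, y_end + 1, step):
        # Intensity along row y in every frame, transposed so that time runs along columns.
        modes.append((y, video[:, y, x0:x1].T))
    return modes
```

A scan line that is not axis-aligned would need interpolation along the line, which this sketch does not attempt.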

Border Extraction. Before border extraction, we remove noise from the M-modes using Gaussian filtering and enhance their edges using the Sobel operator. Then, we extract the border by searching for an optimal path along the time axis. We use a maximum intensity tracking procedure [6] whose performance is improved by using a local window to predict the position of the next border point. Since border tracking is not perfect for all M-modes, owing to inherent noise, discontinuities and the absence of well-defined edges, we need to select only those few that are meaningful. It has been observed that in M-modes where tracking is perfect, the distance between the two cardiac borders never exceeds a threshold value. If the distance exceeds the threshold, the M-mode is identified as mis-tracked. We use this as a heuristic to select the proper M-modes. One such tracked M-mode, corresponding to Figure 3(a), is shown in Figure 3(b).
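The tracking and selection steps might look roughly like the following sketch. It assumes the M-mode has already been smoothed and edge-enhanced, and it simplifies the procedure of [6]: the local window is simply centred on the previous border point rather than on a predicted position. The names `track_border` and `is_well_tracked`, and all parameter values, are assumptions.

```python
import numpy as np

def track_border(edge_img, start_row, half_window=3):
    """Follow the strongest edge response frame by frame.

    edge_img  : edge-strength M-mode of shape (positions, T)
    start_row : border position in the first frame (assumed known)
    Returns an integer array with the tracked row for every frame.
    """
    n_rows, n_frames = edge_img.shape
    path = np.empty(n_frames, dtype=int)
    row = start_row
    for t in range(n_frames):
        # Search only inside a local window around the previous border point.
        lo = max(row - half_window, 0)
        hi = min(row + half_window + 1, n_rows)
        row = lo + int(np.argmax(edge_img[lo:hi, t]))
        path[t] = row
    return path

def is_well_tracked(upper, lower, max_distance):
    """Selection heuristic: keep an M-mode only if the border distance never exceeds the threshold."""
    return bool(np.all(np.abs(lower - upper) <= max_distance))
```

M-modes for which `is_well_tracked` returns `False` would be discarded before state identification.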

State Identification. For extracting the state information

from each M-mode, we first compute the distance

between the two endocardium borders in each frame, as

shown in Figure 3(b). Figure 3(c) shows the variation of

cardiac border distance with respect to time.

We use the distance information to classify the echo video frames into two classes, namely, systole and diastole. The troughs of Figure 3(c) indicate end-systole points and the peaks indicate end-diastole points. During diastole, the left ventricle expands and the cardiac border distance increases, so frames corresponding to a positive slope in the distance graph are classified as diastolic. Similarly, the left ventricle contracts during systole, so frames corresponding to a negative slope are classified as systolic. This completes state detection for an echo video. The state transition graph obtained from Figure 3(c) is shown in Figure 3(d). The individual plots for the selected M-modes have been observed to contain many false state transitions, so we combine them using majority voting to identify the state of each frame. The misclassification error is about 12.36%.
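The slope rule and the voting step can be sketched as follows. This is an illustration under assumptions, not the code behind the reported error rate: the slope is taken as a forward difference, the last frame inherits the label of the frame before it, and ties or zero slopes default to systole.

```python
import numpy as np

DIASTOLE, SYSTOLE = 1, 0

def classify_states(distance):
    """Label each frame from the sign of the slope of the border-distance curve."""
    d = np.asarray(distance, dtype=float)
    rising = np.diff(d) > 0                  # positive slope: ventricle expanding
    rising = np.append(rising, rising[-1])   # last frame copies its predecessor (assumption)
    return rising.astype(int)

def majority_vote(label_sets):
    """Combine per-M-mode labels; a frame is diastolic if more than half of the M-modes say so."""
    votes = np.asarray(label_sets)           # shape (num_m_modes, num_frames)
    return (votes.sum(axis=0) * 2 > votes.shape[0]).astype(int)
```

Each selected M-mode contributes one label sequence from `classify_states`, and `majority_vote` produces the final per-frame state.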

Fig. 2. User drawn straight line and cavity on a chosen frame of video

Fig. 3. (a) M-mode image, (b) Border extracted M-mode image, (c) Variation of cardiac border distance with time, (d) State transition graph

3. System Architecture and Implementation

We have developed a prototype system, which uses the temporal segmentation techniques presented in the previous section for hierarchical state-based indexing of echo video.

3.1 General Architecture of the proposed System

The proposed system has an architecture that can be subdivided into two functional units: the first is for database population and the second for database querying.

The system architecture is summarized in Figure 4. First, the echo videos are split into short segments called views by the view detector, which segments the videos based on the different views mentioned in Section 2.1. After that, the view classifier classifies each view. Then, for each view segment, the state extractor identifies the states. View and state information is stored in a database hierarchically. When a query is put to the system, the query processor module interprets it and retrieves the video segments based on their semantic content. This technique leads to simple and fast retrieval of the desired video segment.

Fig. 4. Architecture of the proposed system

3.2 Schema Specification

In order to retrieve video segments depicting certain views, states and state transitions of objects, view-based and state-based indexing schemes are adopted. Our video database has the following important schema.

EchoVideo = (VideoName, VideoID, Duration, Location, DateOfRecording, PatientName, Age, Sex)

EchoView = (VideoID, EchoViewID, StartFrame, EndFrame, ViewType)

EchoState = (VideoID, EchoViewID, StateID, StartFrame, EndFrame, StateType)

EchoSubState = (VideoID, EchoViewID, StateID, SubStateID, StartFrame, EndFrame, SubStateType)

The EchoVideo relation maintains information about a video and the corresponding patient. For each new video a unique VideoID is generated. The EchoView relation records information about all views identified in each video. The EchoState relation contains information about every state identified in each view segment of a video. Similarly, EchoSubState maintains information about the sub states of each state. Appropriate database tables are created using this schema and populated. The corresponding ER diagram of the echo database is shown in Figure 5.

3.3 Database Population

In the current implementation, this step is performed semi-automatically. The user can segment a video for either view indexing or state-based indexing and populate the database through a graphical interface. After shot detection, shot classification is done for automatic annotation of the shots. The user has the option to annotate the views manually if shots are misclassified, and also to redefine the view boundaries. Once segmentation based on views is completed, state detection is performed to extract state information for a specified view. This part is fully automated. After temporal segmentation, the database tables are updated accordingly. In order to retrieve video segments corresponding to a certain view and state efficiently and quickly, a hierarchical state-based indexing scheme is used.

3.4 Database Querying

A user-friendly interface is provided for ease of specifying a query. To retrieve the video segments describing a state in a specified view, the user selects the state name and view type accordingly. The corresponding SQL queries are then composed. The system uses the EchoState table, where state-based indexing is used, to identify the location of a state in a specified view.

Query on Views: Here, we demonstrate through the following example how view-based information retrieval can be achieved in the proposed video database management system. Figure 6(a) shows the result of a query to retrieve all the video segments corresponding to the Long Axis View from the echocardiogram database of a particular patient. In the query interface shown in Figure 6(a), the retrieved segments are listed in the bottom part of the left panel. The user can play the video segments by selecting them one by one. The retrieved segments are shown with their segment number and the corresponding start and end frame numbers with respect to the original video.

Query on States: Since the main idea of the proposed approach to video modeling is to extract state information of the objects depicted in a video, the content of a video is described by the states of the heart and their transitions. In the case of echocardiogram images, such state information describes the functioning of the heart. Hence, we demonstrate through the following example how state-based information can be retrieved. Figure 6(b) shows the result of a query to retrieve video segments where the heart is in the systole state. The results of the query appear as video segments with their start and end frame numbers along with the video identifier, as before.

Now, we demonstrate how the hierarchical view-state

relationship works. The following query is used to

retrieve segments in which the heart is in diastolic state in

Short Axis View. Figure 6(c) shows the query interface as

it appears after the query has been generated and

executed.
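A hierarchical view-state query of this kind could be composed along the following lines. The sketch uses Python's built-in `sqlite3` module with the EchoView and EchoState attributes listed in the schema; the column types, keys, index names, sample rows and the helper names `build_demo_db` and `find_state_in_view` are assumptions made for illustration, and the actual system's SQL is not given in the paper.

```python
import sqlite3

def build_demo_db():
    """Create an in-memory database with two of the schema's relations and sample rows."""
    con = sqlite3.connect(":memory:")
    con.executescript("""
        CREATE TABLE EchoView (
            VideoID INTEGER, EchoViewID INTEGER,
            StartFrame INTEGER, EndFrame INTEGER, ViewType TEXT,
            PRIMARY KEY (VideoID, EchoViewID));
        CREATE TABLE EchoState (
            VideoID INTEGER, EchoViewID INTEGER, StateID INTEGER,
            StartFrame INTEGER, EndFrame INTEGER, StateType TEXT,
            PRIMARY KEY (VideoID, EchoViewID, StateID),
            FOREIGN KEY (VideoID, EchoViewID)
                REFERENCES EchoView (VideoID, EchoViewID));
        -- Assumed indexes supporting view-based and state-based lookup.
        CREATE INDEX idx_view_type ON EchoView (ViewType);
        CREATE INDEX idx_state_type ON EchoState (StateType);
    """)
    # Made-up sample data: one video with two views, each split into two states.
    con.executemany("INSERT INTO EchoView VALUES (?, ?, ?, ?, ?)", [
        (1, 1, 0, 99, "Parasternal Long Axis"),
        (1, 2, 100, 199, "Parasternal Short Axis"),
    ])
    con.executemany("INSERT INTO EchoState VALUES (?, ?, ?, ?, ?, ?)", [
        (1, 1, 1, 0, 40, "systole"),
        (1, 1, 2, 41, 99, "diastole"),
        (1, 2, 1, 100, 135, "systole"),
        (1, 2, 2, 136, 199, "diastole"),
    ])
    return con

def find_state_in_view(con, view_type, state_type):
    """Return (VideoID, StartFrame, EndFrame) for segments in the given view and state."""
    return con.execute("""
        SELECT s.VideoID, s.StartFrame, s.EndFrame
        FROM EchoState AS s
        JOIN EchoView AS v
          ON v.VideoID = s.VideoID AND v.EchoViewID = s.EchoViewID
        WHERE v.ViewType = ? AND s.StateType = ?
        ORDER BY s.VideoID, s.StartFrame
    """, (view_type, state_type)).fetchall()
```

Calling `find_state_in_view(build_demo_db(), "Parasternal Short Axis", "diastole")` returns the single sample segment spanning frames 136 to 199 of video 1. The join on (VideoID, EchoViewID) is what expresses the hierarchy: a state row is only meaningful within its parent view segment.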

4. Conclusions

In this paper we have proposed a new approach for hierarchical state-based modeling of echo video data by identifying the views and states of objects. First, the view detector detects the view boundaries using histogram-based comparison and edge change ratio. Next, the view classifier classifies each view by considering signal properties of different views. Then, the state extractor extracts state information from each view segment using a new type of M-mode generation method named sweep M-mode. We have used this approach of hierarchical state-based modeling to develop an object relational video database for storage and retrieval of echocardiogram video segments. However, the finer (sub state) segmentation of echocardiogram video has not yet been done. This is part of our future work.

Fig. 5. ER diagram for echo database

Fig. 6. Query result: (a) Long Axis View of heart, (b) Heart is in systolic state, (c) Heart is in diastolic state and in Short Axis View

Acknowledgement

We are grateful to Dr. S. Ramamurthy from AIIMS, New Delhi for providing us the echocardiogram videos and the information, which helped us greatly in our work.

This work is partially supported by the Department of Science and Technology (DST), India, under Research Grant No. SR/S3/EECE/024/2003-SERC-Engg.

References

[1] H. Feigenbaum, Echocardiography (LEA & FEBIGER, 1997).

[2] S. Ebadollahi, S.F. Chang, & H. Wu, Echocardiogram videos: summarization, temporal segmentation and browsing. Proc. IEEE Int’l Conference on Image Processing, (ICIP’02), Vol. 1, September 2002, 613-616.

[3] S. Ebadollahi, S.F. Chang, & H. Wu, Automatic view recognition in echocardiogram videos using parts-based representation. Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, (CVPR’04), Vol. 2, June 2004, II- II-9.

[4] P.K. Singh, & A.K. Majumdar, Semantic content-based retrieval in a video database. Proc. Int’l Workshop on Multimedia Data Mining, (MDM/KDD’01), August 2001, 50-57.

[5] B. Acharya, J. Mukherjee, & A.K. Majumdar, Modeling dynamic objects in databases: a logic based approach. LNCS 2224, Springer Verlag, ER 2001, 449-512.

[6] A. Roy, S. Sural, J. Mukherjee, & A.K. Majumdar, Modeling of Echocardiogram Video Based on Views and States. Indian Conference on Computer Vision, Graphics and Image Processing, (ICVGIP’06). (To be published)