Techniques for Structuring Database Records SALVATORE T. MARCH

advertisement

Techniques for Structuring Database Records

SALVATORE T. MARCH

Department of Management Science, School of Management, University of Minnesota, Minneapolis,

Minnesota 55455

Structuring database records by considering data item usage can yield substantial

efficienciesin the operating cost of database systems. However, since the number of

possible physical record structures for database of practical significanceis enormous, and

their evaluation is extremely complex, determining efficientrecord structures by full

enumeration is generally infeasible.This paper discusses the techniques of mathematical

clustering, iterative grouping refinement, mathematical programming, and hierarchic

aggregation, which can be used to quickly determine efficientrecord structures for large,

shared databases.

Categories and Subject Descriptors: D.4.2 [Operating Systems]: Storage Management-segmentation; D.4.3 [Operating Systems]: File Systems Management--file

organization;D.4.8 [Operating Systems]: Performance--modeling andpredietion; E.2

[Data]; Data Storage Representations; E.2 [Data]; Data Storage Representationsn

composite structures, primitive data items; H.2.1 [Database Management]: Logical

Design--schema and subschema; H.2.2 [Database Management]: Physical Design--

access methods

General Terms: Economics, Performance

Additional Key Words and Phrases: Aggregation, record segmentation, record structures

INTRODUCTION

Computer-based information systems are

critical to the operation and management

of large organizations in both the public

and private sectors. Such systems typically

operate on databases containing billions

of data characters [Jefferson, 1980] and

service user communities with widely varying information needs. Their efficiency

is largely dependent on the database

design.

Unfortunately, selecting an efficient

database design is a difficult task. Many

complex and interrelated factors must be

taken into consideration, such as the logical

structure of the data, the retrieval and update patterns of the user community, and

the operating costs and accessing charac-

teristics of the computer system. Lum

[1979] points out that despite much work

in the area, database design is still an art

that relies heavily on human intuition and

experience. Consequently, itspractice is becoming more difficult a s t h e applications

that the database must support become

more sophisticated.

An important issue in database design is

how to physically organize the data in secondary memory so that the information

requirements of the user community can be

met efficiently [Schkolnick and Yao, 1979].

The record structures of a database determine this physical organization. The task

of record structuring, is to arrange the

database physically so that (1) obtaining

"the next" piece of information in a user

Permission to copy without fee all or part of this material is granted provided that the copies are not made or

distributed for direct commercial advantage, the ACM copyright notice and the title of the publication and its

date appear, and notice is given that copying is by permission of the Association for Computing Machinery. To

copy otherwise, or to republish, requires a fee and/or specific permission.

© 1983 ACM 0010-4892/83/0300-0045 $00.75

Computing Surveys,Vol:.15,No. 1,March 1983

46

•

Salvatore T. March

CONTENTS

person who works for the company is an

instance of that entity). Clearly, an entityinstance cannot be stored in a database

(e.g., people do not survive well in databases); therefore, some useful description

is actually stored. The description of an

INTRODUCTION

entity-instance is a set of values for selected

I. P R O B L E M DEFINITION A N D R E L E V A N T

characteristics (commonly termed data

TERMINOLOGY

2. R E C O R D S E G M E N T A T I O N T E C H N I Q U E S

items) of the entity-instance (e.g., "John

2.1 Mathematical Clustering

Doe" is the value of the data item EMP2.2 InterativeGrouping Refinement

NAME

for an instance of the entity EM2.3 Mathematical Programming

PLOYEE). Two types of data items are

2.4 A Comparative Analysis of Record Segmentation Techniques

distinguished: (1) attribute descriptors (or

3. H I E R A R C H I C A G G R E G A T I O N

attributes), which are characteristics of a

4. S U M M A R Y

AND DIRECTIONS FOR

single entity, and (2) relationship descripFURTHER RESEARCH

tors, which characterize an association beACKNOWLEDGMENTS

tween the entity described and some other

REFERENCES

(describing) entity.

Typically, each entity-instance is described by the same set of data items which,

along with some membership criteria, is

used to define the entity. In addition, each

request has a low probability of requiring entity has some (subset of) data item(s),

physical access to secondary memory and termed the identifier schema, whose

(2) a minimal amount of irrelevant data is value(s) is(are) used to identify unique entransferred when secondary memory is ac- tity.instances. The entity EMPLOYEE, for

cessed. This is accomplished by organizing example, might be defined by the data

the data in secondary memory according to items: EMP-NAME, EMP-NO, AGE, SEX,

the accessing requirements of the user com- DEPARTMENT-OF-EMPLOYEE,

and

munity. Data that are commonly required ASSIGNED-PROJECTS. The membertogether are then physically stored and ac- ship criterion is, of course, people who work

cessed together. Conversely, data that are for the company; individual EMPLOYEEs

not commonly required together are not (entity-instances) are identified by the

stored together. Thus the requirements of value of EMP-NO (EMP-NO is the identithe user community are met with a minimal fier schema for the entity EMPLOYEE).

number of accesses to secondary memory EMP-NAME, EMP-NO, and AGE are atand with a minimal amount of irrelevant tribute descriptors since they apply only to

data transferred.

EMPLOYEE; DEPARTMENT-OF-EMThis paper presents alternative tech- PLOYEE and ASSIGNED-PROJECTS

niques that can be used to quickly deter- are relationship descriptors that associate

mine efficient record structures for large EMPLOYEEs with the D E P A R T M E N T s

shared databases. In order to delineate the in which they work and with the PROJnature of this problem more clearly, the ECTs to which they are assigned. Relationpaper briefly describes both logical and ship descriptors may be thought of as

physical database concepts prior to "logical connectors" from one entity to andiscussing the record structuring problem other.

in detail.

At the physical level, data items are typAt the logical level a database contains ically grouped to define database records

and provides controlled access to descrip- (or records). In general, records may be

tions of instances of entities as required by quite complex, containing repeating groups

its community of users. An entity is a cat- of data items describing one or more entiegory or grouping of things [Chen, 1976; ties. Further, for operating efficiency, these

Kent, 1978]; instances of an entity (or en- data items may be compressed or encoded

tity-instances) are members of that cate- or physically distributed over multiple recgory (e.g., EMPLOYEE is an entity and a ord segments. Records are typically named

A

v

Computing Surveys,Vol. 15,No. I,March 1983

Techniques for Structuring Database Reaords

°

47

ASSIGNED-PROJECTS

EPARTMENT'OF"EMPLOY~/,I

i

RetrievalRequests

Access Paths ]

Reports and

Other Outputs

DatabaseUpdates

EMPLOYEE

File Organization

DEPARTMENT

File Organization

PROJECT

File Organization

PhysicalDatabase

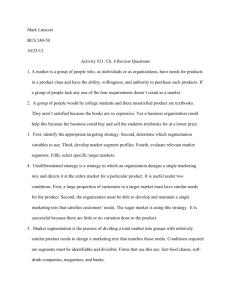

Figure 1. A physical database with three file organizations-

for the entities whose data items they contain. The record EMPLOYEE, for example,

might be defined as all attributes of the

entity E M P L O Y E E (EMP-NAME, EMPNO, AGE, and SEX) plus the relationship descriptor D E P A R T M E N T - O F - E M PLOYEE. The EMPLOYEE record might,

in turn, be divided into two record segments; EMP-SEG-1, containing EMPNAME and EMP-NO, and EMP-SEG-2,

containing AGE, SEX, and D E P A R T MENT-OF-EMPLOYEE.

A record-instance at the physical level

contains the description of an entity-instance at the logical level {i.e., strings of

bits that represent the values of its data

items). The set of all record-instances for a

particular record is called a file. Similarly,

the set of all segment-instances for a particular record segment is termed a subtile.

If a record is unsegmented, it has exactly

one segment (the record itself) and exactly

one subtile (the entire file). Subfiles reside

permanently in secondary memory in

groups called data sets; that is, a data set

is a physical allocation of secondary memory in which some number of subfiles are

maintained. Each data set has a set of accesspaths {algorithms and structures) that

are used to store, retrieve, and update the

segment-instances in the data set. A data

set with its associated access paths is

termed a file organization. A physical

database is a set of interconnected file organizations. Interconnections among file organizations must be maintained when the

descriptions of related entities are stored in

different data sets. As stated earlier, the,

terms record and segment define groupings

of data items. For convenience, however,

these items are also used, respectively, in

place of record-instance and segment-instance, provided that it is obvious from the

context that instances and not their definitions are of concern.

Figure I shows a physical database with

three file organizations, EMPLOYEE, DEP A R T M E N T , and P R O J E C T , where each

file organization is named for the file that

its data set contains. That is, the EMPLOYEE file organization's data set contains the E M P L O Y E E file, and so forth, for

the D E P A R T M E N T and P R O J E C T file

organizations. Interconnections between

the E M P L O Y E E f i l e organization and

the D E P A R T M E N T a n d P R O J E C T file

organizations are required for the relationship descriptors D E P A R T M E N T - O F - E M PLOYEE and A S S I G N E D - P R O J E C T S in

the E M P L O Y E E record. The values stored

for these relationship descriptors are

termed pointers, where a pointer is any

data item that can b e used to locate a

record (or segment ff records are segComputing Smweys, VoL 15, No. 1, March 1983

48

•

Salvatore T. March

mented). Two types of pointers are commonly used: symbolic pointers (typically

entity identifiers) and direct pointers (typically "relative record numbers" [IBM,

1974], i.e., the relative position of a record

in a data set). A symbolic pointer relies on

an access path of the "pointed to" file organization to retrieve records (segments).

Direct pointers, on the other hand, reference records (segments) by location. Hence

direct pointers are more efficient than symbolic pointers; however, they have wellknown maintenance disadvantages. For example, if a record (segment) is physically

displaced, in order to maintain some physical clustering when a new record (segment)

is added to the data set, all its direct

pointers must be updated. On the other

hand, symbolic pointers to that record (segment) axe still valid since its identifier was

not changed. Pointers (symbolic or direct)

are also used to connect records (segments)

residing in the same data set.

The record structures illustrated in Figure 1 are extremely simple (i.e., unsegmented records each defined for a single

entity). Many other alternatives exist. Any

of these records may, for example, be segmented and the associated subfiles stored

in individual data sets. Alternately, a single

record may be defined containing all data

items. Such a record could contain PROJE C T as a repeating group within EMPLOYEE, which, in turn, is a repeating

group within D E P A R T M E N T .

The criterion for selecting among alternative record structures is the minimization

of total system operating cost. This is typically approximated by the sum of storage,

retrieval, and update costs. The data storage cost for a physical database is easily

estimated by multiplying the size of each

subtile by the storage cost per character for

the media on which the subtile is maintained. In order to estimate retrieval and

update costs, however, the retrieval and

update activities of the user community

must be characterized and the access paths

available .to support those activities must

be known. A retrieval activity is characterized by selection (of entity-instances), projection (of data items pertaining to those

instances), and sorting (output ordering)

criteria and by a frequency of execution. An

Computing Surveys, Vol. 15, No. 1, March 1983

update activity is characterized by selection

(of entity-instances) and projection (data

items updated for those instances) criteria

and, again, by frequency of execution. Selection and projection criteria for both retrieval and update activities determine

which subfiles must be accessed. The frequency of execution, of course, determines

how often the activity occurs. The total

cost of retrieval and update also depends

on what access paths are available to support those activities. In addition, the access

paths themselves typically incur storage

and maintenance costs.

The access paths of a file organization

define a set of record selection criteria

which are efficiently supported. Each file

organization has a primary access path

which dictates the physical positioning of

records in secondary memory. Hashing

functions, indexed sequential, and sequential files are examples of types of primary

access paths. As discussed by Severance

and Carlis [1977], the selection of a primary

access path for a file organization is based

on the characteristics of the "dominant"

database activity. In addition to having a

primary access path, file organizations typicaUy have some number of secondary or

auxiliary access paths, such as inverted

files, lists, full indexes, and scatter tables.

These access paths are used to retrieve

subsets of records that have been stored via

the primary access path.

Secondary access paths are included in a

file organization in order to support the

retrieval activities more efficiently. They

do, however, typically increase data maintenance costs and require additional storage space. Subtle trade-offs between retrieval efficiency and storage and maintenance costs must be evaluated in selecting

the most cost effective secondary access

paths.

While access paths focus on the storage

and retrieval of records, the actual units

that they transfer between primary and

secondary memory, termed blocks, typically contain more than one record. The

number of records per block is termed the

blocking factor. The retrieval advantages

of blocking are obvious for sequential access paths where the number of secondarymemory accesses required to retrieve all of

Techniques for Structuring Database Records

a file organization's records is simply the

number of records divided by the blocking

factor. For direct access paths (including

secondary access paths), the analysis depends on both the blocking factor and the

proportion of records that are required, and

it is less straightforward. If records are unblocked (i.e., stored as one record per

block), then each record in a user request

requires a secondary-memory access.

Blocking may reduce the number of secondary-memory accesses since the probability that a single block contains multiple

required records increases (although not

linearly) with the blocking factor [Yao,

1977a]. The cost (measured in computer

time used) per secondary-memory access,

however, also increases (again not necessarily linearly) with the blocking factor

since more data must be transferred per

access [Severance and Carlis, 1977]. When

the proportion of records required by a user

request is large (over 10 percent [Martin,

1977]), the increased cost per secondarymemory access is typically outweighed by

the decreased number of accesses needed

to satisfy that request. Direct access paths

are more commonly used, however, when a

user request requires only a small proportion of records. The number of secondarymemory accesses is then approximately

equal to the number of records required

(i.e., approximately the same as when records are unblocked). In this case, blocking

does not reduce the number of secondarymemory accesses but only increases the

cost per block accessed. Therefore, the retrieval cost for that user is increased. Subtle

trade-offs between the number of accesses

and the cost per access must be evaluated

for each user request and blocking factors

that minimize total operating cost selected

when access paths are designed.

Both record structuring and access path

design are significant database design subproblems, and their solutions are interrelated [Batory, 1979]. However, access path

selection has been treated in detail elsewhere (see Severance and Carlis, 1977;

Martin, 1977; CSxdenas, 1977; Yao, 1977b;

March and Severance, 1978b) and will be

discussed further only as it relates to the

selection of efficient record structures. In

addition, the problems of data compression

*

49

and encoding are separable design issues

(see Maxwell and Severance, 1973; Aronson, 1977; Wiederhold, 1977; Severance,

1983), and in the following analysis it is

assumed that each data item has an encoded compressed length corresponding to

the average amount of space required to

store a single value for that data item.

To determine a set of efficient record

structures, one might proceed naively by

evaluating the operating cost of all possible

record structures (using time-space cost

equations from, e.g., Hoffer, 1975; March,

1978; or Yao, 1977a). However, the number

of possible alternatives is so large (on the

order of n n, where n is the number of data

items [Hammer and Niamir, 1979]) and the

evaluation of each alternative is so complex

that this approach is computationally infeasible.

The remainder of this paper is organized

as follows: in Section 1 the record structuring problem is formally defined and relevant terminology is presented; in Sections

2 and 3 alternative record structuring techniques are discussed; finally, Section 4 presents a summary and discussion of directions for further research.

1. PROBLEM DEFINITION AND RELEVANT

TERMINOLOGY

As discussed above, a database contains

and provides access to descriptions of instances of entities as required by its community of users. These descriptions are organized into data sets that are permanently

maintained in secondary memory. The design of record structures for these data sets

is critical to the performance of the database. This is evidenced by t h e order of

magnitude reduction in total system operating cost reported by Carlis and March

[1980] when flat file record structures were

replaced by more complex hierarchic ones

(see also Gane and Sarson, 1979).

To review our terminology, recall that a

data item is a characteristic or a descriptor

of an entity. For instance, E M P - N A M E is

a data item (a characteristic of the entity

EMPLOYEE), whereas " J O H N DOE" is a

value for that data item. At the physical

level, data items are grouped into records;

the data items of a record define the recComputingSurveys, VoL 1~, No. 1, March 1983

50

•

Salvatore T. March

Supplier 1

Part

SUP-NAME

SUP-NO

DESCRIPTION

ADDRESS

PART-NO

PRICE

AGENT

DISCOUNT

Part

Part

Q

t

o

P;rt

Supplier 2

(

I

I

Figure 2. An aggregation of supplier and part records.

ord's fields. Record-instances contain the

actual data values. The set of all instances

of a record is a file. For convenience,

"record" is used for "record-instance" when

"instance" is implied by the context. For

operating efficiency the items of a record

may be partitioned to define some number

of record segments {thus a segment has

fields defined by the subset of data items

that it contains); the set of segment-instances for a segment is a subtile. Again, for

convenience, "segment" is used for "segment-instance" when "instance" is implied

by the context. In a database management

system, data are physically stored in data

sets. Each data set contains some number

of subfiles.

The record structuring problem is to define records and record segments (i.e., assign data items to records and record segments, thus defining their fields), to assign

the associated subfiles to data sets, and,

when more than one subtile is assigned to

the same data set, to physically organize

the segments within the data set. The objective is to meet the information requirements of the user community most efficiently.

The problem of record structuring is a

difficult one. Three factors Contribute to

this difficulty: (1) modern databases typically have a complex logical data structure,

which yields an enormous number of alterComputing Surveys, Vol. 15, No. 1, March 1983

native record structures; (2) the complex

nature of the user activity on that logical

data structure requires the analysis of subtle and intricate trade-offs in efficiency for

users of the database; and (3) the selection

of record structures and access paths is

highly interrelated--each can have a major

impact on the efficiency of the other. Each

of these difficulties is discussed in more

detail below.

The logical structure of a database describes the semantic interdependencies that

characterize a composite picture of how the

user community perceives the data. Within the context of commercially available

database management systems (DBMSs),

three data models have been widely followed to represent the logical structure of

data: hierarchic, network, and relational

(see Martin, 1977; Date, 1977; Tsichritzis

and Lochovsky, 1982). Since IMS [McGee,

1977] is the most widely used hierarchic

DBMS, its terminology is used in the following discussion. There are several network D B M S s {e.g., IDMS, IDS II, D M S

1100 [C~rdenas, 1977]); however, they are

all based on the work of the Data Base

Task Group (DBTG) of the Conference on

Data Systems Languages (CODASYL)

[CODASYL, 1971, 1978] and are collec.

tively referred to as CODASYL DMBSs;

therefore the CODASYL terminology is

used. Relational D B M S s [Codd, 1970, 1982]

/

Techniques for Structuring Database Records

Supplier I

SUP-NO

AGENT

51

ADDRESS

NAME

Supplier 2

Supplier 3

Sup;tier N

SUP-SEG 1

Figure 3.

SUP-SEG 2

A segmentation of the supplier record.

are best exemplified by INGRES [Held,

Stonebreaker, and Wong, 1975] and System

R [Blasgen et al., 1981; Chamberlin et al.,

1981]; when terminology is unique to one of

these systems, it is so noted.

Each data model provides rules for

grouping data items into hierarchic segments in IMS, owner-member sets in CODASYL DBMSs, and normalized relations

in relational DBMSs. Database records

could be defined and their files stored in

data sets according to the groups specified

by a data model; however, performance

may be improved considerably by combining (or aggregating) some and/or partitioning (or segmenting) other of these groups.

Suppose, for example, that there are two

entities, SUPPLIER and PART, to be described in a database. Each SUPPLIER is

described by the attributes SUP-NAME,

SUP-NO, ADDRESS, and AGENT. Each

PART is described by the attributes DESCRIPTION, PART-NO, PRICE, and

DISCOUNT and by the relationship descriptor SUPPLIER-OF-PART (assume

that SUPPLIERs can supply many

PARTs, but each PART is supplied by

exactly one SUPPLIER). Database records

could be defined for both SUPPLIER and

PART and their files stored in SUPPLIER

and PART data sets, respectively. For

database activity directed through SUPPLIERs to the PARTs they supply, however, the aggregation (i.e., hierarchic arrangement) illustrated in Figure 2 is a more

efficient physical arrangement (see Gane

and Sarson, 1979; Schkolnick, 1977; Schkolnick and Yao, 1979). In this arrangement

each part record is stored with its SUPPLIER record, and the relationship descriptor SUPPLIER-OF-PART is represented by the physical location of PART

records.

On the other hand, database activities

typically require (project) only a subset of

the stored data items (see Hoffer and Severance, 1975; Hammer and Niamir, 1979).

For a user activity that requires only the

data items SUP-NO and AGENT, segmenting (i.e., partitioning into segments)

the SUPPLIER record, as shown in Figure

3, is an efficient physical arrangement. In

Figure 3 two segments are defined: SUPSEG1, containing SUP-NO and AGENT,

and SUP-SEG2, containing NAME and

ADDRESS. In order to satisfy the above

mentioned user activity, only the subtile

defined by SUP-SEG1 needs to be accessed. This subtile is clearly smaller than

the SUPPLIER file; therefore fewer accesses and fewer data transfers are required

to satisfy this user activity from the SUPSEG1 subtile than from the SUPPLIER

file. Hence this segmentation reduces the

cost of satisfying this user activity. If the

data items required by a user activity are

distributed over multiple segments, then

evaluating the cost ~ more complex. If

subfiles are independently accessed and

may be processed "in parallel," then cost

may still be reduced. However, this is not

common in practice; usually access to multiple subfiles proceeds serially and thus at

greater cost than access to a single subtile.

While record segmentation may significantly reduce retrieval costs, it can also

Computing Surveys, Vo]. !,5,No. 1, March 1983

52

•

Salvatore T. March

increase database maintenance costs. In

particular, inserting or deleting an instance

of a record typically requires access to each

of the record's subffles. Clearly, the more

subffles a record has, the more expensive

insertion and deletion operations become.

Record structuring may be viewed as the

aggregating and segmenting of logically defined data, within the context of a database

management system. IMS [McGee, 1977]

supports aggregation by permitting multiple hierarchic segments to be stored in the

same data set (termed "data set group" in

IMS). In this case, children segments are

physically stored immediately following

their parent segments. CODASYL DMBSs

support aggregation by allowing repeating

groups in the definition of a record type

and by permitting the storage of a MEMB E R record type in the same data set

(termed "area" in CODASYL) as its

OWNER, "VIA SET" and " N E A R

OWNER". In CODASYL, a SET defines a

relationship by connecting O W N E R and

M E M B E R record types (e.g., if SUPP L I E R and P A R T are record types, then

S U P P L I E R - O F - P A R T could be implemented as a S E T and S U P P L I E R as the

O W N E R and P A R T as the MEMBER).

Storing a M E M B E R record type " N E A R

O W N E R " directs the D B M S to place all

M E M B E R records physically near {presumably on the same block as) their respective O W N E R records. In order to specify " N E A R O W N E R " in a CODASYL

DBMS, "VIA SET" must also be specified,

indicating that access to the M E M B E R

records occurs primarily from the O W N E R

record via the relationship defined by the

SET. Relational D B M S s do not support

aggregation per se (i.e., "normalizing" relations requires, among other things, the removal of repeating groups [Date, 1977]).

However, because substantial efficiencies

can be achieved through the use of aggregation, Guttman and Stonebreaker [1982]

suggest its possible inclusion in INGRES

under the name "ragged relations" (since

the relations are not normalized). In System R [Chamberlin et al., 1981] aggregation

can be approximated by initially loading

several tables into the same data set

(termed "segment" in System R) in hierarchic order and supplying "tentative recComputing Surveys, Vol. 15, No. 1, March 1983

ord identifiers" during I N S E R T operations

to maintain the physical clustering [Blasgen et al., 1981].

All three types of D B M S s support record

segmentation. In IMS, segmentation is accomplished simply by removing data items

from one segment to form new segments,

which become that segment's children.

Similarly, in a CODASYL DBMS, segmentation is accomplished by removing data

items from one record type to form new

record types and defining SETs with these

new record types as M E M B E R S and

O W N E D BY the original record type. The

new (MEMBER) record types are stored

VIA S E T but not N E A R OWNER. In addition, a proposed CODASYL physical

level specification [CODASYL, 1978] suggests that record segmentation be supported at the physical level by allowing the

definition of multiple record segments,

termed storage records, for a single record

type. In a relational DBMS, record segmentation is accomplished by breaking up a

"base relation" [Date, 1977] into multiple

relations, each of which contains the identifier (key) of the original; these then become "base relations."

To be efficient, record structures must be

oriented toward some composite of users.

One design approach is simply to design the

record structures for the efficient processing of the single "most important" user

activity. Record structures that are efficient

for one user, however, may be extremely

inefficient for others. Since databases typically must serve a community of users

whose activities possess incompatible or

even conflicting data access characteristics,

such an approach is untenable.

An alternative design approach is to provide each user with a personalized database

consisting of only required data items, organized for efficient access. In a database

environment, however, many users often

require access to the same data items;

hence personalized databases would require

considerable storage and maintenance

overhead owing to data redundancy, and

are directly contrary to the fundamental

database concept of data sharing [Date,

1977]. The problem is to determine a set of

record structures that minimizes total system operating costs for all database users.

Techniques for Structuring Database Records

The task of structuring database records

is further complicated because the retrieval

and maintenance costs of a database depend on both its record structures and

its access paths. Consider, for example, a

database containing 1,000,000 instances of

a single record, where the record is defined

by a group of data items totaling 500 characters in length. Consider also a single user

request that selects 4 percent of the file

(i.e., 40,000 records) and projects 30 percent

(by length) of the data items (i.e., 150 characters) from each of these records. At the

physical level, records may be either unsegmented or segmented, and the access path

used to satisfy that user request may be

either sequential or direct.

Suppose that the physical database was

designed with unsegmented records. Satisfying the user request via a sequential access path would require a scan of the file

that, as discussed earlier, requires N / b

block-accesses, where N is the number of

records (here N = 1,000,000) and b is

the blocking factor of the file. Assuming a

realistic block length of 6,000 characters,

the blocking factor for this file is 12 (i.e., 12

500-character records may be stored in a

6,000-character block). Satisfying the user

request via a sequential access path would

require 83,334 block-accesses. Assuming

that the 40,000 required records are randomly distributed within the file, then

satisfying this user request via a direct

access path (such as an inverted list) would

require M * (1 - (l/M) K) block-accesses

[Yao, 1977a], where M = N / b and K is the

number of records selected (here K =

40,000). Again, assuming 6,000-character

blocks, yielding a blocking factor of 12, a

direct access path would require 31,368

block-accesses. If, instead, the physical

database was designed with segmented

records, one segment containing only those

data items required by the user request

under consideration, then only that subtile

must be processed. The expressions for the

number of accesses required for sequential

and direct access paths remain the same;

however, again assuming 6,000-character

blocks, the blocking factor increases to 40

(i.e., 40 150-character segments may be

stored in a 6,000-character block). A sequential access path would require 25,000

•

53

block-accesses to satisfy the:User request; a

direct access path would require 19,953.

Clearly, segmented records processed via

direct access path minimizes the number of

block-accesses required to satisfy the user

request; unfortunately, it may not be the

most efficient database design. Four additional factors must be considered: (1) the

types of direct access paths used to support

such user requests typically incur additional storage and maintenance costs; (2) in

a computer system where there is little

contention for storage devices, the cost per

access for a sequential access path is typically less than that for a direct access path,

and hence the number of block-accesses

may not be an adequate measure for comparison; (3) record segmentation typically

increases database maintenance costs by

increasing the number of subfiles that must

be accessed to insert and delete record instances; and (4) both record segmentation

and secondary access paths add to the complexity of the database design and hence

may increase the cost of software development and maintenance.

For the database and user request under

consideration, the simplest physical database, and the least costly to maintain, has

unsegmented records processed by a sequential access path. If a secondary access

path (say an inverted list) is added to that

physical database, then the number of

block-accesses is reduced by 62 percent

(from 83,334 to 31,768), a considerable savings even in a low-contention system. The

cost of maintaining the secondary access

path must, of course, be considered. On the

other hand, segmenting the records without

adding a secondary access path reduces the

number of block-accesses by 70 percent

(from 83,334 to 25,000) over unsegmented

records processed by a sequential access

path and by 37 percent (from 31,768 to

25,000) over unsegmented records processed by a direct access path. The addition

of a secondary access path further reduces

the number of block-accesses by 20 percent

(from 25,000 to 19,953). The significance of

this 20 percent reduction depends on the

relative cost of sequential access as compared to direct access and the additional

storage and maintenance costs incurred by

the secondary access path.

54

•

Salvatore T. March

As illustrated by the above discussion,

structuring database records is a difficult

task, and the selection of inappropriate record structures may have considerable impact on the overall performance of the database. In the next two sections, alternative

techniques for structuring database records

are presented and compared. Techniques

for the segmentation of flat files are discussed in Section 2; a technique for the

aggregation of more generalized logical data

structures is discussed in Section 3.

2. RECORD SEGMENTATION TECHNIQUES

In this section, techniques for structuring

database records in a flat file environment

are discussed. A flat file is one that is logically defined by a single group of singlevalued data items (i.e., a single normalized

relation).

Table 1 shows the logical representation

of a flat file personnel database that will be

used in the following discussion. As is

shown in that table, each employee is

described by a set D, of 30 data items di,

i - 1. . . . . 30; for instance, dl ffi Employee

Name, de ffi Pass Number. Each data item

has an associated length, li, corresponding

to the (average) amount of space required

to store a value for that data item, such as

30 characters for Employee Name. Processing requirements consist of a set U, of 18

user retrieval requests u~, r ffi 1. . . . ,18, each

of which is characterized by selection, projection, and ordering criteria (S, P, and O,

respectively), by the proportion of records

selectedpr, and by a frequency of access v~.

For example, retrieval request R5 has a

selection criterion based upon Pay Rate, a

projection criterion requiring Security

Code, Assigned Projects, Project Hours,

Overtime Hours, and Shift. Its ordering

criterion is by Department Code within

Division Code. The request selects an average proportion of 0.9 (90 percent) of the

employees and is required daily (its frequency is 20 times per month).

Retrieval requests R1 through R8 each

select a large proportion of the stored data

records (requests R1 through R3, in fact,

require all data records). These retrievals

are likely to be most efficiently performed

by using a sequential access path which

Computing Surveys, Vol. 15, No. 1, March 1983

retrieves the entire file (or required

subfiles). Retrieval requests R9 through

R18, on the other hand, each select a relatively small proportion of the stored records

(R15 through R16 retrieve individual data

records). These are likely to be best performed via some auxiliary access paths,

such as inverted lists or secondary indexes

[Severance and Carlis, 1977].

The record segmentation problem is to

determine an assignment of data items to

segments (recall that each segment defines

a subtile) that will optimize performance

for all users of the database. The problem

may be formally stated as

rain ~ vr Y, Xr~ RC(r, s)+ ~ SC(s),

AER

Ur~ U

s~.A

s~A

where R is the set of all possible segmentations (i.e., assignments of data items to

segments), A is one particular segmentation, s is a segment of the segmentation A,

and X~, is a 0-1 variable defined as follows:

Xrs ffi

r 1,

L0,

if segment s is required

by user request m;

otherwise.

Finally RC(r, s) is the cost of retrieval for

the subtile defined by segment s for the

retrieval request Ur, and SC(s) is the cost of

storage for segment s, including overhead

space required for its access paths (if any).

Hoffer and Severance [1975] point out

that, theoretically, this problem is trivial to

solve. Since retrieval costs are minimized

by accessing only relevant data and storage

and maintenance costs are minimized by

eliminating redundancy, each data item

should be maintained independently. Such

an arrangement would permit completely

selective data retrieval and would eliminate

data redundancy. Assuming that buffer

costs and file-opening costs are negligible,

this solution does, in fact, minimize retrieval cost for sequential fries that are independently processed in a paged-memory

environment [Day, 1965; Kennedy, 1973].

To show that this is true, it is sufficient to

look at the access cost for a single user, u~.

If each data item is assigned to an independent segment (i.e., di is assigned to segment i), then the access cost for each subtile

G~

b~

o~

T~

i ~ ~.

~J

c~

!!°

o~

a~

rJ~

fl

~ 1 ~ t C~r~

m~

56

•

Salvatore T. March

A paged-memory environment assumes

that any access to any subfile requires the

N

same amount of time and expense. ConCost(r, s) -- a ~ L,

sider, however, a system in which all

subtiles

of the database reside on a dediwhere a is the cost per page access {including the average seek and latency, as well as cated disk and there is little contention

page-transfer costs), B is the block size, and among users of the database. Sequential

hence (N/B)l~ is the number of page ac- access to a single subffle then requires only

cesses required to scan a subtile, where each one (minimal) disk-arm-seek operation for

segment is of length ls. Here, l~ is equal to each cylinder on which the subtile is stored

li, the length of the corresponding data item [IBM, 1974]. A n y access to a different

subtile, however, requires an average diskdi. The total access cost for that user is

seek operation. Thus access time (and

hence cost) increases significantly when

Xria N

died

~ li,

more than one subfUe must be sequentially

processed for a single user request. Actual

where each segment is now indexed by the time and cost estimates for such an envidata item that it contains.

ronment must take into consideration the

If this is not the optimal solution, then physical location of subfiles and the disthere must exist some subset of data items tance that the disk arm must move.

d i n , . . . , dn such that the access cost for user

Three major techniques have been sugur is reduced when those data items are gested for the determination of efficient

assigned to a single segment, say segment record segmentations: clustering, iterative

j. If u~ requires any data item in segment j , grouping refinement, and bicriterion prothen the subffle that it defines must be gramming. Each technique varies in the

retrieved. Since the number of accesses re- assumptions it makes about access paths

quired to retrieve a subffle via a sequential and the secondary memory environment it

access path is a linear function of the seg- considers. These variations yield signifment length, the retrieval cost for the icantly different problem formulations.

subtile defined by segment j is given by a Each, however, is restricted to a single flat

(N/B) (lm+ . . . + ln). Mathematically this file representation, and none permit the

yields

replication of data.

The techniques are first discussed indiN

a ~ (lm+ ..- + l~)max(x. . . . . . . Xrn)

viduallymclustering in Section 2.1, iterative

grouping refinement in Section 2.2, and biN

criterion programming in Section 2.3. Each

< a - ~ (xrml,,, + . . . + Xrdn).

then is used to solve the personnel database

problem shown in Table 1. These results

Clearly, this inequality is false since are analyzed and discussed in Section 2.4.

l~ . . . . . l~ > 0 and x ~ . . . . . Xr~ ~ (0, 1}.

Therefore, according to theory, the pre2.1 Mathematical Clustering

vious solution must have been optimal.

This appealing theoretical approach is Hoffer [1975] and Hoffer and Severance

untenable in practice for several reasons. [1975] developed, for the record-structuring

First, the complex nature of database activ- problem, a heuristic procedure that uses a

ity requires both random and sequential mathematical clustering algorithm and a

processing. For random processing (i.e., branch-and-bound optimization algorithm.

processing via a direct access path), the Their procedure is restricted to sequential

number of accesses required to satisfy a access paths but includes a detailed costing

user request is not a linear function of the model of secondary memory (in a movingsegment length. Hence, the preceding result head disk environment). The solution to

is not valid. In addition, the characteris- this problem is an assignment of data items

tics of secondary storage devices and their to segments such that the sum of retrieval,

data access mechanisms may not be well storage, and maintenance costs is minimodeled by a paged-memory environment. mized.

required by that user is given by

Computing Surveys, Vol. 15, No. 1, March 1983

Techniques for Structuring Database Records

Mathematically, clustering is simply the

grouping together of things which are in

some sense similar. The general idea behind

clustering in record segmentation is to organize the data items physically so that

those data items that are often required

together are stored in the same segment; in

a processing sense, such data items are

"similar." Conversely, data items that are

not often required together are stored in

different segments; they are "dissimilar." In

this way user retrieval requirements can be

met with a minimal amount of extraneous

data being transferred between main and

secondary memory. Unfortunately, user retrieval requirements are typically diverse,

and a method of evaluating trade-offs

among user requirements must be determined.

The clustering approach therefore first

establishes a measure of similarity between

each pair of data items. This measure reflects the performance benefits of storing

those data items in a common segment. It

then forms initial segments by using a

mathematical clustering algorithm to group

data items with high similarity measures.

Finally, a branch-and-bound algorithm selects an efficient merging of these initial

segments to define a record segmentation.

The factors included in the similarity

measure are critical to the value of this

approach. Hoffer and Severance [1975b]

developed a similarity measure for each

pair of data items (termed a pairwise similarity measure) based upon three characteristics:

(1) the (encoded} data item lengths (li for

data item dz);

(2) the relative weight associated with each

retrieval request (v~ for request Ur);

(3) the probability that two given data

items will both be required in a retrieval

request (p~j~for data items di and d/in

request u~).

The first characteristic, li, is obtained

directly from the problem definition (see

Table 1) and reflects the amount of data

that must be accessed in order to sequentially retrieve all values of that data item.

For any pair of data items, the bigger the

length of the data items, the more often

they must be retrieved together in order for

•

57

the retrieval advantage (for users requiring

both data items) to outweigh the retrieval

disadvantage (for users requiring one or the

other but not both data items). Hence a

pairwise data item similarity measure

should decrease with the lengths of the data

items.

The second characteristic, v~, is also obtained directly from the problem definition

and reflects the relative importance of each

user request measured by its frequency of

access. Again, for a pair of data items, the

more frequently they are retrieved together, the greater is the performance advantage of storing them in the same segment. A pairwise data item similarity measure should increase with the frequency

with which the data items are retrieved

together.

The final characteristic pi]~ is the probability of coaccess of data items di and d] in

retrieval request u~ and is defined as follows. Let p~,. be the probability that data

item d~ is required by user request u~. The

value of pir is determined by the user request's (re's) use of data item di. Three

possibilities exist:

(1) Data item d~ is used by m for selection.

Since only sequential access is permitted, the value of d~ must be examined

in each record to determine if the record is required by u~; therefore the

value ofp~ is equal to 1.

(2) Data item di is used by u~ for projection

only. Data item d/is required only from

those records which have been selected;

therefore the value of p~ is equal to p~,

the proportion of records selected

by Ur.

(3) Data item di is not used by request Ur.

Data item d~ is irrelevant for request u~

and therefore the value ofpi~ is 0.

The value ofp~j~ is then given by

p~'j~=

1,

O,

p~,

if pir "ffipy~;

if p~ -- 0 or py~ffi0;

otherwise.

For any pair of data items d/ and dj,

Hoffer's similarity measure is given by

S~(~) ffi

Y,a.r vrS~~ (~)

Ya,,r vr[ S~~ (~) ] '

Computing Sth~eys, Vol. 15, No. 1, March 1983

58

*

Salvatore T. March

where [x] denotes the smallest integer

greater than or equal to x, S~~ (a) denotes

the pairwise similarity of data items d~ and

dj in the user request u~, and a is a parameter that controls the sensitivity of the measure. The numerator is the sum of the

similarity measures for a pair of data items

in a retrieval request, summed over all retrieval requests and weighted by the frequency of retrieval. The denominator simply normalizes the similarity measure to

the interval [0, 1] since S~p (a) is itself in

the interval [0, 1], as discussed below.

S~~)(a) is given by

if

0,

S~f ~(a) ffi

( li Jr b)

(c~))~

li+ b

pijr -~

O;

otherwise,

'

where C~p represents the proportion of information useful to user request u~, which

is contained in a subffle consisting only of

data items di and dy. Assuming that pi~ ->

p]~, then it is given by

C¢r)

'J ---~

~0,

if p~/~ffiO;

li + p q r l j

L l~ + b

'

otherwise.

Since 0 _<p/y~ _< 1 for all di, dj, and ur, and

li -> 0, b -> 0 for all di and dy, then 0 _< C~,fl

1. Hence for any a _> 0, 0 _< (C~r~)~ _< 1

and 0 _< ~,~r~(a) <_ 1. For values of C~r~ < 1

(i.e., when piy~ < 1) larger values of a would

cause (C}/~))~ to decrease rapidly and hence

reduce the pairwise similarity measure

S}p (a) for that user request rapidly as piyr

decreased. Thus large values of a would

yield low similarity measures (except, of

course, when p#r ffi 1). Small values of a

(particularly 0 _< a -< 1) would yield high

similarity measures that would not decrease rapidly as p#r decreased.

Having as a basis these definitions, the

pairwise similarity measure Sij (a) does, in

fact, exhibit the characteristics suggested

earlier: for any pair of data items di and dy

(provided that p~yr> 0 for some user request

Ur), then Siy(~) decreases as the lengths l/

and b increase and Sq(a) increases as the

frequency of access or the probability of

coaccess of the data items increases.

Computing Surveys, Vol. 15, No. 1,March 1983

Using this measure to form a data-item

data-item similarity matrix, Hoffer and

Severance applied the Bond Energy Algorithm (see McCormick, Schweitzer, and

White, 1972) and used the results to form

initial clusters (groups) of data items. The

Bond Energy Algorithm manipulates the

rows and columns of the similarity matrix,

clustering large similarity values into blocks

along the main, upper left to lower right

diagonal. These blocks correspond to

groups of similarly accessed data items that

should be physically stored together. However, because block boundaries are generally fuzzy, initial clusters must be subjectively established from the permuted

matrix.

An optimal grouping of initial clusters is

determined by a branch-and-bound algorithm, which (implicitly) evaluates the expected cost of all possible combinations of

initial clusters and selects the one with

minimum cost. The branch-and-bound algorithm is more efficient than complete

enumeration since many (nonoptimal)

solutions are eliminated from consideration

without actually evaluating their costs. (See

Garfinkel and Nemhauser, 1972, for a general discussion of branch-and-bound algorithms.) The algorithm first divides the set

of all possible solutions into subsets and

then establishes upper and lower bounds

on cost for each subset. These bounds are

used to "fathom" (i.e., eliminate from consideration) subsets that do not contain an

optimal solution. A subset is fathomed if its

lower bound is higher than the upper bound

of some other subset (or if its lower bound

is higher than the cost of some known solution). Each remaining subset is further

divided into subsubsets, and the process is

repeated until only a small number of solutions remain (i.e., are not fathomed).

These remaining solutions are evaluated

and the minimum cost solution selected.

Hoffer and Severance [1975] report that

although the execution time of the branchand-bound algorithm is significantly affected by the number of initial clusters selected, the performance of the final solution

is not--provided, of course, that some

"reasonable" criteria are used to establish

the initial clusters. They also suggest the

potential for developing an algorithmic prox

Techniques for Structuring Database Revords

cedure to identify a "reasonable" set of

initial clusters, thereby reducing the subjective nature of this approach. They do not,

however, present such a procedure.

2.2 Iterative Grouping Refinement

Hammer and Niamir [1979] developed an

heuristic procedure for the record structuring problem which iteratively forms and

refines record segments based on a stepwise

minimization ("hill climbing") approach.

Their procedure considers the number of

page accesses for multiple record segments

that have been stored nonredundantly. Access may be either sequential or random

via any combination of predetined secondary indexes (i.e., direct access paths), each

of which is associated with exactly one data

item. In order to evaluate random retrieval,

they define the selectively, S~, of a data

item (S~ is used to represent the selectivity

of data item di) as the expected proportion

of records that contains a given value for

that data item. The selectivity of a data

item is estimated by the inverse of the

number of distinct values the data item

may assume. If an index exists for a data

item, then the selectivity of the index is

equal to the selectivity of the data item.

The performance of a segmentation is

evaluated by the number of pages that must

be accessed in order to satisfy all user requests. For each subtile required by a user,

the number of pages that must be accessed

is given by

where C~ is the number of combinations of

k objects t a k e n j at a time, N is the number

of records in the file organization, b is the

blocking factor (i.e., segments per page) for

the subffle, and s is the number of segments

required from the subtile.

This expression is derived by Yao [1977a]

and briefly discussed below. Assuming that

the s segments required by a user request

are uniformly distributed over the pages on

which the subtile is stored, then the retrieval problem may be viewed as a series

of s Bernoulli trials without replacement

(see Feller, 1970). Then C~-b/CN is the

probability that a page does not contain

•

59

any of the s required records. One minus

this quantity is, of course, the probability

that a page contains at least one required

record. Multiplying by N/b, the total number of pages, gives the expected number of

pages which must be accessed. For a more

detailed discussion of this derivation, the

interested reader is directed to Yao's paper

[Yao, 1977a].

The remaining factor, the number of segments required from each subtile, is calculated as follows. Assuming that the selectivities of data items are independent, that

selection is done first with all indexed data

items, and that subfdes are linked and directly accessed, then the number of record

segments required from a subtile, f, by a

user u~ is given by

s f f i N * II S ,

where Lf is the set of selection data items

required by user u~ that are either indexed

or appear in subfdes accessed before subtile

f is accessed.

The record structuring proceeds as follows. An initial segmentation is formed,

with each data item stored in a separate

segment. This is termed the trivial segmentation. The procedure iteratively defines

new segments by selecting the pair of existing segments that, when combined, yields

greatest performance improvement. When

no pairwise combination of existing segments yields improved performance, single

data item variations of the current segmentation are evaluated. If any variation is

found that improves the performance, then

the procedure is repeated, using that variation as the initial segmentation; otherwise,

the procedure terminates. While it is possible for the procedure to cycle a large

number of times, experimental evidence

suggests that for reasonable design problems it is not expected to cycle more than

once before terminating with an efficient, if

not optimal, record structuring. Hammer

and Niamir report determining record

structures yielding access cost savings of up

to 90 percent over the cost of using a singlesegment record structure. They do not,

however, consider the cost of data storage,

buffer space, or update operations, nor do

they consider the complexities of a movingComputing Surveys~Vol. 15, No. lj March 1983

60

•

Salvatore T. March

head-disk environment in which such databases are likely to be maintained.

the remainder of the data items; the length

of each is given by

2.3 Mathematical Programming

Eisner and Severance [1976] proposed a

mathematical programming approach to

the record structuring problem, which was

extended by March and Severance [1977]

and March [1978]. This approach views

record structuring as a constrained optimization problem in which the objective is to

minimize the sum of retrieval and storage

costs.

Based on a behavorial model of database

usage that predicts subsets of "high"-use

and "low"-use data items, the procedure is

restricted to considering at most two segments: "primary" and "secondary." Data

items with high usefulness, relative to their

storage and transfer costs, are isolated and

stored in a primary segment; the remaining

data items are stored in a secondary segment. All activity must first access the primary subtile, and may access the secondary

subtile (at additional cost) only if the request cannot be satisfied by the primary

subtile. The possibility of retrieving data

items by only accessing the secondary

subffle is not considered.

Eisner and Severance [1976] analyze this

design problem by assuming that activity

to the primary subfile is either all random

(with both subffles unblocked) or all sequential (with a blocked primary subfile

and an unblocked secondary subtile). In

addition, they assume that pointers stored

in the primary segments link the corresponding secondary segments. They formulate the problem as a bicriterion mathematical program and develop a parametric

solution procedure as discussed below.

A record segmentation, A, is defined by

a subset Da C D of data items that are

assigned to the primary segment. The

length of each primary segment is given by

WA---- F, l i + p ,

-diEDA

where p is the length of a system pointer.

(Note that if both primary and secondary

segments are sequentially processed, then

the pointer is unnecessary and hence p is

set to 0.) The secondary segments contain

Computing Surveys, Vol. 15, No. 1, March 1983

diED-DA

and WA + WA-- W, a constant.

Associated with any segmentation A is a

subset UA C U of users whose retrieval

requirements are not satisfied by the data

items assigned to the primary segment. The

cumulative frequency of retrieval by satisfied users during time period T is

VA =

E

uj~U-Ua

Vj.

The frequency of retrieval by dissatisfied

users is

(zA=~vj,

u]eUA

and VA + VA ---- V, a constant.

When a user requires a data item di that

is not part of the primary segment, then the

secondary subtile must be accessed as weft.

A frequently referenced data item, say di,

could be moved to the primary segment;

this inclusion, however, would create an

additional transportation burden (proportional to li, the length of data item di) for

all users of the database, and in particular

those users not needing di.

Consider a database with N records and

assume that primary and secondary subfiles

are both unblocked. Let al be the cost of a

single access to the primary subtile, tl be

the unit cost of data transfer from the primary subtile, and s~ be the unit cost of

primary subtile storage over the time interval T. Similarly define a~, t2, and s2 for the

secondary subffie. Given a specific segmentation A and assuming that the primary

and secondary subffles are both sequentially processed, then

Costa ~- N((al + tl WA) Y

+ (a2 "}" t2 W A ) ~rA

Jr S l W a 4" S2WA).

(1)

This equation is explained as follows:

(al + tl Wa) is the cost to access and transfer one primary segment. Multiplying by N

gives the cost to retrieve the entire primary

subtile. Since V is the total frequency of

retrieval for all users, N(a~ + t~WA)V

T e c h n i q u e s for S t r u c t u r i n g D a t a b a s e R e c o r d s

•

61

d~

U1

do

Uo

Figure 4. Networkrepresentationused by Eisner and Severance[1976l.

is the total cost of retrieval from the

primary subtile. Similarly, the term

N ( a 2 + t 2 W A ) V A is the total cost of

retrieval from the secondary subtile.

N(s~, WA) is the cost of storage for the

primary subtile, and N(s2, ~VA) is the cost

of storage for the secondary subtile.

Defining R to be the set of all possible

record segmentations, the design problem

becomes

min N((a~ + t~ WA) V + (a2 + t2WA) VA

A~R

+ sl WA + s2 WA).

(2)

This expression can be reduced to one in

WA and IYA to yield

min K3 - K4(K1 - W A ) ( K 2 --" fZA),

A~R

where

K1 = W + a2/t2,

K 2 = (tl V + sl - s2)/t2,

K3 = N(a~ V + s2 + t 2 K I K 2 ) ,

K 4 = Ntz.

(3)

sions (i.e., (K1 - W~) and (K2 - ~ ) ) . T he

fact that its solution also solves the objective (3) can be seen by noting that

(1) a constant may be added to or subtracted from an objective function

without changing its optimal solution

(hence K3 can be subtracted from (3));

(2) an objective function may be multiplied

or divided by an positive constant without changing its optimal solution

(hence the result of subtracting K3

from (3) can be divided by K4);

(3) maximizing an objective is equivalent

to minimizing its negative (hence the

change from a minimization in (3) to a

maximization in (4)).

Geoffrion [1967] has shown that if K1 _

WA >--O, K 2 >-- VA >--0 and R is a continuous

convex set, then a solution A* that solves

the objective

max c(K1 - WA) + (1 - c)(K2 - VA)

A~_R

(5)

over all c ~ [0, 1] also solves the objective

(4).

Eisner and Severance [1976] also examined objective functions of this form specifFor reasonable values of ai, t~, and si, K1,

ically for the record segmentation problem,

K2, K3, and K4 are nonnegative constants

and the objective function (3) is minimized where the set R is discrete. T h e y analyzed

the problem using the network formulation

by that segmentation which solves

illustrated in Figure 4. Data items and users

m a x ( K 1 - W A ) ( K 2 - VA).

(4) are represented by nodes in the network.

AER

Activities are described by edges that conT h e expression (4) is termed a bicriterion nect each user Ur to all data items required

m a t h e m a t i c a l p r o g r a m because its objec- by that user. User u~, for example, is shown

tive function is the product of two expres- to require data items d~, d3, and ds. Two

Compt~tiug Surveys, VoL 15, No. 1, March 1983

62

•

S a l v a t o r e T. M a r c h

additional nodes (do and uo) and a set of

directed edges (from do to di V di ~ D and

from uj to Uo V uy E U) complete the network representation

The capacity of an edge, (do, di) is given

by cli, where li is the length of data item di

and c is a parameter that varies the relative

cost of retrieval as compared to that of

storage. The capacity of an edge (uj, uo) is

given by (1 - c)vr, where Vr is the frequency

of retrieval by user Ur. The remaining network edges have infinite capacity. Intuitively, the capacities of the arcs represent

the relative importance of data item length

as compared to retrieval frequency for the

overall performance of a segmentation.

By using this network formulation and

standard network theory (see Ford and

Fulkerson, 1962), the maximum flow from

do to u0 may be quickly calculated, and is

given by

min CWA 4- (1 -- c) VA.

(6)

A~R

Noting that eq. (5) may be written as

max cK1 + (1 - c ) K 2

A~R

-- (cWA + (1 -- C)17A)

or

cK1 + (1 - c)K2 - rain cWA + (1 -- C) ~A,

AER

it is clear that for a given value of c, a

solution to (6) also solves (5). Using this

approach, Eisner and Severance developed

a procedure that produces a family of

"parametric" solutions for the parameter c.

On the basis of conditions established by

Eisner and Severance [1976] the procedure

terminates either with a parametric solution, which is known to be optimal, or with

the best parametric solution and a lower

bound on the optimal solution. If the best

parametric solution is not "sufficiently

close" to the lower bound, a branch-andbound procedure is used to find the optimal

solution.

March and Severance [1977] extended

this work in the area of blocked sequential

retrieval. They generalized the analysis to

a situation in which primary and secondary

subffles are arbitrarily blocked, subject to

the constraint of a limited buffer area; they

then presented an algorithm that deterComputing Surveys, Vol. 15, No. 1, March 1983

mines an optimal combination of record

structures and block size, given a fixed main

memory buffer allocation.

The more complex design problem is analyzed as follows. First, the Eisner-Sever.

ance procedure is used to produce the family of parametric solutions. Ranges on the

primary block size for which each parametric solution is known to be optimal are then

established. Within each such range an optimal combination of primary block size

and segmentation is determined and the

cost of that combination calculated. The

least cost combination becomes a global

upper bound for the problem (all other

combinations may, of course, be discarded).

For each range in which an optimal solution

is not known, a lower bound is determined.

A range may be fathomed (i.e., eliminated

from further consideration) if its lower

bound is greater than the global upper

bound. For each range which remains, a

branch-and-bound procedure that either

fathoms the range or determines an optimal

segmentation-primary block size combination for that range is used. This segmentation-primary block size combination then

becomes the global upper bound (otherwise

the range would have been fathomed).

When all ranges have been analyzed, the

global upper bound is, in fact, optimal.

The procedure does guarantee to produce

an optimal assignment of data items to

primary and secondary segments, and total

cost savings of 60 percent over the cost of

using single-segment record structures have

been demonstrated. While this procedure

considers the cost of data storage, it does

not explicitly consider buffer space or update costs. The procedure has, however,

been interfaced with a model of secondarymemory management (see March, Severance, and Wilens, 1981) that implicitly considers these effects.

2.4 A Comparative Analysis of Record

Segmentation Techniques

Three approaches to record segmentation

have been discussed: mathematical clustering [Hoffer and Severance, 1975], iterative

grouping refinement [Hammer and Niamir,

1979], and bicriterion programming [Eisner, and Severance, 1976; March and Severance, 1977]. Table 2 characterizes each of

Techniques for Structuring Database Records

Table 2.

•

63

A Characterization of Techniques for Record Segmentation

Scope of the problem

Technique

Access paths

Secondary

memory

environment

Class of record

structures

Evaluation

criteria

Composite of

retrieval

Storage and

maintenance

costs

Number of page

accesses

Mathematical

clustering

Branch and

bound

Composite of

retrieval

Storage costs

Bicriterion

mathematical

programming

Clustering

Sequential

Moving

head

disk

~_1 Segment

No redundancy

Iterative

grouping

refinement

Bicriterion

programming

Scan

Predefined

indexes

Any predefined

access paths

Paged

~1 Segment

No redundancy

Paged

1 or 2 segments

No redundancy

these techniques by the scope of the problem addressed (i.e., the types of data access

paths, the secondary-memory environment

modeled, the class of alternative record

structures allowed, and the criteria by

which alternatives are evaluated) and the

type of algorithm used.

Assuming a flat file logical data structure,

stored without redundancy, each approach

establishes an assignment of data items to

subffles, which improves the overall system

performance by either eliminating or reducing unnecessary data transfer. The mathematical clustering approach produces a permuted data-item × data-item similarity

matrix; this matrix must be subjectively

interpreted to form initial groups of data

items, which are then combined using a

branch-and-bound procedure. The iterative

grouping-refinement procedure iteratively

groups pairs of previously established data

item groups, and evaluates single data item

variations in the initial solution for improved performance. The mathematical

programming approach formulates the

problem as a bicriterion mathematical program which is solved by using a network

representation and classical operations research techniques. The solution isolates a

set of data items with high usefulness relative to their storage and transfer cost.

These data items are stored in a primary

segment; the remaining data items are

stored in a secondary segment.

The first two approaches are heuristic in

nature, and while both yield considerable

cost savings, neither can guarantee opti-

Type of

algorithm

Hill climbing

mality. The first, mathematical clustering,

is more limited than the second, iterative

grouping refinement, in that it only consid.

ers sequential retrieval activity, while the

latter considers both sequential and random retrieval. The third approach, bicriterion programming, guarantees optimality;

however, it is restricted to a maximum of

two segments processed as primary and

secondary. It is capable of modeling both

sequential and random retrieval; but for

random retrieval, it assumes that both primary and secondary segments are unblocked.

Each of these techniques was used to

solve the personnel database problem

shown in Table 1. Extensions and/or modifications were made to the various techniques to permit solution of this problem

and a meaningful comparison of results. In

order to facilitate this comparison, the

characterization of the computer system

and data set shown in Table 3 were assumed for all experiments. In addition,

since the clustering technique is limited to

sequential-only data access, this restriction

was also initially placed on the other two

techniques. Following a presentation of the

results from each technique, a comparative

analysis is given.

When applied to the personnel database

problem shown in Table 1, the clustering

approach yields the permuted similarity

matrix of Table 4. The values in Table 4

represent the "normalized" similarities between each pair of data items. These values

range from a minimum of 1, when the data

ComputingSurveys,YoL 15, No. 1, March 1983

64

°

S a l v a t o r e T. M a r c h

Table 3.

A Characterization of the Computer System and Data Set

System parameters

Disk device

Minumumdisk arm access time

Average disk arm access time

Rotation time

Size of a track (maximumblock size)

Tracks per cylinder

Cost allocation

Cost of bufferspace

Cost of disk space

Cost of retrieval time

Data set parameters

Number of data records

items are "rarely" required together, to a

maximum of 100, when the data items are

always required together. This particular

example illustrates the difficulty of establishing initial clusters from the permuted

similarity matrix. It is clear, for example,

that data items 17, 18, 29, and 30 should be

initially assigned to the same segment since

their similarities are all 100. Data item 28,

on the other hand, has pairwise similarities

of 51, 51, 51, and 53 with data items 17, 18,

29, and 30, respectively. Should it also be

included? If not, where should it be initially

assigned? The initial clusters shown in

boxes in Table 4 were subjectively established by the author simply by intuitively

looking for "high" similarity values. The

results from (implicitly) evaluating, via a

branch-and-bound algorithm, all possible

combinations of these initial clusters, in

both a dedicated disk environment and a

paged-memory environment, are summarized in Table 5.