From Information Retrieval (IR) to Argument Retrieval (AR) for Legal Cases: Report on a Baseline Study

Kevin D. Ashley a,1 and Vern R. Walker b

a University of Pittsburgh School of Law, Intelligent Systems Program

b Maurice A. Deane School of Law at Hofstra University, Research Laboratory for Law, Logic and Technology

Abstract. Users of commercial legal information retrieval (IR) systems often want

argument retrieval (AR): retrieving not merely sentences with highlighted terms,

but arguments and argument-related information. Using a corpus of argument-annotated legal cases, we conducted a baseline study of current legal IR systems in

responding to standard queries. We identify ways in which they cannot meet the

need for AR and illustrate how additional argument-relevant information could

address some of those inadequacies. We conclude by indicating our approach to

developing an AR system to retrieve arguments from legal decisions.

Keywords. Argument retrieval, legal information retrieval, default-logic

framework, presuppositional annotation, semantics, pragmatics

Introduction

Legal advocates and decision-makers often seek cases involving specified fact

patterns in order to find ideas and evidence for legal arguments, and to measure the past

success of those arguments. Today, litigators can submit a natural language description

of a desired fact pattern to commercial information retrieval (IR) tools, which then

retrieve and report the “highly relevant” cases using Bayesian statistical inference

based on term frequency [11]. What users of those systems often want, however, is not

merely IR, but AR, argument retrieval: not merely sentences with highlighted terms,

but arguments and argument-related information. For example, users want to know

what legal or factual issues the court decided, what evidence it considered relevant,

what outcomes it reached, and what reasons it gave.

Advances in text annotation, statistical text analysis, and machine learning,

coupled with argument modeling in AI and Law, can support extracting argument-related information from legal texts. This could enable systems to retrieve arguments as

well as sentences, to employ retrieved information in proposing new arguments, and to

explain any proposed evidence or conclusions.

In the next section, we describe a corpus of argument-annotated legal cases, which

we will use to develop and test tools to automate AR. Then, we describe a baseline

study of current legal IR systems in responding to standard queries, in order to evaluate

their adequacy for AR. We discuss ways in which the current systems are inadequate in

meeting the need for AR, and illustrate how additional argument-relevant information

1 Corresponding Author.

could address some of those inadequacies. We conclude by indicating our approach to

developing an AR system to retrieve arguments from legal decisions.

A Corpus of Argument-Annotated Legal Cases

Data for our long-term investigation into AR from legal cases comes from a corpus

of argument-annotated legal cases called the Vaccine/Injury Project Corpus (V/IP

Corpus).2 This set of legal cases has been annotated in terms of: the set of legal rules

governing the decision in the case, the set of legal issues derived from those rules, the

outcomes (findings of fact) on those issues, and the decision maker’s explanations of

those outcomes in terms of inferences from the evidence. The cases in the V/IP Corpus

are legal decisions under the National Vaccine Injury Compensation Program (NVICP),

a hybrid administrative-judicial system in the United States for providing compensation

to persons who have sustained vaccine-related injuries [13]. The National Childhood

Vaccine Injury Act of 1986 (NCVIA), administrative regulations, and the case law of

the federal courts provide the legal rules that govern compensation decisions.

One critical legal issue in these cases involves causation: a petitioner filing a claim

should receive compensation if, but only if, the vaccine received did in fact cause the

injury. When the federal Department of Health and Human Services contests a claim,

the Court of Federal Claims assigns a “special master” to conduct an adversarial

proceeding in which advocates for the claimant and for the government present

evidence on causation and other issues. The special master decides which evidence is

relevant to which issues of fact, evaluates the plausibility of the evidence, organizes

that evidence and draws reasonable inferences, and makes findings of fact.

In 2005, a federal Court of Appeals set forth three substantive conditions (sub-issues to be proved) for deciding the issue of causation in the most complex vaccine

cases. According to its decision in Althen v. Secretary of Health and Human Services

(reported at 418 F.3d 1274 (Fed.Cir. 2005)), the petitioner must establish, by a

preponderance of the evidence: (1) that a “medical theory causally connects” the type

of vaccine with the type of injury; (2) that there was a “logical sequence of cause and

effect” between the particular vaccination and the particular injury; and (3) that a

“proximate temporal relationship” existed between the vaccination and the injury (see

Althen at 1278). The first condition of Althen (requiring a “medical theory” of

causation) raises the issue of general causation (i.e., whether the type of vaccine at issue

can cause the type of injury alleged), while the second and third conditions together

raise the issue of specific causation (i.e., whether the specific vaccination in the

particular case caused the particular injury). To prevail, a petitioner must prove all three

conditions. The V/IP Corpus comprises every decision filed during a 2-year period in

which the special master applied the 3-prong test of causation-in-fact enunciated by

Althen (a total of 35 decision texts, typically 15 to 40 pages per decision).

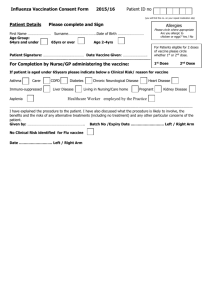

Each case has been annotated in terms of two types of argument features. First, a

default-logic framework (DLF) annotation represents (i) the applicable statutory and

regulatory requirements as a tree of authoritative rule conditions (i.e., a “rule tree”) and

(ii) the chains of reasoning in the legal decision that connect evidentiary assertions to

the special master’s findings of fact on those rule conditions [13]. Figure 1 displays an

2 This Corpus is the product of the Research Laboratory for Law, Logic and Technology (LLT Lab) at the Maurice A. Deane School of Law at Hofstra University in New York.

excerpt of the DLF annotation for the first Althen condition in the Cusati case.3 The

rule condition (as applied to the particular vaccination and alleged injuries in the case)

is shown in bold-font at the top: “A ‘medical theory causally connect[s]’ the

vaccination on November 5, 1996 and an intractable seizure disorder and death,” citing

Althen. Indented below this rule condition is the Special Master’s conclusion on this

issue, in non-bold font: “‘MMR vaccine causes fever’ and ‘fever causes seizures’.” A

green circle (with color not visible in black and white) precedes the rule condition and

the finding, indicating that the Special Master has determined them to be satisfied/true.

Indented below the finding are the reasons (factors) that the Special Master gave as

support for the finding. The metadata fields in brackets after each assertion provide the

following information: “C:” = the page in the decision from which the assertion was

extracted; “S:” = the source of the assertion (i.e., the person or document asserting it; if

the special master is persuaded that the assertion is plausible/true, then the source is the

special master); “B:” = the basis for the assertion (e.g., the expert who stated it for the

record); “NLS:” = the natural language sentence or sentences from which the assertion

was extracted, which occur on the page(s) cited.
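The annotation structure just described (a rule condition, the finding indented beneath it, and supporting reasons beneath the finding, each carrying C/S/B/NLS metadata) can be pictured as a small tree. The following is a minimal sketch only, not the LLT Lab's actual annotation format: the class name `Assertion`, its field names, and the `walk` helper are assumptions introduced for illustration. The two quoted texts are taken from the Cusati excerpt described above.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional, Tuple


@dataclass
class Assertion:
    """One node in a DLF-style tree (hypothetical representation)."""
    text: str
    satisfied: Optional[bool] = None   # True corresponds to the green circle
    page: Optional[int] = None         # "C:" page in the decision
    source: Optional[str] = None       # "S:" person or document asserting it
    basis: Optional[str] = None        # "B:" basis for the assertion
    sentence: Optional[str] = None     # "NLS:" original sentence(s)
    support: List["Assertion"] = field(default_factory=list)

    def walk(self, depth: int = 0) -> Iterator[Tuple[int, "Assertion"]]:
        """Yield (depth, node) pairs, parent before children."""
        yield depth, self
        for child in self.support:
            yield from child.walk(depth + 1)


# Rule condition and finding quoted from the Cusati excerpt.
rule = Assertion(
    text="A 'medical theory causally connect[s]' the vaccination on "
         "November 5, 1996 and an intractable seizure disorder and death",
    satisfied=True,
)
finding = Assertion(
    text="'MMR vaccine causes fever' and 'fever causes seizures'",
    satisfied=True,
    source="Special Master",  # the factfinder adopted the assertion
)
rule.support.append(finding)

# Print the tree with indentation mirroring the DLF display.
for depth, node in rule.walk():
    print("  " * depth + node.text)
```

Supporting reasons would be appended to `finding.support` in the same way, so that the chain from evidence to finding to rule condition stays explicit.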

Figure 1. Partial DLF Model: Cusati Case Finding / Reasoning re 1st Althen Condition

Second, annotation in terms of presuppositional information identifies entities

(e.g., types of vaccines or injuries), events (e.g., date of vaccination or onset of

symptoms) and relations among them used in vaccine decisions to state testimony

about causation, assessments of probative value, and findings of fact. Table 1 lists

representative entities, events and relations commonly referred to in the V/IP corpus.

The semantic expressions include noun phrases that refer to real-world entities and

events, and causal relationships among types or specific instances of events.

Some presuppositional entities and relations in Table 1 apply to the evidentiary

assertion sentences in the Cusati excerpt in Figure 1. For example, the Covered-vaccine

is MMR vaccine, a Generic-injury is fever, and a Medical-theory-assertion takes as

relational arguments both vaccination with MMR vaccine and the occurrence of fever.

3 Cusati v. Secretary of Health and Human Services, United States Court of Federal Claims, Office of the Special Masters, No. 99-0492V, 2005.

Table 1. Presuppositional Entities, Events and Relations in V/IP Cases (Noun Phrases: 1-6; Causal Assertions: C1-C2)

| Entity, Event or Relation | Meaning (Referent) |
| --- | --- |
| 1. Covered-vaccine | a type of vaccine covered by the VICP |
| 2. Specific-date | a specific month, day, year |
| 3. Specific-vaccination | a vaccination with a Covered-vaccine on a Specific-date |
| 4. Generic-injury | a type of injury, adverse condition or disease |
| 5. Injury-onset | a symptom, sign or test result associated with the onset of a Generic-injury |
| 6. Onset-timing | time interval between Specific-vaccination and the earliest Injury-onset |
| C1. Medical-theory-assertion | assertion that a medical theory causally connects vaccination with a Covered-vaccine with the occurrence of a Generic-injury |
| C2. Specific-causation-assertion | assertion that a Specific-vaccination caused an instance of a Generic-injury |
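Several Table 1 items are defined in terms of others; Onset-timing, for instance, is the interval between a Specific-vaccination and the earliest Injury-onset. The sketch below illustrates that dependency under stated assumptions: the class and function names are hypothetical, and the onset dates are invented for the example (only the vaccination date of November 5, 1996 appears in the Cusati excerpt).

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Sequence


@dataclass(frozen=True)
class SpecificVaccination:
    covered_vaccine: str   # Table 1, item 1
    specific_date: date    # Table 1, item 2


@dataclass(frozen=True)
class InjuryOnset:
    description: str       # symptom, sign or test result
    observed_on: date


def onset_timing(vaccination: SpecificVaccination,
                 onsets: Sequence[InjuryOnset]) -> timedelta:
    """Interval between the vaccination and the earliest Injury-onset."""
    earliest = min(onset.observed_on for onset in onsets)
    return earliest - vaccination.specific_date


vaccination = SpecificVaccination("MMR vaccine", date(1996, 11, 5))
# Hypothetical onset dates, not taken from the case record.
onsets = [
    InjuryOnset("seizure", date(1996, 11, 15)),
    InjuryOnset("fever", date(1996, 11, 14)),
]
print(onset_timing(vaccination, onsets).days)
```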

The Design and Results of a Baseline Study of Current Legal IR Systems

We conducted a baseline study on the current state of IR for legal cases. As a

sample scenario, consider the Cusati case discussed above, in which petitioner’s

advocate sets out to prove causation based on the three Althen conditions. The advocate

would like to find out if there are any other cases involving a similar pattern of facts,

and how the evidence and arguments in such cases affected the results. We fashioned

sets of hypothetical facts based on reported cases to determine the current state of IR in

searching for cases involving similar fact patterns. We focused on ten cases in the V/IP

Corpus involving the first Althen condition: five decided for the petitioner (Cusati,

Casey, Werderitsh, Stewart, Roper) and five for the government (Walton, Thomas,

Meyers, Sawyer, Wolfe). (Case citations on file with authors.) In the latter five cases,

the first Althen condition was also decided for the government. We refer to each case as

the “source case” for constructing a standard search query based on its facts.

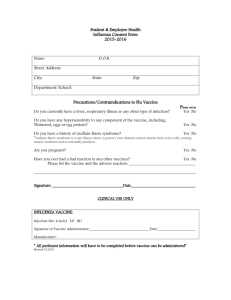

Using two commercial search systems for legal cases in the United States,

Westlaw Next® (WN) and Lexis Advance® (LA), we conducted searches based on

each of the ten cases with two types of queries, for a total of forty queries (10 cases * 2

queries * 2 IR systems). The queries were designed to return cases relevant to creating

legal arguments involving the facts in the case. We assume that most attorneys, in

seeking evidence or arguments related to proving or disproving Althen Condition 1

(using Medical-theory-assertions), would search for cases involving the same type of

vaccine (Covered-vaccine) and the same type of injury (Generic-injury) as in the fact

scenario, and for a similar period of time between the vaccination and the onset of the

injury (Onset-timing). If no specific time was specified in the source case, then the

query would be for any time after vaccination. For each of the ten cases, shown in

Table 2, we instantiated a query by substituting values from that case (i.e., the “source”

case) for Covered-vaccine, Generic-injury, and Onset-timing into two templates:

Q1. <Covered-vaccine> can cause <Generic-injury>

Q2. <Covered-vaccine> can cause <Generic-injury> <Onset-timing>

For instance, Q1 for the Casey case (i.e., Casey Q1) was: “Varicella vaccine can cause

encephalomyeloneuritis”. Casey Q2 was: “Varicella vaccine can cause

encephalomyeloneuritis within four weeks”. All queries were submitted as natural

language queries, specifying “Cases” as the content type desired and selecting “all state

and federal” for the jurisdiction option.
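The template instantiation described above is simple string substitution. As a minimal sketch (the function name `build_queries` and the placeholder spellings are assumptions, not part of the study's tooling), the Casey queries can be reproduced as follows:

```python
from typing import Tuple

# Templates Q1 and Q2, with placeholders named after the Table 1 entities.
Q1_TEMPLATE = "{covered_vaccine} can cause {generic_injury}"
Q2_TEMPLATE = "{covered_vaccine} can cause {generic_injury} {onset_timing}"


def build_queries(covered_vaccine: str, generic_injury: str,
                  onset_timing: str) -> Tuple[str, str]:
    """Instantiate Q1 and Q2 with values drawn from a source case."""
    q1 = Q1_TEMPLATE.format(covered_vaccine=covered_vaccine,
                            generic_injury=generic_injury)
    q2 = Q2_TEMPLATE.format(covered_vaccine=covered_vaccine,
                            generic_injury=generic_injury,
                            onset_timing=onset_timing)
    return q1, q2


casey_q1, casey_q2 = build_queries(
    "Varicella vaccine", "encephalomyeloneuritis", "within four weeks")
print(casey_q1)
print(casey_q2)

# Study design: 10 source cases x 2 query templates x 2 IR systems.
total_queries = 10 * 2 * 2
print(total_queries)
```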

LA and WN each generates an annotated list of results for a query in order of

relevance (the “Results List”). Moreover, for each case in the Results List, they

generate the title of the case, court, jurisdiction, date, and citation as well as a report,

the “Case Report”. Figure 2 illustrates excerpts of the Results Lists and Case Reports

that LA and WN generated for the query, Casey Q2. In the LA Results List, the first-ranked case happens to be the source case, that is, the Casey case. The first-ranked case

in the WN Results List is the Doe/17 case.

Table 2: Results of Baseline Study

[Table 2 body not recoverable from the extracted text. As referenced in the discussion below: Col. 3 reports the number of cases retrieved per query; Col. 4, the share of top-10 results that were federal vaccine compensation cases; Col. 5, the position of the source case in the Results List; and Cols. 6 and 7, whether Case Reports contained all elements sought by the query.]

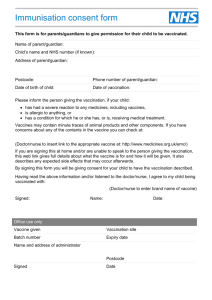

As Figure 2 illustrates, the LA Case Report for the Casey source case has two main

parts: a) an excerpt from the decision text with search terms highlighted, and b) an

Overview, a short summary of what the court decided. The WN Case Report for the

Doe/17 case also has two main parts: a) a short summary referring to a West key

indexing term (HEALTH) and b) (if the user selects the “Most Detail” option) several

brief excerpts from the case text with the search terms highlighted. These appear to

have been drawn from the opinion text and from the West headnotes, which are

prepared by human editors in accordance with a comprehensive West key legal index.

Rank-Ordered Lists of Cases Retrieved: For the twenty query searches on WN, the

system retrieved an average of 66 cases per query (cases that the system deemed

relevant according to its probabilistic criteria based on term frequencies), ranging from

a low of 41 to a high of 107 (Table 2, Col. 3). LA, by contrast, tended to report millions

of documents per query, although for some unknown reason it reported only eleven

(11) cases for the Q2 query based on the Werderitsh case.

To be useful, a search should return the most relevant cases at the top of the rank-ordered results list. For comparison, we gathered data on the top-10 cases in each list.

For all 40 queries, the percentage of returned cases in the top-10 cases that were federal

vaccine compensation cases was always at least 90% (Table 2, Col. 4). Occasionally,

some unrelated state law cases were returned, for example, having to do with trade

secrets, employment discrimination, or product liability. One of the highly relevant

cases to be returned should be the source case itself, whose facts formed the basis for

the query. For LA searches on the 20 queries, the source case appeared in the top-10

cases of the Results List 75% of the time (Table 2, Col. 5), and in the top-2 cases 60%

of the time. For two queries, LA returned the source case but it was not in the top-10

cases. WN returned the source case in the top-10 50% of the time (Table 2, Col. 5), and

in the top-2 15% of the time. For the remaining ten queries, WN returned the source

case, but it was not in the top-10 cases.
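The top-10 and top-2 percentages above are hit rates over a set of queries: the fraction of queries for which the source case appears at or above a given rank. A minimal sketch of that computation follows. The function name and the example ranks are hypothetical and do not reproduce the Table 2 data.

```python
from typing import Optional, Sequence


def top_k_rate(source_ranks: Sequence[Optional[int]], k: int) -> float:
    """Fraction of queries whose source case is ranked within the top k.

    Each entry is the 1-based rank of the source case in a Results List,
    or None if the source case was not returned at all.
    """
    if not source_ranks:
        raise ValueError("at least one query is required")
    hits = sum(1 for rank in source_ranks if rank is not None and rank <= k)
    return hits / len(source_ranks)


# Hypothetical ranks for four queries (not the study's data).
example_ranks = [1, 7, 14, None]
print(top_k_rate(example_ranks, 10))
print(top_k_rate(example_ranks, 2))
```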


Figure 2: Sample Lexis Advance and Westlaw Next Case Reports for Casey Q2

Results-List Case Reports: For each of the 40 queries’ Results Lists, we examined

the Case Reports for each of the top-10 cases, and for the source case (whether it was in

the top-10 or not). We determined if the Case Report for the returned case included all

elements sought by the query (Table 2, Col. 6 & 7). Q1 sought three elements: a

particular vaccine, a particular alleged injury, and an indication that the vaccine caused

the injury. Q2 sought those same elements plus a fourth: an indication of the time-to-onset between the vaccination and the manifestation of the injury. In so doing, we

examined only the Case Reports. Along with the Results List ranking, the Case Report

is a manifestation of what information the IR system “knows” (in a computational

sense) about the cases it returns and about how (well) a case relates to the user’s query.

In determining if a Case Report contained “all the elements sought by the query”

we adopted the following policies based on our assessment of a typical attorney’s

argumentation objectives. The Report text should include: (1) the vaccine by name

(e.g., measles-mumps-rubella), by well-known initials (e.g., MMR), or by alternative

name (e.g., commercial brand name); (2) the injury by name (e.g., myocarditis) or

alternative names; (3) with regard to causation, an assertion or direct implication, for

Q1, that the vaccine can[not] cause the injury, and for Q2, that the vaccine can[not]

cause the injury in the specific time period; and (4) for Q2, some specific time period.
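Once a reviewer has coded which elements a Case Report contains, the completeness policies above reduce to a conjunction of checks. The sketch below assumes such manual coding; the class and field names are hypothetical. It also simplifies policy (3) by treating the causal assertion and the time period as separate flags rather than requiring that the assertion itself be tied to the period.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseReportCoding:
    """Manual coding of one Case Report (hypothetical field names)."""
    names_vaccine: bool       # policy (1): name, initials or alternative name
    names_injury: bool        # policy (2): name or alternative names
    asserts_causation: bool   # policy (3): vaccine can or cannot cause injury
    states_time_period: bool  # policy (4): some specific time period


def is_q1_complete(coding: CaseReportCoding) -> bool:
    """All three elements sought by Q1 are present."""
    return (coding.names_vaccine and coding.names_injury
            and coding.asserts_causation)


def is_q2_complete(coding: CaseReportCoding) -> bool:
    """All Q1 elements plus a specific time period are present."""
    return is_q1_complete(coding) and coding.states_time_period


report = CaseReportCoding(True, True, True, False)
print(is_q1_complete(report), is_q2_complete(report))
```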

For the 40 queries, we found that LA always returned at least one case in the top-10 cases of the Results List whose Case Report had all three elements of Q1 (i.e., was

“Q1-complete”) or all four elements of Q2 (i.e., was “Q2-complete”) (Table 2, Col. 6).

WN failed to find any Q1-complete cases in the top-10 cases for three queries or any

Q2-complete cases in the top-10 cases for four queries. For two queries based on the

Roper case, WN reported Roper at rank Q1(21) and Q2(11) even though it was the

only case referring to gastroparesis in the WN Results List for either query. (LA

reported Roper at the first rank in its Results Lists for both queries.) In Werderitsh Q1,

LA did not return the source case in the top-10, or even in the top-250 of its Results

List, but Werderitsh was cited in LA’s Case Reports for seven of the cases in its top-10.

We also examined whether, when WN or LA returned the source case (whether or

not in the top-10), the corresponding Case Report contained all of the query’s elements.

On four occasions, WN’s Case Report for the source case was not Q2-complete even

though the query was based on its facts (Table 2, Col. 7). This occurred twice for LA.

Case Report Decision Abstracts: For all 40 queries, we noted if the top-ten Case

Reports made clear which side won: 1) on the ultimate claim for vaccine compensation

(i.e., petitioner or the government); and 2) on the causation sub-issue under Althen

Condition 1. In general, this information about winners appeared much less frequently

in the WN Case Reports than in the LA Case Reports. WN reported ultimate-claim

outcomes about 29% of the time and causation sub-issue outcomes about 47% of the

time. LA reported ultimate-claim outcomes about 88% of the time and causation sub-issue outcomes about 93% of the time. As we discuss below, ultimate-claim and sub-issue outcomes can provide very useful information for AR.

From IR Systems to AR Systems

Related Work: Some published research has addressed automatic semantic processing

of case decision texts for legal IR. The SPIRE program combined a HYPO-style case-based reasoning program, the relevance feedback module of an IR system, and a corpus

of legal case texts to retrieve cases and highlight passages relevant to bankruptcy law

factors [3]. Using narrative case summaries, the SMILE+IBP program classified case

texts in terms of factors [1] and analyzed automatically classified squibs of new cases

and predicted their outcomes. Other researchers have had some success in

automatically: assigning rhetorical roles to case sentences based on a corpus of 200

manually annotated Indian decisions [9]; categorizing legal cases by abstract Westlaw

categories (e.g., bankruptcy, finance and banking) [10] or general topics (e.g.,

exceptional services pension, retirement) [4]; extracting treatment history (e.g.,

“affirmed”, “reversed in part”, or “overruled”) [6]; classifying sentences as

argumentative based on manually classified sentences from the Araucaria corpus,

including court reports, and generating argument tree structures [8]; determining the

role of a sentence in the legal case (e.g., as describing the applicable law or the facts)

[5]; extracting from criminal cases the offenses raised and legal principles applied in

order to generate summaries [12]; and extracting the holdings of legal cases [7].

As the baseline study revealed, the current legal IR systems are effective at

returning relevant cases based on natural language queries and probabilistic criteria. On

the other hand, the study identified ways in which the IR systems’ performance was not

ideal for AR – namely, in retrieval precision (e.g., source cases not ranked more highly

in Results Lists), and in the construction of Case Reports and composition of Decision

Abstracts. In contrast with prior work, we hypothesize that a system that identifies and

utilizes the kinds of semantic, presuppositional and DLF information relevant to legal

argument (i.e., semantic and pragmatic legal information) would perform better at AR

tasks. This section provides examples of such tasks and information.

Identifying and Using Logical Structure within the Pragmatic Legal Context: The AR

task of identifying and using aspects of the logical structure of the argumentation and

reasoning in a case should improve the precision of IR. Frequently, relatively few

sentences in a lengthy opinion capture the significant reasoning. Some of them state the

legal rules, others recount evidence in the case, and still others explain the application

of legal rules to that evidence. In some decisions, those sentences are accurately

grouped under appropriate headings; in many decisions the sentences are intermingled.

The argument goals of an AR system user might make some of those sentences

much more relevant than others. For example, given the fact-oriented purpose behind

queries like Q1 or Q2, it is highly unlikely that a sentence that merely re-states the legal

rule on causation in vaccine cases will be helpful. Yet in the search based on Casey, for

instance, all of the Case Reports for those cases that WN ranked higher than the source

case contained re-statements or summaries of the Althen rule itself. While such a

sentence contains instances of the search terms, it is not particularly helpful because it

contains no information about specific vaccinations, injuries, or durations. By contrast,

a system that could identify the presuppositional entities/events in Table 1 (e.g.,

Covered-vaccine, Generic-injury, and Onset-timing) may learn from the presence or

absence of such presuppositional information that a sentence merely re-states the law,

and does not apply the law to facts. Such semantic and pragmatic legal information

could focus on key sentences, rank cases more effectively, and improve precision

generally. For example, some knowledge of the pragmatic legal context might improve

precision in those instances in our study where the source case is the only Q1- or Q2-complete case returned, but the source case is still not ranked at the top of the Results

List for the query (i.e., Roper, Casey, Stewart, Meyers, and Walton, Table 2, Col. 5).
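The idea that the absence of presuppositional information signals a mere re-statement of the law can be illustrated with a deliberately naive filter. This is a sketch under strong assumptions, not a proposed implementation: the tiny lexicons are hand-picked from terms mentioned in this paper, matching is by plain substring, and the function names are hypothetical. A real system would need learned or curated recognizers for these entity types.

```python
import re
from typing import Dict, List

# Tiny illustrative lexicons (assumptions, far from complete).
VACCINES = ("mmr", "varicella", "hepatitis b", "dpt")
INJURIES = ("fever", "seizure", "myocarditis",
            "encephalomyeloneuritis", "gastroparesis")
DURATION = re.compile(
    r"\b(\d+|one|two|three|four)\s+(hour|day|week)s?\b", re.IGNORECASE)


def presuppositional_mentions(sentence: str) -> Dict[str, List[str]]:
    """Collect naive matches for three Table 1 entity types."""
    lowered = sentence.lower()
    return {
        "Covered-vaccine": [v for v in VACCINES if v in lowered],
        "Generic-injury": [i for i in INJURIES if i in lowered],
        "Onset-timing": [m.group(0) for m in DURATION.finditer(sentence)],
    }


def looks_like_rule_restatement(sentence: str) -> bool:
    """True when no specific vaccine, injury or duration is mentioned."""
    return not any(presuppositional_mentions(sentence).values())


rule_sentence = ("The petitioner must establish a medical theory causally "
                 "connecting the vaccination and the injury.")
fact_sentence = ("Hepatitis B vaccine can cause intractable seizure "
                 "disorder after about one day.")
print(looks_like_rule_restatement(rule_sentence))
print(looks_like_rule_restatement(fact_sentence))
```

A ranking component could then down-weight sentences flagged as re-statements when the query is fact-oriented, as Q1 and Q2 are.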

An adequate AR system would also use this information to determine whether each

issue was decided in the case, and the conclusion reached (the finding of fact) on each

decided issue (i.e., which party won or lost). The system could generate a more

informative summary of the case decision – something that, as the baseline study

indicates, current IR systems cannot adequately perform in an automated way.

Attributing (Embedded) Assertions: An IR search might retrieve a sentence expressing

a causal assertion, but that sentence might recount the allegations in the case, or an

entry in a medical record, or the testimony of an expert witness, or a finding of the

factfinder. The “attribution problem” is determining the participant to whom the AR

system should attribute the assertion. For example, in the Walton Q2 search, LA ranked

the source case first, apparently on the basis of a sentence recounting the causal

testimony of a witness. However, the Special Master found against the petitioner in

Walton both on the ultimate claim for compensation and on the first Althen condition,

discounting the quoted witness testimony because the petitioner had “relied upon a

temporal relationship between vaccination and onset of symptoms” not established.

An adequate AR system needs the capability of analyzing a natural language

sentence like “Dr. Smith testified that the vaccine can cause the injury” into (A) the

embedded assertion (“the vaccine can cause the injury”) and (B) the person to whom

the assertion should be attributed (e.g., Dr. Smith). This will probably require the

construction of a discourse model of participants for the process of producing and

assessing the evidence. Moreover, an AR system would place a priority on determining,

for any significant assertion, whether to attribute it to the factfinder – even if a witness

also made the assertion in the first instance.
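For the simplest sentence shapes, the split into embedded assertion and attributed source can be approximated with a pattern over reporting verbs. The sketch below is only a surface-level illustration of the target output: the verb list, the regular expression, and the names `Attributed` and `attribute` are assumptions, and the approach would not handle nested or implicit attribution, which is why a discourse model is likely needed.

```python
import re
from typing import NamedTuple, Optional

# A few reporting verbs (illustrative, not exhaustive).
ATTRIBUTION = re.compile(
    r"^(?P<source>.+?)\s+"
    r"(?:testified|stated|opined|asserted|concluded|found)\s+that\s+"
    r"(?P<assertion>.+?)\.?$",
    re.IGNORECASE,
)


class Attributed(NamedTuple):
    source: Optional[str]   # None when no explicit attribution is found
    assertion: str


def attribute(sentence: str) -> Attributed:
    """Split '<source> <verb> that <assertion>' into its two parts."""
    cleaned = sentence.strip()
    match = ATTRIBUTION.match(cleaned)
    if match is None:
        return Attributed(None, cleaned.rstrip("."))
    return Attributed(match.group("source"), match.group("assertion"))


result = attribute("Dr. Smith testified that the vaccine can cause the injury.")
print(result.source)
print(result.assertion)
```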

Utilizing Presuppositional Information: Once an AR system has extracted the assertion

content from a sentence, it would need to identify the references to presuppositional

entities, events and relations, such as those listed in Table 1. A system with such

expanded semantic-pragmatic information should be able to analyze arguments about

vaccinations and adverse events.

For example, vaccine names are clearly important in vaccine compensation claims.

We can refer to vaccines using generic names (“varicella”), popular names

(“Chickenpox”), or commercial brand names (“VARIVAX”). Some vaccines are

contained in composite vaccines with other names or initials. For instance, Quadrigen

vaccine is a combination of the DPT and polio vaccines. For purposes of the Thomas

queries (“DPT vaccine can cause acute encephalopathy and death”), cases involving

assertions about Quadrigen-caused injuries might be relevant. An AR system that had

sufficient semantic information about vaccines should have better success at resolving

the co-reference problem for presuppositional entities or events.
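
The kind of semantic information about vaccine names discussed here might be represented, in a simplified form, as a lookup from surface names to canonical component vaccines. The sketch below is illustrative only: the lexicon holds just the examples mentioned in the text, and the names VACCINE_ALIASES, COMPOSITE_VACCINES, vaccine_components and mentions_overlap are hypothetical.

```python
from typing import FrozenSet

# Assumption: a hand-built lexicon limited to the examples in the text.
# Keys are lower-cased surface names; values are canonical vaccine names.
VACCINE_ALIASES = {
    "varicella": "varicella",   # generic name
    "chickenpox": "varicella",  # popular name
    "varivax": "varicella",     # commercial brand name
    "dpt": "DPT",
    "polio": "polio",
}

# Composite vaccines map to the set of their component vaccines.
COMPOSITE_VACCINES = {
    "quadrigen": frozenset({"DPT", "polio"}),
}


def vaccine_components(name: str) -> FrozenSet[str]:
    """Return the canonical component vaccines for a surface name."""
    key = name.strip().lower()
    if key in COMPOSITE_VACCINES:
        return COMPOSITE_VACCINES[key]
    if key in VACCINE_ALIASES:
        return frozenset({VACCINE_ALIASES[key]})
    return frozenset()  # unknown name: no components


def mentions_overlap(query_vaccine: str, case_vaccine: str) -> bool:
    """True when the two names share at least one component vaccine."""
    return bool(vaccine_components(query_vaccine) & vaccine_components(case_vaccine))


if __name__ == "__main__":
    print(mentions_overlap("DPT", "Quadrigen"))
    print(mentions_overlap("Chickenpox", "VARIVAX"))
```

Under these assumptions, a query about the DPT vaccine would be treated as potentially matching a case that mentions only Quadrigen, since the two share a component.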

Rudimentary temporal reasoning would also be helpful. For example, in the Wolfe

Q2 queries (i.e., “Hepatitis B vaccine can cause intractable seizure disorder after about

one day”), neither WN nor LA ranked the source case in the top-10 cases of their

respective Results Lists. One reason for this, presumably, is that the Wolfe case does

not mention “one day” or “day”, but mentions “12 hours” in the following sentence:

“The temporal relationship between the immunization and the chain of seizure activity

which followed, starting within the 12 hours after the immunization, compel [sic] one

to conclude that there is a causal relationship between the two.” As another example, in

response to Walton search Q2 (“MMR vaccine can cause myocarditis after over three

weeks”), the following expressions all seem to have misled LA: “three test cases”,

“several weeks of evidentiary hearings”, “after the vaccine (i.e., no time specified)”,

“after the designation of this case”, “all three special masters”, “three theories”,

“after determining the evidence”. An AR system should be able to retrieve and analyze

sentences that contain the presuppositional information about temporal relationships

that is important for argumentation.
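
The rudimentary temporal reasoning suggested by the Wolfe and Walton examples can be sketched as normalizing duration expressions to a common unit before comparing them. The following Python sketch is a simplified illustration, not a description of any existing system. The number-word list, the units covered, and the factor-of-two tolerance in roughly_matches are all assumptions chosen for the example.

```python
import re
from typing import List

# Assumption: only hours, days and weeks are covered.
HOURS_PER_UNIT = {"hour": 1, "day": 24, "week": 24 * 7}

# Assumption: a short list of spelled-out numbers.
NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5, "six": 6}

# A duration is a number (digits or word) immediately followed by a time unit.
DURATION_PATTERN = re.compile(
    rf"\b(?P<amount>\d+|{'|'.join(NUMBER_WORDS)})\s+(?P<unit>hour|day|week)s?\b",
    re.IGNORECASE,
)


def extract_durations_in_hours(text: str) -> List[int]:
    """Return every duration found in the text, converted to hours."""
    durations = []
    for match in DURATION_PATTERN.finditer(text):
        raw = match.group("amount").lower()
        amount = int(raw) if raw.isdigit() else NUMBER_WORDS[raw]
        durations.append(amount * HOURS_PER_UNIT[match.group("unit").lower()])
    return durations


def roughly_matches(a_hours: float, b_hours: float, factor: float = 2.0) -> bool:
    """True when two durations differ by at most the given factor (assumed tolerance)."""
    low, high = sorted((a_hours, b_hours))
    if low <= 0:
        return low == high
    return high / low <= factor


if __name__ == "__main__":
    query = extract_durations_in_hours("after about one day")
    case = extract_durations_in_hours("starting within the 12 hours after the immunization")
    print(query, case, roughly_matches(query[0], case[0]))
    # Expressions such as "three test cases" contain a number but no duration.
    print(extract_durations_in_hours("three test cases"))
```

Because the pattern requires a number to be directly followed by a time unit, expressions such as "three test cases" or "several weeks of evidentiary hearings" yield no duration. A genuine duration that is unrelated to symptom onset would still be extracted, so further context would be needed to decide relevance.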

Background information is also needed to filter out cases and sentences that are not

really relevant to reasoning about injuries. For instance, the Sawyer queries sought

cases involving injuries to the arm that might have been caused by the tetanus vaccine.

The IR systems, however, returned cases that involved arms in other ways: Garcia, for

instance, involved vaccinations “in the right and left arms”; Pociask involved a

vaccinee with a chronic arm problem; and the Hargrove decision referred to “the site

that the antigen was introduced [the arm].” An AR system should be able to distinguish

such assertions and identify those about a vaccination causing injury to an arm.

Finally, if the user’s goal is retrieving arguments relevant to proving or disproving

Althen Condition 1, then assertions about general causation (Medical-theory-assertions,

Table 1) and specific causation (Specific-causation-assertions) are highly important.

Such presuppositional information poses not only lexical challenges (recognizing

alternative ways of expressing causal relations, such as “results in” and “brings about”),

but also pragmatic challenges (recognizing successful and unsuccessful ways of

reasoning to causal conclusions). Current IR systems do little more than search for key

terms, without a coherent model incorporating the kinds of semantic and pragmatic

legal information needed for adequate AR.

Conclusions & Future Work

Although the baseline study suggests that current legal IR systems are quite

effective at returning relevant cases, it also identified a number of ways in which the IR

systems’ performance was not ideal from the standpoint of AR. We anticipate that a

module added to a full-text legal IR system could extract semantic and pragmatic legal

information from the top n cases returned and analyze them to improve retrieval

precision and construct better Case Reports and Decision Abstracts.

We assume that, given a corpus of argument-annotated legal cases, a program

could learn to extract this argument-related information from new case texts using a

multi-level annotation approach based on the DeepQA architecture of the IBM Watson

question-answering system [2]. We are now attempting to achieve that goal.

References

[1] Ashley, K., Brüninghaus, S. “Automatically Classifying Case Texts and Predicting Outcomes.” In

Artificial Intelligence and Law 17(2):125–165 (2009).

[2] Ashley, K., Walker, V. “Toward Constructing Evidence-Based Legal Arguments Using Legal Decision

Documents and Machine Learning.” In: Proceedings ICAIL 2013 176-180 ACM, New York (2013).

[3] Daniels J., Rissland E. Finding legally relevant passages in case opinions. Proceedings ICAIL 1997. 39–46 ACM, New York (1997).

[4] Gonçalves T., Quaresma P. Is linguistic information relevant for the text legal classification problem?

In: Proceedings ICAIL 2005. 168–176 ACM, New York (2005).

[5] Hachey B., Grover C. Extractive summarization of legal texts. Artificial Intelligence and Law 14:305–

345 (2006).

[6] Jackson P., Al-Kofahi K., Tyrrell A., Vacher A. Information extraction from case law and retrieval of

prior cases. Artificial Intelligence 150:239–290 (2003).

[7] McCarty, L. Deep semantic interpretations of legal texts. Proceedings ICAIL 2007, 217–224 ACM,

New York (2007).

[8] Mochales, R. and Moens, M-F. Argumentation Mining. Artificial Intelligence and Law 19:1-22 (2011).

[9] Saravanan M., Ravindran B. Identification of rhetorical roles for segmentation and summarization of a

legal judgment. Artificial Intelligence and Law 18:45–76 (2010).

[10] Thompson, P. Automatic categorization of case law. Proc. ICAIL 2001. 70–77 ACM, NY (2001).

[11] Turtle, H. Text Retrieval in the Legal World, Artificial Intelligence and Law, 3:5-6 (1995).

[12] Uyttendaele C., Moens M-F., Dumortier J. SALOMON: automatic abstracting of legal cases for effective

access to court decisions. Artificial Intelligence and Law 6:59–79 (1998).

[13] Walker, V., Carie, N., DeWitt, C. and Lesh, E. A framework for the extraction and modeling of fact-finding reasoning from legal decisions: lessons from the Vaccine/Injury Project Corpus. Artificial

Intelligence and Law 19:291-331 (2011).