A Language of Suggestions for Program Parallelization

advertisement

A Language of Suggestions for Program

Parallelization

Chao Zhang‡ , Chen Ding† , Kirk Kelsey† , Tongxin Bai† , Xiaoming Gu† , Xiaobing Feng?

†

Department of Computer Science, University of Rochester

?

Key Laboratory of Computer System and Architecture,

Institute of Computing Technology, Chinese Academy of Sciences

‡

Intel Beijing Lab

The University of Rochester

Computer Science Department

Rochester, NY 14627

Technical Report 948

July 2009

Abstract

Coarse-grained task parallelism exists in sequential code and can be leveraged to boost

the use of chip multi-processors. However, large tasks may execute thousands of lines of

code and are often too complex to analyze and manage statically. This report describes

a programming system called suggestible parallelization. It consists of a programming interface and a support system. The interface is a small language with three primitives for

marking possibly parallel tasks and their possible dependences. The support system is

implemented in software and ensures correct parallel execution through speculative parallelization, speculative communication and speculative memory allocation. It manages

parallelism dynamically to tolerate unevenness in task size, inter-task delay and hardware

speed.

When evaluated using four full-size benchmark applications, suggestible parallelization

obtains up to a 6 times speedup over 10 processors for sequential legacy applications up

to 35 thousand lines in size. The overhead of software speculation is not excessively high

compared to unprotected parallel execution.

The research is supported by the National Science Foundation (Contract No. CNS-0834566, CNS0720796), IBM CAS Faculty Fellowship, and a gift from Microsoft Research. Any opinions, findings, and

conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily

reflect the views of the funding organizations.

1

Introduction

It is generally recognized that although automatic techniques are effective in exploiting loop

parallelism (Hall et al., 2005; Blume et al., 1996; Allen and Kennedy, 2001; Wolfe, 1996),

they are not sufficient to uncover parallelism among complex tasks in general-purpose code.

Explicit parallel programming, however, is not yet a satisfactory solution. On the one hand,

there are ready-to-use parallel constructs in mainstream languages such as Fortran, C, C++

and Java and in threading libraries such as Microsoft .NET and Intel TBB. On the other

hand, parallel programming is still difficult because of non-determinism. A program may

run fine in one execution but generate incorrect results or run into a deadlock in the next

execution because of a difference in thread interleaving.

One possible solution, which is explored in this report, is to let a user identify parallelism but let an automatic system carry the burden of safe implementation. We present

suggestible parallelization, where a user (or an analysis tool) parallelizes a sequential program by inserting suggestions or cues. A cue indicates likely rather than definite parallelism.

It exists purely as a hint and has no effect on the semantics of the host program—the output of an execution with suggestions is always identical to that of the original sequential

execution without suggestions, independent of task scheduling and execution interleaving.

We address the problem of extracting coarse-grained parallelism from legacy sequential

code. There are at least two main difficulties. First, the parallelism among large tasks may

depend on external factors such as program input. Second, such parallelism depends on

program implementation. It is often unclear whether a large piece of complex code can be

executed in parallel, especially if it uses third-party libraries including proprietary libraries

in the binary form. The benefit of parallelization hinges not only on these uncertain dependences but also on the unknown granularity and resource usage. The goal of suggestible

parallelization is to relieve a programmer of such concerns and shift the burden to the implementation system. It aims to enable a programmer to parallelize a complex program

without complete certainty of its behavior.

We use a form of software speculative parallelization, which executes a program in parallel based on suggestions, monitors for conflicts, and reverts to sequential execution when

a conflict is detected. It is based on a system called BOP , which implements speculation

tasks using Unix processes because it assumes that part of program code is too complex

to analyze; therefore, all data may be shared, and most memory accesses may reach any

shared data (Ding et al., 2007). During execution, BOP determines whether a set of tasks

are parallel and whether they have the right size and resource usage to benefit from parallel

execution. It has been used to parallelize several pieces of legacy code as well as one usage

of a commercial library.

The BOP system has two significant limitations. At the programming level, there is

no way to indicate possible dependences between tasks. At the implementation level, it

executes one task group at a time and does not start new speculation until the entire group

finishes.

In this report, we extend the BOP system with three additions.

• The suggestion language: We use a construct from BOP to suggest possible paral1

lelism. We extend Cytron’s post/wait construct (Cytron, 1986) in suggesting task

dependence. These suggestions may be incorrect or incomplete.

• Continuous speculation: We build a new run-time system that supports irregular task

sizes and uneven hardware speed by starting a speculation task whenever a processor

becomes available.

• Parallel memory allocation: We support parallel memory allocation and deallocation

during continuous speculation.

Speculative parallelization has been an active area of research in the past two decades.

Related issues are being addressed in a growing body of literature on atomic sections and

transactions. Most problems of software speculation have been studied in thread-based

solutions (Mehrara et al., 2009; Tian et al., 2008; Kulkarni et al., 2007; Cintra and Llanos,

2005; Welc et al., 2005; Dang et al., 2002; Gupta and Nim, 1998; Rauchwerger and Padua,

1995). The problems are different in a process-based solution. For example, a thread

replicates data at the array or object level and uses indirection to access them speculatively.

A process replicates the virtual address space and accesses data without indirection. As a

result, our design has to use different types of meta-data and different strategies in heap

management (memory allocation in particular), correctness checking and error recovery.

Speculation incurs significant run-time costs. These include not just the effort wasted

on failed speculation but also the overhead of monitoring and checking necessary even when

all speculation succeeds. We will evaluate the benefit and cost of our design using fullsize benchmark programs and show that the loss in efficiency is not excessive compared to

unprotected parallel executions.

2

The Suggestion Language

The suggestion language has three primitives shown in Table 1. We first describe them

using a running example and then discuss the interaction with the speculation system.

2.1

Spawn and Skip

Spawn-and-skip (SAS ) encloses a code block, as shown in Table 1. When an execution

reaches a SAS block, it spawns a new speculative task. The new task skips ahead and

executes the code after the block, while the spawning task executes the code in the block.

SAS parallelism cascades in two ways. It is nested if the block code reaches another SAS

block and spawns an inner task, or it is iterative (non-overlapping) if the post-block code

reaches a SAS block and spawns a peer task.

Although different in name and syntax, SAS is identical in meaning to the construct

called a possibly parallel region (PPR ). Although a single construct, PPR can express function parallelism, loop parallelism, and their mixes (Ding et al., 2007). In this report, we

use SAS and PPR as synonyms: one reflects the operation while the other its intent. As for

PPR we allow only non-overlapping spawns (by suppressing SAS calls inside a SAS block).

2

SAS {

//code block

}

spawn and skip: fork a speculative

process that executes from the

end of the code block

Post(channel,

addr, size)

post to channel a total of size

bytes from address addr

Wait(channel)

wait for data from channel

(a) the language has three suggestions: SAS for possible

parallelism, and Post-Wait for possible dependences

work.display

while (! work.empty) {

w = work.dequeue

t = w.process

result.enqueue( t )

}

result.display

SAS { work.display }

chid = 0

while ( ! work.empty ) {

w = work.dequeue

SAS {

t = w.process

if ( chid > 0 ) Wait(chid)

result.enqueue( t )

Post(chid, &result.tail, sizeof(...))

}

chid ++ }

result.display

(b) a work loop with

two display calls

(c) suggested parallelization with

mixed function and loop parallelism

queue work, result

Figure 1: The suggestion language in (a), illustrated by an example in (b,c)

We demonstrate the suggestion language using the example program in Figure 1(b).

The main body is a work loop, which takes a list of items from the work queue, invokes

the process function on each item, and stores the result in the result queue. It displays

the work and result queues before and after the work loop. The code does not contain

enough information for static parallelization. The size of the work queue is unknown. The

process call may execute thousands of lines of code with behavior dependent on the work

item. The queue may be implemented with third-party code such as the standard template

library.

The example program may be parallelizable. This possibility is expressed in Figure 1(c)

using two SAS blocks. The first block indicates the possible (function) parallelism between

the pre-loop display and the loop. The second block indicates the possible (loop) parallelism.

They compose into mixed parallelism—the pre-loop display may happen in parallel with

the loop execution.

SAS is a general primitive. An execution of a SAS block yields the pairwise parallelism

between the code block and its continuation. It is composable. An arbitrary degree of

parallelism can be obtained by different or repeated SAS block executions. The recursive

nature means that parallel tasks are spawned one at a time. Because of sequential spawning,

the code between SAS tasks naturally supports sequential dependences outside SAS blocks

3

such as incrementing a loop index variable. Dependences inside SAS blocks, however, need

a different support, as we discuss next.

2.2

Post and Wait

Post and wait are shown with their complete parameters in Table 1(a). They express possible dependences between SAS tasks. A data dependence requires two actions for support:

• communication: the new value must be transferred from the source of the dependence

to the sink

• synchronization: the sink must wait for the source to finish and the new value to

arrive

Communication is done through a named channel. SAS tasks are processes and have identical virtual address spaces. The posted data is identified by the data address and size.

A post is non-blocking, but a wait blocks upon reading an empty channel and provides

synchronization by suspending the calling task. After the channel is filled, the waiting task

retrieves all data from the channel and then continues execution.

SAS post-wait provides a means of speculative communication. It has two mutually

supportive features:

Unrestricted use The suggestion language places no restrictions on the (mis-)use of parallelization hints as far as program correctness is concerned. This is valuable considering the many ways a programmer may make mistakes. A hint may be incorrect: the

source, the sink, or the data location of the dependence may be wrong, a post may

miss its matching wait or vice versa, or they may cross-talk on the wrong channels.

Furthermore, a hint may be too imprecise. For example, a post may send a large

array in which only one cell is new. Finally, hints may be incomplete or excessive.

They may show not enough or more than enough dependences.

Run-time support The SAS run-time system has two important properties. First, it

guarantees sequential progress (through the use of competitive recovery, to be discussed in Section 2.3). An incorrect or mismatched post or wait is ignored when the

front of the recovery execution sweeps past them. Second, the system monitors the

data access by a task. At a post, the system communicates only memory pages that

have been modified, avoiding unnecessary data transfer.1 The monitoring system may

also detect errors in suggestions and missed dependences and report them to a user.

With unrestricted use and run-time support, the suggestion language acquires unusual

flexibility in expressiveness—not only does an incorrectly annotated program run correctly,

but it may run correctly in parallel. Our running example demonstrates this flexibility.

1

The run-time system also pools multiple posts and delivers them together to a waiting task, effectively

aggregating the data transfer.

4

In the example loop, each iteration inserts into the result queue, thus creating a dependence to other insertions in previous iterations. In Figure 1(c), the dependence is expressed

by inserting a wait before the list insertion and a post after the insertion. At run time,

they generate the synchronization and communication by which each task first waits for the

previous insertion, then receives the new tail of the list, and finally makes its own insertion

and sends the result to the next task.

The code in Figure 1(c) uses a channel identifier, chid, for two purposes. One is to

avoid waiting by the first iteration. The other is to ensure that communication occurs only

between consecutive iterations. Without the channel numbering, the program may lose

parallelism but it will still produce the correct result. With the channel numbering, the

suggestions still have a problem because the post in the last iteration is redundant. The

redundant post may not be removable if the last iteration of the while-loop is unknown

until the loop exits or if there are hidden early exits. Conveniently for a programmer, the

extra post is harmless because it does not affect correctness nor the degree of parallelism

due to the speculative implementation.

Suggestions can be occasionally wrong or incomplete, while parallelization is still accomplished if some of them are correct. This feature represents a new type of expressiveness in

a parallel programming language. It allows a user to utilize incomplete knowledge about a

program and parallelize partial program behavior.

2.3

Implicit Synchronization and Competitive Recovery

A conflict happens in a parallel execution when two concurrent tasks access the same datum

and at least one task writes to it. This is known as the Bernstein condition (Allen and

Kennedy, 2001). Since conflicts produce errors, they are not allowed in conventional parallel

systems. In SAS , conflicts are detected and used to enable implicit synchronization. As a

result, errors happen as part of a normal execution. This leads to the use of competitive

recovery, which starts error recovery early assuming an error will happen later.

Implicit synchronization A parallel task has three key points in time: the start point,

the end point, and the use point. Since there can be many use points, most parallel languages let a user specify a synchronization point in code that precedes all use points. The

synchronization point can be implicit or explicit. In OpenMP, a barrier synchronization

is implied at the end of a parallel loop or section. Other languages use explicit constructs

such as sync in Cilk and get in Java.

For ease of programming, SAS does not require a user to write explicit synchronization.

The first use point of a SAS task happens at the first parallel conflict, which is detected

automatically by the speculation substrate. Implicit synchronization permits a user to

write parallel tasks without complete knowledge of its use points. For example in the code

in Figure 1(c), the last SAS task exits the loop and displays the result queue. Since

some of the results may yet to be added, the last task may make a premature use of the

queue and incur a conflict that implicitly delay the display until all results are ready. The

synchronization happens automatically without user intervention.

5

Sometimes a user knows about use points and can suggest synchronization by inserting

posts and waits. In the example loop, properly inserted post-wait pairs may allow existing

results being displayed while new results are being computed. If the user misses some use

points, implicit synchronization still provides a safety net in terms of correctness.

Competitive recovery Speculation may fail in two ways: either a speculative task incurs

a conflict, or it takes too long to finish. SAS overcomes both types of failures with the use

of an understudy task. The understudy task starts from the last correct state and nonspeculatively executes the program alongside the speculative execution. It has no overhead

and executes at the same speed as the unmodified sequential code. If speculation fails

because of an error or high overhead, the understudy will still produce the correct result

and produce it as quickly as the would-be sequential run. Figuratively, the understudy task

and speculation tasks are the two teams racing to finish the same work, and the faster team

wins. In the worst case when all speculation fails, the program finishes in about the same

time as the fastest sequential run. We call it competitive recovery because it guarantees

(almost) zero extra slowdown due to recovery.

The speculation-understudy race is a race between parallel and sequential execution.

But it is actually more than a race. Neither the understudy nor the speculation has to

execute the entire program. When either one finishes the work, the other is aborted and

re-started. The last finish line becomes the new starting line. Thus, competitive recovery

is as much a parallel-sequential cooperation as a parallel-sequential competition.

The example code in Figure 1(b,c) may have too little computation for a parallel execution, especially one using heavy weight processes. In that case, competitive recovery will

finish executing the loop first and abort all speculation. If the amount of computation is

input dependent, the run-time system would switch between sequential and parallel modes

to produce the fastest speed, without any additional burden on the programmer.

3

Continuous Speculation

3.1

Basic Concepts

A speculation system runs speculative tasks in parallel while ensuring that they produce

the same result as the sequential execution. It deals with three basic problems.

• monitoring data accesses and detecting conflicts — recording shared data accesses by

parallel tasks and checking for dependence violations

• managing speculative states — buffering and maintaining multiple versions of shared

data and directing data accesses to the right version

• recovering from erroneous speculation — preserving a safe execution state and reexecuting the program when speculation fails

6

A speculation task can be implemented by a thread or a process. Most software methods

target loop parallelism and use threads. These methods rely on a compiler or a user to

identify shared data and re-direct shared data access when needed. A previous system,

BOP , shows that processes are cost-effective for very coarse-grain tasks (Ding et al., 2007).

BOP protects the entire address space. It divides program data into four types, shared data,

likely private data, value-checked data, and stack data, and manages them differently.

A process has several important advantages when being used to implement a general

suggestion language. First, a process can be used to start a speculation task anywhere

in the code, allowing mixing parallelism from arbitrary SAS blocks. Second, a process is

easier to monitor. By using the page protection mechanism, a run-time system can detect

which pages are read and modified by each task without instrumenting a program. For

example, there is no instrumentation for shared data or its access in the parallelized code

in Figure 1(c). Third, processes are well isolated from each other’s actions. Modern OS

performs copy-on-write and replicates a page when it is shared by two tasks. Logically, all

tasks have an identical but separate address space. This leads to easy error recovery. We

can simply discard an erroneous task by terminating the task process.

3.2

Managing Speculative Access

For coarse-grain tasks, most cost comes from managing data access. A process-based design

has three advantages in minimizing the cost of shared data access. First, page protection

incurs a monitoring step only at the first access. There is no monitoring of subsequent

accesses to the same page. Second, each task has its private copy of the address space; data

access is direct and has no indirection overhead. Finally, we use lazy conflict detection, that

is, checking correctness after a task finishes and not when the task executes, so there is no

synchronization delay for shared data accesses.

Although it incurs almost no overhead at the time of a speculative access, a processbased design has several downsides. First, the cost of creating a process is high. However,

if a task has a significant length, the cost is amortized. Second, page-level monitoring leads

to false sharing and loss of parallelism. The problem can be alleviated by data placement,

which is outside the scope of this report. Finally, modified data must be explicitly moved

between tasks. Unless one modifies the operating system, the data must be explicitly copied

from one address space to another. To reduce the copying cost, we use group commits.

Group commit As part of the commit, a correct speculation task must explicitly copy

the changed data to later tasks. For a group of k concurrent tasks on k processors, each task

can copy its data in two ways: either to the next task or to the last task of the group. The

first choice incurs a copying cost quadratic to k because the same data may be copied k − 1

times in the worst case. The second choice has the minimal, linear cost, where each changed

datum is copied only once. We call this choice group commit or group update. It is a unique

requirement in process-based speculation. We next show how to support group commit in

continuous speculation, when new tasks are constantly being started before previous tasks

finish.

7

3.3

Dual-group Activity Window

On a machine with k processors, the hardware is fully utilized if we can always run k active

tasks in parallel. Continuous speculation tries to accomplish this by starting the next

speculation task whenever a processor becomes available. At each moment of execution,

speculative activities are contained in the activity window, which is the set of speculative

tasks that have started but not yet completed. Here completion means the time after

correctness checking and group commit. In SAS , tasks are spawned one at a time, so there

is a total order in their starting time. We talk about “earlier” and “later” tasks based on

their relative spawning order.

Conceptually tasks in an activity window are analogous to instructions in an instructionreordering buffer (IRB) on modern processors. Continuous speculation is analogous to

instruction lookahead, but the implementation is very different because a task has an unpredictable length, it can accumulate speculative state as large as its address space allows,

and it should commit as part of a group.

A dual-group activity window divides the active tasks into two groups based on their

spawning order in such a way that every task in the first group is earlier than any task

in the second group. When a task in either group finishes execution, the next task enters

the window into the second group. When all tasks in the first group complete the group

commit, the entire group exits, and the second group stops expanding and becomes the first

group in the window. The next task starts the new second group, and the same dual-group

dynamics continue in the activity window.

For a speculative system that requires group commits, the dual-group activity window is

both a necessary and sufficient solution to maximize hardware utilization. It is obvious that

we need groups for group commits. It turns out that two groups are sufficient. When there

is not enough active parallelism in the activity window, the second group keeps growing by

spawning new tasks to utilize the available hardware.

Continuous speculation is an improvement over the BOP system. It uses batch speculation: the system spawns k tasks on k processors and stops new spawning until the current

batch of tasks finishes. Batch speculation is effectively a single-group activity window. It

leads to hardware underutilization when the parallelism in the group is unbalanced. Such

an imbalance may happen for the following four reasons.

• Uneven task size In the extreme case, one task takes much longer than other tasks,

and all k − 1 processors will be idle waiting.

• Inter-spawn delay The delay is caused by the time spent in executing the code between

SAS blocks. With such delay, even tasks of the same size do not finish at the same

time, again leaving some processors idle.

• Sequential commit Virtually all speculative systems check correctness in the sequential

order, one task at a time. Later checks always happen at a time when the early tasks

are over and their processors are idle. In fact, this makes full utilization impossible

with a single-group activity window.

8

• Asymmetrical hardware Not all processors have the same speed. For example, a

hyperthreaded core has a different speed when running one or two tasks. As a result,

identical tasks may take a different amount of time to finish, creating the same effect

as uneven task size.

A dual-group window solves these four problems with continuous speculation. The

solution makes speculative parallelization more adaptive by tolerating variations in software

demand and hardware resources. All four problems become more severe as we use more

processors in parallel execution. Continuous speculation is necessary to obtain a scalable

performance. As we will show in evaluation, continuous speculation has a higher cost at

low processor counts but produces a greater speedup at high processor counts.

Next we discuss four components of the design: controlling the activity window, updating data, checking correctness, and supporting competitive recovery.

Token passing For a system with p available processors, we need to reserve one for

competitive recovery (as discussed later in this section). We use k = p − 1 processor tokens

to maintain k active tasks. The first k tasks in a program form the first group. They start

without waiting for a token but finish by passing a token. Each later task waits for a process

token before executing and releases the token after finishing. To divide active tasks into

two groups, we use a group token, which is passed by the first group after a group commit

to the next new task entering the activity window. The new task becomes the first task of

the next group.

Variable vs. fixed window size The activity window can be of a fixed size or a variable

size. Token passing bounds the group size from below to be at least k, the number of

processor tokens. It does not impose an upper bound because it allows the second group

to grow to an arbitrary size to make up for the lack of parallelism in the activity window.

Conceivably there is a danger of a runaway window growth as a large group begets an even

larger group. To bound the window and group size, we augment the basic control by storing

the processor tokens when the second group exceeds a specific size. The window expansion

stops until the first group finishes and releases the group token. In evaluation, we found

that token passing creates an “elastic” window that naturally contracts and does not enter

a run away expansion. However, the benefit of variable over fixed window size is small in

our tests.

Triple updates Upon successful completion, a speculative task needs to commit its

changes to shared data. In a process-based design, this means copying modified pages

from the task process to other processes. In continuous speculation, a modified page is

copied three times in a scheme we call triple updates. Consider group g. The first update

happens at the group commit and copies modified pages in all but the last task of g to the

last task. This must be done before the understudy process can start after g.

The next group, g+1, is executing concurrently with g, and may have nearly finished

when g finishes. Since there is no sure benefit in updating g+1, we copy the changes by g

9

to group g+2 in the second update. Recall that g and g+2 are serialized by the passing of

the group token. The second update ensures that g + 2 and later speculation have the new

data before their execution. This is in fact the earliest point for making the new data of g

visible to the remaining program execution.

The third update of group g happens at the end of group g+1. As in the first update,

the goal here is to produce a correct execution state before starting the understudy process

after g+1. Because of group commit and a dual-group activity window, the changes of each

group are needed to form a correct state for two successive understudy processes, hence the

need for the two updates.

In process-based design, inter-task copying is implemented by communication pipes. We

need 3 pipes for each group. Since the activity window has two groups, we need a total of

6 pipes, independent of the size of the activity window.

Dual-map checking Correctness checking is sequential. After task t passes the check,

we check t+1. The speculation is correct if task t+1 incurs no write-read and write-write

conflicts on the same page.2 The page accesses of each task are recorded in two access maps,

one for reads and one for writes. To be correct, the read and write set of task t+1 cannot

overlap with the write set of preceding tasks in the activity window. In batch speculation,

the writes of task t+1 are checked against the union of the write maps up to task t. During

the check, the write set of t+1 is merged into the unified write map for use by t+2. The

unified map is reset after each group.

In continuous speculation, a task executes in parallel with peer tasks from two groups.

Each group g must also check with the writes by g-1. We use two unified maps, one for g-1

and one for g. Each task checks with both unified maps and extends the g unified map.

The inter-group check may be delayed to the end of g and accomplished by a group check.

However, the group check is too imprecise to support speculative Post-Wait, which we will

discuss in Section 3.4.

There are two interesting problems in implementing dual-map checking: how to reuse

the maps, and when to reset them for the next use. With map reuse, a total of two unified

maps suffice regardless of the size of the activity window. In naive thinking, we should

be able to reset the unified map of g-1 after g finishes. However, this would be too early

because the unified map is needed by g − 1 in the second update. The correct reset point

is after the first task of g+1.

Competitive recovery In continuous speculation, the understudy task is started after

the first task, as illustrated in Figure 2. As the activity window advances, the understudy

task is re-started after each task group. An understudy task is always active (after the

first task) as long as speculation continues. As a result, it requires a processor constantly,

leaving one fewer processor for parallel execution. We refer to this as the “missing processor”

problem in continuous speculation.

2

Write-write conflicts may seem harmless with page replication. However, page-level monitoring cannot

rule out a write-read conflict. After a write, the later task opens the access permission for the page, which

allows subsequent reads to the page and opens the possibility of unmonitored write-read conflicts. To ensure

10

i=1

i=2

LEAD

A1

i=3

A2

Group A

i=4

UNDY

B1

i=5

B2

Group B

2nd Update A

1st Update A

UNDY

i=6

C1

i=7

C2

Group C

2nd Update B

3rd Update A

and

1st Update B

UNDY

i=8

D1

i=9

UNDY

D2

Group D

3rd Update B

and

1st Update C

be

Figure 2: Example

of 9 SAS tasks

3 processors,

showingscheme

the use of token

Figure 3:execution

An illustration

of theonimproved

rotating

passing and triple updates. Processor tokens are passed along solid lines with a double

headed arrow. The group token is passed along dotted lines. The hands point to the triple

killed.

thefirst

understudy

the speculation

lead in themaintains

next stage.

updatesAnd

by the

two groups.becomes

Continuous

2 active speculation

tasks and 1 understudy task (marked “UNDY”) at all times.

3.1

Token Relay

In comparison, batch speculation does not suffer from the missing processor problem. In

each

batch, speculation

the first task isisnot

speculative

backup execution.

The understudy

The n-depth

spawned

byand

theneeds

n −no1-depth

speculation

but waits unprocess

starts

after

the

lead

process.

If

all

batch

tasks

were

to

have

the

same

size, the

til there is a free processor. We use a single token pipe to pass the baton

from a

understudy would hardly run. In comparison in continuous speculation, all tasks in the

compulsively killed understudy or a completely finished speculation to the new but

activity window are speculative after the first task finishes.

suspended one. When such a process dying, it writes to the pipe and the waiting one

A question is where to draw the finish line for the parallel-sequential race. Since the unwill derstudy

start to isdo

its real work. The cores are kept as busy as possible.

run with two speculation groups, it has two possible finish lines: the completion

We

don’t

need or

to the

pass

the baton

from

a compulsively

because

of the

first group

completion

of both

groups.

We choose thekilled

secondspeculation

because favoring

it means

the track

speculations

and no deeper

speculations.

speculation

meansfor

that

it won’t give is

upwrong

the competition

as long as

there is a chance the

3.2

parallel execution may finish early. A victory by the understudy, on the other hand, means

no performance improvement and no benefit from parallel execution.

Triple Updates

Anspeculations

example Figure

2 showswin,

an example

of continuous

There are

9 SAS

If the

in a group

the changed

data byspeculation.

them is updated

three

times.

tasks, represented by vertical bars marked with i = 1, . . . , 9. There are 3 processors p = 3,

The first update is after conflict checking and for the understudy in the next group

so there are k = p − 1 = 2 processor tokens. The first 3 SAS tasks form the first group,

to guarantee correctness. The second and third updates are for deeper speculations

correctness,

we have to the

disallow

write-write page

sharing.data. The first update is an incomplete

which

start without

previously

changed

as the same as in batch scheme which doesn’t need to update the changed data by

the last speculation. The second and third

11 updates are complete which update all

4

Post (channel, addrs)

1 remove unmodified pages from send list

2 copy the pages to channel

Wait (channel)

3 wait for data from channel

4 for each page

5 if an order conflict

drop the page from the channel

6

7 else if page has been accessed by me

abort me // receiver conflict

8

9 else accept the page

PageFaultHandler (page)

10 if writing to a posted page

// sender conflict

11 abort next task

EndSAS

12 if the last spec in the group

13 copy pages from group commit

14

skip page if recved before by a wait

(a) Implementation of speculative post and wait, including synchronization (line 3) and data

transfer (lines 1,2,4-9) and handling of sender, receiver, and order conflicts (5-6, 7-8, 10-11).

Task 1

Task 2

Post(1, x)

Wait(2)

Post(3, x)

Post(2, x)

Task 3

Wait(3)

Wait(1)

(b) Example tasks and post/wait

dependences.

A stale page is

transferred by Post(1), but Task 3

ignores Wait(1) (conflict detection at

line 5) and runs correctly.

Figure 3: The implementation of speculative post/wait and an example.

A. When a task finishes, it passes a processor token, shown by a line with a double headed

arrow, to a task in the next group B. When group A finishes, it passes the group token,

shown by a dotted line, to start group C. The tasks enter the activity window one individual

at a time and leave the window one group at a time. In the steady state, it maintains two

task groups with two speculation tasks active at all times. This can be seen in the figure.

For example, the token passing mechanism delays task B1 until a processor is available.

After finishing, group A copies its speculative changes three times: at the end of A for

the understudy of B, C, at the end of B for the understudy of C, D, and at the start of C

for its and future speculation. The understudy tasks are marked “UNDY”. An understudy

task is always running during the continuous speculation.

3.4

Speculative Post and Wait

Post and wait complement each other and also interact with run-time monitoring during

speculation and the commit operation after speculation. The related code is shown in

Figure 3 with numbered lines. Run-time monitoring happens at PageFaultHandler, called

12

when a page is first accessed by a speculation task. Commit happens at EndSAS, called at

the end of a SAS block.

Post(channel, addrs) removes the unmodified pages from the send list (at line 2),

which not only avoids redundant communication but also makes later conflict checking more

accurate because we know every page has been written to by the sender. Wait(channel)

blocks until the waited channel is filled (line 3). For each page, it first checks for conflicts

before accepting it (lines 5–9).

Conflict handling Incorrect use of BOP post and wait may create three types of conflicts.

The sender conflict happens when a page is modified after it is posted, in which case the

receiver sees an intermediate state of the sender. This is detected at the page fault handler

(line 10). We conservatively abort all later speculation (line 11). The receiver conflict

happens when a received page has been accessed before the Wait call, which means that

the receiver has missed the new data from the sender (recall from line 2 that only modified

pages are transferred) and should be aborted. The receiver conflict is checked at line 7.

An order conflict happens when multiple post/wait pairs communicate the same page in

a conflicting order. For example, a receiver receives two inconsistent versions of a page from

two senders. Since only modified pages are transferred, one of the two copies must contain

stale data. Order conflicts are checked at the time of receipt (line 5). The receiver tests

the overlap of communication paths. The communication path of a post/wait pair includes

the intermediate tasks between the sender task and the receiver task in the spawning order.

Two communication paths have a correct overlap if two conditions are met: they start at

the same sender, or the end of one path is the start of the other. Any other overlap is an

order conflict, and the incoming page is dropped. The previously received page may be still

be incorrect. Such error is detected as part of the correctness checking at the time of the

commit.

As a suggestion, either a post or a wait may miss its matching half. Unreceived posts are

removed when the changes by the sender task has become visible to everyone in the activity

window. Let the posting task be t, its result is fully visible when the succeeding task group

exits the activity window. Starving waits cause speculation to fail and be aborted by the

understudy task.

An example The three example tasks in Figure 3(b) demonstrate an order conflict when

three post/wait pairs communicate the same page x, which is modified by the first two tasks

and used by the third task. Post-wait serialized the writes by the first two tasks. However,

Post(1) is misplaced. It tries to send a stale version of x to the third task. At Wait(1),

Task 3 detects an incorrect overlap in the communication path and ignores the transferred

page. When post-wait actions are considered at commit time, we see that Task 3 incurs no

dependence conflict due to x (which would not be the case if its two waits happened in the

reverse order). Such order conflicts may happen in real programs. In pointer-based data

structures, different pointers may refer to the same object. In addition, two objects may

reside on the same page, and a correct order at the object level may lead to inconsistency

at the page level. This example again shows the flexibility of the suggestion language and

its implementation in tolerating erroneous and self-contradictory hints.

13

programs

bzip2

SPEC INT

2000

hmmer

SPEC INT

2006

namd

SPEC FP

2006

sjeng

SPEC INT

2006

prog.

description

source

size, prog.

language

sequential time

and

num. SAS tasks

distribution of task sizes (seconds)

min

1st qu.

183 SAS tasks,

52 sec. total

0.28

0.28

0.29

0.29

0.30

hmm-calibrate:

50 SASs, 929 sec.

4.4

8.0

8.8

9.6

14.9

hmm-search:

50 SASs, 439 sec.

18.6

18.7

18.8

18.8

19.2

data

4,244 lines,

compression written in C

mean 3rd qu.

max

gene

matching

35,992

lines,

written in C

molecular

dynamics

5,315 lines,

in C++

38 SASs,

1055 sec.

27.7

27.8

27.8

27.8

27.8

computer

chess

13,847

lines, in C

15 SASs,

1811 sec.

4.3

51.9

122.8

127.9

419.5

global heap pages heap pages

sequential

num.

committed post/wait

var size

allocated

allocated

time (sec) instructions

pages

pages

(pages) outside SAS inside SAS

num. of

page

faults

53

1.04E+11

74

1,608

0

0

732

984

hmmer

1,368

9.28E+11

10,704

582,574

1,267,511

0

3,083

11,230

namd

1,055

2.33E+12

64

11,558

900

34,200

0

34,357

sjeng

1,811

2.70E+12

880

43,947

0

0

0

1021

bzip2

Table 1: Characteristics of four application benchmarks

3.5

Dynamic Memory Allocation

In a speculation system, each SAS task may allocate and free memory independently

and in parallel with other tasks. A unique problem in process-based design is to maintain an identical address space across all tasks and to make speculative allocation and

deallocation in one task visible to all other tasks. The previous BOP system pre-allocates a

fixed amount of space to each task at the start of a batch and aborts speculation if a task

attempts to allocate too much space. The speculative allocation is made visible after the

batch finishes. Pre-allocation does not work for continuous speculation since the number of

speculation tasks is not known.

We have developed a SAS allocator building on the design of Hoard, an allocator for

multithreaded code (Berger et al., 2000), and McRT-Malloc, an allocator for transactional

memory (Hudson et al., 2006). As Hoard, the SAS allocator maintains a global heap divided

into pages. New pages are allocated from the global heap into per-thread local heap, and

near empty pages are returned to the global heap. To reduce contention, the SAS allocator

allocates and frees a group of pages each time at the global level. To support speculation

and recovery, the SAS allocator delays freeing the data allocated before a task until the end

of the task, as done in McRT-Malloc.

The SAS allocator has a few unique features. Since SAS tasks by default do not share

14

memory, the allocator maintains the meta-data of the global heap in a shared memory

segment visible to all processes. To reduce fragmentation, the SAS allocator divides a page

into chunks as small as 32 bytes. The per-page meta-data is maintained locally on the page,

not visible to the outside until the owner task finishes.

4

Evaluation

Testing environment We use the compiler support of the BOP system (Ding et al.,

2007), implemented in GNU GCC C compiler 4.0.1. All programs are compiled with “-O3”.

Batch and continuous speculation are implemented in C and built as a run-time library.

We use a Dell workstation which has four dual-core Intel 3.40 GHz Xeon processors for a

total of 8 CPUs. Each core supports two hyper-threads. The machine has 16MB of cache

per core and 4GB of physical memory.

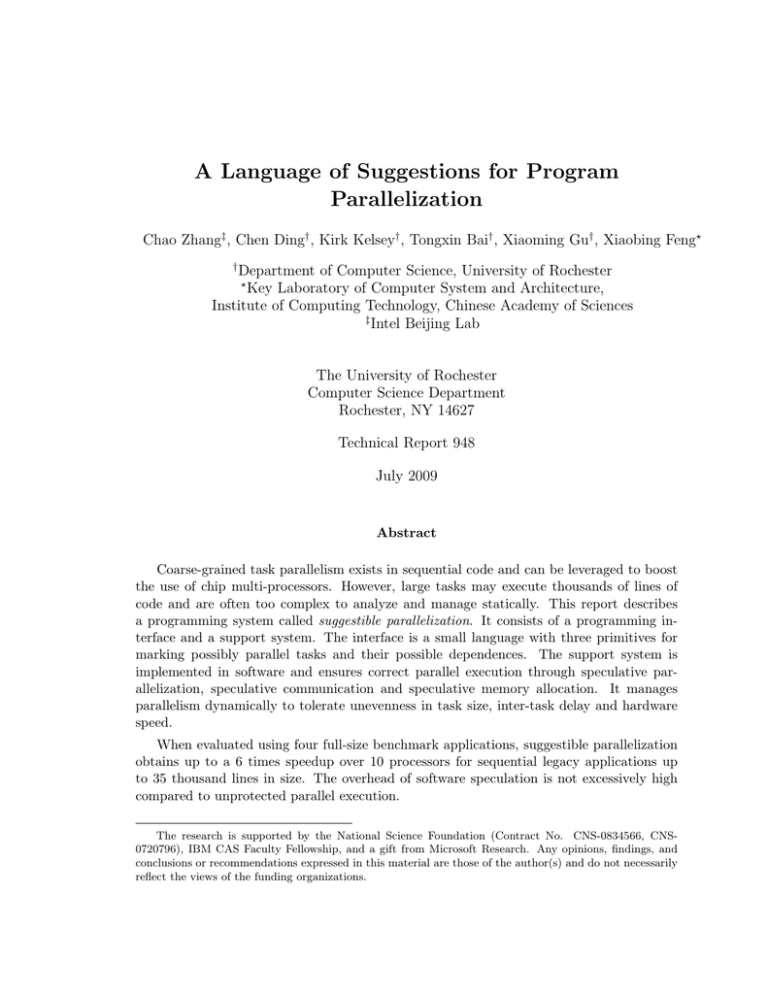

Benchmarks Our test suite is given in Table 1. It consists of four benchmark programs,

including hmmer and sjeng from SPEC INT 2006, namd from SPEC FP 2006, and bzip2

from SPEC CPU 2000. We chose them for three reasons. First, they were known to us

to have good coarse-grained parallelism. In fact, all of them are over 99.9% parallelizable.

Second, they showed different characteristics in task size (see Table 1) and inter-task delay,

enough for us to evaluate the factors itemized in Section 3.3 and test the scalability of our

design. Finally, it turned out that some of them have parallel implementations that do not

use speculation. They allowed us to measure the overhead of the speculation system.

The programs are parallelized manually using the suggestion language and the help

of a profiling tool (running on the test input). The transformation includes separating

independent computation slices and recomputing their initial conditions for Namd, lifting

the random number generation (and its dependence) out of the parallel gene-search loop for

hmmer, removing dependences on four global variables, and marking several global variables

as checked variables (to enable parallelism across multiple chess boards) for Sjeng.

Post-wait is used in bzip2 to pass to the next task the location of the compressed data,

in hmmer-search to add results to a histogram and update a global score, and in hmmercalibrate to conduct a significance test over previous results and then update a histogram.

Hmmer and namd allocate 5GB and 4MB data respectively inside SAS tasks. Because it

lacks support for post-wait and parallel memory allocation, the original BOP system can

parallelize only one of the tests, sjeng. In evaluation, batch speculation refers to the one

augmented with post-wait and dynamic memory allocation.

As Table 1 shows, the applications have one to two static SAS blocks and 15 to 183

dynamic SAS tasks. The parallelism is extremely coarse. Each task has on average between

500 million and 10 billion instructions. The programs use a large amount of data, including

a quarter to 40 mega-byte of global data, 4 mega-byte to 2 giga-byte of dynamic allocation

outside SAS blocks, and up to 5 gig-byte of dynamic allocation inside SAS blocks.

Without speculation support, it would be near impossible for us to ensure correctness.

All programs are written in C/C++ and make heavy use of pointer data and indirections.3

3

One program, Namd, was converted into C from C++ using an internal tool.

15

x

x

x

x

x

o

o

o

o

o

6

+

5

5

o

x

o

o

2

+

x

o

x

o

x

+

x

o

0

0

+

o

x

non−speculative

continuous speculation

batch speculation

1

3

2

+

4

4

x

+

1

+

+

+

speedup

+

+

3

6

bzip2, 4K LOC

2

4

6

8

10

num. processors p

Figure 4: Bzip2 tasks has a significant inter-task delay and requires post-wait. Execution time is reduced from 53 to 15.8 seconds by batch speculation and to 11.3 seconds by

continuous speculation. The non-speculative version is bzip2smp by Isakov.

The size of the source code ranges from over 4 thousand in bzip2 to near 36 thousand in

hmmer. The SAS tasks may execute a large part of a test program including many loops

and functions. Not all code is included in the source form. In bzip2, up to 25% of executed

instructions are inside the glibc library. For complex programs like these, full address

space protection is important not just for correctness but also for efficiency (to avoid the

extra indirection in shared data access, as discussed in Section 3.2).

Continuous vs. batch speculation Figure ?? compares the speedup curve between

continuous and batch speculation, parameterized by the number of processors p shown by

the x-axis.4 Unless explicitly noted, all speedups are normalized based on the sequential

time shown in Table 1.

Three programs benefit from continuous speculation. Bzip2 is the only one with a nontrivial inter-task delay of about 16% of the task length, caused by the sequential reading of

the input file between compression tasks. The post-wait is also sequential and accounts for

4% of running time, although the post-wait code runs in parallel with the code for file input.

The improvement of continuous speculation over batch increases from 6% when p = 3 to

63% at p = 6 and stays between 39% and 63% for p between 6 and 11. Continuous at p = 5

is faster than batch at p = 11, a savings of 6 processors. The speedup curve levels off with 7

or more processors because the inter-task delay limits the scalability due to Amdahl’s law.

4

Because of the missing processor problem, continuous speculation automatically switches to batch speculation if p = 2.

16

hmmer, 35K LOC

+

+

8

8

+

+

4

x

o

+

+

x

o

o

x

o

x

o

x

+

x

o

o

x

o

6

x

o

0

non−speculative

continuous speculation

batch speculation

0

+

x

o

x

o

x

4

+

x

o

2

+

2

speedup

6

+

2

4

6

8

10

num. processors p

Figure 5: Hmmer has uneven task sizes in one SAS block and needs post-wait and dynamic

memory allocation. Execution time is reduced from 1548 to 326 seconds by batch and to

270 by continuous speculation. The non-speculative version uses OpenMP.

o

o

5

o

x

x

x

x

2

o

x

x

o

continuous speculation

batch speculation

0

0

x

o

1

x

o

1

x

o

3

3

o

x

2

speedup

4

o

o

x

4

5

o

x

6

6

namd, 5K LOC

2

4

6

8

10

num. processors p

Figure 6: Namd has perfectly balanced parallelism but needs dynamic memory allocation.

Execution time is reduced from 1090 to 197 seconds by batch and to 182 by continuous

speculation.

17

4

+

4

sjeng, 14K LOC

+

+

x

x

x

x

x

2

+

x

+

x

o

o

x

o

o

o

o

o

o

1

1

speedup

+

3

+

2

3

+

+

x

o

0

0

non−speculative

continuous speculation

batch speculation

1

2

3

4

5

6

7

8

num. processors p

Figure 7: Sjeng has very uneven task sizes. Execution time is reduced from 1924 seconds

to 855 by batch and to 666 by continuous speculation. We manually created the nonspeculative version.

Two tests, hmmer and sjeng, are improved in continuous speculation because of their

uneven task sizes. In Sjeng, the size of the 15 SAS tasks ranges from 4.3 seconds to 420

seconds (Table 1). Continuous speculation is 23% (at p = 5) to 76% (at p = 6) faster than

batch. Batch speculation shows a parallel anomaly: the performance was decreased by 26%

as the number of processors was increased from 5 to 6. This was because the two longest

SAS tasks were assigned to the same batch in the 5-processor run but to two batches in the

6-processor run, increasing the total time from the maximum of the two tasks’ durations to

their sum.

Compared to sjeng, hmmer has a smaller variance in task size in the calibration phase.

The sizes range between 4.4 seconds and 14.9 seconds. Continuous speculation is initially

35% slower than batch because of the missing-processor problem. However, it has better

scalability and catches up with the batch performance at p = 8 and outruns batch by 9%

at p = 11, despite using one fewer processor.

Namd has an identical task size, between 27.7 and 27.8 seconds in all 38 SAS tasks, and

no inter-task delay. Both continuous and batch speculation show near-linear scaling. The

parallel efficiency ranges between 82% at p = 2 to 71% at p = 8. At p = 9, continuous

speculation gains an advantage because of hyperthreading as we discuss next.

Hyperthreading induced imbalance Asymmetrical hardware can cause uneven task

sizes even in programs with equally partitioned work. On the test machine, hyperthreading

is engaged when p > 8. Some tasks have to share the same physical CPU, leading to

18

10 12 14 16 18

0

50

100

4

6

8

10 12 14 16 18

8

6

max 7 spec tasks (8 total)

max 6 spec tasks (7 total)

max 5 spec tasks (6 total)

4

size of activity window

bzip2 window dynamics

150

logic time (PPR instance num.)

Figure 8: The window size in three executions of bzip2, for p = 6, 7, 8, where a size greater

than 2(p − 1) means that more SAS tasks are needed to utilize p processors.

asymmetry in task speed in an otherwise homogeneous system. Batch speculation shows

erratic performance after p = 8, shown by a 5% drop in parallel performance in hmmer and

a 22% drop in namd, both at p = 9.

The performance of continuous speculation increases without any relapse. When p is

increased from 8 to 11, the performance is increased by 8% in hmmer and 17% in namd,

elevating the overall speedup from 4.6 to 5.0 and from 5.1 to 6.0 respectively. Three hyperthreads effectively add performance roughly equivalent to a physical CPU.

Variable-size activity window Continuous speculation adds new tasks to the second

group of the activity window when the existing tasks are not enough to keep all processors

utilized while the first group is running. A variable-size window does not place an upperbound on the size of the second gorup. However, the scheme raises the question whether a

larger window begets an even larger window, and the window grows indefinitely whenever

there is a need to expand.

We examine the dynamic window sizes during the executions of Bzip2, the program in

our test set with the most SAS tasks and hence the most activity windows. Figure 8 shows

on the x-axis the sequence number of the tasks and on the y-axis the number of tasks in

the activity window when task i exits the window (which is the largest window containing

i). The window dynamics shows that monotonic window growth does not happen. For

example at p = 7, the window size grows from the normal size of 12 to a size of 17 and

then retreats equally quickly back to 12. Similar dynamics is observed in all test cases.

The experiments show that the variable-size activity window is “elastic”: expanding to a

larger size when there is insufficient parallelism and contracting to the normal size when the

19

parallelism is adequate. When we measure the performance, we found that using variable

window sizes does not lead to significant improvement except in one program, sjeng. When

p = 6, 7, continuous speculation using variable-size windows is 14% and 6% faster than

using fixed-size windows.

Other factors of the design We have manually moved dependent operations outside

SAS tasks and found that post-wait produces similar performance compared to SAS tasks

without post-wait. With perfectly even parallelism, Namd has none of the benefit and

should expose fully the overhead of continuous speculation. The nearly parallel curves in

Figure 6 show that the overhead comes entirely from competitive recovery. Other overheads

such as triple updates and dual-map checking do not affect performance or scalability. One

factor we did not evaluate is the impact of speculation failure, since our test programs are

mostly parallel (except for conflicts after the last SAS task). A recent study shows that

adaptive spawning is an effective solution (Jiang and Shen, 2008). We plan to consider

adaptive spawning in our future work.

The cost of speculation Speculation protects correctness and makes parallel programming easier. The downside is the cost. We have obtained non-speculative parallel code for

three of the applications: bzip2smp, hand-parallelized by Isakov,5 hmmer we parallelized

with OpenMP in ways similar to (Srinivasan et al., 2005), and sjeng we parallelized by

hand. In terms of the best parallel performance, speculation is slower than non-speculative

code by 23%, 38%, and 28% respectively. There are two significant factors in the difference.

One is the cost of speculative memory allocation in hmmer. The other is data placement of

global variables. To avoid false sharing, our speculation system allocates each global variable on a separate page, which increases the number of TLB misses especially in programs

with many global variables such as sjeng (880 memory pages as shown in Table 1).

5

Related Work

Parallel languages Most languages let a user specify the unit of parallel execution explicitly: threads in C/C++ and Java; futures in parallel Lisp (Halstead, 1985); tasks in

MPI (MPI), Co-array Fortran (Numrich and Reid, 1998), and UPC (El-Ghazawi et al.,

2005); and parallel loops in OpenMP (OpenMP), ZPL (Lewis et al., 1998), SaC (Grelck

and Scholz, 2003) and recently Fortress (Allen et al., 2007). Two of the HPCS languages—

X10 (Charles et al., 2005) and Chapel (Chamberlain et al., 2007)—support these plus atomic

sections. Most are binding annotations and require definite parallelism.

SAS is similar to future, which was pioneered in Multilisp (Halstead, 1985) to mean

the parallel evaluation of the future code block with its continuation. The term future

emphasizes the code block (and its later use). Spawn-and-skip (SAS ), however, emphasizes

the continuation because it is the target of speculation.

5

Created in 2005 and available from http://bzip2smp.sourceforge.net/. The document states that the

parallel version is not fully interchangeable with bzip2. It is based on a different sequential version, so our

comparison in Figure 4 uses relative speedups.

20

Future constructs in imperative languages include Cilk spawn (Blumofe et al., 1995)

and Java future. Because of the side effect of a future task, a programmer has to use a

matching construct, sync in Cilk and get in Java, to specify a synchronization point for

each task. These constructs specify both parallelism and program semantics and may be

misused. For example, Java future may lead an execution to deadlock.

Speculative support allows one to use future without its matching synchronization.

There are at least three constructs: safe future for Java (Welc et al., 2005), ordered transaction (von Praun et al., 2007) and possibly parallel region (PPR) (Ding et al., 2007) for

C/C++. They are effectively parallelization hints and affect only program performance,

not semantics. Data distribution directives in data parallel languages like HPF are also

programming hints, but they express parallelism indirectly rather than directly (Allen and

Kennedy, 2001; Adve and Mellor-Crummey, 1998). SAS augments these future constructs

with speculative post-wait to express dependence in addition to parallelism.

Post and wait were coined for do-across loop parallelism (Cytron, 1986). They are

paired by an identifier, which is usually a data address. They specify only synchronization,

not communication, which was sufficient for use by non-speculative threads. Speculation

may create multiple versions of shared data. Signal-wait pairs were used for inter-thread

synchronization by inserting signal after a store instruction and wait before a load instruction (Zhai, 2005). A compiler was used to determine the data transfer. In an ordered

transaction, the keyword flow specifies that a variable read should wait until either a new

value is produced by the previous transaction or all previous transactions finish (von Praun

et al., 2007). Flow is equivalent to wait with an implicit post (for the first write in the

previous task).

SAS post-wait is similar to do-across in that the two primitives are matched by an

identifier. They are speculative, like signal-wait and flow. However, SAS post-wait provides

the pieces missing in other constructs: it specifies data communication (unlike do-across

post-wait and TLS signal-wait), and it marks both ends of a dependence (unlike flow ). As

far as we know, it is the first general program-level primitive for expressing speculative

dependence. The example in Figure 1(c) demonstrates the need for this generality.

Instead of describing dependences, another way is to infer them as the Jade language

does (Rinard and Lam, 1998). A programmer lists the set of data accessed by each function,

and the Jade system derives the ordering relation and the communication between tasks.

Our system addresses the problem of (speculative) implementation of dependences and is

complementary to systems like Jade.

Software methods of speculative parallelization Loop-level software speculation was

pioneered by the LRPD test (Rauchwerger and Padua, 1995). It executed a loop in parallel, recorded data accesses in shadow arrays, and checked for dependences after the parallel

execution. Later techniques speculatively privatized shared arrays (to allow for false dependences) and combined the marking and checking phases (to guarantee progress) (Cintra and

Llanos, 2005; Dang et al., 2002; Gupta and Nim, 1998). The techniques supported parallel

reduction (Gupta and Nim, 1998; Rauchwerger and Padua, 1995). Recently, a copy-ordiscard model, CorD, was developed to manage memory states for general C code (Tian

et al., 2008). It divided a loop into prologue, body, and epilogue and supported dependent

21

operations. These techniques are fully automatic but do not provide a language interface

for a user to manually select parallel tasks or remove dependences.

There are three types of loop scheduling (Cintra and Llanos, 2005). Static scheduling

is not applicable in our context since the number of future tasks is not known a priori.

The two types of sliding windows are analogous to batch and continuous speculation in this

report. Continuous speculation is a form of pipelined execution, which can be improved

through language (Thies et al., 2007) and compiler (Rangan et al., 2008) support. Our

design originated in the idea of RingSTM for managing transactions (Spear et al., 2008).

These techniques use threads and require non-trivial code changes to manage speculative states and speculative data access. Safe future used a compiler to insert read and

write barriers and a virtual machine to store speculative versions of a shared object (Welc

et al., 2005). Manual or automatic access monitoring were used to support speculative data

abstractions in Java (Kulkarni et al., 2007) and implement transaction mechanism for loop

parallelization (with static scheduling) (Mehrara et al., 2009). They all needed to redirect

shared data accesses. Two techniques used static scheduling of loop iterations (Mehrara

et al., 2009; Welc et al., 2005). Thread-based speculation is necessary to exploit fine-grained

parallelism. In addition, it has precise monitoring and can avoid false sharing. However,

some systems monitor data conservatively or at a larger granularity to improve efficiency.

This may lead to false alerts.

In comparison, we focus on coarse-grained tasks with possibly billions of accesses to

millions of potentially shared data. We use processes to minimize per-access overhead, as

discussed in Section 3.2. Process-based speculation poses different design problems, which

we have solved using a dual-group activity window, triple updates, dual-map checking, and

competitive recovery.

Process-based speculation was used previously in the BOP system (Ding et al., 2007).

We have augmented BOP in three ways: speculative post-wait, continuous speculation, and

parallel memory allocation. The original BOP system cannot parallelize any of our test

programs except for one. For that program, sjeng, continuous speculation is 23% to 76%

faster than the original batch speculation.

6

Summary

We have presented the design and implementation of suggestible parallelization, which includes a minimalistic suggestion language with just three primitives and several novel runtime techniques including speculative post-wait, parallel memory allocation, and continuous

speculation with a dual-group activity window, triple data updates and competitive error

recovery. Suggestible parallelization is 2.9 to 6.0 times faster than fully optimized sequential execution. It enables scalable performance and removes performance anomalies for

programs with unbalanced parallelism and inter-task delays and on machines with asymmetrical performance caused by hyperthreading.

A school of thought in modern software design argues for full run-time checks in production code. Tony Hoare is quoted as saying that removing these checks is like a sailor wearing

a lifejacket when practicing on a pond but taking it off when going to sea. We believe the

22

key question is the overhead of the run-time checking or in the sailor analogy, the weight of

the lifejacket. Our design protects parallel execution at an additional cost of between 23%

and 38% compared to unprotected parallel execution, which is a significant improvement

over the previous system and consequently helps to make speculation less costly for use in

real systems.

Suggestible parallelization attempts to divide the complexity in program parallelization

by separating the issues of finding parallelism in a program and maintaining the semantics

of the program. It separates the problem of expressing parallelism from that of implementing it. While this type of separation loses the opportunity of addressing the issues together,

we have shown that our design simplifies parallel programming and enables effective parallelization of low-level legacy code.

Acknowledgments

We wish to thank Jingliang Zhang at ICT for help with the implementation of BOP -malloc.

We wish also to thank Bin Bao, Ian Christopher, Bryan Jacobs, Michael Scott, Xipeng

Shen, Michael Spear, and Jaspal Subhlok for the helpful comments about the work and the

report.

References

Adve, Vikram S. and John M. Mellor-Crummey. 1998. Using integer sets for data-parallel program

analysis and optimization. In Proceedings of the ACM SIGPLAN Conference on Programming

Language Design and Implementation, pages 186–198.

Allen, E., D. Chase, C. Flood, V. Luchangco, J. Maessen, S. Ryu, and G. L. Steele. 2007. Project

fortress: a multicore language for multicore processors. Linux Magazine, pages 38–43.

Allen, R. and K. Kennedy. 2001. Optimizing Compilers for Modern Architectures: A Dependencebased Approach. Morgan Kaufmann Publishers.

Berger, Emery D., Kathryn S. McKinley, Robert D. Blumofe, and Paul R. Wilson. 2000. Hoard:

a scalable memory allocator for multithreaded applications. In Proceedings of the International

Conference on Architectual Support for Programming Languages and Operating Systems, pages

117–128.

Blume et al., W. 1996. Parallel programming with polaris. IEEE Computer, 29(12):77–81.

Blumofe, R. D., C. F. Joerg, B. C. Kuszmaul, C. E. Leiserson, K. H. Randall, and Y. Zhou. 1995. Cilk:

An efficient multithreaded runtime system. In Proceedings of the ACM SIGPLAN Symposium on

Principles and Practice of Parallel Programming. Santa Barbara, CA.

Chamberlain, Bradford L., David Callahan, and Hans P. Zima. 2007. Parallel programmability

and the chapel language. International Journal of High Performance Computing Applications,

21(3):291–312.

Charles, Philippe, Christian Grothoff, Vijay Saraswat, Christopher Donawa, Allan Kielstra, Kemal

Ebcioglu, Christoph von Praun, and Vivek Sarkar. 2005. X10: an object-oriented approach to

non-uniform cluster computing. In Proceedings of the ACM SIGPLAN OOPSLA Conference,

pages 519–538.

23

Cintra, M. H. and D. R. Llanos. 2005. Design space exploration of a software speculative parallelization scheme. IEEE Transactions on Parallel and Distributed Systems, 16(6):562–576.

Cytron, R. 1986. Doacross: Beyond vectorization for multiprocessors. In Proceedings of the 1986

International Conference on Parallel Processing. St. Charles, IL.

Dang, F., H. Yu, and L. Rauchwerger. 2002. The R-LRPD test: Speculative parallelization of

partially parallel loops. Technical report, CS Dept., Texas A&M University, College Station, TX.

Ding, C., X. Shen, K. Kelsey, C. Tice, R. Huang, and C. Zhang. 2007. Software behavior oriented

parallelization. In Proceedings of the ACM SIGPLAN Conference on Programming Language

Design and Implementation.

El-Ghazawi, Tarek, William Carlson, Thomas Sterling, , and Katherine Yelick. 2005. UPC: Distributed Shared Memory Programming. John Wiley and Sons.

Grelck, Clemens and Sven-Bodo Scholz. 2003. SAC—from high-level programming with arrays to

efficient parallel execution. Parallel Processing Letters, 13(3):401–412.

Gupta, M. and R. Nim. 1998. Techniques for run-time parallelization of loops. In Proceedings of

SC’98.

Hall, Mary W., Saman P. Amarasinghe, Brian R. Murphy, Shih-Wei Liao, and Monica S. Lam. 2005.

Interprocedural parallelization analysis in SUIF. ACM Transactions on Programming Languages

and Systems, 27(4):662–731.

Halstead, R. H. 1985. Multilisp: a language for concurrent symbolic computation. ACM Transactions

on Programming Languages and Systems, 7(4):501–538.

Hudson, Richard L., Bratin Saha, Ali-Reza Adl-Tabatabai, and Ben Hertzberg. 2006. McRT-Malloc:

a scalable transactional memory allocator. In Proceedings of the International Symposium on

Memory Management, pages 74–83.

Jiang, Yunlian and Xipeng Shen. 2008. Adaptive software speculation for enhancing the costefficiency of behavior-oriented parallelization. In Proceedings of the International Conference on

Parallel Processing, pages 270–278.

Kulkarni, Milind, Keshav Pingali, Bruce Walter, Ganesh Ramanarayanan, Kavita Bala, and L. Paul

Chew. 2007. Optimistic parallelism requires abstractions. In Proceedings of the ACM SIGPLAN

Conference on Programming Language Design and Implementation, pages 211–222.

Lewis, E, C. Lin, and L. Snyder. 1998. The implementation and evaluation of fusion and contraction

in array languages. In Proceedings of the SIGPLAN ’98 Conference on Programming Language

Design and Implementation. Montreal, Canada.

Mehrara, Mojtaba, Jeff Hao, Po-Chun Hsu, and Scott A. Mahlke. 2009. Parallelizing sequential

applications on commodity hardware using a low-cost software transactional memory. In Proceedings of the ACM SIGPLAN Conference on Programming Language Design and Implementation,

pages 166–176.

MPI. 1997. Mpi-2: Extensions to the message-passing interface. Message Passing Interface Forum

http://www.mpi-forum.org/docs/mpi-20.ps.

Numrich, R. W. and J. K. Reid. 1998. Co-array Fortran for parallel programming. ACM Fortran

Forum, 17(2):1–31.

24

OpenMP.

2005.

OpenMP

application

program

http://www.openmp.org/drupal/mp-documents/spec25.pdf.

interface,

version

2.5.

Rangan, Ram, Neil Vachharajani, Guilherme Ottoni, and David I. August. 2008. Performance scalability of decoupled software pipelining. ACM Transactions on Architecture and Code Optimization,

5(2).

Rauchwerger, L. and D. Padua. 1995. The LRPD test: Speculative run-time parallelization of loops

with privatization and reduction parallelization. In Proceedings of the ACM SIGPLAN Conference

on Programming Language Design and Implementation. La Jolla, CA.

Rinard, M. C. and M. S. Lam. 1998. The design, implementation, and evaluation of Jade. ACM

Transactions on Programming Languages and Systems, 20(3):483–545.

Spear, Michael F., Maged M. Michael, and Christoph von Praun. 2008. RingSTM: scalable transactions with a single atomic instruction. In Proceedings of the ACM Symposium on Parallel

Algorithms and Architectures, pages 275–284.

Srinivasan et al., U. 2005. Characterization and analysis of hmmer and svm-rfe. In Proceedings of

the IEEE International Symposium on Workload Characterization.