NEUROPHYSIOLOGY OF PERFORMANCE MONITORING AND ADAPTIVE BEHAVIOR

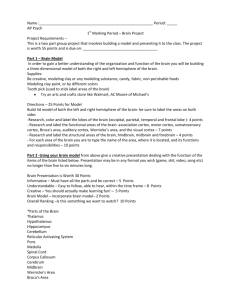
