Document 14076935

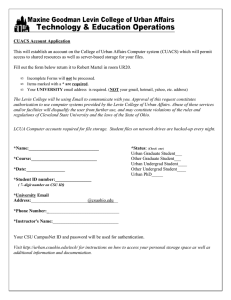
