A Matter Degrees of Engaging

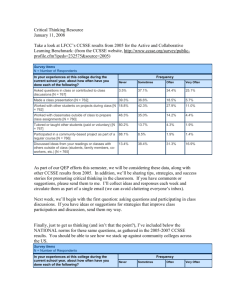

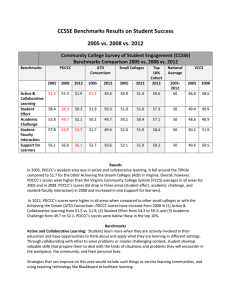
