The Stability of Geometric Inference in Location Determination

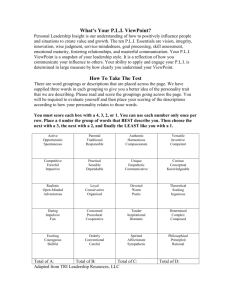
