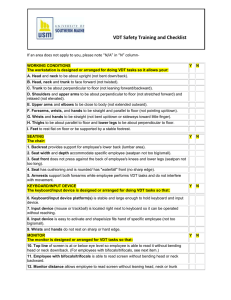

CIFE
