Given name:____________________ Family name:___________________ Student #:______________________


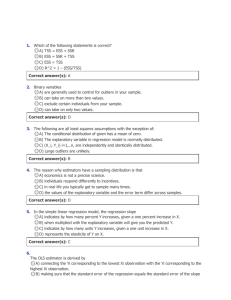

BUEC 333 FINAL

Multiple Choice (2 points each)

1) Suppose you draw a random sample of n observations, X1, X2, …, Xn, from a population with unknown mean μ. Which of the following estimators of μ is/are biased?
a.) half of the first observation you sample, X1/2
b.) X 2
c.) X 2
d.) a and b
e.) all of the above

2) Suppose you want to test the following hypothesis at the 5% level of significance:
H0: β1 = 0
H1: β1 ≠ 0
Which of the following statements is/are true?
a.) the probability of erroneously rejecting the alternative when it is true is 0.025
b.) the probability of erroneously rejecting the alternative when it is true is 0.05
c.) the probability of failing to reject the alternative when it is false is 0.95
d.) the probability of failing to reject the alternative when it is false is 0.05
e.) none of the above

3) Omitting a constant term from our regression will likely lead to:
a.) a lower R², a lower F statistic, and biased estimates of the independent variables
b.) a higher R², a lower F statistic, and biased estimates of the independent variables
c.) a higher R², a lower F statistic, and biased estimates of the independent variables
d.) a higher R², a higher F statistic, and biased estimates of the independent variables
e.) none of the above

4) Suppose we estimate the linear regression Yˆi 10 2 X i 5Fi, where Yi is person i's hourly wage, Xi is years of employment experience, and Fi equals one for women and zero for men. If we instead defined the variable Di to equal one for men and zero for women, the least squares estimates would be:
a.) Yˆi 5 2 X i 5Di
b.) Yˆi 5 2 X i 5Di
c.) Yˆi 10 2 X i 5Di
d.) Yˆi 10 2 X i 5Di
e.) none of the above

5) When you visually inspect regression residuals for heteroskedasticity in conjunction with the White test, under the null we would expect the relationship between the squared residuals and the explanatory variable to be such that:
a.) as the explanatory variable gets bigger the squared residual gets bigger
b.) as the explanatory variable gets bigger the squared residual gets smaller
c.) when the explanatory variable is quite small or quite large the squared residual will be large relative to its value otherwise
d.) there is no evident relationship
e.) all of the above

6) The law of large numbers says that:
a.) the sample mean is a consistent estimator of the population mean in large samples
b.) the sampling distribution of the sample mean approaches a normal distribution as the sample size approaches infinity
c.) the behaviour of large populations is well approximated by the average
d.) the sample mean is an unbiased estimator of the population mean in large samples
e.) none of the above

7) Omitting a relevant explanatory variable that is uncorrelated with the other independent variables causes:
a.) bias
b.) no bias and no change in variance
c.) no bias and an increase in variance
d.) no bias and a decrease in variance
e.) none of the above

8) Second-order autocorrelation means that the current error term, ɛt, is a linear function of:
a.) ɛt-1
b.) ɛt-1 squared
c.) ɛt-1 and ɛt-2
d.) ɛt-2
e.) none of the above

9) The Durbin-Watson test:
a.) assumes that the population error terms are normally distributed
b.) tests for positive serial correlation
c.) tests for second-order autocorrelation
d.) tests for multicollinearity
e.) none of the above

10) The RESET test is designed to detect problems associated with:
a.) specification error of an unknown form
b.) heteroskedasticity
c.) multicollinearity
d.) serial correlation
e.) none of the above

11) In the regression model $\ln Y_i = \beta_0 + \beta_1 \ln X_i + \varepsilon_i$:
a.) β1 measures the elasticity of X with respect to Y
b.) β1 measures the percentage change in Y for a one unit change in X
c.) β1 measures the percentage change in X for a one unit change in Y
d.) the marginal effect of X on Y is not constant
e.) none of the above

12) If a random variable X has a normal distribution with mean μ and variance σ², then:
a.) X takes positive values only
b.) $(X - \mu)/\sigma$ has a standard normal distribution
c.) $(X - \mu)^2/\sigma^2$ has a chi-squared distribution with n degrees of freedom
d.) $(X - \mu)/(s/\sqrt{n})$ has a t distribution with n-1 degrees of freedom
e.) none of the above

13) Which of the following pairs of independent variables would violate Assumption IV? That is, which pairs of variables are perfect linear functions of each other?
a.) Right shoe size and left shoe size of students enrolled in BUEC 333
b.) Consumption and disposable income in Canada over the last 30 years
c.) Xi and 2Xi
d.) Xi and Xi²
e.) none of the above

14) In the linear regression model, adjusted R² measures:
a.) the proportion of variation in Y explained by X
b.) the proportion of variation in X explained by Y
c.) the proportion of variation in Y explained by X, adjusted for the number of observations
d.) the proportion of variation in X explained by Y, adjusted for the number of independent variables
e.) none of the above

15) For a model to be correctly specified under the CLRM, it must:
a.) be linear in the coefficients
b.) include all relevant independent variables and their associated transformations
c.) have an additive error term
d.) all of the above
e.) none of the above

16) In the linear regression model, the stochastic error term:
a.) is unbiased
b.) measures the difference between the independent variable and its predicted value
c.) measures the variation in the dependent variable that cannot be explained by the independent variables
d.) a and c
e.) none of the above

17) In the Capital Asset Pricing Model (CAPM):
a.) β measures the sensitivity of the expected return of a portfolio to specific risk
b.) β measures the sensitivity of the expected return of a portfolio to systematic risk
c.) β is greater than one
d.) α is less than zero
e.) R² is meaningless

18) Suppose all of the other assumptions of the CLRM hold, but the presence of heteroskedasticity causes the variance estimates of the OLS estimator to be underestimated. Because of this, when testing for the significance of a slope coefficient using the critical t-value of 1.96, the type I error rate:
a.) is higher than 5%
b.) is lower than 5%
c.) remains fixed at 5%
d.) not enough information
e.) none of the above

19) Suppose that in the simple linear regression model Yi = β0 + β1Xi + εi on 100 observations, you calculate that R² = 0.5, the sample covariance of X and Z is 1500, and the sample variance of X is 100. Then the least squares estimator of β1 is:
a.) not calculable using the information given
b.) 1/3
c.) 1 / 3
d.) 2/3
e.) none of the above

20) From a gravity model of trade, you estimate that $\Pr[-0.9828 \le \beta_{distance} \le -0.7982] = 95\%$. This allows you to state that:
a.) there is a 95% chance that all potential estimates of the coefficient on distance are in this range
b.) you fail to reject the null hypothesis that the true coefficient on distance is equal to zero at the 5% level of significance
c.) there is a 5% chance that some of the potential estimates of the coefficient on distance fall outside of this range
d.) all of the above
e.) none of the above

Short Answer #1 (10 points)
Consider the R² of a linear regression model.
a.) Explain why the R² can only increase or stay the same when you add a variable to any given regression.
b.) Explain the relationship between the R² and the F statistic of any particular regression.
c.) Derive an expression relating the two statistics and indicate whether they demonstrate a linear relationship.
d.) Concerned about the possible effects of first-order serial correlation on an original set of OLS estimates, suppose you used Generalized Least Squares instead. In this case, can you use the R² of the two regressions as a criterion for determining a preferred specification? Explain your answer.

a.) The R² is defined as
$$R^2 = \frac{ESS}{TSS} = 1 - \frac{RSS}{TSS} = 1 - \frac{\sum_i e_i^2}{\sum_i (Y_i - \bar{Y})^2}$$
As the last term shows, adding an independent variable does nothing to change the denominator, the sum of squared deviations of the dependent variable from its sample mean. The new independent variable could decrease the sum of squared residuals if OLS determines that it improves the fit of the regression. At worst, OLS will assign a value of zero to the new coefficient, leaving the sum of squared residuals unchanged; because that choice reproduces the original fit, the minimized sum of squared residuals can never rise.
b.) They both capture the degree to which a particular regression specification fits the data. R² measures the proportion of the total variation in the dependent variable that is explained (or fitted) by the regression under consideration. The F statistic provides a means of determining the joint statistical significance of the included independent variables. It assesses the fit of a regression using the methodology of hypothesis testing, by constructing a null hypothesis under which none of the independent variables has any effect on the dependent variable in the population regression model.
c.) Starting from the F statistic,
$$F = \frac{ESS/k}{RSS/(n-k-1)} = \frac{ESS\,(n-k-1)}{RSS\,k}, \quad \text{where } R^2 = \frac{ESS}{TSS} = 1 - \frac{RSS}{TSS}.$$
Substituting $ESS = TSS \cdot R^2$ and $RSS = TSS - TSS \cdot R^2 = TSS(1 - R^2)$ gives
$$F = \frac{TSS \cdot R^2\,(n-k-1)}{TSS\,(1 - R^2)\,k} = \frac{R^2\,(n-k-1)}{(1 - R^2)\,k}$$
By inspection, this is clearly a non-linear relationship between the two. This is primarily because R² is bounded between zero and one, while F is bounded below by zero but can take values much larger than one.
d.) No, the R² values from these two regressions are simply not comparable, because the value of the total sum of squares changes across the two regressions.
In the simplest (univariate) case, the OLS estimator is contending with the following population regression model:
$$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t$$
so that
$$TSS = \sum_t (Y_t - \bar{Y})^2$$
Considering the same model in the context of the GLS estimator, the corresponding population regression model is
$$Y_t - \rho Y_{t-1} = \beta_0(1 - \rho) + \beta_1(X_t - \rho X_{t-1}) + u_t \quad \text{or} \quad Y_t^* = \beta_0^* + \beta_1 X_t^* + u_t,$$
where $Y_t^* = Y_t - \rho Y_{t-1}$, $\beta_0^* = \beta_0(1 - \rho)$, and $X_t^* = X_t - \rho X_{t-1}$, in which case
$$TSS = \sum_t \left(Y_t^* - \bar{Y}^*\right)^2$$
Because the dependent variable itself has changed, the two R² values measure explained shares of two different total variations.

Short Answer #2 (10 points)
Many times in this course, we have stated that if the assumptions of the classical linear regression model hold, then the OLS estimator is BLUE by the Gauss-Markov theorem.
a.) For each of the assumptions, state it and explain what it means.
b.) For each of the letters (B, L, U, E), state and explain what concept it stands for.
c.) For B and U, state which related assumptions establish these properties.
d.) Briefly explain one attractive feature of having the seventh assumption of the CNLRM satisfied.
e.) Briefly explain one unattractive feature of the seventh assumption of the CNLRM.

a.) Assumption 1: The regression model is (i) linear in the coefficients, (ii) correctly specified, and (iii) has an additive error term.
Assumption 2: The error term has zero population mean, or E(εi) = 0.
Assumption 3: All independent variables are uncorrelated with the error term, or Cov(Xi,εi) = 0 for each independent variable Xi (we say there is no endogeneity).
Assumption 4: No independent variable is a perfect linear function of any other independent variable (we say there is no perfect collinearity).
Assumption 5: Errors are uncorrelated across observations, or Cov(εi,εj) = 0 for any two observations i ≠ j (we say there is no serial correlation).
Assumption 6: The error term has a constant variance, or Var(εi) = σ² for every i (we say there is no heteroskedasticity).
b.) BEST: this refers to the size of the sampling variance of an estimator; in particular, BEST designates that an estimator attains the minimum sampling variance among all linear unbiased estimators.
LINEAR: this refers to the type of relationship between the estimator and the dependent variable; that is, we can calculate the estimator as a LINEAR function of the dependent variable.
UNBIASED: this refers to the expected value of the estimator across random samples; for an estimator to be UNBIASED, its expected value must equal the true parameter value being estimated.
ESTIMATOR: this refers to the exercise of using a sample of data to come up with our best guess of the true parameter value of interest.
c.) BEST: Assumptions 4 through 6. UNBIASED: Assumptions 1 through 3.
d.) Normally distributed errors make the OLS estimators themselves normally distributed, which is one of the ways forward in allowing us to conduct hypothesis tests in the context of the classical linear regression model. In particular, we have the t- and F-tests of statistical and joint significance in mind here.
e.) It is actually a fairly restrictive assumption, and one we are making about an entity (the population regression model's error term) that we fundamentally cannot observe. Consequently, a perhaps more attractive way forward is to invoke the Central Limit Theorem instead, provided we have a sufficiently large sample.

Short Answer #3 (10 points)
Consider the OLS estimator of the constant term, β0, in a linear regression model.
a.) What are the three components of this estimator?
b.) Explain why we are generally not interested in the particular value of the estimated constant term.
c.) Using a picture, show what happens when you suppress the constant term when the true constant term is less than zero and your population regression model is Yi = β0 + β1X1i + ɛi.
d.) Do you expect β1-hat to have positive or negative bias? Explain why.

a.) Number one: the true parameter value for the constant term. Number two: the constant impact of any specification errors. Number three: the mean of the error term (if this is not equal to zero).
b.) Ideally, we want to purge our results of "garbage" like numbers two and three. The role of the estimated constant is to act as a garbage collector. Consequently, we cannot put too much weight on its interpretation.
c.) A suitable picture shows the following. The darker line indicates the true population regression model, mapping our independent variable (on the X axis) into the dependent variable (on the Y axis). The regression calculations are then based on the observations indicated by the dots. The grey line through the origin indicates the constrained estimate of the linear relationship between the two variables.
d.) As the picture shows, we would expect this mistake to impart a negative bias to the OLS estimate of the slope coefficient: with a negative true intercept and positive values of X, the true (black) line is steeper than the line estimated when we constrain it to pass through the origin.

Short Answer #4 (10 points)
In Lecture 17, we suggested that there are two potential remedies for serial correlation: Generalized Least Squares (GLS) and Newey-West standard errors. Indicate whether the following statements are true, false, or indeterminate and explain your reasoning (NB: no partial credit given for correct but unexplained answers).
a.) GLS is BLUE.
b.) The GLS coefficient estimates are identical to OLS coefficient estimates.
c.) The error term in the GLS model is homoskedastic.
d.) With Newey-West standard errors, the coefficient estimates are identical to OLS coefficient estimates.
e.) Newey-West standard errors are unbiased estimators of the sampling variance of the GLS estimator.

a.) True, the GLS estimator has the attractive features of being best (having the minimum sampling variance), linear, and unbiased. GLS corrects for problems related to serial correlation (and potentially heteroskedasticity as well), provided we can correctly specify the nature of the problem with the errors. The big difference from OLS is that we must simultaneously estimate the regression coefficients and the autocorrelation parameter.
b.) True, by construction the betas from the original estimating equation appear in the formulation of the GLS estimator. This is another of the estimator's nice features.
c.) Indeterminate, as we have only considered the case of GLS with serial correlation. It takes a few more assumptions to ensure that the errors in the population regression model are homoskedastic.
d.) True, the Newey-West procedure keeps the OLS coefficient values unchanged, but uses a different expression for the estimate of the sampling variance (in other words, the standard error).
e.) False, Newey-West standard errors are known to be biased, simply less so than the baseline OLS standard errors. Instead, Newey-West standard errors are consistent estimators; that is, they get arbitrarily close to their true value (in a probabilistic sense) as the sample size goes to infinity.

Short Answer #5 (10 points)
Consider the very simple linear regression model $Y_i = \beta_1 + \varepsilon_i$, where the error $\varepsilon_i$ satisfies the six classical assumptions in an i.i.d. random sample of n observations.
a.) What minimization problem does the least squares estimator of β1 solve? That is, simply state what OLS seeks to do in this and every case.
b.) Solve that problem for the least squares estimator of β1. Show your work.
c.) Is the estimator you derived in part b.) unbiased? If yes, demonstrate it. If not, explain why not.
d.) What is the sampling variance of the least squares estimator of β1? Show your work.

a.) The least squares estimator seeks to minimize the sum of squared residuals:
$$\min_{\hat\beta_1} \sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} (Y_i - \hat\beta_1)^2 = \sum_{i=1}^{n} Y_i^2 - 2\hat\beta_1 \sum_{i=1}^{n} Y_i + n\hat\beta_1^2$$
b.) This simply entails taking the partial derivative of the sum of squared residuals above with respect to β1-hat, setting it equal to zero, and solving for β1-hat:
$$\frac{\partial \sum_{i=1}^{n} e_i^2}{\partial \hat\beta_1} = -\sum_{i=1}^{n} 2Y_i + \sum_{i=1}^{n} 2\hat\beta_1 = 0 \;\Rightarrow\; 2n\hat\beta_1 = 2\sum_{i=1}^{n} Y_i \;\Rightarrow\; \hat\beta_1 = \frac{1}{n}\sum_{i=1}^{n} Y_i = \bar{Y}$$
The second derivative, 2n, is positive, so this is indeed a minimum.
c.) Yes, from the following expression:
$$E(\hat\beta_1) = E(\bar{Y}) = E\left(\frac{1}{n}\sum_{i=1}^{n} Y_i\right) = \frac{1}{n}\sum_{i=1}^{n} E(\beta_1 + \varepsilon_i) = \frac{1}{n}\sum_{i=1}^{n}\left[\beta_1 + E(\varepsilon_i)\right] = \frac{1}{n}(n\beta_1 + 0) = \beta_1$$
d.) This one is a little tougher:
$$Var(\hat\beta_1) = Var\left(\frac{1}{n}\sum_{i=1}^{n} Y_i\right) = \frac{1}{n^2} Var\left(\sum_{i=1}^{n} Y_i\right) = \frac{1}{n^2}\left[\sum_{i=1}^{n} Var(Y_i) + 2\sum_{i=1}^{n}\sum_{j>i} Cov(Y_i, Y_j)\right]$$
Provided that we have i.i.d. sampling of our dependent variable, the covariance terms are all zero, and we can then state the following:
$$Var(\hat\beta_1) = \frac{1}{n^2}\sum_{i=1}^{n} Var(Y_i) = \frac{1}{n^2}\, n\sigma_Y^2 = \frac{\sigma_Y^2}{n},$$
where $\sigma_Y^2 = Var(\varepsilon_i) = \sigma^2$, since β1 is a constant.

Short Answer #6 (10 points)
Consider the following set of results for a log-log specification of a gravity model of trade for the years from 1870 to 2000.
For the following statistical tests, specify what the null hypothesis of the relevant test is and provide the appropriate interpretation given the results above:
a.) the t test associated with the independent variable, GDP (use a critical value of 2.58)
b.) the F test associated with GDP and DISTANCE in combination (use a critical value of 4.61)
c.) the RESET test using the F statistic (use a critical value of 6.64)
d.) the Durbin-Watson test (use a lower critical value of 1.55 and upper critical value of 1.80)
e.) the White test (use a critical value of 15.09)

a.) H0: βGDP = 0 versus H1: βGDP ≠ 0. Since the test statistic of 158.2 is so much larger (in absolute value) than the critical value, it is unlikely that the null is true, so we reject it and regard the coefficient on GDP as statistically significant.
b.) H0: β1 = β2 = ... = βk = 0 versus H1: at least one βj ≠ 0, where j = 1, 2, ..., k. Since the test statistic of 14040.15 is so much larger than the critical value, it is unlikely that the null is true, so we reject it and conclude that, collectively, our independent variables are important in explaining the variation observed in our dependent variable (provided the errors are normal!).
c.) The null hypothesis in this case is one of correct specification. Technically speaking, we are evaluating the joint significance of the coefficients on the powers (greater than one) of the predicted value of Y that are added to the regression. Given the results, the RESET test suggests that we decisively reject the null hypothesis of correct specification. Unfortunately, it gives us no further indication of how to deal with this problem.
d.) The null hypothesis in this case is no positive autocorrelation. Given the results, the Durbin-Watson test suggests that we reject the null hypothesis of no positive autocorrelation; we very likely have problems with serial correlation in this specification.
e.) The null hypothesis in this case is homoskedasticity (no heteroskedasticity). Given the results, the White test suggests that we reject the null hypothesis of homoskedasticity; we very likely have problems with heteroskedasticity in this specification.
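As a numerical check on the identity derived in Short Answer #1, part c, the following minimal Python sketch fits a simple regression (so k = 1) with the closed-form OLS formulas from the Useful Formulas sheet, then computes F both directly from ESS and RSS and from R² alone. The helper names and the data are hypothetical, chosen only for illustration.

```python
# Illustrative helper names; not taken from any library.
def ols_simple(x, y):
    """Closed-form OLS for Y = b0 + b1*X + e (one regressor, so k = 1)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


def r2_and_f(x, y, k=1):
    """Return (R^2, F computed from ESS and RSS, F computed from R^2 only)."""
    n = len(y)
    b0, b1 = ols_simple(x, y)
    y_bar = sum(y) / n
    y_hat = [b0 + b1 * xi for xi in x]
    tss = sum((yi - y_bar) ** 2 for yi in y)
    rss = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))
    ess = sum((yh - y_bar) ** 2 for yh in y_hat)
    r2 = ess / tss
    f_direct = (ess / k) / (rss / (n - k - 1))
    f_from_r2 = r2 * (n - k - 1) / ((1 - r2) * k)
    return r2, f_direct, f_from_r2


# Hypothetical data, for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
y = [2.1, 2.9, 4.2, 4.8, 6.3, 6.8, 8.1, 8.7]
r2, f_direct, f_from_r2 = r2_and_f(x, y)
print(f"R^2 = {r2:.4f}; F (ESS/RSS) = {f_direct:.2f}; F (from R^2) = {f_from_r2:.2f}")

# The mapping from R^2 to F is non-linear (here with assumed n = 30, k = 2).
n_demo, k_demo = 30, 2
for r2_value in (0.1, 0.5, 0.9, 0.99):
    f_value = r2_value * (n_demo - k_demo - 1) / ((1 - r2_value) * k_demo)
    print(f"R^2 = {r2_value}: F = {f_value:.1f}")
```

The two F values agree up to floating-point rounding. In the second loop, raising R² from 0.5 to 0.9 multiplies F by nine (from 13.5 to 121.5), which illustrates the non-linearity noted in part c.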
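The answer to Short Answer #1, part d, turns on the fact that GLS changes the dependent variable. A minimal sketch, assuming ρ is known (an illustrative 0.7) and dropping the first observation as in the Cochrane-Orcutt form of the transformation, shows that the TSS of Y and of the quasi-differenced $Y_t^* = Y_t - \rho Y_{t-1}$ differ, so the two R² values have different denominators. The data and helper names are hypothetical.

```python
# Illustrative helper names; not taken from any library.
def tss(values):
    """Total sum of squares: squared deviations from the sample mean."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def quasi_difference(series, rho):
    """Return series[t] - rho * series[t-1] for t = 1, ..., T-1.

    The first observation is dropped (Cochrane-Orcutt style); the
    Prais-Winsten variant instead keeps it, rescaled by sqrt(1 - rho**2).
    """
    return [series[t] - rho * series[t - 1] for t in range(1, len(series))]


rho = 0.7  # assumed value of the autocorrelation parameter
y = [10.0, 11.5, 12.1, 13.8, 14.2, 15.9, 16.4, 18.0]  # hypothetical data
y_star = quasi_difference(y, rho)

print(f"TSS of Y:  {tss(y):.3f}")
print(f"TSS of Y*: {tss(y_star):.3f}")
```

Because the denominators of the two R² values differ, a higher or lower R² after the transformation says nothing about which specification is preferred.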
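The picture asked for in Short Answer #3, parts c and d, can also be checked by simulation. This sketch assumes a true model with β0 = -5, β1 = 2, positive X values drawn uniformly from 1 to 10, and normal errors (all illustrative choices), and compares the OLS slope with a constant to the slope from the regression forced through the origin, $\sum X_i Y_i / \sum X_i^2$. The helper names are hypothetical.

```python
import random


# Illustrative helper names; not taken from any library.
def slope_with_constant(x, y):
    """OLS slope from Y = b0 + b1*X (intercept estimated)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx


def slope_through_origin(x, y):
    """OLS slope from Y = b1*X (intercept suppressed): sum(XY) / sum(X^2)."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)


beta0, beta1 = -5.0, 2.0   # assumed true parameters (negative intercept)
rng = random.Random(42)    # fixed seed so the sketch is reproducible
x = [rng.uniform(1.0, 10.0) for _ in range(1000)]
y = [beta0 + beta1 * xi + rng.gauss(0.0, 1.0) for xi in x]

b1_const = slope_with_constant(x, y)
b1_origin = slope_through_origin(x, y)
print(f"true beta1 = {beta1}; with constant = {b1_const:.3f}; through origin = {b1_origin:.3f}")
```

With positive X, the constrained slope tends to $\beta_1 + \beta_0\,E(X)/E(X^2)$, which lies below β1 whenever β0 < 0; under these assumed values that is roughly 2 - 5(5.5/37), or about 1.26, which matches the negative bias described in part d.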
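Short Answer #4, parts d and e, says that Newey-West leaves the OLS coefficients unchanged and only replaces the variance estimate. The sketch below illustrates that for a simple regression: the slope is plain OLS, and the standard error uses the Bartlett-kernel weights $w_l = 1 - l/(L+1)$ applied to $\tilde{x}_t e_t$, where $\tilde{x}_t = X_t - \bar{X}$. The simulated data (a persistent regressor and AR(1) errors with ρ = 0.8), the lag length of 8, and all function names are assumptions made for illustration, and no small-sample correction is applied.

```python
import random


# Illustrative helper names; not taken from any library.
def ols_with_residuals(x, y):
    """Simple OLS with an intercept; returns (b0, b1, residuals)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b0 = y_bar - b1 * x_bar
    resid = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
    return b0, b1, resid


def conventional_se_slope(x, resid):
    """Textbook OLS s.e.: sqrt(s^2 / sum (X - X_bar)^2) with s^2 = RSS / (n - 2)."""
    n = len(x)
    x_bar = sum(x) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    s2 = sum(e * e for e in resid) / (n - 2)
    return (s2 / sxx) ** 0.5


def newey_west_se_slope(x, resid, lags):
    """Newey-West (Bartlett kernel) s.e. of the OLS slope.

    With lags = 0 this reduces to White's HC0 standard error.
    """
    n = len(x)
    x_bar = sum(x) / n
    xt = [xi - x_bar for xi in x]            # demeaned regressor
    u = [a * e for a, e in zip(xt, resid)]   # x~_t * e_t
    s = sum(v * v for v in u)
    for lag in range(1, lags + 1):
        w = 1.0 - lag / (lags + 1.0)         # Bartlett weight
        s += 2.0 * w * sum(u[t] * u[t - lag] for t in range(lag, n))
    sxx = sum(a * a for a in xt)
    return (s / sxx ** 2) ** 0.5


# Simulated data (illustrative): persistent regressor and AR(1) errors.
rng = random.Random(1)
x, y = [], []
x_t, eps_t = 0.0, 0.0
for _ in range(500):
    x_t = 0.8 * x_t + rng.gauss(0.0, 1.0)
    eps_t = 0.8 * eps_t + rng.gauss(0.0, 1.0)
    x.append(x_t)
    y.append(1.0 + 2.0 * x_t + eps_t)

b0, b1, resid = ols_with_residuals(x, y)
se_ols = conventional_se_slope(x, resid)
se_nw = newey_west_se_slope(x, resid, lags=8)  # lag length is an arbitrary choice
print(f"slope = {b1:.3f}; conventional s.e. = {se_ols:.3f}; Newey-West s.e. = {se_nw:.3f}")
```

The slope printed is exactly the OLS slope; only the standard error changes, consistent with part d. The Bartlett weights are what keep the variance estimate non-negative.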
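The results derived in Short Answer #5 can be checked numerically. This sketch verifies that the sample mean minimizes the sum of squared residuals for one hypothetical sample (part b), then simulates many samples to show that the estimates average to β1 (part c) and have variance close to σ²/n (part d). The values β1 = 3, σ = 2, n = 25, the use of normal errors, and the helper names are assumptions for illustration only.

```python
import random
import statistics


# Illustrative helper names; not taken from any library.
def ls_estimate(sample):
    """Least squares estimator of beta1 in Y_i = beta1 + e_i: the sample mean."""
    return sum(sample) / len(sample)


def ssr(sample, b):
    """Sum of squared residuals when beta1-hat is set to b."""
    return sum((yi - b) ** 2 for yi in sample)


def monte_carlo(beta1, sigma, n, reps, seed=0):
    """Estimate beta1 on `reps` simulated samples of size n with N(0, sigma^2) errors."""
    rng = random.Random(seed)
    return [
        ls_estimate([beta1 + rng.gauss(0.0, sigma) for _ in range(n)])
        for _ in range(reps)
    ]


# Part b: the sample mean beats nearby values of beta1-hat.
sample = [2.0, 4.5, 3.1, 1.7, 5.2]  # hypothetical sample
b_hat = ls_estimate(sample)
print(ssr(sample, b_hat) < min(ssr(sample, b_hat - 0.1), ssr(sample, b_hat + 0.1)))

# Parts c and d: unbiasedness and Var(beta1-hat) = sigma^2 / n, by simulation.
beta1, sigma, n, reps = 3.0, 2.0, 25, 20000  # illustrative values
estimates = monte_carlo(beta1, sigma, n, reps)
print(f"mean of estimates = {statistics.fmean(estimates):.4f} (beta1 = {beta1})")
print(f"variance of estimates = {statistics.pvariance(estimates):.4f} (sigma^2 / n = {sigma ** 2 / n})")
```

The check in part b works because $SSR(\bar{Y} \pm h) = SSR(\bar{Y}) + nh^2$, so any departure from the sample mean raises the sum of squared residuals.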
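Useful Formulas:
$E(X) = \mu_X = \sum_{i=1}^{k} x_i p_i$
$Var(X) = \sigma_X^2 = E\left[(X - \mu_X)^2\right] = \sum_{i=1}^{k} (x_i - \mu_X)^2 p_i$
$\Pr(X = x) = \sum_{i=1}^{m} \Pr(X = x, Y = y_i)$
$\Pr(Y = y \mid X = x) = \dfrac{\Pr(X = x, Y = y)}{\Pr(X = x)}$
$E(Y \mid X = x) = \sum_{i=1}^{m} y_i \Pr(Y = y_i \mid X = x)$
$Var(Y \mid X = x) = \sum_{i=1}^{m} \left[y_i - E(Y \mid X = x)\right]^2 \Pr(Y = y_i \mid X = x)$
$E(Y) = \sum_{i=1}^{k} E(Y \mid X = x_i) \Pr(X = x_i)$
$\sigma_{XY} = Cov(X, Y) = \sum_{j=1}^{k} \sum_{i=1}^{m} (x_j - \mu_X)(y_i - \mu_Y) \Pr(X = x_j, Y = y_i)$
$Corr(X, Y) = \dfrac{Cov(X, Y)}{\sqrt{Var(X)\,Var(Y)}}$
$E(a + bX + cY) = a + bE(X) + cE(Y)$
$Var(a + bY) = b^2 Var(Y)$
$Var(aX + bY) = a^2 Var(X) + b^2 Var(Y) + 2ab\,Cov(X, Y)$
$E(Y^2) = Var(Y) + [E(Y)]^2$
$E(XY) = Cov(X, Y) + E(X)E(Y)$
$Cov(a + bX + cV, Y) = b\,Cov(X, Y) + c\,Cov(V, Y)$
$\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$
$s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2$
$s_{XY} = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})$
$r_{XY} = s_{XY}/(s_X s_Y)$
$\bar{X} \sim N\left(\mu_X, \sigma_X^2/n\right)$
$Z = \dfrac{\bar{X} - \mu_X}{\sigma_X/\sqrt{n}}$, $t = \dfrac{\bar{X} - \mu_X}{s/\sqrt{n}}$

For the linear regression model $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$:
$\hat\beta_1 = \dfrac{\sum_{i=1}^{n}(X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n}(X_i - \bar{X})^2}$ and $\hat\beta_0 = \bar{Y} - \hat\beta_1 \bar{X}$
$\hat{Y}_i = \hat\beta_0 + \hat\beta_1 X_{1i} + \hat\beta_2 X_{2i} + \dots + \hat\beta_k X_{ki}$
$R^2 = \dfrac{ESS}{TSS} = 1 - \dfrac{RSS}{TSS} = 1 - \dfrac{\sum_i e_i^2}{\sum_i (Y_i - \bar{Y})^2}$
$\bar{R}^2 = 1 - \dfrac{\sum_i e_i^2/(n-k-1)}{\sum_i (Y_i - \bar{Y})^2/(n-1)}$
$\widehat{Var}(\hat\beta_1) = \dfrac{s^2}{\sum_i (X_i - \bar{X})^2}$, where $s^2 = \dfrac{\sum_i e_i^2}{n-k-1}$ and $E(s^2) = \sigma^2$
$Z = \dfrac{\hat\beta_j - \beta_H}{\sqrt{Var[\hat\beta_j]}} \sim N(0, 1)$
$t = \dfrac{\hat\beta_1 - \beta_H}{s.e.(\hat\beta_1)} \sim t_{n-k-1}$
$\Pr\left[\hat\beta_j - t^*_{\alpha/2}\, s.e.(\hat\beta_j) \le \beta_j \le \hat\beta_j + t^*_{\alpha/2}\, s.e.(\hat\beta_j)\right] = 1 - \alpha$
$F = \dfrac{ESS/k}{RSS/(n-k-1)} = \dfrac{ESS\,(n-k-1)}{RSS\,k}$
$d = \dfrac{\sum_{t=2}^{T} (e_t - e_{t-1})^2}{\sum_{t=1}^{T} e_t^2} \approx 2(1 - \rho)$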
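The Durbin-Watson statistic d from the formula sheet, together with the decision rule behind Short Answer #6, part d, can be sketched as follows. The rule rejects H0 (no positive serial correlation) when d is below the lower critical value, fails to reject when d is above the upper one, and is inconclusive in between; the critical values 1.55 and 1.80 are the ones given in that question. The simulated residual series and the helper names are assumptions for illustration only.

```python
import random


# Illustrative helper names; not taken from any library.
def durbin_watson(resid):
    """d = sum_{t=2}^{T} (e_t - e_{t-1})^2 / sum_{t=1}^{T} e_t^2."""
    num = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    den = sum(e * e for e in resid)
    return num / den


def dw_decision(d, d_lower, d_upper):
    """Decision for H0: no positive first-order serial correlation."""
    if d < d_lower:
        return "reject H0"
    if d > d_upper:
        return "fail to reject H0"
    return "inconclusive"


D_LOWER, D_UPPER = 1.55, 1.80  # critical values from Short Answer #6, part d

rng = random.Random(7)
white_noise = [rng.gauss(0.0, 1.0) for _ in range(2000)]
ar1 = [0.0]
for _ in range(1999):
    ar1.append(0.9 * ar1[-1] + rng.gauss(0.0, 1.0))  # AR(1) with rho = 0.9

for label, e in (("white noise", white_noise), ("AR(1), rho = 0.9", ar1)):
    d = durbin_watson(e)
    print(f"{label}: d = {d:.3f} -> {dw_decision(d, D_LOWER, D_UPPER)}")
```

Consistent with $d \approx 2(1 - \rho)$, the white-noise series gives d near 2 while the strongly autocorrelated series gives d near 0.2, well inside the rejection region.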