Given name:____________________ Family name:___________________ Student #:______________________

advertisement

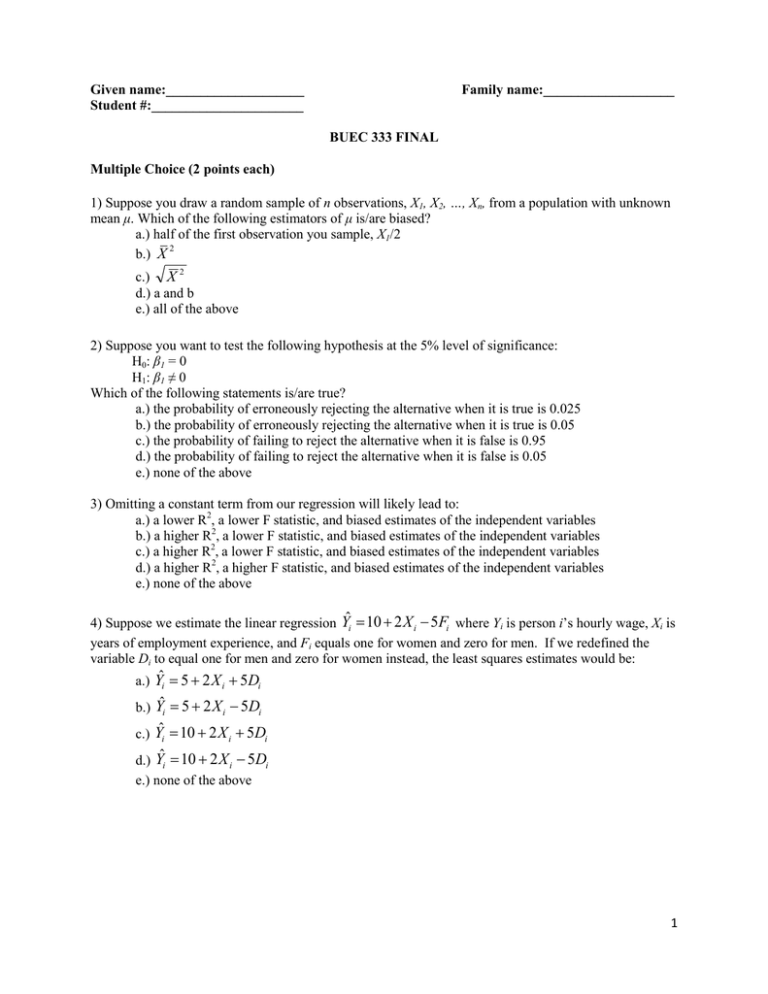

BUEC 333 FINAL

Multiple Choice (2 points each)

1) Suppose you draw a random sample of n observations, X1, X2, …, Xn, from a population with unknown mean μ. Which of the following estimators of μ is/are biased?
a.) half of the first observation you sample, X1/2
b.) X 2
c.) X 2
d.) a and b
e.) all of the above

2) Suppose you want to test the following hypothesis at the 5% level of significance:
H0: β1 = 0
H1: β1 ≠ 0
Which of the following statements is/are true?
a.) the probability of erroneously rejecting the alternative when it is true is 0.025
b.) the probability of erroneously rejecting the alternative when it is true is 0.05
c.) the probability of failing to reject the alternative when it is false is 0.95
d.) the probability of failing to reject the alternative when it is false is 0.05
e.) none of the above

3) Omitting a constant term from our regression will likely lead to:
a.) a lower R², a lower F statistic, and biased estimates of the independent variables
b.) a higher R², a lower F statistic, and biased estimates of the independent variables
c.) a higher R², a lower F statistic, and biased estimates of the independent variables
d.) a higher R², a higher F statistic, and biased estimates of the independent variables
e.) none of the above

4) Suppose we estimate the linear regression Yˆi 10 2 X i 5Fi where Yi is person i’s hourly wage, Xi is years of employment experience, and Fi equals one for women and zero for men. If we redefined the variable Di to equal one for men and zero for women instead, the least squares estimates would be:
a.) Yˆi 5 2 X i 5Di
b.) Yˆi 5 2 X i 5Di
c.) Yˆi 10 2 X i 5Di
d.) Yˆi 10 2 X i 5Di
e.)
none of the above

5) When you visually inspect regression residuals for heteroskedasticity in conjunction with the White test, under the null we would expect the relationship between the squared residuals and the explanatory variable to be such that:
a.) as the explanatory variable gets bigger the squared residual gets bigger
b.) as the explanatory variable gets bigger the squared residual gets smaller
c.) when the explanatory variable is quite small or quite large the squared residual will be large relative to its value otherwise
d.) there is no evident relationship
e.) all of the above

6) The law of large numbers says that:
a.) the sample mean is a consistent estimator of the population mean in large samples
b.) the sampling distribution of the sample mean approaches a normal distribution as the sample size approaches infinity
c.) the behaviour of large populations is well approximated by the average
d.) the sample mean is an unbiased estimator of the population mean in large samples
e.) none of the above

7) Omitting a relevant explanatory variable that is uncorrelated with the other independent variables causes:
a.) bias
b.) no bias and no change in variance
c.) no bias and an increase in variance
d.) no bias and a decrease in variance
e.) none of the above

8) Second-order autocorrelation means that the current error term, ɛt, is a linear function of:
a.) ɛt-1
b.) ɛt-1 squared
c.) ɛt-1 and ɛt-2
d.) ɛt-2
e.) none of the above

9) The Durbin-Watson test:
a.) assumes that the population error terms are normally distributed
b.) tests for positive serial correlation
c.) tests for second-order autocorrelation
d.) tests for multicollinearity
e.) none of the above

10) The RESET test is designed to detect problems associated with:
a.) specification error of an unknown form
b.) heteroskedasticity
c.) multicollinearity
d.) serial correlation
e.) none of the above

11) In the regression model ln Yi = β0 + β1 ln Xi + εi:
a.) β1 measures the elasticity of X with respect to Y
b.)
β1 measures the percentage change in Y for a one unit change in X
c.) β1 measures the percentage change in X for a one unit change in Y
d.) the marginal effect of X on Y is not constant
e.) none of the above

12) If a random variable X has a normal distribution with mean μ and variance σ² then:
a.) X takes positive values only
b.) (X − μ)/σ has a standard normal distribution
c.) (X − μ)²/σ² has a chi-squared distribution with n degrees of freedom
d.) (X − μ)/(s/√n) has a t distribution with n-1 degrees of freedom
e.) none of the above

13) Which of the following pairs of independent variables would violate Assumption IV? That is, which pairs of variables are perfect linear functions of each other?
a.) Right shoe size and left shoe size of students enrolled in BUEC 333
b.) Consumption and disposable income in Canada over the last 30 years
c.) Xi and 2Xi
d.) Xi and Xi²
e.) none of the above

14) In the linear regression model, adjusted R² measures:
a.) the proportion of variation in Y explained by X
b.) the proportion of variation in X explained by Y
c.) the proportion of variation in Y explained by X, adjusted for the number of observations
d.) the proportion of variation in X explained by Y, adjusted for the number of independent variables
e.) none of the above

15) For a model to be correctly specified under the CLRM, it must:
a.) be linear in the coefficients
b.) include all relevant independent variables and their associated transformations
c.) have an additive error term
d.) all of the above
e.) none of the above

16) In the linear regression model, the stochastic error term:
a.) is unbiased
b.) measures the difference between the independent variable and its predicted value
c.) measures the variation in the dependent variable that cannot be explained by the independent variables
d.) a and c
e.) none of the above

17) In the Capital Asset Pricing Model (CAPM):
a.) β measures the sensitivity of the expected return of a portfolio to specific risk
b.)
β measures the sensitivity of the expected return of a portfolio to systematic risk
c.) β is greater than one
d.) α is less than zero
e.) R² is meaningless

18) Suppose all of the other assumptions of the CLRM hold, but the presence of heteroskedasticity causes the variance estimates of the OLS estimator to be underestimated. Because of this, when testing for the significance of a slope coefficient using the critical t-value of 1.96, the type I error rate:
a.) is higher than 5%
b.) is lower than 5%
c.) remains fixed at 5%
d.) not enough information
e.) none of the above

19) Suppose that in the simple linear regression model Yi = β0 + β1Xi + εi on 100 observations, you calculate that R² = 0.5, the sample covariance of X and Z is 1500, and the sample variance of X is 100. Then the least squares estimator of β1 is:
a.) not calculable using the information given
b.) 1/3
c.) 1 / 3
d.) 2/3
e.) none of the above

20) From a gravity model of trade, you estimate that Pr[−0.9828 ≤ βdistance ≤ −0.7982] = 95%. This allows you to state that:
a.) there is a 95% chance that all potential estimates of the coefficient on distance are in this range
b.) you fail to reject the null hypothesis that the true coefficient on distance is equal to zero at the 5% level of significance
c.) there is a 5% chance that some of the potential estimates of the coefficient on distance fall outside of this range
d.) all of the above
e.) none of the above

Short Answer #1 (10 points)

Consider the R² of a linear regression model.
a.) Explain why the R² can only increase or stay the same when you add a variable to any given regression.
b.) Explain the relationship between the R² and the F statistic of any particular regression.
c.) Derive an expression relating the two variables and indicate whether they demonstrate a linear relationship.
d.) Concerned about the possible effects of first-order serial correlation on an original set of OLS estimates, suppose you used Generalized Least Squares instead.
In this case, can you use the R² of the two regressions as a criterion for determining a preferred specification? Explain your answer.

Page intentionally left blank. Use this space for rough work or the continuation of an answer.

Short Answer #2 (10 points)

Many times in this course, we have stated that if the assumptions of the classical linear regression model hold, then the OLS estimator is BLUE by the Gauss-Markov theorem.
a.) State each of the assumptions and explain what it means.
b.) For each of the letters (B, L, U, E), state and explain the concept it stands for.
c.) For B and U, state which related assumptions establish these properties.
d.) Briefly explain one attractive feature of having the seventh assumption of the CNLRM satisfied.
e.) Briefly explain one unattractive feature of the seventh assumption of the CNLRM.

Short Answer #3 (10 points)

Consider the OLS estimator of the constant term, β0, in a linear regression model.
a.) What are the three components of this estimator?
b.) Explain why we are generally not interested in the particular value of the estimated constant term.
c.) Using a picture, show what happens when you suppress the constant term when the true constant term is less than zero and your population regression model is Yi = β0 + β1X1i + ɛi.
d.) Do you expect β1-hat to have positive or negative bias? Explain why.

Short Answer #4 (10 points)

In Lecture 17, we suggested that there are two potential remedies for serial correlation: Generalized Least Squares (GLS) and Newey-West standard errors. Indicate whether the following statements are true, false, or indeterminate and explain your reasoning (NB: no partial credit given for correct but unexplained answers).
a.) GLS is BLUE.
b.)
The GLS coefficient estimates are identical to OLS coefficient estimates.
c.) The error term in the GLS model is homoskedastic.
d.) With Newey-West standard errors, the coefficient estimates are identical to OLS coefficient estimates.
e.) Newey-West standard errors are unbiased estimators of the sampling variance of the GLS estimator.

Short Answer #5 (10 points)

Consider the very simple linear regression model Yi = β1 + εi where the error εi satisfies the six classical assumptions in an i.i.d. random sample of N observations.
a.) What minimization problem does the least squares estimator of β1 solve? That is, simply state what OLS seeks to do in this and every case.
b.) Solve that problem for the least squares estimator of β1. Show your work.
c.) Is the estimator you derived in part b.) unbiased? If yes, demonstrate it. If not, explain why not.
d.) What is the sampling variance of the least squares estimator of β1? Show your work.

Short Answer #6 (10 points)

Consider the following set of results for a log-log specification of a gravity model of trade for the years from 1870 to 2000. For the following statistical tests, specify what the null hypothesis of the relevant test is and provide the appropriate interpretation given the results above:
a.) the t test associated with the independent variable, GDP (use a critical value of 2.58)
b.) the F test associated with GDP and DISTANCE in combination (use a critical value of 4.61)
c.) the RESET test using the F statistic (use a critical value of 6.64)
d.) the Durbin-Watson test (use a lower critical value of 1.55 and upper critical value of 1.80)
e.) the White test (use a critical value of 15.09)

Useful Formulas:

$\mu_X = E(X) = \sum_{i=1}^{k} p_i x_i$
$\sigma_X^2 = Var(X) = E[(X - \mu_X)^2] = \sum_{i=1}^{k} (x_i - \mu_X)^2 p_i$
$\Pr(X = x) = \sum_{i=1}^{k} \Pr(X = x, Y = y_i)$
$\Pr(Y = y \mid X = x) = \dfrac{\Pr(X = x, Y = y)}{\Pr(X = x)}$
$E(Y \mid X = x) = \sum_{i=1}^{k} y_i \Pr(Y = y_i \mid X = x)$
$Var(Y \mid X = x) = \sum_{i=1}^{k} [y_i - E(Y \mid X = x)]^2 \Pr(Y = y_i \mid X = x)$
$E(Y) = \sum_{i=1}^{k} E(Y \mid X = x_i) \Pr(X = x_i)$
$\sigma_{XY} = Cov(X, Y) = \sum_{i=1}^{k} \sum_{j=1}^{m} (x_j - \mu_X)(y_i - \mu_Y) \Pr(X = x_j, Y = y_i)$
$Corr(X, Y) = \rho_{XY} = \dfrac{Cov(X, Y)}{\sqrt{Var(X)\,Var(Y)}}$
$E(a + bX + cY) = a + bE(X) + cE(Y)$
$Var(a + bY) = b^2 Var(Y)$
$Var(aX + bY) = a^2 Var(X) + b^2 Var(Y) + 2ab\,Cov(X, Y)$
$Cov(a + bX + cV, Y) = b\,Cov(X, Y) + c\,Cov(V, Y)$
$E(Y^2) = Var(Y) + [E(Y)]^2$
$E(XY) = Cov(X, Y) + E(X)E(Y)$

$\bar{X} = \dfrac{1}{n} \sum_{i=1}^{n} X_i$
$s^2 = \dfrac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})^2$
$s_{XY} = \dfrac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})$
$r_{XY} = s_{XY} / (s_X s_Y)$
$\bar{X} \sim N(\mu, \sigma^2 / n)$
$Z = \dfrac{\bar{X} - \mu}{\sigma / \sqrt{n}}$
$t = \dfrac{\bar{X} - \mu}{s / \sqrt{n}}$

For the linear regression model $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$:
$\hat{\beta}_1 = \dfrac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n} (X_i - \bar{X})^2}$ and $\hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}$

$\hat{Y}_i = \hat{\beta}_0 + \hat{\beta}_1 X_{1i} + \hat{\beta}_2 X_{2i} + \cdots + \hat{\beta}_k X_{ki}$
$R^2 = \dfrac{ESS}{TSS} = \dfrac{TSS - RSS}{TSS} = 1 - \dfrac{RSS}{TSS} = 1 - \dfrac{\sum_i e_i^2}{\sum_i (Y_i - \bar{Y})^2}$
$\bar{R}^2 = 1 - \dfrac{\sum_i e_i^2 / (n - k - 1)}{\sum_i (Y_i - \bar{Y})^2 / (n - 1)}$
$s^2 = \dfrac{\sum_i e_i^2}{n - k - 1}$ where $E[s^2] = \sigma^2$
$\widehat{Var}[\hat{\beta}_1] = \dfrac{\sum_i e_i^2 / (n - k - 1)}{\sum_i (X_i - \bar{X})^2}$
$Z = \dfrac{\hat{\beta}_j - \beta_H}{\sqrt{Var[\hat{\beta}_j]}} \sim N(0, 1)$
$t = \dfrac{\hat{\beta}_1 - \beta_H}{s.e.(\hat{\beta}_1)} \sim t_{n-k-1}$
$\Pr[\hat{\beta}_j - t^*_{\alpha/2}\, s.e.(\hat{\beta}_j) \le \beta_j \le \hat{\beta}_j + t^*_{\alpha/2}\, s.e.(\hat{\beta}_j)] = 1 - \alpha$
$F = \dfrac{ESS / k}{RSS / (n - k - 1)} = \dfrac{ESS\,(n - k - 1)}{RSS \cdot k}$
$d = \dfrac{\sum_{t=2}^{T} (e_t - e_{t-1})^2}{\sum_{t=1}^{T} e_t^2} \approx 2(1 - \rho)$
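
The regression formulas on the sheet can be tried out numerically. The following is a minimal sketch in plain Python, not part of the original exam: the function name `simple_ols` and the six data points are made up for illustration, and the model is assumed to have a single regressor (k = 1). It computes the slope, intercept, R², adjusted R², the standard error of the slope, the F statistic, and the Durbin-Watson d as written on the sheet.

```python
from math import isclose, sqrt


def simple_ols(x, y):
    """Apply the formula-sheet expressions to a one-regressor model (k = 1)."""
    n, k = len(x), 1
    x_bar = sum(x) / n
    y_bar = sum(y) / n

    # Slope and intercept from the sample moments.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar

    # Residuals and the sums of squares.
    resid = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    rss = sum(e ** 2 for e in resid)
    tss = sum((yi - y_bar) ** 2 for yi in y)
    ess = tss - rss

    r2 = 1 - rss / tss
    adj_r2 = 1 - (rss / (n - k - 1)) / (tss / (n - 1))
    se_b1 = sqrt((rss / (n - k - 1)) / sxx)
    f_stat = (ess / k) / (rss / (n - k - 1))

    # Durbin-Watson d: squared successive differences over squared residuals.
    dw = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, n)) / rss

    return {"b0": b0, "b1": b1, "r2": r2, "adj_r2": adj_r2,
            "se_b1": se_b1, "f": f_stat, "dw": dw}


# Illustrative data only (assumed, not taken from the exam).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2]
result = simple_ols(x, y)

for name, value in result.items():
    print(f"{name}: {value:.4f}")

# With one regressor, the squared t statistic for H0: beta1 = 0 equals F.
t_stat = result["b1"] / result["se_b1"]
print("t^2 matches F:", isclose(t_stat ** 2, result["f"], rel_tol=1e-6))
```

The final check relies on a property that holds only in the single-regressor case; with more regressors the F statistic tests all slopes jointly and no longer equals any one squared t statistic.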