Framework of Communication Activation Robot Participating in Multiparty Conversation


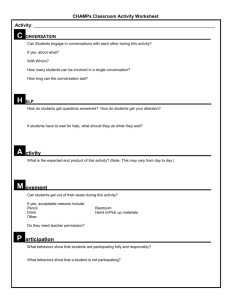

Dialog with Robots: Papers from the AAAI Fall Symposium (FS-10-05)

Yoichi Matsuyama, Hikaru Taniyama, Shinya Fujie and Tetsunori Kobayashi
Department of Computer Science and Engineering, Waseda University, Japan
3-4-1 Okubo, Room 55N-05-09, Shinjuku-ku, Tokyo 169-8555, Japan
matsuyama@pcl.cs.waseda.ac.jp

Copyright © 2010, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

Abstract

We propose a framework for a robot to participate in and activate multiparty conversation. In multiparty conversation, the robot should select its behavior based on both linguistic information and participation structure. In this paper, we focus on the multiparty conversation game “Nandoku,” which is often played in elderly care facilities. The robot acts as one of the participants and tries to promote communication activeness. The framework handles the dialogue situation from three aspects: multiparty conversation, game progress and communication activation, and selects the most effective robot behavior according to these three aspects.

1 Introduction

We propose a framework for a conversational robot that participates in and activates multiparty conversation. Embodiment is indispensable for conversation. In this paper, we define embodiment in conversation as existing in the conversational situation and possessing the capabilities to understand the intentions of conversational partners and to express one's own intention to them with a physical body. Fujie et al. argue that the combination of linguistic and paralinguistic information can improve communication efficiency (Fujie, Fukushima, and Kobayashi 2004). Paralanguage consists of physical signals that complement linguistic information, such as prosody, timing of utterance, direction of eye gaze, facial expression, gesture, and position.

In real life, most conversations at home and in workplaces involve more than two people. Recognition and expression of paralanguage are more complicated in such situations than in the one-to-one situation. There are several studies on the behaviors of robots and character agents in multiparty conversation. Their goals are to recognize the current role of a robot and to select appropriate behavior to improve the quality of conversation. This work is based on the psychological analysis of participation structure in multiparty conversation organized by Goffman (Goffman 1981) and Clark (Clark 1996). Matsusaka et al. (Matsusaka, Tojo, and Kobayashi 2003) presented the first actual robot system that participates in multiparty conversation using estimation of participation structure based on these analyses. Mutlu et al. (Mutlu et al. 2009) indicated that a robot can establish the participant roles of its conversational partners using its eye gaze cues. Bohus et al. (Bohus and Horvitz 2009) considered a model of multiparty engagement, where multiple people may enter and leave conversations and interact with the system using paralinguistic information such as eye gaze, although they use a character agent rather than a physical robot. Accordingly, to participate in multiparty conversation, a robotic system should decide its behavior using the results of participation role estimation based on not only linguistic but also paralinguistic information.

Breazeal et al. developed robots capable of expressing paralinguistic information (Breazeal et al. 2008). These robots are equipped with sufficient degrees of freedom to express such information. Their MDS robot can express paralanguage for social interaction using eyelids, eyebrows, mouth and other facial parts. However, there are few case studies that apply both recognition and expression of paralinguistic information to multiparty conversation using a physical robot system. Therefore, in this paper, we propose a framework of both hardware and software for a robot with embodiment that can recognize and express paralanguage to participate in multiparty conversation.

In this paper, we focus on an entertainment game task that is played among elderly people and staff at an elderly care facility (Matsuyama et al. 2008). Various robots for elderly care have been produced previously. In the case of Ifbot (Kato et al. 2004), tens of thousands of utterance scripts are prepared for human-robot conversation. Some users reported that Ifbot can change relationships among family members and relieve depressed moods when they confront the terminal stage of a person's life. The seal robot Paro (Wada and Shibata 2006) was also designed for elderly care. Its developers reported not only psychological and physiological effects, such as relaxation, increased motivation to communicate and improved vital signs, but also social effects, such as increased frequency of communication among patients and nurses.

In particular, we focus on the “Nandoku” game as a multiparty conversation activation task in a daycare center. The Nandoku game is a recreation game that can be described as a multiparty conversation with a master of ceremony (MC) and several panelists. In a Nandoku game, the MC writes down a “Kanji” question which is difficult to pronounce even for Japanese speakers, and then the panelists try to figure out its pronunciation. We consider a conversational robot system that participates in and activates such multiparty conversation with capabilities of recognizing and expressing paralanguage. In this task, a robot should share the conversational situation and obey the rules of both multiparty conversation and the game task. Moreover, it should select behavior that can promote communication activeness.
In this paper, we consider a framework to evaluate and decide the behaviors of a conversational robot from three points of view: multiparty conversation, game progress and conversation activation. In the following section, we consider a model for participation role estimation in multiparty conversation. In the third section, we consider a methodology to activate multiparty conversation. In the fourth section, we describe our new robotic hardware called “SCHEMA [ʃe:ma]” and the software architecture. In the fifth section, we discuss this framework, and in the final section we conclude this paper.

2 Participating Multiparty Dialogue

2.1 Participation Structure

Goffman (Goffman 1981) and Clark (Clark 1996) describe the participants' roles in the participation structure of multiparty conversation. The roles are divided into those of people who are allowed to participate in the conversation and those of people who are not. The former are further divided into three roles: Speaker, who is the primary speaker; Addressee, who is the primary listener; and Side-Participant, who is a listener that is not addressed. Participants progress the conversation while changing their roles dynamically. Not only the speaker but also the addressee and the side-participants should be careful about their behaviors depending on their own roles. Otherwise, the conversation cannot progress properly. In multiparty conversation research, next speaker estimation is the most important problem to solve. In addition, to participate in multiparty conversation, a robot should possess policies to select its behavior depending on its role.

Clark (Clark 1996) observed human-human interaction in multiparty conversation and indicates that conversation has the objective structure shown in Fig. 1. Each participant is assigned to one of the following roles.

• Participant in conversation
  – Speaker (SPK)
  – Addressee (ADR)
  – Side-Participant (SPT)
• Not participant but listener
  – Bystander (BYS)
  – Eavesdropper (EAD)

Participants change their roles dynamically to progress the multiparty conversation smoothly.

[Figure 1: Participation structure (Clark 1996). Labels: Speaker, Addressee, Side-participants, Overhearers.]

2.2 Request-Answer Model

In this section, we propose a model of the effectiveness of participants' behaviors, including those of a robot, in the participation structure.

Some behaviors of participants possess functions that change the states of other participants. For instance, when a speaker gazes at a side-participant, the gaze has the ability to change the role of the side-participant into addressee. We use the term “ability to change” here because the speaker needs the side-participant's acceptance to change the role. Participants request one another to change roles, and they repeatedly answer such requests with acceptance or rejection, thereby changing their own and others' roles. We propose the description methodology shown in Fig. 2. Examples are as follows.

Requests are categorized as assignment to oneself (“Which role do I want to be?”) and assignment to other participants (“Which other participant's role do I want to change?”). For instance, a behavior that maintains the speaker's turn can be regarded as “speaker's request to assign speaker to itself (speaker)” (Fig. 3(a)). An addressing behavior can be regarded as “speaker's request to assign addressee to side-participant” (Fig. 3(b)).

Answers are categorized as acceptance and rejection. A behavior that accepts a turn can be regarded as “addressee's acceptance to speaker's request to assign speaker to addressee” (Fig. 4(a)). In contrast, a behavior that rejects a turn can be regarded as “addressee's rejection to speaker's request to assign speaker to addressee” (Fig. 4(b)).

[Figure 2: Function of participation role. Notation: P_i···k: participants; r_i···k: role of each participant; a request to change a role is answered with accept / reject.]

[Figure 3: Example of Request. (a) speaker's request to assign itself (speaker) to speaker, (b) speaker's request to assign side-participant to addressee.]

[Figure 4: Example of Answer. (a) addressee's acceptance to speaker's request to assign addressee to speaker, (b) addressee's rejection to speaker's request to assign addressee to speaker.]

3 Communication Activation Task

3.1 NANDOKU Game

The Request-Answer model in the previous section is described from the point of view of participation structure in multiparty conversation. For a robot to progress a particular task while participating in multiparty conversation, we should also describe functions from other points of view. In this paper, we focus on participation in the Nandoku game and activation of conversation among the panelists. In the Nandoku game, the MC writes down on a whiteboard, or projects on a screen, a Japanese Kanji question which is difficult to pronounce even for Japanese speakers, and then the panelists answer with its pronunciation. The situation is shown in Fig. 5. The MC poses a question for the game, encourages panelists to answer, evaluates the answers and offers information related to the question. The panelists can answer when they are asked by the MC or at any other time.

[Figure 5: Situation of NANDOKU Game. Labels: Whiteboard, MC, Robot (as a panelist), Panelist A, Panelist B, Panelist C.]

This kind of game is played as recreation in elderly care facilities to activate communication and the participants' brains. The MC is usually a member of the care staff at the facility, and elderly people are the panelists. In this research, a robot participates in the game as one of the panelists to activate communication. For this reason, we should also consider the functions of the robot's behavior from two more points of view: “Proceeding Nandoku game” and “Activating Communication.”

3.2 Functions of Behaviors in Quiz Game Task

Panelists' behaviors in the Nandoku game are not only to answer questions but also to encourage other panelists to answer. Therefore, we define the following four functions of the robot's behavior from the point of view of progressing the game.

1. ANSWER ... Function to offer an answer
2. ASK_HINT ... Function to ask the MC for a hint
3. LET_ANSWER ... Function to encourage other panelists to answer
4. INFORM ... Function to offer trivia information depending on a question

3.3 Function of Behaviors in Communication Activation

In the Nandoku game, communication is activated successfully when other panelists are given chances to answer. Such situations can be realized not only by directly encouraging someone to answer but also by answering in a way that gives a hint to other panelists or by asking the MC for a hint. They can also be realized when the robot reacts to the MC's utterance to attract the attention of other panelists. The situation should also be activated when either the MC or the robot offers interesting information. However, because functions of this type are already included among the functions defined from the point of view of progressing the game, we can share those functions here. For this reason, we define the following four functions from the point of view of activating communication.

1. REACT_TO_ALMOST ... Function to react to the MC's utterance “Almost”
2. REACT_TO_CORRECT ... Function to react to the MC's utterance “Correct”
3. HESITATE ... Function to hesitate to answer (to say something when the MC encourages the robot to answer but it has no idea)
4. MUTTER ... Function to mutter (to say something suggesting a hint)

Functions 1 and 2 are functions of behaviors that react to specific actions of the MC. Function 3 covers behaviors without a substantial utterance, unlike the ANSWER and INFORM behaviors in the previous section. Function 4 is a function of behaviors that say something independent of the progress of the game but dependent on the question.

4 Framework

4.1 Hardware Design

To implement the proposed methodology, we produced a conversational robot called “SCHEMA” (Matsuyama et al. 2009), shown in Fig. 6, that has the necessary and sufficient degrees of freedom for multiparty conversation. It is approximately 1.2 m tall, which matches the eye level of an adult male sitting on a chair. It has 10 degrees of freedom for the right and left eyebrows, the eyelids, the right and left eyes (roll and pitch) and the neck (pitch and yaw). It can express anxiousness and surprise using its eyelids and control its eye gaze using its eyes, neck and autonomous turret. It also has 6 degrees of freedom for each arm, which can express gestures. One degree of freedom is assigned to the mouth to indicate explicitly whether the robot is speaking or not. A computer inside the belly controls the robot's actions, and an external computer sends commands to execute various behaviors through a WiFi network.

[Figure 6: Conversational robot SCHEMA [ʃe:ma].]

4.2 Software Modules

The system overview is shown in Fig. 7. The system is divided into the input group, the behavior selection group and the output group. The details of the behavior selection group are described in the following section.

The input group consists of a mobile device and speech recognizers, which serve as sensors to understand the environment. The mobile device is used by the MC to select questions. When a question is selected on the device, it is projected on a screen, and the system is notified of the current question through a WiFi network. Headset microphones worn by each participant, including the MC, are used as input to the speech recognizers.

The output group consists of an action player and a speech synthesizer. The action player executes the robot's physical actions and the speech synthesizer outputs speech; the two are synchronized with each other.

[Figure 7: System architecture.]

4.3 Behavior Selection

The behavior selection group selects, from the various behaviors in the behavior dictionary, the behavior with the highest value in the current situation.

Behavior Dictionary

The behavior dictionary contains various predefined behaviors. Each behavior defines utterances, gestures and the functions used for evaluation. As we described in 3.1, functions of behaviors are interpreted differently from different points of view. In this research, because we focus on activating the Nandoku game, we deal with functions from the multiparty conversation viewpoint, the game progress viewpoint and the conversation activation viewpoint.

Functions of behaviors in multiparty conversation follow the Request-Answer model described in 2.2. For instance, answering something is a behavior that has the function “somebody's request to assign speaker to himself/herself.” There are two important points here. First, a behavior often has multiple functions. For instance, the answering behavior also has the function “acceptance to somebody's request to assign speaker to himself/herself” and the function “rejection to somebody's request to assign speaker to the other participant.” Second, because roles and the related requests change from moment to moment, the system must compute the functions from the specific situation to determine them completely. For instance, the function “acceptance to speaker's request” of a behavior does not make sense when there is no request.

Functions of behaviors in progressing the game are the four types described in 3.2. Some behaviors have one of these functions, and some have none. The behaviors without these functions are independent of the progress of the game. For instance, mutter behaviors can sometimes accidentally serve as hints to other panelists, but they are independent of the game progress.

Functions of behaviors in communication activation are the four types described in 3.3. As with the game progress functions, some behaviors have one of these functions, and others have none.

Situation Understanding

We also deal with situation understanding from the multiparty conversation viewpoint, the game progress viewpoint and the conversation activation viewpoint.

The situation from the multiparty conversation viewpoint can be interpreted as each participant's role in the participation structure described in 2. The multiparty conversation state manager estimates the situation from the states of the speech recognizers (to recognize the current speaker), the state of the robot's speech synthesizer and the history of the robot's behaviors. As a general rule, a participant whose speech recognizer is working is estimated to be the current speaker. When the result of a speech recognizer includes a participant's name, he or she is estimated to have been requested to become addressee. When several participants speak at the same time, the state manager estimates the next speaker according to predefined rules. In particular, the MC gets first priority as speaker when the MC is speaking.

The situation in the Nandoku game is categorized into a long-term and a short-term situation. The long-term situation is status that changes over a long period in the game task, for instance, the current question and the current game state (Pre-answering state or Post-answering state). The long-term situation changes when the MC selects a question with the mobile device or when the speech recognizer recognizes a specific keyword from the MC such as “Correct.” The short-term situation is status that changes over a short period within a game state, for instance, that the MC asks someone for an answer or that the MC evaluates someone's answer. The short-term situation changes when the speech recognizer recognizes a specific keyword from the MC, such as a participant's name or “Almost.”

The situation in communication activation describes how activated each panelist is. In particular, each panelist has an activeness value from 0 to 100. We assume that a panelist who answers more frequently is more likely to be activated. The activation state manager increases the activeness of a panelist who becomes speaker from the multiparty conversation viewpoint or who is asked for an answer from the activation viewpoint. It also decreases activeness gently over time to avoid an unreasonable increase of activeness.

Behavior Evaluation

The behavior evaluation group starts when a state manager generates a trigger. It evaluates all behaviors in the behavior dictionary, selects the behavior with the highest value and transfers it to the output group. Each state manager generates triggers according to predefined rules. The multiparty conversation state manager generates its trigger when participation roles change or when participants make requests or answers. The game state manager generates its trigger when the situation of the game changes. The activation state manager generates its trigger when a panelist's communication activeness is lower than a threshold.

The flow of behavior evaluation is shown in Fig. 8. Behavior evaluation starts with calculating the functions of behaviors. Although most functions of behaviors are predefined statically as described in 4.3, functions from the multiparty conversation viewpoint are decided based on the situation using partially predefined functions. After this understanding process, the system can evaluate the value of behaviors using the functions and the situation in each viewpoint. The evaluation process is carried out by multiple evaluators. Each evaluator evaluates using optional information in the state managers and the functions of each behavior.

[Figure 8: Behavior Evaluation. After the system calculates the functions of each behavior, each evaluator calculates a value based on optional situations and functions. The evaluation value of each behavior is the sum of the weighted values (weights w1, w2, w3).]

Evaluation yields either a simple true/false value or a real-number value. In the case of a true/false value, evaluators evaluate based on rules such as “From the viewpoint of game progress, the current situation is that the MC asks the robot for an answer, and this behavior has the function ANSWER.”

In the case of a real-number value, the evaluation value changes continuously. This case depends on communication activation. For instance, the behavior “Ask panelist A for answer” has little effect when A has a high activeness value, but when A has low activeness, the behavior should be effective. The value is therefore described as follows.

$$
e =
\begin{cases}
\dfrac{a_{\mathrm{MAX}} - a_A}{a_{\mathrm{MAX}}} & \text{if } a_A < a_{\mathrm{MAX}} \\
0.0 & \text{otherwise}
\end{cases}
$$

Here, $a_A$ is A's activeness and $a_{\mathrm{MAX}}$ is the predefined maximum activeness at which the behavior of asking for an answer is still expected to be executed. The final evaluation value of each behavior is the weighted sum of the values from each evaluator.

An example of an evaluator (true/false evaluation) is shown in Table 1. In this example, the evaluator follows (1) the multiparty conversation viewpoint: a robot should not assign participants to the bystander role, and (2) the game progress viewpoint: a robot should reply to the MC's requests and should not disturb the game progress. It is independent of the activation viewpoint. This is only a part of the multiple evaluators in this framework. System designers can easily add their own evaluators to the system to improve the robot's behaviors.

5 Discussion

This robotic system understands situations and evaluates behaviors using both linguistic and paralinguistic information to participate in and activate multiparty conversation. In this paper, we propose a methodology to understand the situation from three viewpoints and to evaluate behaviors with multiple evaluators.
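The weighted evaluation described in Section 4.3 can be illustrated with a minimal Python sketch. It is not the system's implementation: the class and function names (`Behavior`, `Evaluator`, `select_behavior`), the layout of the situation dictionary, the value chosen for `A_MAX` and the weight on the activation evaluator are all assumptions made for illustration. Only the function labels, the ±100.0 weights of the two game-progress rules and the activeness formula come from the text.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

A_MAX = 50.0  # hypothetical value; the paper only states that a_MAX is predefined


@dataclass
class Behavior:
    name: str
    functions: Set[str] = field(default_factory=set)
    target: str = ""  # panelist addressed by a LET_ANSWER behavior (assumed field)


@dataclass
class Evaluator:
    weight: float
    score: Callable[[Behavior, Dict], float]  # 0.0/1.0 for rules, or a real number


def activeness_term(a: float, a_max: float = A_MAX) -> float:
    """Real-valued term e = (a_MAX - a_A) / a_MAX if a_A < a_MAX, else 0.0."""
    return (a_max - a) / a_max if a < a_max else 0.0


def answer_when_asked(b: Behavior, s: Dict) -> float:
    # True/false rule: the MC asks the robot, and the behavior has ANSWER.
    return 1.0 if s.get("mc_asks_robot") and "ANSWER" in b.functions else 0.0


def answer_while_other_answers(b: Behavior, s: Dict) -> float:
    # True/false rule: another panelist is answering, and the behavior has ANSWER.
    return 1.0 if s.get("other_answering") and "ANSWER" in b.functions else 0.0


def encourage_inactive(b: Behavior, s: Dict) -> float:
    # Real-valued rule: asking a panelist is worth more when they are less active.
    if "LET_ANSWER" not in b.functions:
        return 0.0
    return activeness_term(s["activeness"].get(b.target, 0.0))


def evaluate(b: Behavior, evaluators: List[Evaluator], s: Dict) -> float:
    """Final value of a behavior: weighted sum over all evaluators."""
    return sum(e.weight * e.score(b, s) for e in evaluators)


def select_behavior(behaviors: List[Behavior], evaluators: List[Evaluator], s: Dict) -> Behavior:
    """Return the behavior with the highest evaluation value."""
    return max(behaviors, key=lambda b: evaluate(b, evaluators, s))


EVALUATORS = [
    Evaluator(+100.0, answer_when_asked),
    Evaluator(-100.0, answer_while_other_answers),
    Evaluator(+100.0, encourage_inactive),  # weight assumed for illustration
]
BEHAVIORS = [
    Behavior("answer", {"ANSWER"}),
    Behavior("ask_A", {"LET_ANSWER"}, target="A"),
    Behavior("ask_B", {"LET_ANSWER"}, target="B"),
]

if __name__ == "__main__":
    situation = {
        "mc_asks_robot": False,
        "other_answering": False,
        "activeness": {"A": 80.0, "B": 10.0},
    }
    print(select_behavior(BEHAVIORS, EVALUATORS, situation).name)
```

In this sketch, adding a new evaluator only requires appending another `Evaluator` to the list, which mirrors the extensibility the framework aims for; how the actual system represents situations and functions is not specified at this level of detail in the paper.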
Table 1: Example of evaluator that calculates using true or false value

| Point of view | Weight | Situation | Function |
|---|---|---|---|
| Multiparty Conversation | −100.0 | ∗ | Request to assign other Side Participant to Bystander |
| Multiparty Conversation | +100.0 | Optional request | Acceptance to the request |
| Nandoku Game | +100.0 | Pre-answering state; MC's request to answer to Robot | ANSWER |
| Nandoku Game | −100.0 | Pre-answering; other panelist is answering | ANSWER |
| Activation | +100.0 | Pre-answering; MC's utterance “Almost.” | REACT_TO_ALMOST |
| Activation | +100.0 | Pre-answering; MC's utterance “Correct.” | REACT_TO_CORRECT |

Currently the system recognizes the MC's speech as linguistic information and the duration of utterance of all participants as paralinguistic information. However, this paralinguistic information is not sufficient for the robot to participate in multiparty conversation. The next speaker estimation problem needs not only speech information but also visual information such as the direction of participants' eye gaze. We will consider adding both visual and auditory information to this framework to understand paralanguage.

System designers can add various evaluators to change the robot's behavior. However, the weight of each evaluator is currently predefined. We will consider learning mechanisms to update each weight and the methods of the evaluators.

Also, the current system can only respond passively to participants' auditory information. For instance, after the robot asks one of the panelists for an answer, it cannot expand the conversation. Therefore we will consider a planning methodology to expand the conversation depending on the current topic or question in the game.

6 Conclusion

We propose an integrated framework for a robot that participates in and activates multiparty conversation using paralinguistic information. The system understands the situation and evaluates the functions of behaviors from three viewpoints. In order to activate multiparty conversation, we will consider a learning mechanism that updates the multiple evaluators automatically according to the situation, and also a mechanism for expanding the topic.

7 Acknowledgments

TOSHIBA corporation provided the speech synthesizer engine customized for our spoken dialogue system. We also wish to thank the staff of NPO Community Care Link Tokyo Care-town Kodaira Day Care Service Center.

References

Bohus, D., and Horvitz, E. 2009. Model for multiparty engagement in open-world dialogue. In Proceedings of SIGDIAL 2009, 225–234.

Breazeal, C.; Grupen, R.; Deegan, R.; Weber, J.; and Narendran, K. 2008. Mobile, dexterous, social robots for mobile manipulation and human-robot interaction. In International conference on computer graphics and interactive techniques, ACM SIGGRAPH 2008, new tech demos.

Clark, H. 1996. Using Language. Cambridge, UK: Cambridge University Press.

Fujie, S.; Fukushima, K.; and Kobayashi, T. 2004. A conversation robot with back-channel feedback function based on linguistic and non-linguistic information. In Proc. of 2nd Intl. Conf. on Autonomous Robots and Agents, ICARA2004, 379–384.

Goffman, E. 1981. Forms of Talk. University of Pennsylvania Press.

Kato, S.; Ohshiro, S.; Itoh, H.; and Kimura, K. 2004. Development of a communication robot ifbot. In Proceedings of 2004 IEEE International Conference on Robotics and Automation (ICRA), 697–702.

Matsusaka, Y.; Tojo, T.; and Kobayashi, T. 2003. Conversation robot participating in group conversation. The Institute of Electronics, Information and Communication Engineers (IEICE) Transactions on Information and Systems E86-D(1):26–36.

Matsuyama, Y.; Taniyama, H.; Fujie, S.; and Kobayashi, T. 2008. Designing communication activation system in group communication. In Proc. Humanoids2008, 629–634.

Matsuyama, Y.; Taniyama, H.; Hosoya, K.; Tsuboi, H.; Fujie, S.; and Kobayashi, T. 2009. Schema: multi-party interaction-oriented humanoid robot. In International conference on computer graphics and interactive techniques, ACM SIGGRAPH ASIA 2009 Emerging Technologies.

Mutlu, B.; Shiwa, T.; Kanda, T.; Ishiguro, H.; and Hagita, N. 2009. Footing in human-robot conversations: How robots might shape participant roles using gaze cues. In Proceeding of Human Robot Interaction 2009, 61–68.

Wada, K., and Shibata, T. 2006. Robot therapy in a care house - its sociopsychological and physiological effects on the residents. In Proceedings of 2006 IEEE International Conference on Robotics and Automation (ICRA), 3966–3971.