Which States Can Be Changed by Which Events? Niloofar Montazeri


Logical Formalizations of Commonsense Reasoning: Papers from the 2015 AAAI Spring Symposium

Which States Can Be Changed by Which Events?

Niloofar Montazeri

Jerry R. Hobbs

niloofar@isi.edu

Hobbs@isi.edu

Information Sciences Institute/University of Southern California, Marina del Rey, California

Related work in the area of event semantics includes detecting happens-before relations (Chklovski and Pantel 2004), causal relations (Girju 2003), and entailment relations (Pekar 2006) between events. The work by Sil and Yates (2011) is the closest to ours. They identify STRIPS representations of events, which include such information as the preconditions, post-conditions, and delete-effects of events. The latter are defined as “conditions that held before occurrence of event but no longer hold afterwards” and are precisely what we are looking for. Sil and Yates (2011) limit delete-effects to those that are negations of preconditions (e.g., “unhurt” is a precondition of “maim” and its negation, “hurt”, is a delete-effect), but we also extract conditional delete-effects such as “If x teaches, then x’s retirement will put an end to it”. In addition, we find possible delete-effects such as “If x is happy, realizing y may put an end to it”.

Abstract

We present a method for finding (STATE, EVENT) pairs in which EVENT can change STATE. For example, the event “realize” can put an end to the states “be unaware”, “be confused”, and “be happy”, but it rarely affects “being hungry”. We extract these pairs from a large corpus using a fixed set of syntactic dependency patterns. We then apply a supervised machine learning algorithm to clean the results using syntactic and collocational features, achieving a precision of 78% and a recall of 90%. We observe three different relations between states and the events that change them, and present a method for using Mechanical Turk to differentiate between these relations.¹

Introduction

Knowledge about event semantics plays an important role both in understanding natural language and in reasoning about the world. In a series of papers (Montazeri and Hobbs, 2011, 2012; Hobbs and Montazeri, 2014) we have described our effort to manually axiomatize change-of-state verbs in terms of predicates in core theories of commonsense knowledge. This paper extends our previous work (Montazeri et al., 2013), in which we investigated the possibility of automatically extracting axioms for change-of-state verbs from text by detecting the states that an event can change. As before, we use hand-crafted syntactic dependency patterns to extract candidate (STATE, EVENT) pairs; but unlike our previous filtering method, in which we ranked the pairs and applied a threshold, we now use a supervised machine learning algorithm to filter the candidates. We observe three different relations between states and the events that change them (which result in three different types of axioms) and present a method for using Mechanical Turk to categorize pairs based on these relations.

Methodology

Data Set: We harvested information from the ClueWeb09 dataset, whose English portion contains just over 500 million web pages.²

Patterns: We use lexico-syntactic patterns to extract candidate (STATE, EVENT) pairs. In our patterns, STATE and EVENT are two phrases that are in an adverbial-complement relation and share a common argument. Simplified verbal renderings of our syntactic patterns are:

• “used to STATE, before EVENT”
• “STATE until t when EVENT”
• “STATE until EVENT”
• “if EVENT, no longer STATE”
• “no longer STATE because EVENT”
• “became/got/came STATE after EVENT”
• “became/got/came STATE when EVENT”
• “although EVENT, still/continued STATE”
• “stopped STATE, because EVENT”
• “how can STATE if EVENT”
• “no longer STATE if EVENT”

¹ This research was funded by the Office of Naval Research under Contract No. N00014-09-1-1029.

Copyright © 2015, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

² http://lemurproject.org/clueweb09.php/

In each pattern, EVENT is a verb phrase with verb VE, and STATE is a verb phrase with either (1) a verb VS in passive form (e.g., was detained)³ or (2) a “being” verb VBS with a noun, adjective, or prepositional phrase (e.g., remained successful, was hero, was in team). A “being” verb is a verb in the set {“be”, “remain”, “become”, “get”, “stay”, “keep”}. To enforce the constraint that STATE and EVENT share a common argument, the subject or object of the second phrase (in sentence order) must be a pronoun that refers to the subject or object of the first phrase. Some examples are: “(John was detained) until March when (the authorities released him)”, “(John was happy) until (he heard the news)”, and “if (John hears the news), (he will get upset)”.
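The two admissible shapes of STATE and the common-argument constraint can be expressed as simple predicates. This is a minimal sketch under stated assumptions: the `Phrase` record, its field names, the small pronoun list, and the caller-supplied `coref` resolver are all hypothetical illustrations, not the authors' parser output or code.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# "Being" verbs as listed in the text.
BEING_VERBS = {"be", "remain", "become", "get", "stay", "keep"}
# Hypothetical labels for the complement types allowed after a being verb.
COMPLEMENT_TYPES = {"noun", "adjective", "prep_phrase"}
# Hypothetical, deliberately small pronoun list for illustration.
PRONOUNS = {"he", "she", "it", "they", "him", "her", "them"}


@dataclass
class Phrase:
    """Hypothetical simplified view of a parsed verb phrase."""
    verb_lemma: str                   # head verb lemma, e.g. "detain"
    passive: bool                     # True for passive voice ("was detained")
    complement: Optional[str] = None  # "noun", "adjective", "prep_phrase" or None
    subject: Optional[str] = None
    obj: Optional[str] = None


def is_state(p: Phrase) -> bool:
    """STATE is (1) a passive verb, or (2) a being verb + noun/adjective/PP."""
    if p.passive:
        return True
    return p.verb_lemma in BEING_VERBS and p.complement in COMPLEMENT_TYPES


def shares_argument(first: Phrase, second: Phrase,
                    coref: Callable[[str, str], bool]) -> bool:
    """The subject or object of the second phrase must be a pronoun that
    refers to the subject or object of the first phrase."""
    first_args = [a for a in (first.subject, first.obj) if a]
    for arg in (second.subject, second.obj):
        if arg and arg.lower() in PRONOUNS:
            if any(coref(arg, mention) for mention in first_args):
                return True
    return False
```

For “(John was happy) until (he heard the news)”, the first phrase is a being verb with an adjective complement, and “he” in the second phrase must resolve to “John”.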

To annotate the extracted pairs, we used the following fine-grained tags, one for each of the three types of change-of-state relationship that we observed between STATE and EVENT:

• Category-1: STATE is a precondition of EVENT and no longer holds after EVENT. Examples are (lost(y), find(x, y)) and (alive(x), die(x)). Pairs in this category result in axioms of the form:

EVENT’(e, x, y) → changeFrom’(e, e0) & STATE’(e0, x/y)⁵

• Category-2: STATE is not a precondition of EVENT, but if STATE holds before EVENT, the occurrence of EVENT will surely put an end to it. Examples are (teacher(x), retire(x)) and (married(x), die(x)). Pairs in this category result in axioms of the form:

EVENT’(e, x, y) & STATE’(e0, x/y) → changeFrom’(e, e0)

Parsing the Corpus and Applying Patterns: Since our patterns require syntactic information, we parsed the sentences using the fast dependency parser described in (Tratz and Hovy, 2011)⁴. Before parsing the corpus, we first filter out sentences that cannot match any of our patterns, using a set of regular expressions derived from the patterns. Next, we parse the remaining sentences and apply our syntactic dependency patterns to extract STATE and EVENT from each sentence, along with several features: pronoun resolution (whether the common argument is the subject or the object of the event), the pattern that matched the sentence, the voice (passive/active) of the state and event verbs, and whether the state is represented by an adjective or a noun.
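The regular-expression prefilter can be illustrated with a minimal sketch. The cue expressions below are hypothetical surface approximations of the eleven patterns listed earlier, not the authors' actual expressions. A prefilter of this kind only needs high recall: it may let through sentences that no dependency pattern will match, because the precise matching happens after parsing.

```python
import re

# Hypothetical surface cues, one or more per pattern family in the text.
PREFILTER_CUES = [
    r"\bused to\b.*\bbefore\b",                      # used to STATE, before EVENT
    r"\buntil\b",                                    # STATE until (t when) EVENT
    r"\bno longer\b",                                # the three "no longer" patterns
    r"\b(?:became|got|came)\b.*\b(?:after|when)\b",  # became/got/came STATE after/when EVENT
    r"\balthough\b.*\b(?:still|continued)\b",        # although EVENT, still/continued STATE
    r"\bstopped\b.*\bbecause\b",                     # stopped STATE, because EVENT
    r"\bhow can\b.*\bif\b",                          # how can STATE if EVENT
]
PREFILTER = re.compile("|".join(f"(?:{c})" for c in PREFILTER_CUES), re.IGNORECASE)


def candidate_sentences(sentences):
    """Yield only sentences that could match at least one pattern, so the
    (expensive) dependency parser runs on a much smaller subset."""
    for s in sentences:
        if PREFILTER.search(s):
            yield s
```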

In the next step, we aggregate the features extracted for the same (STATE, EVENT) pair into (STATE, EVENT, f, P, F) tuples, where f is the frequency of the pair (how many times it was extracted by any change-of-state pattern), P is a dictionary that records, for each pattern, how many times it matched the (STATE, EVENT) pair, and F is a dictionary that keeps the most frequent value of each feature. We then drop the “being” verbs from states expressed by nouns, adjectives, and prepositional phrases, and construct the argument structures of states and events based on the most frequent pronoun-resolution case. After removing tuples with empty events such as “be”, “have”, “get”, and “do”, we obtain about 68,000 instances, for example (lost(y), find(x, y)) and (teacher(x), retire(x)).
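The aggregation into (STATE, EVENT, f, P, F) tuples can be sketched with counters. This is a minimal illustration assuming each raw extraction is a dict with hypothetical keys "state", "event", "pattern", and "features"; the record layout and the function name `aggregate` are ours, not the authors'.

```python
from collections import Counter, defaultdict


def aggregate(extractions):
    """Group raw extractions by (state, event) into (state, event, f, P, F).

    f: number of extractions of the pair by any pattern
    P: pattern -> number of times that pattern matched the pair
    F: feature name -> most frequent value of that feature for the pair
    """
    freq = Counter()
    pattern_counts = defaultdict(Counter)
    feature_values = defaultdict(lambda: defaultdict(Counter))
    for ex in extractions:
        key = (ex["state"], ex["event"])
        freq[key] += 1
        pattern_counts[key][ex["pattern"]] += 1
        for name, value in ex["features"].items():
            feature_values[key][name][value] += 1

    tuples = []
    for key, f in freq.items():
        P = dict(pattern_counts[key])
        # Keep only the majority value of each feature for this pair.
        F = {name: counts.most_common(1)[0][0]
             for name, counts in feature_values[key].items()}
        tuples.append((key[0], key[1], f, P, F))
    return tuples
```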

• Category-3: Similar to Category-2, but EVENT only sometimes puts an end to STATE. Examples are (happy(x), realize(x, y)) and (confused(x), read(x, y)). Such pairs result in defeasible axioms of the form:

EVENT’(e, x, y) & STATE’(e0, x/y) & etc⁶ → changeFrom’(e, e0)
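The three axiom formats can be instantiated mechanically once a pair has a category. The helper below is a hypothetical sketch in ASCII notation (`->` for →, `'` for the prime); it takes the STATE argument explicitly, which corresponds to choosing x or y in the unified x/y form.

```python
# Hypothetical templates mirroring the three axiom formats in the text.
TEMPLATES = {
    1: "{E}'(e, {eargs}) -> changeFrom'(e, e0) & {S}'(e0, {sargs})",
    2: "{E}'(e, {eargs}) & {S}'(e0, {sargs}) -> changeFrom'(e, e0)",
    3: "{E}'(e, {eargs}) & {S}'(e0, {sargs}) & etc -> changeFrom'(e, e0)",
}


def axiom(category, state, state_args, event, event_args):
    """Instantiate the axiom for a (STATE, EVENT) pair of the given category."""
    return TEMPLATES[category].format(
        E=event, eargs=", ".join(event_args),
        S=state, sargs=", ".join(state_args))
```

For example, `axiom(1, "lost", ["y"], "find", ["x", "y"])` yields the Category-1 axiom for (lost(y), find(x, y)).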

We refer to pairs that belong to any of the above categories as “change-of-state” pairs and to the rest as “non-change-of-state” pairs. Table 1 shows the distribution of change-of-state pairs, broken down into the finer-grained categories, over all annotated pairs. In total, 69% of the annotated pairs are change-of-state pairs; we take this as the baseline precision of our method.

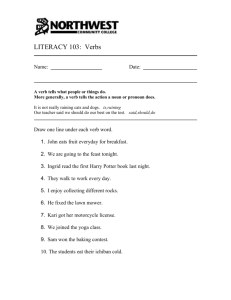

Change-of-State

Non-change-of-state

69%

%Pairs

Cat1

Cat2

Cat3

12%

16%

41%

31%

Table 1: Distribution of Pair Categories

Filtering the Results

To improve on the 69% baseline precision of the simple pattern-matching method, we used the C4.5 decision tree learning algorithm (Quinlan 1986) to classify the extracted pairs as change-of-state or non-change-of-state. The features we used are pronoun resolution, the matched patterns, the voice (passive/active) of the state and event verbs, and whether the state is represented by an adjective or a noun, plus the following statistical information: 1) the number of distinct patterns that have extracted

the pair, and 2) Pointwise Mutual Information (PMI) between (STATE, EVENT) and the set of change-of-state pat-

Assessing the Quality of the Results:

Preparing the Evaluation Data Set

One of the authors annotated 870 (STATE, EVENT) pairs, a combination of random and high-frequency pairs. While annotating these pairs, we found three types of change-of-state relationships between STATE and EVENT.

³ In the case that the verb is a state verb, we can also consider active forms; however, in this work we only consider passive forms.

⁴ http://www.isi.edu/publications/licensed-sw/fanseparser/

⁵ For space reasons we have unified two alternative axioms into one: STATE’(e0, x/y) means STATE’(e0, x) or STATE’(e0, y).

⁶ The predicate etc means “some other conditions hold”.

terns. We compute this mutual information using the following formula:

MACE (Multi-Annotator Competence Estimation) is an implementation of an item-response model that learns, in an unsupervised fashion, to (a) identify which annotators are trustworthy and (b) predict the correct underlying labels. We used it to aggregate the answers provided by all four annotators into a final answer for each question. MACE can be set to produce only answers in which it has a confidence above 90%; we used this feature in our experiment.

Since we had two types of questions, we ran MACE on each set of answers separately. As a result, we obtained two answers for each pair: a yes/no answer for question 1 and a 3-choice answer (surely/sometimes/rarely) for question 2. We then aggregated the answers to obtain the final category of the pair according to Table 2.

In the following, we refer to MACE as M and to the author who annotated the pairs as A. We measure agreement only on the cases where MACE was confident enough to produce an answer. In evaluating the performance of Mechanical Turk, we are particularly sensitive to false positives, as they reduce precision, while false negatives only reduce recall. We count the following cases as false positives: for binary yes/no questions, M said “yes” but A said “no”; for 3-choice questions, (1) M said “surely” but A said “sometimes” or “rarely”, or (2) M said “sometimes” but A said “rarely”. Table 3 shows the agreement between M and A (above 80%) and the percentage of false positives (below 8%) for the different types of questions.
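The false-positive definition amounts to M giving a strictly “stronger” answer than A. The sketch below encodes that ordering; it assumes both rates are computed over the items for which MACE produced an answer (abstentions passed as `None` are skipped), and the function name `agreement_and_fp` is hypothetical.

```python
# Answer strength: a false positive is any case where M's answer is
# strictly stronger than A's, matching the cases listed above.
STRENGTH = {"no": 0, "yes": 1, "rarely": 0, "sometimes": 1, "surely": 2}


def agreement_and_fp(pairs):
    """pairs: list of (m_answer, a_answer) for one question type.
    Items where MACE abstained (m_answer is None) are skipped.
    Returns (agreement rate, false-positive rate)."""
    scored = [(m, a) for m, a in pairs if m is not None]
    if not scored:
        return 0.0, 0.0
    agree = sum(m == a for m, a in scored)
    fp = sum(STRENGTH[m] > STRENGTH[a] for m, a in scored)
    return agree / len(scored), fp / len(scored)
```

Yes/no and 3-choice answers are scored separately, so the shared strength values of “no” and “rarely” never meet in one comparison.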

Where pt_i represents pattern i, P(state, event, pt_i) represents the probability that pattern i extracts (state, event), and * is a wildcard. We normalized the PMI values using the discounting factor presented in (Pantel and Ravichandran, 2004) to reduce the bias of PMI towards rare cases.

We achieved a precision of 78% and a recall of 90% in a 10-fold cross-validation test on our 870 annotated pairs, an improvement of about 9 percentage points over the 69% random-selection baseline.
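C4.5 chooses decision-tree splits by information gain ratio. As a minimal sketch, the code below computes the gain ratio of one categorical feature (for instance, the matched pattern) with respect to the change-of-state labels. It is not a full C4.5 implementation: continuous thresholds, pruning, and missing-value handling are omitted, and the function names are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of a non-empty sequence of labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def gain_ratio(values, labels):
    """Information gain of splitting on `values`, divided by the split
    information (the entropy of the feature values themselves)."""
    n = len(labels)
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    gain = entropy(labels) - remainder
    split_info = entropy(values)
    return gain / split_info if split_info > 0 else 0.0
```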

Categorizing Pairs With Mechanical Turk

We performed an experiment with Mechanical Turk to investigate whether crowdsourcing can be used to categorize the change-of-state pairs into the three finer-grained categories introduced earlier. For each (STATE, EVENT) pair, we asked the annotators two questions. Here is an instantiated version of the two questions for the pair (lost(y), find(x,y)):

1. If I hear "something/someone is found"

a. I can tell that it/she was lost before being found

b. I cannot tell whether it/she was lost before being

found

2. If something/someone is lost:

a. finding will surely put an end to it.

b. finding will sometimes put an end to it.

c. finding will rarely put an end to it.

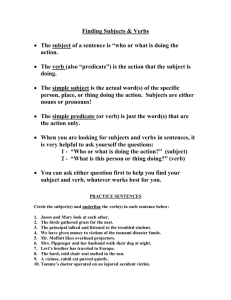

Agreement

Sometimes

Rarely

Yes

Cat1

Cat3

None

No

Cat2

Cat3

None

85%

7.5%

Final Answer

82%

We have presented a method for extracting (STATE, EVENT)

pairs in which EVENT can put an end to STATE, with a precision of 78%. We observed 3 different relations between

states and events that change them (which result in 3 different types of axioms) and presented a method for using

Mechanical Turk to differentiate between these relations.

In future, we would like to consider synonymy of events

and consolidate the data extracted for synonym events

which will hopefully boost the quality of extractions. As

for categorizing the pairs, we can adopt the method used

by (Sil and Yates, 2011) for identifying preconditions of

events (which is important for identifying Category-1

pairs). Finally, we can try using machine learning and statistical analysis to categorize the pairs automatically.

From the 870 pairs that we had annotated by ourselves, we

randomly selected 20 pairs per each change-of-state category, plus 20 non-change-of-state pairs, a total of 80 pairs.

We divided them into 8 assignments each containing 10

pairs (for each pair, 2 questions, and hence 20 questions

per assignment). We required that each assignment be

answered by 4 subjects. After collecting the results, we

used MACE7 (Hovy, et. al 2013) to aggregate the answers

MACE

can

be

downloaded

http://www.isi.edu/publications/licensed-sw/mace/

6%

3 Choice

Conclusions and Future Work

Table 2: Final Categories Based on Answers to Questions

7

86%

Table 3: Agreement and False Positives for different types of questions

We can aggregate the answers to these 2 questions according to Table 2 to obtain the right category.

Surely

False Positives

Yes/No

from:

124

References

Chklovski, Timothy, and Patrick Pantel. VerbOcean: Mining the web for fine-grained semantic verb relations. In Proceedings of EMNLP, Vol. 4, 2004.

Girju, Roxana. Automatic detection of causal relations for question answering. In Proceedings of the ACL 2003 Workshop on Multilingual Summarization and Question Answering, Volume 12, 2003.

Hobbs, Jerry R., and Niloofar Montazeri. The Deep Lexical Semantics of Event Words. In Frames and Concept Types, Springer International Publishing, 2014, pp. 157–176.

Hovy, Dirk, Taylor Berg-Kirkpatrick, Ashish Vaswani, and Eduard Hovy. Learning Whom to Trust with MACE. In Proceedings of NAACL-HLT, 2013, pp. 1120–1130.

Montazeri, Niloofar, and Jerry R. Hobbs. Elaborating a knowledge base for deep lexical semantics. In Proceedings of the Ninth International Conference on Computational Semantics, Association for Computational Linguistics, 2011.

Montazeri, Niloofar, and Jerry R. Hobbs. Axiomatizing Change-of-State Words. In M. Donnelly and G. Guizzardi (eds.), Formal Ontology in Information Systems: Proceedings of the Seventh International Conference (FOIS 2012), IOS Press, Amsterdam, Netherlands, 2012, pp. 221–234.

Montazeri, Niloofar, Jerry R. Hobbs, and Eduard H. Hovy. How Text Mining Can Help Lexical and Commonsense Knowledgebase Construction. In Proceedings of the 11th International Symposium on Logical Formalizations of Commonsense Reasoning (Commonsense 2013), 2013.

Pantel, Patrick, and Deepak Ravichandran. Automatically Labeling Semantic Classes. In Proceedings of the 2004 Human Language Technology Conference (HLT-NAACL-04), Boston, MA, 2004, pp. 321–328.

Pekar, Viktor. Acquisition of verb entailment from text. In Proceedings of the Human Language Technology Conference of the NAACL, Main Conference, 2006.

Quinlan, J. Ross. Induction of decision trees. Machine Learning 1(1), 1986, pp. 81–106.

Sil, Avirup, Fei Huang, and Alexander Yates. Extracting Action and Event Semantics from Web Text. AAAI Fall Symposium: Commonsense Knowledge, 2010.

Sil, Avirup, and Alexander Yates. Extracting STRIPS Representations of Actions and Events. In Proceedings of RANLP, 2011.

Tratz, Stephen, and Eduard Hovy. A fast, accurate, non-projective, semantically-enriched parser. In Proceedings of EMNLP, 2011.
