An Automated Machine Learning Approach Applied To Robotic Stroke Rehabilitation

advertisement

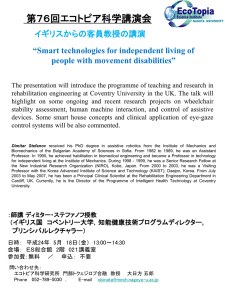

AAAI Technical Report FS-12-01 Artificial Intelligence for Gerontechnology An Automated Machine Learning Approach Applied To Robotic Stroke Rehabilitation Jasper Snoek, Babak Taati and Alex Mihailidis University of Toronto 500 University Ave. Toronto, ON, Canada Abstract 3. Apply a selection of standard discriminative machine learning algorithms and compare results. While machine learning methods have proven to be a highly valuable tool in solving numerous problems in assistive technology, state-of-the-art machine learning algorithms and corresponding results are not always accessible to assistive technology researchers due to required domain knowledge and complicated model parameters. This short paper highlights the use of recent work in machine learning to entirely automate the machine learning pipeline, from feature extraction to classification. A nonparametrically guided autoencoder is used to extract features and perform classification while Bayesian optimization is used to automatically tune the parameters of the model for best performance. Empirical analysis is performed on a real-world rehabilitation research problem. The entirely automated approach significantly outperforms previously published results using carefully tuned machine learning algorithms on the same data. This strategy is unsatisfying for a number of reasons. The performance of each machine learning algorithm, for example, is dependent on the method used for feature extraction. Consider a classification task where the structure of interest within the data lies on some nonlinear latent manifold. This structure can be captured by a nonlinear feature extraction followed by a linear classifier or conversely linear feature extraction followed by a nonlinear classifier. Although this is a simple example, it elucidates the fact that there are underlying complexities that make the comparison of various approaches challenging or less meaningful. In general, each machine learning algorithm also requires the setting of non-trivial hyperparameters. Often these parameters govern the complexity of the model or the amount of regularisation and require expert domain knowledge and time-consuming cross-validation procedures to select. Some examples of these hyperparameters include the number of hidden units in a neural network, the regularisation term in support vector machines and the number of dimensions in principal components analysis. The combinations of feature extraction methods, discriminative machine learning models and corresponding hyperparameters of each forms a vast space to explore for the best result and strategy. Researchers in assistive technology in general do not possess the advanced machine learning domain knowledge necessary to intuitively explore the vast space of machine learning models and parameterizations. However, assistive technology is a domain that requires high accuracy and relatively small improvements in performance can translate to significant real world impact. Consider, for example, the difference between 95% and 99.5% accuracy for a classifier that detects falls in an older adult’s home from sensor data. Such a discrepancy in classification accuracy can be translated to lives saved or a reduction in false positive classifications large enough to make the classifier useful rather than irritating. Such improvements can be garnered through more appropriate combinations of feature extraction, discriminative learning and better hyperparameters. This short paper highlights the findings of (Snoek, Adams, and Larochelle 2012; Snoek, Larochelle, and Adams 2012) in the context of assistive technology. The paper does not demonstrate novel methods or empirical anal- As better healthcare worldwide is improving longevity and the baby boomer generation is aging, the proportion of elderly adults within the population is rapidly growing. Healthcare systems and governments are seeking new ways to alleviate the burden on society of caring for this aging population. Artificial intelligence has been shown to be a promising solution, as many of the simpler tasks that burden caregivers can be automated. This also suggests solutions for promoting independence and aging in place, because it alleviates the need for the constant presence of a caregiver in the home. The benefits of the application of machine learning to problems in assistive technology are becoming ever more clear. However, the application of machine learning to problems in assistive technology remains challenging. In particular, it is often unclear what machine learning model or approach is most appropriate for a given task. A common paradigm is to apply multiple standard machine learning tools in a black box manner and compare the results. This proceeds according to the following steps: 1. Collect data representative of the problem of interest. 2. Extract a set of features from these data. c 2012, Association for the Advancement of Artificial Copyright Intelligence (www.aaai.org). All rights reserved. 38 yses but rather seeks to stimulate discussion and demonstrate a promising solution to a significant practical problem in the application of machine learning to assistive technology. In this paper, we explore the use of an integrated and entirely automatic approach to perform feature extraction, classification and hyperparameter selection applied to a real-world rehabilitation research problem. In particular, we explore the use of an unsupervised machine learning model that is guided to learn a representation that is more appropriate for a given discriminative task. This nonparametrically guided autoencoder (Snoek, Adams, and Larochelle 2012) uses a neural network to learn a nonlinear encoding that captures the underlying structure of the input data while being constrained to maintain an encoding for which there exists a mapping to some discriminative label information. This model integrates the feature extraction and discriminative tasks and reduces the complexity induced by exploring various combinations of feature extraction algorithms and classifiers. The nonparametrically guided autoencoder (NPGA) requires a number of hyperparameters, such as the number of hidden units of the neural network, that are nontrivial to select. However, recent advances in machine learning have developed extremely effective methods for automatically optimizing such hyperparameters. A black-box optimization strategy known as Bayesian Optimization has recently been shown (Bergstra et al. 2011; Snoek, Larochelle, and Adams 2012) to be particularly well suited to this task, consistently finding better parameters, and doing so more efficiently, than machine learning experts. In this work it is shown that such a fully automated strategy arising from the combination of the NPGA and Bayesian Optimization can outperform a carefully hand tuned machine learning approach on a real-world rehabilitation problem (Taati et al. 2012). This is significant as it suggests that assistive technology researchers can achieve state-of-the-art results on their problems without requiring expert knowledge or tedious algorithm and parameter tuning. ture but also enforces that a mapping to label information exists. The training objective of the NPGA can be formulated as finding the optimal model parameters, φ? , ψ ? , Γ? , under the combined objective of the autoencoder, Lauto and Gaussian process, LGP , parameterized by hyperparameter α ∈ [0, 1], φ? , ψ ? , Γ? = arg min (1−α)Lauto (φ, ψ) + αLGP (φ, Γ). φ,ψ,Γ This objective can be further extended to incorporate the loss of a parametric multi-class logistic regression mapping, LLR , to the labels: L(φ, ψ, Λ, Γ ; α, β) = (1−α)Lauto (φ, ψ) + α((1−β)LLR (φ, Λ) + βLGP (φ, Γ)), where an additional hyperparameter, β, trades off the contribution of the nonparametric guidance afforded by the Gaussian process with the parametric classifier. This additional loss thus can direct the model to learn a representation that is harmonious with the actual logistic regression classifier that will be used at test time. The result is a model that is trained to extract features from some data such that they are explicitly enforced to encode structure that captures the major sources of variation in the data but also are well suited to the classifier that will be used at test time. The model hyperparameters permit one to flexibly interpolate between three common models, an autoencoder, a neural network for classification and a Gaussian process latent variable model. A caveat, however, is that one must search the space of hyperparameters to find the best formulation for a given problem. Fortunately, Bayesian Optimization can be used to efficiently find the best setting of the hyperparameters in a fully automated way. Nonparametrically Guided Autoencoder The NPGA is a semiparametric latent variable model. It leverages both parametric and nonparametric approaches to create a latent representation of input data that encodes the salient structure of the data while enforcing that more subtle discriminative structure is encoded as well. The parametric component consists of an autoencoder (Cottrell, Munro, and Zipser 1987), a neural network that is architecturally designed to learn an encoding of the data that captures the salient structure while discarding noise. This is achieved by creating a neural network that is trained to reconstruct the input data at its output while constraining the complexity of an internal coding layer. Often, however, the structure relevant to some discriminative task is not captured within the most prominent sources of variation. The innovation of the NPGA is to leverage a theoretical interpretation of Gaussian processes (Rasmussen and Williams 2006) as a kind of infinite neural network in order to augment the autoencoder with a nonparametric Gaussian process mapping to some additional label information. The autoencoder then learns a latent representation of the data that captures the salient struc- Bayesian Optimization Bayesian optimization (Mockus, Tiesis, and Zilinskas 1978) is a methodology for finding the extremum of noisy blackbox functions. Given some small number of observed inputs and corresponding outputs of a function of interest, Bayesian optimization iteratively suggests the next input to explore such that the optimum of the function is reached in as few function evaluations as possible. Provided that the function of interest is continuous and follows some loose assumptions, Bayesian optimization has been shown to converge to the optimum efficiently (Bull 2011). For an overview of Bayesian optimization and example applications see (Brochu, Cora, and de Freitas 2010). Recently, Bayesian optimization has been shown to be effective for optimizing the hyperparameters of machine learning algorithms (Bergstra et al. 2011; Snoek, Larochelle, and Adams 2012). In this work the Gaussian process expected improvement formulation of (Snoek, Larochelle, and Adams 2012) is used. 39 (a) The Robot (b) Using the Robot (c) Depth Image (d) Skeletal Joints Figure 1: The rehabilitation robot setup and sample data captured by the sensor. Empirical Analysis exercises using the robotic arm and it records their posture as a temporal sequence of seven estimated upper body skeletal joint angles (see Figures 1(c), 1(d) for an example depth image and corresponding pose skeleton captured by the system). A machine learning classifier is applied to the joint angles to classify between five different classes of posture. These include proper posture and four common cases of improper posture resulting from users’ natural tendency to compensate for limited agility. (Taati et al. 2012) collected a data set consisting of seven users each performing each class of action at least once, creating a total of 35 sequences (23,782 frames). They compare the use of a multiclass support vector machine (Tsochantaridis et al. 2004) and a hidden Markov support vector machine (Altun, Tsochantaridis, and Hofmann 2003) in a leave-one-subject-out test setting to distinguish these classes and report best per-frame classification accuracy rates of 80.0% and 85.9% respectively. The approach outlined in this work is empirically validated on a real-world application in assistive technology for rehabilitation. About 15 million people suffer stroke worldwide each year, according to the World Health Organization. Up to 65% of stroke survivors have difficulty using their upper limbs in daily activities and thus require rehabilitation therapy (Dobkin 2005). The frequency at which rehabilitation patients can perform rehabilitation exercises, a significant factor determining the rate of recovery, is often limited due to a shortage of rehabilitation therapists. This motivated the development of a robotic system to automate the role of a therapist providing guidance to patients performing repetitive upper limb rehabilitation exercises by (Kan et al. 2011), (Huq et al. 2011), (Lu et al. 2012) and (Taati et al. 2012). The system allows a user to perform upper limb reaching exercises with a robotic arm (see Figures 1(a), 1(b)) while it dynamically adjusts the amount of resistance to match the user’s ability level. The system can thus alleviate the burden on therapists and allow patients to perform exercises as frequently as desired, significantly expediting rehabilitation. The system’s effectiveness is critically dependent on its ability to discriminate between correct and incorrect posture and prompt the user accordingly. Currently, the system (Taati et al. 2012) uses a Microsoft Kinect sensor to observe a patient while they perform upper limb reaching In this work an NPGA is used to encode a latent embedding of postures that provides better discrimination between different posture types. The same data is used as in (Taati et al. 2012). The input to the model is the seven skeletal joint angles, i.e., Y = R7 , and the label space is over the five classes of posture. Unfortunately, the NPGA model requires setting a number of non-trivial hyperparameters. Rather than adjust these manually or perform a grid search, validation set error is optimized over the hy- 40 Model Bull, A. D. 2011. Convergence rates of efficient global optimization algorithms. Journal of Machine Learning Research (34):2879–2904. Cottrell, G. W.; Munro, P.; and Zipser, D. 1987. Learning internal representations from gray-scale images: An example of extensional programming. In Conference of the Cognitive Science Society. Dobkin, B. H. 2005. Clinical practice, rehabilitation after stroke. New England Journal of Medicine 352:1677–1684. Huq, R.; Kan, P.; Goetschalckx, R.; Hbert, D.; Hoey, J.; and Mihailidis, A. 2011. A decision-theoretic approach in the design of an adaptive upper-limb stroke rehabilitation robot. In International Conference of Rehabilitation Robotics (ICORR). Kan, P.; Huq, R.; Hoey, J.; Goestschalckx, R.; and Mihailidis, A. 2011. The development of an adaptive upper-limb stroke rehabilitation robotic system. Neuroengineering and Rehabilitation. Lu, E.; Wang, R.; Huq, R.; Gardner, D.; Karam, P.; Zabjek, K.; Hbert, D.; Boger, J.; and Mihailidis, A. 2012. Development of a robotic device for upper limb stroke rehabilitation: A user-centered design approach. Journal of Behavioral Robotics. Mockus, J.; Tiesis, V.; and Zilinskas, A. 1978. The application of Bayesian methods for seeking the extremum. Towards Global Optimization 2:117–129. Rasmussen, C. E., and Williams, C. K. I. 2006. Gaussian Processes for Machine Learning. Cambridge, MA: MIT Press. Snoek, J.; Adams, R. P.; and Larochelle, H. 2012. On nonparametric guidance for learning autoencoder representations. In International Conference on Artificial Intelligence and Statistics. Snoek, J.; Larochelle, H.; and Adams, R. P. 2012. Practical bayesian optimization of machine learning algorithms. In Advances in Neural Information Processing Systems (To Appear). Taati, B.; Wang, R.; Huq, R.; Snoek, J.; and Mihailidis, A. 2012. Vision-based posture assessment to detect and categorize compensation during robotic rehabilitation therapy. In International Conference on Biomedical Robotics and Biomechatronics. Tsochantaridis, I.; Hofmann, T.; Joachims, T.; and Altun, Y. 2004. Support vector machine learning for interdependent and structured output spaces. In International Conference on Machine Learning. Accuracy SVMM ulticlass (Taati et al. 2012) Hidden Markov SVM (Taati et al. 2012) `2 -Regularized Logistic Regression NPGA 80.0% 85.9% 86.1% 91.7% Table 1: Experimental results on the rehabilitation data. Perframe classification accuracies are provided for different classifiers on the test set. Bayesian optimization was performed on a validation set to select hyperparameters for the `2 -regularized logistic regression and NPGA algorithms. perparameters of the model using Bayesian optimization. The Gaussian process expected improvement algorithm was used to search over α ∈ [0, 1], β ∈ [0, 1], 10 − 1000 hidden units in the autoencoder and an additional GP latent dimensionality H ∈ [1, 10]. The best validation set error observed by the algorithm, on the twelfth of thirty-seven iterations, was at α = 0.8147, β = 0.3227, H = 3 and 242 hidden units. These settings correspond to a per-frame classification error rate of 91.70%, which is significantly higher than the 85.9% reported by the best method of (Taati et al. 2012). Results obtained using various models are presented in Table 1. Interestingly, it seems clear that the best region in hyperparameter space is a combination of all three objectives, the parametric logistic regression, nonparametric guidance and unsupervised autoencoder learning. This suggests that manually finding the best combination of feature extraction and classification algorithm would be challenging. The final product of the system is a simple neural network for classification, which is directly applicable to this real-time setting. Conclusion In this paper, a methodology was presented to combine feature extraction and classification into a single model and to optimize the model hyperparameters automatically. The approach was empirically validated on a real-world rehabilitation research problem, for which state-of-the-art results were achieved. The approach is very general, and as such can be applied to potentially many problems in the domain of assistive technology. This is valuable as the need for careful exploration of machine learning models and model parameters, which often requires significant domain knowledge, is obviated. References Altun, Y.; Tsochantaridis, I.; and Hofmann, T. 2003. Hidden markov support vector machines. In International Conference on Machine Learning. Bergstra, J. S.; Bardenet, R.; Bengio, Y.; and Kégl, B. 2011. Algorithms for hyper-parameter optimization. In Advances in Neural Information Processing Systems. Brochu, E.; Cora, V. M.; and de Freitas, N. 2010. A tutorial on Bayesian optimization of expensive cost functions, with application to active user modeling and hierarchical reinforcement learning. pre-print. arXiv:1012.2599. 41