Robust, Scalable Hybrid Decision Networks

The Intersection of Robust Intelligence and Trust in Autonomous Systems: Papers from the AAAI Spring Symposium


Jason Scholz, Ian Dall, Don Gossink, Glen Smith, Darryn Reid

Joint and Operations Analysis Division, Defence Science and Technology Organisation, Australia

POC: jason.scholz@defence.gov.au

Abstract

We present a framework for designing and evaluating systemically robust and efficient hybrid (human and machine) decision networks. We propose that the degree of diversity in enterprise variables be chosen according to the expected demands of the decision scenario, with margins for risk and uncertainty. We illustrate the use of the model by comparing two mission case studies and outline a theoretical approach for analyzing enterprise settings. As the robustness of the enterprise framework ultimately rests on the ability to encode and adapt expertise in software, we explain our approaches to the development of robust intelligence.

Hybrid C2 Dimensions

Lambert and Scholz (2007) defined the Ubiquitous Command and Control (UC2) concept through seven major tenets.

UC2 was developed in response to a range of issues identified in Network Centric Warfare (NCW), including the dearth of consideration of decision automation potential and deleterious effects on human decision-making performance (Lambert and Scholz 2005). UC2 presented a hybrid human-machine decision society with extreme robustness (the defensive perspective) or extreme maneuver (the offensive perspective), in which every platform boasts a similar and significant C2 capability, achieved through ubiquitous deployment of decision automation. We argue that, in keeping with Clausewitz's principles of ‘Economy of Force’ and ‘Coherency’ between tactical activity and political purpose, these tenets should further be considered as variables to be chosen in the design or composition of C2 systems for missions and scenarios.

In the UC2 context, since all the individuals on a platform are constrained to maneuver in the same way, it is natural to consider the platform as a decision-making entity.

However, it is more general to consider decision-making collectives (DMCs). A DMC is any group that makes decisions and that may have individual humans, machines and other DMCs as members.

Copyright © 2013, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

The seven tenets of UC2 may be reframed as Hybrid C2 dimensions as follows:

1. Decision Devolution is the degree to which decisions are made by the entities that will enact them. Decision devolution aligns with the “power to the edge” sentiment of NCW. High decision devolution has the potential to provide greater decision-making (DM) speed, flexibility and redundancy. This parameter may span a range from full devolution, through devolution of DM with externally imposed processes, constraints and oversight, to fully centralized decision making.

2. Decision-making (DM) Capability is the degree to which a DMC has the information, knowledge, ability and resources to make decisions. Adequate DM capability is a prerequisite for decision devolution. This dimension may be considered to have three sub-dimensions: individual DM capability (range low to high), number of individuals (range low to high) and the degree of diversity between individuals. The degree of diversity involves a trade-off between the extremes of being identical (which is vulnerable if individual DM performance is not perfect) and being completely different (where individuals may not be able to interoperate).

3. Decision Automation is the degree to which decision making is performed by a machine without human intervention. Except in trivial cases, machine decision making is implemented in computer software. DM expertise implemented in software is easily replicated, adapted and distributed, and may be underpinned by formal guarantees about applicability and performance. Decision Automation is required to achieve a DM capability on unmanned platforms (e.g., missiles) and to augment DM on manned platforms (e.g., ships). It may take two forms: automated decision makers (e.g., agents) and automated decision aids. The degree of employment of these forms of automation is variable. Decision aids may range from none to some for every human individual. Automated decision makers may range from none to some on every platform.

4. Human-Machine Integration is the degree to which a DMC comprising humans and machines behaves as one coherent entity. Human-Machine Integration enables DM performance to be maximized by offsetting the weaknesses of machine DM with the strengths of human DM, and vice versa. Effective integration requires efficient human-machine interaction and trust.

Maximising DM performance will often involve mixed initiative, where some decisions, in some circumstances, are best made by machines and others are best made by humans. The degree to which a machine holds the elements of Command is contentious. Command comprises the most tightly controlled aspects of military DM: authority, responsibility and competency. An automated agent's competency depends on the expertise embedded in it, its responsibility comes from the social agreements it forms based on that competency, and its authority depends on the role it assumes in social agreements.

Efficient interaction lowers the communication barrier between humans and machines. Rich interaction requires machines to represent and reason about the goals and knowledge of humans, and to produce reasoning that is human-comprehensible and traceable. Rich interaction facilitates trust.

This variable may range from no integration to full integration of mixed initiative and interaction.

5. Distribution is composed of two sub-dimensions: spatial and intentional. Spatial distribution is the degree of spatial diversity, ranging from complete physical collocation of DMC members (which may be vulnerable to missile strike) to high physical dispersion. Intentional distribution is the diversity of purpose, ranging from a single source of intent (which may be vulnerable to blackmail for a human, or cyber attack for a machine) to every individual being a source of intent.

6. Social Coordination is the ability of a group of semi-autonomous individuals or collectives to achieve common purpose through social protocols. It is required to achieve coherence, unifying the diversity introduced by the preceding five variables. This ensures that intent (Command) and capability (plans and assets to achieve the intent by changing the world and thereby the perceptions) are matched, and controlled through execution. This may be conceptualized as an Agreement Protocol that both humans and machines can easily understand. The social coordination variable ranges from no coordination (e.g., shared training, doctrine and experience only) to full coordination, where dynamic agreements are possible in principle between any individuals.

7. Management Constraints are bounds imposed on the social coordination that individuals or collectives may engage in. At one end of this range is “hierarchical directive”, where authority, responsibility, competency and information flows are highly managed via each progressive level of a hierarchy. At the other end of the range is laissez-faire. Four levels of management constraint are proposed, arising from natural constraints: the Individual level, for self-management of cognitive abilities (e.g., by education); the Platform level, for management by common location (e.g., by a ship's Commander); the Team level, for management by common intent across multiple platforms; and the Societal level, for management by collaboration and competition across multiple teams.

Enterprise Settings

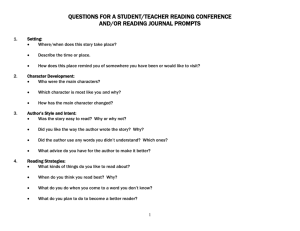

We propose that the requisite diversity of hybrid decision networks be chosen according to the assessed needs of the mission or scenario. Greater mission complexity, in terms of uncertainty, number of hostile entities and so on, indicates a need to set the sliders shown in Figure 1 further to the right, whereas Economy of Force principles indicate a need to set them further to the left.

These governance settings concern selecting the right mechanisms for the right job across the hybrid enterprise, and ensuring either that the conditions under which those choices work well are maintained or that the settings are adapted as required. A tool to aid in estimating these settings would be of significant value to Commanders.

These settings might be determined in several ways. The most comprehensive would be the development of DM theory. Alternatively, multiagent Monte Carlo simulation is possible (especially where such a simulation represents the expected mission environment). We first present a case study contrasting two DM systems, to demonstrate that it is possible to characterize situations and scenarios in terms of these enterprise variables.
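As a minimal illustration of the simulation route, the sketch below estimates by Monte Carlo the probability that a simple majority of agents chooses correctly, and compares it with the exact value given by the Condorcet Jury Theorem discussed later. The agent model (independent agents, each correct with a fixed probability), the function name and the parameters are hypothetical; a mission-level multiagent simulation would replace the one-line agent model with a model of the expected mission environment.

```python
import random
from math import comb


def simulate_majority(p_list, trials=50_000, seed=1):
    """Monte Carlo estimate of the probability that a simple majority of agents
    chooses the correct option. Hypothetical agent model: agent i is correct
    independently with probability p_list[i]."""
    rng = random.Random(seed)  # seeded for reproducibility
    n = len(p_list)
    hits = 0
    for _ in range(trials):
        correct = sum(rng.random() < p for p in p_list)  # number of correct agents
        if correct > n / 2:
            hits += 1
    return hits / trials


# Thirteen agents of competence 0.8: compare the estimate with the exact binomial sum.
estimate = simulate_majority([0.8] * 13)
exact = sum(comb(13, h) * 0.8**h * 0.2 ** (13 - h) for h in range(7, 14))
print(f"simulated {estimate:.4f}, exact {exact:.4f}")
```

The simulation only pays off once the agent model is richer than the closed-form theory can handle (correlated agents, adaptive behaviour, a simulated environment); here it simply cross-checks the theory.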

Case Studies

We illustrate enterprise settings for a mission-critical air reconnaissance mission and a naval blockade, as portrayed in the 2000 film “Thirteen Days”, which was inspired by the 1962 Cuban Missile Crisis. The relative comparison of same-period systems is illustrative, as is the ability to handle DM systems with low levels of decision automation. Table 1 summarizes the air reconnaissance mission in terms of these dimensions.

| Dimension | Description | Setting |
|---|---|---|
| 1 | Mission ordered directly by the President, whose intent was communicated via a special advisor to the pilot. | Low |
| 2 | Decision capability: individual DM capability (M), diversity (H), number (L). | Medium |
| 3 | Low-altitude, high-speed surprise pass without aircraft radar on: fly the plane, switch on the camera, avoid ground-based small-arms and AA fire. Not possible without a telephone decision aid for communication of intent. Significant human DM but low on decision aids. | Low |
| 4 | High pilot-aircraft DM integration, but low on decision integration. | Low |
| 5 | Two sources of intent (L): the President and the Joint Chiefs / Chief AF. Distributed but few decision makers. | Low |
| 6 | Dynamic social agreement on intent prior to, and after, the mission. | High |
| 7 | Highly controlled, but with cooperation and conflict from two sources of intent. | Medium |

Table 1. An Air Reconnaissance mission.

Table 2 summarizes the “quarantine” scenario.

| Dimension | Description | Setting |
|---|---|---|
| 1 | The quarantine mission was left to the Chief of Navy, but the actions did not meet the President's intent (“this is not a blockade, it’s about language: President Kennedy communicating with President Khrushchev”), leading to intervention by SECDEF. | Medium-Low |
| 2 | Decision capability: individual DM capability (M), diversity (H), number (H). Multiple US ships, Russian submarine presence (unknown at the time), Russian freighters, several war-rooms, etc. | Medium-High |
| 3 | Decision aids: dots on maps, location SITREPs (largely manual), communications. | Medium |
| 4 | Moderate decision integration in locating freighters, accurately predicting time to cross “the line”, etc. | Medium |
| 5 | Highly distributed, but one source of intent and many DMs. | Medium |
| 6 | Some intent correction. Dynamic agreement to let a Russian vessel pass (we’d look “silly if it was carrying baby food”). | High |
| 7 | Less tightly controlled, except at the critical time point near crossing the line. Multiple levels of control, running from individuals (CN's error realization) to ship platforms (star shell firings), etc. | Medium-High |

Table 2. A Naval “Quarantine” scenario.

These settings are contrasted in Figure 1.

The air example has lower diversity in all areas and is more vulnerable. For example, had telephony been unavailable, achieving the President's intent would likely have been compromised, as no other means of telecommunication would have been sufficiently influential, and there was not enough time to travel for a face-to-face meeting.

Figure 1. Variable ranges and two illustrative cases (Air Recon Example and Naval Quarantine Example). [Slider chart; each range runs left to right: 1. Decision Devolution, Centralized to Devolved; 2. Decision-making Capability, low individual capability, few members and low diversity to high individual capability, many members and high diversity; 3a. Automated Decision Aids, no machine aids to automated DM aids for every human; 3b. Automated DMs, human DMs only to automated DMs on every platform; 4. Human-Machine Integration, humans only or machines only to human DM fully integrated with machines; 5a. Spatial Distribution, physically collocated to physically distributed; 5b. Intentional Distribution, one source of intent to any individual may express intent; 6. Coordination, agreements without protocol to dynamic agreements with anyone; 7. Management Constraints, Hierarchical Directive at one end, with Individual, Platform, Team & Sociological levels marked.]

A Theoretical Approach

Consider a scenario represented abstractly as in Figure 2.

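Figure 2. Example scenario and preferred COA. [Directed acyclic graph of situations $S_{i,j}$, from $S_{1,1}$ through $S_{6,3}$; annotations along the preferred COA give the number of choices $k$ and the group size $n$ at each step: $k_1 = 3$, $n_{1,1} = 1$; $k_2 = 1$, $n_{2,2} = 11$; $k_3 = 2$, $n_{3,4} = 11$; $k_4 = 3$, $n_{4,1} = 301$.]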

Figure 2 is a directed acyclic graph whose vertices represent decision states (situations). Each situation has some number of choices $k$, and the best Course of Action (COA) is the sequence of situations shown.

The problem may be formulated as finding an acceptable probability of traversing the COA, given n decision makers. We investigate the relationship between transition probability (probability of correct decision) and n in the following.
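A minimal sketch of this formulation follows, under assumptions that are ours rather than the paper's: each situation on the COA is a binary choice decided by a simple majority of independent voters of equal competence p, group decisions at different situations are independent, and the overall target is split equally across the steps. The function names are hypothetical.

```python
from math import comb, prod


def majority_correct(n: int, p: float) -> float:
    """Probability that a simple majority of n (odd) independent voters,
    each correct with probability p, chooses the correct one of two options."""
    return sum(comb(n, h) * p**h * (1 - p) ** (n - h) for h in range((n + 1) // 2, n + 1))


def coa_probability(step_probs) -> float:
    """Probability of traversing every transition of the COA, assuming
    (hypothetically) that decisions at different situations are independent.
    A situation with a single choice (k = 1) contributes probability 1."""
    return prod(step_probs)


def min_odd_group(p: float, target: float, n_max: int = 1001) -> int:
    """Smallest odd group size whose majority accuracy reaches the target."""
    for n in range(1, n_max + 1, 2):
        if majority_correct(n, p) >= target:
            return n
    raise ValueError("target not reachable within n_max")


# Example: a four-step COA that must be traversed with probability 0.95 overall.
steps = 4
per_step_target = 0.95 ** (1 / steps)  # equal share of the overall target per step
n_needed = min_odd_group(p=0.8, target=per_step_target)
print(n_needed, coa_probability([majority_correct(n_needed, 0.8)] * steps))
```

Splitting the target equally is only one choice; steps with more competent decision makers could be given a larger share of the risk budget.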

The basis of our theory development is group decision making, particularly political situations in which decisions are reached by some voting scheme. This is far more flexible than it may first seem: a weighted voting scheme of utility-maximizing agents can model a spectrum of real-world arrangements, ranging from one DM holding all the weight (a single commander), to a few DMs weighted more heavily than others, to majority vote (more than 1/2 in favor), super-majority (e.g., 2/3 in favor) or unanimous decision.

Indeed, political group theories provide useful foundations for our endeavor.
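To make this spectrum concrete, the sketch below treats each arrangement as a choice of voting weights and a quota over yes/no votes. The rule encoding (a quota plus an `inclusive` flag), the five-member group and the example votes are hypothetical illustrations, not part of the paper's model.

```python
from fractions import Fraction
from typing import Sequence


def weighted_decision(votes: Sequence[bool], weights: Sequence[Fraction],
                      quota: Fraction, inclusive: bool) -> bool:
    """Accept if the weighted share of 'yes' votes meets the quota.
    inclusive=False: share must strictly exceed the quota (e.g. majority, > 1/2).
    inclusive=True: share must reach the quota (e.g. super-majority >= 2/3, unanimity = 1)."""
    total = sum(weights, Fraction(0))
    yes = sum((w for v, w in zip(votes, weights) if v), Fraction(0))
    share = yes / total
    return share >= quota if inclusive else share > quota


F = Fraction
equal = [F(1)] * 5
# Hypothetical five-member group; weights 3,3,1,1,1 are proportional to (1/3, 1/3, 1/9, 1/9, 1/9).
RULES = {
    "single commander": ([F(1), F(0), F(0), F(0), F(0)], F(1, 2), False),
    "weighted few": ([F(3), F(3), F(1), F(1), F(1)], F(1, 2), False),
    "majority": (equal, F(1, 2), False),
    "super-majority": (equal, F(2, 3), True),
    "unanimity": (equal, F(1), True),
}

votes = [False, True, True, True, False]  # member 0 (the commander) votes no; three of five vote yes
outcomes = {name: weighted_decision(votes, w, q, inc) for name, (w, q, inc) in RULES.items()}
for name, accepted in outcomes.items():
    print(f"{name:16s} -> {'accept' if accepted else 'reject'}")
```

Exact `Fraction` arithmetic avoids spurious floating-point ties at quotas such as 2/3.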

The Condorcet Jury Theorem (CJT) expresses the probability of a group of individuals arriving at a correct decision. Let $n$ voters ($n$ odd) choose between two options, with equal a priori likelihood of being correct. Assuming independent judgments and an equal probability $p$ of each voter being correct, where $0.5 < p < 1$, the probability of the group being correct by single-preference majority vote is given by

$$P_n = \sum_{h=(n+1)/2}^{n} \binom{n}{h} p^{h} (1-p)^{n-h}$$

Note that $P_n$ converges to 1 quickly; e.g., if $p = 0.8$, then $P_n > 0.99$ for $n = 13$. For any $n$ (with a tie counted as an incorrect decision when $n$ is even) and $p > 1/2$, the Chernoff bound states

$$P_n \ge 1 - e^{-2n(p - 1/2)^2}$$

This may be rearranged to give an estimate of the minimum number of individuals needed to achieve a given $P_n$:

$$n \ge \frac{1}{2(p - 1/2)^2} \ln\frac{1}{1 - P_n}$$
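The sketch below, a minimal illustration under the same assumptions (independent voters of equal competence $p$), compares the exact minimum odd group size from the CJT sum with the size implied by the rearranged Chernoff bound, for $p = 0.8$ and a target of 0.99. The function names are ours. The bound is conservative: it asks for 26 voters where the exact calculation needs 13.

```python
from math import comb, exp, log, ceil


def cjt_majority(n: int, p: float) -> float:
    """Exact P_n: probability that more than n/2 of n independent voters, each
    correct with probability p, are correct. For even n a tie counts as wrong."""
    return sum(comb(n, h) * p**h * (1 - p) ** (n - h) for h in range(n // 2 + 1, n + 1))


def chernoff_lower_bound(n: int, p: float) -> float:
    """Lower bound 1 - exp(-2 n (p - 1/2)^2) on P_n, valid for p > 1/2."""
    return 1 - exp(-2 * n * (p - 0.5) ** 2)


def chernoff_min_n(p: float, target: float) -> int:
    """Smallest n for which the Chernoff bound alone guarantees P_n >= target."""
    return ceil(log(1 / (1 - target)) / (2 * (p - 0.5) ** 2))


p, target = 0.8, 0.99
exact_n = next(n for n in range(1, 200, 2) if cjt_majority(n, p) >= target)
bound_n = chernoff_min_n(p, target)
print(exact_n, bound_n)  # 13 26
```

The bound is still useful when competences are only known to exceed some floor, since it needs no summation and gives a safe (if generous) group size.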

Not all individuals have an equal probability of choosing the correct option, as decision competencies are heterogeneous. In the near-term future, for a given situation, the difference between human and machine decision competence in mixed initiative is likely to be striking, and we expect the correct choice of type of decision maker to be clear. This is just as well, as Boland (1989) demonstrates for the heterogeneous case, with non-identical probabilities of correct decision (competence) $p_1, p_2, \ldots, p_n$ for $n$ individuals and average competence $\bar p$, that

$$P_n \ge \bar p \quad \text{provided} \quad \bar p \ge \frac{1}{2} + \frac{1}{2n}$$

So, the average competence of a heterogeneous group needs to be higher than that of a homogeneous group (for which $p > 1/2$ suffices). In the case of automated decision aids integrated with a human, we expect increased decision competence compared with non-augmented humans, and this may be modeled accordingly.

Kanazawa (1998) further shows that for any given level of average competence, heterogeneous groups are more capable of arriving at the correct decision than homogeneous groups, supporting our diversity assertion.

We can model a group with heterogeneous competencies as independent Bernoulli random variables $X_1, X_2, \ldots, X_n$, where $X_i \in \{0, 1\}$, with associated probabilities of correct individual decision $p_1, p_2, \ldots, p_n$. The number of correct votes in this heterogeneous group is simply $X = X_1 + X_2 + \cdots + X_n$. This constitutes a Poisson-binomial distribution, for which the probability of being correct by majority vote ($n$ odd) is

$$P_n = \sum_{h=(n+1)/2}^{n} \; \sum_{A \in F_h} \; \prod_{i \in A} p_i \prod_{j \in A^c} (1 - p_j)$$

where $F_h$ is the set of all subsets of $h$ integers that can be selected from $\{1, 2, \ldots, n\}$, and $A^c$ is the complement of $A$. To illustrate, for $n = 3$ the majority includes all three combinations of two correct (and one wrong) and the single combination of all correct; thus

$$P_3 = p_1 p_2 (1 - p_3) + p_1 p_3 (1 - p_2) + p_2 p_3 (1 - p_1) + p_1 p_2 p_3$$

In Table 3, we compute the majority decision probability $P_n$ for $n = 5$. Each case has an identical mean $\bar p = 0.768$ but increasing variance in the $p_i$ values. This illustrates the degree to which increasing diversity increases the probability of the group being correct.

| Case | $p_1$ | $p_2$ | $p_3$ | $p_4$ | $p_5$ | $P_n$ | $\sum_i (p_i - \bar p)^2$ |
|---|---|---|---|---|---|---|---|
| 1 | 0.768 | 0.768 | 0.768 | 0.768 | 0.768 | 0.9145 | 0 |
| 2 | 0.8 | 0.75 | 0.7 | 0.78 | 0.81 | 0.9156 | 0.0078 |
| 3 | 0.6 | 0.95 | 0.5 | 0.98 | 0.81 | 0.9438 | 0.1798 |
| 4 | 1 | 0.55 | 1 | 0.48 | 0.81 | 0.9555 | 0.2398 |

Table 3. Example majority probability for $n = 5$, with identical mean, as a function of increasing variance.
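Table 3 can be checked with a short computation. The sketch below evaluates the Poisson-binomial majority probability in two ways: by enumerating the subsets $A \in F_h$ exactly as in the Poisson-binomial formula, and by the standard $O(n^2)$ recursion over the number of correct votes, which scales to larger groups. It also checks Boland's sufficient condition $\bar p \ge 1/2 + 1/(2n)$ for each row. The function names are hypothetical.

```python
from itertools import combinations
from math import prod


def majority_prob_subsets(p):
    """P_n by direct enumeration: sum over h > n/2 and over every subset A of
    size h of prod_{i in A} p_i * prod_{j not in A} (1 - p_j)."""
    n = len(p)
    total = 0.0
    for h in range(n // 2 + 1, n + 1):
        for A in combinations(range(n), h):
            chosen = set(A)
            total += prod(p[i] if i in chosen else 1 - p[i] for i in range(n))
    return total


def majority_prob_dp(p):
    """Same quantity via the Poisson-binomial recursion on the count of correct votes."""
    dist = [1.0]  # dist[c] = P(c correct votes among the voters processed so far)
    for pi in p:
        nxt = [0.0] * (len(dist) + 1)
        for c, q in enumerate(dist):
            nxt[c] += q * (1 - pi)   # this voter is wrong
            nxt[c + 1] += q * pi     # this voter is right
        dist = nxt
    return sum(dist[len(p) // 2 + 1:])


TABLE3 = [
    [0.768] * 5,
    [0.8, 0.75, 0.7, 0.78, 0.81],
    [0.6, 0.95, 0.5, 0.98, 0.81],
    [1.0, 0.55, 1.0, 0.48, 0.81],
]
for row in TABLE3:
    mean = sum(row) / len(row)
    boland_ok = mean >= 0.5 + 1 / (2 * len(row))  # Boland (1989) sufficient condition
    print(f"P_n={majority_prob_subsets(row):.4f}  mean={mean:.3f}  Boland condition met: {boland_ok}")
```

The subset form mirrors the formula but grows as $2^n$; the recursion gives the same numbers for groups of hundreds.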

If decision makers are of unequal competence, the Bayesian optimal decision rule is weighted voting (Shapley and Grofman, 1984), with weights

$$w_i \propto \log\frac{p_i}{1 - p_i}$$

To illustrate, consider a group of five members with competencies (0.9, 0.9, 0.6, 0.6, 0.6) who make independent choices. If we let the member with maximum competence decide, $P_n = 0.900$; if we let the group decide by simple majority rule, $P_n = 0.877$; and if we let the group decide under a weighted majority voting rule with weights (1/3, 1/3, 1/9, 1/9, 1/9), then $P_n = 0.927$.
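The figures in this example can be reproduced by brute-force enumeration of all $2^5$ correct/incorrect outcomes. The sketch below (function name hypothetical; ties counted as incorrect, although none can occur with these weights) also evaluates the log-odds weights $w_i = \log(p_i/(1-p_i))$: for this group they induce exactly the same decisions as the (1/3, 1/3, 1/9, 1/9, 1/9) weights, and hence the same accuracy.

```python
from itertools import product
from math import log


def weighted_majority_prob(p, w):
    """Exact probability that the weighted majority is correct, for independent
    voters with competences p and voting weights w (ties count as incorrect)."""
    half = sum(w) / 2
    total = 0.0
    for outcome in product((True, False), repeat=len(p)):
        prob = 1.0
        correct_weight = 0.0
        for ok, pi, wi in zip(outcome, p, w):
            prob *= pi if ok else 1 - pi
            if ok:
                correct_weight += wi
        if correct_weight > half:
            total += prob
    return total


p = [0.9, 0.9, 0.6, 0.6, 0.6]
best_individual = max(p)
simple_majority = weighted_majority_prob(p, [1] * 5)
text_weights = weighted_majority_prob(p, [1 / 3, 1 / 3, 1 / 9, 1 / 9, 1 / 9])
log_odds = weighted_majority_prob(p, [log(pi / (1 - pi)) for pi in p])
print(f"{best_individual:.3f} {simple_majority:.3f} {text_weights:.3f} {log_odds:.3f}")
# 0.900 0.877 0.927 0.927
```

With these weights either expert, backed by at least two of the three less competent members, carries the vote, which is why the weighted rule beats both the best individual and the unweighted majority.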

A further assumption of CJT is that individual DM votes are independent. In practice, however, dependence is to be expected as a result of mutual influence prior to a group decision. CJT has been extended to correlated votes by Ladha (1995), where a bound for arbitrary correlations is given as

$$P_n > \bar p \quad \text{if} \quad \bar r < 1 - \frac{n(\bar p - 0.25)}{(n - 1)\,\bar p^{2}}$$

where $\bar r$ is the average of the coefficients of correlation between votes and $\bar p$ is the average individual competence.

Note that some votes can be highly correlated, so long as the average correlation is below the threshold. Indeed, it is in the interest of the group to seek negatively correlated individuals (even if they have the same competence), provided they represent differing (including opposing) schools of thought, to ensure this condition.

Ladha develops specific results for two plausible distributions, namely Polya and hypergeometric types, representing positive and negative correlations respectively. Positive to low correlations may be expected under conditions of common intent, and negative to low correlations where voters' intents may be mutually exclusive. In summary, the majority of a group does better than the average voter under arbitrary correlation, provided the average correlation satisfies the bound above; and for the specific distributions examined, the probability that a majority attains the superior alternative increases monotonically as the coefficient of correlation decreases.
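Ladha's Polya and hypergeometric models are not reproduced here. As a simpler, hypothetical stand-in, the sketch below uses a "shared signal" model: each voter, independently with probability $\rho$, copies one common signal that is correct with probability $p$, and otherwise judges independently with competence $p$. Each vote is then still correct with probability $p$, and any two votes have correlation coefficient $\rho^2$, so the model isolates the effect of positive correlation on majority accuracy. For equal competences, the threshold in Ladha's bound coincides with the condition obtained from Cantelli's one-sided inequality. Names and parameters are our own.

```python
from math import comb


def binom_tail(k: int, p: float, t: int) -> float:
    """P(Binomial(k, p) >= t); equals 1.0 when t <= 0 and 0.0 when t > k."""
    return sum(comb(k, h) * p**h * (1 - p) ** (k - h) for h in range(max(t, 0), k + 1))


def shared_signal_majority(n: int, p: float, rho: float) -> float:
    """Majority accuracy (n odd) when each voter copies a shared signal with
    probability rho and otherwise votes independently; pairwise correlation is rho**2."""
    need = n // 2 + 1
    total = 0.0
    for m in range(n + 1):  # m = number of voters copying the shared signal
        weight = comb(n, m) * rho**m * (1 - rho) ** (n - m)
        k = n - m  # independent voters
        right = binom_tail(k, p, need - m)  # signal correct: m votes already correct
        wrong = binom_tail(k, p, need)      # signal wrong: independents must carry the vote
        total += weight * (p * right + (1 - p) * wrong)
    return total


def ladha_threshold(n: int, p_bar: float) -> float:
    """Average-correlation threshold from the quoted bound: P_n > p_bar if r_bar is below it."""
    return 1 - n * (p_bar - 0.25) / ((n - 1) * p_bar**2)


for rho in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"r = {rho**2:.3f}  P_n = {shared_signal_majority(11, 0.7, rho):.4f}")
print(f"threshold for n=101, p=0.8: {ladha_threshold(101, 0.8):.3f}")
```

At $\rho = 0$ the model reduces to the CJT sum; at $\rho = 1$ every vote copies the one signal and the group is no better than a single voter.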

List and Goodin (2001) extend CJT to include two or more options. Given $n$ voters and $k$ options $(x_1, x_2, \ldots, x_k)$, where each voter has probabilities $(p_1, p_2, \ldots, p_k)$ of voting for options $(x_1, x_2, \ldots, x_k)$ respectively, the probability of the collective choosing option $x_i$ (plurality voting) is based on the multinomial distribution. With $X_j$ denoting the number of votes for option $x_j$,

$$P\big((X_1, \ldots, X_k) \in N_i\big) = \sum_{(n_1, \ldots, n_k) \in N_i} n! \prod_{j=1}^{k} \frac{p_j^{n_j}}{n_j!}$$

where $N_i$ is the set of all $k$-tuples of votes $(n_1, \ldots, n_k)$ for the $k$ option categories, with $n_1 + \cdots + n_k = n$, for which option $i$ is the winner. Table 4 illustrates with some example values.

| Number of options $k$ | Probabilities $p_1, p_2, \ldots, p_k$ | P(correct option chosen), $n = 11$ | P(correct option chosen), $n = 301$ |
|---|---|---|---|
| 2 | 0.6, 0.4 | 0.753 | 1.000 |
| 3 | 0.4, 0.35, 0.25 | 0.410 | 0.834 |
| 3 | 0.5, 0.3, 0.2 | 0.664 | 1.000 |
| 5 | 0.21, 0.2, 0.2, 0.2, 0.19 | 0.157 | 0.308 |
| 5 | 0.3, 0.2, 0.2, 0.2, 0.1 | 0.360 | 0.980 |

Table 4. Probability of the correct option being chosen (List and Goodin 2001).

We note from Table 4 that, when a group faces $k$ options with a probability just above $1/k$ for the correct choice and just below $1/k$ for the incorrect ones, the probability of the group converging on the correct decision increases far more slowly with $n$.
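The multinomial calculation can be sketched by enumerating every $k$-tuple of vote counts that sums to $n$. The sketch below assumes that a tie for first place counts as a failure for the correct option (List and Goodin's handling of ties may differ), takes option index 0 as the correct option, and is only practical for small $n$; the $n = 301$ column would need a more efficient method. For $k = 2$ it reduces to the binary CJT sum. Function names are hypothetical.

```python
from math import factorial, prod


def compositions(n: int, k: int):
    """Yield every k-tuple of non-negative vote counts summing to n."""
    if k == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in compositions(n - first, k - 1):
            yield (first,) + rest


def plurality_prob(n: int, p, i: int = 0) -> float:
    """Probability that option i receives strictly more votes than every other
    option when n independent voters each pick option j with probability p[j].
    Ties for first place are counted as failures (an assumption)."""
    total = 0.0
    for counts in compositions(n, len(p)):
        if all(counts[i] > c for j, c in enumerate(counts) if j != i):
            coeff = factorial(n) // prod(factorial(c) for c in counts)  # multinomial coefficient
            total += coeff * prod(pj**c for pj, c in zip(p, counts))
    return total


for probs in ([0.6, 0.4], [0.4, 0.35, 0.25], [0.5, 0.3, 0.2],
              [0.21, 0.2, 0.2, 0.2, 0.19], [0.3, 0.2, 0.2, 0.2, 0.1]):
    print(probs, round(plurality_prob(11, probs), 3))
```

Even for $k = 5$ and $n = 11$ there are only 1365 count vectors, so exhaustive enumeration is cheap at this size.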

Thus we have demonstrated the value of diversity in terms of the number of decision makers, decision competency and its variation, decision correlation, and the number of options. The mathematical relationships initiated here provide a basis for a computational theory to explain and predict hybrid enterprise governance settings.

Robust Machine Intelligence

The benefits of our proposed hybrid decision network over a human-only decision network ultimately rest on how effectively machine decision capabilities can be achieved, so we explain our approach towards this goal.

Machine capabilities do not need to be at human intelligence levels to be useful; however, the more otherwise uniquely human expertise that can be captured in software, the greater the ratio of machines to humans achievable across a wider range of situations.

In order to provide higher-level functions in automated awareness and resource management, machines need symbolic relational reasoning abilities (Scholz et al. 2012).

Traditional approaches to reasoning using mathematical logics are powerful but nonetheless limited. Few logics deal explicitly with uncertainty or inconsistency, resulting in a lack of real-world applications or, at best, severe brittleness. For example, Description Logics (proposed for the Semantic Web) that are presented with inconsistent information (e.g., a database that states both “X is true” and “X is false”) can prove that any sentence in their formal system is true (and that any sentence is false). It is for this reason that great care is taken in pre-processing ontologies to eliminate inconsistencies in such systems. In contrast, human reasoning is rife with inconsistencies. Furthermore, richly expressive logics are needed for any real-world application. For these reasons we identify the need for probabilistic logics (of at least first order) or fuzzy logics.

Clearly, it is not feasible for operators to supply all knowledge required by the machine, and especially not probability values. Further, as we require similar (but not identical) machine capabilities, local “adaptation” or learning is required. This supports the need for Machine Learning.

A coherent unification of mathematical logic (e.g., first-order logic (FOL) and higher-order logics), probability theory and machine learning was proposed by De Raedt and Kersting (2003).

One proposal for this unification is Markov Logic Networks (MLNs) (Richardson and Domingos, 2006), implemented in the Alchemy system. An MLN is a template for translating “weighted” FOL statements into a ground Markov Random Field (MRF), which may subsequently be used for inference, structure learning and parameter (weight) learning. In an MLN, weights define the degree of support for a logical sentence, thereby allowing “degrees” of inconsistency. MLNs have a high degree of expressiveness, and many computational tricks have been developed to help reduce computational complexity, but issues remain.

We have encountered knowledge representation limitations with MLNs. Weights in MLNs are a construct inherited from MRFs and do not translate trivially into probabilities. If human-provided formulas exist in the machine with associated probabilities (perhaps determined from prior experience), these probabilities do not remain fixed, and can change significantly in response to alteration of the ground network (e.g., just by adding new data). Jain et al. (2010) have proposed a framework to alleviate this problem, called Adaptive MLNs (AMLNs), implemented in ProbCog.
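To make the weight-versus-probability issue concrete: an MLN defines $P(X = x) = \frac{1}{Z}\exp\big(\sum_i w_i n_i(x)\big)$, where $n_i(x)$ is the number of true groundings of formula $i$ in world $x$ (Richardson and Domingos 2006). The toy sketch below grounds a single constant, performs exact inference by enumerating all worlds (it does not use the Alchemy or ProbCog APIs), and shows that the probability of the formula Smokes(A) ⇒ Cancer(A), with its weight fixed at 1.5, falls from about 0.931 to about 0.851 when a second, hypothetical formula ¬Cancer(A) with weight 2.0 is added to the network. The atoms, formulas and weights are illustrative only.

```python
from itertools import product
from math import exp


def mln_probability(atoms, formulas, query):
    """Exact inference in a tiny ground Markov logic network by enumerating worlds.
    Each world x gets unnormalised score exp(sum of weights of formulas true in x);
    with one grounding per formula, n_i(x) is simply 0 or 1."""
    z = 0.0
    numerator = 0.0
    for values in product((False, True), repeat=len(atoms)):
        world = dict(zip(atoms, values))
        score = exp(sum(w for w, f in formulas if f(world)))
        z += score
        if query(world):
            numerator += score
    return numerator / z


atoms = ["Smokes(A)", "Cancer(A)"]


def smoking_causes_cancer(world):
    # Smokes(A) => Cancer(A)
    return (not world["Smokes(A)"]) or world["Cancer(A)"]


def no_cancer(world):
    # Hypothetical extra formula: not Cancer(A)
    return not world["Cancer(A)"]


before = mln_probability(atoms, [(1.5, smoking_causes_cancer)], smoking_causes_cancer)
after = mln_probability(atoms, [(1.5, smoking_causes_cancer), (2.0, no_cancer)],
                        smoking_causes_cancer)
print(f"P(rule) with rule alone: {before:.3f}; after adding a formula: {after:.3f}")
```

With the rule alone its probability is $3e^{1.5}/(3e^{1.5}+1)$; once other formulas share atoms with it, no fixed weight pins that probability down.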

Structure learning remains the greatest challenge for MLNs. Indeed, state-of-the-art inductive logic programming performance possibly still rests with non-probabilistic systems such as Aleph, based on Muggleton (1995), the output of which can be used as input to an AMLN.

Learning extends beyond the constraints of the formal system under which inference is defined, to include the invention of new predicates and the ability to transfer knowledge across domains. Deep Learning research to date has focused on narrowly defined graphical models such as the restricted Boltzmann machine (Hinton 2007), and has not yet been generalized to probabilistic graphical models more broadly.

We believe that multi-layer hierarchical control systems, resembling the layered hidden variables of Deep Architectures, present a sound approach for future AI research. Perceptual Control Theory (PCT) (Powers, 1973) provides inspiration for such an approach, and is supported by a body of experimental evidence in psychology and neuroscience¹. A key principle of PCT is that “behaviour is the control of perception”, so autonomous agents seek to minimise the error between their desired and actual perceptions of the world, with behaviour being a consequence. Control elements at higher levels drive lower levels, with outputs that are not commands but “requests for a perception”. If non-achievement of control persists, or conflict exists between perceptions, reorganization is postulated, involving meta-cognition in the creation of new control elements. PCT postulates as many as 11 levels of control in the human brain, ranging from control of intensity to control of sensation, configuration, transition, sequence and so on.
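A two-level control hierarchy in the spirit of PCT can be sketched as follows. This is a minimal illustration with hypothetical proportional gains and a point-mass environment, not a model of the 11-level hierarchy: the upper level controls perceived position, and its output is a reference (a "request for a perception") for the lower level, which controls perceived velocity by acting on the environment. With these gains the continuous-time loop is critically damped, and both levels' errors decay toward zero.

```python
def simulate_pct(x_ref: float, steps: int = 1000, dt: float = 0.01,
                 k_pos: float = 1.0, k_vel: float = 4.0):
    """Two-level perceptual control hierarchy (hypothetical gains).
    Level 2 perceives position and outputs a reference velocity for level 1,
    i.e. a request for a perception rather than a motor command.
    Level 1 perceives velocity and outputs an acceleration to the environment."""
    x, v = 0.0, 0.0  # environment state: position and velocity
    for _ in range(steps):
        # Level 2: error between desired and perceived position -> velocity reference
        v_ref = k_pos * (x_ref - x)
        # Level 1: error between requested and perceived velocity -> action
        a = k_vel * (v_ref - v)
        # Environment: semi-implicit Euler integration of the point mass
        v += a * dt
        x += v * dt
    return x, v


x, v = simulate_pct(x_ref=5.0)
print(f"position {x:.4f}, velocity {v:.4f}")
```

Neither level ever issues a trajectory; the upper level only adjusts what the lower level should perceive, and the behaviour that moves the mass to the goal emerges from the lower loop's error correction.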

Unlike AI models to date, a PCT-based architecture is continuously “active”, trying to minimise perceptual errors (noting that this equates to maximization of utility) at all levels, not only for sensor-driven perceptions.

Conclusion

We have presented a framework for robust decision making at an enterprise level, and offered an approach for analysis and design. Ultimately, the scalable robustness benefits of this framework hinge on the success of our endeavors in machine intelligence. We have summarized recent approaches and signaled our future research direction. We propose that a Perceptual Control-based model, realized through massive-scale parallelism, may provide a viable approach to situated AI.

References

Lambert, D. A., and Scholz, J. B. 2007. Ubiquitous Command and Control. International Journal of Intelligent Decision Technologies 1(3): 35-40.

Lambert, D. A., and Scholz, J. B. 2005. A Dialectic for Network Centric Warfare. In Proceedings of the 10th International Command and Control Research and Technology Symposium (ICCRTS).

Boland, P. J. 1989. Majority Systems and the Condorcet Jury Theorem. The Statistician 38: 181-189.

Kanazawa, S. 1998. A Brief Note on a Further Refinement of the Condorcet Jury Theorem for Heterogeneous Groups. Mathematical Social Sciences 35: 69-73.

Shapley, L., and Grofman, B. 1984. Optimizing Group Judgmental Accuracy in the Presence of Interdependencies. Public Choice 43: 329-343. Martinus Nijhoff Publishing.

Ladha, K. K. 1995. Information Pooling through Majority-Rule Voting: Condorcet's Jury Theorem with Correlated Votes. Journal of Economic Behavior and Organization 26: 353-372. Elsevier Publishing.

List, C., and Goodin, R. E. 2001. Epistemic Democracy: Generalizing the Condorcet Jury Theorem. Journal of Political Philosophy 9(3): 277-306. Blackwell Publishing.

Scholz, J.; Lambert, D.; Gossink, D.; and Smith, G. 2012. A Blueprint for Command and Control: Automation and Interface. In 15th International Conference on Information Fusion (FUSION 2012), 211-217, 9-12 July.

De Raedt, L., and Kersting, K. 2003. Probabilistic Logic Learning. ACM SIGKDD Explorations Newsletter 5(1): 31-48.

Richardson, M., and Domingos, P. 2006. Markov Logic Networks. Machine Learning 62(1-2): 107-136.

Jain, D.; Bartels, A.; and Beetz, M. 2010. Adaptive Markov Logic Networks: Learning Statistical Relational Models with Dynamic Parameters. In Proceedings of the 19th European Conference on Artificial Intelligence, 937-942.

Muggleton, S. 1995. Inverse Entailment and Progol. New Generation Computing 13(3-4): 245-286.

Hinton, G. E. 2007. Learning Multiple Layers of Representation. Trends in Cognitive Sciences 11(10): 428-434.

Powers, W. T. 1973. Behavior: The Control of Perception. Chicago: Aldine.

¹ See http://www.pctweb.org/EmpiricalEvidencePCT.pdf