Using Common Sense Invariants in Belief Management for Autonomous Agents


Knowledge Representation and Reasoning in Robotics: Papers from the AAAI Spring Symposium


Gerald Steinbauer and Clemens Mühlbacher

Institute for Software Technology

Graz University of Technology

Graz, Austria

{cmuehlba,steinbauer}@ist.tugraz.at

∗ The work has been partly funded by the Austrian Science Fund (FWF) by grant P22690.

Copyright © 2014, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

Abstract

For an agent or robot it is essential to detect inconsistencies between its internal belief and the real world. To detect these inconsistencies we reuse background knowledge from a common sense knowledge base as invariants. This enables us to detect and diagnose inconsistencies that can be missed if no background knowledge is used. In order to reuse common sense knowledge from other sources, we introduce a mapping between the knowledge representation in the robot system and the common sense knowledge base. In this paper we specify the properties that have to be respected to achieve a proper mapping. Moreover, we present a preliminary implementation of such a mapping for the Cyc knowledge base and evaluate this implementation in a simple delivery robot domain.

Introduction

In the field of autonomous agents and robots, faults are not totally avoidable. Such faults can occur due to imperfect execution of actions or noisy sensor measurements, and they may prevent a robot from finishing its task successfully. Other robots or humans may also change the world in an unexpected way, causing the robot to fail in executing its task. A simple example is a robot that delivers a package from location A to B. The robot can fail to deliver this package if the pickup action fails due to a slippery gripper, or if a person snatches the package from the robot during delivery. These are simple examples of the non-deterministic interaction of a robot with its environment through acting and sensing. A dependable robot has to deal with such situations.

These faults in the interaction lead to a situation where the internal belief contradicts the real world. To overcome this problem it is necessary to detect and repair such inconsistencies. The robot bases its decision about which action to execute next to achieve a goal on this internal belief. Thus, if the internal belief contradicts the real world, the agent may perform a wrong or even dangerous action. Continuing the example above, let us assume the robot fails to pick up the package. After the action is performed, the robot believes that it picked up the package. If the real world is not sensed to detect that its internal belief is inconsistent, the robot will start to move to B without the package and will thus fail to finish its task. If instead the robot senses the world and detects the fault, it can perform another action to pick up the package again, and the goal can still be achieved.

In order to find these inconsistencies between the internal belief and the real world, sensor measurements are taken. But often we cannot directly observe the information we need to detect an inconsistency, so additional background knowledge is needed. This problem can be seen as a ramification problem of the sensor measurements.

Gspandl et al. presented a method (Gspandl et al. 2011) that uses background knowledge to detect faults. The background knowledge was represented as invariants that have to hold in every situation. An example of such an invariant is that an object cannot physically be at different places at the same time. In addition to the detection, a diagnosis is applied to discover the real course of actions. IndiGolog (Giacomo et al. 2009) was used to implement the high-level control.

IndiGolog is a high-level agent programming language. It makes it possible to mix imperative and declarative programming: some parts of the program can be specified imperatively, while other parts are executed using reasoning. Continuing the example from above, the procedure to deliver an object can be specified imperatively, while the selection of which delivery task to perform next can be specified declaratively.

IndiGolog is based on the situation calculus (McCarthy 1963) and generates a history of the actions that were performed. This history, together with information about the initial situation, is used to represent the internal belief of the robot. As mentioned above, the use of background knowledge is essential for detecting inconsistencies, but specifying this knowledge by hand is a tedious task. Therefore, we propose to reuse existing common sense knowledge bases such as Cyc as background knowledge. In this paper we present a method to map the situation of the world represented in IndiGolog to a common sense knowledge base. Thus the background knowledge of the common sense knowledge base can be used to detect violations without having to specify the background knowledge within IndiGolog. This idea is inspired by ontology mapping, which is used to construct larger knowledge bases out of smaller ones (see for example (Kalfoglou and Schorlemmer 2003) for a survey of ontology mapping).

The remainder of the paper is organized as follows. In the next section we present our belief management with invariants. In the subsequent section the theoretical aspects of the mapping are discussed. In the following section we explain how the mapping is implemented on top of the existing belief management. Afterwards we present preliminary experimental results of the implementation. Related research is discussed in the following section. We conclude the paper in the final section and point out some future work.

Belief Management with Invariants

As mentioned above, we use IndiGolog to represent and execute high-level programs. IndiGolog uses the situation calculus (McCarthy 1963), a second-order logical language, to reason about the actions that were performed. This enables IndiGolog to represent a situation $s$ of the world by an initial situation $S_0$ and a history of performed actions. To specify the initial situation we use the axioms $\mathcal{D}_{S_0}$. The evolution from one situation to another uses the special function symbol $do : \mathit{action} \times \mathit{situation} \rightarrow \mathit{situation}$¹.

To model the preconditions of actions we use Reiter's variant of the situation calculus (Reiter 2001). Thus we specify the precondition of an action α with the parameters $\vec{x}$ through $\mathit{Poss}(\alpha(\vec{x}), s) \equiv \Pi_\alpha(\vec{x}, s)$. All preconditions are collected in the axioms $\mathcal{D}_{ap}$. The essential parts for evolving the world are the effects of actions. These are modeled using the successor state axioms $\mathcal{D}_{ssa}$, which have the form

$$F(\vec{x}, do(\alpha, s)) \equiv \varphi^+(\alpha, \vec{x}, s) \lor F(\vec{x}, s) \land \neg\varphi^-(\alpha, \vec{x}, s).$$

Here $\varphi^+$ models the action effect that enables a fluent (sets the fluent to true), and $\varphi^-$ models the action effect that disables a fluent (sets the fluent to false). A fluent is a situation-dependent predicate. Using the foundational axioms $\Sigma$ and the unique name axioms for actions $\mathcal{D}_{una}$, we form the basic action theory $\mathcal{D} = \Sigma \cup \mathcal{D}_{ssa} \cup \mathcal{D}_{ap} \cup \mathcal{D}_{una} \cup \mathcal{D}_{S_0}$. For a more detailed explanation we refer the reader to (Reiter 2001).

The effects of sensor measurements are modeled in a similar way. The sensor measurements are modeled as a fluent ($\mathit{SF}$) that indicates which change occurs through a sensing action (De Giacomo and Levesque 1999). The fluent is defined as $\mathit{SF}(\alpha, s) \equiv \Psi_\alpha(s)$ and indicates that if action α is performed in situation $s$ the result is $\Psi_\alpha(s)$. Additionally, we use the helper predicate $\mathit{RealSense}(\alpha, s)$. This predicate has the value of the sensor for fluent $F$ connected via the sensing of α. For details on how this predicate is defined we refer the reader to (Ferrein 2008). Please note that $\mathit{SF}(\alpha, s) \equiv \mathit{RealSense}(\alpha, s)$ must hold in a consistent world. The set of all these sensing axioms is called $\{\mathit{Senses}\}$.

In the remainder of the paper we call actions without sensor results primitive actions, and actions with sensor results sensing actions. If an action α is a primitive action, then $\Psi_\alpha(s) \equiv \top$ and $\mathit{RealSense}(\alpha, s) \equiv \top$.

To deal with sensing we extend the basic action theory with the sensing axioms $\{\mathit{Senses}\}$ and the axioms $\mathcal{C}$ for refining programs as terms, giving $\mathcal{D}^* = \mathcal{D} \cup \mathcal{C} \cup \{\mathit{Senses}\}$. See (Giacomo et al. 2009) for a detailed discussion.

As mentioned above, we also use the history of performed actions to perform diagnosis. To trigger a diagnosis we need to check whether the world evolved as expected. The world evolved as expected if the internal belief together with the sensor readings is consistent. Definition 1 states the consistency of a history. Please note that we use the terms situation and history interchangeably to refer to a sequence of actions executed starting in a particular situation.

Definition 1. A history σ is consistent iff $\mathcal{D}^* \models \mathit{Cons}(\sigma)$ with $\mathit{Cons}(\cdot)$ inductively defined as:

1. $\mathit{Cons}(S_0) \doteq \mathit{Invaria}(S_0)$
2. $\mathit{Cons}(do(\alpha, \bar{\delta})) \doteq \mathit{Cons}(\bar{\delta}) \land \mathit{Invaria}(do(\alpha, \bar{\delta})) \land \big[(\mathit{SF}(\alpha, \bar{\delta}) \land \mathit{RealSense}(\alpha, do(\alpha, \bar{\delta}))) \lor (\neg \mathit{SF}(\alpha, \bar{\delta}) \land \neg \mathit{RealSense}(\alpha, do(\alpha, \bar{\delta})))\big]$

where $\mathit{Invaria}(\bar{\delta})$ represents the background knowledge (invariants) as a set of conditions that have to hold in all situations $\bar{\delta}$.
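To make Definition 1 concrete, the following Python sketch evaluates Cons(σ) for a small ground domain by progressing a closed-world state along the history with successor state axioms of the form given above. This is not how IndiGolog evaluates fluents (IndiGolog regresses over the action history), and the state encoding, the `Action` fields, and the convention that a sensing action stores its reading as a fluent are simplifying assumptions of ours. The two invariants are hypothetical, loosely modeled on the delivery example: an object is at most at one place, and the robot cannot hold an object while its gripper sensor reports that it carries nothing.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, Optional, Tuple

Fluent = Tuple[object, ...]
State = FrozenSet[Fluent]              # ground fluents that are true (closed world)
Invariant = Callable[[State], bool]

@dataclass(frozen=True)
class Action:
    name: str
    add: FrozenSet[Fluent] = frozenset()      # phi+: fluents the action makes true
    delete: FrozenSet[Fluent] = frozenset()   # phi-: fluents the action makes false
    reading: Optional[Fluent] = None          # sensing only: fluent that stores the reading
    real_sense: Optional[bool] = None         # sensing only: RealSense, the sensor's value
    predict: Optional[Callable[[State], bool]] = None  # sensing only: SF, the expected value

def progress(state: State, a: Action) -> State:
    """F(do(a, s)) holds iff phi+ or (F(s) and not phi-); a new reading replaces older ones."""
    nxt = set(a.add) | (set(state) - set(a.delete))
    if a.reading is not None:
        n = len(a.reading)
        nxt = {f for f in nxt if f[:n] != a.reading}
        nxt.add(a.reading + (a.real_sense,))
    return frozenset(nxt)

def cons(s0: State, history: Iterable[Action], invariants: Iterable[Invariant]) -> bool:
    """Cons(sigma) of Definition 1 for the history do([a1, ..., an], S0)."""
    invariants = list(invariants)
    state = s0
    if not all(inv(state) for inv in invariants):      # Cons(S0) = Invaria(S0)
        return False
    for a in history:
        if a.reading is None:
            sf = real = True                            # primitive: Psi = RealSense = T
        else:
            real = bool(a.real_sense)
            # Assumption: without a prediction, SF is taken to agree with the sensor.
            sf = real if a.predict is None else a.predict(state)
        state = progress(state, a)                      # do(a, delta)
        if not all(inv(state) for inv in invariants):  # Invaria(do(a, delta))
            return False
        if sf != real:                                  # SF(a, delta) differs from RealSense
            return False
    return True

def one_place(state: State) -> bool:
    """Hypothetical invariant: every object is at no more than one location."""
    where = {}
    for f in state:
        if f[0] == "isAt" and where.setdefault(f[1], f[2]) != f[2]:
            return False
    return True

def no_hold_when_empty(state: State) -> bool:
    """Hypothetical invariant: no object is held while the gripper reads 'empty'."""
    holds = any(f[0] == "holding" for f in state)
    return not (holds and ("carryReading", False) in state)

INVARIANTS = [one_place, no_hold_when_empty]
s0 = frozenset({("isAt", "pkg", "A")})
pick = Action("pick", add=frozenset({("holding", "pkg")}), delete=frozenset({("isAt", "pkg", "A")}))
sense_empty = Action("senseCarry", reading=("carryReading",), real_sense=False)
sense_full = Action("senseCarry", reading=("carryReading",), real_sense=True)

print(cons(s0, [pick, sense_full], INVARIANTS))   # True
print(cons(s0, [pick, sense_empty], INVARIANTS))  # False: invariant violated, no obvious fault
```

The second history is the case the paper is concerned with: the sensor reading alone does not contradict a direct prediction, and only the background knowledge reveals that the believed pick-up cannot have succeeded.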

To perform a diagnosis we follow the basic approach of (Iwan 2002). The goal is to find an alternative action history that explains the inconsistency. For this purpose special predicates for action variations ($\mathit{Varia}$) and exogenous action insertions ($\mathit{Inser}$) are used. The variations are defined through $\mathit{Varia}(\alpha, A(\vec{x}), \sigma) \equiv \Theta_A(\alpha, \vec{x}, \sigma)$, where $\Theta_A$ is a condition such that the action $A$ is a variation of the action α in the situation σ. These variations are used to denote the different possible outcomes of an action². The insertions are defined similarly as $\mathit{Inser}(\alpha, \sigma) \equiv \Theta(\alpha, \sigma)$, where Θ is a condition such that some action α is a valid insertion into the action history σ. Insertions are used to model exogenous events that occur during the execution.

To make it possible to find such a diagnosis in practice, we make several assumptions. Assumption 1 states that the primitive actions are modeled correctly. This is a reasonable assumption, as a correctly executed action should never contradict an invariant.

Assumption 1. A primitive action α never contradicts an invariant if executed in a consistent situation $\bar{\delta}$:

$$\mathcal{D}^* \models \forall \bar{\delta}.\, \mathit{Cons}(\bar{\delta}) \Rightarrow \mathit{Invaria}(do(\alpha, \bar{\delta}))$$

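From the definition of $\Psi_\alpha(s)$ and $\mathit{RealSense}(\alpha, s)$ for primitive actions and Assumption 1 we can directly state Lemma 1.

Lemma 1. The situation $do(\alpha, \bar{\delta})$ resulting from the execution of a primitive action α in a consistent situation $\bar{\delta}$ is always consistent:

$$\mathcal{D}^* \models \forall \bar{\delta}.\, \mathit{prim\_act}(\alpha) \Rightarrow (\mathit{Cons}(\bar{\delta}) \Rightarrow \mathit{Cons}(do(\alpha, \bar{\delta})))$$

where the predicate $\mathit{prim\_act}(\alpha)$ holds iff α is a primitive action. The result follows from Definition 1: for a primitive action both $\mathit{SF}$ (i.e., $\Psi_\alpha$) and $\mathit{RealSense}$ are $\top$, so the sensing condition is satisfied, and Assumption 1 guarantees that the invariant still holds in $do(\alpha, \bar{\delta})$. Hence a primitive action never introduces an inconsistency into a consistent situation.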
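The diagnosis described above looks for an alternative history, built from action variations (Varia) and exogenous insertions (Inser), that is consistent with the observations. Assuming finitely many variations and insertions and a bound on the number of changes, one simple way to realize such a search is a breadth-first search over edited histories, so that histories differing from the believed one in fewer places are tried first. The Python sketch below is illustrative only: the string encoding of actions, the bound `max_changes`, and the `consistent` callback are our own assumptions, and the sketch ignores preconditions and any probability-based ranking of diagnoses.

```python
from collections import deque
from typing import Callable, Dict, List, Optional, Sequence, Tuple

History = Tuple[str, ...]

def diagnose(history: Sequence[str],
             variations: Dict[str, List[str]],
             insertions: List[str],
             consistent: Callable[[History], bool],
             max_changes: int = 2) -> Optional[History]:
    """Breadth-first search for a consistent alternative history.

    Each step either replaces an action by one of its variations (Varia)
    or inserts an exogenous event (Inser) at some position, so histories
    with fewer changes are found first."""
    start = tuple(history)
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        h, changes = queue.popleft()
        if consistent(h):
            return h
        if changes == max_changes:
            continue
        successors = []
        for i, a in enumerate(h):                  # action variations
            for alt in variations.get(a, []):
                successors.append(h[:i] + (alt,) + h[i + 1:])
        for i in range(len(h) + 1):                # exogenous insertions
            for e in insertions:
                successors.append(h[:i] + (e,) + h[i:])
        for nxt in successors:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, changes + 1))
    return None

# Hypothetical delivery example: the gripper sensor reported "empty".
VARIATIONS = {"pick": ["pick_fails", "pick_wrong"], "goto_B": ["goto_wrong"]}
INSERTIONS = ["snatch"]

def carrying_consistent(h: History) -> bool:
    """A history explains 'sense_empty' only if the robot carries nothing at that point."""
    carrying = False
    for a in h:
        if a in ("pick", "pick_wrong"):
            carrying = True
        elif a in ("pick_fails", "snatch"):
            carrying = False
        elif a == "sense_empty" and carrying:
            return False
    return True

print(diagnose(("pick", "goto_B", "sense_empty"), VARIATIONS, INSERTIONS, carrying_consistent))
# ('pick_fails', 'goto_B', 'sense_empty')
```

Because the candidates are explored layer by layer, a single failed pick-up is reported before explanations that need an additional exogenous event; a real diagnosis engine would typically also rank candidates by the likelihood of the faults involved.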

1

We will use $do([\alpha_1, \ldots, \alpha_n], \sigma)$ as an abbreviation for the term $do(\alpha_n, do(\alpha_{n-1}, \cdots do(\alpha_1, \sigma) \cdots))$.

2

The variations of actions can be considered as the fault modes of an action.


Furthermore, according to Definition 1 and a consistent past, we can state Lemma 2.

Lemma 2. A sensing action α is inconsistent with a consistent situation $\bar{\delta}$ iff

$$\mathit{SF}(\alpha, \bar{\delta}) \not\equiv \mathit{RealSense}(\alpha, do(\alpha, \bar{\delta})) \quad\text{or}\quad \mathcal{D}^* \not\models \mathit{Invaria}(do(\alpha, \bar{\delta})).$$

We call $\mathit{SF}(\alpha, \bar{\delta}) \not\equiv \mathit{RealSense}(\alpha, do(\alpha, \bar{\delta}))$ an obvious fault or obvious contradiction. The next assumption states that the initial situation $S_0$ is modeled correctly.

Assumption 2. The initial situation $S_0$ never contradicts the invariant:

$$\mathcal{D}_{S_0} \models \mathit{Invaria}(S_0)$$

This assumption is necessary as we are not able to repair situation $S_0$ by changing the history. The foundational axioms for situations (see (Reiter 2001)) state that there is no situation prior to $S_0$ ($\neg\exists s\,(s \sqsubset S_0)$).

With Assumption 2 and Lemma 1 we can state Theorem 1.

Theorem 1. A history $\bar{\delta} = do([a_1, \ldots, a_n], S_0)$ can only become inconsistent if it contains a sensing action:

$$\mathcal{D}^* \models \neg \mathit{Cons}(\bar{\delta}) \Rightarrow \exists a_i \in [a_1, \ldots, a_n].\, \mathit{sens\_act}(a_i)$$

where the predicate $\mathit{sens\_act}(\alpha)$ holds iff α is a sensing action.

Proof. By Assumption 2 we know that $\mathit{Cons}(S_0)$ is true. By Lemma 1 we know that consistency does not change if a primitive action is performed. Thus $\mathit{Cons}(\bar{\delta})$ remains true if $\bar{\delta}$ comprises only primitive actions, so $\mathit{Cons}(\bar{\delta})$ can only become false through a sensing action.

By Lemma 2 and Theorem 1 we can state a condition for an inconsistent situation.

Lemma 3. A situation $\bar{\delta} = do([a_1, \ldots, a_n], S_0)$ is inconsistent iff it contains a sensing action for which either the sensing result contradicts the belief or the invariant is violated:

$$\mathcal{D}^* \models \neg \mathit{Cons}(\bar{\delta}) \Leftrightarrow \exists a_i \in [a_1, \ldots, a_n].\, \mathit{sens\_act}(a_i) \land \big[\mathit{SF}(a_i, \bar{\delta}_{i-1}) \not\equiv \mathit{RealSense}(a_i, \bar{\delta}_i) \lor \neg \mathit{Invaria}(\bar{\delta}_i)\big]$$

where $\bar{\delta}_i = do([a_1, \ldots, a_i], S_0)$ and $\bar{\delta}_0 = S_0$.

Furthermore, we assume that only actions change the real world. Thus, if two situations differ, an action has been performed. Finally, we require that all possible actions, their alternative outcomes, and all possible exogenous events are modeled. Moreover, we require that the number of actions and their alternatives, as well as the number of possible occurrences of exogenous events, is finite. This leads to the conclusion that once an inconsistency has occurred, we are able to find a consistent alternative action sequence through a complete search.

To illustrate these assumptions, let us reconsider the example from the introduction. We want to model the task of delivering a package from location A to B. We model the task using a pick, a place, and a movement action. During the movement we have to consider that the object the robot is carrying also moves. As a possible variation of an action we model that the pick action for a package can fail. The result of this failure is that the object is not carried by the robot. We do not need to model why the action failed: it does not matter whether the gripper was slippery or the software module calculated a wrong grasping point. The only relevant point is that we know that the robot failed to pick up the object. With this knowledge the robot is able to diagnose a failed grasping action. Please note that information about the root cause of a fault can be used to improve the diagnosis if causal relations are modeled too.

Use of Common Sense Knowledge as Background Model

The basic idea is to map the actual belief of the agent represented in the situation calculus to the representation of the common sense knowledge base (KB) and to check the consistency of the mapped belief with the background knowledge in this representation. For this we add the mapped belief to KB and check whether the extended knowledge base is still consistent. This differs from other mappings, which map the situations as well as the domain theory; such a mapping was proposed for example in (Thielscher 1999). Because we are only interested in a consistency check of a single situation, mapping only the situation is sufficient. The mapping from one representation to another is defined in Definition 2. Please note that the representation in the situation calculus is denoted by $L_{SC}$, and $L_{KB}$ denotes the representation of the knowledge base we map to.

Definition 2. The function $M_{L_{SC}, L_{KB}} : L_{SC} \times S \rightarrow L_{KB}$ represents the transformation of a situation and an extended basic action theory to a theory in $L_{KB}$.

The mapping has to fulfill some properties to be proper. The first property states that the constants used have the same semantics regardless of whether they are used in the situation calculus or in KB. The second property is that the mapping has to maintain consistency with respect to the invariant. This property is similar to the query property (Arenas et al. 2013), which is used to specify knowledge base mappings for OWL.

To specify the second property we use $I$ to denote one invariant of the background knowledge we want to use. This could be, for example, the knowledge that an object is only in one place in one situation. Additionally, we use the term implementation of $I$ to refer to the representation of an invariant. An invariant $I$ can be represented in the situation calculus as $I_{SC}(I)$ or in KB as $I_{KB}(I)$. It does not matter how this implementation is done; it is only important that the corresponding reasoner interprets the implementation in such a way that it represents the invariant $I$. The set of all these implementations of invariants in the background knowledge is denoted as $I_{SC}(BK)$ and $I_{KB}(BK)$, respectively. Please note that in our case $I_{SC}(BK)$ is equivalent to $\mathit{Invaria}(\bar{\delta})$.

The property that has to hold for the mapping function $M_{L_{SC}, L_{KB}}$ in all situations $S$ is defined in Definition 3.

Definition 3. The mapping $M_{L_{SC}, L_{KB}}$ is proper if the transferred situation $s$ together with the background model $I_{KB}(BK)$ leads to a contradiction if and only if the situation $s$ together with the original implementation of the background knowledge leads to a contradiction:

$$I_{KB}(BK) \cup M_{L_{SC}, L_{KB}}(\mathcal{D}^*, s) \models_{L_{KB}} \bot \;\Leftrightarrow\; \mathcal{D}^* \cup I_{SC}(BK) \models_{L_{SC}} \bot$$

This definition states that it does not matter whether we consider the situation and the invariant implemented in the situation calculus, or the mapped situation and the invariant implemented in KB: both lead to the same result regarding consistency. With the help of Lemma 2 and Definition 3 we can state Lemma 4.

Lemma 4. A sensing action α that does not lead to an obvious fault is inconsistent if the following holds for $s = do(\alpha, \bar{\delta})$:

$$I_{KB}(BK) \cup M_{L_{SC}, L_{KB}}(\mathcal{D}^*, s) \models_{L_{KB}} \bot.$$

In order to avoid problems caused by different interpretations of undefined or unknown fluents, we require that the mapping maps all fluents and constants. This is very restrictive, but it is necessary if we map from a representation that uses the closed world assumption, like IndiGolog, to a representation with the open world assumption. Moreover, we assume that the number of fluents and constants is finite³.

With a mapping that respects the properties above we are able to check for inconsistencies in a different representation.

Implementation Using Cyc

To map the internal belief it is sufficient to map the fluents in a situation $s$. We now define a proper mapping in more detail for the common sense knowledge base Cyc. We assume for now $n$ constants $c_1, \ldots, c_n$ and $m$ relational fluents $F_1(\bar{x}_1, s), \ldots, F_m(\bar{x}_m, s)$ used in the extended basic action theory, where $\bar{x}_i$ represents the set of variables of the $i$-th fluent. In order to define the mapping we define a set of transformation functions for constants and fluents.

Definition 4. For constant symbols $\{c_i\}$ we define a transformation function $R_C : c_i \in L_{SC} \rightarrow L_{KB}$.

Definition 5. For a positive fluent $F_i$ we define a transformation function $R_{F_i} : T^{|\bar{x}_i|} \rightarrow L_{KB}$. For a negative fluent $F_i$ we define a transformation function $\bar{R}_{F_i} : T^{|\bar{x}_i|} \rightarrow L_{KB}$, where $T$ denotes a term.

The mapping $M_{L_{SC}, L_{KB}}(\mathcal{D}^*, s)$ can now be defined as follows:

Definition 6. The mapping $M_{L_{SC}, L_{KB}}(\mathcal{D}^*, s)$ consists of a set of transformed fluents and constants such that:

$$M_{L_{SC}, L_{KB}}(\mathcal{D}^*, s) \equiv \bigcup_{i, \bar{x}_i \,:\, (\mathcal{D}^*, s) \models_{L_{SC}} F_i(\bar{x}_i, s)} \Big( R_{F_i}(\bar{x}_i) \cup \bigcup_{x \in \bar{x}_i} R_C(x) \Big) \;\cup \bigcup_{i, \bar{x}_i \,:\, (\mathcal{D}^*, s) \models_{L_{SC}} \neg F_i(\bar{x}_i, s)} \Big( \bar{R}_{F_i}(\bar{x}_i) \cup \bigcup_{x \in \bar{x}_i} R_C(x) \Big)$$

We implemented such a mapping from the situation calculus to Cyc. Cyc (Reed, Lenat, and others 2002) is a common sense knowledge base that also integrates many other knowledge bases. Cyc uses higher order logic to represent its predicates and their properties. An important property is partial uniqueness: if all arguments of a predicate are specified except one constant, this constant is uniquely defined. For instance, such predicates can be used to model that an object is only at one place at a time.

Besides explaining the mapping to Cyc, it is important to mention that we only consider steady invariants (Thielscher 1998). This means we are not interested in effects that will be caused by sensor readings in the future and are enabled by the current situation. These effects are called stabilizing invariants (Thielscher 1998). If we also considered stabilizing invariants, the Cyc reasoner would also have to reason about situations and their evolution. Additionally, we would have to properly specify what consistency means in the case of multiple possible solutions due to a stabilizing invariant. We avoid these problems by using only steady invariants.

The mapping from the situation calculus to Cyc maps three fluents. The transformation functions of the mapping are shown in Listings 1 and 2⁴. The first fluent, $isAt(x, y, s)$, represents the position $x$ of an object $y$. The second fluent, $holding(x, s)$, represents which object $x$ the robot is holding. The last fluent, $carryAnything(s)$, specifies whether the robot is holding something; it is used to interpret the result of a sensing action. The background knowledge we used in the situation calculus consists of two simple statements. The first statement specifies that every object is only at one place in one situation. The second statement specifies the relation between holding a specific object and sensing that an object is held.

Listing 1: Mapping of the Fluents

$R_{F_{isAt}}(x, y) \rightarrow$
    (isa storedAt{x} AttachmentEvent)
    (objectAdheredTo storedAt{x} {y})
    (objectAttached storedAt{x} {x})
$\bar{R}_{F_{isAt}}(x, y) \rightarrow$
    (isa storedAt{x} AttachmentEvent)
    (not (objectAdheredTo storedAt{x} {y}))
    (objectAttached storedAt{x} {x})
$R_{F_{holding}}(x) \rightarrow$
    (isa robotsHand HoldingAnObject)
$\bar{R}_{F_{holding}}(x) \rightarrow$
    (not (isa robotsHand HoldingAnObject))
$R_{F_{carryAnything}} \rightarrow$
    (isa robotsHand HoldingAnObject)
$\bar{R}_{F_{carryAnything}} \rightarrow$
    (not (isa robotsHand HoldingAnObject))

Listing 2: Mapping of the Constants

$R_C(x) \rightarrow$
    (isa {x} PartiallyTangible)

Please note that in the case of the situation calculus invariants we coded this knowledge by hand. In the case of the Cyc invariants, such constraints are already coded in. For instance, the class AttachmentEvent used for the predicate storedAt{x} allows an object to be attached to only one location. If an object is attached to several locations, a violation is reported by Cyc. The class PartiallyTangible represents physical entities.

3

The fluents are predicates over finitely many first-order terms. Thus the mapping can be done in finite time if the number of constants is finite.

4

{x} and {y} represent the unique string representation of the related constant in Cyc.

Experimental Results

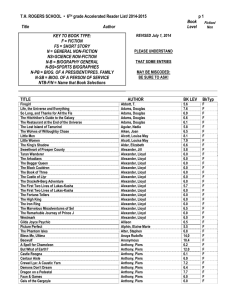

We performed three experiments with the implementation presented above. In all experiments a simple delivery task had to be performed: the robot had to deliver objects from one room to another. To perform this task the agent was able to execute three actions: (1) pick up an object, (2) go to a destination, and (3) put down an object. Each of these actions can fail. A pick-up action can fail in such a way that the intended object is not picked up: either the action fails completely or it picks up a wrong object from the same room. The go-to action can end up in a wrong room. The put-down action can fail in such a way that the robot is still holding the object. In addition to these internal faults, an exogenous event can happen that teleports an object into another room. The probabilities of the different action variations and of the exogenous event are given in Table 1.

We use the Prolog implementation of IndiGolog (Giacomo et al. 2009) to interpret the high-level control program. This implementation offers the possibility to check whether a fluent is true in a situation. It uses the action history, the initial situation, and regression to answer whether a fluent holds (see (Reiter 2001)). This makes it possible to take a snapshot of all fluents for the current situation. This snapshot can be transferred through the mapping to Cyc.
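As a rough illustration of the snapshot-and-map step, the following Python sketch applies the transformation functions of Listings 1 and 2 to a closed-world snapshot of the isAt fluent (Definition 6 restricted to one fluent). It then compares a stand-in for the Cyc query with the hand-coded one-place invariant over every possible snapshot of a tiny domain, which is the equivalence Definition 3 asks for. All function names are our own, and `toy_kb_consistent` is not Cyc: it only imitates the uniqueness behaviour the text attributes to AttachmentEvent. We read isAt(x, y) with x as the object and y as the location, following the argument positions in Listing 1.

```python
from itertools import chain, combinations, product
from typing import Dict, Iterable, List, Set, Tuple

def r_c(x: str) -> List[str]:
    """R_C (Listing 2): every constant is asserted to be PartiallyTangible."""
    return [f"(isa {x} PartiallyTangible)"]

def r_isat(x: str, y: str, positive: bool) -> List[str]:
    """R_F_isAt or its negated form (Listing 1); storedAt{x} names the attachment event."""
    adhered = f"(objectAdheredTo storedAt{x} {y})"
    return [f"(isa storedAt{x} AttachmentEvent)",
            adhered if positive else f"(not {adhered})",
            f"(objectAttached storedAt{x} {x})"]

def map_snapshot(objects: Iterable[str], locations: Iterable[str],
                 true_isat: Set[Tuple[str, str]]) -> List[str]:
    """Definition 6 restricted to isAt: every ground instance is mapped,
    positively if it holds in the snapshot and negatively otherwise (closed world)."""
    out: Set[str] = set()
    for x, y in product(objects, locations):
        out.update(r_isat(x, y, (x, y) in true_isat))
        out.update(r_c(x) + r_c(y))
    return sorted(out)

def toy_kb_consistent(assertions: Iterable[str]) -> bool:
    """Hypothetical stand-in for the Cyc query: an attachment event may adhere
    to at most one location. Negated assertions impose no constraint here."""
    targets: Dict[str, Set[str]] = {}
    for a in assertions:
        parts = a.strip("()").split()
        if parts[0] == "objectAdheredTo":
            targets.setdefault(parts[1], set()).add(parts[2])
    return all(len(t) <= 1 for t in targets.values())

def sc_consistent(true_isat: Set[Tuple[str, str]]) -> bool:
    """Hand-coded situation calculus invariant: every object is at one place at most."""
    locs: Dict[str, Set[str]] = {}
    for x, y in true_isat:
        locs.setdefault(x, set()).add(y)
    return all(len(v) <= 1 for v in locs.values())

def powerset(items):
    items = list(items)
    return chain.from_iterable(combinations(items, k) for k in range(len(items) + 1))

# Definition 3 on a tiny domain: both sides must agree for every possible snapshot.
objects, locations = ["pkg", "box"], ["roomA", "roomB"]
agree = all(
    toy_kb_consistent(map_snapshot(objects, locations, set(s))) == sc_consistent(set(s))
    for s in powerset(product(objects, locations)))
print(agree)  # True
```

Mapping the false ground instances as negated assertions mirrors the requirement above that all fluents and constants be mapped, so that the open-world knowledge base does not treat a missing fact as unknown.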

As discussed above, we use the mapping to check whether an action history is consistent with the sensor readings. To check whether a situation is consistent we use Algorithm 1.

Algorithm 1: checkConsistency

input:  α … action to perform in the situation,
        δ̄ … situation before executing action α
output: true iff the situation is consistent

1   if sens_act(α) then
2       if SF(α, δ̄) ≢ RealSense(α, do(α, δ̄)) then
3           return false
4       end
5       δ̄′ = do(α, δ̄)
6       M = M_{L_SC, L_KB}(D∗, δ̄′)
7       if ¬checkCycConsistency(M) then
8           return false
9       end
10  end
11  return true

| Fault                     | Probability |
|---------------------------|-------------|
| go to wrong location      | 0.05        |
| pick up wrong object      | 0.2         |
| pick up fails             | 0.2         |
| put down fails            | 0.3         |
| random movement of object | 0.02        |

Table 1: Probabilities of the faults of actions and of the exogenous event.

The experiments differ in the size of the domain. Each experiment⁵ ran for 20 different object settings (different initial locations and goals for the objects). Each object setting was performed 5 times with a different seed for the random number generator. This ensures that neither the object setting nor the triggered faults favor a particular approach. For each test run a timeout of 12 minutes was used.

The experiments used three different high-level control programs. The first program (HBD 0) used no background knowledge, only direct detection of contradictions. The second program (HBD 1) used background knowledge hand-coded in IndiGolog. The third program (HBD 2) used the proposed mapping and the background knowledge in Cyc.

To implement the programs we used the Teleo-Reactive (TR) approach (Nilsson 1994). The TR program ensures that after a change of the belief due to a diagnosis, the program can continue towards its goal. To perform the experiments with the different programs we used a simulated environment based on ROS. The simulated environment evolves the world according to the performed actions. The effects are simulated according to the action requested by the high-level program, such that the non-deterministic outcomes are randomly selected to reflect the given fault probabilities. Exogenous movements are randomly performed before an action is executed, according to their probabilities. Similar experiments with simulated and real robots and the belief management system were presented in (Gspandl et al. 2012).
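How such a simulator can pick outcomes is shown by the small Python sampler below, which draws an outcome for a requested action from the fault probabilities of Table 1 and decides whether an exogenous object movement happens before the action. It is a sketch under our own assumptions, not the ROS-based simulator used in the experiments: the action and outcome names are hypothetical, and the two pick-up faults are treated as mutually exclusive, so a pick-up succeeds with probability 0.6.

```python
import random

# Fault probabilities from Table 1; the remaining probability mass is the nominal outcome.
# Assumption: faults of the same action are mutually exclusive.
FAULTS = {
    "goto": [("go_to_wrong_location", 0.05)],
    "pickup": [("pick_up_wrong_object", 0.2), ("pick_up_fails", 0.2)],
    "putdown": [("put_down_fails", 0.3)],
}
EXOGENOUS_MOVE_P = 0.02  # "random movement of object"

def sample_outcome(action: str, rng: random.Random) -> str:
    """Return the outcome the simulator executes when `action` is requested."""
    u = rng.random()
    cumulative = 0.0
    for fault, p in FAULTS.get(action, []):
        cumulative += p
        if u < cumulative:
            return fault
    return "nominal"

def maybe_exogenous_move(rng: random.Random) -> bool:
    """Decide whether an object is teleported before the next action is executed."""
    return rng.random() < EXOGENOUS_MOVE_P

rng = random.Random(42)  # a fixed seed, as each object setting was rerun with chosen seeds
print([sample_outcome("pickup", rng) for _ in range(5)])
```

Seeding the generator per run, as in the experimental protocol, makes a given combination of object setting and faults reproducible across the three control programs.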

First, Algorithm 1 checks whether a sensor reading is directly inconsistent with the situation. This is done to find obvious faults. For example, we expect that an object is at location A, and we perform an object detection at A with the result that the object is not there. The conclusion is simply that the sensor reading and the internal belief contradict each other. Next, the algorithm uses the mapping to check whether the invariant is violated: it maps the current situation to Cyc and checks its consistency within the Cyc common sense knowledge base.

By Lemma 3 it is sufficient to check only the sensing actions. The algorithm is executed in each iteration of the interpretation of the high-level control program. Thus, when we check $\mathcal{D}^* \models \mathit{Cons}(do(\alpha, \bar{\delta}))$, we have already checked $\mathcal{D}^* \models \mathit{Cons}(\bar{\delta})$. Using this and Lemma 2, we check in Line 2 whether the sensing results lead to an obvious fault. Otherwise we use the proper mapping, and therefore Lemma 4 holds. Hence we can conclude that the algorithm is correct.
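Algorithm 1 translates almost line by line into code. In the Python sketch below, the belief-side operations (`is_sensing`, `sf`, `real_sense`, `do`), the mapping, and the external knowledge base query are passed in as callables; these parameter names are hypothetical placeholders for the IndiGolog and Cyc interfaces, which we do not reproduce. As argued above, the function assumes that δ̄ itself has already been checked in an earlier iteration.

```python
from typing import Callable, Hashable, Iterable

def check_consistency(alpha: Hashable, delta: Hashable,
                      is_sensing: Callable[[Hashable], bool],
                      sf: Callable[[Hashable, Hashable], bool],
                      real_sense: Callable[[Hashable, Hashable], bool],
                      do: Callable[[Hashable, Hashable], Hashable],
                      mapping: Callable[[Hashable], Iterable[str]],
                      kb_consistent: Callable[[Iterable[str]], bool]) -> bool:
    """Return True iff do(alpha, delta) is consistent, given that delta is (Algorithm 1)."""
    if is_sensing(alpha):
        successor = do(alpha, delta)
        if sf(alpha, delta) != real_sense(alpha, successor):  # line 2: obvious fault
            return False
        if not kb_consistent(mapping(successor)):             # lines 5-7: invariant check in KB
            return False
    return True  # primitive actions never introduce an inconsistency (Lemma 1)

# Toy usage; a situation is the tuple of executed actions (hypothetical encoding).
READINGS = {"senseCarry": False}          # the real gripper sensor reports 'empty'
args = dict(
    is_sensing=lambda a: a.startswith("sense"),
    sf=lambda a, s: "pick" in s,          # belief: carrying iff a pick was executed
    real_sense=lambda a, s: READINGS[a],
    do=lambda a, s: s + (a,),
    mapping=lambda s: [f"(executed {a})" for a in s],
    kb_consistent=lambda assertions: True,
)
print(check_consistency("goto", ("pick",), **args))        # True: primitive action
print(check_consistency("senseCarry", ("pick",), **args))  # False: obvious fault
```

Computing the successor before the first check is harmless here, because RealSense in Line 2 already refers to do(α, δ̄).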

5

The tests were performed on an Intel quad-core CPU with 2.40 GHz and 4 GB of RAM running 32-bit Ubuntu 12.04. ROS Groovy was used for the evaluation.


The tasks were performed in a simulated building with 20 and 29 rooms, respectively. Different numbers of rooms and objects were used to evaluate the scalability of the approach. The number of successfully delivered objects, the time to deliver the objects, and whether a test run finished in time were recorded.

In the first experiment three objects in a setting of 20 rooms had to be delivered. The success rate of delivering the requested objects over the whole experiment is shown in Table 2, together with the percentage of runs that resulted in a timeout (TIR) and the average run-time for one object setting. For the reported run-times we omitted the runs in which the program ran into a timeout.
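The three reported measures can be computed from per-run records as in the Python sketch below. Two details are assumptions on our side, since the text does not spell them out: the success rate is taken as delivered over requested objects across all runs (including runs that timed out), and "stdv." is read as the sample standard deviation. As stated above, runs that hit the 12-minute timeout are excluded from the run-time statistics.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import List, Optional, Tuple

@dataclass
class Run:
    delivered: int      # objects delivered successfully
    requested: int      # objects that had to be delivered
    timed_out: bool     # whether the run hit the timeout
    runtime_s: float    # wall-clock time of the run in seconds

def summarize(runs: List[Run]) -> Tuple[float, float, Optional[float], Optional[float]]:
    """Return (success rate in %, TIR in %, mean run-time, stdv. run-time)."""
    success = 100.0 * sum(r.delivered for r in runs) / sum(r.requested for r in runs)
    tir = 100.0 * sum(r.timed_out for r in runs) / len(runs)
    times = [r.runtime_s for r in runs if not r.timed_out]   # timeouts are omitted
    avg = mean(times) if times else None                     # no value if every run timed out
    sd = stdev(times) if len(times) > 1 else None
    return success, tir, avg, sd

runs = [Run(3, 3, False, 4.0), Run(2, 3, False, 6.0), Run(0, 3, True, 720.0)]
print(summarize(runs))
```

When every run of a program times out, no run-time statistics exist, which is the case marked with a dash for HBD 2 in the third experiment.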

HDB 0

HDB 1

HDB 2

success rate/%

TIR/%

54.11

95.55

79.80

18.81

1.98

36.63

significantly increases the run-time if the environment is increased. Please note that the environment is only increased

by a factor of 1.45. Thus the increase of the run-time increases faster than the environment size. This also points out

that an efficient reasoning for the background knowledge is

essential to avoid problems in scaling.

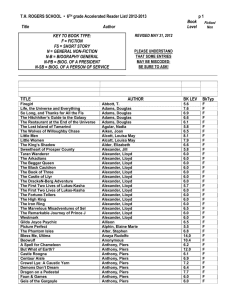

In the third experiment nine objects in a setting of 20

rooms had to be delivered. The success rate of being able

to deliver the requested objects for the whole experiment

can be seen in Table 4. Additionally the percentage of runs

that resulted in a timeout (TIR) are reported. The average

run-time for one object setting can be seen as well. For the

reported run-times we omitted the cases where the program

ran into a timeout.

average run-time/s

mean

stdv.

3.93

4.03

5.95

7.10

399.18

153.09

HDB 0

HDB 1

HDB 2

success rate/%

TIR/%

22.01

73.49

18.23

32.00

29.00

100

average run-time/s

mean

stdv.

216.30

305.99

206.41

291.54

-

Table 2: Success rate, timeout rate (TIR) and the average

run-time of the approaches for experiment 1.

Table 4: Success rate, time out rate (TIR) and the average

run time of the approaches for experiment 3.

In Table 2 it can be seen that the number of object movements which ran in a timeout is much higher in the case of

the Cyc background knowledge. The high number of timeouts is a result of a higher run-time due to the external consistency check. The run-time is currently the main drawback for the mapping to Cyc. The high run-time is caused

by the mapping as well as the reasoning in Cyc. But Table

2 shows that the diagnosis is significantly more successful if

background knowledge is used. Otherwise it failed often to

detect and diagnose the fault.

In the second experiment, three objects had to be delivered in a setting of 29 rooms. The success rate of delivering the requested objects over the whole experiment can be seen in Table 3. Additionally, the percentage of runs that resulted in a timeout (TIR) is reported, as well as the average run-time for one object setting. For the reported run-time we omitted the cases where the program ran into a timeout.

        success rate/%   TIR/%
HBD 0   57.89            14.0
HBD 1   95.44             1.0
HBD 2   72.98            50.0
average run-time/s (mean, stdv.): 3.64, 228.52, 13.14, 65.80, 560.00, 123.99

Table 3: Success rate, timeout rate (TIR) and the average run-time of the approaches for experiment 2.

Table 3 again shows a high number of timeouts in the cases where the Cyc mapping is used. Another interesting observation is the increase in run-time: the run-time of HBD 1 increased by a factor of 2.23 and the run-time of HBD 2 by a factor of 1.43. Please note that these increases were limited by the timeout. This indicates that the usage of background knowledge

In Table 4 it can be seen that all object deliveries ran into a timeout when the Cyc background knowledge was used. This means that it was not possible to move nine objects within 12 minutes. With the IndiGolog background knowledge, only 29 percent of the object settings ran into a timeout.

An interesting observation is that HBD 1 scaled better than HBD 0 with the increasing number of delivered objects. This indicates that the time to detect an error is also smaller if background knowledge is used. We suppose that detecting the right fault earlier is an advantage here.

Please note that we trade a higher run-time for a higher success rate. Although the run-time of the Cyc-based approach is currently significantly higher, it still has the advantage of using already formalized common sense knowledge.

Related Research

Before we conclude the paper, we want to discuss related work on dependability, belief management, logic mapping, common sense, diagnosis in the situation calculus and plan diagnosis.

One well-known framework for dependable high-level control of robots is the BDI (Belief-Desire-Intention) framework. BDI approaches maintain a belief of the agent about the current state of the world. The agent tries to fulfill a desire. Intentions are carried out according to the current belief to achieve the desire, and they are revised every time the belief or the desire changes. Thus the agent reacts to changes in the environment. It is also possible to post sub-goals as new desires to make progress towards a goal. See (Dennis et al. 2008) for a unifying semantics of the different BDI approaches. In the context of robotics, the procedural reasoning system (PRS) follows this approach and is used as the executive layer of the LAAS robot architecture (Alami et al. 1998).


In contrast to the BDI approach, IndiGolog uses no sub-goals to achieve a task. Instead, planning and a high-level program are used to guide the agent to a certain goal. The reactive behavior of a BDI system can also be achieved in IndiGolog using a teleo-reactive (TR) program (Nilsson 1994). Such programs are also used in our implementation to react to a repaired belief. To react quickly to changes in the environment, long-term planning is often omitted in BDI systems. This problem was tackled in (Sardina, de Silva, and Padgham 2006) with a combination of BDI and Hierarchical Task Networks (HTN), which makes long-term planning possible. The proposed system is related to IndiGolog because it allows long-term planning, which is also possible in IndiGolog. Another point worth mentioning about that approach is that it also uses a history of performed actions. Thus it would be possible to integrate our belief management approach into that system as well.

Besides the relation to other high-level control approaches, we want to point out the relation of our approach to other belief management approaches. Many different approaches exist that represent how trustworthy a predicate is. For example, the anytime belief revision proposed in (Williams 1997) uses weights for the predicates in the belief. Possibilistic logic, which can be related to belief revision techniques (see (Prade 1991) for more details), uses the likelihood of predicates. Another method to specify the trust in a predicate, in a framework similar to the situation calculus, was proposed in (Jin and Thielscher 2004); there, the influence of performed actions is also considered for determining trust. All these approaches differ from the basis of the belief management system presented in this paper (Gspandl et al. 2011) in that we rank different beliefs instead of predicates within one belief. Integrating the weighting of predicates into our approach is left for future work.

Regarding the mapping of logics, the work presented in (Cerioli and Meseguer 1993) has to be mentioned. The mappings addressed there can be used to employ parts of one logic in another one. The difference to our approach is that we are not interested in transferring another reasoning capability into our system. Instead, we want to use a kind of oracle which tells us whether the background knowledge is inconsistent with our belief.

Before we discuss different methods to represent common sense knowledge, we want to point out that our proposed method does not rely on any special kind of representation. As mentioned above, the only relevant aspect is that the predicates can be mapped into the system such that consistency with respect to the background knowledge is preserved. Thus any of these methods could be used in the implementation instead of Cyc. In (Johnston and Williams 2009b) a system was proposed which represents common sense knowledge using simulation. This knowledge can also be learned (Johnston and Williams 2009a). Thanks to the simulation, it is possible to check whether a behavior contradicts simple physical constraints. In (Küstenmacher et al. 2013) the authors use a naive physics representation to detect and identify faults in the interaction of a service robot with its environment.

Another relation to the work of others we want to point out is the work concerning diagnosis using the situation calculus. In (Mcllraith 1999) a method was proposed to diagnose a physical system using the situation calculus. The method tries to find an action sequence that explains the observations. It does not modify any actions which were performed; instead, the action sequence is searched for as a planning problem. The use of Golog as a controller for the physical system, which was proposed in (McIlraith 1999), does not change this paradigm either.

A final related domain is plan diagnosis (Roos and Witteveen 2005; De Jonge, Roos, and Witteveen 2006). The problem of identifying problems in plan execution is represented as a model-based diagnosis problem with partial observations. The approach treats actions like components that can fail, as in classical model-based diagnosis. The currently executed plan, partial observations and a model of how plan execution should change the agent's state are used to identify failed actions. Moreover, the approach is able to identify whether an action failed by itself or due to some general problem of the agent.

Conclusion and Future Work

In this paper we presented how a mapping of the belief of an agent represented in the situation calculus to another representation can be used to check invariants on that belief. The mapped belief is checked for consistency with background knowledge, modeled in the representation of the target knowledge base, using the reasoning capabilities of that knowledge base. The advantage of this mapping is that existing common sense knowledge bases can be reused to specify invariants. We formalized the mapping and discussed under which conditions consistency is equivalent in both systems. Moreover, we presented a proof-of-concept implementation using Cyc and integrated it into an IndiGolog-based high-level control.
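To make the idea concrete, the following Python sketch maps ground facts of the agent's belief into the vocabulary of a target knowledge base and then asks a consistency oracle whether the mapped facts violate an invariant. It is a toy stand-in, not the Cyc-based implementation: the predicate names (`at`, `holding`, `locatedIn`, `carries`), the mapping table and the functions are hypothetical, and the oracle only checks the invariant that an object cannot be at two different places at the same time. The check is only meaningful if the mapping preserves consistency, which is the condition discussed above.

```python
from typing import Dict, Iterable, Set, Tuple

Fact = Tuple[str, ...]  # (predicate, arg1, arg2, ...)

# Hypothetical mapping from belief predicates to target-KB predicates.
PREDICATE_MAP: Dict[str, str] = {"at": "locatedIn", "holding": "carries"}


def map_belief(facts: Iterable[Fact]) -> Set[Fact]:
    """Translate belief facts into the target vocabulary; unmapped predicates are dropped."""
    return {(PREDICATE_MAP[f[0]],) + tuple(f[1:])
            for f in facts if f[0] in PREDICATE_MAP}


def kb_consistent(kb_facts: Set[Fact]) -> bool:
    """Toy oracle standing in for the target KB's reasoner:
    no object may be located in two different places."""
    location: Dict[str, str] = {}
    for pred, *args in kb_facts:
        if pred == "locatedIn":
            obj, place = args
            # setdefault records the first place seen; a different one is a conflict
            if location.setdefault(obj, place) != place:
                return False
    return True


def belief_consistent(facts: Iterable[Fact]) -> bool:
    """Map the belief, then let the target side decide consistency."""
    return kb_consistent(map_belief(facts))


if __name__ == "__main__":
    print(belief_consistent([("at", "package", "roomA"), ("at", "package", "roomB")]))
    print(belief_consistent([("at", "package", "roomA"), ("holding", "robot", "package")]))
```

The agent side never interprets the invariant itself; it only translates facts and receives a yes/no answer, which mirrors the oracle role described in the related research discussion.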

An experimental evaluation showed that background knowledge is able to significantly improve the detection and diagnosis of inconsistencies in the agent's belief. Furthermore, the evaluation showed that the run-time of the mapping as well as of the reasoning in Cyc is high. Thus, in future work we will evaluate whether other knowledge bases are better suited for that purpose. One such knowledge base is presented in (Borst et al. 1995) and is used to specify physical systems and their behavior. Moreover, we will investigate whether the mapping could be performed lazily, such that only the predicates which are essential to find an inconsistency are mapped. Finally, we have to investigate whether the run-time drawback of using, for instance, Cyc is still significant if the domain and the handcrafted invariants become more complex.
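One possible reading of the lazy mapping mentioned above is a relevance filter: each invariant declares the belief predicates it mentions, and only facts over those predicates are mapped. The Python sketch below illustrates this; the `Invariant` type, its `predicates` field, the `lazy_map` function and the example names are hypothetical. A real implementation would also have to account for predicates that become relevant only through rules inside the target knowledge base, which this simple filter ignores.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, List, Set, Tuple

Fact = Tuple[str, ...]  # (predicate, arg1, arg2, ...)


@dataclass(frozen=True)
class Invariant:
    name: str
    predicates: FrozenSet[str]  # belief predicates the invariant talks about


def relevant_predicates(invariants: Iterable[Invariant]) -> Set[str]:
    """Union of all predicates mentioned by any invariant."""
    relevant: Set[str] = set()
    for inv in invariants:
        relevant |= inv.predicates
    return relevant


def lazy_map(facts: Iterable[Fact], invariants: List[Invariant],
             predicate_map: Dict[str, str]) -> Set[Fact]:
    """Map only facts whose predicate is mappable and relevant to some invariant."""
    keep = relevant_predicates(invariants) & set(predicate_map)
    return {(predicate_map[f[0]],) + tuple(f[1:]) for f in facts if f[0] in keep}


if __name__ == "__main__":
    invariants = [Invariant("unique_location", frozenset({"at"}))]
    mapping = {"at": "locatedIn", "holding": "carries"}
    belief = [("at", "package", "roomA"), ("holding", "robot", "package"),
              ("door_open", "d1")]
    print(lazy_map(belief, invariants, mapping))
```

Here the `holding` fact is mappable but skipped, because no invariant mentions it; the saving grows with the size of the belief relative to the vocabulary the invariants actually use.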

References

Alami, R.; Chatila, R.; Fleury, S.; Ghallab, M.; and Ingrand, F. 1998. An architecture for autonomy. The International Journal of Robotics Research 17(4):315–337.

Arenas, M.; Botoeva, E.; Calvanese, D.; and Ryzhikov, V. 2013. Exchanging OWL 2 QL Knowledge Bases. The Computing Research Repository (CoRR) (April 2013).

Borst, P.; Akkermans, J.; Pos, A.; and Top, J. 1995. The PhysSys ontology for physical systems. In Working Papers of the Ninth International Workshop on Qualitative Reasoning QR, 11–21.

Cerioli, M., and Meseguer, J. 1993. May I borrow your logic? In Mathematical Foundations of Computer Science 1993. Springer. 342–351.

De Giacomo, G., and Levesque, H. J. 1999. An incremental interpreter for high-level programs with sensing. In Logical Foundations for Cognitive Agents. Springer. 86–102.

De Jonge, F.; Roos, N.; and Witteveen, C. 2006. Diagnosis of multi-agent plan execution. In Multiagent System Technologies. Springer. 86–97.

Dennis, L. A.; Farwer, B.; Bordini, R. H.; Fisher, M.; and Wooldridge, M. 2008. A common semantic basis for BDI languages. In Programming Multi-Agent Systems. Springer. 124–139.

Ferrein, A. 2008. Robot Controllers for Highly Dynamic Environments with Real-time Constraints. Ph.D. Dissertation, Knowledge-based Systems Group, RWTH Aachen University, Aachen, Germany.

Giacomo, G. D.; Lespérance, Y.; Levesque, H. J.; and Sardina, S. 2009. Multi-Agent Programming: Languages, Tools and Applications. Springer. chapter IndiGolog: A High-Level Programming Language for Embedded Reasoning Agents, 31–72.

Gspandl, S.; Pill, I.; Reip, M.; Steinbauer, G.; and Ferrein, A. 2011. Belief Management for High-Level Robot Programs. In The 22nd International Joint Conference on Artificial Intelligence (IJCAI), 900–905.

Gspandl, S.; Podesser, S.; Reip, M.; Steinbauer, G.; and Wolfram, M. 2012. A dependable perception-decision-execution cycle for autonomous robots. In Robotics and Automation (ICRA), 2012 IEEE International Conference on, 2992–2998. IEEE.

Iwan, G. 2002. History-based diagnosis templates in the framework of the situation calculus. AI Communications 15(1):31–45.

Jin, Y., and Thielscher, M. 2004. Representing beliefs in the fluent calculus. In ECAI, volume 16, 823.

Johnston, B., and Williams, M.-A. 2009a. Autonomous learning of commonsense simulations. In International Symposium on Logical Formalizations of Commonsense Reasoning, 73–78.

Johnston, B., and Williams, M.-A. 2009b. Conservative and reward-driven behavior selection in a commonsense reasoning framework. In AAAI Symposium: Multirepresentational Architectures for Human-Level Intelligence, 14–19.

Kalfoglou, Y., and Schorlemmer, M. 2003. Ontology mapping: the state of the art. The Knowledge Engineering Review 18(1):1–31.

Küstenmacher, A.; Akhtar, N.; Plöger, P. G.; and Lakemeyer, G. 2013. Unexpected Situations in Service Robot Environment: Classification and Reasoning Using Naive Physics. In 17th annual RoboCup International Symposium.

McCarthy, J. 1963. Situations, Actions and Causal Laws. Technical report, Stanford University.

McIlraith, S. A. 1999. Model-based programming using Golog and the situation calculus. In Proceedings of the Tenth International Workshop on Principles of Diagnosis (DX99), 184–192.

Mcllraith, S. A. 1999. Explanatory diagnosis: Conjecturing actions to explain observations. In Logical Foundations for Cognitive Agents. Springer. 155–172.

Nilsson, N. J. 1994. Teleo-reactive programs for agent control. Journal of Artificial Intelligence Research 1(1):139–158.

Prade, D. D.-H. 1991. Possibilistic logic, preferential models, non-monotonicity and related issues. In Proc. of IJCAI, volume 91, 419–424.

Reed, S. L.; Lenat, D. B.; et al. 2002. Mapping ontologies into Cyc. In AAAI 2002 Conference Workshop on Ontologies For The Semantic Web, 1–6.

Reiter, R. 2001. Knowledge in Action. Logical Foundations for Specifying and Implementing Dynamical Systems. MIT Press.

Roos, N., and Witteveen, C. 2005. Diagnosis of plans and agents. In Multi-Agent Systems and Applications IV. Springer. 357–366.

Sardina, S.; de Silva, L.; and Padgham, L. 2006. Hierarchical planning in BDI agent programming languages: A formal approach. In Proceedings of the Fifth International Joint Conference on Autonomous Agents and Multiagent Systems, 1001–1008. ACM.

Thielscher, M. 1998. Reasoning about actions: Steady versus stabilizing state constraints. Artificial Intelligence 104(1):339–355.

Thielscher, M. 1999. From situation calculus to fluent calculus: State update axioms as a solution to the inferential frame problem. Artificial Intelligence 111(1):277–299.

Williams, M.-A. 1997. Anytime belief revision. In IJCAI (1), 74–81.
