The Workshops of the Thirtieth AAAI Conference on Artificial Intelligence

Incentives and Trust in Electronic Communities: Technical Report WS-16-09

TRM: Computing Reputation Score by Mining Reviews

Guanquan Xu,* Yan Cao, Yao Zhang, Gaoxu Zhang, Xiaohong Li, Zhiyong Feng

School of Computer Science and Technology, Tianjin University, China

*losin@tju.edu.cn

Abstract

With the rapid development of e-commerce, reputation models have been proposed to help customers make effective purchase decisions. However, most reputation models focus only on the overall ratings of products and do not consider the reviews provided by customers. We believe that textual reviews provided by buyers express their real opinions more honestly. Therefore, in this paper we propose a Textual Reputation Model (TRM), based on the word2vector model, to obtain useful information from reviews and evaluate the trustworthiness of the target product. Experimental results on real data demonstrate the effectiveness of our approach in capturing reputation information from reviews.

Introduction

With the growing availability of online business, many e-commerce websites have been developed to encourage users to make transactions with others through the web.

In some cases, since most users may not have had any interaction with the target seller, they have to integrate a number of information sources to produce a comprehensive trust score of the seller’s likely performance. To gather this information, websites such as Amazon (www.amazon.com) encourage users to express opinions on products by posting overall ratings and textual reviews (Zhuang, Jing, and Zhu 2006).

In some well-known e-commerce systems such as eBay and Amazon, the reputation score for a seller is the positive percentage score, that is, the percentage of positive ratings out of the total number of positive and negative ratings in the past 12 months. However, this cannot guarantee that buyers give honest ratings to sellers. Some existing trust models, such as the Personalized trust model (Zhang and Cohen 2008), the BRS trust model (Jøsang and Ismail 2002), the FIRE trust model (Dong-Huynha, Jennings, and Shadbolt 2004), the swift trust model (Xu et al. 2007), the Time-Cognition Based Computational Model (Xu et al. 2009) and so on, consider different aspects that may affect ratings to generate the final trust score. Although all these models have achieved good results in some situations, they still have drawbacks. They only use overall ratings to assess sellers’ performance and ignore the implicit information in textual reviews. A well-reported issue with the eBay reputation management system is the “all good reputation” problem (Resnick et al. 2000), where feedback ratings are over 99% positive on average. Such a strong positive bias can hardly guide buyers in selecting sellers to transact with.

One possible reason for the lack of negative ratings at e-commerce web sites is that users who leave negative feedback ratings can attract retaliatory negative ratings and thus damage their own reputation (Resnick et al. 2000). Although buyers leave positive feedback ratings, they may express their real feelings in free textual reviews. For example, a comment like “The products were as I expected.” expresses a positive opinion towards the product aspect, whereas the comment “Delivery was a little slow but otherwise, great service. Recommend highly.” expresses a negative opinion towards the delivery aspect but a positive opinion of the transaction in general (Zhang, Cui, and Wang 2014). By analyzing the sentiment information in these reviews we can capture buyers’ embedded opinions.

In this paper, we propose a new reputation model (TRM), based on the word2vector model, which obtains the implicit sentiment information of users’ reviews and calculates a comprehensive reputation score of the seller. Experimental results on real-world datasets show that our approach is effective in obtaining reputation scores accurately.

Textual Reputation Model

We think that textual reviews provided by buyers express their real opinions more honestly. An honest buyer may give a relatively positive rating for a transaction while the review provided by the same buyer is negative. For example, a buyer gave a positive rating (5 stars) for a transaction, but left the following textual feedback: “bad communication, will not buy from again. Super slow ship(ping). Item as described.” (Zhang, Cui, and Wang 2014). Therefore, for an honest buyer, we can recover the real overall rating from the review.

Copyright © 2015, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

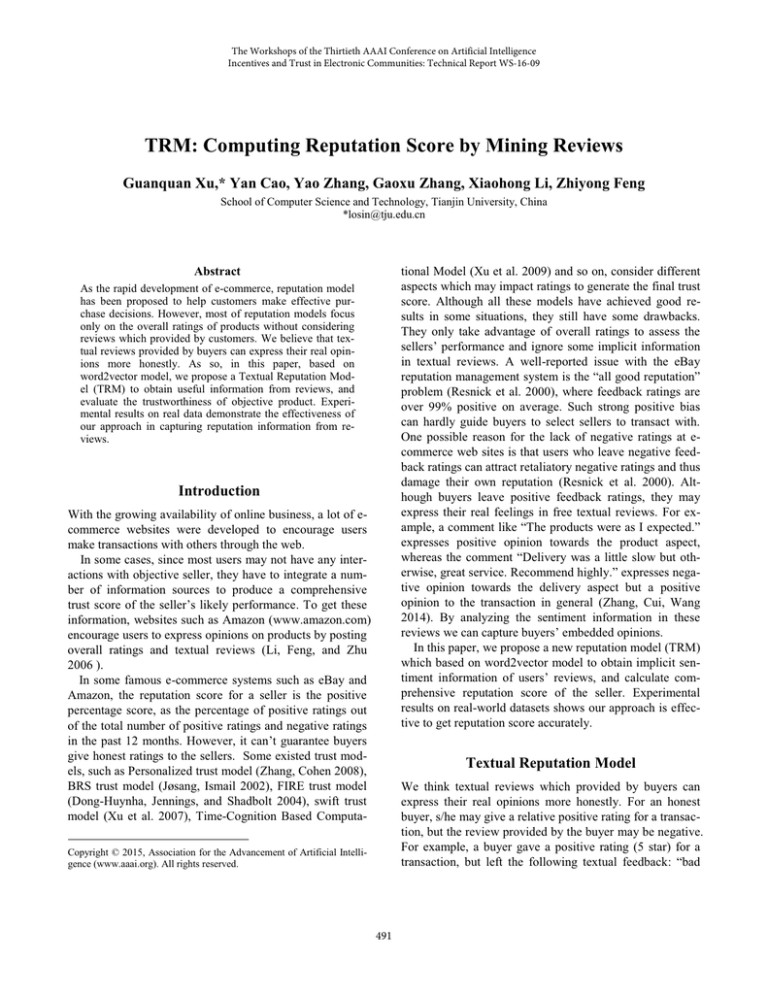

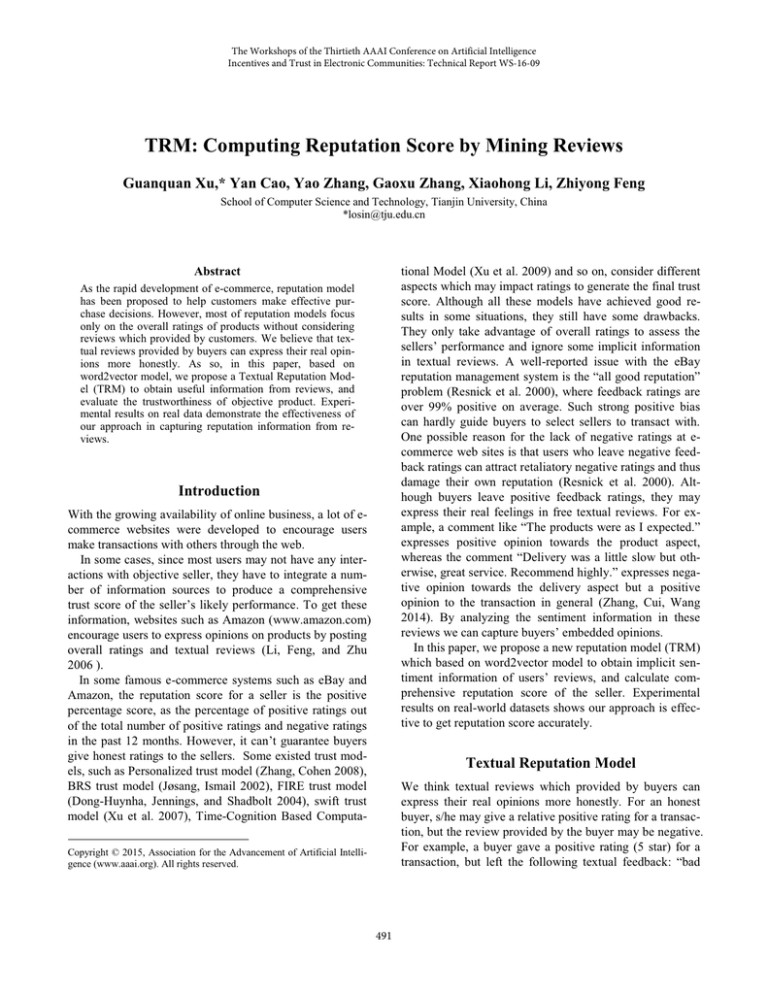

The textual reputation model is shown in Figure 1.

[Figure 1 stages: Textual Reviews; Textual Pretreatment; Aspect Segmentation; Aspect Rating Score Computing; Aspect Weight; Overall Rating Calculation; Seller Reputation Score Evaluation]

Figure 1: Textual Reputation Model.

For textual reviews, pretreatment is the first step. After that, every sentence is assigned to an aspect. Aspect rating scores, together with their weights, are then computed from the sentiment of the sentences. Aggregating the results of these steps yields the seller’s reputation score.

Textual Pretreatment

Since every review may contain a number of sentences and each sentence may belong to a different aspect, it is necessary to pretreat these reviews. For a buyer’s review $D$, we first obtain its sentences $X = \{x_1, x_2, \ldots, x_m\}$. Then we delete the stop words in each sentence by using a stop-word list to get the sentence’s semantic word representation $x_m = \{w_1, w_2, \ldots, w_n\}$.

Aspects Segmentation

A buyer may express opinions about a product from different aspects. For example, “price”, “screen”, “lens”, and so on are all important features of a digital camera. Hotel features include “price”, “room”, “service”, and so on (Long et al. 2010, 2014). In this phase, we map the sentences in a review into subsets corresponding to each aspect.

Since every aspect is associated with a few keywords, we design an approach that maps these sentences using the word2vector model.

Word2vector (Mikolov et al. 2013a; Mikolov et al. 2013b) is a learning tool that provides an efficient implementation of the continuous bag-of-words and skip-gram architectures for computing vector representations of words. For a given word, by calculating the information distance between it and the aspect keywords, we can find the most relevant keywords.

In this paper, we delete the stop words and punctuation to generate the text corpus. The word2vec tool takes the text corpus as input and produces word vectors as output. It first constructs a vocabulary from the training text data and then learns vector representations of the words.

Then, for each aspect $A_i$, we give some pre-defined keywords $w_{A_i}$. For example, in a hotel review, the keywords for the service aspect contain “service”, those for the room aspect contain “room”, and so on.

After that, for a sentence’s semantic word representation $x_m = \{w_1, w_2, \ldots, w_n\}$, we calculate the information distance between each word $w_k$ and the different keywords by using cosine similarity. We then obtain the correlation between the sentence and each aspect by summing the word similarities, and rank the correlations to assign the sentence an aspect label.

For example, supposing a sentence’s word representation is $x_m = \{w_1, w_2, \ldots, w_n\}$, the correlation between the sentence and aspect $A_i$ is

$$\mathrm{correlation}(x_m, A_i) = \sum_{k=1}^{n} \mathrm{cosine}(w_k, w_{A_i}).$$

The sentence is then assigned the aspect label with the maximum correlation, $\arg\max_{A_i} \mathrm{correlation}(x_m, A_i)$.

Overall Rating Calculation

In this phase, we use the aforementioned results to calculate the buyer’s overall rating. Note that a review may not cover all the aspects of a product. It is possible for a buyer to focus on only one aspect. In this situation, the buyer pays more attention to the aspect that interests him, and this aspect must have a great influence on his overall rating of the product. Therefore, the weight of an aspect should be associated with the number of sentences that belong to it. In addition, to calculate the aspect trust score, the sentiment of each sentence should be considered.

Since sentiment analysis is not the crucial part of our model, we assign sentiment labels to the sentences manually.

For example, consider the review: “I felt very welcome at La Tortuga, and very much liked that it was close to everything. Breakfast and WiFi Internet access were included in the price. My room was just OK, considering the room rate. The room was clean, the air conditioning worked, there was enough hot water, and the bathroom amenities were good. The bed was too soft for my liking, but that's a matter of personal taste.” The numbers of sentences belonging to the different aspects in this review are shown in Table 1.

| Aspect | Number of sentences | Pos/neg |
|---|---|---|
| Location | 1 | 1/0 |
| Service | 1 | 1/0 |
| Room | 3 | 2/1 |

Table 1: Results for Aspects Segmentation
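The pretreatment and aspect-labelling steps described in the Textual Pretreatment and Aspects Segmentation sections can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the stop-word list, the three-dimensional vectors (stand-ins for vectors learned by word2vec), the single keyword per aspect, and all function names are hypothetical. When an aspect has several keywords, the sketch sums the cosine similarity over all of them, which is one possible reading of the correlation formula.

```python
import math
import re

# Hypothetical stop-word list and toy 3-d "word vectors". A real system would
# use a full stop-word list and vectors learned by word2vec on the corpus.
STOP_WORDS = {"the", "was", "a", "and", "at", "to", "very", "is", "were", "in"}
VECTORS = {
    "room":     (1.0, 0.1, 0.0),
    "bed":      (0.9, 0.2, 0.1),
    "clean":    (0.7, 0.3, 0.1),
    "service":  (0.1, 1.0, 0.0),
    "staff":    (0.2, 0.9, 0.1),
    "welcome":  (0.1, 0.8, 0.2),
    "location": (0.0, 0.1, 1.0),
    "close":    (0.1, 0.2, 0.9),
}
# Pre-defined keywords per aspect (assumed, one keyword each).
ASPECT_KEYWORDS = {"Room": ["room"], "Service": ["service"], "Location": ["location"]}


def pretreat(review):
    """Split a review into sentences and drop punctuation and stop words."""
    sentences = [s for s in re.split(r"[.!?]+", review) if s.strip()]
    return [[w for w in re.findall(r"[a-z']+", s.lower()) if w not in STOP_WORDS]
            for s in sentences]


def cosine(u, v):
    """Cosine similarity of two vectors (0.0 if either has zero length)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def correlation(words, keywords):
    """Sum of cosine similarities between sentence words and aspect keywords.

    Words without a vector are skipped.
    """
    return sum(cosine(VECTORS[w], VECTORS[k])
               for w in words if w in VECTORS
               for k in keywords if k in VECTORS)


def label_sentence(words):
    """Return the aspect with the highest correlation to the sentence.

    Ties (including sentences with no known words) fall back to the first aspect.
    """
    return max(ASPECT_KEYWORDS, key=lambda a: correlation(words, ASPECT_KEYWORDS[a]))


review = "The room was clean. The staff were very welcome. Close to everything."
labels = [label_sentence(ws) for ws in pretreat(review)]
print(labels)  # ['Room', 'Service', 'Location']
```

Counting the labels per aspect, together with a sentiment label for each sentence, gives counts of the kind listed in Table 1.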

For each aspect $A_i$, let the number of positive sentences be $A_i^{pos}$ and the number of negative sentences be $A_i^{neg}$. Then the rating value of aspect $i$ can be calculated by Equation 1.

$$T_{A_i} = \frac{A_i^{pos}}{A_i^{pos} + A_i^{neg}} \tag{1}$$

For the review in Table 1, the rating of location is 1, the rating of service is 1, and the rating of room is 2/3.

Since the number of sentences in most reviews is less than 10, we use the logistic function to smooth the weights. The weights are given by:

$$W_{A_i} = \frac{1}{1 + a \cdot e^{-b \cdot Number}} \tag{2}$$

where $Number$ is the number of sentences that belong to aspect $A_i$.

The overall rating of a buyer can then be calculated as:

$$Rating_u = \frac{\sum_{i=1}^{d} T_{A_i} \cdot W_{A_i}}{\sum_{i=1}^{d} W_{A_i}} \, (maxrating - minrating) + minrating \tag{3}$$

Seller Reputation Score Evaluation

Following the definition of reputation by Jøsang, Ismail, and Boyd (2007), the reputation score for a seller is the probability that buyers expect the seller to carry out transactions satisfactorily (Zhang, Cui, and Wang 2014). The reputation score can be estimated from the positive and negative ratings towards the product.

Based on Bayes’ rule, the expectation can be estimated from observations and a prior probability assumption:

$$Beta(p \mid \alpha, \beta) = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha)\,\Gamma(\beta)} \, p^{\alpha - 1} (1 - p)^{\beta - 1} \tag{4}$$

The Bayes estimate of S can be calculated as follows:

$$\hat{p} = \frac{\alpha}{\alpha + \beta} \tag{5}$$

Note that the Beta distribution is a special case of the Dirichlet distribution for two dimensions (Heinrich 2005). The BRS trust model (Jøsang and Ismail 2002) adopts the constant setting $\alpha = \alpha + 1$, $\beta = \beta + 1$ to get the reputation value. However, this is not suitable when there is a large number of ratings. Inspired by CommTrust (Zhang, Cui, and Wang 2014), we instead set $\alpha = \alpha + k$, $\beta = \beta + k$, where $k$ is a suitable preset value. Then the reputation score of the seller can be calculated by:

$$T = \frac{\sum_{u=1}^{U} Rating_u + k}{U \cdot maxrating + 2k} \tag{6}$$

Note that $U$ is the number of reviews and $k$ is the preset value used to smooth the reputation value.

Experiments

Experiments on a hotel review dataset (the TripAdvisor dataset) were used to evaluate the effectiveness of our Textual Reputation Model.

Dataset

The TripAdvisor dataset was originally used by Wang et al. (2010, 2011). The dataset contains 246,399 hotel reviews as well as overall ratings. Since some reviews consist of only a few words, we select some suitable reviews for case studies.

Case Study

In this section, we randomly select some reviews from the dataset to verify the effectiveness of our model.

For the logistic function, we set $a = 1$, $b = 20$. The results are shown in Table 2.

According to the results, our model can predict the ratings effectively: for five of the six users the TextTrust value is within 0.5 of the real rating, and the largest deviation is for Jeff P (2.095 against 3.0).

| UserName | | Location | Room | Service | Rating |
|---|---|---|---|---|---|
| EgyptTravelers | pos | 3 | 1 | 1 | 3.4657 |
| | neg | 2 | 1 | 0 | |
| | $W_{A_i}$ | 0.8812 | 0.2698 | 0.1197 | |

| UserName | TextTrust | Real Value |
|---|---|---|
| bluevoter | 3.872 | 4.0 |
| Philvincent | 5.0 | 5.0 |
| EgyptTravelers | 3.4657 | 3.0 |
| PepperR | 3.333 | 3.0 |
| Jeff P | 2.095 | 3.0 |
| Olliebuba | 2.668 | 3.0 |

Table 2: Results for Case Study

Conclusion and Future Work

This paper proposed a novel word2vector-based model to obtain useful information from reviews. It generates a reputation score from buyers’ textual reviews. Case study results on real data demonstrate the effectiveness of our approach in capturing trust information from reviews.

In the future, we will use more datasets to verify the model, and we will also make the necessary adjustments to it to improve its accuracy and efficiency.

Sentiment analysis is another factor that should be considered. It would be interesting to further explore the Textual Reputation Model in a setting where sentiment analysis is part of calculating the reputation score from textual reviews.
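As a worked check of the scoring steps (aspect rating, logistic aspect weight, overall buyer rating, and smoothed seller score), the following Python sketch recomputes the EgyptTravelers row of Table 2. It is a sketch under stated assumptions rather than the authors' code: the function names are illustrative, the rating scale of 1 to 5 stars is assumed, and the smoothing value k = 1 is a placeholder because the text does not give k. With the weight written as 1 / (1 + a·e^(−b·Number)), the weights listed for EgyptTravelers in Table 2 (0.8812, 0.2698, 0.1197) are reproduced by a = 20 and b = 1, so the sketch uses those values as defaults; this reading of the parameters is an assumption.

```python
import math

MIN_RATING, MAX_RATING = 1.0, 5.0  # assumed star scale


def aspect_rating(pos, neg):
    """Share of positive sentences among the sentences of one aspect."""
    return pos / (pos + neg)


def aspect_weight(number, a=20.0, b=1.0):
    """Logistic weight 1 / (1 + a * exp(-b * number)) of an aspect.

    The defaults a=20, b=1 are an assumption chosen to match Table 2.
    """
    return 1.0 / (1.0 + a * math.exp(-b * number))


def overall_rating(counts):
    """Weighted mean of aspect ratings, rescaled to the rating range.

    counts maps an aspect name to (positive, negative) sentence counts.
    Aspects with no sentences are skipped.
    """
    num = den = 0.0
    for pos, neg in counts.values():
        if pos + neg == 0:
            continue
        w = aspect_weight(pos + neg)
        num += aspect_rating(pos, neg) * w
        den += w
    return num / den * (MAX_RATING - MIN_RATING) + MIN_RATING


def seller_reputation(ratings, k=1.0):
    """Smoothed score (sum of ratings + k) / (U * max rating + 2k).

    k=1 is a placeholder value, not taken from the paper.
    """
    return (sum(ratings) + k) / (len(ratings) * MAX_RATING + 2 * k)


# Sentence counts (positive, negative) of the EgyptTravelers row of Table 2.
egypt = {"Location": (3, 2), "Room": (1, 1), "Service": (1, 0)}
print(round(overall_rating(egypt), 4))  # 3.4657

# TextTrust values of the six users in Table 2, treated as one seller's reviews.
text_trust = [3.872, 5.0, 3.4657, 3.333, 2.095, 2.668]
print(round(seller_reputation(text_trust), 4))
```

One reading consistent with the smoothed seller score is α = ΣRating_u + k and β = U·maxrating − ΣRating_u + k, for which the Bayes estimate α / (α + β) gives the same expression as `seller_reputation`.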

Acknowledgements

This work is supported by the National Natural Science Foundation of China (No. 61340039, 61572355, 61572349), the 985 funds of Tianjin University, and the Tianjin Research Program of Application Foundation and Advanced Technology under grants No. 15JCYBJC15700 and No. 14JCTPJC00517.

References

Dong-Huynha, T.; Jennings, N.; and Shadbolt, N. 2004. FIRE: An integrated trust and reputation model for open multi-agent systems. In Proceedings of the 16th European Conference on Artificial Intelligence, August 22-27.

Heinrich, G. 2005. Parameter estimation for text analysis. Technical report.

Jøsang, A., and Ismail, R. 2002. The beta reputation system. In Proceedings of the 15th Bled Electronic Commerce Conference, 41-55.

Jøsang, A.; Ismail, R.; and Boyd, C. 2007. A survey of trust and reputation systems for online service provision. Decision Support Systems 43(2): 618-644.

Long, C.; Zhang, J.; Huang, M.; Zhu, X.; Li, M.; and Ma, B. 2014. Estimating feature ratings through an effective review selection approach. Knowledge and Information Systems 38(2): 419-446.

Long, C.; Zhang, J.; and Zhu, X. 2010. A review selection approach for accurate feature rating estimation. In Proceedings of the 23rd International Conference on Computational Linguistics: Posters, 766-774. Association for Computational Linguistics.

Mikolov, T.; Chen, K.; Corrado, G.; and Dean, J. 2013a. Efficient Estimation of Word Representations in Vector Space. In Proceedings of Workshop at ICLR.

Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.; and Dean, J. 2013b. Distributed Representations of Words and Phrases and their Compositionality. In Advances in Neural Information Processing Systems (NIPS).

Resnick, P.; Kuwabara, K.; Zeckhauser, R.; and Friedman, E. 2000. Reputation systems: Facilitating trust in Internet interactions. Communications of the ACM 43(12): 45-48.

Wang, H.; Lu, Y.; and Zhai, C. 2010. Latent aspect rating analysis on review text data: a rating regression approach. In Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 783-792. ACM.

Wang, H.; Lu, Y.; and Zhai, C. 2011. Latent aspect rating analysis without aspect keyword supervision. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 618-626. ACM.

Xu, G.Q.; Feng, Z.Y.; Wu, H.B.; and Zhao, D.X. 2007. Swift Trust in Virtual Temporary System: A Model Based on Dempster-Shafer Theory of Belief Functions. International Journal of Electronic Commerce (IJEC) 12(1): 93-127.

Xu, G.Q.; Feng, Z.Y.; Li, X.H.; Wu, H.T.; Yu, Y.X.; Chen, S.Z.; and Rao, G.Z. 2009. TSM-Trust: a Time-Cognition Based Computational Model for Trust Dynamics. In S. Qing, C.J. Mitchell, and G. Wang (Eds.): ICICS 2009, Beijing, China, LNCS 5927, pp. 385–395.

Zhang, J., and Cohen, R. 2008. Evaluating the trustworthiness of advice about seller agents in e-marketplaces: A personalized approach. Electronic Commerce Research and Applications 7(3): 330-340.

Zhang, X.; Cui, L.; and Wang, Y. 2014. Commtrust: computing multi-dimensional trust by mining e-commerce feedback comments. IEEE Transactions on Knowledge and Data Engineering 26(7): 1631-1643.

Zhuang, L.; Jing, F.; and Zhu, X. 2006. Movie review mining and summarization. In Proceedings of the 15th ACM International Conference on Information and Knowledge Management. ACM.