Proceedings of the Tenth International AAAI Conference on

Web and Social Media (ICWSM 2016)

Dynamic Data Capture from Social

Media Streams: A Contextual Bandit Approach

Thibault Gisselbrecht+∗ and Sylvain Lamprier∗ and Patrick Gallinari∗

+

Technological Research Institute SystemX, 8 Avenue de la Vauve, 91120 Palaiseau, France

thibault.gisselbrecht@irt-systemx.fr

∗

Sorbonne Universités, UPMC Univ Paris 06, CNRS, LIP6 UMR 7606, 4 place Jussieu, 75005 Paris

firstname.lastname@lip6.fr

nishes access to the data published by a particular user, under

restrictive constraints related to the number of streams that

can be simultaneously considered. On Twitter for instance,

data capture is limited to 5000 simultaneous streams1 . Regarding the huge number of available users on this media, it

requires an incredibly important effort for targeting relevant

sources. Selecting relevant users to follow among the whole

set of accounts is very challenging. Imagine someone interested in politics wishing to keep track of people in relation

to this topic in order to capture the data they produce. One

solution is to manually select a bucket of accounts and follow their activity along time. However, Twitter is known to

be extremely dynamic and for any reason some users might

start posting on this topic while others might stop at any

time. So if the interested user wants to be up to date, he

might change the subset of followed accounts dynamically,

his goal being to anticipate which users are the most likely

to produce relevant content in a close future. This task seems

hard to handle manually for two major reasons. First, the criteria chosen to predict whether an account will potentially

be interesting might be hard to define. Secondly, even if one

would be able to manually define those criteria, the amount

of data to analyze would be too large.

Regarding these important issues, we propose a solution

that, at each iteration of the process, automatically selects a

subset of accounts that are likely to be relevant in the next

time window, depending on their current activity. Those accounts are then followed during a certain time and the corresponding published contents are evaluated to quantify their

relevance. The algorithm behind the system then learns a

policy to improve the selection at the next time step. We

tackle this task as a contextual bandit problem, in which at

each round, a learner chooses an action among a bigger set

of available ones, based on the observation of action features

- also called context - and then receives a reward that quantifies the quality of the chosen action. In our case, considering

that following someone corresponds to an action, several actions have to be chosen at each time step, since we wish to

leverage the whole capture capacity allowed by the streaming API and therefore collect data from several simultaneous

streams.

Abstract

Social Media usually provide streaming data access that enable dynamic capture of the social activity of their users.

Leveraging such APIs for collecting social data that satisfy

a given pre-defined need may constitute a complex task, that

implies careful stream selections. With user-centered streams,

it indeed comes down to the problem of choosing which users

to follow in order to maximize the utility of the collected data

w.r.t. the need. On large social media, this represents a very

challenging task due to the huge number of potential targets

and restricted access to the data. Because of the intrinsic nonstationarity of user’s behavior, a relevant target today might

be irrelevant tomorrow, which represents a major difficulty to

apprehend. In this paper, we propose a new approach that anticipates which profiles are likely to publish relevant contents

- given a predefined need - in the future, and dynamically

selects a subset of accounts to follow at each iteration. Our

method has the advantage to take into account both API restrictions and the dynamics of users’ behaviors. We formalize

the task as a contextual bandit problem with multiple actions

selection. We finally conduct experiments on Twitter, which

demonstrate the empirical effectiveness of our approach in

real-world settings.

1

Introduction

In recent years, many social websites that allow users to publish and share content online have appeared. For example

Twitter, with 302 million active users and more than 500

millions posts every day, is one of the main actors of the

market. These social media have become a very important

source of data for many applications. Given a pre-defined

need, two solutions are usually available for collecting useful data from such media: 1) getting access to huge repositories of historical data or 2) leveraging streaming services

that most media propose to enable real-time tracking of their

users’ activity. As the former solution usually implies very

important costs for retrieving relevant data from big data

warehouses, the latter may constitute a very relevant alternative that enables real-time access to focused data. However,

it implies to be able to efficiently target relevant streams of

data.

In this paper, we consider the case of user-centered

streams of social data, i.e. where each individual stream fur-

1

In this paper we focus on Twitter, but the approach is valid for

many other social platforms.

Copyright © 2016, Association for the Advancement of Artificial

Intelligence (www.aaai.org). All rights reserved.

131

With the current activity of a certain user corresponding

to its context features, our task suits well the contextual bandit framework. However, in its traditional instance, features

of every action are required to be observed at each iteration to perform successive choices. For our specific task, we

are not able to observe the current activity of every potential

user. Consequently, we are not able to get everyone’s context

and directly use classical contextual bandit algorithms such

as LinUCB (Chu et al. 2011). On the other hand, it cannot

be treated as a traditional (non-contextual) bandit problem

since we would lose the useful information provided by observed context features. To the best of our knowledge, such

an hybrid instance of bandit problem has not been studied

yet. To solve our problem, we propose a data capture algorithm which, along with learning a selection policy of the

k best streams at each round, learns on the feature distributions themselves, so as to be able to make approximations

when features are hidden from the agent.

The paper is organized as follows: Section 2 presents

some related work. Section 3 formalizes the task and

presents our data capture system. Section 4 then describes

the model and the algorithm proposed to solve the task. Finally, section 5 reports various sets of experimental results.

2

from their posterior distributions, have been designed (Kaufmann, Korda, and Munos 2012; Agrawal and Goyal 2012a;

Chapelle and Li 2011; Agrawal and Goyal 2012b). More recently, the case where the learner can play several actions

simultaneously has been formalized respectively in (Chen,

Wang, and Yuan 2013) (CUCB algorithm) and (Qin, Chen,

and Zhu 2014) (C2 UCB algorithm) for the non contextual

and the contextual case. Finally in (Gisselbrecht et al. 2015)

the authors propose the CUCBV algorithm which extends

the original UCBV of (Audibert, Munos, and Szepesvari

2007) to the multiple plays case. To the best of our knowledge, no algorithm exists for our case, i.e. when feature vectors are only observable with some given probability.

Regarding social media, several existing tasks present

similarities with ours. In (Li, Wang, and Chang 2013), the

authors build a platform called ATM that is aimed at automatically monitoring target tweets from the Twitter Sample

stream for any given topic. In their work, they develop a keyword selection algorithm that efficiently selects keywords to

cover target tweets. In our case, we do not focus on keywords but on user profiles by modeling their past activity on

the social media. In (Colbaugh and Glass 2011) the authors

model the blogosphere as a network in which every node is

considered as a stream that can be followed. Their goal is to

identify blogs that contain relevant contents in order to track

emerging topics. However, their approach is static and their

model is trained on previously collected data. Consequently,

this approach could not be applied to our task: first we cannot have access to the whole network due to APIs restrictions and secondly, the static aspect of their methods is not

suitable to model the dynamics of users’ behaviors that can

change very quickly. In (Hannon, Bennett, and Smyth 2010)

and (Gupta et al. 2013), the authors build recommendation

systems, respectively called Twittomender and Who to Follow. In the former collaborative filtering methods are used to

find twitter accounts that are likely to interest a target user. In

the latter, a circle of trust (which is the result of an egocentric random walk similar to personalized PageRank (Fogaras

et al. 2005)) approach is adopted. In both cases, authors assume the knowledge of the whole followers/followees Twitter graph, which is not feasible for large scale applications

due to Twitter restriction policies. Moreover, the task is quite

different from ours. While these two models are concerned

about the precision of the messages collected from the selected followees (in order to avoid relevant information to

be drown in a too large amount of data), we rather seek at

maximizing the amount of relevant data collected in a perspective of automatic data capture.

Bandit algorithms have already been applied to various

tasks related to social networks. For example in (Kohli,

Salek, and Stoddard 2013), the authors handle abandonment

minimization tasks in recommendation systems with bandits. In (Buccapatnam, Eryilmaz, and Shroff 2014), bandits

are used for online advertising while in (Lage et al. 2013),

the authors use contextual bandit for an audience maximization task. In (Gisselbrecht et al. 2015), a data capture task

is tackled via non-contextual bandit algorithms. In that case,

each user is assumed to own a stationary distribution and the

process is supposed to find users with best means by trading

Related Work

In this section we first present some literature related to bandit learning problems, then we discuss about some data capture related tasks and finally present some applications of

bandits for social media tasks.

The multi-armed bandit learning problem, originally studied in (Lai and Robbins 1985) in its stationary form has been

widely investigated in the literature. In this first instance,

the agent has no access to side information on actions and

assume stationary reward distributions. A huge variety of

methods have been proposed to design efficient selection

policies, with corresponding theoretical guarantees. The famous Upper Confidence Bound (UCB) algorithm proposed

in (Auer, Cesa-Bianchi, and Fischer 2002) and other UCBbased algorithms ((Audibert, Munos, and Szepesvari 2007;

Audibert and Bubeck 2009)) have already proven to solve

the so-called stochastic bandit problem. This type of strategies keeps an estimate of the confidence interval related to

each reward distribution and plays the action with highest

upper confidence bound at each time step. In (Auer, CesaBianchi, and Fischer 2002), the authors introduce the contextual bandit problem, where the learner observes some

features for every action before choosing the one to play,

and propose the LinRel algorithm. Those features are used

to better predict the expected rewards related to each action. More recently, the LinUCB algorithm, which improves

the performance of LinRel has been formalized (Chu et

al. 2011). Those two algorithms assume the expected reward of an action to be linear with respect to some unknown parameters of the problem to be estimated. In (Kaufmann, Cappe, and Garivier 2012), the authors propose the

Bayes-UCB algorithm which unifies several variants of UCB

algorithms. For both the stochastic and contextual bandit

case, Thompson sampling algorithms, which introduce randomness on the exploration by sampling action parameters

132

off between the exploitation of already known good users

to follow and the exploration of unknown ones. The proposed approach mainly differs from this work for the nonstationnarity assumptions it relies on. This work is considered in the experiments to highlight the performances of the

proposed dynamic approach.

3

3.1

the set of user accounts that maximizes the sum of collected

relevance scores:

T max

ra,t

(1)

(Kt )t=1..T

Data Streams Selection

Context

As we described in the introduction, our goal is to propose a

system aimed at capturing some relevant data from streaming APIs proposed by most of social media. Face to such

APIs, two different types of data sources, users or keywords

(or a mixture of both kinds), can be investigated. In this paper, we focus on users-centered streams. Users being the

producers of the data, following them enables more targeted

data capture than keywords. Following keywords to obtain

data about a given topic for example would be likely to lead

to the collection of a greatly too huge amount of data2 , which

would imply very important post-filtering costs. Moreover,

considering user-centered streams allows a larger variety of

applications, with author-related aspects, and more complex topical models, than focusing on keywords-centered

streams.

Given that APIs usually limit the ability to simultaneously

follow users’ activity to a restricted number of accounts, the

aim is to dynamically select users that are likely to publish

relevant content with regard to a predefined need. Note that

the proposed approach would also be useful in the absence

of such restriction, due to the tremendous amount of data

that users publish on main social media. The major difficulty

is then to efficiently select user accounts to follow given the

huge amount of users that post content and that at the beginning of the process, no prior information is known about

potential sources of data. Moreover, even if some specific

accounts to follow could be found manually, adapting the

capture to the dynamics of the media appears intractable by

hand. For all these reasons, building an automatic solution

to orient the user’s choices appears more than useful.

To make things concrete, in the rest of the paper we set

up in the case of Twitter. However, the proposed generic

approach remains available for any social media providing

real-time access to its users’ activity.

3.2

t=1 a∈Kt

Note that, in our task, we are thus only focused on getting

the maximal amount of relevant data, the precision of the

retrieved data is not of our matter here. This greatly differs

from usual tasks in information retrieval and followees recommendation for instances.

Relevance scores considered in our data capture process

depend on the information need of the user of the system.

The data need in question can take various forms. For example, one might want to follow the activity of users that are

active on a predefined topic or influent in the sense that their

messages are reposted a lot. They can depend on a predefined function that automatically assigns a score according

to the matching of the content to some requirements (see

section 5 for details).

Whereas (Gisselbrecht et al. 2015) relies on stationarity

assumptions on the relevance score distributions of users for

their task of focused data capture (i.e., relevance scores of

each user account are assumed to be distributed around a

given stationary mean along the whole process), we claim

that this is a few realistic setting in the case of large social

networks and that it is possible to better predict future relevance scores of users according to their current activity. In

other words, with the activity of a user a ∈ K at iteration

t − 1 represented by a d-dimensional real vector za,t , there

exists a function h : Rd → R that explains from za,t the

relevance score ra,t that a user a would obtain if it was followed during iteration t. This correlation function needs to

be learned conjointly with the iterative selection process.

However, in our case, maximizing the relevance scores as

defined in formula 1 is constrained in some different ways:

• Relevance scores ra,t are only defined for followed accounts during iteration t (i.e. for every a ∈ Kt ), others are

unknown;

• Context vectors za,t are only observed for a subset of

users Ot (i.e., it is not possible to observe the whole activity of the social network).

Constraints on context vectors are due to API restrictions.

Two streaming APIs of Twitter are used to collect data:

• On the one hand, a Sample streaming API furnishes realtime access to 1% of all public tweets. We leverage this

API to discover unknown users and to get an important

amount of contexts vectors for some active users;

• On the other hand, a Follow streaming API provides realtime data published by a subset of the whole set of Twitter

users. This API allows the system to specify a maximal

number of 5000 users to follow. We leverage this API to

capture focused data from selected users.

For users in Ot , their relevance score at next iteration can

be estimated via the correlation function h, which allows

to capture variations of users’ usefulness. For others however, we propose to consider the stationary case, by assuming some general tendency on users’ usefulness. Therefore,

A Constrained Decision Process

As described above, our problem comes down to select, at

each iteration t of the process, a subset Kt of k user accounts

to follow, among the whole set of possible users K (Kt ⊆

K), according to their likelihood of posting relevant tweets

for the formulated information need. Given a relevance score

ra,t assigned to the content posted by user a ∈ Kt during

iteration t of the process (the set of tweets he posted during

iteration t), the aim is then to select at each iteration t over T

2

Note that on some social media such as Twitter, a limitation

of 1% of the total amount of produced messages on the network

is set. Considering streams centered on popular keywords usually

leads to exceed this limitation and then, to ignore some important

messages.

133

cess. At each round t ∈ {1, .., T } of the process, feature

vectors za,t ∈ Rd can be associated with users a ∈ K. The

set of k users selected to be followed during iteration t is denoted by Kt . The reward obtained by following a given user

a during iteration t of the process is denoted by ra,t . Note

that only rewards ra,t from users a ∈ Kt are known, others

cannot be observed.

In the field of contextual bandit problems, the usual linear hypothesis assumes the existence of an unknown vector

β ∈ Rd such that ∀t ∈ {1, .., T } , ∀a ∈ K : E[ra,t |za,t ] =

T

za,t

β (see (Agrawal and Goyal 2012b)). Here, in order to

model the intrinsic quality of every available action (where

an action corresponds in our case to the selection of a user

to follow during the current iteration), we also suppose the

existence of a bias term θa ∈ R for all actions a, such that

finally:

we get an hybrid problem, where observed contexts can be

used to explain variations from estimated utility means.

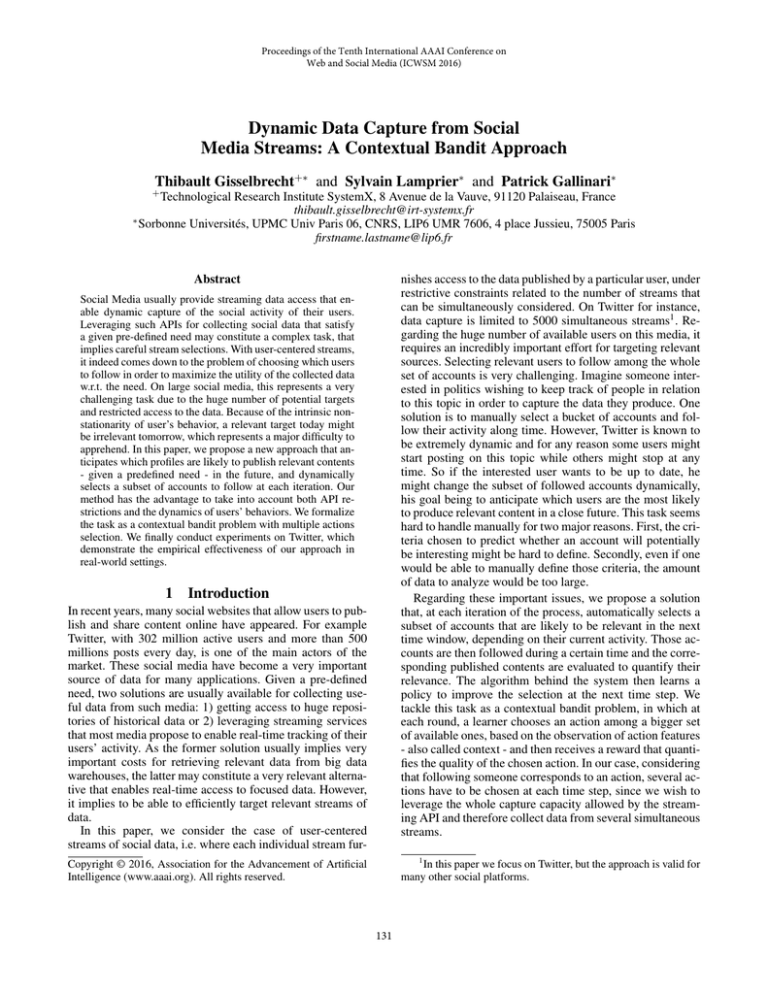

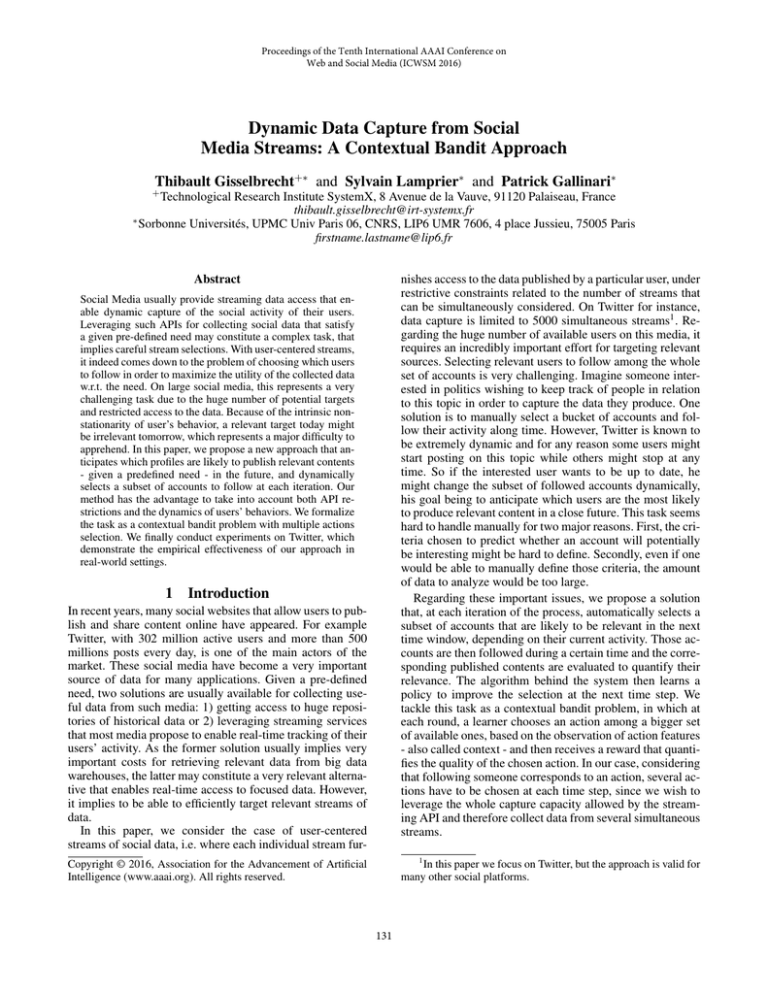

3.3

System Description

Potential users

Feeds / mean updates

Feeds / mean updates

za5,t+1

za1,t

Selection

policy

Selection

policy

za2,t

za7,t+1

Learner

Kt-1

Learner

Kt

za3,t

za1,t+1

Follow API

Ot

Tweets

Kt+1

za3,t+1

za5,t

Follow API

Selection

policy

za4,t+1

za4,t

Ot+1

Tweets

Relevance

Feedback

Follow API

Tweets

Relevance

Feedback

Relevance

Feedback

Iteration t

Sample Streaming API

Iteration t-1

Iteration t+1

T

β + θa

∀t ∈ {1, .., T } , ∀a ∈ K : E[ra,t |za,t ] = za,t

This formulation corresponds to a particular case of the LinUCB algorithm with the hybrid linear reward proposed in (Li

et al. 2010) by taking a constant arm specific feature equal to

1 at every round. Note that individual parameters could have

also been considered in our case but this is not well suited

for the types of problems of our concern in this paper, where

the number of available actions at each round is usually high

(which would imply a difficult learning of individual parameters). For the sake of simplicity, we therefore restrict this

individual modeling to this bias term.

In our case, a major difference with existing works on

contextual bandit problems is that every context is not accessible to the selection policy before it chooses users to

follow. Here, we rather assume that every user a from the

social media owns a probability pa (0 < pa < 1) to reveal

its context3 . The set of users for which the process observes

the features at time t is denoted Ot , whose complement is

denoted Ōt . Then, since Kt ⊂ K and K = Ot ∪ Ōt , selected

users can then belong to the set of users without observed

context Ōt .

Finally, as told above, while our approach would remain

valid in a classical bandit setting where only one action is

chosen at each step, our task requires to consider the case

where multiple users can be selected to be followed at each

iteration (since we wish to exploit the whole capture capacity allowed by the API). At each round, the agent has then

to choose k (k < K) users among the K available ones,

according to observed features and individual knowledge

about them. The reward obtained after a period of capture

then corresponds to the sum of individual rewards collected

from the k users followed during this period.

Figure 1: System illustration.

Figure 1 depicts our system of focused data capture from

social media. The process follows the same steps at each iteration, three time steps are represented on the figure. At the

beginning of each iteration, the selection policy selects a set

of users to follow (Kt ) among the pool of known users K,

according to observations and some knowledge provided by

a learner module. Then, the messages posted by the k selected users are collected via the Follow streaming API. As

we can see in the central part of the illustration, after having followed users in Kt during the current iteration t, the

collected messages are analyzed (by a human or an automatic classifier) to give feedbacks (i.e., relevance scores) to

the learner.

Meanwhile, as a parallel task during each iteration, current activity features are captured from the Sample streaming API. This allows us to feed the pool of potential users

to follow K and to build the set Ot of users with observed

contexts. Context vectors for iteration t are built by considering all messages that the system collected from the Follow

streaming and the Sample streaming APIs for each user during this iteration. Therefore, every user whose at least one

message was included in these collected messages at iteration t is included in Ot+1 (messages from a same author are

concatenated to form za,t+1 , see section 5 for an example of

context construction from messages). Contexts vectors collected during iteration t serve as input for the selection strategy at iteration t + 1.

4

Model and Algorithm

4.2

This section first introduces some settings and backgrounds

for our contextual bandit approach for targeted data capture,

details the policy proposed to efficiently select useful users

to follow, and then describes the general algorithm of our

system.

4.1

(2)

Distribution assumptions

To derive our algorithm, we first perform a maximum a posteriori estimate for the case where every context are available, based on the following assumptions:

• Likelihood: reward scores are identically and independently distributed w.r.t. observed contexts: ra,t ∼

Problem setting

As defined above, we denote by K the set of K known users

that can be follow at each iteration of the data capture pro-

3

The case pa = 1 ∀a ∈ K corresponds to the traditional

contextual bandit.

134

T

N (za,t

β + θa , λa ), with λa the variance of the difference between the reward ra,t and the linear application

T

za,t

β + θa ;

Theorem

For any 0 < δ < 1 and za,t ∈ Rd , denoting

√ 1 −1

α = 2erf (1 − δ) 5 , for every action a after t iterations:

P |E[ra,t |za,t ]− μ̄a −(za,t − z̄a )T β̄| ≤ α σa ≥ (1−δ)

1

with σa =

+ (za,t − z̄a )T A−1

0 (za,t − z̄a )

τa + 1

(5)

• Prior: the unknown parameters are normally distributed:

β ∼ N (0, bId ) and θa ∼ N (0, ρa ), where b and ρa are

two values allowing to control the variance of the parameters and Id is the identity matrix of size d (the size of the

features vectors).

Proof 2 E[ra,t |za,t ]

= (za,t − z̄a )T β + θa + z̄aT β.

By combining equations 2, 3 and 4: E[ra,t |za,t ] ∼

N μ̄a + (za,t − z̄a )T β̄, τa1+1 + (za,t − z̄a )T A−1

0 (za,t − z̄a ) .

The announced result comes from the confidence interval of a

Gaussian.

For the sake of clarity, we set b, ρa and λa equal to 1 for all

a in the following. Note that every results can be extended

to more complex cases.

Proposition 1 Denoting Ta the set of steps when user a

has been chosen in the first n time steps of the process

(Ta = {t ≤ n, a ∈ Kt }, |Ta | = τa ), ca the vector containing rewards obtained by a at iterations it has been followed

(ca = (ra,t )t∈Ta ) and Da the context matrix related to user

T

a (Da = (za,t

)t∈Ta ), the posterior distribution of the unknown parameters after n time steps, when all contexts are

available, follows:

(3)

β ∼ N β̄, A−1

0

1

(4)

θa + z̄aT β ∼ N μ̄a ,

τa + 1

This formula is directly used to find the so-called Upper

Confidence Bound, which leads to choose the k users having

the highest score values sa,t at round t, with:

sa,t = μ̄a + (za,t − z̄a )T β̄ + α σa

(6)

We recall that this formula can be used in the traditional

contextual bandit problem, where every context is available.

In the next section, we propose a method to adapt it to our

specific setting of data capture, where most of contexts are

usually hidden from the agent.

4.3

With:

A0 = Id +

K

(τa + 1)Σ̄a

bT0 =

a=1

Σ̄a =

(τa + 1)ξ¯a

cT Da

ξ¯a = a

− μ̄a z̄aT

τa + 1

β̄ =

Selection scores derived in the previous section are defined

for cases where all context vectors are available for the agent

at each round. However, in our case, only users belonging

to the subset Ot show their context. This particular setting

requires to decide how judging users whose context is unknown. Moreover, it also implies questions about knowledge

updates when some users are selected without having been

(contextually meaning) observed.

Even though it is tempting to think that the common parameter β and the user specific ones θa can be learned independently, in reality they are complexly correlated as formula 3 and 4 highlight it. It is important to emphasize that

to keep probabilistic guarantees, parameters should only be

updated when a chosen user was also observed, i.e. when it

belongs to Kt ∩Ot . So, replacing the previous Ta by Taboth =

{t ≤ n, a ∈ Kt ∩ Ot } (we keep the notation |Taboth | = τa )

allows us to re-use the updates formula of Proposition 1.

However, computing the selection score for a user whose

context is hidden from the agent cannot be done via equation

6 since za,t is unknown. To deal with such a case we propose

to use an estimate of the mean distribution of feature vectors

of each user. The assumption is that, while non-stationarity

can be captured when contexts are available, different users

own different mean reward distributions, and that it can be

useful to identify globally useful users.

New notations:

• We denote the set of steps when user a revealed its context vector after n steps by Taobs = {t ≤ n, a ∈ Ot } with

|Taobs | = na .

a=1

DaT Da

− z̄a z̄aT

τa + 1

T

A−1

0 b0

K

μ̄a =

ra,t

t∈Ta

τa + 1

z̄a =

za,t

t∈Ta

τa + 1

Proof 1 The full derivation is available at 4 . It proceeds as follows: denoting D = ((ra1 ,1 , za1 ,1 ), ...(ran ,n , zan ,n )) and using

Bayes’s rule we have:

p(β, θ1 ...θK |D)

∝ p(D|β, θ1 ...θK )p(β) K

a=1 p(θa )

−1

2

T

n (ra ,t −za

β−θa )2

t

t ,t

λa

T

+βbβ+

K θ2

a

ρa

t=1

a=1

.

∝e

Rearranging the terms and using matrix notations leads to:

...θK |D)

p(β, θ1

−1

2

β T A0 β−2bT

0 β+

K

T

(τa +1)(θa +z̄a

β−μ̄a )2

.

∝e

The two distributions are directely derived from this formula.

a=1

Dealing with Hidden Contexts

All the parameters above do not have to be stored and can

be updated efficiently as new learning example comes (see

algorithm 1).

erf −1 is the

x −t2

2/π 0 e

dx.

5

4

http://www-connex.lip6.fr/∼lampriers/ICWSM2016supplementaryMaterial.pdf

135

inverse

error

function,

erf (x)

=

• The empirical mean of the feature vector for user a is de1 za,t . Note that ẑa is difnoted ẑa , with ẑa =

na t∈Taobs

ferent from z̄a since the first is updated every time the

context za,t is observed while the second is only updated

when user a is observed and played in the same iteration.

sa,t = μ̄a + (zˆa − z¯a )T β̄ + α σ̂a +

• Without loss of generality, assume that β is bounded by

M ∈ R+∗ , i.e. ||β|| ≤ M , where || || stands for the L2

norm on Rd .

• For any user a, at any time t the context vectors za,t ∈

Rd are iid sampled from an unknown distribution with

finite mean vector E[za ] and covariance matrix Σa (both

unknown).

4.4

Contextual Data Capture Algorithm

The pseudo-code of our contextual data capture algorithm

from social streams is detailled in algorithm 1.

The algorithm starts by initializing the set of observed

users Ot from the Sample API during a time period of L.

Then, for each iteration t over T , it proceeds as follows:

Note that the requirement ||β|| ≤ M can be satisfied

through proper rescaling on β.

Proposition 2 The excepted value of the mean reward of

user a with respect to its features distribution, denoted E[ra ]

satisfies after t iterations of the process:

1. Lines 10 to 15: every user from Ot that does not belong

to the pool of potential users to follow is added to K, in

the limit of newM ax new arms at each iteration to cope

with cases where too many new arms are discovered each

iteration (in that case only the newM ax first new ones

are considered, others are simply ignored). This allows to

avoid over-exploration for such cases;

(7)

= E[za ] β + θa

T

= (zˆa − z¯a )T β + θa + z¯a T β + (E[za ] − zˆa )T β

Theorem 2 Given 0 < δ < 1 and 0 < γ < 12 , denoting

√

α = 2erf −1 (1 − δ), for every user a after t iterations of

the process:

1

P |E[ra ] − μ̄a − (zˆa − z¯a ) β̄| ≤ α σ̂a + γ ≥

na

Ca

(1 − δ) 1 − 1−2γ

na

1

with σ̂a =

+ (zˆa − z¯a )T A−1

0 (zˆa − z¯a )

τa + 1

(9)

Given that γ > 0, and that each user a has a probability

0 < pa < 1 to reveal its context at each time step t, the

extra-exploration term 1/nγa tends to 0 as the number of observations of user a gets larger. The above score then tends

to a classical LinUCB score (as in equation 6) in which the

context vector za,t is replaced by its empirical mean zˆa , as t

increases.

Main assumptions:

T

β + θa ]

E[ra ] = E[za,t

1

nγa

2. Lines 16 to 20: updates of empirical context means ẑa

(according to data collected from user a at the previous

iteration) and observation counts na for every observed

user a in Ot ;

3. Line 22: parameters β̄ of the estimation model are updated

according to current counts;

T

4. Lines 23 to 37: selection scores are computed for every

user in the pool K. As detailed in the previous section,

selection scores are computed differently depending on

the availability of the current context of the corresponding user. Note also that the selection scores of new users

added to the pool during the current iteration are set to

+∞ in order to enforce the system to choose them at least

once and initialize their empirical mean reward;

(8)

Where Ca is a positive constant specific to each user.

Proof 3 The full proof is available at URL4 , we only give the main

steps here. Denoting m̂a = (zˆa − z¯a )T β̄ + μ̄a , σ̂a2 = τa1+1 +(zˆa −

z¯a )T A−1

0 (zˆa − z¯a ), Xa the random variable such that Xa = (zˆa −

z¯a )T β+θa +z¯a T β and using Cauchy Schwarz inequality, we have:

|E[ra ]−m̂a |

zˆa ||M

≤ |Xaσ−am̂a | + ||E[za ]−

Then, using the Gaussian

σa

σa

property of Xa , we show that: P |Xaσ−am̂a | ≤ α = 1 − δ, with

√

α = 2erf −1 (1 − δ). On the other hand, with Chebyshev inequality,

we prove that:

zˆa ||M

1

M2d

≥

1

−

≤

T

race(Σ

)

P ||E[za ]−

γ

a

1−2γ

σˆa

σˆa na

na

Finally, combining the two previous results proves the theorem.

5. Line 38: selection of the k users with the best selection

scores;

6. Lines 39 to 43: simultaneous capture of data from the

Sample and Follow Apis during a time period of L. The

Follow API is focused on the subset of k selected users

Kt ;

7. Lines 44 to 55: computation of the rewards of users in

Kt according to the data collected from the Follow API.

Then, using users in Kt ∩ Ot , both the global model parameters (A0 and b0 ) and arm specific ones (τa , μ̄a and

z̄a ) are updated;

For γ < 12 , the previous probability tends to 1 − δ as the

number of observations of user a increases (as in equation

5). Then, the inequality above gives a reasonably tight UCB

for the expected payoff of user a, from which a UCB-type

user-selection strategy can be derived. At each trial t, if the

context of user a has not been observed (i.e. a ∈

/ Ot ), set:

8. Line 57: Update of the set of observed users by gathering

all users whose at least one post has been collected from

both APIs during the current iteration.

136

5

Algorithm 1: Contextual Data Capture Algorithm

1

2

3

4

5

6

7

8

9

10

11

12

13

14

Classical bandit algorithms usually come with an upper

bound of the regret, which corresponds to the difference between the cumulative reward obtained with an optimal policy and the bandit policy in question. In our case, an optimal

strategy corresponds to a policy for which the agent would

have a perfect knowledge of the parameters β and θa (for

all a) plus an access to all the features at each step. However, in our case, in the absence of an asymptotically exact

T

β + θa , due to the fact that some contexts

estimate of za,t

za,t are hidden from the process, it is unfortunately not possible to show a sub-linear upper-bound of the regret. Nevertheless, we claim that the algorithm we proposed to solve

our problem of contextual data capture from social media

streams well behaves on average. To demonstrate this good

behavior, we present various offline and online experiments

in real-world scenarios. Our algorithm is the first to tackle

the case of bandits with partially hidden contexts, the aim

is to demonstrate its feasibility for constrained tasks such as

data capture from social media streams.

Input: k, T , α, γ, L, maxN ew

A0 ← Id×d (identity matrix of dimension d);

b0 ← 0d (zero vector of dimension d);

K ← ∅;

Ω1 ← Data collected from Sample API

during a period of L;

O1 ← Authors of at least one post in Ω1 ;

for t ← 1 to T do

nbN ew ← 0;

for a ∈ Ot do

if a ∈

/ K and nbN ew < maxN ew then

nbN ew ← nbN ew + 1;

K ← K ∪ {a};

τa ← 0; na ← 0; sa ← 0; ba ← 0d ;

μ̄a ← 0; z̄a ← 0d ; ẑa ← 0d ;

15

16

17

18

19

20

21

22

23

24

25

26

end

if a ∈ K then

Observe context za,t from Ωt ∪ Ψt ;

Update ẑa w.r.t. za,t ;

na ← na + 1;

end

end

else

1

τa +1

+ (zˆa − z¯a )T A−1

0 (zˆa − z¯a );

30

σ̂a ←

31

sa,t ← μ̄a + (zˆa − z¯a )T β̄ + α σ̂a +

32

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

1

γ

na

;

end

end

else

sa,t ← ∞;

end

end

Kt ←

arg max

K̂⊆K,|K̂|=k

Experimental Setup

Beyond a random policy that randomly selects the set of

users Kt at each iteration, we compare our algorithm to

two bandit policies well fitted for being applied for the

task of data capture: CUCB and CUCBV respectively proposed in (Qin, Chen, and Zhu 2014) and (Gisselbrecht et al.

2015). These algorithms do not take features into account

and perform stationary assumptions on reward distributions

of users. Compared to CUCB, CUCBV adds the variance

of reward distributions of users in the definition of the confidence intervals. This has been shown to behave well in cases

such as our task of data capture, where a great variability can

be observed due to the possible inactivity of users when they

are selected by the process. At last, we consider a naive version of our contextual algorithm that preferably selects users

with an observed context (it only considers users in Ot when

this set contains enough users), rather than getting the ability

of choosing users in Ōt as it is the case in our proposal.

For all of the reported experiments we set: 1) The exploration parameter to α = 1.96, which corresponds to a 95 percent confidence interval on the estimate of the expected reward when contexts are observed; 2) The reduction parameter of confidence interval for unknown contexts to γ = 0.25,

which allows a good trade-off between the confidence interval reduction rate and the probability of this interval; 3) The

number of new users that can be added to the pool at each

iteration to newM ax = 500, to avoid over-exploration, especially for online experiments, when many new users can

be discovered from the Sample API;

sa,t ← μ̄a + (za,t − z̄a )T β̄ + α σa ;

27

37

5.1

end

β̄ ← A−1

0 b0 ;

for a ∈ K do

if τa > 0 then

if a ∈ Ot then

1

T −1

σa ←

τa +1 + (za,t − z̄a ) A0 (za,t − z̄a );

28

29

33

34

35

36

Experiments

sa,t ;

a∈K̂

during a time interval of L do

Ωt ← Collect data from the Sample API;

Ψt ← Collect data from the Follow API

focused on the users in Kt ;

end

for a ∈ Kt do

Compute ra,t from Ψt ;

if a ∈ Ot then

A0 ← A 0 + b a b T

a /(τa + 1);

b0 ← b0 + ba sa /(τa + 1);

τa ← τa + 1;

sa ← sa + ra,t ; μ̄a ← sa /(τa + 1);

ba ← ba + za,t ; z̄a ← ba /(τa + 1);

T

− b a bT

A0 ← A0 + za,t za,t

a /(τa + 1);

b0 ← b0 + ra,t za,t − ba sa /(τa + 1)

5.2

Offline experiments

Datasets In order to be able to test different policies and

simulate a real time decision process several times, we first

propose a set of experiments on offline datasets:

end

end

Ωt+1 ← Ωt ; Ψt+1 ← Ψt ;

Ot+1 ← Authors of at least one post in Ωt+1 ∪ Ψt+1 ;

• USElections: dataset containing a total of 2148651 messages produced by 5000 users during the ten days preceding the US presidential elections in 2012. The 5000 cho-

58 end

137

sen accounts are the first ones who used either “Obama”,

“Romney” or “#USElections”.

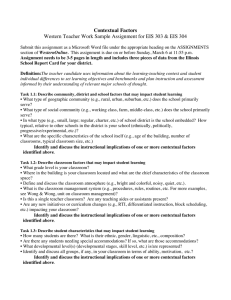

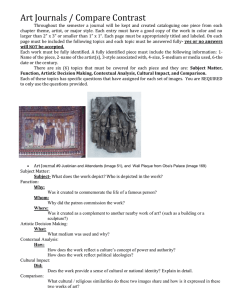

Results For space reasons and brevity, we only report results for k = 100, L = 2min (which means that every 2

minutes the algorithms select 100 users to follow) for the

USElections dataset and the four rewards defined above. For

the Libya dataset, we only show the results for the politics

reward. Similar tendencies were observed for different values of k and L.

Figures 2 and 3 represent the evolution of cumulative

reward for different policies and rewards, respectively for

the USElections and the Libya dataset. For contextual algorithms, their naive versions that select observed users in

priority are given with same symbols without solid line.

First, it should be noticed that every policy performs better than the Random one, which is a first element to assert the

relevance of bandit algorithms for the task in concern. Second, we notice that CUCBV performs better than CUCB,

which confirms the results obtained in (Gisselbrecht et al.

2015) on a similar task of focused data capture.

More interesting is the fact that when every context is observable, our contextual algorithm performs better than stationary approaches CUCB and CUCBV. This result shows

that we are able to better anticipate which user is going to

be the more relevant at the next time step, for a particular information need, given what he said right before. This

also confirms the usual non-stationarity behavior of users.

For instance, users can talk about science during the day at

work while being more focused on sports when they come

back home. Considering contexts also allows one to converge faster towards interesting users since every user account share the same β parameter.

Results show that even for low probabilities of context observation p, our contextual policy behaves greatly better than

non-contextual approaches, which empirically validates our

approach: it is possible to leverage contextual information

even if a large part of this information is hidden from the

system. In particular, for the Libya dataset where no significant difference between the two non-contextual CUCB and

CUCBV is noteworthy, our algorithm seems much more appropriate. Moreover, for a fixed probability p, the naive versions of the contextual algorithm offer lower performances

than the original ones. By giving the ability of selecting

users even if their context is unknown, we allow the algorithm to complete its selection by choosing users whose average context corresponds to a learned profile. If no user in

Ot seems currently relevant, the algorithm can then rely on

users with good averaged intrinsic quality. For k = 100, the

average number of selected users for which the context was

observed at each time step is 43 for p = 0.1 and 58 for

p = 0.5, which confirms that the algorithm does not always

select users in Ot .

• Libya: dataset containing 1211475 messages from 17341

users. It is the result of a three-months capture from the

F ollow API using the keyword “Libya”.

In the next paragraph, we describe both how we transform

messages in feature vectors and which reward function we

use to evaluate the quality of a message.

Model definition

Context model The content obtained by capturing data

from a given user a at time step t is denoted ωa,t (if we

get several messages for a given author, these messages are

concatenated). Given a dictionary of size m, messages can

be represented as m dimensional bag of word vectors. However the size m might cause the algorithm to be computationally inefficient since it requires the inversion of a matrix

of size m at each iteration. In order to reduce the dimension of those features, we used a Latent Dirichlet Allocation

method (Blei, Ng, and Jordan 2003), which aims at modeling each message as a mixture of topics. However, due

to the short size of messages, that standard LDA may not

work well on Twitter (Weng et al. 2010). To overcome this

difficulty, we choose the approach proposed in (Hong and

Davison 2010), which aggregates tweets of a same user in

one document. We choose a number of d = 30 topics and

learn the model on the whole corpus. Then, if we denote by

F : Rm −→ Rd the function that, given a message returns

its representation in the topic space, the features of user a at

time t is za,t = F (ωa,t−1 ).

Reward model We trained a SVM topic classifier on the

20 Newsgroups dataset in order to rate each content. For our

experiments, we focus on 4 classes to test: politics, religion,

sport, science. We propose to consider the reward associated to some content as the number of times it has been retweeted (re-posted on Tweeter) by other users if it belongs to

the specified class according to our classifier, or 0 otherwise.

Finally, if a user posted several messages during an iteration,

his reward ra,t corresponds to the sum of the individual rewards obtained by the messages he posted during iteration

t. This instance of reward function corresponds to a task of

seeking to collect messages, related to some desired topic,

that will have a strong impact on the network (note that we

cannot directly get high-degree nodes in the user graph due

to API restrictions).

5.3

Experimental Settings In order to obtain generalizable

results, we test different values for parameters p, the probability for every context to be observed, k, the number of users that can be followed simultaneously, and L,

which stands for the duration of an iteration. More precisely, we experienced every possible combination with

p ∈ {0.1, 0, 5, 1.0}, k ∈ {50, 100, 150} and L ∈

{2min, 3min, 6min, 10min}.

Online experiments

Experimental Settings We propose to consider an application of our approach on a real-world online Twitter scenario, which considers both the whole social network and

the API constraints. For these experiments, we use the full

potential of Twitter APIs, namely 1% of all public tweets for

the Sample API that runs in background, and 5000 users simultaneously followed (i.e., k = 5000) for the Follow API.

138

4000

10000

Cumulative Reward

Cumulative Reward

5000

25000

Random

CUCB

CUCBV

Contextual p=0.1

Contextual p=0.5

Contextual p=1.0

Contextual Naive p=0.1

Contextual Naive p=0.5

3000

8000

18000

Random

CUCB

CUCBV

Contextual p=0.1

Contextual p=0.5

Contextual p=1.0

Contextual Naive p=0.1

Contextual Naive p=0.5

20000

6000

4000

15000

Random

CUCB

CUCBV

Contextual p=0.1

Contextual p=0.5

Contextual p=1.0

Contextual Naive p=0.1

Contextual Naive p=0.5

16000

14000

Cumulative Reward

12000

Random

CUCB

CUCBV

Contextual p=0.1

Contextual p=0.5

Contextual p=1.0

Contextual Naive p=0.1

Contextual Naive p=0.5

6000

Cumulative Reward

7000

10000

12000

10000

8000

6000

2000

2000

1000

0

4000

5000

2000

0

1000

2000

3000

4000

5000

Time step

6000

7000

8000

0

9000

0

1000

2000

3000

4000

5000

Time step

6000

7000

8000

9000

0

0

1000

2000

3000

4000

5000

Time step

6000

7000

8000

9000

0

0

1000

2000

3000

4000

5000

Time step

6000

7000

8000

9000

Figure 2: Cumulative Reward w.r.t time step for different policies on the USElections dataset. From left to right, the considered

topics for reward computations are respectively: politics, religion, science and sport.

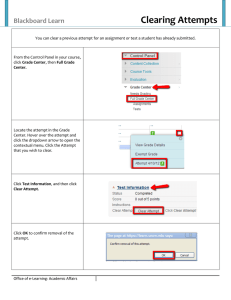

In order to analyze the behavior of the experimented policies during the first iterations of the capture, we also plot on

the right of the figure a zoomed version of the same curves

on the first 150 time steps. At the beginning of the process,

the Static policy performs better than every others, which

can be explained by two reasons: 1) bandit policies need

to select every user at least once in order to initialize their

scores and 2) users who are part of the Static policy’s pool

are supposed to be relatively good targets considering the

way we chose them. Around iteration 80, both CUCBV and

our Contextual algorithm become better than Static policy,

which corresponds to the moment they start to trade off between exploration and exploitation. Then, after a period of

around 60 iterations (approximately between the 80th and

the 140th time step) where CUCBV and our Contextual approach behave similarly, the latter then allows the capture

process to collect greatly more valuable data for the specified need. This is explained by the fact that our Contextual

algorithm requires a given amount of iterations to learn the

correlation function between contexts and rewards and then

taking some advantage of it. Note the significant changes in

the slope of the cumulative reward curve of our Contextual

algorithm, which highlight the ability of the algorithm to be

reactive to the environment changes. Finally, the number of

times every user has been selected by our Contextual algorithm is more spread than with a stationary algorithm such

as CUCBV, which confirms the relevance of our dynamic

approach. To conclude, in both offline and online settings,

our approach proves very efficient at dynamically selecting

user streams to follow for focused data capture tasks.

800

Random

CUCB

CUCBV

Contextual p=0.1

Contextual p=0.5

Contextual p=1.0

Contextual Naive p=0.1

Contextual Naive p=0.5

700

Cumulative Reward

600

500

400

300

200

100

0

0

2000

4000

6000

8000

Time step

10000

12000

14000

16000

Figure 3: Cumulative Reward w.r.t time step for different

policies on the Libya dataset and the politics reward.

At each iteration, the selected users are followed during

L = 15minutes. The messages posted by these users during this interval are evaluated with the same relevance function as in the offline experiments, using the politics topic.

We also use an LDA transformation to compute the context

vectors.

Given Twitter policy, every experiment requires one Twitter Developer account, which limits the number of strategies

we can experiment. We chose to test the four following ones:

our contextual approach, CUCBV, Random and a another

one called Static. This Static policy follows the same 5000

accounts at each round of the process. Those 5000 accounts

have been chosen by collecting all tweets provided during

24 hours by the Sample streaming API, evaluating them and

finally taking the 5000 users with the greatest cumulative

reward. For information, some famous accounts such that

@dailytelegraph, @Independent or @CNBC were part of

them.

6

Conclusion

In this paper, we tackled the problem of capturing relevant

data from social media streams delivered by specific users of

the network. The goal is to dynamically select useful userstreams to follow at each iteration of the capture, with the

aim of maximizing some reward function depending on the

utility of the collected data w.r.t. the specified data need. We

formalized this task as a specific instance of the contextual

bandit problem, where instead of observing every feature

vectors at each iteration of the process, each of them has

a certain probability to be revealed to the learner. For solving this task, we proposed an adaptation of the popular con-

Results Figure 4 on the left represents the evolution of

the cumulative reward of a two-weeks-long run for the four

tested policies. From these curves, we note the very good

behavior of our algorithm on a real-world scenario, as the

amount of rewards it accumulates grows greatly much more

quickly than other policies, especially after the 500 first iterations of the process. After these first iterations, our algorithm appears to have acquired a good knowledge on the

reward distributions, w.r.t. observed contexts and over the

various users of the network.

139

700000

7000

Random

CUCBV

Contextual

Static

600000

5000

Cumulative Reward

Cumulative Reward

500000

400000

300000

200000

4000

3000

2000

100000

0

Random

CUCBV

Contextual

Static

6000

1000

0

200

400

600

800

Time step

1000

1200

0

1400

0

20

40

60

80

Time step

100

120

140

160

Figure 4: Cumulative Reward w.r.t time step for different policies on the live experiment. The figure represents the whole

experiment on the left and a zoom on the 150 first steps on the right.

textual bandit algorithm LinU CB to the case where some

contexts are hidden at each iteration. Although not any sublinear upper-bounds of the regret can be guaranteed, because

of the great uncertainty induced by the hidden contexts, our

algorithm behaves well even when the proportion of hidden

contexts is high, thanks to some assumptions made on the

hidden contexts and a well fitted exploration term. This corresponds to an hybrid stationary / non stationary bandit algorithm. Experiments show the very good performances of our

proposal to collect relevant data under real-world capture

scenarios. It opens the opportunity for defining new kinds

of online intelligent strategies for collecting and tracking focused data from social media streams.

Chu, W.; Li, L.; Reyzin, L.; and Schapire, R. E. 2011. Contextual bandits with linear payoff functions. In AISTATS.

Colbaugh, R., and Glass, K. 2011. Emerging topic detection

for business intelligence via predictive analysis of ’meme’ dynamics. In AAAI Spring Symposium.

Fogaras, D.; Rácz, B.; Csalogány, K.; and Sarlós, T. 2005. Towards scaling fully personalized pageRank: algorithms, lower

bounds, and experiments. Internet Math.

Gisselbrecht, T.; Denoyer, L.; Gallinari, P.; and Lamprier, S.

2015. Whichstreams: A dynamic approach for focused data

capture from large social media. In ICWSM.

Gupta, P.; Goel, A.; Lin, J.; Sharma, A.; Wang, D.; and Zadeh,

R. 2013. Wtf: The who to follow service at twitter. In WWWW.

Hannon, J.; Bennett, M.; and Smyth, B. 2010. Recommending

twitter users to follow using content and collaborative filtering

approaches. In RecSys.

Hong, L., and Davison, B. D. 2010. Empirical study of topic

modeling in twitter. In ECIR.

Kaufmann, E.; Cappe, O.; and Garivier, A. 2012. On bayesian

upper confidence bounds for bandit problems. In ICAIS.

Kaufmann, E.; Korda, N.; and Munos, R. 2012. Thompson

sampling: An asymptotically optimal finite-time analysis. In

ALT.

Kohli, P.; Salek, M.; and Stoddard, G. 2013. A fast bandit algorithm for recommendation to users with heterogenous tastes.

In AAAI.

Lage, R.; Denoyer, L.; Gallinari, P.; and Dolog, P. 2013. Choosing which message to publish on social networks: A contextual

bandit approach. In ASONAM.

Lai, T., and Robbins, H. 1985. Asymptotically efficient adaptive allocation rules. Advances in Applied Mathematics 6(1):4

– 22.

Li, L.; Chu, W.; Langford, J.; and Schapire, R. E. 2010. A

contextual-bandit approach to personalized news article recommendation. In WWW.

Li, R.; Wang, S.; and Chang, K. C.-C. 2013. Towards social data platform: Automatic topic-focused monitor for twitter

stream. Proc. VLDB Endow.

Qin, L.; Chen, S.; and Zhu, X. 2014. Contextual combinatorial

bandit and its application on diversified online recommendation. In SIAM.

Weng, J.; Lim, E.-P.; Jiang, J.; and He, Q. 2010. Twitterrank:

Finding topic-sensitive influential twitterers. In WSDM.

Acknowledgments

This research work has been carried out in the framework of

the Technological Research Institute SystemX, and therefore

granted with public funds within the scope of the French

Program “Investissements d’Avenir”. Part of the work was

supported by project Luxid’x financed by DGA on the Rapid

program.

References

Agrawal, S., and Goyal, N. 2012a. Analysis of thompson sampling for the multi-armed bandit problem. In COLT.

Agrawal, S., and Goyal, N. 2012b. Thompson sampling for

contextual bandits with linear payoffs. CoRR.

Audibert, J.-Y., and Bubeck, S. 2009. Minimax policies for

adversarial and stochastic bandits. In COLT.

Audibert, J.-Y.; Munos, R.; and Szepesvari, C. 2007. Tuning

bandit algorithms in stochastic environments. In ALT.

Auer, P.; Cesa-Bianchi, N.; and Fischer, P. 2002. Finite-time

analysis of the multiarmed bandit problem. Mach. Learn.

Blei, D. M.; Ng, A. Y.; and Jordan, M. I. 2003. Latent dirichlet

allocation. J. Mach. Learn. Res.

Buccapatnam, S.; Eryilmaz, A.; and Shroff, N. B. 2014.

Stochastic bandits with side observations on networks. In SIGMETRICS.

Chapelle, O., and Li, L. 2011. An empirical evaluation of

thompson sampling. In NIPS. Curran Associates, Inc.

Chen, W.; Wang, Y.; and Yuan, Y. 2013. Combinatorial multiarmed bandit: General framework and applications. In ICML.

140