Predicting Crowd-Based Translation Quality with Language-Independent Feature Vectors

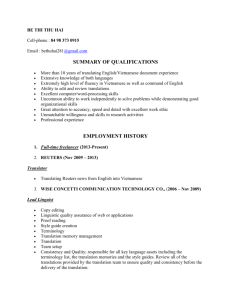

Human Computation
AAAI Technical Report WS-12-08

Niklas Kilian, Markus Krause, Nina Runge, Jan Smeddinck
University of Bremen
nkilian@tzi.de, phateon@tzi.de, nr@tzi.de, smeddinck@tzi.de

Copyright © 2012, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

Abstract

Research over the past years has shown that machine translation results can be greatly enhanced with the help of mono- or bilingual human contributors, e.g. by asking humans to proofread or correct the output of machine translation systems. However, it remains difficult to determine the quality of individual revisions. This paper proposes a method to determine the quality of individual contributions by analyzing task-independent data, such as completion time and number of keystrokes. An initial evaluation showed promising F-measure values larger than 0.8 for support vector machine and decision tree based classifications of a combined test set of Vietnamese and German translations.

Keywords: human computation, crowdsourcing, machine translation, answer prediction, rater reliability analysis

Introduction

Over the past years, research has shown that it is possible to enhance the quality of machine translation (MT) results by applying human computation. Targeted paraphrasing (Resnik et al. 2010) and iterative collaboration between monolingual users (Hu and Bederson 2010) are just two examples. Another common approach is to ask mono- or bilingual speakers to proofread and correct MT results. When acquiring those corrections, e.g. through crowd services like Amazon Mechanical Turk (https://www.mturk.com), a quality measurement is required in order to identify truthful contributions and to exclude low-quality contributors (Zaidan 2011). Common methods, such as creating gold standard questions to verify the quality of contributors, do not work reliably for natural language tasks, because the number of possible translations is too large. Automated quality measurement systems would require large amounts of individual corrections, which could then be used as a set of correct translations to test against. However, this would be a time-consuming and often very costly process, since multiple contributors are required to validate just one “correct” translation.

This paper proposes a method that classifies individual answers at submission time. It does not need any information about the source language and requires only very limited information about the target language. The algorithm calculates a vector of task-independent variables for each answer and uses a machine learning algorithm to classify the answers.

Language-Independent Quality Control

The proposed algorithm uses thirteen task-independent variables, for example: the number of keystrokes, the completion time, the number of added/removed/replaced words and the Levenshtein distance between the MT output and the user correction. The vector also includes more complex calculations using metadata acquired through the Google Translate University API. Translation costs, word alignment information and alternative translations are included in these calculations. In addition, a sentence complexity measurement of the target sentence is used, which is the only directly language-dependent variable included in the feature vector.

The feature vector is calculated after analysing and comparing the initial translation and the corrected version. It is then used to train a machine learning algorithm. The following implementations of machine learning algorithms were tested as part of the evaluation process: a support vector machine (LibSVM), common Naïve Bayes, a decision tree (C4.5 algorithm) and a simple feedforward neural network (Multi-Layer Perceptron). Standard parameters were used for all but the support vector machine, which was set to use the RBF kernel with parameters optimized for the given training set. Because some feature vector elements are time-based or count actions performed by the contributor, normalization was not applied.

For initial tests, two popular Wikipedia articles were chosen: a German article dealing with the “Brandenburg Gate” and a Vietnamese article about the “City of Hanoi”. Native speakers of German and Vietnamese prepared a test set for each language. For each set, the first 150 sentences were taken from the respective article. Headlines, incomplete sentences and sentences that contained words or entire phrases in a strong dialect were removed from the test set. The remaining sentences were then ordered by word count and the longest 20% were removed. The remaining sentences still had an average length of 15 words. After the pre-processing, the two test sets were scaled down to 100 sentences each by randomly removing sentences. The purpose of this final step was to ensure better comparability between the two tests.

Both the Vietnamese and the German sentences were then translated into English using Google Translate. A correction for each of the resulting translations was acquired through Amazon Mechanical Turk (via Crowdflower), which produced realistic corrections of varying quality. Each Turker was allowed to work on up to six corrections, and all submissions were accepted without any quality measurements (e.g. gold standard questions, country exclusion) in place.

All 200 submissions were then manually assigned to one of two classes by multilingual speakers of both source and target language. The first class represents all corrections made by a contributor who enhanced the translation in a reasonable way. One example of this class is the sentence “The lost previous grid was renewed for a thorough restoration 1983/84”, which was corrected to “The previously lost grid was renewed for a thorough restoration in 1983/84.” The second class contains invalid submissions and low-quality corrections. Whenever there was potential to correct the MT result, but no or only minor changes were made, the submission was considered invalid. If a contributor obviously lowered the quality, or if the changes made were insufficient, the submission was considered invalid as well. Typical examples of this class are corrections where several words were swapped randomly, or where parts of the sentence were deleted or added repeatedly at the end of the sentence. The ratio of acceptable to unacceptable units was 63/37 for the German test set and 59/41 for the Vietnamese test set.

In a final step, each of the four machine learning implementations was trained and evaluated with a) only the German test set, b) only the Vietnamese test set, and c) both test sets combined. 10-fold cross validation was used for all evaluations. The F-measure was calculated for each of the four machine learning techniques and each language, as well as for the combined test set containing both languages. Across all three test cases, the resulting numbers show the potential of the proposed method (see Figure 1).

Figure 1 - Evaluation results show the potential of the proposed method. Based on the F-measure value, the decision tree (DT) performed best, followed by the support vector machine (SVM), the feedforward neural network (MLP) and Naïve Bayes (NB).

Discussion & Future Work

Results suggest that the pruned decision tree performed best in all three test cases. It shows acceptable results due to low and balanced false positive and false negative rates.
The support vector machine has a similar F-measure, but showed a high number of false positives during the evaluation process. It is therefore less suited to the task of sorting out low-quality submissions. Although there are notable differences between the German and Vietnamese test results, the outcomes of the combined test indicate the language-independence of the proposed algorithm. Whether the differences between German and Vietnamese can be attributed to the slightly different ratio between “good” and “bad” units in the training sets requires further investigation. In addition, future work on the topic will need to carefully consider the suitability of the individual features, removing those that have no positive impact on the classification results. Considering that this is exploratory work with few training samples, the results look very promising. Further evaluations with more advanced samples (and possibly with fewer pre-processing steps in place) will shed light on the scalability of this language-independent approach to translation quality prediction.

Acknowledgments

This work was partially funded by the Klaus Tschira Foundation through the graduate school “Advances in Digital Media”.

References

Hu, C., & Bederson, B. (2010). Translation by iterative collaboration between monolingual users. Proceedings of Graphics Interface 2010.

Resnik, P., Quinn, A., & Bederson, B. B. (2010). Improving Translation via Targeted Paraphrasing. Computational Linguistics, (October), 127-137.

Zaidan, O. (2011). Crowdsourcing translation: professional quality from non-professionals. Proceedings of ACL 2011.
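
Illustrative sketch of the feature computation

The Language-Independent Quality Control section lists the number of keystrokes, the completion time, the number of added/removed/replaced words and the Levenshtein distance among the thirteen task-independent variables. The following Python sketch shows how these six values might be assembled into a vector. It is not the authors' implementation: the function names are hypothetical, the paper does not say how word-level changes were counted (here a `difflib` alignment over whitespace tokens is assumed), and the features derived from translation metadata and sentence complexity are omitted.

```python
from difflib import SequenceMatcher


def levenshtein(a: str, b: str) -> int:
    """Character-level edit distance (insertions, deletions, substitutions)."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def word_edit_counts(mt_output: str, correction: str) -> dict:
    """Count added, removed and replaced words between two sentences.

    Assumption: words are whitespace-separated tokens and changes are
    taken from a difflib alignment; the paper does not specify this.
    """
    src, dst = mt_output.split(), correction.split()
    counts = {"added": 0, "removed": 0, "replaced": 0}
    matcher = SequenceMatcher(None, src, dst, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "insert":
            counts["added"] += j2 - j1
        elif tag == "delete":
            counts["removed"] += i2 - i1
        elif tag == "replace":
            # Assumption: pair words one-to-one as replacements and count
            # any surplus on either side as removed or added words.
            paired = min(i2 - i1, j2 - j1)
            counts["replaced"] += paired
            counts["removed"] += (i2 - i1) - paired
            counts["added"] += (j2 - j1) - paired
    return counts


def feature_vector(mt_output: str, correction: str,
                   keystrokes: int, completion_seconds: float) -> list:
    """Assemble six of the thirteen features; values are left unnormalized."""
    counts = word_edit_counts(mt_output, correction)
    return [
        keystrokes,
        completion_seconds,
        counts["added"],
        counts["removed"],
        counts["replaced"],
        levenshtein(mt_output, correction),
    ]
```

Keystrokes and completion time would have to be recorded by the correction interface and are simply passed in here. The values are left unscaled, in line with the paper's statement that normalization was not applied.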