Proceedings of the Twenty-Seventh AAAI Conference on Artificial Intelligence

Data-Efficient Generalization of Robot Skills with Contextual Policy Search

Andras Gabor Kupcsik

Department of Electrical and Computer Engineering, National University of Singapore

kupcsik@nus.edu.sg

Marc Peter Deisenroth and Jan Peters and Gerhard Neumann

Intelligent Autonomous Systems Lab, Technische Universität Darmstadt

marc@ias.tu-darmstadt.de, peters@ias.tu-darmstadt.de, neumann@ias.tu-darmstadt.de

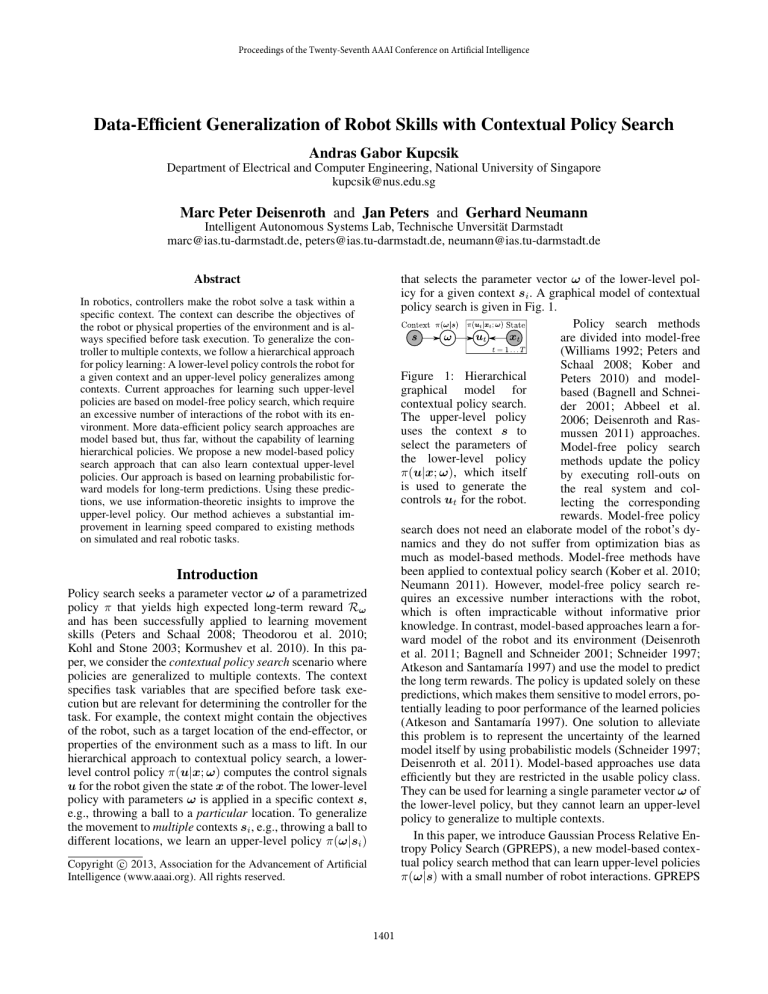

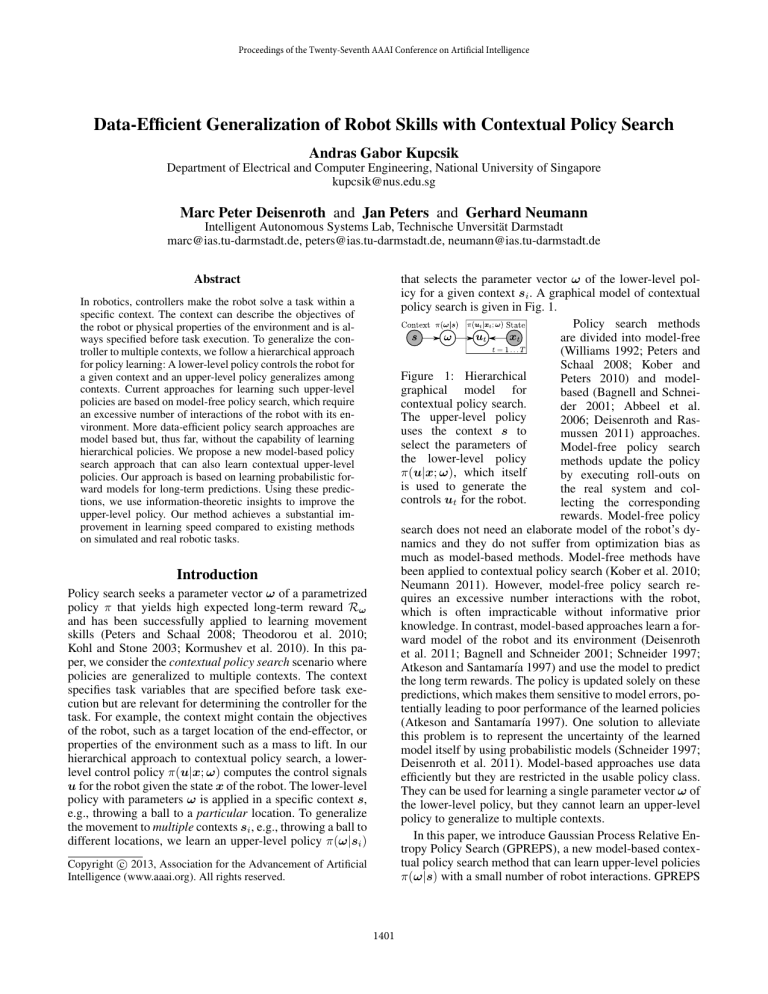

Copyright © 2013, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

Abstract

In robotics, controllers make the robot solve a task within a specific context. The context can describe the objectives of the robot or physical properties of the environment and is always specified before task execution. To generalize the controller to multiple contexts, we follow a hierarchical approach for policy learning: A lower-level policy controls the robot for a given context and an upper-level policy generalizes among contexts. Current approaches for learning such upper-level policies are based on model-free policy search, which requires an excessive number of interactions of the robot with its environment. More data-efficient policy search approaches are model based but, thus far, without the capability of learning hierarchical policies. We propose a new model-based policy search approach that can also learn contextual upper-level policies. Our approach is based on learning probabilistic forward models for long-term predictions. Using these predictions, we use information-theoretic insights to improve the upper-level policy. Our method achieves a substantial improvement in learning speed compared to existing methods on simulated and real robotic tasks.

Introduction

Policy search seeks a parameter vector ω of a parametrized policy π that yields high expected long-term reward $R_\omega$ and has been successfully applied to learning movement skills (Peters and Schaal 2008; Theodorou et al. 2010; Kohl and Stone 2003; Kormushev et al. 2010). In this paper, we consider the contextual policy search scenario where policies are generalized to multiple contexts. The context comprises task variables that are specified before task execution but are relevant for determining the controller for the task. For example, the context might contain the objectives of the robot, such as a target location of the end-effector, or properties of the environment such as a mass to lift. In our hierarchical approach to contextual policy search, a lower-level control policy π(u|x; ω) computes the control signals u for the robot given the state x of the robot. The lower-level policy with parameters ω is applied in a specific context s, e.g., throwing a ball to a particular location. To generalize the movement to multiple contexts $s_i$, e.g., throwing a ball to different locations, we learn an upper-level policy $\pi(\omega|s_i)$ that selects the parameter vector ω of the lower-level policy for a given context $s_i$. A graphical model of contextual policy search is given in Fig. 1.

Figure 1: Hierarchical graphical model for contextual policy search. The upper-level policy uses the context s to select the parameters of the lower-level policy π(u|x; ω), which itself is used to generate the controls $u_t$ for the robot.

Policy search methods are divided into model-free (Williams 1992; Peters and Schaal 2008; Kober and Peters 2010) and model-based (Bagnell and Schneider 2001; Abbeel et al. 2006; Deisenroth and Rasmussen 2011) approaches. Model-free policy search methods update the policy by executing roll-outs on the real system and collecting the corresponding rewards. Model-free policy search does not need an elaborate model of the robot’s dynamics and does not suffer from optimization bias as much as model-based methods. Model-free methods have been applied to contextual policy search (Kober et al. 2010; Neumann 2011). However, model-free policy search requires an excessive number of interactions with the robot, which is often impracticable without informative prior knowledge. In contrast, model-based approaches learn a forward model of the robot and its environment (Deisenroth et al. 2011; Bagnell and Schneider 2001; Schneider 1997; Atkeson and Santamaría 1997) and use the model to predict the long-term rewards. The policy is updated solely on these predictions, which makes model-based methods sensitive to model errors, potentially leading to poor performance of the learned policies (Atkeson and Santamaría 1997). One solution to alleviate this problem is to represent the uncertainty of the learned model itself by using probabilistic models (Schneider 1997; Deisenroth et al. 2011). Model-based approaches use data efficiently but they are restricted in the usable policy class. They can be used for learning a single parameter vector ω of the lower-level policy, but they cannot learn an upper-level policy to generalize to multiple contexts.

In this paper, we introduce Gaussian Process Relative Entropy Policy Search (GPREPS), a new model-based contextual policy search method that can learn upper-level policies π(ω|s) with a small number of robot interactions. GPREPS


learns Gaussian Process (GP) forward models and uses sampling for long-term predictions. An information-theoretic policy update step is used to improve the upper-level policy π(ω|s). The policy updates are based on the REPS algorithm (Peters et al. 2010). We applied GPREPS to learning a simulated throwing task with a 4-link robot arm and to a simulated and real-robot hockey task with a 7-link robot arm. Both tasks were learned using two orders of magnitude fewer interactions compared to previous model-free REPS, while yielding policies of higher quality.

Episodic Contextual Policy Learning

Contextual policy search (Kober and Peters 2010; Neumann 2011) aims to adapt the parameters ω of a lower-level policy to the current context s. An upper-level policy π(ω|s) chooses parameters ω that maximize the expected reward

$$J = \mathbb{E}_{s,\omega}[R_{s\omega}] = \sum_{s,\omega} \mu(s)\,\pi(\omega|s)\,R_{s\omega},$$

where µ(s) is the distribution over the contexts and

$$R_{s\omega} = \mathbb{E}_{\tau}[r(\tau, s)\,|\,s, \omega] = \int_{\tau} p(\tau|s, \omega)\,r(\tau, s), \tag{1}$$

is the expected reward of the whole episode when using parameters ω in context s. In (1), τ denotes a trajectory, p(τ|s, ω) a trajectory distribution, and r(τ, s) a user-specified reward function. The trajectory distribution might still depend on the context s, which can also describe environmental variables. After choosing the parameter vector ω at the beginning of an episode, the lower-level policy π(u|x; ω) determines the controls u during the episode based on the state x of the robot.

In the following, we briefly introduce the model-free episodic REPS algorithm for contextual policy search.

Contextual Episodic REPS

The key insight of REPS (Peters et al. 2010) is to bound the difference between two subsequent trajectory distributions in the policy update step to avoid overly aggressive policy update steps. In REPS, the policy update step directly results from bounding the relative entropy between the new and the previous trajectory distribution.

In the episodic contextual setting, the context s and the parameter ω uniquely determine the trajectory distribution (Daniel et al. 2012). For this reason, the trajectory distribution can be abstracted as joint distribution over the parameter vector ω and the context s, i.e., p(s, ω) = p(s)π(ω|s). REPS optimizes over this joint distribution p(s, ω) of contexts and parameters ω to maximize the expected reward

$$J = \sum_{s,\omega} p(s, \omega)\,R_{s\omega}, \tag{2}$$

with respect to p while bounding the relative entropy to the previously observed distribution q(s, ω), i.e.,

$$\epsilon \geq \sum_{s,\omega} p(s, \omega) \log \frac{p(s, \omega)}{q(s, \omega)}. \tag{3}$$

As the context distribution $p(s) = \sum_{\omega} p(s, \omega)$ is specified by the learning problem and given by µ(s), the constraints ∀s : p(s) = µ(s) need to be added to the REPS optimization problem. To fulfill these constraints also for continuous or high-dimensional discrete state spaces, REPS matches feature averages instead of single probability values, i.e.,

$$\sum_{s} p(s)\,\phi(s) = \hat{\phi}, \tag{4}$$

where φ(s) is a feature vector describing the context and φ̂ is the feature vector averaged over all observed contexts.

The REPS optimization problem for the episodic contextual policy search is defined as maximizing (2) with respect to p under the constraints (3) and (4). It can be solved by the method of Lagrange multipliers and minimizing the dual function g of the optimization problem (Daniel et al. 2012). REPS yields the closed-form solution

$$p(s, \omega) \propto q(s, \omega) \exp\left(\frac{R_{s\omega} - V(s)}{\eta}\right) \tag{5}$$

to (2), where $V(s) = \theta^T \phi(s)$ is a value function (Peters et al. 2010). The parameters η and θ are Lagrangian multipliers used for the constraints (3) and (4), respectively. These parameters are found by optimizing the dual function g(η, θ). The function V(s) emerges from the feature constraint in (4) and depends linearly on the features φ(s) of the context. It serves as context-dependent baseline which is subtracted from the reward signal.

Learning Upper-Level Policies

The optimization defined by the REPS algorithm is only performed on a discrete set of samples $D = \{s^{[i]}, \omega^{[i]}, R_{s\omega}^{[i]}\}$, $i = 1, \dots, N$. The resulting probabilities

$$p^{[i]} \propto \exp\left(\frac{R_{s\omega}^{[i]} - V(s^{[i]})}{\eta}\right)$$

of these samples are used to weight the samples. Note that the distribution q(s, ω) can be skipped from the weighting as we already sampled from q(s, ω). The upper-level policy π(ω|s) is subsequently learned by performing a weighted maximum-likelihood estimate for the parameters ω of the policy. In our experiments, we use a linear-Gaussian model to represent the upper-level policy π(ω|s), i.e.,

$$\pi(\omega|s) = \mathcal{N}(\omega\,|\,a + As, \Sigma). \tag{6}$$

In this example, the parameters of the upper-level policy are given as {a, A, Σ}.

The sample-based implementation of REPS can be problematic if the variance of the reward is high as the expected reward $R_{s\omega}$ is approximated by a single roll-out. The subsequent exponential weighting of the reward can lead to risk-seeking policies.

Exploiting Reward Models as Prior Knowledge

The reward function r(τ, s) is typically specified by the user and is assumed known. If the outcome τ of an experiment is independent of the context variables, the reward model can easily generate additional samples for the policy update. In this case, we can use the outcome $\tau^{[i]}$ to evaluate the reward $R_{s\omega}^{[j]} = r(\tau^{[i]}, s^{[j]})$ for multiple contexts $s^{[j]}$ instead of just for a single context $s^{[i]}$. For example, if the context specifies a desired target for throwing a ball, we can use the ball trajectory to evaluate the reward for multiple targets.
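Reusing a single outcome for several contexts can be sketched as follows. This is a minimal illustration, assuming a two-dimensional ball trajectory and a hypothetical reward equal to the negative minimum squared distance to the target. The names `reward` and `relabel` and all numbers are invented for the example and are not taken from the experiments.

```python
def reward(trajectory, target):
    """Hypothetical reward: negative minimum squared distance to the target."""
    return -min((x - target[0]) ** 2 + (y - target[1]) ** 2 for x, y in trajectory)


def relabel(trajectory, omega, contexts):
    """Evaluate one observed outcome tau^[i] under several contexts s^[j].

    Only valid if the outcome itself does not depend on the context.
    """
    return [(s, omega, reward(trajectory, s)) for s in contexts]


# Toy ball trajectory as (x, y) positions in metres, and an opaque parameter label.
tau = [(0.0, 1.0), (2.0, 2.5), (4.0, 3.0), (6.0, 2.5), (8.0, 1.0)]
samples = relabel(tau, "omega_1", contexts=[(6.0, 2.5), (8.0, 0.0), (4.0, 1.0)])
```

Each returned triple (context, parameters, reward) can be added to the sample set used for the policy update, so one roll-out contributes several weighted samples.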


GPREPS Algorithm

Input: relative entropy bound ε, initial policy π(ω|s), number of iterations K.

for k = 1, . . . , K
    Collect Data:
        Observe context s[i] ∼ µ(s), i = 1, . . . , N
        Execute policy with ω[i] ∼ π(ω|s[i])
    Train forward models, estimate µ̂(s)
    for j = 1, . . . , M
        Predict Rewards:
            Draw context s[j] ∼ µ̂(s)
            Draw lower-level parameters ω[j] ∼ π(ω|s[j])
            Predict L trajectories τ_j[l] | s[j], ω[j]
            Compute R_sω[j] = Σ_l r(τ_j[l], s[j]) / L
    end
    Update Policy:
        Optimize dual function: [η, θ] = argmin_{η′, θ′} g(η′, θ′)
        Calculate sample weighting: p[j] ∝ exp((R_sω[j] − V(s[j])) / η), j = 1, . . . , M
        Update policy π(ω|s) with weighted ML
end

Output: policy π(ω|s)

Table 1: In each iteration, we generate N trajectories on

the real system and update our data set for learning forward

models. Based on the updated forward model, we create M

artificial samples and predict their corresponding rewards.

These artificial samples are subsequently used for optimizing the dual function g(η, θ) and updating the upper-level policy

by weighted maximum likelihood estimates.
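The "Update Policy" step of Tab. 1 can be sketched in NumPy as below. This is an illustrative sketch under stated assumptions, not the authors' implementation. The baseline V(s[j]) is passed in as a fixed vector instead of being obtained by jointly minimizing g(η, θ). With V fixed, the dual is stationary in η where the relative entropy of the weights equals ε, so η is found here by bisection. All function names and the toy data are hypothetical.

```python
import numpy as np


def reps_weights(rewards, baseline, eta):
    """p[j] proportional to exp((R[j] - V(s[j])) / eta), normalized to sum to one."""
    adv = rewards - baseline
    w = np.exp((adv - adv.max()) / eta)  # subtract the max for numerical stability
    return w / w.sum()


def kl_from_uniform(p):
    """KL(p || q) with q uniform over the samples, since they were drawn from q."""
    nz = p > 0
    return float(np.sum(p[nz] * np.log(p[nz] * p.size)))


def fit_eta(rewards, baseline, epsilon, lo=1e-6, hi=1e6, iters=100):
    """Bisection on log(eta); the KL of the weights shrinks as eta grows."""
    for _ in range(iters):
        mid = np.sqrt(lo * hi)
        if kl_from_uniform(reps_weights(rewards, baseline, mid)) > epsilon:
            lo = mid  # weights too peaked: increase eta
        else:
            hi = mid
    return hi


def weighted_ml_linear_gaussian(contexts, omegas, p):
    """Weighted maximum likelihood for pi(omega|s) = N(omega | a + A s, Sigma)."""
    S = np.hstack([np.ones((contexts.shape[0], 1)), contexts])  # bias + context
    Sw = S * p[:, None]
    beta = np.linalg.solve(Sw.T @ S, Sw.T @ omegas)  # first row a, remaining rows A^T
    a, A = beta[0], beta[1:].T
    resid = omegas - S @ beta
    Sigma = (resid.T * p) @ resid / p.sum()
    return a, A, Sigma


# Toy data standing in for the M artificial samples (assumed, not from the paper).
rng = np.random.default_rng(0)
s = rng.uniform(-1.0, 1.0, size=(200, 1))               # contexts s[j]
omega = 0.5 * s + rng.normal(0.0, 1.0, size=(200, 1))   # draws from the old policy
R = -((omega - 2.0 * s) ** 2).ravel()                   # predicted rewards
V = np.zeros(200)                                       # placeholder baseline V(s)

eta = fit_eta(R, V, epsilon=0.5)
p = reps_weights(R, V, eta)
a, A, Sigma = weighted_ml_linear_gaussian(s, omega, p)
```

A small ε yields a large η and nearly uniform weights, so the policy changes little; a large ε yields a small η and concentrates the weight on the best samples, which matches the greedy behaviour described for large bounds.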

Gaussian Process REPS

In our approach, we enhance the model-free REPS algorithm with learned probabilistic forward models to increase the data efficiency and the learning speed. The GPREPS algorithm is summarized in Tab. 1. With data from the real robot, we learn the reward function and forward models of the robot. Moreover, we estimate a distribution µ̂(s) over the contexts, such that we can easily generate new context samples $s^{[j]}$. Subsequently, we sample a parameter vector $\omega^{[j]}$ and use the learned probabilistic forward models to sample L trajectories $\tau_j^{[l]}$ for the given context-parameter pair. Finally, we obtain additional artificial samples $(s^{[j]}, \omega^{[j]}, R_{s\omega}^{[j]})$, where $R_{s\omega}^{[j]} = \sum_l r(\tau_j^{[l]}, s^{[j]})/L$, that are used for updating the policy. The learned forward models are implemented as GPs. The most relevant parameter of GPREPS is the relative-entropy bound ε that scales the exploration of the algorithm. Small values of ε encourage continuing exploration and allow for collecting more data to improve the forward models. Large ε causes increasingly greedy policy updates.

We use the observed trajectories from the real system solely to update the learned forward models but not for evaluating expected rewards. Hence, GPREPS avoids the typical problem of the model-free REPS approach of high variance in the rewards. GPREPS is well suited for our sample-based prediction of expected rewards as several artificial samples $(s^{[j]}, \omega^{[j]})$ can be evaluated in parallel.

Learning GP Forward Models

We learn forward models to predict the trajectory τ given the context s and the lower-level policy parameters ω. We learn a forward model that is given by $y_{t+1} = f(y_t, u_t) + \boldsymbol{\epsilon}$, where y = [x, b] is composed of the state of the robot and the state of the environment b, for instance the position of a ball. The vector $\boldsymbol{\epsilon}$ denotes zero-mean Gaussian noise. In order to simplify the learning task, we decompose the forward model f into simpler models, which are easier to learn. To do so, we exploit prior structural knowledge of how the robot interacts with its environment. For example, for throwing a ball, we use the prior knowledge that the initial state b of the ball is a function of the state x of the robot at the time point $t_r$ when releasing the ball. We use a separate forward model to predict the initial state b of the ball from the robot state x at time point $t_r$ and a forward model for predicting the free dynamics $h(b_t)$ of the ball which is independent of the robot’s state $x_t$, $t > t_r$.

Our learned models are implemented as probabilistic non-parametric Gaussian processes, which are trained using the standard approach of evidence maximization (Rasmussen and Williams 2006). As training data, we use data collected from interacting with the real robot. As a GP captures uncertainty about the learned model itself, averaging out this uncertainty reduces the effect of model errors and results in robust policy updates (Deisenroth and Rasmussen 2011). To reduce the computational demands of learning the GP models, we use sparse GP models with pseudo inputs (Snelson and Ghahramani 2006).

Trajectory and Reward Predictions

Using the learned GP forward model, we need to predict the expected reward

$$R_{s\omega} = \int_{\tau} \hat{p}(\tau|\omega, s)\,r(\tau, s)\,d\tau \tag{7}$$

for a given parameter vector ω executed in context s. The probability p̂(τ|ω, s) of a trajectory is now estimated using learned forward models. Our approach to predicting trajectories is based on sampling. To predict a trajectory, we iterate the following procedure: First, we compute the GP predictive distribution $p(x_{t+1} | x_t^{[l]}, u_t^{[l]})$ for a given state-action pair $x_t^{[l]}, u_t^{[l]}$. The next state $x_{t+1}^{[l]}$ in trajectory $\tau^{[l]}$ is subsequently drawn from this distribution. To estimate the expected reward $R_{s\omega}^{[j]}$ in (7) for a given context-parameter pair $(s^{[j]}, \omega^{[j]})$, we use several trajectories $\tau^{[j,l]}$. For each sample trajectory $\tau^{[j,l]}$, a reward $r(\tau^{[j,l]}, s^{[j]})$ is obtained. Averaging over these rewards yields an estimate of $R_{s\omega}^{[j]}$.

Reducing the variance of this estimate requires many samples. However, sampling can be easily parallelized. In the

limit of infinitely many samples, this estimate is unbiased.
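The sampling procedure above can be sketched with a small NumPy GP. This is a minimal sketch under simplifying assumptions that differ from the paper's setup: a full GP with fixed hyper-parameters (no evidence maximization, no sparse pseudo-inputs), a one-dimensional state and action, and a toy linear system standing in for the robot. The class and function names are hypothetical.

```python
import numpy as np


def rbf(A, B, length=1.0, signal=1.0):
    """Squared-exponential kernel between row-wise inputs A and B."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return signal ** 2 * np.exp(-0.5 * d2 / length ** 2)


class GPModel:
    """GP regression from (state, action) to next state, hyper-parameters fixed."""

    def __init__(self, X, y, noise=0.05):
        self.X, self.noise = X, noise
        K = rbf(X, X) + noise ** 2 * np.eye(len(X))
        self.L = np.linalg.cholesky(K)
        self.alpha = np.linalg.solve(self.L.T, np.linalg.solve(self.L, y))

    def predict(self, Xs):
        """Predictive mean and variance of the next state at query inputs Xs."""
        Ks = rbf(Xs, self.X)
        mean = Ks @ self.alpha
        v = np.linalg.solve(self.L, Ks.T)
        var = rbf(Xs, Xs).diagonal() - (v ** 2).sum(0) + self.noise ** 2
        return mean, np.maximum(var, 1e-12)


def expected_reward(gp, policy, reward, x0, horizon, n_traj, rng):
    """Average reward over n_traj trajectories sampled through the GP model."""
    x = np.full(n_traj, x0, dtype=float)  # all trajectories advance in lockstep
    total = np.zeros(n_traj)
    for _ in range(horizon):
        u = policy(x)
        mean, var = gp.predict(np.column_stack([x, u]))
        x = mean + np.sqrt(var) * rng.standard_normal(n_traj)  # draw next states
        total += reward(x)
    return total.mean()


rng = np.random.default_rng(0)
# Toy "real" system, unknown to the learner: x' = 0.9 x + 0.5 u + noise.
X = rng.uniform(-2.0, 2.0, size=(80, 2))  # columns: state, action
y = 0.9 * X[:, 0] + 0.5 * X[:, 1] + 0.05 * rng.standard_normal(80)
gp = GPModel(X, y)
R_hat = expected_reward(gp, policy=lambda x: -1.0 * x, reward=lambda x: -x ** 2,
                        x0=1.0, horizon=10, n_traj=200, rng=rng)
```

Because every trajectory is advanced in the same array operation, the per-step GP prediction is evaluated for all L samples at once, which is the property that makes the sampling approach easy to parallelize.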

As alternative to sampling-based predictions, deterministic inference methods, such as moment matching, can


be used. For instance, the PILCO policy search framework (Deisenroth and Rasmussen 2011) approximates the

expectation given in (7) analytically by moment matching (Deisenroth et al. 2012). In particular, PILCO approximates the state distributions p(xt ) at each time step by

Gaussians. Due to this approximation, the predictions of the

expected trajectory reward Rsω can be biased. While moment matching allows for closed-form predictions, the policy class and the reward models are restricted due to the

computational constraints.
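A toy calculation illustrates how a Gaussian approximation of the state distribution can bias the predicted reward. It is not PILCO's actual inference; the setting is an assumption chosen because every quantity has a closed form: a state x ~ N(0, σ²), a next state y = x², and a reward r(y) = exp(−y).

```python
import math
import random

sigma = 1.0

# Exact expected reward E[exp(-x^2)] for x ~ N(0, sigma^2).
exact = 1.0 / math.sqrt(1.0 + 2.0 * sigma ** 2)

# Moment matching: replace the true distribution of y = x^2 by a Gaussian
# with the same mean and variance, then integrate the reward analytically.
mean_y, var_y = sigma ** 2, 2.0 * sigma ** 4
moment_matched = math.exp(-mean_y + 0.5 * var_y)  # E[exp(-y)] for Gaussian y

# Sampling: average the reward over draws from the true next-state distribution.
rng = random.Random(0)
n = 100_000
sampled = sum(math.exp(-rng.gauss(0.0, sigma) ** 2) for _ in range(n)) / n
```

In this toy case the exact value is about 0.58, the sampled estimate approaches it as n grows, and the moment-matched value is 1.0. The gap arises because the matched Gaussian places mass on negative y, which y = x² can never produce.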

In our experiments, we could obtain 300 sample trajectories within the same computation time of a single prediction

of the deterministic inference algorithm. The accuracy of the

deterministic inference is usually met after obtaining around

100 sample trajectories. Using massively parallel hardware

(e.g., a GPU), we obtained 7000 trajectories with the same

amount of computation time.

Results

We evaluated our data-efficient contextual policy search method on a context-free comparison task and two contextual motor skill learning tasks. As the lower-level policy needs to scale to anthropomorphic robotics, we implement it using the Dynamic Movement Primitive (Ijspeert and Schaal 2003) approach, which we will now briefly review.

Dynamic Movement Primitives

To parametrize the lower-level policy we use an extension (Kober et al. 2010) to Dynamic Movement Primitives (DMPs). A DMP is a spring-damper system that is modulated by a forcing function $f(z; v) = \Phi(z)^T v$. The variable z is the phase of the movement. The parameters v specify the shape of the movement and can be learned by imitation. The final position and velocity of the DMP can be adapted by changing additional hyper-parameters $y_f$ and $\dot{y}_f$ of the DMP. The execution speed of the movement is determined by the time constant τ. For a more detailed description of the DMP framework we refer to (Kober et al. 2010).

After obtaining a desired trajectory through the DMPs, the trajectory is followed by a feedback controller, i.e., the lower-level control policy.

4-Link Pendulum Balancing Task

In the following, we consider the task of balancing a 4-link planar pendulum. The objective was to learn a linear control policy that moves a 4-link pendulum to the desired upright position from randomly distributed starting angles around the upright position. The 4-link pendulum had a total length of 2 m and a mass of 70 kg. We used a quadratic reward function $r(x) = -x^T Q x$ for each time step.

We compared the performance of GPREPS to the PILCO algorithm (Deisenroth et al. 2011), the state-of-the-art model-based policy search method. As PILCO cannot be applied to the contextual policy search setting, we used only a single context. We initialized both PILCO and GPREPS with 6 s of real experience using a random policy. With GPREPS we use 400 artificial samples, each with 10 sample trajectories. We also learned policies with the model-free version of REPS (Peters et al. 2010). Tab. 2 shows the required amount of experience for achieving several reward limits. The model-based methods quickly found stable controllers, while REPS required two orders of magnitude more trials until convergence.

| Reward limit | PILCO | GPREPS | REPS |
|---|---|---|---|
| -100 | 10.18 s | 10.68 s | 1425 s |
| -10 | 11.46 s | 20.52 s | 2300 s |
| -1.5 | 12.18 s | 38.50 s | 4075 s |

Table 2: Required experience to achieve reward limits for a 4-link balancing problem. Higher rewards require better policies.

Ball-Throwing Task

In the ball-throwing task, we consider the problem of contextual policy search. The goal of the 4-DOF planar robot was to hit a randomly placed target at position s with a ball. Here s is a two-dimensional coordinate, which also defines the context of the task. The x- and y-coordinates vary between 5 m and 15 m and between 0 m and 4 m from the robot base, respectively. Our 4-DOF robot coarsely modeled a human and had an ankle, knee, hip and shoulder joint. The lengths of the links were given by l = [0.5, 0.5, 1.0, 1.0] m and the masses by m = [17.5, 17.5, 26.5, 8.5] kg.

In this experiment, we used GPREPS to find DMP parameters for throwing a ball to multiple targets while maintaining balance. The reward function was defined as

$$r(\tau, s) = -\min_t \|b_t - s\|^2 - c_1 \sum_t f_c(x_t) - c_2 \sum_t u_t^T H u_t.$$

The first term penalizes the minimum distance of the ball to the target s. The second term describes a punishment term to prevent the robot from falling over. The last term favors energy-efficient movements.

As lower-level controllers, we used DMPs with 10 basis functions per joint. We modified the shape parameters but fixed the final position and velocity of the DMP to be the upright position and zero velocity. In addition, the lower-level policy also contained the release time $t_r$ of the ball as a free parameter, resulting in a 41-dimensional parameter vector ω.

GPREPS learned a forward model consisting of three components: the dynamics model of the robot, the free dynamics of the ball, and the model of how the robot’s state influences the initial position and velocity of the ball at the given release time $t_r$. These models were used to predict ball trajectories for a given parameter ω.

The policy π(ω|s) was initialized such that it was expected to throw the ball approximately 5 m without maintaining balance, which led to high penalties. Fig. 2 shows the learned motion sequence for two different targets. GPREPS learned to accurately hit the target for a wide range of different targets. The displacement for targets above s = [13, 3] m could rise up to 0.5 m, otherwise the maximal error was smaller than 10 cm. The policy chose different DMP


parametrizations and release times for different target positions. To illustrate this effect we show two target positions $s_1 = [6, 1.1]$ m, $s_2 = [14.5, 1.2]$ m in Fig. 2. For the target farther away the robot showed a more distinctive throwing movement and released the ball slightly later.

Figure 2: Throwing motion sequence. The robot releases the ball after the specified release time and hits different targets with high accuracy. (Axes: x [m], y [m].)

The learning curves for REPS and GPREPS are shown in Fig. 3. In addition, we evaluated REPS using the known reward model r(τ, s) to generate additional samples with randomly sampled contexts $s^{[i]}$ as described previously. We denote these experiments as extra context. We also evaluated REPS when learning the expected reward model directly $R_{s\omega} = f(s, \omega)$ as a function of the context and parameters ω with a GP (GP direct). Using the learned reward model we evaluate artificial samples $(s^{[i]}, \omega^{[i]})$. Fig. 3 shows that REPS converged to a good solution after 5000 episodes in most cases. In a few instances, however, we observed premature convergence resulting in suboptimal performance. The performance of REPS could be improved by using extra samples generated with the known reward model (extra context). In this case, REPS always converged to good solutions. For GPREPS, we sampled ten trajectories initially to obtain a confident GP model. We evaluated only one sample after each policy update and subsequently updated the learned forward models. We used 500 additional samples and 20 sample trajectories per sample for each policy update. GPREPS converged to a good solution in all cases after 30–40 real evaluations. In contrast, while directly learning $R_{s\omega}$ also resulted in an improved learning speed, we observed a highly varying, on average lower quality in the resulting policies (GP direct). This result confirms our intuition that decomposing the forward model into multiple components simplifies the learning task.

Figure 3: Learning curves for the ball-throwing problem. (Axes: evaluations vs. reward; curves: GPREPS, REPS (GP direct), REPS, REPS (extra context).) The shaded regions represent the standard deviation of the rewards over 20 independent trials. GPREPS converged after 30–40 interactions with the environment, while REPS required ≥ 5000 interactions. Using the reward model to generate additional samples for REPS led to better final policies, but could not compete with GPREPS in terms of learning speed. Learning the direct reward model $R_{s\omega} = f(s, \omega)$ yielded faster learning than model-free REPS, but the quality of the final policy is limited.

Robot Hockey with Simulated Environment

In this task, the KUKA lightweight robot arm was equipped with a racket, see Fig. 4, and had to learn shooting hockey pucks. The objective was to make a target puck move a specified distance. Since the target puck was out of reach, it could only be moved indirectly by shooting a second hockey puck at the target puck. The initial location $[b_x, b_y]^T$ of the target puck was varied in both dimensions as well as the distance $d^*$ the target puck had to be shot. Consequently, the context was given by $s = [b_x, b_y, d^*]^T$. The simulated robot task is depicted in Fig. 5.

Figure 4: KUKA lightweight arm shooting hockey pucks.

We obtained the shape parameters v of the DMP by imitation learning and kept them fixed during learning. The learning algorithms had to adapt the final positions $y_f$, velocities $\dot{y}_f$ and the time scaling parameter τ of the DMPs, resulting in a 15-dimensional lower-level policy parameter ω. The reward function was the negative minimum squared distance of the robot’s puck to the target puck during a play. In addition, we penalized the squared difference between the desired travel distance and the distance the target puck actually moved. We chose the position of the target puck to be within 0.5 m from its initial position, i.e., [2, 1] m from the robot’s base. The desired distance to move the target puck ranged between 0 m and 1 m.

GPREPS first learned a forward model to predict the initial position and velocity of the first puck after contact with the racket and a travel distance of 20 cm. Subsequently, GPREPS learned the free dynamics model of both pucks and the contact model of the pucks. Based on the position and velocities of the pucks before contact, the velocities of both


pucks were predicted after contact with the learned model. With GPREPS we used 500 parameter samples, each with 20 sample trajectories.

We compared GPREPS to directly predicting the reward $R_{s\omega}$, model-free REPS and CrKR (Kober et al. 2010), a state-of-the-art model-free contextual policy search method. The resulting learning curves are shown in Fig. 6. GPREPS learns to accurately hit the second puck within 50 interactions with the environment, while to learn the whole task around 120 evaluations were needed. The model-free version of REPS needed approximately 10000 interactions. Directly predicting the rewards resulted in faster convergence but the resulting policies still showed a poor performance (GP direct). CrKR used a kernel-based representation of the policy. For a fair comparison, we used a linear kernel for CrKR. The results show that CrKR could not compete with model-free REPS. We believe the reason for the worse performance of CrKR lies in its uncorrelated exploration strategy.

Figure 6: Learning curves on the robot hockey task. (Axes: evaluations vs. reward; curves: GPREPS, REPS, REPS (GP direct), CrKR.) GPREPS was able to learn the task within 120 interactions with the environment, while the model-free version of REPS was not able to find high-quality solutions.

The learned movement is shown in Fig. 5 for two different contexts. After 120 evaluations, GPREPS placed the target puck accurately at the desired distance with a displacement error ≤ 5 cm.

Figure 5: Robot hockey task. The robot shot a puck at the target puck to make the target puck move for a specified distance. Both the initial location of the target puck $[b_x, b_y]^T$ and the desired distance $d^*$ to move the puck were varied. The context was $s = [b_x, b_y, d^*]$. The learned skill for two different contexts s is shown, where the robot learned to (indirectly) place the target puck at the desired distance.

Robot Hockey with Real Environment

Finally, we evaluated the performance of GPREPS on the hockey task using a real KUKA lightweight arm, see Fig. 4. A Kinect sensor was used to track the position of the two pucks at a frame rate of 30 Hz. We smoothed the trajectories in a pre-processing step with a Butterworth filter and subsequently learned the GP models. The desired distance to move the target puck ranged between 0 m and 0.6 m.

The learning curve of GPREPS for the real robot is shown in Fig. 7, which shows that the robot clearly improved its initial policy within a small number of real robot experiments.

Figure 7: GPREPS learning curve on the real robot arm. (Axes: evaluations vs. reward.)

Conclusion

We presented GPREPS, a novel model-based contextual policy search algorithm. GPREPS learns hierarchical policies and is based on information-theoretic policy updates. Moreover, GPREPS exploits learned probabilistic forward models of the robot and its environment to predict expected rewards. For evaluating the expected reward, GPREPS samples trajectories using the learned models. Unlike deterministic inference methods used in state-of-the-art approaches for policy evaluation, trajectory sampling is easily parallelizable and does not limit the policy class or reward model.

We demonstrated that for learning high-quality contextual policies, GPREPS exploits the learned forward models to significantly reduce the required amount of experience from the real robot compared to state-of-the-art model-free contextual policy search approaches. The increased data efficiency makes GPREPS applicable to learning contextual policies in real-robot tasks. Since existing model-based policy search methods cannot be applied to the contextual setup, GPREPS opens the door to many new applications of model-based policy search.

Acknowledgments

The research leading to these results has received funding from the European Community’s Seventh Framework

Programme (FP7/2007-2013) under grant agreement n° 270327.


References

Abbeel, P.; Quigley, M.; and Ng, A. Y. 2006. Using Inaccurate Models in Reinforcement Learning. In Proceedings of the International Conference on Machine Learning.

Atkeson, C. G., and Santamaría, J. C. 1997. A Comparison of Direct and Model-Based Reinforcement Learning. In Proceedings of the International Conference on Robotics and Automation.

Bagnell, J. A., and Schneider, J. G. 2001. Autonomous Helicopter Control using Reinforcement Learning Policy Search Methods. In Proceedings of the International Conference on Robotics and Automation.

Daniel, C.; Neumann, G.; and Peters, J. 2012. Hierarchical Relative Entropy Policy Search. In International Conference on Artificial Intelligence and Statistics.

Deisenroth, M. P., and Rasmussen, C. E. 2011. PILCO: A Model-Based and Data-Efficient Approach to Policy Search. In Proceedings of the International Conference on Machine Learning.

Deisenroth, M. P.; Turner, R.; Huber, M.; Hanebeck, U. D.; and Rasmussen, C. E. 2012. Robust Filtering and Smoothing with Gaussian Processes. IEEE Transactions on Automatic Control 57(7):1865–1871.

Deisenroth, M. P.; Rasmussen, C. E.; and Fox, D. 2011. Learning to Control a Low-Cost Manipulator using Data-Efficient Reinforcement Learning. In Robotics: Science & Systems.

Ijspeert, A. J., and Schaal, S. 2003. Learning Attractor Landscapes for Learning Motor Primitives. In Advances in Neural Information Processing Systems.

Kober, J., and Peters, J. 2010. Policy Search for Motor Primitives in Robotics. Machine Learning 1–33.

Kober, J.; Mülling, K.; Kroemer, O.; Lampert, C. H.; Schölkopf, B.; and Peters, J. 2010. Movement Templates for Learning of Hitting and Batting. In Proceedings of the International Conference on Robotics and Automation.

Kober, J.; Oztop, E.; and Peters, J. 2010. Reinforcement Learning to adjust Robot Movements to New Situations. In Robotics: Science & Systems.

Kohl, N., and Stone, P. 2003. Policy Gradient Reinforcement Learning for Fast Quadrupedal Locomotion. In Proceedings of the International Conference on Robotics and Automation.

Kormushev, P.; Calinon, S.; and Caldwell, D. G. 2010. Robot Motor Skill Coordination with EM-based Reinforcement Learning. In Proceedings of the International Conference on Intelligent Robots and Systems.

Neumann, G. 2011. Variational Inference for Policy Search in Changing Situations. In Proceedings of the International Conference on Machine Learning.

Peters, J., and Schaal, S. 2008. Reinforcement Learning of Motor Skills with Policy Gradients. Neural Networks (4):682–97.

Peters, J.; Mülling, K.; and Altun, Y. 2010. Relative Entropy Policy Search. In Proceedings of the National Conference on Artificial Intelligence.

Rasmussen, C. E., and Williams, C. K. I. 2006. Gaussian Processes for Machine Learning. MIT Press.

Schaal, S.; Peters, J.; Nakanishi, J.; and Ijspeert, A. J. 2003. Learning Movement Primitives. In International Symposium on Robotics Research.

Schneider, J. G. 1997. Exploiting Model Uncertainty Estimates for Safe Dynamic Control Learning. In Advances in Neural Information Processing Systems.

Snelson, E., and Ghahramani, Z. 2006. Sparse Gaussian Processes using Pseudo-Inputs. In Advances in Neural Information Processing Systems.

Theodorou, E.; Buchli, J.; and Schaal, S. 2010. Reinforcement Learning of Motor Skills in High Dimensions: a Path Integral Approach. In Proceedings of the International Conference on Robotics and Automation.

Williams, R. J. 1992. Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning. Machine Learning 8:229–256.
