From: AAAI Technical Report FS-01-01. Compilation copyright © 2001, AAAI (www.aaai.org). All rights reserved.

From Stereoscopic Vision to Symbolic Representation

Paulo E. Santos* and Murray P. Shanahan

{p.santos, m.shanahan}@ic.ac.uk

Intelligent and Interactive Systems Group

Imperial College of Science, Technology and Medicine

University of London

Exhibition Road, SW7 2AZ London

Abstract

This paper describes a symbolic representation of the data provided by the stereo-vision system of a mobile robot. The representation proposed is constructed from qualitative descriptions of transitions in the depth information given by the stereograms. The goal of this paper is to investigate the consequences in the depth transitions of qualitative spatial events such as the relative motion between objects, spatial occlusion and object deformation (expansion and contraction). This causal relationship between spatial events and depth transitions forms a background theory for an abductive process of sensor data assimilation.

Introduction

One of the most important issues in mobile robot navigation is the connection between the physical world and the internal states of the robot. The term "anchoring" (Coradeschi & Saffiotti 2000) refers to this process of assigning abstract symbols to real sensor data, and is related to the symbol grounding problem (Harnard 1990). There are three main possible approaches to this problem: either this connection is explicitly made via a knowledge representation theory, such as (Shanahan 1997) (Hahnel, Burgard, & Lakemeyer 1998); or it is hidden in the layers of neural networks (Racz & Dubrawski 1995); or, finally, there can be no representation of the robot's internal states whatsoever, as is the case with purely reactive systems (Brooks 1991a) (Brooks 1991b). Although the last two frameworks give important solutions to certain sets of problems, they are insufficient for the construction of task planning systems using sensor information. For this reason the present work assumes a knowledge representation view of the connection between sensor information and abstract symbols.

Briefly, the goal of the present work is to develop a logic-based system for scene interpretation using dynamic qualitative spatial notions. Therefore, not only is anchoring a central topic in this work, but the scene interpretation issues discussed in this paper are also going to contribute to the understanding of how to track and predict future states of symbolic representations of objects in a robot's knowledge base.

*Supported by CAPES.

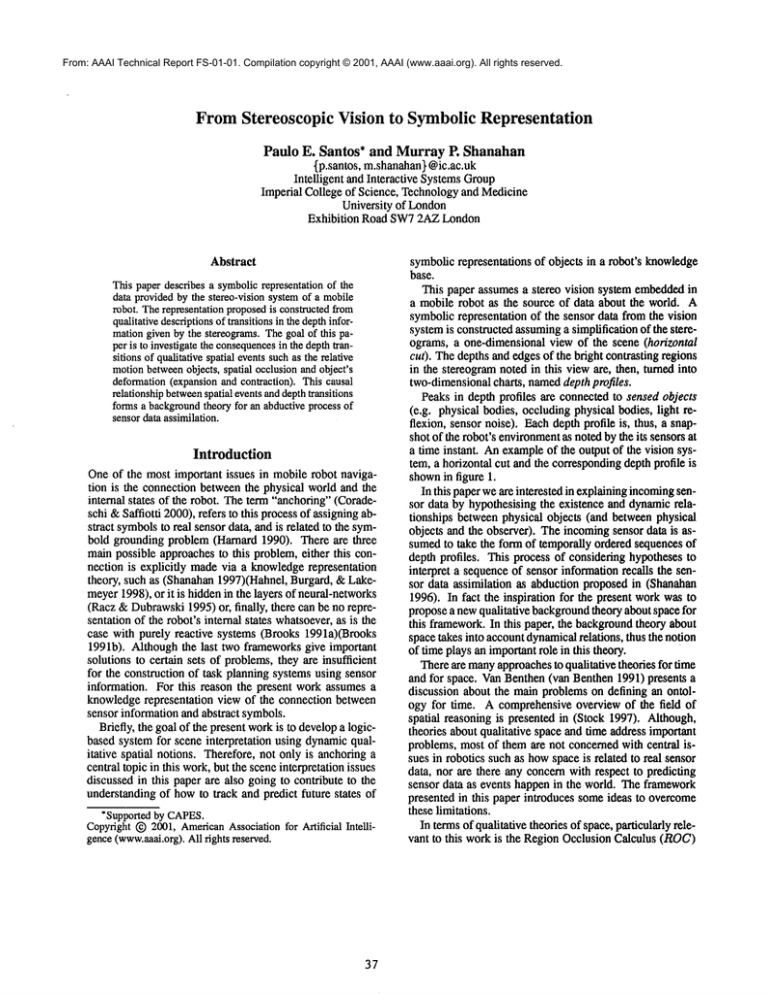

This paper assumes a stereo vision system embedded in a mobile robot as the source of data about the world. A symbolic representation of the sensor data from the vision system is constructed assuming a simplification of the stereograms: a one-dimensional view of the scene (horizontal cut). The depths and edges of the bright contrasting regions in the stereogram noted in this view are then turned into two-dimensional charts, named depth profiles.

Peaks in depth profiles are connected to sensed objects (e.g. physical bodies, occluding physical bodies, light reflection, sensor noise). Each depth profile is, thus, a snapshot of the robot's environment as noted by its sensors at a time instant. An example of the output of the vision system, a horizontal cut and the corresponding depth profile is shown in figure 1.

In this paper we are interested in explaining incoming sensor data by hypothesising the existence of, and the dynamic relationships between, physical objects (and between physical objects and the observer). The incoming sensor data is assumed to take the form of temporally ordered sequences of depth profiles. This process of considering hypotheses to interpret a sequence of sensor information recalls the sensor data assimilation as abduction proposed in (Shanahan 1996). In fact, the inspiration for the present work was to propose a new qualitative background theory about space for this framework. In this paper, the background theory about space takes into account dynamical relations; thus the notion of time plays an important role in this theory.

There are many approaches to qualitative theories for time and for space. Van Benthen (van Benthen 1991) presents a discussion about the main problems in defining an ontology for time. A comprehensive overview of the field of spatial reasoning is presented in (Stock 1997). Although theories about qualitative space and time address important problems, most of them are not concerned with central issues in robotics such as how space is related to real sensor data, nor is there any concern with respect to predicting sensor data as events happen in the world. The framework presented in this paper introduces some ideas to overcome these limitations.

In terms of qualitative theories of space, particularly relevant to this work is the Region Occlusion Calculus (ROC) (Randell, Witkowski, & Shanahan 2001). The ROC is a first-order axiomatisation that can be used to model spatial occlusion of arbitrarily shaped objects. The formal theory is defined on the degree of connectivity between regions representing two-dimensional images of bodies as seen from some viewpoint. This paper presents a dynamic characterization of occlusion as noted by a mobile robot's sensors, which could extend ROC by introducing time into its ontology.

Stereo-Vision System

The stereo-vision system used in this work is the Small Vision System (SVS), which is an efficient implementation of an area correlation algorithm for computing range from stereo images. The images are delivered by a stereo head STH-MD1, which is a digital device with megapixel imagers that uses the 1394 bus (FireWire) to deliver high-resolution, real-time stereo imagery to any PC equipped with a 1394 interface card¹.

Figure 1 shows a pair of input images, the resultant stereo disparity image from the vision system and a depth profile of a scene.

Figure 1: Images of a scene (top pictures); stereogram given by SVS (bottom left picture); and depth profile of a scene's horizontal cut (bottom centre image).

Depth Profiles

In order to transform the stereograms from the vision system into a high-level interpretation of the world, a simplification of the initial data is considered. Instead of assuming the whole stereogram of a scene, only a horizontal line of it (horizontal cut) is analysed. Each horizontal cut is represented by a two-dimensional chart (depth profile) relating the depths and the extreme edges of bright contrasting regions in the portion of the stereogram crossed by the cut. An example of a depth profile is shown in figure 2b.

Depth profiles can be interpreted as snapshots of the environment, each profile taken at a time instant. Thus, a sequence of profiles is a sequence of temporally ordered snapshots of the world. For brevity, assume throughout this paper that a profile P_i represents the snapshot taken at the time point t_i, for i ∈ ℕ.

Information about the objects in the world, as noted by the robot's sensors, is then encoded as peaks (depth peaks) in depth profiles (figure 2b).

The variables edge and depth in the depth profiles range over the positive real numbers. The edges of a peak in a profile represent the image boundaries of the visible objects in a horizontal cut.

The depth of a peak is assumed to be a measure of the relative distance between the observer (robot) and the objects in its field of view. Further investigation should be done in order to extend this notion to take into account the depths in the objects' shape. This would lead to the treatment of the peak's format as additional information in the theory.

The axis depth in a profile is constrained by the furthest point that can be noted by the robot's sensors; this limiting distance is represented by L in the charts (figure 2b). The value of L is, thus, determined by the specification of the robot's sensors².

Figure 2: a) Two objects a, b noted from a robot's viewpoint v; b) Depth profile (relative to the viewpoint v in a) representing the objects a and b respectively by the peaks p and q.

¹ Both SVS and STH-MD1 were developed in the SRI laboratories, http://www.ai.sri.com/~konolige/svs/index.htm.

An important piece of information embedded in the depth profiles is the size of the peaks. The size of a peak is given by the absolute value of the difference between the values of its edges (e.g. in figure 2b the size of the peak q is defined by |e − d|). Thus, the size of a peak is connected to the angular distance between two objects in the world with respect to a viewpoint.

Differences in the sizes (and/or depths) of peaks in the same profile, and transitions of the size (depth) of a peak in a sequence of profiles, encode information about dynamical relations between objects in the world and between the objects and the observer. The main goal of this work is to develop methods to extract this information for scene interpretation.

It is worth pointing out that, for the purposes of this work, the variables depth and edge do not necessarily have to represent precise measurements of regions in a horizontal cut of a scene, but approximate values of these quantities within a predefined range. However, the values of these variables should be accurate enough to represent the order of magnitude of the actual measurements of depth and size of objects in the world from the robot's perspective.

² The practical limit (L) for depth perception in the apparatus available for this work is about 5 meters; readings further than this have proven to be very unreliable.

In this work, depth profiles are assumed to be the only information available to the robot about its environment. The purpose of this assumption is to permit an analysis of how much information can be extracted from the vision system alone, making precise to what extent this information is ambiguous and how the assumption of new data (such as the robot's proprioception) can be used to disambiguate it.
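As an illustration of how a horizontal cut might be turned into the peaks of a depth profile, the following is a minimal Python sketch, not the system's implementation. It assumes that a cut arrives as a list of depth readings, with None for positions that have no stereo match; the names Peak and extract_peaks, the use of sample indices as edge positions, and the choice of the nearest reading as the peak's depth are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class Peak:
    left: float   # position of the left edge along the cut
    right: float  # position of the right edge along the cut
    depth: float  # depth assigned to the whole peak


def extract_peaks(cut: Sequence[Optional[float]], limit: float) -> List[Peak]:
    """Group consecutive valid readings of a horizontal cut into peaks.

    A reading is valid when it is present (not None) and closer than
    `limit`, which plays the role of L in the depth profiles.
    """
    peaks: List[Peak] = []
    start: Optional[int] = None
    # The trailing None is a sentinel that closes a run ending at the last sample.
    for i, reading in enumerate(list(cut) + [None]):
        valid = reading is not None and reading < limit
        if valid and start is None:
            start = i
        elif not valid and start is not None:
            run = list(cut[start:i])
            # Assumption: the nearest reading in the run stands for the peak's depth.
            peaks.append(Peak(left=float(start), right=float(i), depth=min(run)))
            start = None
    return peaks


# Two regions within range; the last reading (6.0) lies beyond the limit of 5.0.
profile = extract_peaks([None, 2.0, 2.1, None, None, 4.0, 4.0, 4.2, 6.0], limit=5.0)
```

Readings beyond the limit are simply discarded here, which mirrors the remark that readings further than L are unreliable.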

Motivating Examples

In order to motivate the development of a theory about depth information two examples are presented.

Example 1: Approaching an Object. Assume that the two profiles in figure 3 constitute a sequence of snapshots of the world taken from the viewpoint of one observer (v). Common sense makes us suppose that there is an object in the world, represented by the peak p, which either:

1. expanded its size, or

2. was approached by the observer, or

3. is approaching the observer.

It is worth pointing out that any combination of the previous three hypotheses would also be a plausible explanation for this profile sequence. The choice about the best set of hypotheses to consider should follow a model of priority, which is not developed in the present paper.

The transition in the profile sequence that suggests these hypotheses is the decrease in the relative depth of the peak p from P1 to P2. The task of a reasoning system, in this domain, would be to hypothesise similar facts given sequences of depth profiles.

Figure 3: Depth profile sequence P1, P2.

There are other plausible hypotheses to explain this profile sequence that were not considered in this example. For instance, it could be supposed that in the interval after P1 and before P2 a different object was placed in front of the robot's sensors that totally occludes the object represented in P1. This is not a weakness of the present work, but an example of its basic assumption concerning the incompleteness of information. For the sake of this simple example it might have been possible to enumerate all of the possible hypotheses to explain the scenario in figure 3; however, in a more complex situation the number of hypotheses and their logical descriptions would be too extensive to be taken into account completely. Thus, the strategy of the reasoning system is not to always provide a correct answer, but to conclude a possibly correct interpretation of the sensor transition. If this interpretation turns out to be false, another hypothesis should be searched for.

Example 2: Occlusion between two objects. Figure 4 is another example of a depth profile sequence. In this case the profiles contain two peaks, p and q, possibly representing two different objects in the observer's field of view.

Figure 4: A depth profile sequence P1, P2, P3 and P4.

Intuitively, analysing the profile sequence in figure 4, the transition from P1 to P2 suggests that either the two objects represented by p and q were getting closer to each other or the observer was circling around p and q in the direction of decreasing the observer-relative distance between these two objects.

Moreover, from P2 to P3 it is possible to suppose that the objects represented by q and p are in a relation of occlusion, since the peaks p and q in P2 became one peak in P3. Likewise, from P2, P3, P4, total occlusion between two objects can be entailed, since the peaks p and q changed from two distinct peaks in P2 to one peak in P3 (which is a composition of the peaks in P2), and eventually to one single peak (in P4).

In the next section these notions are made more rigorous.

Peaks, Attributes and Predicate Grounding

In the present section a formal language is defined to describe depth profile transitions in terms of relations between the objects of the world and the observer's viewpoint.

The theory's universe of discourse includes sorts for time points, depth, size, peaks, physical bodies and viewpoints. Time points, depth and size are variables that range over positive real numbers (ℝ⁺), peaks are variables for depth peaks, physical bodies are variables for objects of the world, and viewpoints are points in ℝ³. A many-sorted first-order logic is assumed in this work.

The relation peak_of(p, b, v) represents that there is a peak p assigned to the physical body b with respect to the viewpoint v. This relation plays a role similar to that of the predicate grounding relation defined in (Coradeschi & Saffiotti 2000).

A peak assigned to an object is associated with the attributes of depth and size of that object's image. In order to explicitly refer to particular attributes of a peak at a determined time instant t, two functions are defined:

• depth: peak × time point → depth, which gives the peak's depth at a time instant; and

• size: peak × time point → size, which maps a peak and a time point to the peak's angular size.

Besides the information about individual peaks, information about the relative distance between two peaks is also important when analysing sequences of depth profiles. This data is obtained by introducing the function dist described below.

• dist: peak × peak × time point → size, which maps two peaks and a time point to the angular distance separating the peaks at that instant. This angular distance is the absolute value of the difference between the nearest extreme edges of the two peaks (e.g. in figure 2b the distance between peak p and peak q is given by |b − e|).
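The three attribute functions can be sketched in Python as follows. This is only an illustration under stated assumptions: a peak is modelled as a mapping from time points to the edges and depth read at that time, the names PeakReading and PeakTrack are hypothetical, and clamping dist to zero when two peaks touch or overlap is a choice made for this sketch rather than part of the theory.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class PeakReading:
    left: float   # left edge of the peak in one profile
    right: float  # right edge of the peak in one profile
    depth: float  # depth of the peak in that profile


# Hypothetical encoding: one peak observed over time, keyed by time point.
PeakTrack = Dict[float, PeakReading]


def depth(p: PeakTrack, t: float) -> float:
    """depth: peak x time point -> depth."""
    return p[t].depth


def size(p: PeakTrack, t: float) -> float:
    """size: peak x time point -> size, the absolute difference of the edges."""
    return abs(p[t].right - p[t].left)


def dist(p: PeakTrack, q: PeakTrack, t: float) -> float:
    """dist: peak x peak x time point -> size, the gap between nearest edges."""
    a, b = p[t], q[t]
    if a.left > b.left:
        a, b = b, a  # make `a` the leftmost peak
    # Assumption: touching or overlapping peaks are at distance zero.
    return max(0.0, b.left - a.right)


p = {1.0: PeakReading(left=1.0, right=3.0, depth=2.0)}
q = {1.0: PeakReading(left=5.0, right=8.0, depth=4.0)}
gap = dist(p, q, 1.0)
```

With this encoding a distance of zero corresponds to two peaks that have merged in the profile.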

It is worth pointing out that the depth profiles of a stereogram may contain peaks representing not only real physical objects of the world but also illusions of objects (e.g. shadows and reflections) and sensor noise. This kind of data cannot, in most cases, easily be distinguished from the data about real objects in the robot's environment. In order to cope with this problem, the framework presented in this paper assumes, as a rule of conjecture, that every peak in a profile represents a physical body unless there is reason to believe the contrary. In other words, this is the assumption that the sensors (by default) note only physical bodies in the world; illusions of objects (and noise) are exceptions.

One way to state this default rule formally is by the use of circumscription in a similar way to that presented in (Shanahan 1996) and (Shanahan 1997). A complete solution to this problem, however, is a subject for future research.

Relations and Axioms

Assuming that the symbols a and b represent physical bodies, v represents the observer's viewpoint, and t represents a time point, the following relations are investigated in this paper:

1. being_approached_by(v, a, t), read as "the viewpoint v is being approached by the object a at time t";

2. is_distancing_from(a, v, t), read as "the object a is distancing from the viewpoint v at time t";

3. approaching(v, a, t), read as "the viewpoint v is approaching the object a at time t";

4. receding(v, a, t), read as "the viewpoint v is receding from the object a at time t";

5. expanding(a, v, t), read as "the object a is expanding from the viewpoint v at time t";

6. contracting(a, v, t), read as "the object a is contracting from the viewpoint v at time t";

7. getting_closer(a, b, v, t), read as "the objects a and b are getting closer to each other with respect to v at t";

8. getting_further(a, b, v, t), read as "the objects a and b are getting further from each other with respect to v at t";

9. circling<(v, a, b, t), read as "v is circling around objects a and b at t in the direction in which the distance between their images is decreasing";

10. circling>(v, a, b, t), read as "v is circling around objects a and b at t in the direction in which the distance between their images is increasing";

11. occluding(a, b, v, t), read as "a is occluding b from v at time t"; and finally

12. totally_occluding(a, b, v, t), read as "a is totally occluding b from v at time t".

These relations are hypotheses assumed to be possible explanations for transitions in the attributes of a peak (or set of peaks). The formulae [A1] to [A6] below express the connections between the relations 1. to 12. stated above and the transitions in the peaks' attributes.

[A1]
$$\forall a\,v\,t\;\big[\mathit{being\_approached\_by}(v,a,t) \lor \mathit{approaching}(v,a,t) \lor \mathit{expanding}(a,v,t)\big] \leftarrow \exists p\,t_1\,t_2\;(t_1 < t) \land (t < t_2) \land \mathit{peak\_of}(p,a,v) \land (\mathit{depth}(p,t_1) > \mathit{depth}(p,t_2))$$

The formula [A1] states that if there exists a peak p representing the object a from the viewpoint v such that its depth decreases through a sequence of profiles, then it is the case that either the observer v is being approached by the object, or the observer is approaching the object, or the object is expanding itself.

The relations is_distancing_from/3, receding/3 and contracting/3 can be similarly stated, as shown in the formula [A2].

[A2]
$$\forall a\,v\,t\;\big[\mathit{is\_distancing\_from}(a,v,t) \lor \mathit{receding}(v,a,t) \lor \mathit{contracting}(a,v,t)\big] \leftarrow \exists p\,t_1\,t_2\;(t_1 < t) \land (t < t_2) \land \mathit{peak\_of}(p,a,v) \land (\mathit{depth}(p,t_1) < \mathit{depth}(p,t_2))$$
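Read from right to left, the depth formulae map an observed change in a peak's depth onto a set of candidate relations. A minimal sketch of that reading in Python is given below; the function name depth_hypotheses and the encoding of relations as strings are assumptions made for illustration, and the body a and viewpoint v are simply passed in as labels.

```python
from typing import List


def depth_hypotheses(depth_t1: float, depth_t2: float,
                     body: str = "a", viewpoint: str = "v") -> List[str]:
    """Candidate relations for a peak whose depth was read at t1 < t2."""
    if depth_t2 < depth_t1:
        # The depth decreased: the object looks nearer in the later profile.
        return [
            f"being_approached_by({viewpoint},{body},t)",
            f"approaching({viewpoint},{body},t)",
            f"expanding({body},{viewpoint},t)",
        ]
    if depth_t2 > depth_t1:
        # The depth increased: the object looks further in the later profile.
        return [
            f"is_distancing_from({body},{viewpoint},t)",
            f"receding({viewpoint},{body},t)",
            f"contracting({body},{viewpoint},t)",
        ]
    return []  # no transition in depth, nothing to explain


candidates = depth_hypotheses(3.0, 2.0)
```

The function returns every relation in the disjunction, since the formulae alone give no reason to prefer one of them.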

Likewise, the event of two bodies getting closer to each other (or the observer moving around the objects) at an instant t follows as a hypothesis from the fact that the angular distance between the depth peaks of the two objects decreases through time. The formula [A3] below states these facts. The opposite relation, getting further, is stated by the formula [A4].

[A3]
$$\forall a\,b\,v\,t\;\big[\mathit{getting\_closer}(a,b,v,t) \lor \mathit{circling}_{<}(v,a,b,t)\big] \land (a \neq b) \leftarrow \exists p\,q\,t_1\,t_2\;(t_1 < t) \land (t < t_2) \land \mathit{peak\_of}(p,a,v) \land \mathit{peak\_of}(q,b,v) \land (\mathit{dist}(p,q,t_2) < \mathit{dist}(p,q,t_1))$$

[A4]
$$\forall a\,b\,v\,t\;\big[\mathit{getting\_further}(a,b,v,t) \lor \mathit{circling}_{>}(v,a,b,t)\big] \land (a \neq b) \leftarrow \exists p\,q\,t_1\,t_2\;(t_1 < t) \land (t < t_2) \land \mathit{peak\_of}(p,a,v) \land \mathit{peak\_of}(q,b,v) \land (\mathit{dist}(p,q,t_2) > \mathit{dist}(p,q,t_1))$$
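The distance formulae can be read in the same way. The sketch below is a hypothetical Python illustration, not part of the theory: the tolerance parameter is an added assumption, motivated by the earlier remark that the measured values are only approximate, and it makes small fluctuations in the distance count as no transition.

```python
from typing import List


def distance_hypotheses(dist_t1: float, dist_t2: float,
                        tolerance: float = 0.0) -> List[str]:
    """Candidate relations for two peaks whose distance was read at t1 < t2."""
    change = dist_t2 - dist_t1
    if change < -tolerance:
        # The peaks moved towards each other in the profile.
        return ["getting_closer(a,b,v,t)", "circling<(v,a,b,t)"]
    if change > tolerance:
        # The peaks moved apart in the profile.
        return ["getting_further(a,b,v,t)", "circling>(v,a,b,t)"]
    return []  # the change is within the tolerance


closer = distance_hypotheses(4.0, 2.0)
```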

Assume the relation connected(p, q, t), read as "peak p is connected to peak q at time t"; connected(p, q, t) holds if the distance between the peaks p and q at time t is zero.

$$\forall p\,q\,t\;\;\mathit{connected}(p,q,t) \leftarrow (\mathit{dist}(p,q,t) = 0)$$

Note that the previous relation is only defined for peaks; it has no relation to the degree of connectivity between two physical bodies. In this article the relation connected/3 is only used to characterise the occluding/4 relation, as stated in formula [A5] below.

[A5]
$$\forall a\,b\,v\,t\;\;\mathit{occluding}(a,b,v,t) \land (a \neq b) \leftarrow \exists p\,q\,t_1\;(t_1 < t) \land \mathit{peak\_of}(p,a,v) \land \mathit{peak\_of}(q,b,v) \land \lnot\mathit{connected}(p,q,t_1) \land \mathit{connected}(p,q,t) \land \big[\mathit{size}(q,t) < \mathit{size}(q,t_1) \lor \mathit{size}(p,t) < \mathit{size}(p,t_1)\big]$$

The formula [A5] states that one of the objects a and b is occluding the other at time instant t, if the peaks p and q (connected at t) are not connected at some time point before t and the size of one of the peaks is shrinking through the given profile sequence.

Similarly to occluding/4, if at an instant t1 (t1 < t) it was the case that occluding(a, b, v, t1), and at t the peak representing b disappears from the profile (i.e. size(q, t) = 0), then one of the objects is totally occluding the other, as represented in formula [A6].

[A6]
$$\forall a\,b\,v\,t\;\;\mathit{totally\_occluding}(a,b,v,t) \land (a \neq b) \leftarrow \exists t_1\,q\;(t_1 < t) \land \mathit{occluding}(a,b,v,t_1) \land \mathit{peak\_of}(q,b,v) \land (\mathit{size}(q,t) = 0)$$

The formulae [A1] to [A6] represent a set of weak connections between depth peak transitions and dynamic relations between objects and between the observer and the objects. By weak connections we mean that some (but not all) of the hypotheses to explain the sensor data are represented within the formulae. This set of hypotheses, however, does not exhaust all of the possible configurations of the objects as noted by each sequence of snapshots (as briefly discussed in example 1 above). The purpose of this incomplete set of hypotheses is, initially, to give a hint about what could possibly be a high-level explanation for the sensor data; this high-level interpretation could then be used by a planning system in order to give a possibly executable plan.

As a matter of fact, there are two ways of reading the formulae above. Firstly, if the right-hand side of one of these formulae unifies with a logic description of the sensor data, then the predicates in the left-hand side of it should be inferred. Due to the fact that the set of hypotheses is not complete, this inference process may lead to false conclusions, which can be revised if new data is available. For this reason we assume abduction as the inference rule. A second way of reading the formulae above is to take the predicates in the left-hand side as actions to be manipulated by a planning system. In this case, the formulae [A1] to [A6] can be understood as rewriting rules for these actions in terms of the expected sensor transition.

As the present paper is concerned with the first interpretation, sensor data assimilation via an abductive process is briefly discussed in the next section.

Sensor Data Assimilation

As already mentioned, this work follows a similar approach to that proposed in (Shanahan 1996): the task of sensor data assimilation of a stream of sensor data is considered to be an abductive process on a background theory about the world.

Basically, abduction is the process of adopting hypotheses to explain a set of facts according to a knowledge theory. Given a set of formulae about a certain field of knowledge and a set of observations, the task of the abductive inference is to suppose the causes of these observations from the knowledge available. Abduction, thus, is a non-monotonic inference (Boutilier & Becher 1995) since the explanation inferred from the available knowledge may turn out to be false once new information is acquired.

Taking into account the formal system described in the previous section, the task of the abductive process is to infer the conclusions of formulae [A1] to [A6] as hypotheses, given a description (observation) of the sensor data in terms of depth peak transitions.

More formally, assuming that Σ is a background theory comprising formulae [A1] to [A6] and Ψ a description (in terms of depth profiles) of a sequence of stereo images, the task is to find a set of formulae Δ, such that

$$\Sigma \land \Delta \mathrel{|\!\sim} \Psi \qquad (1)$$

where the symbol |~ refers to the non-monotonic logic consequence.

In order to make this clearer, examples 1 and 2 are recalled using the formal language and the concept of abduction presented above.
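To make the first reading of the formulae concrete, the following Python sketch collects hypotheses for one transition between two consecutive profiles containing peaks p and q. It is an illustration under stated assumptions rather than the reasoning system itself: the Snapshot record, the explain function and the string encoding of relations are hypothetical, only the conditions of [A3] to [A6] are covered, and no priority among hypotheses is modelled.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Snapshot:
    t: int          # time point of the profile
    dist_pq: float  # angular distance between the peaks p and q
    size_p: float   # size of the peak p
    size_q: float   # size of the peak q


def explain(prev: Snapshot, cur: Snapshot, was_occluding: bool = False) -> List[str]:
    """Hypotheses suggested by the transition from `prev` to `cur`."""
    hypotheses: List[str] = []
    connected_prev = prev.dist_pq == 0
    connected_cur = cur.dist_pq == 0
    shrinking = cur.size_q < prev.size_q or cur.size_p < prev.size_p
    # [A6]: an earlier occlusion holds and the peak q has now disappeared.
    if was_occluding and cur.size_q == 0:
        hypotheses.append(f"totally_occluding(a,b,v,{cur.t})")
    # [A5]: the peaks became connected and one of them is shrinking.
    if not connected_prev and connected_cur and shrinking:
        hypotheses.append(f"occluding(a,b,v,{cur.t})")
    # [A3] and [A4]: the distance between the peaks changed.
    if cur.dist_pq < prev.dist_pq:
        hypotheses += ["getting_closer(a,b,v,t)", "circling<(v,a,b,t)"]
    elif cur.dist_pq > prev.dist_pq:
        hypotheses += ["getting_further(a,b,v,t)", "circling>(v,a,b,t)"]
    return hypotheses


# A sequence shaped like the one in figure 4 (the values are invented).
p1 = Snapshot(t=1, dist_pq=4.0, size_p=3.0, size_q=3.0)
p2 = Snapshot(t=2, dist_pq=2.0, size_p=3.0, size_q=3.0)
p3 = Snapshot(t=3, dist_pq=0.0, size_p=3.0, size_q=1.5)
p4 = Snapshot(t=4, dist_pq=0.0, size_p=3.0, size_q=0.0)
step_12 = explain(p1, p2)
step_23 = explain(p2, p3)
step_34 = explain(p3, p4, was_occluding=True)
```

The caller carries the earlier occlusion hypothesis forward through was_occluding, which stands in for the occluding(a, b, v, t1) condition of [A6].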

Examples Revisited

Example 1: Approaching an Object. Consider the profile sequence in figure 3. A description of the sensor data given by the transitions of the peak p between P1 and P2 is the set

$$\Psi = \{\exists p\,a\,v\,t_1\,t_2\;\;\mathit{peak\_of}(p,a,v) \land (\mathit{size}(p,t_1) < \mathit{size}(p,t_2)) \land (\mathit{depth}(p,t_1) > \mathit{depth}(p,t_2))\}$$

where peak_of(p, a, v) is the result of the default assumption that every peak represents a physical body.

From Ψ and the formula [A1], abduction infers that being_approached_by(v, a, t) or approaching(v, a, t) or expanding(a, v, t) for some time point t ∈ [t1, ..., t2]. Considering the formula (1) in the previous section, the set {being_approached_by(v, a, t), approaching(v, a, t), expanding(a, v, t)} constitutes the set of explanations Δ.

Example 2: Occlusion between two objects. Similarly to example 1, a description of the profile sequence represented in figure 4 can be written as the set

$$\Psi' = \{\exists a\,b\,o_1\,o_2\,t_1\,t_2\,t_3\,t_4\,v\;\; t_1 < t_2 \land t_2 < t_3 \land t_3 < t_4 \land \mathit{peak\_of}(a,o_1,v) \land \mathit{peak\_of}(b,o_2,v) \land (\mathit{dist}(a,b,t_1) > \mathit{dist}(a,b,t_2)) \land \lnot\mathit{connected}(a,b,t_1) \land \lnot\mathit{connected}(a,b,t_2) \land \mathit{connected}(a,b,t_3) \land \forall t \in [t_1,t_4]\,(\mathit{depth}(a,t) > \mathit{depth}(b,t)) \land \mathit{size}(a,t_4) = 0\}$$

From Ψ' and the set of formulae Σ (as defined in the previous section), an abductive explanation for Ψ' would include some of the following relations:

$$\Delta' = \{\mathit{getting\_closer}(a,b,v,t) \land t_1 < t \land t < t_2,\;\; \mathit{circling}_{<}(v,a,b,t) \land t_1 < t \land t < t_2,\;\; \mathit{occluding}(a,b,v,t_3),\;\; \mathit{totally\_occluding}(a,b,v,t_4)\}$$

Image segmentation: future plans

This work assumes a bottom-up approach to image segmentation. The implementation of the system is in its very early stages. Up to now there is an edge detection task followed by a region segmentation (and labeling) step on the stereograms. Therefore, depth profiles are going to be constructed from the vertical edges extracted from the image of the scene.

The central idea is to hypothesise the existence of objects from these image regions, and propose tests in order to verify the hypotheses or to search for more complete explanations whenever needed. This need to search for more complete explanations should be decided by a planning system capable of handling priorities within the hypotheses. This issue, however, is outside the scope of this paper.

Discussion and Open Issues

This paper proposed a symbolic representation of the data from a mobile robot's stereo-vision system using depth information and dynamic notions relative to a viewpoint.

The symbolic representation proposed is based on a horizontal cut of the image given by the stereo-vision system. Transitions in the depth profiles were then linked to high-level descriptions of the environment by first-order axioms. The main purpose of this work is to develop a mechanism of inference to conclude hypotheses about the relative motion between objects, spatial occlusion and object deformation from sensor data transitions. This relationship between spatial events and their effects in the depth profile transitions constitutes a background theory for a logic-based abductive process of sensor data assimilation.

The reason for assuming a logic-based approach in this work is to assume a solid common language for visual data interpretation and task planning. Other options for bridging the gap between vision and planning rely on the use of special purpose algorithms or on the development of a common language, instead of first-order logic, to solve this issue. A limitation of the first approach is that it treats visual interpretation and planning as two distinct processes, while the present work assumes that they can be handled by a similar abductive mechanism, as proposed in (Shanahan 1996). On the other hand, by assuming the second option, the development of a common language distinct from logic may have to cope with most problems that were already solved through the long tradition of logic-based systems for A.I.

In this work the simplification of the stereograms in terms of horizontal cuts may seem to be very limiting at first sight. However, it guaranteed the development of a qualitative spatial reasoning theory intrinsically related to sensor information. The core purpose of this ongoing research is to investigate how much of a spatial reasoning theory can be extracted from poor sensor information, concluding from that what kind of robotic tasks can be accomplished within this theory.

Extending this one-dimensional view of the scene to a more general analysis could be done by considering the joint information given by depth profiles of cuts made in other directions of the scene. This analysis may lead to a better notion of individual objects and the degree of connectivity between objects. This issue, however, is left for future research.

Another issue that was overlooked by the present paper due to time constraints was the consideration of the information encoded by the format of the depth peaks. Assuming profiles taken from many different points of view around the object, the formats of these peaks are intrinsically related to the shape of the bodies, and to the two-dimensional region this object occupies in the environment. Thus, the format of the depth peaks could play an important role in the perceptual signature of the objects (Coradeschi & Saffiotti 2000) and in theories about spatial occupancy and persistence (Shanahan 1995).

A further subject for future research is the improvement of the representational system as more precise and richer visual information is considered.

Future work should also investigate the possible transitions between the relations 1. to 12. (described in the section Relations and Axioms). This information would be useful for constraining the possible explanations in the abductive task of sensor data assimilation.

The consideration of default rules in the theory is another important open problem worth mentioning.

In the section Peaks, Attributes and Predicate Grounding, the connection between the symbolic and the physical representation of the objects in the world is defined by the predicate peak_of/3. The statement peak_of(p, a, v) can be interpreted in terms of anchoring as "the anchor p is assigned to the object a from the viewpoint v". It is the task of the abductive process to maintain this anchor in time. For instance, recalling the profile P4 in example 2 (figure 4), where only one peak is shown, and supposing that in a subsequent profile P5 another peak appears next to peak q, the reasoning system should conclude that this new peak is the peak p (previously involved in the profile sequence P1, P2, P3 and P4), maintaining the previously stated connection between p and the object a. This fact can be concluded assuming a default rule about the permanence of objects, another important open issue of this work.

The connection between the depth profile representation and the Region Occlusion Calculus (ROC) (Randell, Witkowski, & Shanahan 2001), as mentioned in the introduction of this paper, is also an interesting topic for further investigation. The main contribution of investigating the relationship between the Region Occlusion Calculus and the framework presented in this article is the possibility of extending ROC by including dynamic sensor information in its ontology.

Acknowledgments

Thanks to David Randell and Mark Witkowski for the invaluable discussions and to the two anonymous reviewers for the useful suggestions for improving this paper.

References

Boutilier, C., and Becher, V. 1995. Abduction as belief revision. Artificial Intelligence.

Brooks, R. A. 1991a. Intelligence without reason. A.I. Memo 1293, MIT.

Brooks, R. A. 1991b. Intelligence without representation. Artificial Intelligence Journal 47:139-159.

Coradeschi, S., and Saffiotti, A. 2000. Anchoring symbols to sensor data: preliminary report. In Proc. of the 17th National Conference on Artificial Intelligence (AAAI-2000), 129-135.

Hahnel, D.; Burgard, W.; and Lakemeyer, G. 1998. GOLEX bridging the gap between logic (GOLOG) and real robot. LNCS. Springer Verlag.

Harnard, S. 1990. The symbol grounding problem. Physica D 42:335-346.

Racz, J., and Dubrawski, A. 1995. Artificial neural network for mobile robot topological localization. Journal of Robotics and Autonomous Systems 16(1):73-80.

Randell, D.; Witkowski, M.; and Shanahan, M. 2001. From images to bodies: modeling and exploiting spatial occlusion and motion parallax. In Proceedings of the International Joint Conference on Artificial Intelligence. To appear.

Shanahan, M. 1995. Default reasoning about spatial occupancy. Artificial Intelligence 74(1):147-163.

Shanahan, M. 1996. Robotics and the common sense informatic situation. In Proceedings of the 12th European Conference on Artificial Intelligence. John Wiley and Sons.

Shanahan, M. 1997. Solving the frame problem. The MIT Press.

Stock, O., ed. 1997. Spatial and Temporal Reasoning. Kluwer Academic Publishers.

van Benthen, J. 1991. The Logic of Time: a model-theoretic investigation into the varieties of temporal ontology and temporal discourse. Kluwer Academic.