From: AAAI Technical Report FS-94-03. Compilation copyright © 1994, AAAI (www.aaai.org). All rights reserved.

Understanding Control at the Knowledge Level

B. Chandrasekaran

Laboratory for AI Research

The Ohio State University

Columbus, OH 43210

Email: chandra@cis.ohio-state.edu

Abstract

What is it that unifies the control task in all its manifestations, from the thermostat to the operator of a nuclear power plant? At the same time, how do we explain the variety of the solutions that we see for the task? I propose a Knowledge Level analysis of the task which leads to a task-structure for control. Differences in availability of knowledge, the degree of compilation in the knowledge to map from observations to actions, and properties required of the solutions together determine the differences in the solution architectures. I end by discussing a number of heuristics that arise out of the Knowledge Level analysis that can help in the design of systems to control the physical world.

What Is the Knowledge Level?

By now most of us in AI know about the Knowledge Level proposal of Newell [Newell, 1981]. It is a way of explaining and predicting the behavior of a decision-making agent without committing oneself to a description of the mechanisms of implementation. The idea is to attribute to the agent a goal or set of goals and knowledge which together would explain its behavior, assuming that the agent is abstractly a rational agent, i.e., one that would apply an item of relevant knowledge to the achievement of a goal. Imagine the following conversation between a guest and a host at a house party:

G: Why does your cat keep going into the kitchen again and again?

H: Oh, it thinks that the food bowl is still in the kitchen. It doesn't know I just moved it to the porch.

The host attributes to the cat the goal of satisfying its hunger, and explains its behavior by positing a (mistaken) piece of knowledge that the food is in the kitchen. That the cat would naturally go to the kitchen under these conditions seems reasonable to the host and presumably to the guest. When people talk this way, they are not asserting that the neural stuff of the cat is some kind of a logical inference machine working on Predicate Calculus expressions. It is simply a useful way of setting up a model of agents and using the model for explaining their behavior. The attributions of the goal and knowledge can be changed on the basis of further empirical evidence, but the revised model would still be in the same language of goals and knowledge.

The Knowledge Level still needs a representation, but this is a representation that is not posited in the agent, but one in which outsiders talk about the agent. Newell thought that logic was an appropriate representation for this purpose, leaving open the possibility of other languages also being appropriate in some circumstances. Newell used the phrase "Symbol Level" to refer to the representational languages actually used to implement artificial decision agents (or explain the implementation of natural agents). Logic-based languages, Lisp and FORTRAN, neural net descriptions, and even Brooks's subsumption architectures are all possible Symbol-Level implementations for a given Knowledge Level specification of an agent.

TheControlProblem

at the

Knowledge

Level

Consider the following devices and control

agents: the thermostat, the speed regulator for

an engine, an animalcontrolling its bodyduring

somemotion, the operator of a nuclear power

plant, the president and his economicadvisors

duringthe task of policy formulationto control

inflation and unemployment,

and a major corporation planningto control its rapid loss of market share. All these systemsare engagedin a

19

"control" task, but seemto use rather different

control techniques and architectures. Is this

similarity in high-leveldescriptionjust a consequenceof an informaluse of wordsin our natural language,or is it an indication of someimportant structural similarity that can haveuseful

technical consequences7Formulatingthe control problemat the Knowledge

Level can help

us to see what makesthese problemssimilar,

and, at the sametime, to explain the widely

divergent implementations

of the systemsthat I

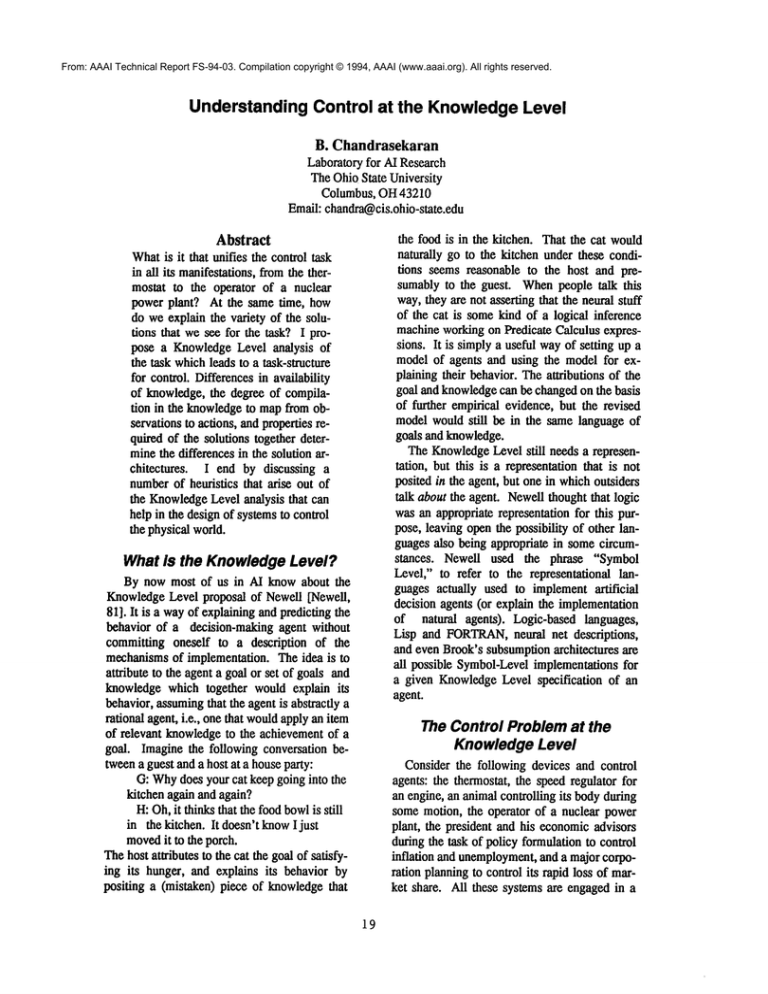

listed. Fig. 1 is a brief descriptionof the control

problemat the Knowledge

Level.

Control Agent C,

System to be controlled S, state

vector s, goal state G, defined as a

wff of predicates over components of s,

Observations O,

Action repertoire A,

The Task:. Synthesize action sequence from A such that S reaches

G, subject to various performance

constraints (time, error, cost, robustness, stability, etc.)

wouldgenerate the intendedbehavior given the

model.

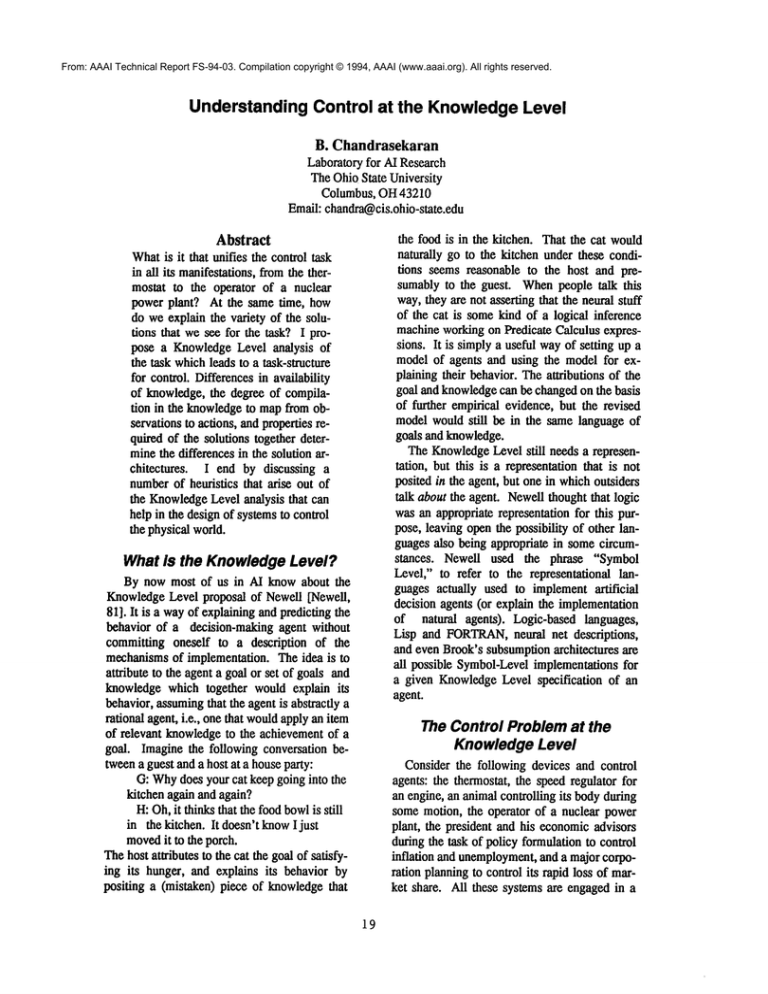

¯ Commonsubtasks:

Build a model of S using O

(The general version of the problem

is abductive explanation: from perception to scientific theory formation

are examplesof this.) The task might

involve prediction as a subtask.

,, Create a proposed plan to move S

to G

(The general version of the problem

is oneof synthesis of plans.)

- Predict behavior of S under plan

using model

(In general, simulation to analysis

maybe invoked.)

, Modify plan

Fig. 2. Thetask-structureof control

In fact, control in this sense indudes a good part of the general

problem of intelligence.

Fig. 1. Thecontrol

task

at the Knowledge

Level

In order to see howdifferent control tasks differ in their nature, thus permitting different

types of solutions, we need to posit a task

structure [Chandrasekaran,

et al, 1992]for it.

Thetask structure is a decomposition

of the task

into a set of subtasks, and is oneplausible way

to accomplishthe goals specified in the task

description. Fig. 2 describesa task structure for

control.

Basically, the task involves two important

sub_m__

sks: usingO, modelS andgeneratea control responsebasedon the model.Bothof these

tasks could use as their subtask a prediction

component.Typically, the modelingtask would

use prediction to generate consequencesof the

hypothesizedmodeland checkagainst reality to

verify the hypothesis. The planning component

woulduse prediction to check whetherthe plan

2O

Every control system need not do each of the tasks in Fig. 2 explicitly. It is hard to build effective control systems which do not use some sort of observation to sense the environment, or which do not make the generation of the control signal or policy depend on the observation. Many control systems can be interpreted as performing these tasks implicitly. The prediction subtask may actually be skipped altogether in certain domains.
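As a minimal illustration of the task structure in Fig. 2, the following Python sketch makes each subtask an explicit function for a system with a single scalar state variable. The names `ControlTask`, `build_model`, `predict` and `synthesize_plan`, the additive model of S, and the greedy plan-modification rule are all assumptions introduced here for illustration; the Knowledge Level description itself does not commit to any of them.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class ControlTask:
    goal_range: Tuple[float, float]        # G: desired range for the state s
    actions: List[float]                   # A: action repertoire
    step: Callable[[float, float], float]  # assumed model of S: (s, a) -> next s


def build_model(observation: float) -> float:
    """Modeling subtask: here the observation O is taken directly as s."""
    return observation


def predict(task: ControlTask, state: float, plan: List[float]) -> float:
    """Prediction subtask: simulate the plan on the model."""
    for action in plan:
        state = task.step(state, action)
    return state


def in_goal(task: ControlTask, state: float) -> bool:
    low, high = task.goal_range
    return low <= state <= high


def synthesize_plan(task: ControlTask, observation: float,
                    max_len: int = 10) -> Optional[List[float]]:
    """Propose a plan, predict its outcome, and modify it until G is reached."""
    state = build_model(observation)
    target = sum(task.goal_range) / 2.0
    plan: List[float] = []
    while True:
        predicted = predict(task, state, plan)
        if in_goal(task, predicted):
            return plan
        if len(plan) == max_len:
            return None  # no plan found within the length limit
        # Modify-plan subtask: greedily append the action predicted to help most.
        best = min(task.actions,
                   key=lambda a: abs(task.step(predicted, a) - target))
        plan = plan + [best]


# Hypothetical example: each action shifts the state by its own value.
task = ControlTask(goal_range=(19.0, 21.0),
                   actions=[-1.0, 0.0, 1.0],
                   step=lambda s, a: s + a)
print(synthesize_plan(task, observation=15.0))
```

A controller that performs these subtasks only implicitly would collapse the functions above into a single mapping from observation to action.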

The variety of solutions to the control problem arises from the different assumptions and requirements under which different types of solutions are adopted for the subtasks, resulting in different properties for the control solution as a whole.

As I mentioned, at one extreme, the subtasks may be done implicitly, or in such a limited way that they only work under certain conditions. At the other extreme, they may also be done with explicit problem solving by multiple agents searching in complex problem spaces. And of course there are solutions of varying complexity in between.

The Thermostat, the Physician and the Neural Net Controller

Consider a thermostat (C in Fig. 1). For this system, S is the room, s consists of a single state variable, the room temperature, G, the goal state of S, is the desired temperature range, O is the sensing of the temperature by the bimetallic strip, and A consists of actions to turn on and off the furnace, the air-conditioner, the fan, etc.

The modeling subtask is solved by directly measuring the variable implicated in the goal predicate. The model of the environment is simply the value of the single state variable, the temperature, and that in turn is a direct function of the curvature of the bimetallic strip.

The curvature of the strip also directly determines when the furnace will be turned on and off. Thus the control generation subtask is solved in the thermostat by using a direct relation between the model value and the action. The two subtasks, and the task as a whole, are thus implemented by a direct mapping between the observation and the action.

Because of the extreme simplicity of the way the subtasks are solved, the prediction task, which is normally a subtask of the modeling and planning tasks, is skipped in the thermostat.

The control architecture of the thermostat is economical and analysis of its behavior is tractable. But there is also a price to pay for this simplicity. Suppose the measurement of temperature by the bimetallic strip is off by 5 degrees. The control system will systematically malfunction. A similar problem can be imagined for the control generation component. A larger control system consisting of a human problem solver (or an automated diagnostic system) in the loop may be able to diagnose the problem and adjust the control behavior. This approach increases the robustness of the architecture, but at the cost of increased complexity of the modeling subtask.
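The direct mapping from observation to action, and the price paid for it, can be sketched in a few lines of Python. The function names, the action labels and the 19 to 22 degree goal range are hypothetical values chosen for illustration only.

```python
def sense(true_temp: float, sensor_offset: float = 0.0) -> float:
    """O: the sensed temperature; sensor_offset models a miscalibrated strip."""
    return true_temp + sensor_offset


def thermostat_action(sensed_temp: float,
                      low: float = 19.0, high: float = 22.0) -> str:
    """Direct mapping from the sensed value to an element of A."""
    if sensed_temp < low:
        return "furnace_on"
    if sensed_temp > high:
        return "air_conditioner_on"
    return "all_off"


# With a correct sensor, a cold room turns the furnace on.
print(thermostat_action(sense(16.0)))
# With the sensor reading 5 degrees too high, the same cold room is left alone.
print(thermostat_action(sense(16.0, sensor_offset=5.0)))
```

Nothing inside `thermostat_action` can detect the offset; noticing it requires a larger system that models the sensor itself, which is the added modeling complexity mentioned above.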

Now consider the task facing a physician (C): controlling a patient's body (S). Various symptoms and diagnostic data constitute the set O. The therapeutic options available constitute the set A. The goal state is specified by a set of predicates over important body parameters, such as the temperature, liver function, heart rate, etc.

Consider the model-making subtask. This is the familiar diagnostic task. In some instances this problem can be quite complex, involving abductive explanation building, prediction, and so on. This process is modeled as problem space search. The task of generating therapies is usually not as complex, but could involve plan instantiation and prediction, again tasks that are best modeled as search in problem spaces.

Why can't the two subtasks, modeling and planning, be handled by the direct mapping techniques that seem to be so successful in the case of the thermostat? To start off, the number of state variables in the model is quite large, and the relation between observations and the model variables is not as direct in this domain. It is practically impossible to so instrument the body that every relevant variable in the model can be observed directly. With respect to planning, the complexity of a control system that maps directly from symptoms to therapies - some sort of a huge table look-up - would be quite large. It is much more advantageous to map the observations to equivalence classes - the diagnostic categories - and then index the planning actions to these equivalence classes. But doing all of this takes the physician far away from the strategies appropriate for the thermostat.
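The advantage of indexing actions to equivalence classes rather than to raw observations can be made concrete with a small Python sketch. Everything named here (`DIAGNOSTIC_RULES`, `THERAPY_INDEX`, the symptom and therapy labels) is a hypothetical placeholder with no medical meaning, and the simple subset test stands in for what would in practice be abductive problem space search.

```python
from typing import FrozenSet, List, Tuple

# Hypothetical rules: a set of required findings identifies a category.
DIAGNOSTIC_RULES: List[Tuple[FrozenSet[str], str]] = [
    (frozenset({"fever", "cough"}), "category_1"),
    (frozenset({"fever", "jaundice"}), "category_2"),
]

# Therapies are indexed by diagnostic category, not by symptom combination.
THERAPY_INDEX = {
    "category_1": "therapy_A",
    "category_2": "therapy_B",
    "unknown": "order_more_tests",
}


def diagnose(symptoms: FrozenSet[str]) -> str:
    """Map observations O to an equivalence class (diagnostic category)."""
    for required, category in DIAGNOSTIC_RULES:
        if required <= symptoms:
            return category
    return "unknown"


def choose_therapy(symptoms: FrozenSet[str]) -> str:
    """Index the action by the category rather than by the raw symptoms."""
    return THERAPY_INDEX[diagnose(symptoms)]


def direct_table_rows(n_binary_observations: int) -> int:
    """Rows needed by a table keyed on every combination of yes/no findings."""
    return 2 ** n_binary_observations


print(choose_therapy(frozenset({"fever", "cough", "fatigue"})))
print(direct_table_rows(30), "rows versus", len(THERAPY_INDEX), "categories")
```

With only 30 yes/no findings a direct table already needs over a billion rows, while the number of therapy entries grows only with the number of categories.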

As a point intermediate in the spectrum between the thermostat, which is a kind of reflex control system, and the physician, who is a deliberative search-based problem solver, consider control systems based on PDP-like (or other types of) neural networks. O provides the inputs to the neural net, and the output of the net should be composed from the elements of the set A. Neural networks can be thought of as systems that select, by using parallel techniques, a path to one of the output nodes that is appropriate for the given input. The activity of even multiply layered NN's can still be modeled as a selection of such paths in parallel in a hierarchy of pre-enumerated and organized search spaces. This kind of connection finding in a limited space of possibilities is why these networks are also often called associative. During a cycle of its activity, the net finds a connection between actual observations and appropriate actions.

This behavior needs to be contrasted with a model of deliberation such as Soar [Laird, et al, 1987] in which the essence of deliberation is that traversing a problem space and establishing connections between problem spaces are themselves subject to open-ended additional problem solving at run time.

The three models that we have considered so far - the thermostat, neural net controllers, and deliberative problem search controllers - can be compared along different dimensions as follows.

| | Speed | Robustness | Tractability* |
|---|---|---|---|
| Reflex | fast | low | easy |
| NN's | medium | medium | medium |
| Delib. engines | slow | potentially high** | low |

*: tractability of analysis

**: depending on availability of knowledge

Table 1: Tradeoff between different kinds of control systems along different dimensions

By robustness in Table 1 I mean the range of conditions under which the control system would perform correctly. The thermostat is unable to handle the situation where the assumption about the relation between the curvature of the strip and the temperature was incorrect. Given a particular body of knowledge, deliberation can in principle use the deductive closure of the knowledge base in determining the control action, while the other two types of control systems in the Table use only knowledge within a fixed length of connection chaining. Of course, any specific implementation of problem space search may not use the full power of deductive closure, or the deductive closure of the knowledge available may be unable in principle to handle a given new situation.

The control systems in Table 1 are simply three samples in a large set of possibilities, but selected because of their prominence in biological control models. The reflex and the NN models are more commonly used in the discussion of animal behavior and human motor control behavior, while deliberation is generally restricted to human control behavior where problem solving plays a major role. Engineering of control systems does not need to be restricted to these three families. Other choices can be made in the space of possibilities, reflecting different degrees of search and compilation.

Sources of Power

I have been involved, over the last several years, in the construction of AI-based process control systems and also in research on causal understanding of devices. I have also followed the major trends in both control systems theory - much of which is carried on in a mathematical tradition that is different from the one that is prevalent in AI - and attempts to understand biological control. My own research has been motivated by trying to understand some of the pragmatics of human reasoning in prediction, causal understanding and real-time control. I have catalogued - and I will be discussing in the rest of the paper - a set of heuristics that I characterize as sources of power that biological control systems use. These ideas can also be used in the design of practical control systems, i.e., they are not intended just as explanations of biological control behavior. I do not intend them to be an exhaustive list, but as examples of heuristics that may be obtained by studying the phenomenon of control at an abstract level. These heuristics do not depend on what implementation approaches are used in the actual design - be they symbolic, connectionist networks or fuzzy sets.

Integrating Modules of Different Types

We have identified a spectrum of controllers: at one end are fast-acting controllers, but with very circumscribed ability to link up observations and actions; and at the other, slow deliberative controllers which search in problem spaces in an open-ended way. Biological control makes use of several controllers from different places in the spectrum. How the controllers are organized so as to make the best use of them is expressed as Heuristic 1.

Heuristic 1. Layer the modules such that the faster, less robust modules are at the lower levels, and slower, more robust modules are on top of them, overriding or augmenting the control provided by the lower level ones. This is illustrated in Fig. 3.

[Figure: layers of controller modules, with "Deliberation" as the top layer]

The three modules above are biologically motivated. Engineering systems do not need to be restricted to these three layers precisely.

Fig. 3. Layering of modules

Many control systems in engineering already follow this heuristic. For example, process engineering systems have a primary control layer that directly activates certain important controls based on the value of some carefully chosen sensors. In nuclear power plants, the primary cooling is activated instantly as soon as certain temperatures exceed preset thresholds. In addition, there are often additional controllers that perform more complex control actions, some of them to augment the control actions of the lower level modules. When humans are in the loop, they may intervene to override the lower level control actions as well. In general, emergency conditions (say in controlling a pressure cooker or driving a car) will be handled by the lower level modules. In the case of driving a car, making hypotheses about the intentions of other drivers or predicting the consequences of a route change would require the involvement of higher-level modules performing more complex problem solving.

In addition to overriding their controls as appropriate, the higher level modules can influence the lower level modules in another way. They can decompose the control task and pass on to the lower modules control goals at a lower level of abstraction that the lower-level modules can achieve more readily. For example, the deliberative controller for robot control may take the control goal of "boiling water" (say, the robot is executing an order to make coffee) and decompose it into control goals of "reaching the stove" and "turn the dials on (or off)". These control goals can be achieved by motor control programs by using the more compiled techniques similar to those in neural and reflex controls.
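One way to picture Heuristic 1 and the goal decomposition just described is the Python sketch below. It is a simplification under stated assumptions: the layers are evaluated in a single synchronous pass (a real lower layer would act before the slower ones finish), and the function names, the temperature threshold of 100.0 and the action labels are hypothetical.

```python
from typing import Callable, Dict, List, Optional

Controller = Callable[[Dict[str, object]], Optional[str]]


def reflex_layer(obs: Dict[str, object]) -> Optional[str]:
    """Fast, narrow layer: a preset threshold triggers a fixed action."""
    if float(obs.get("temperature", 0.0)) > 100.0:  # hypothetical threshold
        return "activate_primary_cooling"
    return None


def deliberative_layer(obs: Dict[str, object]) -> Optional[str]:
    """Slow, broad layer: stands in for a human or problem solver in the loop."""
    override = obs.get("operator_override")
    return str(override) if override else None


def layered_control(obs: Dict[str, object],
                    layers_low_to_high: List[Controller]) -> Optional[str]:
    """Higher layers override the proposals of lower layers when they speak."""
    action = None
    for layer in layers_low_to_high:
        proposal = layer(obs)
        if proposal is not None:
            action = proposal
    return action


# Higher layers can also hand lower layers goals at a lower level of abstraction.
SUBGOALS = {"boil_water": ["reach_stove", "turn_dial_on"]}


def decompose(goal: str) -> List[str]:
    return SUBGOALS.get(goal, [goal])


layers = [reflex_layer, deliberative_layer]
print(layered_control({"temperature": 120.0}, layers))
print(layered_control({"temperature": 120.0,
                       "operator_override": "manual_shutdown"}, layers))
print(decompose("boil_water"))
```

The ordering of the list encodes the layering: putting the reflex layer first means it always has an answer ready, and the deliberative layer changes the outcome only when it has something to add.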

Real-time control

The next set of heuristics are important for the design of real-time control systems and are based on ideas discussed in [Chandrasekaran, et al, 1991].

Control with a guarantee of real-time performance is impossible. Physical systems have, for all practical purposes, an unbounded descriptive complexity. Any set of measurements can only convey limited information about the system to be controlled. This means that the best model that any intelligence can build at any time may be incomplete for the purpose of action generation. No action generation scheme can be guaranteed to achieve the goal within a given time limit, whatever the time limit. On the other hand, there exist control schemes for which the more time there is to achieve the actions, the higher the likelihood that actions can be synthesized to achieve the control goals. All of this leads to the conclusion that in the control of physical systems, the time required to assure that a control action will achieve the goal is unbounded.

The discussion in the previous paragraph leads to two desiderata for any action generation scheme for real-time control.

Desiderata:

• 1. For as large a range of goals and situations as possible, actions need to be generated rapidly and with a high likelihood of success. That is, we would like as much of the control as possible to be reactive.

• 2. Some provision needs to be made for what to do when the actions fail to meet the goals in the time available, as will inevitably happen sooner or later.

Desideratum 1 leads to the kind of modules at the lower levels of the layering in Fig. 3. The following Heuristics 2 and 3 say more about how the modules should be designed.

Heuristic 2. Design sensor systems such that the system to be controlled can be modeled as rapidly as possible.

As direct a mapping as possible should be made from sensor values to internal states that are related to important goals (especially threats to important goals). Techniques in the spirit of reflex or associative controls could be useful here. In fact, any technique whose knowledge can be characterized as "compiled," in the sense described in [Chandrasekaran, 1991], would be appropriate. However, there is a natural limit to how many of the situations can be covered in this way without an undue proliferation of sensors. So only the most common and important situations can be covered this way.

Heuristic 3. Design action primitives such that mapping from models to actions can be made as rapidly as possible.

Action primitives need to be designed such that they have as direct a relation as possible to achieving or maintaining the more important goals. A corresponding limit here is the proliferation of primitive actions.

Let us discuss Heuristics 2 and 3 in the context of some examples. In driving a car, the design of sensor and action systems has evolved over time to help infer the most dangerous states of the model or the most commonly occurring states as quickly as possible, and to help take immediate action. If the car has to be pulled over immediately because the engine is getting too hot - and if this is a vital control action - install a sensor that recognizes this state directly. On the other hand, we cannot have a sensor for every internal state of interest. For example, there is no direct sensor for a worn piston ring. That condition has to be inferred through a diagnostic reasoning chain, using symptoms and other observations. Similarly, as soon as some dangerous state is detected, the control action is to stop the car. Applying the brake is the relevant action here, and cars are designed such that this is available as an action primitive. Again, there are limits on the number of action primitives that can be provided. For example, the control action of increasing traction does not have a direct control action associated with it. A plan has to be set in motion involving a number of other control actions.

Desideratum 2 leads to the following heuristic.

Heuristic 4. Real-time control requires a framework for goal-abandonment and substitution. This requires as much pre-compilation of goals and their priority relations as possible.

As we drive a car and note that the weather is getting bad, we often decide that the original goal of getting to the destination by a certain time is unlikely to be achieved. Or, in the control of a nuclear power plant, the operator's attempts to achieve the goal of producing maximum power in the presence of some hardware failure might not be bearing fruit. In these cases, the original goal is abandoned and substituted by a less attractive but more achievable goal. The driver of the car substitutes the goal of getting to the destination an hour later. The power plant operator abandons the goal of power production, and instead pursues the goal of radiation containment.

How does the controller pick the new goal? It could spend its time reasoning about what goals to substitute at the least cost, or it could spend the time trying to achieve the new goal, whatever it might be. In many important real-time control problems replacement goals and their priorities can be pre-compiled. In the nuclear industry, for example, a prioritized goal structure called the safety function hierarchy is made available in advance to the operators. If the operator cannot maintain safe power production and decides to abandon the production goal, the hierarchy gives him the appropriate new goal. We acquire over time, as we interact with the world, a number of such goal priority relations. In our everyday behavior, these relations help us to navigate the physical world in close to real time almost always. We occasionally have to stop and think about which goals to substitute, but not often.
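A pre-compiled goal priority relation of the kind Heuristic 4 calls for can be as simple as an ordered list consulted at run time. The sketch below is only an illustration: `GOAL_PRIORITY`, `next_goal` and the goal labels are hypothetical, and an actual safety function hierarchy is far richer than a two-element list.

```python
from typing import Callable, List, Optional

# Goals ordered from most attractive to least attractive fallback.
GOAL_PRIORITY: List[str] = ["produce_maximum_power", "contain_radiation"]


def next_goal(abandoned: str,
              achievable: Callable[[str], bool]) -> Optional[str]:
    """Look up the replacement goal instead of reasoning about it at run time."""
    start = GOAL_PRIORITY.index(abandoned)
    for goal in GOAL_PRIORITY[start + 1:]:
        if achievable(goal):
            return goal
    return None  # nothing pre-compiled is left; deliberation is needed


print(next_goal("produce_maximum_power", achievable=lambda goal: True))
```

The lookup costs almost nothing, which leaves the available time for pursuing the substituted goal; only when the function returns `None` does the controller have to stop and think about which goal to adopt.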

Qualitative reasoning in prediction

The last set of heuristics that I will discuss pertain to the problem of prediction. Prediction, as I discussed earlier, is a common subtask in control. Even if a controller is well-equipped with a detailed quantitative model of the environment, the input to the prediction task may be only qualitative†. Of course, the model itself may be partly or wholly qualitative as well. de Kleer, Forbus and Kuipers have all proposed elements of a representational vocabulary for qualitative reasoning and associated semantics for the terms in it (see [Forbus, 1988] for a review of the ideas). The heuristics that I discuss below can be viewed as elements of the pragmatics of qualitative reasoning for prediction.

† I am using the word "qualitative" in the sense of a symbol that stands for a range of actual values, such as "increasing," "decreasing," or "Large." It is a form of approximate reasoning. The literature on qualitative physics uses the word in this sense. This sense of "qualitative" should be distinguished from its use to stand for "symbolic" reasoning as opposed to numerical calculation. The latter sense has no connotation of approximation.

Whatever framework for qualitative reasoning one adopts, there will necessarily be ambiguities in the prediction due to lack of complete information. The ambiguities can proliferate exponentially.

How do humans fare in their control of the physical world, in spite of the fact that qualitative reasoning is a veritable fountain of ambiguities? I have outlined some of the ways in which we do this in [Chandrasekaran, 1992]. The following simple example can be used to illustrate the ways in which we manage.

Suppose we want to predict the consequences of throwing a ball on a wall. By using qualitative physical equations (or just commonsense physical knowledge), we can derive a behavior tree with ever-increasing ambiguities. On the other hand, consider how human reasoning might proceed.

1. If nothing much depends on it, we just predict the first couple of bounces and then simply say, "it will go on until it stops."

2. If there is something valuable on the floor that the bouncing ball might hit, we don't agonize over whether the ball will hit it or not. We simply pick out this possibility as one that impacts a "Protect valuables" goal, and remove the valuable object (or decide against bouncing the ball).

3. We may bounce the ball a couple of times slowly to get a sense of its elasticity, and use this information to prune some of the ambiguities away. The key idea here is that we use physical interaction as a way of making choices in the tree of future states.

4. We might have bounced the ball before in the same room, and might know from experience that a significant possibility is that it will roll under the bed. The next time the ball is bounced, this possibility can be predicted without going through the complex behavior tree. Further, using another such experience-based compilation, we can identify the ball getting crushed between the bed and the wall. This possibility is generated in two steps of predictive reasoning.

5. Suppose that there is a switch on the wall that controls some device, and that we understand how the device works. Using the idea in 2 above, we note that the ball might hit the switch and turn it on and off. Then because we have a functional understanding of the device that the switch controls, we will be able to make rapid predictions about what would happen to the device. In some cases, we might even be able to make precise predictions by using available quantitative models of the device. The qualitative reasoning identifies the impact on the switch of the device as a possibility, which then makes it possible for us to deploy additional analytic resources on the prediction problem in a highly focused way.

The above list is representative of what I mean by the pragmatics of qualitative reasoning, which are the ways in which we manage to control the physical world well enough, in spite of the qualitativeness inherent in our reasoning. In fact, we exploit qualitativeness to reduce the complexity of prediction (as in point 5 above). The list above leads to the following heuristics.

Heuristic 5. Qualitative reasoning is rarely carried out for more than a very small number of steps.

Heuristic 6. Ambiguities can often be resolved in favor of nodes that correspond to "interesting" possibilities. Typically, interestingness is defined by threats to or supports for various goals of the agent.

Additional reasoning or other forms of verification may be used to check the occurrence of these states. Or actions might simply be taken to avoid or exploit these states.
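Heuristics 5 and 6 can be illustrated together by a depth-limited walk over a qualitative behavior tree that keeps only the goal-relevant states it encounters. The transition table `SUCCESSORS`, the state names and the set `INTERESTING` below are hypothetical, loosely modeled on the bouncing ball example; no particular qualitative physics formalism is implied.

```python
from typing import Dict, List, Set

# Hypothetical qualitative transitions: each state lists its possible successors.
SUCCESSORS: Dict[str, List[str]] = {
    "ball_thrown": ["hits_wall"],
    "hits_wall": ["bounces_to_floor", "hits_switch"],
    "bounces_to_floor": ["rolls_under_bed", "hits_valuable", "stops"],
    "hits_switch": ["device_toggled"],
}

# States that threaten or support some goal of the agent.
INTERESTING: Set[str] = {"hits_valuable", "hits_switch"}


def predict_interesting(start: str, max_steps: int = 2) -> Set[str]:
    """Expand the behavior tree for a few steps, reporting goal-relevant states."""
    frontier = [start]
    found: Set[str] = set()
    for _ in range(max_steps):
        # All qualitatively possible next states; this is where ambiguity grows.
        frontier = [nxt for state in frontier
                    for nxt in SUCCESSORS.get(state, [])]
        found |= INTERESTING.intersection(frontier)
    return found


print(sorted(predict_interesting("ball_thrown", max_steps=2)))
print(sorted(predict_interesting("ball_thrown", max_steps=3)))
```

The states returned are candidates for further verification or for direct avoidance, as described above; the rest of the tree is never examined in detail.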

Heuristic 7. Direct interaction with the physical world can be used to reduce the ambiguities so that further steps in prediction can be made.

Heuristic 7 is consistent with the proposals of the situated action paradigm in AI and cognitive science.

Heuristic 8. The possibility that an action may lead to an important state of interest can be compiled from a previous reasoning experience or stored from a previous interaction with the world. This enables the states to be hypothesized without the agent having to navigate the behavior tree generated from the more detailed physical model.

Heuristic 9. Prediction often has to jump levels of abstraction in behavior and state representation, since goals of interest occur at many different levels of abstraction.

Compiled causal packages that relate states and behaviors at different levels of abstraction are the focus of the work on Functional Representations, which is a theory of how devices achieve their functionality as a result of the functions of the components and the structure of the device. Research on how to use this kind of representation for focused simulation and prediction is reviewed in [Chandrasekaran, 1994].

Concluding Remarks

The reader will note that, as promised by the use of the term "Knowledge Level" in the title, I have avoided all discussion on specific representational formalisms. I have not gotten involved in the debates on fuzzy versus probabilistic representations, linear control versus nonlinear control, discrete versus continuous control and so on. All of these issues and technologies, important as they are, still pertain to the Symbol Level of control systems. The Knowledge Level discussion enabled us to get some idea both about what unifies the task of control, and about the reasons for the vast differences in actual control system design strategies. These differences are due to the different constraints on the various subtasks and different types of knowledge that are available. We can see an evolution in intelligence from reflex controls which fix the connection between observations and actions through progressively decreasing rigidity of connection between observations and actions, culminating in individual or social deliberative behavior which provides the most open-ended way of relating observations and actions. I also discussed a number of biologically motivated heuristics for the design of systems for controlling the physical world and illustrated the relevance of these heuristics by looking at some examples.

Acknowledgments

This research was supported partly by a grant from The Ohio State University Office of Research and College of Engineering for interdisciplinary research on intelligent control, and partly by ARPA, contract F30602-93-C-0243, monitored by USAF Rome Laboratories. I thank the participants in the interdisciplinary research group meetings for useful discussions.

References

[Chandrasekaran, 1991] B. Chandrasekaran, "Models vs rules, deep versus compiled, content versus form: Some distinctions in knowledge systems research," IEEE Expert, 6, 2, April 1991, 75-79.

[Chandrasekaran, et al, 1991] B. Chandrasekaran, R. Bhatnagar and D. D. Sharma, "Real-time disturbance control," Communications of the ACM, August 1991, Vol. 34, # 8, 33-47.

[Chandrasekaran, 1992] B. Chandrasekaran, "QP is more than SPQR and Dynamical systems theory: Response to Sacks and Doyle," Computational Intelligence, 8(2), 1992, 216-222.

[Chandrasekaran, et al, 1992] B. Chandrasekaran, Todd Johnson, Jack W. Smith, "Task Structure Analysis for Knowledge Modeling," Communications of the ACM, 35-9, Sep, 1992, 124-136.

[Forbus, 1988] Forbus, K. D., 1988, Qualitative Physics: Past, Present and Future, in Exploring Artificial Intelligence, H. Shrobe, ed., San Mateo, CA, Morgan Kaufmann, 239-96.

[Laird, et al, 1987] Laird, J.E., Newell, A. & Rosenbloom, P.S. SOAR: An architecture for general intelligence. Artificial Intelligence, 33, (1987), 1-64.

[Newell, 1981] Newell, A. The Knowledge Level. AI Magazine, Summer (1981), 1-19.