Point and Paste Question Answering

Sanda M. Harabagiu

Department of ComputerScience

University of Texasat Dallas

Richardson, Texas, 75083-0688

sanda@cs.utdallas.edu

Finley L~c~tu~u

Department of ComputerScience

University of Texasat Dallas

Richardson, Texas, 75083-0688

finley @student.utdallas.edu

Abstract

The informativeanswerto a question needssometimes

to be formattedas a summary

of a documentor set of

documents.

This scenariorequires a novelQuestionAnsweringarchitecturethat relies on a combination

of topical relations minedfromWordNet

and textual information availablefromdocuments

related to the question’s

topic. This paper presents a methodology

of pointing

out informationto be included in a summary

by using

extractiontemplates.Thepaperalso describeshowthe

summary

is generatedusing a small set of pastingoperators.

Introduction

Automatictextual Question Answering(QA)draws substantial interest both from research laboratories and the commercial world because it provides a promising solution to

the informationoverload we are currently facing. Instead of

browsingthrough long lists of documentswheneverwe need

answersto a question, we rather read a short text snippet

containing the answer we are looking for. Oneinteresting

aspect of QAis uncoveredif we take into accountthe observation that not all topics are createdequal. In a givenon-line

text collection, sometopics maybe covered by more documents whereas other topics are briefly mentioned. Whena

question asks about one of the more popular topics, summarization techniques need to replace that standard paragraph

retrieval techniques used in current QAsystems. If a documentcovers at length one of the topics, any of the paragraphs is relevant to the question asking about that topic.

Instead of selecting one of the paragraphs based on some

keyword-basedmetric, we argue that using summarization

techniques will produce more informative answers. If several documentscover different aspects of the same topic,

the answer should be generated by multi-document summarization techniques similar to those presented in (Radevand

McKeown1998).

In this paper we revisit the problemof QAby focusing on

questions that are answeredby a summary.Wethus study

the changes imposedon the three typical processes of a QA

system: (l) question processing; (2) documentprocessing

Copyright~) 2002, American

Associationfor Artificial Intelligence(www.aaai.org).

All rights reserved.

27

Paul Mor,~rescu

Department of ComputerScience

University of Texasat Dallas

Richardson, Texas, 75083-0688

pauim@student.utdallas.edu

and (3) answer formulation. Moreover, the techniques

employfor generating summariesas answer to question are

different from those developed for query-based summarization. At the core of our methodof generating topic-related

summariesis the lexico-semantic knowledgethat we discem for each topic in part. To be able to identify textual

informationthat is relevant to a question we reply on pattern matchingof linguistic rules against free text. Similar to

the InformationExtraction (IE) task developedfor the Message UnderstandingConferences(MUC),if a topic template

is known,we can rely on linguistic rules that recognize the

topic information. However,whena question is asked, no

template is provided. Instead, we generate an ad-hoc template based on topical relations that are mimedfrom the

WordNetlexico-semantie data. Whenthe topic is covered by

a single document,we generate a summaryusing sentences

that are extracted and compressesbased on lexico-semantic

and cohesive information brought forward by the WordNet

topical relations. If the topic is coveredby multiple documents, the summery

is generated by mergingtopic templates

and using someof the possible relations betweentemplates.

This form of fusing information is highly dependenton the

combinationof topic patterns minedfrom WordNetand the

lexico-semanticrelations discoveredin texts.

The remainingof this paper is organized as follows. Section 2 presents the topical relations that can be minedfrom

WordNet,whereasSection 3 presents the techniques for generating point-and-paste answers. Section 4 summarizesthe

conclusions.

Topical Relations

Lexical chains are sequences of semantically related words

found in a documentor across documents. They have been

used extensively in computationallinguistics to study: discourse, coherence, inference, implicatures, malapropisms,

automatic creation of hypertext links, and others (Morris

and Hirst 1991), (Hirst and St-Onge 1998), (Green 1999),

(Harabagiu and Moldovan1998). Current lexical chainers take advantage only of the WordNetrelations between

synsets, totally ignoring the glosses. A far better lexical

chaining technology can be developed based on Extended

WordNet

since the interconnectivity of synsets will largely

increase via the gloss concepts. Considerthe followingtext:

The mine has revitalised diamondproduction. One cannot

{SHi

}: (CHi

J)

ISml: (Cm’i)

.------’¢" {Sjl: (Cj.

k)

l

~

/

{Si} : (CidJ

/\

- .I )

{Shi}

: (Ch;

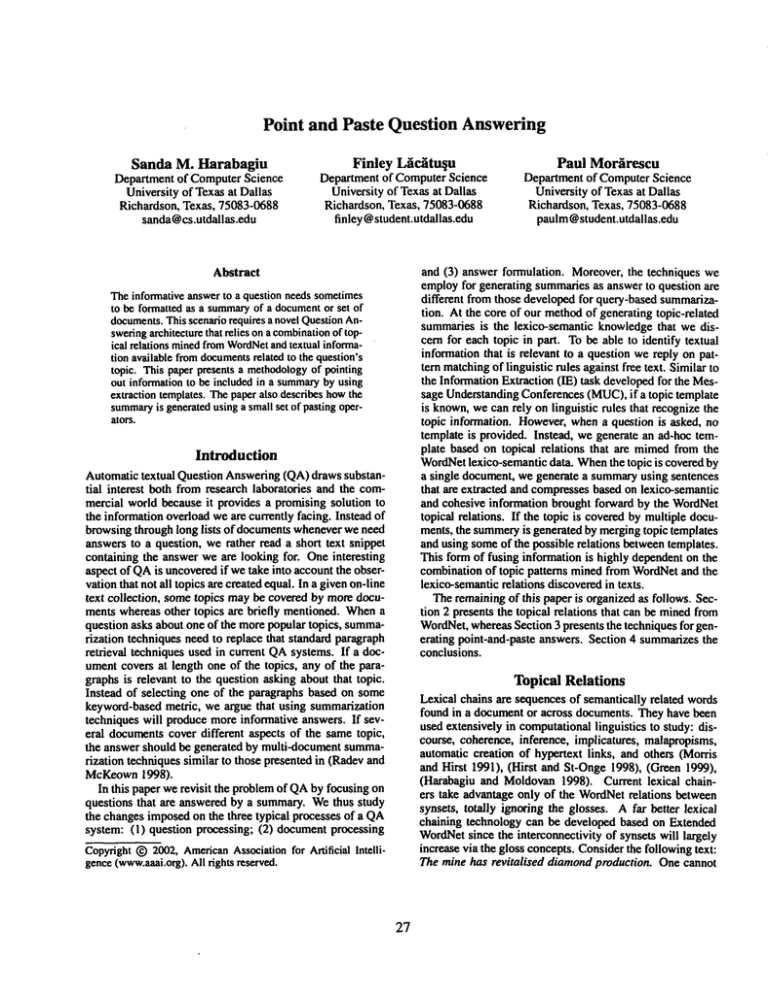

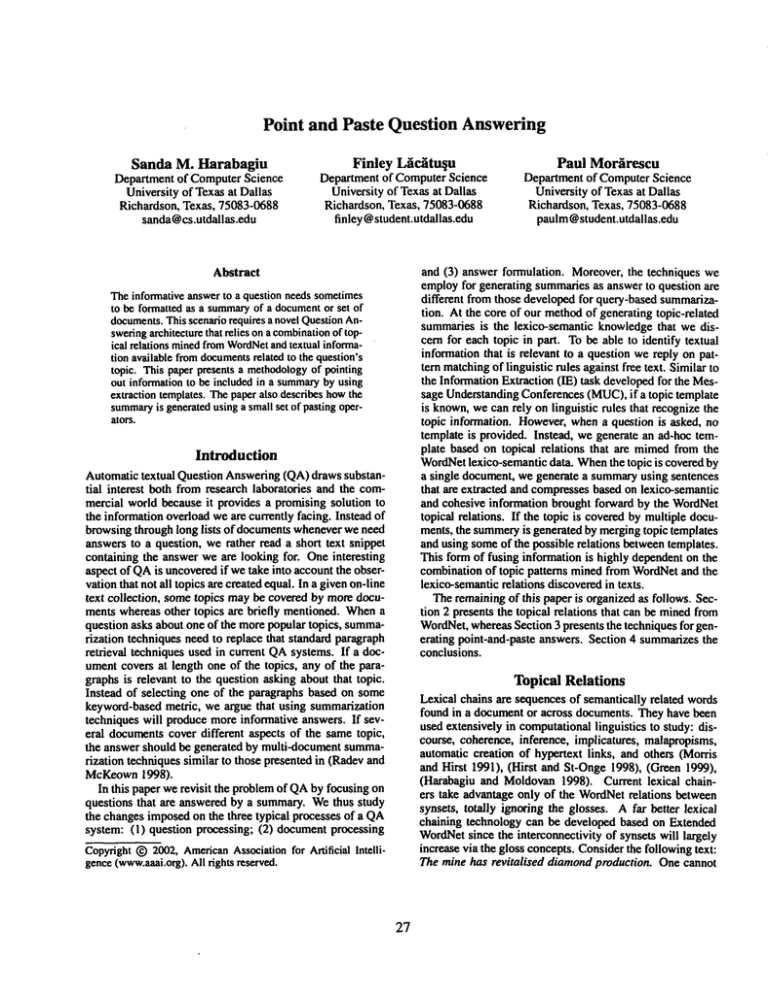

Figure 1: topical" relations are extracted’ffbmseveral sources

Morphological

Gloss

relation

Synset

(the soundof laughing:v#1)

noun - verb

laughter:n#1

immediately:r#3 (beating an immediate:a#2relation)

adverb- adjective

(take out insurance:n#3for)

verb - noun

insure:v#4

(..characteristic of or befitting a parent:n#1) adjective - noun

parental:adj#2

Table 1: Examplesof newmorphologicalrelations revealed by the topical relations

explain the cohesion,and the intention of this simple text by

using only the existing lexico-semantic relations like those

encoded in WordNet(Fellbaum 1998). Typical relations

encoded in lexico-semantic knowledgebases like WordNet

comprise hypernymy, or IS-A relations, meronymyor PARTOFrelations, entaibnent or causation relations. Noneof

these relations can be established betweenany of the words

from the abovetext. However,it is obvious that this short

text is both cohesive, as wordshave morethan syntactic relations joining them, and coherent,as it is logical. Relations

that account for the cohesionof the abovetext can be mined

from WordNet’sglosses that define the encoded concepts.

In WordNeteach concept is represented through a synonym

set of words sharing the same part-of-speech. Such synonymsets are knownarts synsets. The words used to define the synset {mine} are related to the wordsused to define the synset {diamond}. Furthermore, these two synsets

are related to other synsets containing wordssuch as ring,

bracelet or gemand precious. Similarly, production, market

and mine are related to the nameof big diamondconcerns

like De Beers. All these relations convergetowardthe topic

of "diamondmarket", bringing forward all the various concepts that define the topic. This is whywe call themtopical

relations.

A good source for topical relations is WordNet,namely

the glosses defining each synset. Topical relations are pointers that link a synset to other related synsets that mayoccur in a discourse. Topic changes from a discourse to another, and the sameconcept maybe used in different contexts. Whenthe context changes, the concepts used change.

For example, the diamondmarket can be discussed from a

financial, or geological, or gem-jewelrypoint of view. In

each case, the concepts used wouldbe quite different. This

pluralism of topics for the sameconcept creates confusion

and difficulty in identifying related concepts.

Thesourcesfor the topical relations are illustrated in Figure

1.

1. The first place wherewe look is the gloss of each concept. Since the gloss concepts are used to define a synset,

28

they clearly are related to that synset. Fromthe gloss definition we extract all the nouns, verbs, adjectives and adverbs,

less someidiomatic expressions and general concepts that

are not specific to that synset. For example,the gloss of the

concept diamondis (a transparent piece of diamondthat has

beencut and polished and is valued as a precious gent)

2. Eachconcept Ci,j in the gloss of synset Si points to a

synset Sj that has its owngloss definition. The concepts

C’./,~ mayalso be relevant to the original synset Si. Although

this mechanism

can be extendedfurther, we will stop at this

level. For the case of the gloss of diamond,we shall have

pointers to the glosses of gem,cut, polish, valued and precious.

3. A third source is the hypernymSHi and its gloss with

concepts CHi,I. For examplethe synset {jewelry, jewelry.}

is one of the hypernymsof diamond,having its owngloss.

Other relations such as causation, entailment and meronymy

are treated in the samewayas the hypernymy.

4. Yet another source consists of the glosses in whichsynset

Si is used. Thosesynsets, denoted in the figure with Smare

likely to be related to Si since this is part of their definitions.

This schemeof constructing topical relations connects a

synset to manyother related synsets, somefrom the same

part of speechhierarchy, but manyfromother part of speech

hierarchies. The increased connectivity betweenhierarchies

is an important addition to WordNet.Moreover,the creation

of topical relations is a partial solution for the derivational

morphologyproblem that is quite severe in WordNet.In

Table I we showa few examplesof morphologicallyrelated

wordsthat appear in synsets and their glosses whichwill be

linked by the newtopical relations.

Paths betweensynsets via topical relations

Figure 2 showsfive synsets St to $5, each with its topical

relation list representedas a vertical line. In the list of synset

Si there are relations rij pointing to Sj.

It is possible to establish some connections between

synsets via topical relations. Wepropose to develop software that automatically provides connecting paths between

any two synsets Si and Sj up to a certain distance as shown

!

:

five synsets

paths that can be es blished betweenthe i lynsets

in Table 2. The meaningof these paths is that the concepts

along a path have in common

that they mayoccur in a discourse, thus are topically related. These paths should not

be confused with semantic distance or semantic similarity

paths that can also be established on knowledgebases by

using different techniques.

Explanation: polish:v#1 is part of the definition of synset

diamond:n#1,

thus it is on its topical relations list.

Example 2: Is there any topical relation between ring

and diamond?

Answer: there is a W-path: diamond:n#1 ~ gem:n#1

ring:n#1

Explanation: gem:n#1is found in the definition of diamond:n#1and is also found in the definition of ring:n#1.

I Name

I Path

I

V- path

W- path

Si -Sk-Sj

VW- path s~ -s~-s~-s,

WW

- path &-s~-&-sm-sj

Table2: Typesof topically related paths

Example 3: Is there a topical connection between diamondand gold?.

Answer:there is a VW-path:diamond:n#1--* gem:n#1*-ring:n#1--+ gold:n#1

Explanation: diamond:n#1has gem:n#1in its definition,

which in turn is in the definition of r/rig:n#1 which is

defined by gold:n#1.

The nameof the paths was inspired by their shape in Figure 2. The V path links directly Si and Sj either via a rij or

rj,i, namelyeither Si has Sj in its list of topical relations or

vice versa. The Wpath allows one other intermediate synset

between Si and Sj. Similarly, the VWpath allows two intermediate synsets and the WW

path allows three intermediate synsets. Welimit the length of the path to five synsets.

For each type of path, there are several possible connections

depending on the direction of the connection between two

adjacent synsets (i.e. via ri,j or rj,D. Theseconnections

are easily established with knownsearch methods. When

searching for all topical relations of a given concept, wemay

assumethat we try to establish whetherthere are topical relations betweenthat concept and any other concept encoded

in WordNet.To speed-up the search, we rely on hash tables

of all possible paths betweenany sysnet and any other possible sysntet fromWordNet.In this way,at processing time,

retrieving all conceptsthat characterize a topic is a fast and

robust process.

Examples

Supposeone wants to find out whether or not there are any

topical relations between two words. The system will try

to establish topical paths betweenany combinationof the

senses of the two words. Here are someexamples:

Example4: Is there a possible topical relation between

diamond and mine?

Answer:there is a WW-path:diamond:n#1t-- gem:n#1--r

ring:n#4 --* gold:ngl ~ mine:n#1

Explanation: gold:n#1 is in the definition of mine:n#1,and

the rest is as before.

Answering questions with summaries

There are questions whoseanswer cannot be directly mined

from large text collections because the informationthey request is spread across full documentsor across several documents. Instead of returning all those documents,the answer

should summarizethe textual information contained in the

texts. To generate summariesas response to a question, the

architecture of the typical QAsystemneeds to be modified.

Figure 3 illustrates the newQAarchitecture capable of answeringquestion of the type "Whatis the situation with the

diamond market?".

Instead of capturingthe semanticsof the questionby classifying against the question stem (e.g. what, whoor how),

the question processingmoduleidentifies the topic indicated

by the question. Topicidentification is performedby first ex-

Example 1: Is there any topical relation between diamondand polish?

Answer:there is a V-path: diamond:n#1

--+ polish:v#1 t

part-of-speechof the synset and by the sense of that word.For

parts-of-speech,wedenotenounsas n, verbs as v, adjectivesas a

and adverbsas r, followingthe samenotationas in WordNet.

tWedenote a synset by one of its elements,followedby the

29

Input article set

Input article

~

GISTexter

Single-Document

Summarizer

T

I SentenceExtracti°n ~

_

~

i

~ s~fo: Document

I,

.---...

,"" Comusot

"",~

: human-written:9

¯ . abstracts ."

Sum~

Figure 4: Architecture of GISTEXTER

Question

Processing

Multi-Document

Summary Summarization

System

Figure 3: Architecture of QAsystems that answer question

with a summary.

tracting the context wordsfrom the question and then mining their associated topical relations from WordNet.Topical

paths that display a high degree of redundancyare grouped

as separate topics and are used to process the documents.Instead of performingparagraphretrieval as it is donein current fact-based QAsystem, documentprocessing involves

a topical categorization of the collection and returns either

single documentsthat are characteristic of the topic or set

of such documents.Finally, a summarizationsystem generates the answerto the question in the form of a summaryof

predefinedsize or compressionrate.

For the purpose of summarization,we have trained a summarization system on the corpus of documentsand correspondingabstracts written by four different humanassessors

for the DUC-2001

evaluations. Our system, called GiSTEX-

3O

TERgeneratesboth single-documentand multi-document

summaries.

Twoassumptions

stay at the core of our techniques:(1)

whengenerating the summary

of a single document,we

shouldextract the sameinformationa human

wouldconsider

whenwriting an abstract of the samedocument;(2) when

generating a multi-documentsummary,weshould capture

the textual informationsharedacrossthe document

set. To

this end, for the multi-document

summaries

wehaveapplied

the CICERO

InformationExtraction (IE) systemto generate

extraction templates for someof the topics that werealready

encoded in the multi-domain knowledgeof CICERO

2.

For the topics that werenot coveredby CICEROwehad a

back-upsolution of gisting informationby combining

cohesion and coherence

indicators for sentenceextraction. By

definition, gisting is an activity in whichthe information

taken into accountis less than the full informationcontent

available, as reported in (Resnik 1997). In our backupsolution, getting the gist of the summary

information is performed by assumingthat the more cohesive the gisted information is, the moreessential it is to the multi-document

summary.In addition coherence cue phrases were considered for information extraction. At the core of getting the

gist of the summarystay topical relations extracted from

WordNet.

The architecture of G ISTEXTER

is shownin Figure 4. Input to the systemis either a single document

or a set of documents. Whena summaryfor a single documentis sought,

in the first stage key sentences are extracted, as in mostof

the current summarizers.A sentence extraction function is

2CICEROis an ARDA-sponsored

on-goingproject that studies

the effects of incorporatingworldknowledge

into IE systems.CICERO

is being developedat LanguageComputerCorporation.

learned, based on features of the single-documentdecwnposition that analyzes the features of human-writtenabstracts

for single documents.Tofurther filter out un-necessaryinformation, the extracted sentences are then compressed.In

the last stage a summary

reduction is performed,to trim the

whole summaryto the length of 100 words.

If multi-documentsummariesare sought, two situations

arise:

Case 1: the topic of the documentset has been encodedin

the multi-domain knowledgeof CICERO,therefore the IE

systemextracts templates filled with informationrelevant to

the topic of the documentset. To fill extraction templates

CICEROperforms a sequence of operations, implemented

as cascaded finite-state automata. The texts are tokenized

and the namedentities are semantically disambiguated,then

phrasal parses are generated and combinedinto noun and

verb groupstypical to a given topic of interest. Coreference

betweenentities is resolved and the mentionsof the events

of interest are identified. Beforemerginginformationreferring to the sameevent, coreference betweenentities related

to the sameevent is resolved. The textual information that

fills the templates is used for content planning and generation of the multi-documentsummary.

Case 2: the topic is new and unknown.In this case the

human-writtenabstracts are decomposedto analyze the cohesive and coherence cues that determine the extraction of

textual information for the multi-document summary.For

the analysis of the cohesive information, we use the topical relations mined from WordNetas well as the semantic

classes of the namedentities provided by CICERO.

The coherence information is indicated by cue phrases such as because, therefore or but but also by very dense topical relations betweenthe wordsof the text fragment.

2. Application of SummaryOperators - after commonfeatures of multiple templatesare identified and the distinct

features are markedup, summaryoperators are applied

to link information from different templates. Often this

results into the synthesis of newinformation. For example, a generalization maybe formed from two independent facts. Alternatively, since almostall templateshavea

TIME and a LOCATIONslot, contrasts can be highlighted

by showinghowevents changes along time or across different locations. The operators we encoded in GISTEXTERare listed in Table3 and weredefined and first introduced in (Radev and McKeown

1998).

3. Orderingof Templatesatut Linguistic Generation- whenever an operator is applied, a newtemplate is generated

by merginginformation from the argumentsof the operator. All such resulting templatesare scored highly then the

extracted templates. Moreover,dependingon the slots the

operators merged,the scores havedifferent values. At the

end, information from templates with higher importance

appear with priority in the resulting summary.Weuse the

topical relations to realize only those sentence fragments

that are identified by extraction patterns.

Name

Changeof Perspective

Definition

Thesameevent described

differently

Contradiction

Conflictinginformationaboutthe

sameevent

Addition

Additionalinformationabout an event

is broughtforward

Refinement

Moregeneral informationabout an

eventis refinedlater

Superset/Generalization Combinetwo incomplete

templates

Trend

Severaltemplateshavecommon

fillers

for the sameslot

Single-Document Summarization

To generate lO0-wordsummariesfrom single textual documents, we have implementeda sequence of three operations:

Table 3: SummaryOperators.

1. SentenceExtraction- identifying sentences in the original

documentthat contain information essential for the summary;

Whenthe topic is not encoded in CICERO, we need to

further implementtwoadditional steps:

1. Automatic Generation of the Extraction Template - in

whichtopical relations are used to find redundantinformation,and thus use it as slots of the template;

2. AutomaticAcquisition of Extraction Patterns - in which

linguistic rules capable of identifying informationrelevant to the topic in text and to extract it by filling the

slots of the template. Usually, such textual information

represents only about 10%of a document.

For generating extraction templates we collect all the sentences that contain at least twoconceptsthat are related to

the topic. Wethen categorize semanticallyall these concepts

and select the most common

classes observed in the corpus.

To these classes we add NamedEntity classes (e.g. PERSON,

LOCATION,DATEand ORGANIZATION).

We consider the

eachof these twotypes of classes as the slots of the template.

Whenthe extraction template is ready, extraction patterns

are learned by applying the bootstrapping techniques de-

2. Sentence Compression- reducing the extracted sentences

by filtering out all non-importantinformation while preserving the grammaticalquality;

3. SummaryReduction - trimming the resulting summaryto

the required 100 word/documentlimit.

The sentence extraction is based on the topical relations,

whereas sentence compression combines the topical relations with syntactic dependencies. The summaryreduction

simply retains only the first I00 wordsfrom the summary.

Multi-Document Summarization

The generation of multi-documentsummariesis a two-tired

process. Whenthe topic is one of the 20 topics encodedin

CICERO, we generate the summaryas a sequence of three

operations:

1. TemplateExtraction - filling relevant information in the

slots of predefinedtemplates correspondingto each topic;

31

scribed in (Riloff and Jones 1999). If there is any document from which we cannot extract at least a template, we

eliminate the slot corresponding to the least popular semantic class and continue until we have at least on template per

document.

The extraction techniques have the role of pointing where

the information that should be included in the summaryexists. But we also need to paste this information in a coherent

and cohesive summary. This form of generation was first

introduced in (.ling and McKeown2000). Instead of using

knowledge represented in the form of templates alone, cutand-paste operators provide a way of generating summaries

by transforming sentences selected from the original documents because they were matched by extraction patterns.

The cut-and-paste operators are listed in Table 4.

Name

Sentence reduction

Sentence combination

Paraphrasing

Generalization/Specification

Reordering

S. Harabagiu. M. Pa~ca and S. Maiorano. Experiments with

Open-DomainTextual Question Answering. In Proceedings of

the 18th International Conference on ComputationalLinguistics

(COLING-2000),August 2000, Saarhruken Germany,pages 292298.

S. Harabagiu, D. Moidovan,M. Pa~ca, R. Mihalcea, M. Surdeanu,

R. Bunescu,R. Girju, V. Rusand P. Mor~rescu.Therole of lexicosemantic feedback in open-domaintextual question-answering. In

Proceedings of the 39th Annual Meeting of the Association for

ComputationalLinguistics (ACL-2001),Toulouse, France, 2001,

pages 274-28 I.

G. Hirst and D. St-Onge. Lexical Chains as Representations

of Context for the Detection and Correction of Malapropisms.

WordNet-AnElectronic Lexical Database. The MIT Press,

C. Fellbaumeditor, pp 305-332, 1998.

E. Hovy, L. Gerber, U. Hermjakob,C-Y. Lin, D. Ravichandran.

Towardsemantic-based answer pinpointing. In Proceedings of

the HumanLanguage Technology Conference (HLT-2001), San

Diego, California, 2001.

H. Jing and K. McKeown.

Cut and Paste Text Summarization. In

Proceedingsof the First Meeting of the North AmericanChapter

of the Association of ComputationalLinguistics (NAACL-2000),

pages 178-185, 2000.

W. Lehnert. The Process of Question Answering: a computer

simulation of cognition. LawrenceErlbaum,! 978.

G.A. Miller. WordNet:a lexical database. Communicationsof

theACM,38(I I):39--41, 1995.

J. Morris and G. Hirst. Lexical CohesionComputedby Thesaural

Relations as an Indicator of the Structure of Text. In Computational Linguistics, Vol.! 7, no 1, pp. 21-48,! 99 !.

D.R. Radev and K.R. McKeown.Generating natural language

summariesfrom multiple on-line sources. Computational Linguistics, 24(3):469-500, 1998.

P. Resnik. Evaluating Multilingual Gisting of WebPages. AAA!

Spring Symposiumon Natural LanguageProcessing for the World

Wide Web, 1997.

E. Riloff and R. Jones. Learning Dictionaries for Information

Extraction by Multi-LevelBootstrapping. Proceedingsof the Sixteenth National Conferenceon Artificial Intelligence (AAAI-99),

pages 474-479, 1999.

Definition

Removeextraneous phrases

from sentence

Mergetext from

several sentences

Replacephrases with their

paraphrases

Replace phrases with more

general/specific phrases

Changethe order of sentences

Table 4: Cut-and-Paste Operators.

Conclusions

In this paper we described a new paradigm for answering

natural language questions. Whenevera question relates to

a topic that is discusses extensively in a document or set

of documents, the answer produced is a summary of those

texts. The generation of the summaryrelies on mining topical relations from the WordNetknowledge base. In this way,

answers are mined both from the texts and the lexical knowledge base encode in WordNet.

Acknowledgments: This research

ARDA’s AQUAINTprogram.

was partly

funded by

References

Christiane Fellbaum(Editor). WordNet- AnElectronic Lexical

Database. MITPress, 1998.

S.J. Green. Building Hypertext Links by ComputingSemantic

Similarity. IEEETransactions on Knowledgeand Data Engineering, pp 713-730,Sept/Oct1999.

S.M. Harabagiu and D.L Moldovan.KnowledgeProcessing on an

Extended WordNet. W0rdNet-AnElectronic Lexical Database.

The MITPress,C. Fellbaumeditor, pp 379-406, 1998.

S. Harabagiu, G.A. Miller and D. Moldovan.WordNet2 - A Morphologically and Semantically EnhancedResource. In Proceedings of SIGLEX-99,June 1999, Univ. of Maryland,pages I-8.

S. Harabagiuand S. Maiorano.Finding answers in large collections of texts: Paragraphindexing + abductive inference. In AAAI

Fall Symposiumon Question Answering Systems, pages 63-71,

November1999.

32