From: AAAI Technical Report SS-02-04. Compilation copyright © 2002, AAAI (www.aaai.org). All rights reserved.

Team Coordination among Distributed Agents: Analyzing Key Teamwork Theories and Models

David V. Pynadath and Milind Tambe

Computer Science Department and Information Sciences Institute

University of Southern California

4676 Admiralty Way, Marina del Rey, CA 90292

Email: pynadath@isi.edu, tambe@usc.edu

Abstract

Multiagent research has made significant progress in constructing teams of distributed entities (e.g., robots, agents, embedded systems) that act autonomously in the pursuit of common goals. There now exist a variety of prescriptive theories, as well as implemented systems, that can specify good team behavior in different domains. However, each of these theories and systems addresses different aspects of the teamwork problem, and each does so in a different language. In this work, we seek to provide a unified framework that can capture all of the common aspects of the teamwork problem (e.g., heterogeneous, distributed entities, uncertain and dynamic environment), while still supporting analyses of both the optimality of team performance and the computational complexity of the agents' decision problem. Our COMmunicative Multiagent Team Decision Problem (COM-MTDP) model provides such a framework for specifying and analyzing distributed teamwork. The COM-MTDP model is general enough to capture many existing models of multiagent systems, and we use this model to provide some comparative results of these theories. We also provide a breakdown of the computational complexity of constructing optimal teams under various classes of problem domains. We then use the COM-MTDP model to compare (both analytically and empirically) two specific coordination theories (joint intentions theory and STEAM) against optimal coordination, in terms of both performance and computational complexity.

Introduction

A central challenge in the control and coordination of distributed agents, robots, and embedded systems is enabling these different, distributed entities to work together as a team, toward a common goal. Such teamwork is critical in a vast range of domains, e.g., for future teams of orbiting spacecraft, sensor teams for tracking targets, teams of unmanned vehicles for urban battlefields, software agent teams for assisting organizations in rapid crisis response, etc. Research in teamwork theory has built the foundations for successful practical agent team implementations in such domains. On the forefront so far have been theories based on belief-desire-intentions (BDI) frameworks (e.g., joint intentions (Cohen & Levesque 1991), SharedPlans (Grosz & Kraus 1996), and others (Sonenberg et al. 1994)) that have provided prescriptions for coordination in practical systems.

These theories have inspired the construction of practical, domain-independent teamwork models (Jennings 1995; Rich & Sidner 1997; Tambe 1997; Yen et al. 2001), successfully applied in a range of complex domains. While the BDI-based theories continue to be useful in practical systems, there is now a critical need for complementary foundational frameworks as practical teams scale up towards complex, real-world domains. Such complementary frameworks would address some key weaknesses in the existing theories, as outlined below:

• Existing teamwork theories provide prescriptions that usually ignore the various uncertainties and costs in real-world environments. While such frameworks can still provide satisfactory behavior, it is difficult to evaluate the degree of optimality of the behavior suggested by these theories/models. For instance, the joint intentions theory (Cohen & Levesque 1991) suggests that team members commit to attainment of mutual beliefs in key circumstances, but it ignores the cost of attaining mutual belief (e.g., via communication). Coordination strategies that blindly follow such prescriptions may lead to highly suboptimal implementations. While in some cases optimality may be practically unattainable, understanding the strengths and limitations of coordination prescriptions is definitely useful.

Of course, while existing theories have avoided costs and uncertainties, practical systems cannot do so in real-world environments. For instance, STEAM (Tambe 1997) extends the BDI-logic approach with decision-theoretic extensions. Unfortunately, while such approaches succeed in their intended domains, their very pragmatism often necessarily leads to a lack of theoretical rigor. Furthermore, it remains unclear whether their suggested prescriptions (e.g., STEAM's prescriptions) necessarily lead to optimal team coordination.

• Existing teamwork theories have so far failed to provide insights into the computational complexity of various aspects of teamwork decisions. Such results may show intractability of some coordination strategies in specific domains and potentially justify the use of practical teamwork models as an approximation to optimality in such domains.

To answer these needs, we propose a new complementary framework, the COMmunicative Multiagent Team Decision Problem (COM-MTDP), inspired by earlier work in economic team theory (Marschak & Radner 1971; Ho 1980), within the context of economics and operations research. While our COM-MTDP model borrows from a theory developed in another field, we are not just dressing up old wine in a new bottle. We make several contributions in applying and extending the original theory for applicability to intelligent distributed systems. First, we extend the theory to include key issues of interest in the distributed AI literature, most notably by explicitly including communication. Second, we introduce a categorization of teamwork problems based on these extensions. Third, we provide complexity analyses of these problems, providing a clearer understanding of the difficulty of teamwork problems under different circumstances. For instance, some researchers have advocated teamwork without communication (Matarić 1997). This paper clearly identifies domains where such team planning remains tractable, at least when compared to teamwork with communication. It also identifies domains where communication provides a significant advantage in teamwork by reducing the time complexity.

In addition to analyzing complexity, our framework supports the analysis of the optimality of different means of coordination. We isolate the conditions under which coordination based on joint intentions (Cohen & Levesque 1991) is preferred, based on the resulting performance of the distributed team at the desired task. We provide similar results for coordination based on the STEAM algorithm (Tambe 1997). We also analyze the complexity of the decision problem within these frameworks. The end result is the beginnings of a well-grounded characterization of the complexity-optimality tradeoff among various means of team coordination.

Finally, we are currently conducting experiments using the domain of simulated helicopter teams conducting a joint mission. We are evaluating different coordination strategies, and comparing them to the optimal strategy that we can compute using our framework. We will use the helicopter team as a running example in our explanations in the following sections.

Multiagent Team Decision Problems

Given a team of selfless, distributed entities, $\alpha$, who intend to perform some joint task, we wish to evaluate the effectiveness of possible coordination policies. A coordination policy prescribes certain behavior, on top of the domain-level actions the agents perform in direct service of the joint task. We begin with the initial team decision model (Ho 1980). This initial model focused on a static decision, but we provide an extension to handle dynamic decisions over time. We also provide other extensions to the individual components to generalize the representation in ways suitable for representing multiagent domains. We represent our resulting multiagent team decision problem (MTDP) model as a tuple, $\langle S, A, P, \Omega, O, R \rangle$. The remainder of this section describes each of these components in more detail.

World States: S

• $S$: a set of world states (the team's environment).

Domain-Level Actions: A

• $\{A_i\}_{i \in \alpha}$: a set of actions available to each agent $i \in \alpha$, implicitly defining a set of combined actions, $A \equiv \prod_{i \in \alpha} A_i$.

These actions consist of tasks an agent may perform to change the environment (e.g., change helicopter altitude/heading). These actions correspond to the decision variables in team theory.

Extension to Dynamic Problem: The original conception of the team decision problem focused on a one-shot, static problem. The typical multiagent domains require multiple decisions made over time. We extend the original specification so that each component is a time series of random variables, rather than a single one. The interaction between the distributed entities and their environment obeys the following probabilistic distribution:

• $P$: state-transition function, $S \times A \to \Pi(S)$.

This function represents the effects of domain-level actions (e.g., a flying action changes a helicopter's position). The given definition of $P$ assumes that the world dynamics obey the Markov assumption.

Agent Observations: $\Omega$

• $\{\Omega_i\}_{i \in \alpha}$: a set of observations that each agent, $i$, can experience of its world, implicitly defining a combined observation, $\Omega \equiv \prod_{i \in \alpha} \Omega_i$.

In a completely observable case, this set may correspond exactly to $S \times A$, meaning that the observations are drawn directly from the actual state and combined actions. In the more general case, $\Omega_i$ may include elements corresponding to indirect evidence of the state (e.g., sensor readings) and actions of other agents (e.g., movement of other helicopters). In a team decision problem, the information structure represents the observation process of the agents. In the original conception, the information structure was a set of deterministic functions, $O_i : S \to \Omega_i$.


Extension of Allowable Information Structures: Such deterministic observations are rarely present in nontrivial distributed domains, so we extend the information structure representation to allow for uncertain observations. There are many choices of models possible for representing the observability of the domain. In this work, we use a general stochastic model, borrowed from the partially observable Markov decision process model (Smallwood & Sondik 1973):

• $\{O_i\}_{i \in \alpha}$: an observation function, $O_i : S \times A \to \Pi(\Omega_i)$, that gives a distribution over possible observations that an entity can make. This function models the sensors, representing any errors, noise, etc. Each $O_i$ is the cross product of observation functions of the state and of the actions of other entities: $O_{iS} \times O_{iA}$, respectively. We can define a combined observation function, $O : S \times A \to \Pi(\Omega)$, by taking the cross product over the individual observation functions: $O = \prod_{i \in \alpha} O_i$. Thus, the probability distribution specified by $O$ forms the richer information structure used in our model.

We can make some useful distinctions between different classes of information structures:

Collective Unobservability: This is the general case, where we make no assumptions on the observations.

Collective Observability: $\forall \omega \in \Omega$, $\exists s \in S$ such that $\Pr(S^t = s \mid \Omega^t = \omega) = 1$. In other words, the state of the world is uniquely determined by the combined observations of the team of agents. The set of domains that are collectively observable is a strict subset of the domains that are collectively unobservable.

Individual Observability: $\forall \omega_i \in \Omega_i$, $\exists s \in S$ such that $\Pr(S^t = s \mid \Omega_i^t = \omega_i) = 1$. In other words, the state of the world is uniquely determined by the observations of each individual agent. The set of domains that are individually observable is a strict subset of the domains that are collectively observable.
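The two observability conditions can be checked mechanically in a small, finite model. The following is a minimal sketch, assuming a tabular observation function that depends on the state alone and assuming every listed state is reachable; the function name `uniquely_determines_state` and the dictionary layout are illustrative choices, not part of the original formalism. Applied to the combined observation table it tests collective observability; applied to each agent's own table it tests individual observability.

```python
from typing import Dict, Hashable

# Hypothetical tabular layout: obs_fn[state][observation] = probability.
ObsFn = Dict[Hashable, Dict[Hashable, float]]


def uniquely_determines_state(obs_fn: ObsFn) -> bool:
    """Return True if each observation with nonzero probability can arise
    from exactly one state, so that Pr(S = s | observation) = 1."""
    source_state = {}
    for state, dist in obs_fn.items():
        for obs, prob in dist.items():
            if prob <= 0.0:
                continue
            if source_state.setdefault(obs, state) != state:
                return False  # the same observation is possible in two states
    return True


# Combined observations of a two-agent team (pairs of sensor readings).
combined = {
    "radar_near": {("blip", "blip"): 0.5, ("blip", "none"): 0.5},
    "radar_far": {("none", "none"): 0.5, ("none", "blip"): 0.5},
}
# The second agent alone may see either reading in both states.
agent2_only = {
    "radar_near": {"blip": 0.5, "none": 0.5},
    "radar_far": {"none": 0.5, "blip": 0.5},
}

print(uniquely_determines_state(combined))     # collective check
print(uniquely_determines_state(agent2_only))  # individual check for agent 2
```

In this toy domain the team as a whole can pin down the state, while the second agent on its own cannot, so the domain is collectively but not individually observable.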

Policy (Strategy) Space: $\pi_A$

• $\pi_{iA}$: a domain-level policy (or strategy, in the original team theory specification) to govern each agent's behavior.

Each such policy maps an agent's belief state to an action, where, in the original formalism, the agent's beliefs correspond directly to its observations (i.e., $\pi_{iA} : \Omega_i \to A_i$).

Extension to Richer Strategy Space: We generalize the set of possible strategies to capture the more complex mental states of the agents. Each agent, $i \in \alpha$, forms a belief state, $b_i^t \in B_i$, based on its observations seen through time $t$, where $B_i$ circumscribes the set of possible belief states for the agent. We define the set of possible combined belief states over all agents to be $B \equiv \prod_{i \in \alpha} B_i$. The corresponding random variable, $b^t$, represents the agents' combined belief state at time $t$. Thus, we define the set of possible domain-level policies as mappings from belief states to actions, $\pi_{iA} : B_i \to A_i$. We elaborate on different types of belief states and the mapping of observations to belief states (i.e., the state estimator function) in a later section.

Reward Function: R

• $R$: reward function (or payoff function, or loss criterion), $S \times A \to \mathbb{R}$.

This function represents the team's joint preferences over domain-level states (e.g., destroying the enemy is good, returning to home base with only 10% of the original force is bad). The function also represents the cost of domain-level actions (e.g., flying consumes fuel). Thus, all the agents share a common interest. Following the original team-theoretic specification, we assume a Markovian property for $R$.

Extension for Explicit Representation of Communication: $\Sigma$

We make an explicit separation between the domain-level actions ($A$ in the original team-theoretic model) and communication actions. In principle, $A$ can include communication actions, but the separation is useful for the purposes of this framework. Thus, we extend our initial MTDP model to be a communicative multiagent team decision problem (COM-MTDP), which we define as a tuple, $\langle S, A, \Sigma, P, \Omega, O, R \rangle$, with the additional component, $\Sigma$, and an extended reward function, $R$, as follows:

• $\{\Sigma_i\}_{i \in \alpha}$: a set of possible "speech acts", implicitly defining a set of combined communications, $\Sigma \equiv \prod_{i \in \alpha} \Sigma_i$. In other words, an agent, $i$, may communicate an element, $x \in \Sigma_i$, to its teammates, who interpret the communication as they wish (e.g., broadcast arrival in enemy territory). We assume here perfect communication, in that each agent immediately observes the speech acts of the others without any noise or delay.

• $R$: reward function, $S \times A \times \Sigma \to \mathbb{R}$. This function represents the team's joint preferences over domain-level states and domain-level actions, as in the original team-theoretic model. However, we now extend it to also represent the cost of communicative acts (e.g., communication channels may have associated cost). We assume that the cost of communication and the cost of domain-level actions are independent of each other, given the current state of the world. With this assumption, we can decompose the reward function into two components: a domain-level reward function, $R_A : S \times A \to \mathbb{R}$, and a communication-level reward function, $R_\Sigma : S \times \Sigma \to \mathbb{R}$. The total reward is the sum of the two component values: $R(s, a, \sigma) = R_A(s, a) + R_\Sigma(s, \sigma)$. We assume that communication has no inherent benefit and may instead have some cost, so that for all states, $s \in S$, and messages, $\sigma \in \Sigma$, the reward is never positive: $R_\Sigma(s, \sigma) \le 0$.
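The reward decomposition above can be illustrated with a short sketch. It assumes the two component functions are supplied as plain Python callables; the names `make_reward`, `r_domain`, and `r_comm`, and all numeric values, are hypothetical and chosen only for illustration.

```python
from typing import Callable, Hashable

State = Hashable
Action = Hashable
Message = Hashable


def make_reward(
    domain_reward: Callable[[State, Action], float],
    comm_reward: Callable[[State, Message], float],
) -> Callable[[State, Action, Message], float]:
    """Build R(s, a, sigma) = R_A(s, a) + R_Sigma(s, sigma)."""

    def reward(s: State, a: Action, sigma: Message) -> float:
        r_comm_value = comm_reward(s, sigma)
        # The model assumes communication never has a positive reward.
        if r_comm_value > 0:
            raise ValueError("communication reward must be non-positive")
        return domain_reward(s, a) + r_comm_value

    return reward


# Toy numbers, chosen only for illustration.
def r_domain(s, a):
    return 10.0 if (s, a) == ("at_target", "land") else -1.0


def r_comm(s, sigma):
    return 0.0 if sigma is None else -2.0  # None stands for "say nothing"


R = make_reward(r_domain, r_comm)
print(R("at_target", "land", None))       # domain reward only
print(R("at_target", "land", "arrived"))  # domain reward minus message cost
```

Keeping the two components separate makes it easy to vary the communication cost alone, which is what the communication subclasses of the model do.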

With the introduction of this communication stage, the agents now update their belief states at two distinct points within each decision epoch $t$: once upon receiving observation $\Omega_i^t$ (producing the pre-communication belief state $b^t_{i\bullet\Sigma}$), and again upon observing the other agents' communications (producing the post-communication belief state $b^t_{i\Sigma\bullet}$). The distinction allows us to differentiate between the belief state used by the agents in selecting their communication actions and the more "up-to-date" belief state used in selecting their domain-level actions. We also distinguish between the separate state-estimator functions used in each update phase:

$$b^t_{i\bullet\Sigma} = SE_{i\bullet\Sigma}\left(b^{t-1}_{i\Sigma\bullet}, \Omega_i^t\right)$$

$$b^t_{i\Sigma\bullet} = SE_{i\Sigma\bullet}\left(b^t_{i\bullet\Sigma}, \Sigma^t\right)$$

$$b^0_i = SE^0_i()$$

where $SE_{i\bullet\Sigma} : B_i \times \Omega_i \to B_i$ is the pre-communication state estimator for agent $i$, and $SE_{i\Sigma\bullet} : B_i \times \Sigma \to B_i$ is the post-communication state estimator for agent $i$. They specify functions that update the agent's beliefs based on the latest observation and communication, respectively. The initial state estimator, $SE^0_i : \emptyset \to B_i$, specifies the agent's prior beliefs, before any observations are made. For each of these, we also make the obvious definitions for the corresponding estimators for the combined belief states: $SE_{\bullet\Sigma}$, $SE_{\Sigma\bullet}$, and $SE^0$. Note that, although we assume perfect communication here, we could potentially use the post-communication state estimator to model any noise in the communication channel.

We extend our definition of an agent's policy to include a coordination policy, $\pi_{i\Sigma} : B_i \to \Sigma_i$, analogous to the domain-level policy. We define the joint policies, $\pi_\Sigma$ and $\pi_A$, as the combined policies across all agents in $\alpha$. We also define the overall policy, $\pi_i$, as the pair, $\langle \pi_{iA}, \pi_{i\Sigma} \rangle$, and the overall combined policy, $\pi$, as the pair, $\langle \pi_A, \pi_\Sigma \rangle$.

Communication Subclasses

One key assumption we make is that communication has no latency, i.e., an agent's communication instantly reaches the other agents without delay. However, as with the observability function, we parameterize the communication costs associated with message transmissions:

General Communication: In this general case, we make no assumptions on the communication.

Free Communication: $R(s, a, \sigma_1) = R(s, a, \sigma_2)$ for any $\sigma_1, \sigma_2 \in \Sigma$, $s \in S$, and $a \in A$. In other words, communication actions have no effect on the agents' reward.

No Communication: $\Sigma = \emptyset$, so the agents have no explicit communication capabilities. Alternatively, communication may be prohibitively expensive, so that $\forall \sigma \in \Sigma$, $s \in S$, and $a \in A$, $R(s, a, \sigma) = -\infty$. If communication has such an unbounded negative cost, then we can effectively treat the agents as having no communication capabilities when determining optimal policies.
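For a finite model, these three subclasses can be told apart by inspecting the reward function directly. The sketch below is one possible check under that assumption; the function name `classify_communication` and the string labels it returns are illustrative, not part of the original model.

```python
import math
from itertools import product


def classify_communication(states, actions, messages, reward):
    """Classify a finite model as 'none', 'free', or 'general' communication.

    `reward(s, a, sigma)` is the full reward function R of the model.
    """
    if not messages:
        return "none"  # Sigma is empty
    values = {
        (s, a): [reward(s, a, m) for m in messages]
        for s, a in product(states, actions)
    }
    if all(v == -math.inf for vs in values.values() for v in vs):
        return "none"  # every message is prohibitively expensive
    if all(len(set(vs)) == 1 for vs in values.values()):
        return "free"  # the choice of message never changes the reward
    return "general"


states, actions, messages = ["s0", "s1"], ["fly"], ["hello", "arrived"]
print(classify_communication(states, actions, messages, lambda s, a, m: 1.0))
```

The unbounded-cost test runs before the free-communication test because a reward that is uniformly negative infinity would otherwise also satisfy the equality condition for free communication.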

The free-communication case is common in the multiagent literature, when researchers wish to focus on issues other than communication cost. We also identify the no-communication case because such decision problems have been of interest to researchers as well (Matarić 1997). Of course, even if $\Sigma = \emptyset$, it is possible that there are domain-level actions in $A$ that have communicative value. Such implicitly communicative actions may act as signals that convey information to the other agents. However, we still label such agent teams as having no communication for the purposes of the work here, where we gain extensive leverage from explicit models of communication when available.

Model Illustration

We can view the evolving state as a Markov chain with separate stages for domain-level and coordination-level actions. In other words, the agent team begins in some initial state, $S^0$, described by its separate features. We also add initial belief states for each agent $i \in \alpha$, $b^0_i = SE^0_i()$. Each agent, $i \in \alpha$, receives an observation $\Omega_i^0$ drawn from $\Omega_i$ according to the probability distribution $O_i(S^0, \cdot)$, since there are no actions yet. Then, each agent updates its belief state, $b^0_{i\bullet\Sigma} = SE_{i\bullet\Sigma}(b^0_i, \Omega_i^0)$.

Next, each agent $i \in \alpha$ selects a speech act according to its coordination policy, $\Sigma_i^0 = \pi_{i\Sigma}(b^0_{i\bullet\Sigma})$, defining a combined speech act $\Sigma^0$. Each agent observes the communications of all of the others, and it updates its belief state, $b^0_{i\Sigma\bullet} = SE_{i\Sigma\bullet}(b^0_{i\bullet\Sigma}, \Sigma^0)$. Each then selects an action according to its domain-level policy, $A_i^0 = \pi_{iA}(b^0_{i\Sigma\bullet})$, defining a combined action $A^0$. In executing these actions, the team receives a single reward, $R^0 = R(S^0, A^0, \Sigma^0)$. The world then moves into a new state, $S^1$, according to the distribution, $P(S^0, A^0)$, and the process continues.

Now, each agent $i$ receives an observation $\Omega_i^1$ drawn from $\Omega_i$ according to the distribution $O_i(S^1, A^0)$, and it updates its belief state, $b^1_{i\bullet\Sigma} = SE_{i\bullet\Sigma}(b^0_{i\Sigma\bullet}, \Omega_i^1)$. Each agent again chooses its speech act, $\Sigma_i^1 = \pi_{i\Sigma}(b^1_{i\bullet\Sigma})$. The agents then incorporate the received messages into their new belief state, $b^1_{i\Sigma\bullet} = SE_{i\Sigma\bullet}(b^1_{i\bullet\Sigma}, \Sigma^1)$. The agents then choose new domain-level actions, $A_i^1 = \pi_{iA}(b^1_{i\Sigma\bullet})$, resulting in yet another state, etc.
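The sequence of stages within one decision epoch can be sketched in code. This is a minimal illustration, not the authors' implementation: the `ComMTDP` container, the `run_epoch` function, the history-tuple belief states, and the two-agent toy domain are all assumptions introduced here, and the toy distributions are deterministic so that the outcome is easy to follow.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, Sequence


def sample(dist: Dict, rng: random.Random):
    """Draw one outcome from a {outcome: probability} dictionary."""
    outcomes = list(dist)
    return rng.choices(outcomes, weights=[dist[o] for o in outcomes])[0]


@dataclass
class ComMTDP:
    transition: Callable         # P(s, a) -> {next_state: prob}
    observe: Sequence[Callable]  # one O_i(s, a) -> {obs: prob} per agent
    reward: Callable             # R(s, a, sigma) -> float
    se_pre: Callable             # (belief, observation) -> belief
    se_post: Callable            # (belief, joint_message) -> belief


def run_epoch(model, state, last_action, beliefs, comm_pols, act_pols, rng):
    """Run one epoch; return (reward, next_state, joint_action, beliefs)."""
    # 1. Each agent observes and forms its pre-communication belief.
    pre = [model.se_pre(b, sample(o(state, last_action), rng))
           for b, o in zip(beliefs, model.observe)]
    # 2. Each agent selects a speech act from its pre-communication belief.
    sigma = tuple(pi(b) for pi, b in zip(comm_pols, pre))
    # 3. Every agent hears every message (perfect communication).
    post = [model.se_post(b, sigma) for b in pre]
    # 4. Each agent selects a domain-level action from its updated belief.
    joint_action = tuple(pi(b) for pi, b in zip(act_pols, post))
    # 5. The team receives a single reward and the world moves on.
    r = model.reward(state, joint_action, sigma)
    next_state = sample(model.transition(state, joint_action), rng)
    return r, next_state, joint_action, post


def toy_reward(s, a, sigma):
    wasted = sum(1.0 for act in a if act == "work" and s == "goal")
    sent = sum(0.1 for m in sigma if m is not None)
    return -wasted - sent


# Toy domain: agent 0 sees the state exactly, agent 1 sees nothing useful.
toy = ComMTDP(
    transition=lambda s, a: {s: 1.0},
    observe=[lambda s, a: {s: 1.0}, lambda s, a: {"nothing": 1.0}],
    reward=toy_reward,
    se_pre=lambda b, obs: b + (obs,),       # beliefs are plain histories
    se_post=lambda b, sigma: b + (sigma,),
)
comm_pols = [lambda b: "achieved" if b[-1] == "goal" else None, lambda b: None]
act_pols = [lambda b: "stop" if "achieved" in b[-1] else "work"] * 2

r, s1, a0, beliefs = run_epoch(
    toy, "goal", None, [(), ()], comm_pols, act_pols, random.Random(0)
)
print(r, s1, a0)
```

Here the informed agent pays a small communication cost so that its uninformed teammate stops working once the goal is reached.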

Thus, in addition to the time series of world states, $S^0, S^1, \ldots, S^t$, the agents themselves determine a time series of coordination-level and domain-level actions, $\Sigma^0, \Sigma^1, \ldots, \Sigma^t$ and $A^0, A^1, \ldots, A^t$, respectively. We also have a time series of observations for each agent $i$, $\Omega_i^0, \Omega_i^1, \ldots, \Omega_i^t$. Likewise, we can treat the combined observations, $\Omega^0, \Omega^1, \ldots, \Omega^t$, as a similar time series of random variables.

Finally, the agents receive a series of rewards, $R^0, R^1, \ldots, R^t$. We can define the value, $V$, of the policies, $\pi_A$ and $\pi_\Sigma$, as the expected reward received when executing those policies. Over a finite horizon, $T$, this value is equivalent to the following:

$$V^T(\pi_A, \pi_\Sigma) = E\left[\sum_{t=0}^{T} R^t \,\middle|\, \pi_A, \pi_\Sigma\right] \qquad (1)$$
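When the expectation in Equation 1 is too expensive to compute exactly, it can be approximated by averaging sampled runs. The sketch below shows this Monte Carlo approach; it is a swapped-in approximation rather than anything the paper prescribes, and `sample_rewards` is a hypothetical callable standing in for one execution of the fixed joint policies.

```python
import random
from statistics import mean


def estimate_value(sample_rewards, horizon, episodes=1000, seed=0):
    """Monte Carlo estimate of V^T = E[sum_{t=0}^{T} R^t | policies].

    `sample_rewards(rng, horizon)` runs the fixed joint policies once and
    returns the list of rewards R^0, ..., R^T.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(episodes):
        rewards = sample_rewards(rng, horizon)
        if len(rewards) != horizon + 1:  # epochs t = 0, ..., T
            raise ValueError("expected one reward per epoch")
        totals.append(sum(rewards))
    return mean(totals)


# Toy reward process: each epoch costs 1, plus a fuel penalty of 1 with
# probability 0.5 (numbers chosen only for illustration).
def toy_rewards(rng, horizon):
    return [-1.0 - (1.0 if rng.random() < 0.5 else 0.0)
            for _ in range(horizon + 1)]


print(estimate_value(toy_rewards, horizon=3, episodes=2000))
```

For this toy process the exact value over a horizon of 3 is 4 epochs times an expected reward of -1.5 per epoch, so the estimate should land close to -6.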

| | Individually Observable | Collectively Observable | Collectively Unobservable |
|---|---|---|---|
| No Comm. | P-complete | NEXP-complete | NEXP-complete |
| Gen. Comm. | P-complete | NEXP-complete | NEXP-complete |
| Free Comm. | P-complete | P-complete | PSPACE-complete |

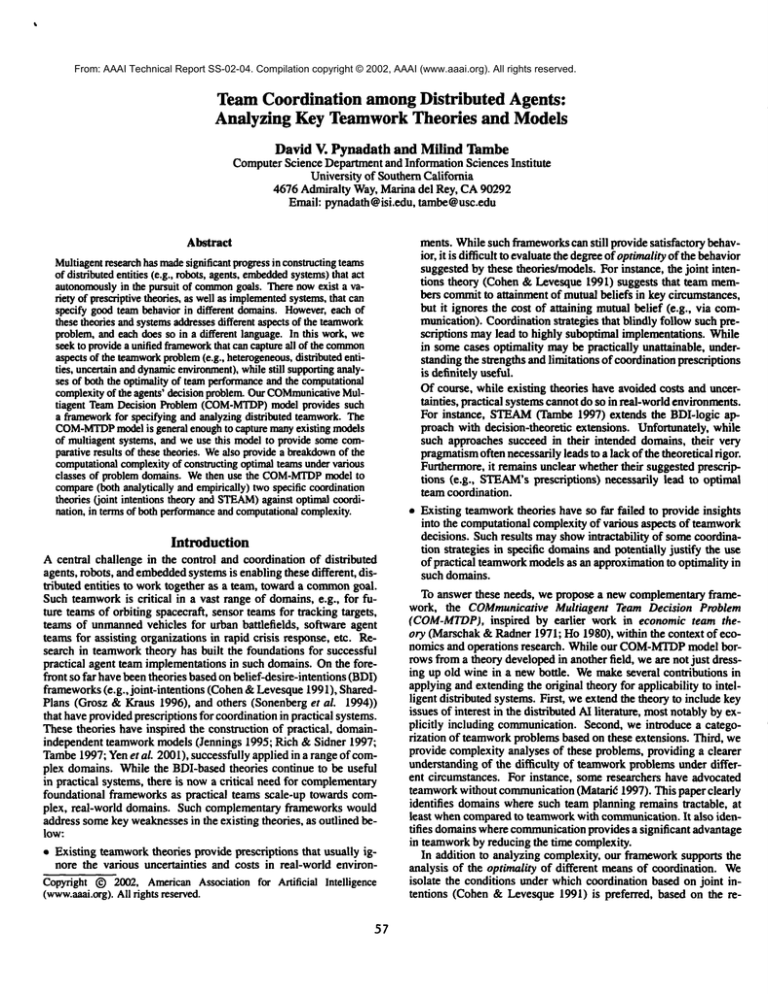

Table 1: Time complexity of COM-MTDPs under combinations of observability and communicability.

Complexity Analysis of Team Decision Problems

The problem facing these agents (or the designer of these agents) is how to construct the joint policies, $\pi_\Sigma$ and $\pi_A$, so as to maximize their joint utility, $R(S^t, A^t, \Sigma^t)$.

Theorem 1. The decision problem of determining whether there exist joint policies, $\pi_\Sigma$ and $\pi_A$, for a given team decision problem that yield a total reward at least $K$ over some finite horizon $T$ (given integers $K$ and $T$) is NEXP-complete if $|\alpha| \ge 2$ (i.e., more than one agent).

The proof for this theorem follows from some recent results in decentralized POMDPs (Bernstein, Zilberstein, & Immerman 2000). Due to lack of space, we will not provide the proofs for the theorems outlined in this paper.

The distributed nature of the agent team is one factor behind the complexity of the problem. The ability of the agents to communicate potentially allows sharing of observations and, thus, simplification of the agents' problem.

Theorem 2. The decision problem of determining whether there exist joint policies, $\pi_\Sigma$ and $\pi_A$, for a given team decision problem with free communication, that yield a total reward at least $K$ over some finite horizon $T$ (given integers $K$ and $T$) is PSPACE-complete.

Theorem 3. The decision problem of determining whether there exist joint policies, $\pi_\Sigma$ and $\pi_A$, for a given team decision problem with free communication and collective observability, that yield a total reward at least $K$ over some finite horizon $T$ (given integers $K$ and $T$) is P-complete.

Table 1 summarizes the above results. The columns outline different observability conditions, while the rows outline the different communication conditions. We can make the following observations:

• The difficult problems in teamwork (in the sense of providing agent teams with domain-level and coordination policies) arise under the conditions of collective observability or unobservability, and when there are costs associated with communication. In attacking teamwork problems, our energies should concentrate in these arenas.

• When the world is individually observable, communication makes little difference in performance.

• The collective observability case clearly illustrates a case where teamwork without communication is highly intractable, potentially with a double exponential. However, with free communication, the complexity drops down to a polynomial running time.

• There is a reduction in complexity with the collectively unobservable case as well, but the difference is not as significant as with the collectively observable case.

Evaluating Coordination Strategies

While providing domain-level and coordination policies for teams in general is seen to be a difficult challenge, many systems attempt to alleviate this difficulty by having human users provide agents with domain-level plans (Tambe 1997). The problem for the agents is then to generate appropriate team coordination actions, so as to ensure that the domain-level plans are suitably executed. In other words, agents are provided with $\pi_A$, but they must compute $\pi_\Sigma$ autonomously. Different team coordination strategies have been proposed in the literature, essentially to compute $\pi_\Sigma$. We illustrate that COM-MTDP can be used to evaluate such coordination strategies, by focusing on a key theory and a practical teamwork model.

Communication under Joint Intentions

Joint-intention theory provides a prescriptive framework for multiagent coordination in a team setting. It does not make any claims of optimality in its coordination, but it provides theoretical justifications for its prescriptions, grounded in the attainment of mutual belief among the team members. Our goal here is to identify the domain properties under which joint-intention theory provides a good basis for coordination. By "good", we mean that a team of agents that communicates as specified under joint-intention theory will achieve a high expected utility in performing a given task. A team following joint-intention theory may not perform optimally, but we wish to find a method for identifying precisely how suboptimal the performance will be. In addition, we have already shown that finding the optimal policy is a complex problem. The complexity of finding the joint-intention coordination policy is lower than that of the optimal policy. In many problem domains, we may be willing to incur the suboptimal performance in exchange for the gains in efficiency.

Under the joint-intention framework, the agents, $\alpha$, make commitments to achieve certain joint goals. We specify a joint goal, $G$, as a subset of states, $G \subseteq S$, where the desired condition holds. Under joint-intention theory, when an individual privately believes that the team has achieved its joint goal, it should then attain mutual belief of this achievement with its teammates. Presumably, such a prescription indicates that joint intentions do not apply to individually observable environments, since all of the agents would observe that $S^t \in G$, and they would instantaneously attain mutual belief. Instead, the joint-intention framework aims at domains with some degree of unobservability. In such domains, the agents must communicate to attain mutual belief. However, we can also assume that joint-intention theory does not apply to domains with free communication. In such domains, we do not need any specialized prescription for behavior, since we can simply have the agents communicate everything, all the time.

The joint-intention framework does not specify a precise communication policy for the attainment of mutual belief. Let us consider one possible instantiation of joint-intention theory: a simple joint-intention communication policy, $\pi_\Sigma^{SJI}$, that prescribes that agents communicate the achievement of the joint goal, $G$, in each and every belief state where they believe $G$ to be true. Under joint intentions, we make an assumption of sincerity (Smith & Cohen 1996), that the agents never select the special $\sigma_G$ message (announcing that $G$ has been achieved) in any belief state where $G$ is not believed to be true with certainty. We make no other assumptions about what messages $\pi_\Sigma^{SJI}$ specifies in all other belief states.

Given the assumption of sincerity, we can assume that all of the other agents immediately accept the special message, $\sigma_G$, as true, and we define our post-communication state estimator function accordingly. With this assumption, as well as our assumption of perfect communication, the team attains mutual belief that $G$ is true immediately upon receiving the message, $\sigma_G$.

Wecan define the negated simple joint-intention communication

policy, ~r~sJx, as an alternative to 7r~Jx. In particular, this negated

policy never prescribes communicating

the oc message.For all other

belief state, the two policies are identical. Thedifference between

these policies dependson manyfactors within our model. Giventhat

the domainis not individually observable,certain agents maynot be

awareof the achievementof G whenit happens. The risk with the

Individually

Observable

No Comm.

a(1)

General Comm.

fl(1)

Free Comm.

n(1)

Collectively

Observable

Collectively

Unobservable

n(t)

n(1)

o((ISl. IND") o((ISl- Inl)D

fl(1)

n(1)

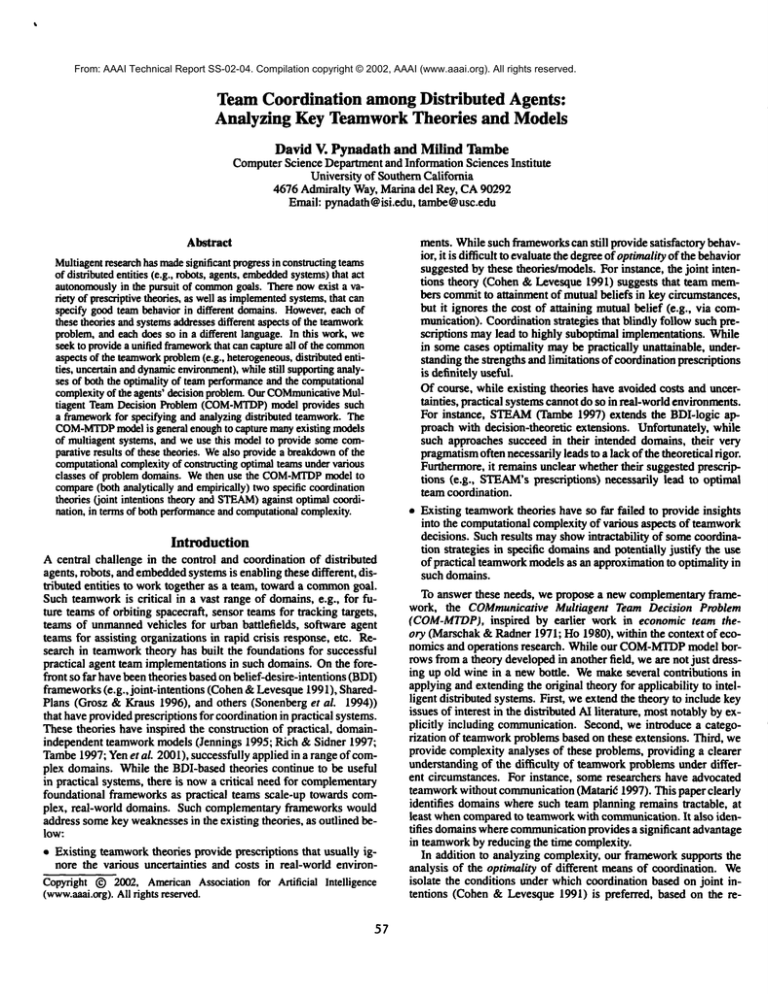

Table 2: Timecomplexity of choosing betweenSJI and -~SJI policies at a single point in time.

negatedpolicy, Ir~.sJ1, is that, evenif one of their teammatesknows

of the achievement,these unawareagents mayunnecessarily continue

performingactions in the pursuit of achieving G. The performance

of these extraneousactions couldpotentially incur costs and lead to a

lowerutility than one wouldexpect underthe simple joint-intention

sJI.

policy, lr

To more precisely compare a communication policy against its negation, we define the following difference value, for a fixed domain-level policy, $\pi_A$:

$$\Delta^T_{SJI} \equiv V^T(\pi_A, \pi_\Sigma^{SJI}) - V^T(\pi_A, \pi_\Sigma^{\neg SJI}) \qquad (2)$$

So all else being equal, we would like to see that the simple joint-intention policy dominates its negation, i.e., $\Delta^T_{SJI} \ge 0$. The execution of the two policies differs only if the agents achieve $G$ and one of the agents comes to know this fact with certainty. Due to space restrictions, we omit the precise characterization of the policies, but we can use our COM-MTDP model to derive a computable expression corresponding to our condition, $\Delta^T_{SJI} \ge 0$. This expression takes the form of a page-long inequality grounded in the basic elements of our model. Informally, the inequality states that we prefer the SJI policy whenever the magnitude of the cost of execution after already achieving $G$ outweighs the cost of communication of the fact that $G$ has been achieved. At this high level, the result may sound obvious, but the inequality provides an operational criterion for an agent to make optimal decisions. Moreover, the details of the inequality provide a measure of the complexity of making such optimal decisions. In the worst case, determining whether this inequality holds for a given domain requires iteration over all of the possible sequences of states and observations, leading to a computational complexity of $O((|S| \cdot |\Omega|)^T)$.
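The source of this worst-case bound can be made concrete by enumerating the sequences in question. The sketch below is illustrative only: the function name `state_observation_sequences` and the toy state and observation labels are assumptions, and a real evaluation of the criterion would do more work per sequence than counting it.

```python
from itertools import product


def state_observation_sequences(states, observations, horizon):
    """Iterate over every length-`horizon` sequence of (state, observation)
    pairs, i.e. the space a brute-force evaluation would have to visit."""
    steps = list(product(states, observations))
    return product(steps, repeat=horizon)


S = ["before_goal", "after_goal"]
OMEGA = ["saw_goal", "saw_nothing", "saw_teammate"]
T = 4

count = sum(1 for _ in state_observation_sequences(S, OMEGA, T))
print(count, (len(S) * len(OMEGA)) ** T)
```

Even with two states, three observations, and four time steps there are already more than a thousand sequences, and the count is multiplied by $|S| \cdot |\Omega|$ with every additional step.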

However, under certain domain conditions, we can reduce our derived general criterion to draw general conclusions about the SJI and $\neg$SJI policies. Under no communication, the inequality is always false, and we know to prefer $\neg$SJI (i.e., we do not communicate). Under free communication, the inequality is always true, and we know to prefer SJI (i.e., we always communicate). Without such assumptions about communication, the determination is more complicated. When the domain is individually observable, the inequality is always false (unless under free communication), and we prefer the $\neg$SJI policy (i.e., we do not communicate).

When the environment is only partially observable, then agent $i$ is unsure about whether its teammates have observed that $G$ has been achieved. In such cases, the agent must evaluate the general criterion in its full complexity. In other words, it must consider all possible sequences of states and observations to determine the belief states of its teammates. Table 2 provides the complexity required to evaluate our general criterion under the various domain properties.
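The special cases described above amount to a small decision procedure. The following sketch encodes them as stated in the text; the function name `sji_decision` and the string labels for the domain properties and outcomes are hypothetical conveniences.

```python
OBSERVABILITY = {"individual", "collective", "unobservable"}
COMMUNICATION = {"none", "general", "free"}


def sji_decision(observability: str, communication: str) -> str:
    """Map domain properties to the special cases described in the text."""
    if observability not in OBSERVABILITY:
        raise ValueError(f"unknown observability class: {observability}")
    if communication not in COMMUNICATION:
        raise ValueError(f"unknown communication class: {communication}")
    if communication == "none":
        return "prefer not-SJI"       # the inequality is always false
    if communication == "free":
        return "prefer SJI"           # the inequality is always true
    if observability == "individual":
        return "prefer not-SJI"       # every agent already observes the goal
    return "evaluate full criterion"  # worst case O((|S| * |Omega|)^T)


for obs in sorted(OBSERVABILITY):
    print(obs, "->", sji_decision(obs, "general"))
```

Only the general-communication cases without individual observability require the expensive evaluation; every other combination is decided in constant time.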

STEAM

In choosing between the SJI and $\neg$SJI policies, we are faced with two relatively inflexible styles of coordination: always communicate or never communicate. Recognizing that practical implementations must be more flexible in addressing varying communication costs, the STEAM teamwork model includes decision-theoretic communication selectivity. The algorithm that computes this selectivity uses an inequality, similar to the high-level view of our optimal criterion for communication: $\gamma \cdot C_{mt} > C_c$. Here, $C_c$ is the cost of communication that the joint goal has been achieved; $C_{mt}$ is the cost of miscoordinated termination, where miscoordination may arise because teammates may remain unaware that the goal has been achieved; and $\gamma$ is the probability that other agents are unaware of the goal being achieved.
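The selectivity test itself is a one-line comparison. A minimal sketch follows, with the function name `steam_should_communicate` and the numeric values chosen here purely for illustration; it encodes only the inequality quoted above, not the rest of the STEAM algorithm.

```python
def steam_should_communicate(gamma: float, cost_miscoord: float,
                             cost_comm: float) -> bool:
    """Return True when gamma * C_mt > C_c, i.e. when the expected cost of
    miscoordinated termination exceeds the cost of communicating."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma is a probability and must lie in [0, 1]")
    return gamma * cost_miscoord > cost_comm


# Illustrative numbers only.
print(steam_should_communicate(gamma=0.5, cost_miscoord=10.0, cost_comm=2.0))
print(steam_should_communicate(gamma=0.1, cost_miscoord=10.0, cost_comm=2.0))
```

With a high chance that teammates are unaware, the expected miscoordination cost (5.0) exceeds the message cost (2.0) and the agent communicates; with a low chance (expected cost 1.0) it stays silent.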

While each of these parameters has a corresponding expression in the optimal criterion for communication, in STEAM, each parameter is a fixed constant. Thus, STEAM's criterion is not sensitive to the particular world and belief state that the team finds itself in at the point of decision, nor does it conduct any lookahead over future states. Therefore, STEAM provides a rough approximation to the optimal criterion for communication, but it will suffer some suboptimality in domains of reasonable complexity.

On the other hand, while optimality is crucial, feasibility of execution is also important. Agents can compute the STEAM selectivity criterion in constant time, which presents an enormous savings over the worst-case complexity of the optimal decision, as shown in Table 2. In addition, STEAM is more flexible than the SJI policy, since its conditions for communication are more selective.

Summary and Future Experimental Work

In addition to providing these analytical results over general classes of problem domains, the COM-MTDP framework also supports the analysis of specific domains. Given a particular problem domain, we can use the COM-MTDP model to construct an optimal coordination policy. If the complexity of computing an optimal policy is prohibitive, we can instead use the COM-MTDP model to evaluate and compare candidates for approximate policies.

To provide a concrete illustration of the application of the COM-MTDP framework to a specific problem, we are investigating an example domain inspired by our experiences in constructing teams of agent-piloted helicopters. Consider two helicopters that must fly across enemy territory to their destination without detection. The first, piloted by agent A, is a transport vehicle with limited firepower. The second, piloted by agent B, is an escort vehicle with significant firepower. Somewhere along their path is an enemy radar unit, but its location is unknown (a priori) to the agents. Agent B’s escort helicopter is capable of destroying the radar unit upon encountering it. However, agent A’s transport helicopter is not, and it will itself be destroyed by enemy units if detected. On the other hand, agent A’s transport helicopter can escape detection by the radar unit by traveling at a very low altitude (nap-of-the-earth flight), though at a lower speed than at its typical, higher altitude. In this scenario, agent B will not worry about detection, given its superior firepower; therefore, it will fly at a fast speed at its typical altitude.

The two agents form a top-level joint commitment, $G_D$, to reach their destination. There is no incentive for the agents to communicate the achievement of this goal, since they will both eventually reach their destination, at which point they will achieve mutual belief in the achievement of $G_D$. However, in the service of their top-level goal, $G_D$, the two agents also adopt a joint goal, $G_R$, of destroying the radar unit, since, without destroying the radar unit, agent A’s transport helicopter cannot reach the destination. We consider here the problem facing agent B with respect to communicating the achievement of goal $G_R$. If agent B communicates the achievement of $G_R$, then agent A knows that the enemy is destroyed and that it is safe to fly at its normal altitude (thus reaching the destination sooner). If agent B does not communicate the achievement of $G_R$, there is still some chance that agent A will observe the event anyway. If agent A does not observe the achievement of $G_R$, then it must fly nap-of-the-earth the whole distance, and the team receives a lower reward because of the later arrival. Therefore, agent B must weigh the increase in expected reward against the cost of communication.
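Agent B’s tradeoff can be illustrated with a deliberately simplified, one-shot calculation. This is a sketch under stated assumptions, not the COM-MTDP analysis itself: it ignores timing, partial progress, and lookahead, and every name and number below (`p_observe`, `reward_fast`, `reward_slow`, `cost_comm`) is hypothetical. Here `p_observe` is the chance that agent A notices the radar’s destruction without a message, `reward_fast` and `reward_slow` are the team rewards for an early and a late arrival, and `cost_comm` is the cost of sending the message.

```python
def expected_reward_communicate(reward_fast: float, cost_comm: float) -> float:
    """Agent A is told the radar is gone, so it flies fast; the team pays for the message."""
    return reward_fast - cost_comm


def expected_reward_silent(p_observe: float,
                           reward_fast: float,
                           reward_slow: float) -> float:
    """Agent A flies fast only if it happens to observe the destruction itself."""
    return p_observe * reward_fast + (1.0 - p_observe) * reward_slow


def should_communicate(p_observe: float,
                       reward_fast: float,
                       reward_slow: float,
                       cost_comm: float) -> bool:
    """Communicate when doing so yields the higher expected team reward."""
    return (expected_reward_communicate(reward_fast, cost_comm)
            > expected_reward_silent(p_observe, reward_fast, reward_slow))
```

With the hypothetical values `p_observe = 0.25`, `reward_fast = 10.0`, `reward_slow = 4.0`, and `cost_comm = 1.0`, communicating yields 9.0 against 5.5 for silence, so agent B sends the message. If agent A is certain to observe the event (`p_observe = 1.0`), silence yields 10.0 and the message is not worth its cost. In this simplified setting the comparison reduces to `(1 - p_observe) * (reward_fast - reward_slow) > cost_comm`, which has the same shape as the STEAM inequality.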

We are in the process of conducting experiments using this example scenario with the aim of characterizing the optimality-efficiency tradeoffs made by various policies. In particular, we will compute the expected reward achievable by the agents using the optimal coordination policy vs. STEAM vs. the simple joint-intentions policy. We will also measure the amount of computational time required to generate these various policies. We will evaluate this profile over various domain parameters: more precisely, agent A’s ability to observe $G_R$ and the cost of communication. The results of this investigation will appear in a forthcoming publication (Pynadath & Tambe 2002).

References

Bernstein, D. S.; Zilberstein, S.; and Immerman, N. 2000. The complexity of decentralized control of Markov decision processes. In Proceedings of the Conference on Uncertainty in Artificial Intelligence, 32-37.

Cohen, P. R., and Levesque, H. J. 1991. Teamwork. Nous 25(4):487-512.

Grosz, B., and Kraus, S. 1996. Collaborative plans for complex group actions. Artificial Intelligence 86:269-358.

Ho, Y.-C. 1980. Team decision theory and information structures. Proceedings of the IEEE 68(6):644-654.

Jennings, N. 1995. Controlling cooperative problem solving in industrial multi-agent systems using joint intentions. Artificial Intelligence 75:195-240.

Marschak, J., and Radner, R. 1971. The Economic Theory of Teams. New Haven, CT: Yale University Press.

Matarić, M. J. 1997. Using communication to reduce locality in multi-robot learning. In Proceedings of the National Conference on Artificial Intelligence, 643-648.

Pynadath, D. V., and Tambe, M. 2002. Multiagent teamwork: Analyzing the optimality and complexity of key theories and models. In Proceedings of the International Joint Conference on Autonomous Agents and Multi-Agent Systems, to appear.

Rich, C., and Sidner, C. 1997. COLLAGEN: When agents collaborate with people. In Proceedings of the International Conference on Autonomous Agents (Agents’97).

Smallwood, R. D., and Sondik, E. J. 1973. The optimal control of partially observable Markov processes over a finite horizon. Operations Research 21:1071-1088.

Smith, I. A., and Cohen, P. R. 1996. Toward a semantics for an agent communications language based on speech-acts. In Proceedings of the National Conference on Artificial Intelligence, 24-31.

Sonenberg, E.; Tidhar, G.; Werner, E.; Kinny, D.; Ljungberg, M.; and Rao, A. 1994. Planned team activity. Technical Report 26, Australian AI Institute.

Tambe, M. 1997. Towards flexible teamwork. Journal of Artificial Intelligence Research 7:83-124.

Yen, J.; Yin, J.; Ioerger, T. R.; Miller, M. S.; Xu, D.; and Volz, R. A. 2001. CAST: Collaborative agents for simulating teamwork. In Proceedings of the International Joint Conference on Artificial Intelligence.
