From: AAAI Technical Report SS-02-03. Compilation copyright © 2002, AAAI (www.aaai.org). All rights reserved.

Active Classification with Bounded Resources

AnYuan Guo

Department of Computer Science

University of Massachusetts at Amherst

140 Governors Drive

Amherst, MA 01003

anyuan@cs.umass.edu

Abstract

Traditionally, the input to a classifier is an instance vector with fixed values. Little attention is paid to the acquisition process of these values. In this paper, we will

assume that the values of all the attributes are initially

unobserved, a cost is associated with the observation of

each attribute, and a problem specific misclassification

penalty function is used to assess the decision. Framed

in this way, active classification turns into a resource-bounded optimization problem for the best information

gathering strategy with respect to a given loss function.

We will formalize this problem and present a principled

approach to its solution by mapping it onto a partially

observable Markov decision process and solving for a

finite horizon optimal policy.

1. Introduction

With the advent of database engineering, vast amounts of

data have been accumulated in realms such as finance, manufacturing, and bioinformatics. How can we transform passive domain knowledge into active information gathering

strategies with respect to a task at hand? In this paper, we

will present a framework for automated information gathering in the context of classification problems.

A probabilistic classifier is a function that maps domain

instances to a distribution over class labels. A domain instance is a vector of attribute values given as input to the

classifier. Supervised learning of classifiers can be used

to learn this mapping from a data set of labeled instances.

Given a classifier, the classification problem turns into the

problem of simply inputting an instance to this classifier for

an assignment.

In real life, costs are often associated with attribute measurements. In medical domains, this can be the monetary

cost of tests performed on patients; or, in a robotics domain,

the cost of sensing different attributes of the environment.

Aside from attribute measurement costs, misclassification costs are also ignored by most machine learning algorithms. Simple classification accuracies are often used

that do not account for variations in penalties among different types of misclassification. In medical diagnosis, false


positives are temporarily distressing, yet they are more desirable than false negatives, which may let patients go untreated. Most learning systems lack the expressiveness to

model these costs.

In recent years, the cost-sensitive learning community has

started to tackle the problem of representing costs in classification (Turney 2000). They have taken myopic approaches

using value of information heuristics to decide which queries

to perform (Pedersen et al. 2001).

In this paper, we will present a principled, decision-theoretic approach to the active classification problem.

Given a knowledge base, active classification is defined

as finding the optimal information gathering strategy with

respect to any given misclassification cost function under

bounded resources.

As a concrete example, during the 1998 Antarctic Expedition (Moorehead et al. 1999) sponsored by NASA, an autonomous rover was deployed to independently search for and

gather meteorites. On board the robot was a rock classifier

built by expert geologists from rock databases and extensive laboratory results. The robot could observe different

attributes of a rock with its camera, with a metal detector,

and by performing a spectroscopy test involving the deployment of a robot arm. The robot’s task was to decide which

sensors to deploy at each step. These decisions have to be

carefully formulated since only a limited number of observations can be carried out due to resource bounds on battery

power and intrinsic monetary costs of sensor deployment.

Diagnostic problems in general fit well under the active

classification framework, since in most instances, costs are

associated with information gathering. In medical diagnosis,

given a knowledge base of diseases, how can this knowledge be used to guide the doctor's decisions to carry out

further testing as current test results become available, given

certain costs for misdiagnosis?

The main contribution of our work is the novel treatment

of the true class of an instance as the state of a partially observable Markov decision process (POMDP), and the formulation of attribute gathering as observations on the states. With

these insights, we are able to map the active classification

problem onto a POMDP, thereby enabling the application of

general solution techniques to find an optimal solution.

In the rest of this paper, we first survey related work in section 2 and then lay out the relevant background in section 3. In section 4, we define the active classification problem formally; in section 5, we present a solution technique by mapping our problem onto a POMDP. Finally, in section 6, we conclude with a discussion of future work.

2. Related Work

Aspects of this problem have been studied in different literatures. Decision tree induction uses a greedy information-theoretic strategy to minimize misclassification (Quinlan

1986). Imposing the constraints of our problem would mean

specifying a cutoff depth on the decision tree induced. One

problem with this approach is that it is not straightforward

to incorporate cost of attribute observation and misclassification penalty into the decision tree induction procedure.

In (Pedersen et al. 2001), which uses the robotic collection of meteorites as its domain, a greedy entropy reduction heuristic is used to predict the utility of observing an

attribute. As was pointed out in the paper, some problems

are apparent with this approach. First, information gain

is always positive, yet sometimes entropy increases after new data is obtained. Contradictory data can increase

entropy. Another problem with using entropy reduction is

that an observation that changes P(X) from 10% to 90% has

entropy reduction of zero, indicating that this observation

has no effect, which obviously is not true, since information has definitely been obtained. Furthermore, the utility

measure in that work is simple classification accuracy; no

cost is attached to misclassification. We can easily imagine

cases where false-positives should be weighted differently

than false-negatives.
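The zero-entropy-reduction case described above can be checked numerically. The following is a minimal sketch, assuming a binary variable X and Shannon entropy measured in bits; the function name `binary_entropy` is introduced here for illustration only.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy, in bits, of a binary variable with P(X) = p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


# Belief in X before and after an observation that reverses it.
before = binary_entropy(0.10)
after = binary_entropy(0.90)

# The entropy is symmetric around 0.5, so the reduction is zero (up to
# floating-point rounding) even though the belief changed substantially.
entropy_reduction = before - after
print(f"before={before:.4f} bits, after={after:.4f} bits, "
      f"reduction={entropy_reduction:.4f}")
```

Both beliefs carry roughly 0.469 bits of uncertainty, so a heuristic driven purely by entropy reduction would assign this observation no value.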

The cost-sensitive learning literature is devoted to this

last issue, where problem-specific loss functions are used to

calculate utility rather than simply using mis-classification

rates (Turney 2000).

The work in (Greiner, Grove, and Roth 1996) studies a

similar problem. We borrow some of the problem definition

from this work. However, the focus there is on how to learn

an active classifier. In our case, we start with a pre-learned

classifier, and the task is to construct an optimal policy for

attribute observation. Our representation is also different;

we use a Naive Bayesian classifier to compactly represent

the joint distribution of class and attribute variables.

3. Background

The same mathematical formalism for classification can give rise to two different interpretations. One is to assume that there is a stationary distribution over the space of domain instances from which random instances are drawn independently. Once drawn, the attributes of the instance stay fixed, and observations made on them are deterministic. In this case, there is no reason for ever observing the same attribute twice.

Another interpretation is to assume that the classes are objects whose attributes assume different values according to a probability distribution. In this case, observations are non-deterministic, so repeated observation of the same attribute can reduce uncertainty associated with that attribute.

In this paper, we will address classification problems of this latter case. The meteorite collecting rover example falls into this category. In this example, the non-determinism can arise from sensor noise or hidden environment variables. Photographing a rock at varying distances and under different light conditions could have significant effects on the final readings.

Within the general framework, we are interested in performing active classification using a specific representation: the Bayesian classifier. Prior knowledge of a domain can be easily incorporated into a Bayesian classifier and easily updated as more data becomes available. The classifier outputs a belief distribution over the class labels rather than a single label, increasing the informativeness of the answer.

A Bayesian classifier assigns labels to data instances by computing the posterior distribution of a class variable given the values of the attribute variables. The joint probability distribution of the class variable and attribute variables is encoded by a Bayesian network, which consists of two components. The first is a directed acyclic graph (DAG) capturing the relationships between the class variable, which takes its values from a discrete set of class labels, and the attribute variables. The second is a vector of conditional probabilities specifying the conditional distribution of each attribute given the class.

Figure 1: A general Bayesian classifier with attribute dependences (class node C; attribute nodes a1, a2, a3, ..., an)

The conditional probabilities in a Bayesian network can be learned from data using standard Bayesian learning algorithms; see (Heckerman 1996) for a comprehensive survey. In our work, we start with a Bayesian network with previously learned conditional probabilities.
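The posterior computation performed by a Bayesian classifier can be sketched as follows. This is a minimal illustration, assuming that attributes are conditionally independent given the class (the Naive Bayes structure adopted later in the paper); the class names, attribute names, and probabilities are hypothetical and not taken from the rover's actual classifier.

```python
from typing import Dict

# Hypothetical prior P(class) and conditional tables P(value | class).
PRIOR: Dict[str, float] = {"meteorite": 0.1, "rock": 0.9}
CPT: Dict[str, Dict[str, Dict[str, float]]] = {
    "metal": {
        "meteorite": {"yes": 0.9, "no": 0.1},
        "rock": {"yes": 0.2, "no": 0.8},
    },
}


def posterior(prior: Dict[str, float],
              cpt: Dict[str, Dict[str, Dict[str, float]]],
              observed: Dict[str, str]) -> Dict[str, float]:
    """Return P(class | observed attribute values) by Bayes' rule."""
    unnormalized = {}
    for cls, p_cls in prior.items():
        likelihood = 1.0
        # Unobserved attributes are simply left out of the product.
        for attr, value in observed.items():
            likelihood *= cpt[attr][cls][value]
        unnormalized[cls] = p_cls * likelihood
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observations have zero probability under the model")
    return {cls: weight / total for cls, weight in unnormalized.items()}


belief = posterior(PRIOR, CPT, {"metal": "yes"})
print(belief)
```

With these illustrative numbers, a positive metal detector reading raises the belief in "meteorite" from 0.1 to about one third. The output is a distribution over class labels rather than a single label.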

4. Active Classification

Active classification is the problem of finding the optimal information gathering strategy with respect to any given misclassification cost function under bounded resources.

An active classification episode would proceed as follows.

First an instance, drawn according to a prior distribution

over the classes, is presented to the classifier. The values of

its attributes are not yet known. The active classifier chooses an attribute to observe, obtains its value, and then decides again which attribute to observe next. This process continues until a deadline is reached, at which point the classifier outputs a class label.
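The episode described above can be sketched as a simple loop. This is an illustration under stated assumptions: the single attribute, its probabilities, the deadline, and the policy `observe_then_vote` are hypothetical, and observations are sampled as noisy readings conditioned on the true class.

```python
import random
from typing import Callable, List, Tuple

Observation = Tuple[str, str]  # (attribute observed, value returned)

# Hypothetical model: P(value | class) for one noisy attribute.
CPT = {"metal": {"meteorite": {"yes": 0.9, "no": 0.1},
                 "basalt": {"yes": 0.2, "no": 0.8}}}
DURATION = {"metal": 1}  # time taken by each observation action
DEADLINE = 3             # total time available in the episode


def observe_then_vote(observations: List[Observation], time_left: int) -> str:
    """Toy policy: observe 'metal' while time remains, then majority vote."""
    if time_left >= DURATION["metal"]:
        return "metal"
    yes = sum(1 for _, value in observations if value == "yes")
    return "meteorite" if 2 * yes > len(observations) else "basalt"


def run_episode(true_class: str,
                policy: Callable[[List[Observation], int], str],
                rng: random.Random) -> Tuple[str, List[Observation]]:
    """Run one episode; return the output label and the observation history."""
    elapsed = 0
    observations: List[Observation] = []
    while True:
        action = policy(observations, DEADLINE - elapsed)
        if action not in DURATION:  # any non-attribute action is a class label
            return action, observations
        if elapsed + DURATION[action] > DEADLINE:
            raise ValueError("observation would exceed the deadline")
        dist = CPT[action][true_class]
        value = rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
        observations.append((action, value))
        elapsed += DURATION[action]


label, history = run_episode("meteorite", observe_then_vote, random.Random(0))
print(label, history)
```

The policy here is deliberately naive. The point of the framework is to replace it with one that chooses observations optimally for the given costs.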

An instance is identified by a finite vector of attributes $x = \langle a_1, a_2, \ldots, a_n \rangle$. Let $X = V_1 \times V_2 \times \cdots \times V_n$ be the set of all possible data instances, where $V_i$ denotes the value set of attribute $i$. A labeled instance of a concept $c$ is a pair $\langle x, c(x) \rangle \in X \times C$, where $C$ is the set of all classes.

The problem description of active classification can be defined by the tuple <;=?>@ACBDEF ,

G

Rock Type

G

; is a Bayesian classifier, denoted by , where is a

directed acyclic graph capturing the relationships between

the class variable (let H denote the set of values that can take on) and attribute variables I*JH . is a vector of

conditional probabilities quantifying these relationships.

G

> is the deadline for the classification episode. It is a

bound on the amount of time available to perform observation actions.

%

HLKM9

is the set of all possible actions, where NO*

A signifies an attribute observation action and N-* C is a

classification action.

G

@

G

BQP.HSRTVUXW is a function that assigns a positive integer

time duration to each of the attribute observation actions.

EYPZ[9\8]9^XRT_U is a function that maps a pair of class

values, the first being the actual class of the instance, and

the second being the class that the classifier outputs, to an

integer denoting the loss for misclassification.

B & W

Image

Color

Image

Metal Spectrometer

Dector

Reading

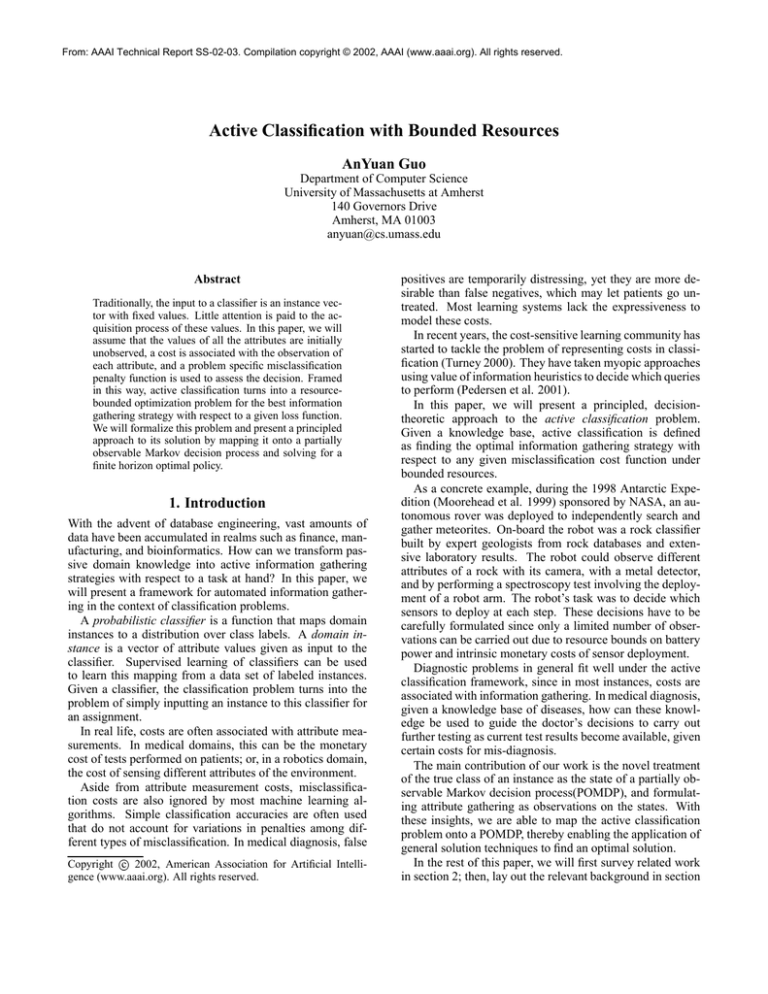

Figure 2: A Naive Bayesian classifier for meteorite identification
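The problem description defined in this section can be held in a small data structure. The sketch below is one possible encoding, not the paper's implementation: the class `ActiveClassificationProblem`, its field names, and all numbers are hypothetical, and the classifier is assumed to be a Naive Bayesian one stored as a prior plus per-attribute conditional tables.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ActiveClassificationProblem:
    classes: List[str]                            # set of class values
    attributes: List[str]                         # set of attributes
    prior: Dict[str, float]                       # P(class)
    cpt: Dict[str, Dict[str, Dict[str, float]]]   # cpt[attr][cls][value]
    deadline: int                                 # bound on total observation time
    duration: Dict[str, int]                      # positive integer time per attribute
    loss: Callable[[str, str], int]               # loss(actual class, output class)

    def actions(self) -> List[str]:
        """All actions: observe an attribute, or output a class label."""
        return list(self.attributes) + list(self.classes)

    def validate(self) -> None:
        """Check durations are positive and each table row sums to one."""
        for attr in self.attributes:
            if self.duration[attr] <= 0:
                raise ValueError(f"duration of {attr} must be positive")
            for cls in self.classes:
                if abs(sum(self.cpt[attr][cls].values()) - 1.0) > 1e-9:
                    raise ValueError(f"P(. | {cls}) for {attr} must sum to 1")


def rover_loss(actual: str, output: str) -> int:
    """Hypothetical asymmetric loss: missing a meteorite costs the most."""
    if actual == output:
        return 0
    return 10 if actual == "meteorite" else 1


problem = ActiveClassificationProblem(
    classes=["meteorite", "basalt"],
    attributes=["metal", "spectrometer"],
    prior={"meteorite": 0.1, "basalt": 0.9},
    cpt={
        "metal": {"meteorite": {"yes": 0.9, "no": 0.1},
                  "basalt": {"yes": 0.2, "no": 0.8}},
        "spectrometer": {"meteorite": {"match": 0.95, "no_match": 0.05},
                         "basalt": {"match": 0.05, "no_match": 0.95}},
    },
    deadline=4,
    duration={"metal": 1, "spectrometer": 3},
    loss=rover_loss,
)
problem.validate()
print(problem.actions())
```

Keeping the loss as a callable lets the user of the classifier supply any domain-specific penalty without changing the rest of the structure.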

Given the formal description outlined above, the problem is to find an active classifier $\pi : X^{*} \to A \cup C$, a function mapping partial instances $x^{*} \in X^{*}$ to actions, which maximizes classification accuracy as measured by the loss function $L$ while staying under the time bound $T$. The partial instance is initially completely unobserved.

In this paper, we restrict the distribution of data to those representable by a Naive Bayesian classifier. This is a Bayesian classifier that assumes the conditional independence of attributes given the class (Mitchell 1997). Graphically, there are no links between attribute variables. Naive Bayesian classifiers require less training data to learn and in practice often achieve performance comparable to that of the optimal Bayesian classifier (Domingos and Pazzani 1997). Figure 2 shows a Naive Bayesian classifier for the robot rover example.

A rock in question can be either a meteorite, sandstone, basalt, or schist. The attributes gathered are a black and white image, a color image, the metal detector results, and the spectroscopy signatures. The value of the spectroscopy test, for example, is a vector of spectral signatures. We will not go into further details here about the specifics of rock classification.

Formalized in this way, the active classification problem is one of decision making in a partially observable stochastic environment. Extensive study has been carried out by the Operations Research community in modeling problems of this nature as partially observable Markov decision processes (POMDPs). General solution techniques have been developed that find optimal control strategies (Lovejoy 1991). In the next section, we will show how the active classification problem maps onto a POMDP. Once this mapping is complete, solutions can be found by the application of standard POMDP algorithms.

5. Solution technique

A partially observable Markov decision process can be described as a tuple $\langle S, \mathcal{A}, \mathcal{T}, R, \Omega, O \rangle$, where $S$ is a finite set of states of the world; $\mathcal{A}$ is a finite set of actions; $\mathcal{T}$ is the state-transition function that maps each state-action pair to a probability distribution over the next state; $R$ is the reward function, giving the expected immediate reward for each state-action pair; $\Omega$ is a finite set of possible observations; and $O$ is the observation function, which, for each state-action pair, gives a probability distribution over possible observations.

In this section, we present how the active classification problem $\langle B, T, \mathit{Act}, D, L \rangle$, under the Naive Bayes assumption, maps onto a POMDP $\langle S, \mathcal{A}, \mathcal{T}, R, \Omega, O \rangle$.

5.1 State representation

The true class label of an instance can be thought of as its underlying state. Given this simple interpretation, the state space of the POMDP is simply the set of class labels. Since we also need to keep track of the time elapsed to ensure that the deadline for this classification episode has not been reached, a time stamp has to be included as part of the state representation. Thus, the state space is a set of class, time stamp pairs:

$$S = \{ \langle c, t \rangle \mid c \in C,\ 0 \le t \le T \} \cup \{ s_{\mathrm{term}} \}$$

An absorbing state, denoted as $s_{\mathrm{term}}$, is reached when either the deadline has passed or a classification action has been taken.

5.2 Action Set

An action in the active classification framework can either gather information or assign a class label. An information gathering action chooses an attribute to observe, while a label assignment action terminates a classification episode by outputting a class label.

$$\mathcal{A} = A \cup C$$

$A$ and $C$ are, respectively, the set of attributes and the set of classes.

5.3 Transition Function

The state transitions made during a classification episode are essentially self loops, because once chosen, the instance's class value remains constant. Since we are also keeping track of the current time as part of the state representation, the transition function of the POMDP needs to reflect this constraint.

$$\mathcal{T}(s' \mid \langle c, t \rangle, a) = \begin{cases} 1 & \text{if } a \in A,\ t + D(a) \le T, \text{ and } s' = \langle c, t + D(a) \rangle \\ 1 & \text{if } (a \in C \text{ or } t + D(a) > T) \text{ and } s' = s_{\mathrm{term}} \\ 0 & \text{otherwise} \end{cases}$$

An absorbing state is reached either after a classification action was taken or when the deadline was reached. Once reached, $s_{\mathrm{term}}$ transitions only to itself.

5.4 Rewards

The immediate rewards for all attribute observation actions are 0. For the classification actions, we query the loss function to find out the cost of misclassifying x as y. Under 0-1 loss, this function is a simple "and" function that returns 1 if and only if the class label assigned matches the true class and 0 otherwise. More generally, the loss function can be specified by the user of the classifier to reflect domain specific misclassification costs.

$$R(\langle x, t \rangle, a) = \begin{cases} 0 & \text{if } a \in A \\ L(x, y) & \text{if } a = y \in C \end{cases}$$

For example, false positives and false negatives can be associated with different penalties. This is the cost that the classifier will have to optimize against.

5.5 Observation Set

The observation set is the union of the sets of possible values of all the attributes.

$$\Omega = \bigcup_{i \in A} V_i$$

where $A$ is the set of attributes and $V_i$ is the value set of attribute $i$.

5.6 Observation function

When the underlying states are not known with certainty, actions taken at each state give rise to different observations according to some distribution P. The observation function $O : S \times \mathcal{A} \times \Omega \to [0, 1]$ assigns the probability of observing a certain value given the class of the instance and the action taken. For observation actions, this corresponds to the entry in the conditional probability table of the attribute observed, indexed by the class value and attribute value. For classification actions, this value is 0.

$$O(\langle c, t \rangle, a, o) = P(a = o \mid c)$$

where $a \in A$ and $c \in C$.

The Naive Bayes assumption is crucial for this mapping. In general Bayesian classifiers, attributes can be correlated with other attributes. This means we can no longer compactly represent the state space given the current state and action. Past observations will also need to be included as part of the state space, leading to an exponential increase of its dimensionality. We are currently working on extensions that will relax the Naive Bayes assumption.
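The components of the mapping described in this section can be sketched as three small functions. This is an illustration under stated assumptions rather than the paper's implementation: the model numbers are hypothetical, the deadline check uses "elapsed time must not exceed the deadline", and the loss is treated as a penalty, so the reward for a classification action is taken to be the negated loss.

```python
from typing import Dict, Tuple, Union

TERMINAL = "terminal"                  # the absorbing state
State = Union[Tuple[str, int], str]    # (class, time stamp) or TERMINAL

# Hypothetical problem data.
CLASSES = ["meteorite", "basalt"]
ATTRIBUTES = ["metal", "color"]
DEADLINE = 3
DURATION = {"metal": 1, "color": 2}
CPT: Dict[str, Dict[str, Dict[str, float]]] = {
    "metal": {"meteorite": {"yes": 0.9, "no": 0.1},
              "basalt": {"yes": 0.2, "no": 0.8}},
    "color": {"meteorite": {"dark": 0.7, "light": 0.3},
              "basalt": {"dark": 0.6, "light": 0.4}},
}
LOSS = {("meteorite", "meteorite"): 0, ("meteorite", "basalt"): 10,
        ("basalt", "meteorite"): 1, ("basalt", "basalt"): 0}


def transition(state: State, action: str) -> State:
    """Deterministic transition: the class is a self loop, only time advances."""
    if state == TERMINAL or action in CLASSES:
        return TERMINAL                # classification ends the episode
    cls, t = state
    t_next = t + DURATION[action]
    return (cls, t_next) if t_next <= DEADLINE else TERMINAL


def reward(state: State, action: str) -> float:
    """Zero for observations; negated loss for classification (assumption)."""
    if state == TERMINAL or action in ATTRIBUTES:
        return 0.0
    cls, _ = state
    return -float(LOSS[(cls, action)])


def observation_prob(state: State, action: str, obs: str) -> float:
    """P(obs | class) from the attribute's table; 0 for classification actions."""
    if state == TERMINAL or action in CLASSES:
        return 0.0
    cls, _ = state
    return CPT[action][cls].get(obs, 0.0)


print(transition(("basalt", 0), "color"))
print(reward(("meteorite", 1), "basalt"))
print(observation_prob(("meteorite", 0), "metal", "yes"))
```

Because the class component never changes, the only uncertainty an agent must track is its belief over classes. The time stamp is fully known from the actions taken.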

Given this mapping of our original active classification

problem onto a POMDP, it is possible to show that an optimal policy for the POMDP corresponds to the optimal active classifier. Finding the optimal solution for a POMDP

is PSPACE-complete (Papadimitriou and Tsitsiklis 1987).

Since the active classification problem exhibits more structure, namely, the state transitions are essentially self-loops,

we are exploring the possibility of constructing a polynomial

time algorithm for this problem.
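For very small problems, a finite-horizon optimal policy can be found by exhaustive search over belief states. The sketch below is a brute-force recursion, not one of the standard POMDP algorithms cited above, and it is exponential in the deadline. It assumes a penalty-style loss, so it minimizes expected loss; the model numbers and the function names `solve` and `best_label` are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical two-class problem with one noisy, repeatable observation.
CLASSES = ("meteorite", "basalt")
PRIOR = {"meteorite": 0.5, "basalt": 0.5}
CPT = {"metal": {"meteorite": {"yes": 0.9, "no": 0.1},
                 "basalt": {"yes": 0.2, "no": 0.8}}}
DURATION = {"metal": 1}
LOSS = {("meteorite", "meteorite"): 0, ("meteorite", "basalt"): 5,
        ("basalt", "meteorite"): 1, ("basalt", "basalt"): 0}


def expected_loss(belief: Dict[str, float], label: str) -> float:
    """Expected loss of outputting `label` under the current belief."""
    return sum(belief[c] * LOSS[(c, label)] for c in CLASSES)


def best_label(belief: Dict[str, float]) -> Tuple[str, float]:
    """Label with the smallest expected loss, and that loss."""
    label = min(CLASSES, key=lambda y: expected_loss(belief, y))
    return label, expected_loss(belief, label)


def solve(belief: Dict[str, float], time_left: int) -> Tuple[float, str]:
    """Return (minimal expected loss, best first action) for this belief."""
    best_action, best_value = best_label(belief)   # option: classify now
    for attr, dur in DURATION.items():
        if dur > time_left:
            continue                               # would pass the deadline
        value = 0.0
        for obs in CPT[attr][CLASSES[0]]:
            # Probability of this reading under the current belief.
            p_obs = sum(belief[c] * CPT[attr][c][obs] for c in CLASSES)
            if p_obs == 0.0:
                continue
            # Bayes update; the class itself never changes (self loop).
            updated = {c: belief[c] * CPT[attr][c][obs] / p_obs
                       for c in CLASSES}
            value += p_obs * solve(updated, time_left - dur)[0]
        if value < best_value:
            best_action, best_value = attr, value
    return best_value, best_action


loss_now, action_now = solve(PRIOR, 0)
loss_one, action_one = solve(PRIOR, 1)
print(action_now, loss_now)
print(action_one, loss_one)
```

With no time left the best choice is to output "meteorite" at an expected loss of 0.5. With one time unit available, observing the metal detector first lowers the expected loss to about 0.35.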

6. Conclusion and Future Work

The main contribution of this work is to put the traditional

classification problem into a decision theoretic framework,

which balances the cost of acquiring additional information

against penalties for misclassification. Once the mapping is complete, standard POMDP solution techniques from decision theory can

be adopted to find the optimal policy for active classification.

This framework opens up avenues for work in both the empirical and theoretical domains. On the empirical end, we would like to compare the performance of this full-fledged decision-theoretic approach to a greedy information-theoretic approach. We have applied standard POMDP algorithms to small examples on simulated data. We have yet

to analyze the results and make detailed comparisons with

other approaches. Our goal is to establish heuristic characterizations of problems that suggest the applicability of one

approach over the other.

The special structures of the active classification problem

lead us to believe that it is simpler than a POMDP. This warrants exploration for the existence of a polynomial time algorithm.

Acknowledgements

Special thanks to Dan Bernstein and Ted Perkins for technical discussions and insights; Paul Cohen, Kelly Porpiglia,

Charles Sutton, and Brent Heeringa for proofreading the

paper; and Louis Theran for graphics.

References

Lovejoy, W. S. 1991. A survey of algorithmic methods for partially observed Markov decision processes. Annals of Operations Research 28(1):47-65.

Papadimitriou, C., and Tsitsiklis, J. 1987. The complexity of Markov decision processes. Mathematics of Operations Research 12(3):441-450.

Turney, P. 2000. Types of cost in inductive learning. In Workshop on Cost-Sensitive Learning at the Seventeenth International Conference on Machine Learning, 15-21, Stanford, CA.

Mitchell, T. M. 1997. Machine Learning. The McGraw-Hill Companies, Inc.

Quinlan, J. R. 1986. Induction of decision trees. Machine Learning 1:81-106.

Greiner, R., Grove, A., and Roth, D. 1996. Learning active classifiers. In Proceedings of the International Conference on Machine Learning.

Heckerman, D. 1996. A tutorial on learning with Bayesian networks. Technical Report MSR-TR-95-06, Microsoft Research.

Domingos, P., and Pazzani, M. 1997. Beyond independence: Conditions for the optimality of the simple Bayesian classifier. Machine Learning 29(2/3):103-130.

Moorehead, S., Simmons, R., Apostolopoulos, D., and Whittaker, W. L. 1999. Autonomous navigation field results of a planetary analog robot in Antarctica. In Proceedings of the International Symposium on Artificial Intelligence, Robotics and Automation in Space.

Pedersen, L., Wagner, M. D., Apostolopoulos, D., and Whittaker, W. L. 2001. Autonomous robotic meteorite identification in Antarctica. In 2001 IEEE International Conference on Robotics and Automation, 4158-4165.