From: AAAI Technical Report SS-02-02. Compilation copyright © 2002, AAAI (www.aaai.org). All rights reserved.

Large Scale Q-tables

Kalle Prorok

Department of Computing Science

Umeå University

SE-901 87 UMEÅ

Sweden

kalle.prorok@tfe.umu.se

Abstract

This article studies the possibilities for Q-learning to learn the bidding in the card game bridge. The learnt bidding systems are quite useful but below the level of an average club player. Some studies regarding the relation between the learning rate, the exploration rate and the initial Q-values are also done.

The Application

Bridge is a card game normally played by four humans. It has some interesting properties making it suitable as a test platform for Collaborative Learning Agents. Each player is on his own but plays together with a partner, pair-wise; it is a dynamic, collaborative and adversarial game. Some agreements are made between the players beforehand, but most situations have to be taken care of by common-sense logic. This makes it difficult to pre-program a computerized player. Instead, it makes it interesting to let the computer player learn from exhaustive experiences. The optimal behavior is also unknown, which makes the results from such an artificial player valuable.

My hope is that these experiments with Bridge will be applicable to other domains like network management (information transfer), compression algorithms (finding descriptions of, e.g., a face automatically), electronic commerce (how to perform an auction) and robotics (learning to interact). Another area might be language learning: how did we invent a language "in the beginning"?

Blocks world (Winston 1977) has been used as a small-scale model system for decades in the Artificial Intelligence community. Today's problems are of a different kind: cooperation, stochastic and/or non-linear systems, emergent properties etc. My suggestion is to use the international card game Bridge as a new model world with some interesting properties from the real world but still on a reasonably small scale. Some of the things that can be studied using the Bridge platform are: Collaboration/Cooperation within pairs and teams; Competition between pairs, teams and countries; Communication - information transfer with limited bandwidth; Insecure domain - not all information is visible to all parties; Stochastic domains - there is a random factor (how the cards were dealt); The role of concentration; mental training; Planning (of bidding and playing); Emergent properties; single-suit versus multi-suit plays, endplays; Democratic (descriptive) or Captain (enquiry) based methods; Tactics, Strategy, Risk taking, "the only chance" or safety play "guarding"; Constructive versus destructive ways of doing things; Psychological aspects - to be unpredictable, when to fool partner or opponent; Philosophical aspects - trying to find out what partner or opponents think; Memory - a complex bidding system versus an easy to remember, understand and use bidding system; Getting information - what and when; Reducing the amount of information to opponents who may take advantage; Learning from mistakes and successes.

The game is described in many standard textbooks, and the rules can be learnt within a few days, but it requires several years of practice to become a master. The goal of the game is to score points related to the number of tricks your partnership is able to take, and this depends on how good you are at reaching a suitable contract; the game is thus separated into two phases: the bidding (auction) into a contract and then the play in such a contract. An example deal is presented in Fig. 1 and a bidding in Fig. 2.

Background

Bridgeis fairly international withsimilar rules all overthe

world and there are more than one million players in the

world. Nowit is also an Olympicgame. There are bridge

programsperformingwell and it is also a matter of time before the computerbeats most humanbridge players. The

mainreason for this is the technical advantagea computer

has (rememberingall cards during the play and the bidding

system,possibilities of statistical simulationsand calculations makingit possible to play well) compensating

for the

lack of intuition and other psychologicalaspects by making

fewer mistakes. Today’ssituation (December2001) is that

the play with the cards is very goodbut bidding lacks behind. The reason for this is that entering humanjudgement

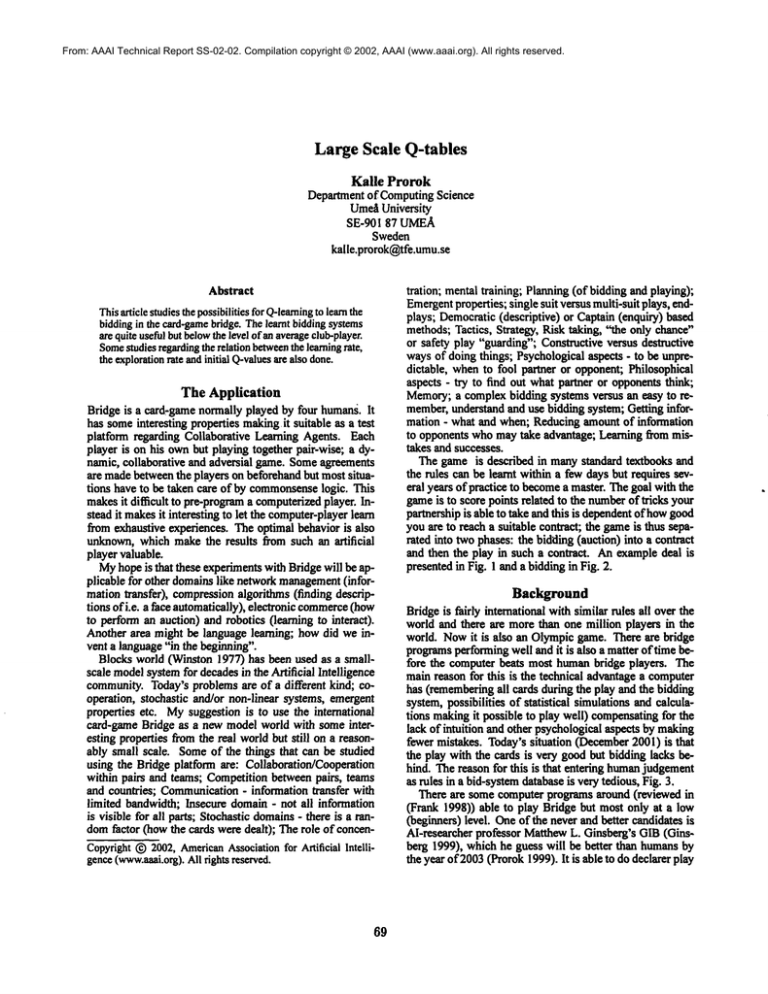

as rules in a bid-systemdatabaseis verytedious, Fig. 3.

There are some computer programs around (reviewed in (Frank 1998)) able to play Bridge, but most only at a beginner's level. One of the newer and better candidates is GIB (Ginsberg 1999), by the AI researcher Professor Matthew L. Ginsberg, which he guesses will be better than humans by the year 2003 (Prorok 1999). It is able to do declarer play

North (dealer): ♠ - ♥ T9632 ♦ QT873 ♣ T72

West: ♠ Q963 ♥ 85 ♦ AJ64 ♣ J86

East: ♠ AJT842 ♥ AK7 ♦ 5 ♣ AQ4

South: ♠ K75 ♥ QJ4 ♦ K92 ♣ K953

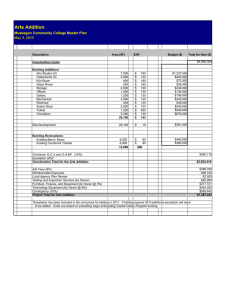

Figure 1: A typical deal in schematic form. It is dealt by player North, who then starts the bidding with East next in turn. North is void in (has no) spades but has a weak hand and passes. East has a strong hand and normally opens the bidding by giving a bid, maybe 1♠. South has an average hand but not enough to enter the bidding with a bid other than Pass.

N    E    S    W
P    1♠   P    2♠
P    4♠   P    P
P

Figure 2: The schema of one possible bidding with the hands in Fig. 1. Three passes end the auction. 4♠ requires EW to take 6 ("the book") + 4 = 10 tricks out of the possible 13.

[Excerpt from the GIB bidding database]

Figure 3: A small part of the GIB database, showing how the Ace-asking bid Blackwood (4NT) should be used.

on a very high level. This also makes the bidding better because it simulates the play to find the best or most probable contract.

GIB uses the Borel simulation algorithm (Ginsberg 1999): To select a bid from a candidate set B, given a database Z that suggests bids in various situations:

1. Construct a set D of deals consistent with the bidding thus far.

2. For each bid b ∈ B and each deal d ∈ D, use the database Z to project how the auction will continue if the bid b is made. (If no bid is suggested by the database, the player in question is assumed to pass.) Compute the double-dummy result of the eventual contract, denoting it s(b, d).

3. Return that b for which Σ_d s(b, d) is maximal.
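The three steps of the Borel simulation can be sketched as follows. This is only a minimal illustration of the selection rule, not GIB's code: the names `select_bid`, `project_contract` and `double_dummy_score` are hypothetical, and the caller is assumed to supply the set of consistent deals (step 1), the database-driven projection of the auction and a double-dummy solver.

```python
from typing import Callable, Hashable, Iterable, Sequence, TypeVar

Bid = TypeVar("Bid", bound=Hashable)
Deal = TypeVar("Deal")


def select_bid(
    candidates: Sequence[Bid],
    deals: Iterable[Deal],
    project_contract: Callable[[Bid, Deal], str],
    double_dummy_score: Callable[[str, Deal], float],
) -> Bid:
    """Return the candidate bid b that maximizes the sum over d of s(b, d).

    candidates         -- the candidate set B
    deals              -- the set D of deals consistent with the bidding so far
    project_contract   -- hypothetical stand-in for the database Z: given a bid
                          and a deal, returns the eventual contract
    double_dummy_score -- hypothetical stand-in for a double-dummy solver:
                          returns s(b, d) for that contract on that deal
    """
    deal_list = list(deals)  # D is reused for every candidate bid
    totals = {}
    for bid in candidates:
        # Step 2: project the auction and score the eventual contract.
        totals[bid] = sum(
            double_dummy_score(project_contract(bid, deal), deal)
            for deal in deal_list
        )
    # Step 3: the bid with the maximal summed score.
    return max(candidates, key=lambda bid: totals[bid])
```

With toy stand-ins, for instance a projection that simply returns the bid itself and a scoring function that looks values up in a table, the function picks the bid with the largest total over the sampled deals.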

There are dozens of other programs for playing, generating deals (Andrews 1999) etc. Ian Frank has made an end-play analyzer/planner, FINESSE, which seems to work well but is too slow for practical use (written in interpreted PROLOG), and he has also written an excellent doctoral thesis, "Search and Planning Under Incomplete Information" (Frank 1998). PYTHON (Sterling & Nygate 1990), as cited in (Gambäck, Rayner, & Pell 1993), is an end-play analyzer for finding the best play, but not how to reach these positions.

The former World Bridge Champion Zia Mahmood offered one million pounds to the designer of a computer system capable of defeating him, but withdrew his offer after the last match against GIB, which was a narrow win for him.

Most programs basically use a rule-based approach. The first one (Carley 1962) had four (!) bidding rules, with a performance like "The ability level is about that of a person who has played a dozen or so hands of bridge and has little interest in the game". Wasserman (cited from (Frank 1998)) divides the rules into a collection of classes that handle different types of bid, such as opening bids, responding bids or conventional bids. The classes are organized by procedures into sequences. The result is "Slightly more skillful than the average duplicate Bridge player at competitive bidding". MacLeod (MacLeod 1991) (cited from (Frank 1998)) tries to build a picture of the cards a player holds but cannot represent disjunction, i.e. when a bid has more than one possible interpretation. Stanier (Stanier 1977) (cited from (Frank 1998)) introduces look-ahead search as an element of Bayesian planning, and Lindelöf's COBRA (Lindelöf 1983) uses quantitative adjustments. Lindelöf claims that COBRA bidding is of world expert standard. Ginsberg has invented a relatively compact but cumbersome (Fig. 3) way of representing bidding systems in his GIB software. By using such representations he reduced a rule base of about 30,000 rules into one with about 5,000. Such representations are of course prone to errors.

The Rules of Bridge

Bridge is a card game played with a deck of 52 cards. The deck is composed of 4 suits (spades ♠, hearts ♥, diamonds ♦ and clubs ♣). Each suit contains 13 cards with the Ace as the highest value and then the King, Queen, Jack, Ten, 9, ..., 2. The first five are often abbreviated to A, K, Q, J, T. The game begins with some shuffling of the deck, and then the cards are dealt to the four players, often denoted North, South, East and West (N, S, E, W), Fig. 1. N and S play together in a team against E and W.

Before the card play begins, an auction (bidding) takes place. One of the teams¹ wins a contract to make at least a certain number of tricks. Some levels give bonus points. To prevent opponents from taking a lot of tricks in a long suit of theirs, a trump suit is looked for in the bidding. If no common trump suit is found, a game without trumps can be played instead: No Trump (NT), which is ranked higher in the bidding than the suits, which are ranked ♣ (lowest), ♦, ♥, to ♠ (highest).

During these two phases, the bidding and the play, there is cooperation in pairs playing against each other, with four players at each table. The pairs communicate via bidding, where the bids are limited to a few (15) "words", but many sequences, "sentences/dialogs", are possible. Opponents are kept informed² about the meaning (semantics) of the bid sequence. This can be seen as a mini-language with words like 4, 1, Spades, Double, Pass etc., and these words can be combined via simple grammatical rules into short "sentences" like 3 Spades. The sentences are put in a sequence, a "conversation", called the bidding. It can look like N:Pass - E:1 Spade - S:Pass - W:2 Spades, N:Pass - E:4 Spades - S:Pass - W:Pass, N:Pass, with semantic meanings like "I have a hand with spades as the longest suit and an above average number of Aces, Kings and Queens" - "I have support for your spades but not so good a hand", "I have some extra strength" - "I have nothing to add". The first non-pass bid is denoted the opening. A full bidding schema is presented in Fig. 2.

When the bidding is finished, a pair handles the final contract (4 spades here) and the play with the cards can begin by taking tricks. After the play, the pairs are given points according to the result. In tournament play the points are compared to those of the other pairs playing a duplicate³ version of the deal, almost compensating for the factor of luck with "good cards".

In our work we have studied undisturbed bidding, which means the pair is free to bid on their own without disturbing interventions from the opponents. There is no need for preemptive bids and sacrificing, or to keep in mind the importance of reducing the amount of information transferred to the opponents. By having these limitations we are able to learn better in a shorter time. Less memory is also needed.

Representation

A bridge hand as a human views it is seen in Fig. 4. A shorter, standard representation with almost the same meaning (losing the graphic "personality" of the cards) is

♠ AK87
♥ QJ52
♦ Q7
♣ T96

or, simply, AK87, QJ52, Q7, T96 with the order of the suits understood. The total number of possible hands is about 6 · 10^11. There are 8192 different suit holdings (92, AK82, etc.), and only 39 hand patterns (5431, 5422, etc., disregarding suit order) (Andrews 1999) and 560 shapes (5431, 4513, 5422, ...) in total. To estimate the strength of a hand, Milton Work invented the high card points (h.c.p.) (table 1).

Figure 4: A typical hand

Card     High card points (h.c.p.)
Ace      4
King     3
Queen    2
Jack     1

Table 1: The estimated strength of the high cards.

The total number of h.c.p. in a deck is 40 and the average strength per hand is exactly 10. A hand with AKQJ, AKQ, AKQ, AKQ contains 37 points, which is the maximum. Such evaluations are needed to estimate the number of tricks a pair of hands is able to take. Robin Hillyard (Hillyard 2001) has calculated the expected number of tricks at no trump, presented in table 2, based on GIB simulations, and the results are close to basic rules of thumb used by bridge players. Thomas Andrews (Andrews 1999) has made some analysis showing that the value of the Ace is slightly underestimated in the Milton Work 4-3-2-1 scale.

Of course this scale is just to make a rough estimate of a hand, and every bridge player adjusts (re-estimates) the value of the hand, maybe dynamically during the bidding due to fit etc.

One add-on adjustment, invented by Charles Goren, is the distributional strength (Table 3), where the total value of our hand is 12 (h.c.p.) + 1 (doubleton ♦) = 13 points.
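The hand evaluation above can be checked with a few lines of code. The sketch below assumes a hand is written as four strings in the order spades, hearts, diamonds, clubs (as in AK87, QJ52, Q7, T96); the function names are illustrative only. The point values are those of Table 1 and the distributional values those of Table 3 (void 3, singleton 2, doubleton 1).

```python
# High card points per card (Table 1); all other cards count zero.
HIGH_CARD_POINTS = {"A": 4, "K": 3, "Q": 2, "J": 1}

# Distributional points per suit length (Table 3); longer suits count zero.
DISTRIBUTIONAL_POINTS = {0: 3, 1: 2, 2: 1}


def high_card_points(hand):
    """Sum the h.c.p. over the four suit holdings of a hand."""
    return sum(HIGH_CARD_POINTS.get(card, 0) for suit in hand for card in suit)


def distributional_points(hand):
    """Sum the points for voids, singletons and doubletons."""
    return sum(DISTRIBUTIONAL_POINTS.get(len(suit), 0) for suit in hand)


def shape(hand):
    """Suit lengths in the order spades, hearts, diamonds, clubs."""
    return tuple(len(suit) for suit in hand)


example_hand = ["AK87", "QJ52", "Q7", "T96"]
total_points = high_card_points(example_hand) + distributional_points(example_hand)
```

For the example hand this gives 12 h.c.p., one distributional point for the doubleton diamond, 13 points in total and the shape (4, 4, 2, 3).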

H.c.p.   20    21    22    23    24    25    26    27    28    29    30
Tricks   6.1   6.7   7.2   7.6   8.2   8.7   9.1   9.7   10.1  10.6  11.1

Table 2: The average number of tricks with a given strength.

¹ With the highest bid.

² If they ask.

³ Or replayed later by storing the deal in a wallet.

Number of cards in a suit    Distributional value
no card (void)               3
one (singleton)              2
two (doubleton)              1

Table 3: Distributional points.

The idea behind this is that shortage in a suit makes it possible to ruff in a trump contract and thereby increases the strength of the hand. These distributional points are normally not added during a search for a no trump (NT) contract. The strength is used to define a bidding system in terms of shape-groups and strength intervals.

Our hand can be represented as a 4423-shape (4 spades, 4 hearts, 2 diamonds and 3 clubs) with the strength of 12 h.c.p. The number of possible hands in this representation is 560 · 38 = 21280. In our approach we reduce it further by just keeping the distribution of the cards (the shape) and the range of strength:

4423, medium

where medium might show 12-14 (Goren) points.

Allowed systems. In most real tournaments the possible systems are limited by assigning penalty points (dots) to non-natural opening bids (Svenska Bridgeförbundet, SBF, and World Bridge Federation, WBF). This is because it is difficult for a human opponent to find a reasonable defence within a few minutes to a highly artificial bidding system. A certain number of points are allowed depending on the type of tournament.

The Learning

The Q-learning (Watkins & Dayan 1992) updating rule

$$q_{situat,bid} = q_{situat,bid} + lr \cdot \left(r + \gamma \max_{bid'} q_{situat',bid'} - q_{situat,bid}\right) \qquad (1)$$

was used for all three (response, rebid, 2nd response) bidding positions (when applicable) after each deal. The shape, strength, position (E, W) and bid sequence are encoded as part of the situation, and the q-values were stored in a multidimensional integer array. q is the average resulting score having this hand and following the policy, lr is the learning rate, γ the discount rate and r is the reward. The bid with the highest q was selected in a particular situation unless exploration was active, in which case any bid was selected with equal probability. If several bids had the same q-value, one of them was randomly selected.

In practice this meant that one out of 2048 openers was combined with one of the 2048 responders according to the current example in the database, and they had to learn together with their partners; hence multi-agent reinforcement learning. The pair's q-values were updated when the bidding was finished. This was repeated multiple times.

Reinforcement signal

Some preliminary tests with the reward were done. What value of the reward r should the evaluator give the learners?

a) Calculate the difference between the actual score and the score achievable on this combination of hands:

r = score - achievable    (2)

It works badly due to high exploration. Assuming a start value of Q of zero (an "optimistic start"), almost all tried bids will start with negative scores (strange contracts), making them worse than hitherto untested alternatives. This will lead to a huge, time-consuming exploration phase of all alternatives.

b) Difference as above but with 200 points added:

r = score - achievable + 200    (3)

A contract not worse than -200 is denoted better than the untested cases. The "Pass always" system evolved, guaranteeing a score of 200. Maybe a long run will improve this policy, and the pass-system is a kind of eye of a needle?

c) Absolute score. Simply reinforce the learner by the actual score:

r = score * 10    (4)

It worked best, at least on our short (one day or two) runs. The score was scaled by 10 to try to avoid integer round-off errors when multiplied by the learning rates.

d) Heuristic reinforcement.

r' = r - 100 * isToHighBid    (5)

To avoid high-level bidding with bad cards there was an extra negative reinforcement on those bids that were above the optimal contract.

Discount/Decay

How far-sighted should the bidders be? By selecting different values for γ in the Q-learning, different behaviors were obtained. A low value makes the learners prefer a short bidding sequence and resulted in guessing into 3NT more often. Maybe a value higher (not tested) than the normal limit of one will encourage a more informative, long sequence? Eligibility traces were not used, but the result after the bidding was used for all positions.
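The update rule (Equation 1) and the bid selection described in the section on the learning can be sketched as below. This is a minimal floating-point illustration under stated assumptions, not the Reese program: the class name `BidLearner`, the dictionary-based Q-table and the string encoding of situations are hypothetical, whereas the text describes a multidimensional integer array. The default parameter values (lr = 20/1024, exploration 0.02, q0 = -200, γ = 0) are taken from the experiments reported later.

```python
import random
from collections import defaultdict


class BidLearner:
    """Tabular Q-learner for one bidding position (illustrative sketch)."""

    def __init__(self, bids, lr=20 / 1024, epsilon=0.02, gamma=0.0,
                 q0=-200.0, seed=13579):
        self.bids = list(bids)
        self.lr = lr            # learning rate
        self.epsilon = epsilon  # probability of a random (exploratory) bid
        self.gamma = gamma      # discount rate
        # Untested (situation, bid) pairs start at the initial value q0.
        self.q = defaultdict(lambda: q0)
        self.rng = random.Random(seed)

    def select(self, situation, explore=True):
        """Pick the bid with the highest q, or a random bid when exploring."""
        if explore and self.rng.random() < self.epsilon:
            return self.rng.choice(self.bids)
        best = max(self.q[(situation, bid)] for bid in self.bids)
        # Ties between equally good bids are broken at random.
        ties = [bid for bid in self.bids if self.q[(situation, bid)] == best]
        return self.rng.choice(ties)

    def update(self, situation, bid, reward, next_situation=None):
        """Apply q = q + lr * (r + gamma * max_bid' q(s', bid') - q)."""
        future = 0.0
        if next_situation is not None:
            future = max(self.q[(next_situation, b)] for b in self.bids)
        key = (situation, bid)
        self.q[key] += self.lr * (reward + self.gamma * future - self.q[key])
```

A deal would then be handled by calling `select` for each bidding position, scoring the final contract, and calling `update` for every position with the reward of choice, for example the absolute score of option c).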

Results

Some of the ideas above were implemented in a program called "Reese", after the famous bridge player Terence Reese, who died in 1996. He was known for his excellent books and simple and logical bidding style. Regarding our bidding program, there was hope of finding a super-natural bidding system, but this was not the case. This program requires a computer with at least 384 MB of memory with the described configuration. The experiments can be seen as what can be achieved with these methods in a complex two-learner task, and they also give some hints on how to choose learning parameters.

Introduction

By using the huge database of GIB results when playing against itself for 717,102 deals, we found a reasonable evaluative function. The database was generated using an early version of GIB, but both the declarer and the opponents were not so strong, so maybe the result is quite reasonable anyhow. We have used the 128 most common shapes, which include all hands with at most a six-card suit except for the rare 5440, 5530, 6511, 6430 and 6520 shapes. This covers about 91% of the hands and, neglecting the inter-hand shape influence, 0.91 · 0.91 = 83% of the hand pairs. The strength was classified into the following 16 intervals (although this is easy to modify):

Class   0    1    2    3   4    5    6    7
h.c.p.  0-3  4-6  7-8  9   10   11   12   13

Class   8    9    10     11     12     13     14     15
h.c.p.  14   15   16-17  18-19  20-21  22-24  25-27  28-37

The intervals were heuristically selected in accordance with frequency of appearance.
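The mapping from high card points to the 16 strength classes, and from a (shape, strength) pair to one of the 128 · 16 = 2048 hand types, can be sketched as follows. The lower bounds are read off the table above; the function names and the idea of numbering the shapes 0..127 are assumptions made for illustration.

```python
from bisect import bisect_right

# Lower h.c.p. bound of each strength class 0..15, from the table above.
CLASS_LOWER_BOUNDS = [0, 4, 7, 9, 10, 11, 12, 13, 14, 15, 16, 18, 20, 22, 25, 28]


def strength_class(hcp: int) -> int:
    """Return the strength class (0..15) for a hand with the given h.c.p."""
    if not 0 <= hcp <= 37:
        raise ValueError("a bridge hand holds between 0 and 37 h.c.p.")
    # Number of lower bounds that are <= hcp, minus one, is the class index.
    return bisect_right(CLASS_LOWER_BOUNDS, hcp) - 1


def hand_index(shape_index: int, hcp: int) -> int:
    """Combine a shape number (0..127, hypothetical numbering) and a strength
    class into a single index in the range 0..2047."""
    return shape_index * len(CLASS_LOWER_BOUNDS) + strength_class(hcp)
```

Such an index is one possible way to address a Q-table for the 2048 opener and 2048 responder hand types mentioned earlier.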

To reduce memory requirements and learning time, and to simplify the restriction of learning to allowed bidding systems, the opening bids were defined by the user as a small two-page table. Part of the definition of the opening bids in bidsyst.txt is shown below (the strengths 9, 13, 14 and 28 were removed here for ease of showing):

[Excerpt of bidsyst.txt: a matrix with one row per shape (6313, 6241, 6232, 6223, 6214, 6142, 6133, 6124, 5521, 5512, 5431, 5422, 5413, 5341, 5332) and one column per strength (0, 4, 7, 10, 11, 12, 15, 16, 18, 20, 22, 25); the entries are the opening bids P, 3S, 2D, 1S, 1N, 2C, 2N and 2S.]

The bidsyst.txt can be rearranged (preferably with a program) from this matrix representation into an input file with examples. Each strength 0..37 is used to generate an example, resulting in 128 · 38 = 4864 examples, which look like this (high card points, number of Spades, Hearts, Diamonds, Clubs, recommended opening bid):

0,6,4,2,1,pass
1,6,4,2,1,pass
2,6,4,2,1,pass
3,6,4,2,1,pass
4,6,4,2,1,pass
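The rearrangement from the matrix form into one example per strength can be sketched as follows. The in-memory layout assumed here (a dictionary from a shape string to one bid per strength column, plus the list of column lower bounds) is hypothetical, since the exact syntax of bidsyst.txt is not given; the bids in the usage example are toy values and not the author's system.

```python
def expand_opening_table(table, lower_bounds, max_hcp=37):
    """Expand a shape-by-strength matrix into example lines.

    table        -- maps a shape string such as "6421" to a list of bids,
                    one per strength column (assumed layout)
    lower_bounds -- lowest h.c.p. of each strength column, ascending from 0
    Returns lines of the form "hcp,spades,hearts,diamonds,clubs,bid".
    """
    lines = []
    for shape, bids in table.items():
        suit_lengths = ",".join(shape)  # "6421" -> "6,4,2,1"
        for hcp in range(max_hcp + 1):
            # The applicable column is the last one whose bound is <= hcp.
            column = max(i for i, low in enumerate(lower_bounds) if low <= hcp)
            lines.append(f"{hcp},{suit_lengths},{bids[column]}")
    return lines


# Toy table with two strength columns: 0-3 h.c.p. and 4 or more h.c.p.
toy_lines = expand_opening_table({"6421": ["pass", "3S"]}, [0, 4])
```

Each shape contributes 38 lines (strengths 0 to 37), so 128 shapes give the 4864 examples mentioned above.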

It can be represented as a decision tree or ruleset, but this introduces errors (due to some pruning; about 2% is erroneous), is larger (five and ten pages respectively) and is considerably more complex to read.

The See5 inductive-logic software (available via http://www.rulequest.com) generates the following (slightly modified) decision tree:

See5 [Release 1.13]    Wed Mar 14 2001

Options:
    Generating rules
    Fuzzy thresholds
    Pruning confidence level 50%

4864 cases (5 attributes) from open.data

Decision tree:

pts in [20-38] :
:.s in [5-6] :
: .pts in [25-38]: 2S (390)
: pts in [0-24]
: :.pts in [0-21]
:.s in [1-5] :
: :.d in [5-6] : 2S (4)
: : d in [1-4] :
:.h in [5-6]: 2S (4)
h in [1-4] :
:.h in [1-2] :
:.d in [1-2] :
: :.d = 1: 2S (2)
: : d in [2-6]:
: : :.h = 1: 2S (2)
h in [2-6] : 1S (2)
d in [3-6] :
: :.h = 1: 1S (4)
h in [2-6] :
:.d in [1-3] : 2C (2)
d in [4-6] : 1S (2)
h in [3-6]
:.h in [4-6]: 1S (6)
... (5 pages)

And the following set of rules (my comments to the right of the *):

Rule 1: (512, lift 3.9)
    pts in [0-3]
    -> class pass [0.998]
    * Pass with a very weak hand

Rule 2: (132/2, lift 3.8)
    pts in [0-11]
    s in [1-3]
    h in [1-4]
    d in [5-6]
    d in [1-5]
    -> class pass [0.978]
    * Pass even up to average and 5 diamonds

Rule 3: (39, lift 3.8)
    pts in [0-12]
    s in [4-6]
    s in [1-4]
    h in [2-6]
    h in [1-2]
    d in [1-3]
    -> class pass [0.976]
    * Pass with up to average and 4 spades

... (10 pages)

The program uses the original bidsyst.txt, and it is doubtful whether the tree or the rules can be useful to a human reader. No opponents were taken into account except for the specified opening bids, which included preemptive bids like 2 diamonds (showing a strong hand with diamonds or a weak hand with a six-card major) and 3 in a suit, showing a weak hand with a six-card suit.

The database contains a vector with the actual number of tricks taken in any suit or no trump. We have assumed East to be declarer in all cases for performance reasons. The maximum number of bids in a row was set to four (a pass was automatically added as a fifth bid if applicable) and the number of possible bids was limited to sixteen.

Learning rates were encoded as integers and shifted right ten steps, effectively scaling them down by a factor of 1024. Exploration rates were integer coded in parts per thousand. A γ-value of zero was used, and the initial values of the Q-tables for East and West respectively were command-line arguments. The examples were separated into a training and a test set, and training examples were selected in random order to avoid biasing effects. The desired number of examples was selected in order from the database, except for non-representable uncommon shapes, which were simply skipped. Exploration was not used during test evaluations but was used during training evaluations.
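The integer encoding of the learning rate can be illustrated with a small fixed-point update. The sketch assumes γ = 0, so the update reduces to q + lr · (r - q), and it assumes that the scaled step is truncated toward zero; the exact rounding used in the Reese program is not stated, and the function names are hypothetical.

```python
def integer_q_update(q: int, reward: int, lr_numerator: int) -> int:
    """One Q-update with the learning rate given as lr_numerator / 1024.

    The product is shifted right ten steps, so any step smaller than one
    is rounded away (assumption: truncation toward zero).
    """
    delta = reward - q
    step = (lr_numerator * abs(delta)) >> 10
    return q + step if delta >= 0 else q - step


def smallest_visible_difference(lr_numerator: int) -> int:
    """Smallest |reward - q| that still changes q: ceil(1024 / lr_numerator)."""
    return -(-1024 // lr_numerator)
```

With a learning rate of 20/1024 a difference of 52 is the smallest that moves the q-value, while with 6/1024 the difference must reach 171. Smaller differences leave the table unchanged, which is one reason for scaling the reward by 10.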

The score was calculated from the number of tricks taken and the final contract. The vulnerability was "none" and the dealer was always East. Undoubled part-scores, games, small slams and grand slams were calculated, and contracts going more than two down were assumed to be doubled.
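The score calculation described above can be sketched as follows. The sketch assumes standard duplicate scoring for a non-vulnerable declarer (part-score bonus 50, game bonus 300, small slam 500, grand slam 1000, undoubled undertricks 50 each, doubled undertricks 100, 300, 500 and then 300 more per trick) together with the stated rule that contracts going more than two down are treated as doubled. The function name and argument layout are illustrative.

```python
def contract_score(level: int, strain: str, tricks: int) -> int:
    """Score for declarer, vulnerability "none".

    level  -- 1..7
    strain -- "C", "D", "H", "S" or "N" (no trump)
    tricks -- tricks actually taken by declarer (0..13)
    """
    needed = level + 6
    if tricks < needed:
        down = needed - tricks
        if down <= 2:
            return -50 * down                 # undoubled undertricks
        return -(500 + 300 * (down - 3))      # assumed doubled: 500, 800, ...
    per_trick = 20 if strain in ("C", "D") else 30
    # No trump scores 40 for the first trick and 30 for each later one.
    trick_score = level * per_trick + (10 if strain == "N" else 0)
    total = trick_score + (tricks - needed) * per_trick  # overtricks
    total += 300 if trick_score >= 100 else 50           # game or part-score
    if level == 6:
        total += 500                                     # small slam
    elif level == 7:
        total += 1000                                    # grand slam
    return total
```

For example, `contract_score(7, "H", 13)` gives 1510, the value shown for the 7H contract in Table 6, and `contract_score(3, "N", 9)` gives 400.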

We tried to find the best possible bidding system by doing three experiments: first a survey run with different parameters, then a second survey with some new, better parameter values, and then a longer run with the parameters estimated to be best by the human observer. The parameters to be selected were the learning rate (common for both hands), the exploration rate (also common) and the initial values for the Q-tables.

In the first run we used the learning rates 5, 10 and 20, exploration rates 5, 10 and 20, and initial Q-values -100 for East and -200, 0 and +200 for West. Each run was repeated three times with different random number seeds (13579, 5799 and 8642 respectively). This resulted in a total of 3 · 3 · 3 · 3 = 81 cases. Ten million training examples were selected in each epoch and 200 epochs were run in each case. Each case took about 41 minutes and the total CPU time was about 55 hours on a 1.5 GHz Intel Pentium IV running Windows 2000 and equipped with 512 MByte memory. Each training occasion required about one microsecond including evaluation. Every 99th epoch, a bidding system was emitted into two files, responses.txt (115 kB) and rebid.txt (525 kB); the second response was not stored. The generation of the rebid file takes the opening restrictions into consideration to reduce the size. A log file (6 kB) with the time, average training sum and average test sum was generated, and the standard output was redirected into a file (1.4 MB) with the actual bidding sequences from all the test cases for future reference. About 2 MB · 81 = 162 MB of disk space was used.

550,000 training examples and 200 test examples (positioned from 710,000 and forward) were used. 109,940 (16.7%) were skipped, reasonably close to the assumption above (83% kept).

              Lr = 5/1024   Lr = 10/1024   Lr = 20/1024
ε = 0.005     27.2 ± 8.7    24.9 ± 1.8     33.8 ± 3.6
ε = 0.010     27.6 ± 0.2    40.4 ± 6.1     50.1 ± 10.0
ε = 0.020     30.7 ± 9.9    41.7 ± 3.7     63.6 ± 3.1

Table 4: The average of the last ten test evaluations, averaged over three runs. q0 is zero.

Figure 5: Runs with q0 = -200. The smooth curve is for the training set and the rugged curve for the test set. 200 epochs, each of 10 million tries, were run and repeated three times to be averaged into these curves.

Selecting Learning Rate, Exploration and Initial Q-value

The first run was made with a q0 of 0. The results are shown in Table 4. The highest values of both the learning rate and the exploration rate resulted in the best performance: an average value of 63.6 ± 3.1 with a learning rate of 20/1024 ≈ 0.0195 and an exploration of 0.020, denoting a 2% risk of selecting a random bid. The experiments were rerun with a q0 of +200, meaning an untested alternative is worth 200 points. Because most bridge results are in the -100..140 region, this is the optimistic approach, resulting in a (more) exhaustive search and slightly worse results.

A third run with q0 = -200 was done, with better results. This value (-200) results in less search of alternatives because a reasonable result like -100 is preferred to untested alternatives. The training and test curves (Fig. 5) seem to follow each other but at a different level. The test curve is almost always below the training curve although no exploration is used. The training curve is also smoother due to the large number of examples (10 million, some identical). The best result (Table 5; 68.7 ± 4.9) was also obtained with the highest parameter values: Learning_rate = 20/1024 ≈ 0.0195 and ε = 0.020.

              Lr = 5/1024   Lr = 10/1024   Lr = 20/1024
ε = 0.005     29.3 ± 5.1    28.4 ± 5.6     27.2 ± 2.4
ε = 0.010     36.7 ± 13.6   40.9 ± 6.2     48.3 ± 8.3
ε = 0.020     51.4 ± 6.9    54.6 ± 3.2     68.7 ± 4.9

Table 5: The average of the last ten test evaluations, averaged over three runs. q0 is -200.

A long, single run with lower learning rate but high exploration is shown in Figure 6. The levels seem to more or less stabilize around 100.

Figure 6: A 5-day CPU run of learning. Lr is 20/1024, ε = 0.050 and q0 = -200. The maximum training case has a score of 104.89, the maximum test score is 121.7 and the average on the last ten is 94.65.

Some of the interesting examples (many removed) from the bidding are presented in table 6.

At a later position (1000) under the same training conditions, a bidding system was emitted. Only a very small part of it (part of the responses when partner has started with Pass) is presented in table 7. It is illustrative because it indicates what suit to open with and which strengths are associated with a particular shape. Many cases are innovative and maybe unknown to most human bridge bidders. Some examples are: opening with only seven h.c.p. when having six spades, two hearts and a four-card minor (club or diamond) suit; opening with the lower of adjacent suits if weak (then pass) or strong (continue bidding) and the higher suit if medium strength; pass if balanced and less than fourteen h.c.p.; open one or three NT with strong hands and any shape; 2 hearts and spades (stronger) show a good hand with eight+ cards in the majors; open with nine h.c.p. and unbalanced with a six-card major or diamonds, or when having a five-five shape.

Deal  East  Hp  West  Hp  E   W   E   W   Score
2     2614  16  2353  15  1H  3N  4N  7H  1510
5     2434  9   5323  14  P   1S  2C  2H  110
6     4522  10  1255  13  P   1C  1S  2C  110
7     3145  7   2434  15  P   1H  1N  P   90
13    1543  9   5332  16  P   1S  2C  3N  400
16    3514  12  3253  14  1H  3N  P       430
24    3334  6   3343  14  P   1D  P       -50
26    5152  4   3613  10  P   1H  P       110
27    4243  2   2641  22  P   3N  P       -100
28    2434  12  4243  12  1H  3N  P       -50
29    3613  13  3262  15  1H  3N  P       520
33    5134  6   3622  11  P   1H  1N  2H  -50
34    3514  11  4135  13  P   1C  1N  P   150
42    3334  10  5512  13  P   1H  1S  4S  480
52    2623  10  2236  7   2D  2H  P       110
53    2254  13  2632  10  1D  3N  P       400
54    4342  2   3514  11  P   1C  1D  1H  -50
56    3163  12  5422  15  1D  3N  P       430
60    4144  0   3325  17  P   1N  2H  2S  -100
64    4441  9   5431  11  P   1S  1N  2S  110

Table 6: Some interesting examples of bidding from a reasonably good run with Lr = 0.02 and exploration set to 0.02; q0 is set to -200. An average score of 87 on the training set and 100 on the test set is achieved after 562 epochs.

Response to P:

Shape  10  11  12  13  14  15  16  18  20  22  25  28
3613   1H  1H  1H  1H  1H  1H  1H  1H  2C  2D  ?   ?
3541   P   1H  1H  1H  1H  1H  1H  3D  2S  2H  2S  ?
3532   P   P   P   P   1H  1H  1H  1N  3N  3N  2C  ?
3523   P   P   P   1H  1H  1H  1H  1H  3N  2C  2H  3C
3514   P   1C  1H  1H  1C  1H  1H  1H  3N  3N  3C  ?
3451   P   1D  1D  1D  1D  1D  1D  1N  2H  3D  ?   ?
3442   P   P   P   P   1D  1D  1H  1N  3N  3N  3N  ?
3433   P   P   P   P   1C  1H  1H  1N  1N  3N  2H  3N
3424   P   P   P   1C  1C  1C  1H  1N  3N  3N  3N  ?
3415   P   1C  1C  1C  1H  1C  1C  1H  1N  3N  ?   ?
3361   P   1D  1D  1D  1D  1D  1D  1N  1N  3N  3S  ?
3352   P   P   1D  1D  1D  1D  1N  1D  3N  3N  2D  ?
3343   P   P   P   P   1D  1D  1D  1N  3N  3N  3N  2S
3334   P   P   P   1C  1C  1C  1C  1N  3C  3N  3N  ?
3325   P   P   1C  1C  1C  1C  1C  1N  3N  3N  3N  ?
3316   1C  1C  1C  1C  1C  1N  1C  1C  3H  3N  ?   ?
3262   P   P   1D  1D  1D  1D  1N  3N  3N  3N  2N  3H

Table 7: Some of the responses when partner has started with pass, showing a weak hand without extreme shape. A question mark indicates an unknown case (no example).

Reducing the Learning Rates

The single-learner Q-learning theory says that convergence is assured if the learning rate is reduced slowly enough. An attempt to improve the average score was made by reducing the learning rate with a decay factor. In our cases, the learning seems to drop off at about epoch 150. By setting the learning rate to 20/1024 at this point, an experiment was run with a slow decay (Figure 7). There are jumps in the performance, and if viewed in detail those jumps are positioned according to table 8. When the learning rate steps down to small values, the required difference between score and q-value has to be at least one when scaled with the learning rate according to Equation 1. The first noticeable effects appear when the learning rate is 6/1024 ≈ 0.006 and the required difference is then 1024/6 ≈ 170.7. In bridge terms this means that the learner is not able to see the difference between part-scores and concentrates on the large contracts like games and slams. When the learning rate gets below 3/1024, the game-contract abilities are also lost. This problem with learning could be avoided by using a floating-point representation, but this consumes more memory (4 times in this case; 384 · 4 = 1536 MB) and requires more computing time.

Epoch   Training-level   Lr       Difference
849     84.7             6/1024   170.7
951     83.4             5/1024   204.8
1074    79.1             4/1024   256
1221    67.3             3/1024   341.3
1413    45.7             2/1024   512
1683    35.6             1/1024   1024

Table 8: Integer round-off error.

Figure 7: The scores are actually decreasing step-wise when the learning rate is reduced.

Conclusions and Further Work

The runs show that it is possible to find a reasonable bidding system in a limited time. A relatively high learning rate combined with high exploration resulted in the best performance. The test-set evaluations are quite close to the training evaluations, indicating that no over-fitting has occurred yet. An initial q0 value set to a reasonable lower acceptable threshold seems to steer the learning into realistic areas. Good runs with a decreasing learning rate were not possible to achieve due to the integer nature of this implementation.

The resulting bidding system, studied in detail, is also interesting. The responder has learnt, "understood", that an opener shows hearts or spades or a strong hand with the two-diamond opening bid, and thereby never passes and does not bid two hearts with heart support. The no-trump bidding is also interesting: two-bid responses seem to be sign-offs, two NT is never used, and three in a suit seems to be highly conventional and is never passed by the NT opener. Three NT is often the response with medium-strength hands and not an extreme shape, and four in a suit is a transfer bid. Maybe an interesting NT-bidding part of the system can be developed if the learning were concentrated on this?

Anyhow, the learnt system is not practically useful until the defensive bidders are taken into consideration. More information will be available in my Licentiate thesis (ongoing work), including a Genetic approach.

References

Andrews, T. 1999. Double dummy bridge evaluations. http://www.best.com/thomaso/bridge/valuations.html.

Carley, G. 1962. A program to play contract bridge. Master's thesis, Dept. of Electrical Engineering, Massachusetts Institute of Technology, Cambridge, Massachusetts.

Frank, I. 1998. Search and Planning Under Incomplete Information. London: Springer-Verlag.

Gambäck, B., Rayner, M., and Pell, B. 1993. Pragmatic reasoning in bridge. Technical Report 299, University of Cambridge, Computer Laboratory, Cambridge, England.

Ginsberg, M. L. 1999. GIB: Steps toward an expert-level bridge-playing program. Proceedings of the Sixteenth International Joint Conference on Artificial Intelligence 584-589.

Hillyard, R. 2001. Extending the law of total tricks. http://www.bridge.hotmail.ru/file/TotalTricks.html.

Lindelöf, E. 1983. The Computer Designed Bidding System - COBRA. Number ISBN 0-575-02987-0. London: Victor Gollancz.

MacLeod, J. 1991. Microbridge - a computer developed approach to bidding. Heuristic Programming in AI - The First Computer Olympiad 81-87.

Prorok, K. 1999. Gubben i lådan (in Swedish). Svensk Bridge 31(no 4, October):26-27.

Stanier, A. 1977. Decision-Making with Imperfect Information. Ph.D. Dissertation, Essex University.

Sterling, L., and Nygate, Y. 1990. Python: An expert squeezer. Journal of Logic Programming 8:21-40.

Watkins, C. J. C. H., and Dayan, P. 1992. Q-learning. Machine Learning 8:279-292.

Winston, P. H. 1977. Artificial Intelligence. Addison-Wesley.