From: AAAI Technical Report SS-00-01. Compilation copyright © 2000, AAAI (www.aaai.org). All rights reserved.

The Learning Shell

Nico Jacobs

Dept.of ComputerScience,K.U.Leuven

Celestijnenlaan

200A,

B-3001Heverlee,

Belgium

Nico.Jacobs@cs.kuleuven.ac.be

Abstract

Inthispaper

wepresent

preliminary

workintheareaof

automated

construction

ofuserprofiles.

We consider

thetaskof modeling

thebehavior

of a userworking

witha command

shell(suchas cshor bashunderthe

Unixoperating

system).

We illustrate

howthetechniqueof inductive

logicprogramming

canbe usedto

build

a userprofile

andhowthisprofile

canbeusedto

predict

thefuture

behavior

of theuser.We describe

a first

setofexperiments

totestthese

hypotheses

and

present

directions

forfuture

work.

Introduction

Computers are probably the most flexible

tools

mankind ever built. However most users are not able

to optimally use all the features computers can offer

because they lack the knowledge about these features

or lack the time to optimally configure their hardware

and software. By combining domain knowledge about

potential optimizations with a user profile describing

how the user (mis-)uses the computer changes can

made to optimize the use. In this paper we focus on

the automatic construction of a user profile as a set of

rules in first order logic. Weimplementedthis technique

to model users of a commandshell in a Unix environment and present some preliminary results on using this

model to predict the user’s future behavior.

with a description of the state of the environment if

relevant. In the learning from interpretations setting

(Blockeel et al. 1999) we represent input as facts in

first order logic. The output is a general description

of the behavior of the user represented as a first order

logic theory.

In the rest of the paper we will focus on modeling a

commandshell user. Although this is a very old and

basic user interface, it is still widely used. The main

reason for selecting this user interface is that it’s very

easy to log the user’s actions and state of the environment whenusing such shells. It’s also very easy to adapt

the shell so that it uses the generated model. Modern

graphical user interfaces (e.g. the Microsoft Windows

2000 operating system) also keep a log of all actions

performed, which is comparable with what a user types

in at a shell. As a result, the technique we present here

can be used to generate user models from the logs of

modern GUIs as well. However, the main difference is

how the resulting model will be used.

We use the ILP system WARMR

(Dehaspe & Toivohen 1999) to discover first order association rules in the

user behavior. As input to the system we use the commands typed at the prompt of a Unix commandshell.

Inductive logic programming (ILP) (Muggleton

De Raedt 1994) combines machine learning with logic

programming. ILP systems take as input a set of examples and produces an hypothesis which generalizes

over these examples. Input as well as output are represented in a subset of first order logic. Also background

knowledge can be used. This is information provided

by a domain expert which can be used in building the

output hypothesis.

We canuseILP techniques

to automatically

builda

modelofthebehavior

of theuser.Theinputof thesystemconsists

of actions

performed

by theusertogether

Z cd rex

Y, emacs-n. -q aaai.1;ex

Thesecommands

areparsedandtransformed

intologicalfacts.

Wesplituptheinformation

in threefacts:

inI

formation

aboutthecommandused,theswitches

used

andtheattributes

(suchas filenames).

Thefirstargumentof eachof thesefactsis a uniqueexample

identifierwhichindicates

theorderin whichthe command

was entered,

thesecondargument

of switch/3

andattribute/3

predicates

indicates

theorderof switches

and

attributes

withinthe commandbecausethe ordermay

be important

(e.g.in thecp (copy)-command).

In

it’spossibleto submitmultiplecommands

one input

line(usingthe pipe-symbol

’--’)in sucha way that

theresult

of thefirstcommand

isusedas theinputfor

thesecondetc.To incorporate

thatin our knowledge

representation

schemewe caneitherintroduce

a fourth

Copyright

© 2000,American

Association

forArtificial

Intelligence

(www.aaal.org).

Allrights

reserved.

la switch

is a parameter

provided

toa command

to modifyit’sbehavior

Inductive

Logic Programming

5O

fact which takes as argument the identifier of a piped

command, or we can add a third boolean argument to

the commandfact. This feature is not yet included in

the prototype system.

For each rule we know the accuracy of the rule, the

3)

deviation (an indication of how’unusual’ the rule is

and the number of examples which obey this rule.

Experiments

command(1,’cd’).

attribute(1,1,’tex’).

command(2,’emacs’).

switch(2,1,’-nw’).

switch(2,2,’-q’).

attribute(2,1,~aaai.tex’).

To test the quality of the generated user models we performed tests in which we use these modelsto predict the

next commandthe user will type based on a history of

5 previous commands. This raises the problem of how

the user model can be used to predict the next command a user will use, because in most situations more

Nowwe can start looking for regularities in these exthan one rule in the user modelwill be applicable, often

amples using WARMm

However, within one example

with different conclusions. Therefor we tested 9 strateone can only find relations between a commandand

gies for selecting a rule to predict the next command:

it’s attributes, a command

and it’s switches or between

using (the prediction of) the rule with the highest acswitches and attributes. Interesting rules describe recuracy, the highest deviation, the highest support, the

lations between a commandand the previously used

commandwhich was predicted the most often, the comcommands.WARMR.

doesn’t automatically take into acmand with the highest accumulated accuracy, accumucount these relations, but using background knowledge

lated deviation or accumulated support. Wealso used

we can inform WARMR

about these relations by definthe commandwith the highest product of accuracy and

ing relations such as nextcommandand recentcommand.

deviation, and with the highest accumulated product of

Background information is represented as Prolog prothese two statistics.

grams, see (Bratko 1990) if you are unfamiliar with the

In this experiment we used data from real users.

used Prolog notation.

Some of the commandstyped by the user don’t exist

(errors), other are only very rarely used. Weinstructed

nextcommand(CommandID,Nextcommand)

the system to only learn rules to predict commands

NextID is CommandID

- 1 ,

from a set of 30 frequent occurring commands4. We

command(NextID,Nextcommand).

logged the shell use of 10 users, each log consisting of

recentcommand(CommandID,Recentcommand)

approximately 500 commands. Wesplit up each log in

command(RecentCommandID,Recentcommand),a training set consisting of 66%of the examples, the

Recentborderis RecentcommandID

+ 5 ,

remaining examples were used as a test set. We perRecentborder> CommandID.

formed two experiments. In a first experiment one general user model was built from the combined training

Wecan also use this background knowledge to ’endata of all the users and we tested this general model

rich’ the data that’s available. One type of background

on the test data of each user individually. In a second

knowledge is defining some sort of taxonomy of comexperiment we build an individual user model for each

mands(emacs is an editor, editors are text tools, ...).

user based on her training data and tested this on the

Other information can be provided as well (is this new

test data for that user.

or old software, does it uses a GUI, network connection,

The first experiment (’global’-column in table 1) conor other recourses, ...)

firms that the most intuitive rule selection method

(namely selecting the rule with the highest accuracy

command(Id,Command)

on the training set (maxacc)) leads to the best results:

isa(0rigcommand,Command),

36.4% of the occurrence of frequent commandswas precommand(Id,0rigcommand).

dicted correctly 5. Using the rule with the highest deisa(emacs,editor).

viation (maxdev), with the highest product of accuisa(vi,editor).

racy and deviation (maxpro) and with the highest acWhenall input data is converted and all useful backcumulated product of accuracy and deviation (accpro)

ground knowledge is defined, WARMR

calculates the set

all perform almost as well. A clearly bad rule selecof rules which describes all 2 frequent patterns which

tion method is selecting the commandwhich is prehold in the dataset. These rules can contain constants

dicted by the highest numberof applicable rules regardas well as variables.

less of there accuracy or deviation (count), resulting

IF command(Id,’ls’)

THENswitch(Id,’-l~).

IF recentcommand(Id,’cd’)

ANDcommand(Id,’ls’)

THEN nextcommand(Id,~editor~).

3the proportional difference betweenthe numberof examples which obey this rule and the number of examples

whichwouldobey this rule in a uniform dataset

4Is, cd, exit, pine, rm, vi, more, pwd,dir, make,cp, ps,

finger, telnet, javac, export, pico, tt, lpq, elm, man,java,

rlogin, w, my, grep, logout, less, ssh and mail

Sail percentages are averagesover the 10 users

2we have to provide a language bias which defines the

space of rules we are interested in

5]

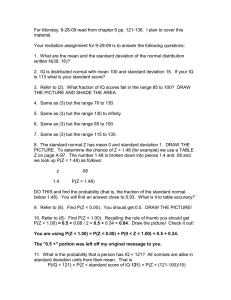

method

maxacc

maxdev

maxpro

maxrul

accacc

acedev

accpro

accrul

count

global

36.4

34.8

36.0

28.3

32.5

34.0

35.5

30.2

20.5

specific

44.7

43.3

45.3

34.4

41.3

40.1

42.5

40.0

31.2

Table 1: Prediction accuracies for different rule selection methodsand for global as well as user specific models

a 20.4% accurate prediction. Rule selection methods

based on accumulated accuracy (accacc), accumulated

deviation (accdev), predictions from rules which cover

most examples (maxrul) and commandswhich have the

highest accumulated number of covered examples in the

training set (accrul) all perform in between.

The second experiment -- in which user models are

built for each user individually -- shows the same results concerning the rule selection methods: maxacc,

maxdev, maxpro and accpro perform well, count performs bad and the others are in between. The prediction accuracies are significant higher than in the first experiment, and this for all rule selection methods. This

shows that constructing user models for each individual separately is beneficial for the resulting user model

and confirms previous results in the domainof building

user models for the browsing behavior of users (Jacobs

1999).

Future

Work

The system and experiments described above are only

preliminary work. It illustrates that it’s possible to

learn user models from logs, and that it’s beneficial to

learn individual models for each user. However, the

system still needs improvements to become practical

useful.

In both experiments the best rule selection method

doesn’t outperform the other methods: for some users

other methods perform better than the method with

the overall highest accuracy. This information could be

used as input for a meta, learning task: which type of

rule selecting method is best suited for which type of

user model. An other possible improvement of the rule

selection method is by defining new methods as combinations of the above methods (the product of accuracy

and deviation is already an example of such a method).

Voting or weighted voting techniques could be useful.

The rules that the system builds up till now only

predict the commandthe user will use. Often the parameters ¯ the user provides (such as filenames) can

predicted as well. Since we use inductive logic programming as a induction technique, it’s easy to adapt the

system such that rules can be constructed which not

only predict the commandbut also (some of) the parameters, either as constants or as variables (in this way

linking them to parameters of previous commands).

Wefocused on how to generate the user model and

how to select rules from this model. A next step is the

actual use of these models to aid the user. An obvious

use of these models is intelligent commandcompletion

in which the computer tries to complete the command

the user is typing. With these models this is even possible before the user has typed the first character of

the new command!But other possible more useful applications of the models exist. One such application

is the automatic construction of useful new commands

(macros). The system can do this by selecting a ’good’

rule (for example a rule with high accuracy and deviation) if command(A) then nextcommand(B) and

ating a commandC which just executes A and then B.

Another application of this user modelis in giving the

user information about howhe (mis)uses the shell. The

system could e.g. detect that the user often removes

file A after copying file A to B. The system can then

inform the user that it’s more efficient to use the movecommand.

The log file that the system uses to induce a user

model lacks information which can be useful. Up till

now the file only contains a history of commands,but

no information at all about the state of the environment. Wecan inform the system by including information such as the date, the time, the workload of the

machine and the current directory at the momentthat

a commandis invoked in the log file. This information

can then be used in the construction of new rules.

Finally we considered the user model as static. In reality a user model changes often, so our system should

build user models in a non-monotonic incremental way.

This can be achieved with the current system by keeping a window on the log file and use those examples

to construct a user model from scratch each time. But

this is clearly a very inefficient approach and a truly

incremental system would speed up the user model construction/maintenance.

Conclusions

Webriefly described howwe can use the inductive logic

programming system WARMR

to build user models and

discussed the benefits of this approach: the use of background knowledgeto provide Ml sorts of useful information to the induction process and an expressive hypothesis space (horn clause logic) which allows for finding

rules that contain variables. Weillustrated this in the

domain of commandshells. Wealso presented 9 strategies to select rules from this model for prediction. We

constructed a global user model as well as individual

models for each user and tested their predictive accuracy on real user data, which indicated that individual

models perform significant better than a global model.

In future work we have to extend the system to predict the parameters of the commandsas well, improve

52

the rule selection method, incorporate more information about the environment and look for a truly incremental construction of the user model. Finally more

attention should be paid to the use of the model to

improve the user interface.

Acknowledgements:

The author thanks Luc Dehaspe for his help with the WArtMrtsystem and Luc De

Raedt for the discussions on this subject. Nico Jacobs

is financed by a specialization grant of the Flemish Institute for the promotion of scientific and technological

research in the industry (IWT).

References

Blockeel, H.; De Raedt, L.; Jacobs, N.; and Demoen,

B. 1999. Scaling up inductive logic programming

by learning from interpretations.

Data Mining and

Knowledge Discovery 3(1):59-93.

Bratko, I. 1990. Prolog Programmingfor Artificial

Intelligence. Addison-Wesley. 2nd Edition.

Dehaspe, L., and Toivonen, H. 1999. Discovery of frequent datalog patterns. Data Mining and Knowledge

Discovery3 (1) :7-36.

Jacobs, N. 1999. Adaplix: Towards adaptive websites.

In Proceedings of the Benelearn ’99 workshop.

Muggleton, S., and De Raedt, L. 1994. Inductive logic

programming : Theory and methods. Journal of Logic

Programming 19,20:629-679.

53