Hypertext Summary Extraction for Fast Document Browsing

advertisement

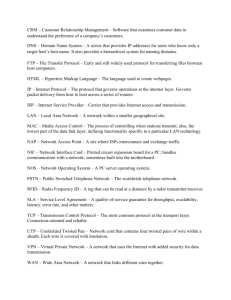

From: AAAI Technical Report SS-97-02. Compilation copyright © 1997, AAAI (www.aaai.org). All rights reserved. Hypertext SummaryExtraction for Fast DocumentBrowsing Kavi Mahesh Computing Research Laboratory (3CRL) NewMexico State University Las Cruces, NM88003-8001 USA mahesh~crl.nmsu.edu http://crl.nmsu.odu/users/mahesh/papers/hypex-s-mmaryhtml importance). The most interesting pieces should be most easily accessible to the user and can be considered to constitute the snmmaryof the document.Less interesting (~ moredetailed) pieces should still be accessible but hidden behind more interesting ones in multiple layers of detail. In this view of summarization,each layer can be considered a snmmaryof the more detailed layer immediately behindit. Abstract This article describes an application of Natural Langnage Processing (NLP) techniques to enable fast browsing of on-line documentsby automatically generating Hypertext s~lmmaries of one or more documents. Unlike previous w~k on summarization, the system described here, HyperGen,does not produce plain-text snmmariesand does not throw awayparts of the document that weren’t included in the summary. HyperGenis based on the view that snmmarization is essentially the task of synthesizing Hypertextstructure in a documentso that parts of the document"important" to the user are accessible up front while other parts are hiddenin multiple layers of increasing detail. In fact, HyperGengenerates short descriptions of the contents and rhetorical purposes of the hidden parts to label the Hypertext links between the s~lmmary and the different layers of detail that it generates. A prototype HyperGensystem has been implementedto illustrate the techniques and demonstrate its usefulness in browsing World Wide Web documents. I. Summarization as Hypertext eration Linkingthe different pieces and layers together in a Hypertextstructure enables the user to navigate to the different parts of the documentas desired. Labeling such Hypertextlinks with a short phrase or two indicates to the user what lies behind a link. In HyperGen,a prototype system that generates Hypertext surnrnaries of ~nslish and Spanishtexts, these labels are in fact very short summaries of the pieces of text that lie behind them. Each label snmmarizesthe content and/or the perceived rhetorical purpose(e.g., illustration, historical background. comparison,explanation, etc.) of one 0¢ morepieces of text. Users can "open" a hidden piece and "zoominto" any part of the documentif its label suggests matter of interest to them. Hypertext links in a HyperGensnmmaryneed not be limited to other parts of the samedocument.Suchlinks can in fact function as cross-links to related parts of other documents,even those in other languages. Suchcrosslinks are generated by HH0erGen using keywordsthat it recoglfizes while snmmarizingthe documentsinvolved. Gen- There is no correct algorithm for snmmarization.What belongs in a snmmaryextracted from a documentis determinedby the ne~s. likes, and stylistic preferences of users and the tasks they are performingusing the summary. Whythen should a snmmarizatiou system decide what to include in a snmmaryand what to throw away7 Onthe other hand. not throwingawayany part of the documentdefeats the very purpose of snmmarization, namely,fast browsingof documents.It seemsthat in general the best wayto snmmarizea documentis to assign to each piece of the documenta level of interestingness (or HyperGenhas been developed under the belief that a summarizationsystemthat (i) runs muchfaster than reading the full document,(ii) is robust, (iii) portable, richly customizable, and (v) provides excellent aids for the user to navigate throughthe difference parts of a document, will be useful in practice even if the summaries are less than ideal. HyperGenis intended for document browsingscenarios over any large collection of docu- 95 ments,such as an on-line library or the WorldWideWeb, whetherthe documents themselvesare in plain or Hypertext. Current Webbrowsersand search engines merely followpredefinedHypertextlinks andretrieve entire documentsby searchingfor keywordsin pre-c(ymputed index databases. HyperGen goesbeyond thistechnology and dynamicallyconstructs Hypertextpresentationsof documentsat mtdtiplelevels of detail, frombrief snmmoxies to entire documents.This viewof summarization as a meansfor documentvisualization througha meremanipularionof Hypertextfinks betweendifferent parts of documentspromisesto deliver the full potential of Hypertext documentcollections such as the WWW to the large community of Internet users. 2. Example Figure 1 showsa Hypertextsummary extracted by HyperGen. TheWebbrowserscreen on the left showsthe mainsllmmaryalong with automaticallygeneratedkeywordsin the document and several labels in between paragraphsthat haveHypertextlinks to parts of the documentnot includedin the summary. Theparagraphs shouldin fact be considered as "callouts"("highlights"or "pullquotes")extracted fromthe document.Thelabels, Hypertext links, andthe separatefiles for the hiddenparts are all automaticallygeneratedby HyperGen. Thepart of the document hiddenbehindthe first label ’TheDealer Network Option"is shownonthe right half of Figure1. It canbe seenthat this label is in fact a sectionheadingin the document.Otherlabels, such as "RankXerox’s experfise...the chaos..." are automaticaUy extractedfrom oneof t he"mostinteresting"sentencesin the hiddenpart. showsthe basic architecture of the HyperGen snmmarizarion engine. HyperGen has adopteda robust, graded approachto buildingthe coreengineby incorporatingstatistical, syntactic, anddocument-structure analysesamongother techniques.This approachis less expensiveandmore robust than a snmm~izaflon techniquebasedentirely on a single method.HyperC~n is designedin sucha waythat as additionalresources,suchas lexical andother lmowledgebases or text processingand machinetranslation engines, become available, they canbeincorporated into HyperGen’s architecture toincrementally enhance its capabilities andimprovethe quality of summaries.For example, there isnomorphological analyzer (or stemmer) in the current implementation of HypexGea. If available, it can be addedeasily as shownin Figure2. Someof the mainmodulesin HyperGen are (i) document structureanalysis,(ii) sentenceselection,(iii) sentence simplification,(iv) summary construction,and(v) customization. 3.1. Document Structure Analysis Document structure analysis is importantfor extracting the topic of a text (PaiceandJones,1993;SaltonandSinghal, 1994;Salton,et al, 1995).In suchan enalysis,for example,rifles andsub-rifles wouldbe givena more impcctantweightthan the bodyof the text. Similarly,the introductionandconclusion forthe text itself andfor each section are moreimportantthan other paragraphs, andthe first andlast sentencein eachparagraphare more importantthan others. Theapplicabilityof these depends, of course, on the style adoptedin a particular domain, andon the language:the stylistic structureandthe presentationof arguments vary significantly across genres andlanguages.Structureanalysis mustbe tailored to a particular type of text in a particular language.Document structure analysis in HyperGen involvesthe following subtasks: ¯ Document Structure Parsing: HyperGen assumes that documents haveI-/FML markers forheaders, secflon headings, andparagraph breaks. Itseparates the rifle, sections andsubsection headings, andother data andgraphics from paragraphs oftext. ¯ Sentence Segmentation: HyperGen breaks each paragraph into sentences bylooking forsentence boundaries. Ituses a stop list todeal with special cases such ascertain uses of’.’inabbreviations. Someof the labels are in fact links to large chunksof the documentwhichoften contain several subsections. These chunksare in fact summarized again by HyperGen to create multiplelayers of intermediatedetail. HyperGen is currentlybeingextendedto producelabels describingthe rhetorical purposeof a pieceof text. For example,rhetorical categoriesare identified andpresentedusingphrases such as "comments""analysis" and "example"Optionally, any multimedia elementsin the document will also be retained by HyperGen in the appropriateparts of the HypertextsnmmJtry. 3. Generating Hypertext Summaries Thecore snmmarizarion problemis taking a single text andproducinga shorter text in the samelanguagethat containsall the mainpointsin the input text. Figure2 96 Hidden Subpart 1 0069:Ajoint venture betweenthe XeroxCorporation.... Dat4,:December 27, I~, Thtrsda¥,LateE~on- F~] Soerco: CopydBht (c) 199U TheNew York’r’~tcsCompany ~ Ke~words in Document: state owned Rank Xerox Europe Eastern ............................................................................... Nobody hastotenSe..p’pLeh~gt’~ber how dl~.cul’( It Is todobusiness ~t aimed thai is In ber,vce.n Cortm~u~ts~n mxd capit~sm. H~ hastwochoices forse~l~$ Xerox pEotocopi~s in Czechoslovakia, andas far ashcisc~c~x~l, botharcrott=n. Thet~st Lsto cont~cdohxgl~sines$ wlOx the twoc~ingstat~-ovmcd comp~cs thathadheldthem~nopoly for ~trtbuttn$ offlc~cqulpmcnt tractorthe C~orm’mmists. Oivea how bureaucratic tutti Inefficient the),have always been,Mr.Lehngruber, them’~ dir~qor of Htmsary and~z~chos]ovakia for ]),ankXerox Ltd.,would not m~t~ar~mom~a th~ ~r~e. ........................................................................ The Dealer Network Option AJoint venture between the XeroxCorporation and the RankOr~udzation of Britain, RankXeroxsells Xeroxphotocopiers outside the Americasand has been Involvedin Eastern Europednce 1.964. RankX~ox’s_ex~rdse...the chaos...now In theyenr ended Oct. RankXeroxsold about $50 mlIllon In equipmentto Eastern Europeand about $50n’dlllon to the Soviet Union. C~echoslovalda....whege ...there...a Beck to the~v.,~,nor~ The Dealer Network Option Thesecond choice-theonehe would prefer-- Is to createa network o! Ind~eadent d~v]e~. The prob]cm, E~s okl,is thattheonlyptopicwiththenecks s ~c~pite~ er~thos c whowerecorruptCormntmist ot~alsor thos~involved in money-changh~g or e~e~b]ack-~ act~vitles. "Nobody~t thefts crookshave~emoney7 Mr. Le~’q~ruber said,"~dthequestion L~whether youas a re~ectab]e company wanttodobusiness withthecr~oks." mere RmtkXeroxestimates that it has about 70 percent of the marketin the Soviet Unionand about one-third in Eastern Europe, where its share ranges from 10 percent In Hupry to about 50 percent In Romanhc Plant in Soviet Union With big stato-owned compenlesbeing broken up throughout Eastern Europe and with small budnesses expected to multiply rapidly, RankXerox-- Hke other Westerncompanies -- Is also trying to create a networkof dealers and re’vice or~mdmtlons to sex, re the newlydecentralized mmket. "Withthe secret police some," Mr. Ldmgrubersaid, "contact xvith Western °’ compiles is no longer on the no-no list. Figure1. A Hypertextsummary generatedby HyperGen is shownon the left. Onthe right is one of the parts of the documenthiddenunderthe label "TheDealerNetworkOption"Thedocumentis 200 lines long. 97 Stop List ~ SntncR ord Frequency and’~ eyword Analysis ~] (Sentence Seleclion~ / KMorphologicai Analysis / Figure2. Architectureof HyperGen showingthe basic steps in extracting the Hypertextsummary of a document. Notethat only selected sentencesare parsed. 3.2. SentenceSelection Text Structure Heuristics: HyperGen uses heuristic rules basedon document structure to rank the sentences in a document (see below).For example,different scoresare assignedto first sentencesof paragraphs,to single-sentenceparagraphs,andso on. In order to allowa multitudeof techniquesto contribute to sentenceselection, HyperGen uses a flexible method of scoringthe sentencesin a document by each of the techniquesand then rankingthemby combiningthe different scores. Text-structurebasedheuristics provideone 98 Finally, sentencesin the document are rankedbasedon the scores returnedby all of the abovetechniques.Highest rankingsentencesare selected to constitutea summaryof a givenlength(whichis either a default or set by the user). wayto rank and select sentencesin a document.Additional methodsincluded in Hype~are described below. 3.2.1. WordFrequencyAnalysis Thebasic techniqueis to sort the wordsin the docmnent by frequencyof occurren~within the documentand select a fewof the mostfrequentcontentwords(i.e., wordsother thanarticles, prepositions,conjunctionsand other closed-classwords).Sentencescontainingthose wordsget a score increment.Supportingprocesses neededfor wordfrequencyanalysis include: 3.3. Sentence Simplification Sentencesselected for inclusionin the s-mmary are often lengthyandcan be simplifiedto further shortenthe summary. HypexGen has implementeda novel "phrase dropping" (or parse-tree pluming)algorithmbasedon phrasestructure heuristics for F.n~lishandSpanish.For exampie, it dropsembedded clauses andright-branchin~ preposiricoal adjuncts. Figure3 showsan exampleof sentencesimplification. ¯ Morphological Analysis(Optional): Statistical analysis worksmovereliably on a text whichhas been morphologically processedto recognizethe same wordwithdifferent inflections. Thecurrent implementationof HyperGen uses simple string matching for countingandccanparingwords. This method requiresa robust, shallowparser, at least a partial onethat identifies phraseboundaries,with good coverage.Thesimplification moduleis currently being integrated with the sentence selection moduleso asnot do dropparts that weredeemed importantby the selection scores. Thesnmmary shownin Figure1 wasproducedwithoutsentencesimplification 3.2.2. CorpusStatistics Wordfrequencyanalysis can be enhancedby counting wordfiequenciesacross an entire corpusinstead of just withina document.Wordsthat havesignificantly higher frequencieswithin the document relative to the whole corpusare likely to indicatesignificantparts of the document.Suchtechniqueswill be integratedinto future enhancementsof HyperGen. Shallow Syntactic Parsing. A shallow syntactic analysis helpsnot only sentencesimplificationbut also the recognitionof importantsentencesandextractionof semanticallyrelevant parts of these sentences.For example, labels for Hypertext links are generatedbyextracting several nounphrasesfromthe highest rankingsentencein the hiddenpart attachedto the link. Syntacticanalysisis also usedin HyperGen to recognizethe rhetorical purposesof texts. Avery simpleparser has beenimplementedfor Englishusing only a closed-class English lexicon. This lexiconmerelyspecifies the part of speech of closed-classwordsanda few stop words.Theparser can correctly tag manynoun,verb, andprepositional phrasesin texts. HyperC~n is very fast in spite of usinga parser, since the parserrunsonly on selectedsentences. 3.2.3. Keyword andKeyword Pattern Analysis Preset or user-specifiedkeywords fromeither the domain or to focusonparticular style can be usedto introduce elementsof targeted snmmarizaticn.Keyword-based rankingand selection has already beenimplemented in HyperGen. This will be further developedin the future to accept moreexpressivekeywordpatterns. Thenewcapability will enableother query-based IE_/[Rmodules to be integrated with HyperGen. Open-classwordsin document titles andsection headings are also treated as keywords. Sentencesin eachsection containingsuchkeywords are given score increments to boosttheir chancesc~ beingselectedfor inclusionin the summary. 99 Thursday, A group of bankers will assemble in Hong Kong to sign a @400million 12-year loan agreement to help fund local tycoon Gordon Wu’s dream of building 120-kilometre, six-lane "super highway"from the colony to Guangdong,capital of the neighbouring Chineseprovinceof Guangzhou, formerlyCanton. On a Figure 3. Sentencesimplification exampleshowingthe retained parts in boldfaceandthe droppedparts in a smaller font. 3.4. a label that links it to other piecesandsubpiecesof the document in the Hypertextsnmrnal T. SummaryConstruction Usingthe simplifiedparts of selectedsentences,a summaryis constrnctedby extractingthe corresponding parts of the sourcedocument.Anumber of issues such as capitalization andother punctuationmustbe addressedto renderthe 5nlmrnary andmakeit readable,especially whensentences havebeensimplified by droppingsome of their parts. In addition,the document title (if any)and informationabout the sourceand date of the document are presentedat the be~nnlng of a snmmary. Severalkeywords(frequent wordsor user specified keywords)are also presentedat the top of the snmms1T. F, uttLreversions of HyperGen will highlight occurrencesof the keywords in the bodyof the snrnmal T or the hiddenparts andprovide cross-links to other documents containingthe same keywords. Current workon HyperGen is developingalgorithmsfor identifyingthe rhetorical purposeof a paragraphby matching its sentencesagainsta glossaryof syntacticpatterns that act as keysfor the rhetorical purposes.The glossarycontainssyntactic patternsfor phrasessuchas "considerx" "illustrated by x" 3A.1. Hypertext Generation Eachcontiguouspieceof unselectedtext is writteninto a separate HTML file with Hypertextlinks to the appropriate point in the $11mmary (or the nextlayerin front of it, in general).Labelsare generatedfor these links as follows:if thereis a sectionheadingfor the hiddenpart, use it as the label; otherwise,select the highestscoringsentence(s)in the hiddenpart, parse them,simplifythem droppingphrasesandif theyare longerthan a preset maximum length, pick any keywordsor other noun phrasesin themto constructa label of lengthless thanthe maximum length. Theselabels are in a sense snmmaries of the hiddenparts andare intendedto indicate the main topic (or theme)of the hiddenpart of the document. If a hiddenpart is longerthan a (user cnstomizable) thresholdor has multiplesections, thenthe part is summarizedagain by Hypergen to create an intermediate layer of snmmary with hiddensubparts behindit. Thus the document is ultimatelybrokeninto individualparagraphswherethe contents of each unit is s.mmarized by Apartfrom Hypertextlinks betweena snmmary and different parts of a document,future versions of HyperGen will generateadditional navigationalaids by matching keywords and keytopic areas across documents in a collection. Results will be presentedin the Hypenext summaryby proviclin~an overallset of links to related documents as well as links to related documents basedon each keyword.Somesuch cross-links mayin fact be betweendocuments in different languages(if the keywordscan be translated by a machinetranslation module). 3.5. User Customization Users can customizeHypergenin the followingways: ¯ setting the length of the snmmary: relative to the length of the document or in absolute terms (number of charactersor sentences) ¯ specifyingkeywordsto use: e.g., "joint venture" ¯ specifyingthe numberof frequent or title wordsto find ¯ controlling sentencerankingheuristics: by adjusting the levels of preferencefor frequentwords,keywords,title words,first sentencein a paragraph,etc. ¯ extendingthe stop list: by specifyingwordsto be ignored In essence, all aspects of HyperGen’s summarization behaviorcan be controlled and customizedby a user througha friendly graphicalinterface. Futureversionsof i00 HypevOen will allow users to customizerhetorical purposeidentification by addingnewphrasepatterns to lock for or by telling HyperGen to focusen particular rhetorical types. marionthat is of interest to the useror is relevantto the taskonhand),it is critical for sucha system to indicateto the user the contentsof the unselectedparts of a document.HyperG-en providesthis newfunctionality. 4. HyperGen:The System 6. Future Work:Further Uses of NLP HyperGen has beenimplemented entirely in Java and can be usedin conjunctionwith a Web browser.It is fast, robust, modular,portable,essentiallymultilingual,and simple(e.g., doesnot requirecompletelexicons,full parsers, etc.). It has beentested on severalEnglishand Spanishnewsarticles. Nousability studyhas beenconductedso far to determineif HyperGen s1)mmarleS are in fact useful for Webbrowsingor other document filtering applications. Anewversion of HyperGen with rhetoricadpurposelabels will be developedanddemonstratedduring the symposium. Results fromtesting HyperC~n on larger collections of documents andpreliminary usability studies will be reportedduringthe symposium if possible. Thissectionbriefly outlinesadditionalapplicationsof NLPtechniquesto improvethe quality of HyperGen’s summaries.Thecore s-mmarizationmethodoutlined abovehas the advantageof simplicity, doesnot require a languagegenerator, anddelivers grammatical and fairly readable s~)mmaries even in untargetedsummarization situations. However, it suffers fromseveralproblems whichlead to importantresearchissues: ¯ Pronouns,reference, anaphora,etc.: Whenthe selected sentencescontainpronounsor other references, anaphora,or ellipsis, the requiredcontextmay be missing in the snmrnary, thereby hurting its read- ability and ease of understanding.HyperGen needs simplereferenceresolution techniquesto overcome the problemsandheuristics for avoidingsuchproblemsby not selecting sentencesthat are beyondthe capabilitiesof the resolutiontechniques. 5. Related Work Previousworkin summarization (also called automatic abstracting) has addressedprimarilythe simplerproblem of producinga plain-text snmmary of a single document in the samelanguage(Cohen,1995;Lnhn,1958;Palce, 1990;Pinto Molina,1995;Prestonand Williams,1994; Saltonet al., 1994).Document retrieval andclassification efforts, on the other hand,haveproduceda multitudeof techniquesfor selectinga subsetof an entire collectionof documents.However, they simplypresent the retrieved documents in their entirety, providinglittle supportfor quicklydigestingthe contentsof an individualdocument. Informationextraction (Cowieand Lehnert,1996)systemshaveassumed that whatis of interest to users is known a priori in the formof templates.Mostworkin natural languagegenerationhas focusedon generating s~mrnaries of data, not texts (Kalita. 1989;Kukich,et al, 1994; McKeown. et al, 1995;Robin. 1994). Workin machinetranslation has assumed for the mostpart that sourcetexts mustbe translatedin their entirety. Akey drawback of the aboveresearchis that they assumed that a document mustbe either processed(e.g., translated, retrieved,etc.) in its entirety or that it mustbe summarized whilethrowingawaythe unselectedparts. Bybringing someof these areas together, HyperGen has attemptedto exploit NLPtechniquesto improvethe utility of Webbrowsers.Sincethere is no algorithmfor summarizationthat guaranteesa summary that always meets the needsof a task (i.e., nevermissesona pieceof infor- i01 ¯ Poor flow in summary: Since the stlrnmary is put togetherby conjoiningdifferent pieces of the source text, it is not likely to havea goodflow.However, this maynot be a critical problemfor HyperGen since it does not producea plain-text snmmary. HyperCw.n s~mmaries are inherently Hypertextsand are interspersedwith hyperlinksto other pieces of documents. Further applications of NLPtechniquesmayyield benefits in severalareas: Ontology-based content classification: Futureversions of HyperGen will attemptto classify the topic (or "theme")of a piece of text usinga broadcoverageontology, such as the Mikrokosmos Ontology(Carlsonand Nirenburg,1990;, Maheshand Nirenburg,1995; Mahesh, 1996). Wordsin a text will be mapped to ontologicalconcepts using a semi-automaticallyacquiredmappingfrom WordNet entries to conceptsin the Mikrokosmos Ontology. Anappropriate clustering algorithm will beused to select the mainconceptfromamongthe conceptsto whichwordsin a piece of text are mapped.Sucha methodis expectedto yield better labels that snmmarize hiddenparts than current onesoften generatedby simplifying a sentencein the hiddentext. Translationissues: Aglossary-based(or other fast and simple)translation enginecan be usedto translate necessary parts of documents in other languagesto F~nglish. Theentire document shouldbe translated only if demanded by the user. Differentmethodsfor interleaving ,~lmmarization andtranslation operationscan be explored to minimize translation efforts. For example,the source text maybe summarized in the source languageand the salmmary translated to English. Unselectedparts may sometimes needto be translated to Englishbef~elabels canbe generatedto identify anddescribetheir topic areas andrhetorical purposes. for manyinsightful comments anddiscussions. Self-evaluation: Everydecision madeby HyperGen is basedon scores assignedaccordingto a variety of usercnstomizableparameters.Self-evaluationcan be addedto this designby attachingsimpleevaluationproceduresto each of the parametersso that a measureof the system’s confidenceis determinedwhenevera decision is made anda score is assignedalong someparameter.Asimple algorithmcan be developedfor combiningindividual measuresof confidenceso that an overall measurecan be presentedto the user. Suchconfidencemeasurescan be integratedinto the labels andsummaries by generating Englishphrasessuchas "certainly about""appearsto be" or "not sure about"to expressHyperGen’s evaluation of its owns, mmarization decisions. Cowie,J. andLehnert. W.(1996). "InformationExtraction?’ Communications of the ACM, special issue on Natural LanguageProcessing, January 1996 References Carlson,L. and Nirenburg,S. (1990). "WorldModeling for NLP"Centerfor MachineTranslation, Carnegie Mellon University, Tech Report CMU-CMT-90-121. Cohen,J.D. (1995). "Highlights: Language-and domainindependentautomaticindexingterms for abstracting" Journal of the American Societyfor InformationScience, 46(3): 162-174. Kalita, J. (1989)."Automatically generatingnatural languagereports." International Journal of Man-Machine Studies, 30:399-423. Kuldch,K., McKeown, K., Shaw,J., Robin,J., Morgan, N., andPhillips, J. (1994)."User-needs analysis and design methodology for an automateddocumentgenerator." In Zampolli,A., Calzolari,N., andPalmer,M., editors, CurrentIssues in Computational Linguistics:In Honorof DonWalker. KluwerAcademicPress, Boston, MA. 7. Conclusions H_P.(1958)."Theautomaticcreation of literary abstracts" I.B.M.J.of Res. Dev.,2(2): 159-165. Lnhn, Thereis an overloadof on-line documents on the users of the Intemet. Currentsearch enginesandbrowsersare limitedin their capabilitiesto easethis overloadandto help users quicklylind anddigest the informationthey needfromthe large collectionof arbitrarily irrelevant documentsavailable to them. TheHyperGen system describedin this article attemptsto remedy this situation by applyingNLPtechniquesto enhancethe capabilities of a Webbrowser.HyperGen automaticallyconstructs Hypertextsummaries of documents so that users can see interesting subparts of documents in a summary up front and, at the sametime, see labels summarizing the parts that are hiddenbehindthe mainsummary in layers of increasingdetail. Theauthorfirmlybelievesthat these ~mmaries are moremeaningfulanduseful than the first fewlines of documents typically displayedin the results froma state-of-the-art Netsearchengineandthat these capabilities cannotbe achievedwithoutthe use of NLP techniques. Mahesh,K. andNirenburg,S. (1995). "Asituated ontologyfor practical NLP."In Proceedingsof the Workshop on Basic OntologicalIssues in Knowledge Sharing, InternationalJoint Conference on Artificial Intelligence (IJCAI-95), Montreal,Canada,August1995. Mahesh,K. (1996). "Ontologydevelopmentfor machine translation: Ideologyand methodology." Technical Report, ComputingResearchLaboratory, NewMexico State University, LasCruces, NM. McKeown, K., Robin,J., and Kuldch,K. (1995). "Generating concisenatural languagesummaries."Information Processingand Management (special issue on s,,mmarizatiou). Paice, C.D.andJones, P.A. (1993)."Theidentification importantconceptsin highlystructuredtechnical papers?’ Proc.of the 16th ACM SIGIRconference, PittsburghPA,June27-July1, 1993;pp.69-78. Acknowledgments I wouldlike to thank Sergei NirenburgandP~miZajac 102 Palce, C.D.(1990)."Constructingliterature abstracts computer:Techniquesand prospects" Iuf.Proc. & Management 26(1). 171-186. Pinto Molina,M.(1995). "Documentary abstracting: towarda methodological model."Journal of the American Societyfor InformationScience,46(3):225-234. Preston, K. and Williams,S. (1994). "Managing the informationoverload: Newautomaticsummarization tools are goodnewsfor the hard-pressedexecutive." Physicsin Business,Institute of Physics,June1994. Robin,J. (1994). "Revision-based generationof natural languages, mmariesprovidl,~ historical background: Corpus-basedanalysis, design, implementation and evaluation." Ph.D.Thesis, Computer ScienceDepartmerit, ColumbiaUniversity, TechnicalReportCUCS034-94. Salton,G., Allen, J., Buckley,C. andSinghal,A. (1994). "Automaticanalysis, themegeneration, and summarization of machine-readable texts." Science,264:14211426. Salton,G., Sing,hal, A., Buckley,C., andMitra,M. (1995)."Automatic text decomposition using text segmentsand text themes"TechnicalReport, Department of Computer Science,CornellUniversity,Ithaca, NY. Salton, G. andSinghal, A. (1994). "Automatic text theme generationandthe analysisof text structure."Technical Report TR94-1438,Departmentof ComputerScience, ComellUniversity,Ithaca, NY. 103