REASON: NLP-based Search System for the ...


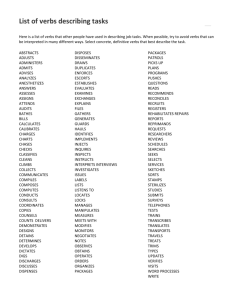

From: AAAI Technical Report SS-97-02. Compilation copyright © 1997, AAAI (www.aaai.org). All rights reserved.

REASON: NLP-based Search System for the WWW

Natalia Anikina, Valery Golender, Svetlana Kozhukhina, Leonid Vainer, Bernard Zagatsky
LingoSense Ltd., P.O.B. 23153, Jerusalem 91231, Israel
valery@actcom.co.il
http://www.lingosense.co.il/ai_symp_paper.html

Abstract

This paper presents REASON, an NLP system designed for knowledge-based information search in large digital libraries and the WWW. Unlike conventional information retrieval methods, our approach is based on using the content of natural language texts. We have elaborated a searching strategy consisting of three steps: (1) analysis of natural language documents and queries, resulting in adequate understanding of their content; (2) loading the extracted information into the knowledge base and in this way forming its semantic representation; (3) query matching proper, consisting in matching the content of the query against the content of the input documents with the aim of finding relevant documents. The advanced searching capabilities of REASON are provided by its main constituents: the Language Model, the Knowledge Base, the Dictionary and the Software specially developed for content understanding and content-based information search. We characterize the particularities of the System and describe the basic components of the formal linguistic model, the architecture of the knowledge base, the set-up of the Dictionary, and the main modules of the System - the module of analysis and the logical inference module. REASON is an important contribution to the development of efficient tools for information search.

Introduction

The WWW is rapidly expanding and becoming a source of valuable information. At the same time this information is poorly organized, mixed with noise and dispersed over the Internet. Attempts to find the information using currently existing search engines such as Alta Vista, Lycos, Infoseek, etc. lead to unsatisfactory results in many cases.
Usually, the response to the search contains a large number of documents, many of which are totally irrelevant to the subject of interest. On the other hand, documents that are relevant may be missing if they do not contain the exact keywords. Refining the search by using "advanced" Boolean operations usually results in a few, or even no, documents. Serious problems are caused by homonymy, ambiguity, synonymy, ellipsis, and the complex semantic structure of natural language.

Let us consider such relatively simple queries as:

Q1. Supercomputer market data
Q2. Digital users group in England
Q3. parallelization tools

It is very difficult to find documents relevant to these queries using traditional keyword search. Even more sophisticated approaches based on different thesauruses do not allow matching semantic relations between different concepts in queries and texts.

In order to solve the above-mentioned search problems we designed an NLP-based search system, REASON. This system understands free-text documents, stores their semantic content in the knowledge base and performs semantic matching during the processing of queries. This paper briefly describes the main principles of the System.

General Principles and System Architecture

REASON is an NLP-based information search system which is capable of:
• understanding the content of natural language documents extracted from large information collections, such as the WWW;
• understanding the content of the user's query;
• building a knowledge base in which the extracted information contained both in the documents and the query receives an adequate semantic representation;
• performing information search which consists in matching the content of the query against the content of the documents;
• finding relevant documents or giving another kind of response (e.g. answering questions).

REASON does not use the keyword matching method. Instead, the System applies more sophisticated matching techniques which involve a number of reasoning operations (cf. Haase 1995):
• establishing equivalence of words and constructions,
• expansion of the query on the basis of associations between concepts (definitions, "general-specific" relations and so on),
• phrase transformations (based on "cause-result" connections, temporal and local relations, replacement by paradigmatically related words),
• logical inferences (based on cross-references in the text, restoration of implied words).

The main constituent parts of REASON are: the Language Model, the Dictionary, the Knowledge Base and the Software. The present version of REASON processes texts in the English language. The System software is written in C and runs on different platforms. The interface to the Web is implemented in Java.

Language Model

The needs of natural language processing call for a consistent, unambiguous and computer-oriented model of linguistic description. A natural language, unlike artificial languages, abounds in ambiguities, irregularities, redundancy and ellipsis. In constructing the language model we proceeded from current linguistic theories, in particular, semantic syntax (Tesniere 1959), generative semantics (Fillmore 1969), structural linguistics (Chomsky 1982, Apresian et al. 1989) and the Meaning-Text Model (Melchuk & Pertsov 1987). We also make use of the experience of the "Speech Statistics" Research Group headed by Prof. Piotrowski (Piotrowski 1988).

Our language model includes four levels: morphological, lexical, syntactic, discourse. At each level we distinguish two planes: the plane of expression (forms) and the plane of content (meanings, concepts). The latter is considered the semantic component of language.

Morphological Level

In describing English morphology we applied the technique suggested by J. Apresian. For compact presentation of morphological information we used charts of standard paradigms of morphological forms and masks for non-standard stems. Every word stem is ascribed a certain morphological class on the basis of three charts for verbs and two charts for nouns and adjectives.
We elaborated rules for revealing deep structure categories for verbs. Thus, we have learned to determine the real time and aspect meanings guided by the co-occurrence of adverbials of time (certain features are ascribed to each group of adverbs and prepositions of time) and by grammatical features of verbs (stative verbs, verbs of motion). This approach allows transfer to utterances in other languages, where the expression of tense-aspect relations is different. Our Model includes about 80 patterns which establish correspondence between form and content of verbal tense-aspect forms.

Lexical Level

All the words are divided into two kinds: major and minor classes. The former include conceptual classes (notional verbs and nouns) and attributive classes (e.g. adjectives and adverbs of manner, denoting the properties of concepts). Major word classes are open groups of words, while minor classes are closed groups consisting of a limited number of words. Major and minor classes are treated differently in text processing.

We have singled out about 200 conceptual word classes for verbs and 220 for nouns. Each word class is characterized by some common semantic or pragmatic feature, e.g. Word class 187 - "Sphere of human activity" (science, business), 204 - "Cessation of Possession" (lose). We have singled out about 150 attributive classes, e.g. Word class 407 - "Color" (white), 480 - "Character of action according to intention" (deliberate).

We differentiate between the names of properties (color, size, depth, price) and the values of these properties (red, big, deep, cheap). The names of properties (nouns), their values (adjectives) and units of measurement (nouns) are linked in terms of lexical functions (Melchuk & Pertsov 1987), which enables the System to paraphrase different statements and bring them to a canonical form.

Minor word classes play an auxiliary role. They include functional words, supra-phrasal classes, words expressing modality, probability, the speaker's attitude, and so on.
Supra-phrasal word classes comprise words indicating reference to the information contained in other parts of the sentence (also, neither), sentence connectors and sentence organizers: e.g. "to begin with" marks the starting point of the sentence, "by the way" and "on the other hand" develop the theme, "therefore" marks the conclusion, and so on. A special place is occupied by intensifiers of properties (too big, quite well), actions (liked very much), etc.

Our Linguistic Model reflects logical, extralingual connections and associations between words. Due to these relations, it becomes possible to join words into groupings on the paradigmatic axis (Miller 1990). We distinguish several types of paradigmatic groupings:

1. Quasi-synonymic groups
2. "General-specific" groupings
3. "Whole-part" groupings
4. "Cause-result" groupings
5. Conversives
6. Definitions
7. Lexical paradigms - "Nests".

Quasi-synonymic groups include words which are close in meaning and can be interchangeable in speech or, at least, in documents pertaining to a certain domain of science or technology. As an example, we could mention such quasi-synonymic groups as Qv3 (show, demonstrate, present, describe, expose) and Qn10 (developer, author, inventor).

"General-specific" groupings. Such a grouping consists of a word with a generic meaning and words with more specific meanings. For instance, "market" is a generalized term for the more specific "purchase", "sale", "orders", "supply".

"Whole-part" groupings. The name of the whole object can be used instead of the names of its components. For example, "America" can be used instead of USA, Canada; "processing" can be used instead of "analysis", "synthesis", and so on.

"Cause-result" groupings. Many concepts, especially actions and states, are connected by the "Cause-result" relation. We have revealed a number of such connections, e.g.: "produce" → "sell" → "order" (If a company produces something, it can sell this product and a customer can order it.)
Special rules are designed in order to reveal the role of the participants of the situation.

Conversives. Many verbs are associated on the basis of conversion. They form pairs of concepts (take - give, sell - buy, have - belong). In real speech the statements organized by these verbs are interchangeable if the first and the second (or third) actants of the predicate are reversed:

I gave him a book → He took a book from me.
They have a laboratory → A laboratory belongs to them.
The company sold us a computer → We bought a computer from the company.

There are rules stating correspondences between actants.

Definitions. Paradigmatic associations between concepts, based on the above-mentioned groupings, are most efficiently realized in definitions. Every definition states a certain equivalence of concepts. If definitions are loaded into the knowledge base, the associations between the components of the definition help identify statements expressing the same idea. We singled out a number of types of definitions:

1. Incomplete definition of the type Ns is Ng (specific is generic): DECUS is a worldwide organization.
2. Complete definition of the type Ns is Ng that (which)...: DECUS is a worldwide organization of Information Technology professionals that is interested in the products, services, and technologies of Digital Equipment Corporation and related vendors.
3. Incomplete definition of the type Attribute + Ng is Ng: A parallel computer is a supercomputer.
4. Complete definition of the type Attribute + Ng is Ng that...: A parallel computer is a supercomputer that is based on parallelization of sequential programs.
5. A list of junior concepts - Ng is N1s, N2s, N3s...: Market is sale, purchase, orders, supply...
6. N1 is the same as N2: Identifier is the same as symbol.
7. N1 is abbreviation of N2: COBOL is the abbreviation of Common Business-Oriented Language.

Nests. One of the most ingenious and useful ways of grouping words is their organization into lexical paradigms which we call "Nests".
Every Nest contains words of different parts of speech, but united around one basic concept (e.g. invest, investment, make (an investment), investor, etc.). Members of the Nest may be derived from different root morphemes, e.g. buy, buyer, customer, purchase, shopping. The main criterion is that the members of the Nest stand in certain semantic relations to the head of the Nest. Every Nest is viewed as a frame and its members occupy certain slots in it. Each slot corresponds to a specific semantic relation with the head of the Nest or sometimes with other slot-fillers.

The relations between the Nest members can be described in terms of lexical functions - LF (according to I. Melchuk). We have developed and adjusted the system of LF to the peculiarities of our Language Model.

We designed several types of Nests. Transitive verbs of action, for example, form a frame which includes 25 slots. In each particular case some of the slots may remain empty. Below we illustrate the Nest formed by the verb "produce":

Slot 1 - the verb of action: produce
Slot 2 - nomina actionis: production, manufacture (LF S0)
Slot 3 - perform the action of Slot 2: carry out (LF Oper1)
Slot 4 - the performer of the action: producer, manufacturer
Slot 7 - the generalized name of actant 2: product
Slot 13 - able to perform the action of Slot 1: productive (LF A0).

Semantic Features. The mechanism of semantic features (SF) plays an important role in the semantic and syntactic description of language. Within our Model every lexeme (hence, a concept) is assigned a specific set of SF which characterize its semantic content and often determine its syntactic behavior. The co-occurrence of words is often determined by their semantic agreement.

We distinguish about 200 SF for nouns, 180 SF for verbs and 50 SF for adjectives. Some examples will suffice.
SF for nouns: "Relative" (son), "Economic organization" (company), "Inanimate" (house, book), "Transparent" (kind of, a part of), etc.
SF for verbs: "Causative" (compel), "Possession" (own), "Movement" (go), etc.
SF for adjectives: "Measurable property" (cold), "Relation to time" (past), etc.

SF are used in government models to specify the semantic restrictions on the choice of actants. They help identify the actants and their semantic roles, resolve ambiguity, reveal second-level models commonly formed by a limited circle of predicates, and so on. Here is one example to illustrate the assertion: the homonyms "raise" (grow plants), "raise03" (bring up), "raise01" (lift), "raise02" (gather money), "raise04" (produce an action) are differentiated only due to the SF of their first and second actants.

Syntactic Level

The basic syntactic unit is a predicative construction. The predicate governs the dependents as the head of the construction. The main form of the predicative construction is a simple sentence, but the predicate can govern, in its turn, other predicative constructions. Among dependent structures we distinguish subordinate clauses of all kinds and predicative groups formed by verbals. So it is possible to grade predicative constructions by the rank of their dependence (first, second or third levels).

At the deep structure level relations between words are viewed in terms of semantic roles, when a dependent ("a Servant") plays a definite semantic role in relation to the head ("the Master"). Thus a predicative construction is represented as a role relation (Allen 1995). In our Language Model the Syntactic Level distinguishes about 600 semantic roles of the first and second levels (including subroles). Here are some examples of roles:

#1 Addressee
#9 Instrument
#35.1 Agent of the action
#35.2 Experiencer
##24.6.a Content of complete information at the 2nd level (that-clause), etc.

Other parts of speech can also form role relations. One of the most frequent heads of a predicative construction is a predicative adjective ("He is afraid of progress"). But we treat such adjectives as verbs with the auxiliary "be", and in the course of analysis the combination "be + adjective" is reduced to a one-member verb (Lakoff 1970).
Besides, many nouns can have servants linked to them by certain semantic roles. A special place among potential masters is occupied by prepositions and conjunctions.

If the servants can be predicted, the combinability of the master is described in terms of models. We have singled out about 260 patterns of government, or Government models. Every model is a frame. The slot-fillers are actants, each of which is linked to the master by a certain semantic role. In typical government models the actants are described in terms of word classes, but in local models, characteristic of concrete predicates, the SF of actants are usually specified. The number of actants varies from 1 to 7. For example, the verb "buy" has five actants. In the following sentence all of them are realized: "The customer bought a computer for his son from IBM for 2000 dollars".

Act1 is linked by role #35.1 (Agent). Required SF N13 (Legal person).
Act2 is linked by role #60.1 (Object of Action). Required SF N4 (Inanimate non-abstract thing), (Animals), N87 (Services).
Act3 is linked by role #2 (Beneficiary). Required SF N13, N3.
Act4 is linked by role #55 (Anti-Addressee). Required SF N13.
Act5 is linked by role #12 (Measure). Required SF N51 (Money).

The master can have servants the occurrence and selection of which is not determined by the predicate. Their positioning, structural and semantic features are less fixed than those of the actants. They are considered non-actant servants. Lexemes functioning as non-actant servants are ascribed reverse models which indicate in what relation they stand in reference to their master.

Second-level government models are also classified into two types: actant and non-actant. For instance, the verbs "tell" and "inform", besides first-level models, can form models on the second level, e.g.:
1) an object clause linked by "that" (He told us that he lived there).
2) an object clause introduced by relative conjunctive words (He told us how he worked there).
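The first-level government model of "buy" described above can be pictured as a frame whose slots carry a role code and a set of admissible semantic features. The following Python sketch is only an illustration under stated assumptions: the names `ActantSlot`, `BUY_MODEL`, `SENTENCE` and `fill_slots` are hypothetical, the SF assigned to the words of the sample sentence are guesses, and the greedy left-to-right matching is a deliberate simplification (the paper also relies on prepositions, word order and conflict resolution, which are not modelled here). The SF code for "Animals" is missing in the source and is therefore left out.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Set, Tuple


@dataclass(frozen=True)
class ActantSlot:
    number: int                  # actant number within the model
    role: str                    # semantic role code quoted in the text
    role_name: str
    required_sf: FrozenSet[str]  # semantic features admitted for the filler


# Role and SF codes are those quoted for "buy"; the "Animals" SF code is
# not given in the source, so slot 2 lists only N4 and N87.
BUY_MODEL: Tuple[ActantSlot, ...] = (
    ActantSlot(1, "#35.1", "Agent", frozenset({"N13"})),
    ActantSlot(2, "#60.1", "Object of Action", frozenset({"N4", "N87"})),
    ActantSlot(3, "#2", "Beneficiary", frozenset({"N13", "N3"})),
    ActantSlot(4, "#55", "Anti-Addressee", frozenset({"N13"})),
    ActantSlot(5, "#12", "Measure", frozenset({"N51"})),
)

# Candidate servants in sentence order. The SF attached to each word are
# assumptions made for this example, not Dictionary data.
SENTENCE: List[Tuple[str, Set[str]]] = [
    ("customer", {"N13"}),
    ("computer", {"N4"}),
    ("son", {"N3"}),
    ("IBM", {"N13"}),
    ("dollars", {"N51"}),
]


def fill_slots(model: Tuple[ActantSlot, ...],
               candidates: List[Tuple[str, Set[str]]]) -> Dict[int, Optional[str]]:
    """Greedily give each slot the first unused candidate whose SF fit."""
    filled: Dict[int, Optional[str]] = {}
    used: Set[int] = set()
    for slot in model:
        filled[slot.number] = None  # a slot may stay empty
        for index, (word, features) in enumerate(candidates):
            if index not in used and features & slot.required_sf:
                filled[slot.number] = word
                used.add(index)
                break
    return filled


if __name__ == "__main__":
    for number, word in fill_slots(BUY_MODEL, SENTENCE).items():
        print(number, word)
```

Because "customer" and "IBM" share the same assumed feature, the sketch separates Agent from Anti-Addressee only by order of appearance; this is exactly the kind of ambiguity that the prepositional information in the real models would be expected to resolve.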
Discourse

An important component of syntactic and lexical analysis is processing textual units larger than a sentence (Allen 1995). This is the level of discourse.

One of the most salient features that characterize text is cohesion. Our System deals with different types of sentence cohesion. They include the use of substitutes, correlative elements, and all types of connection signals (like word order, functional words, sentence organizers of the "yet", "besides", "to begin with" types). Semantic interdependence between sentences involves naming identical referents (objects, actions, facts, properties) in different ways. Thus, parts of a broad context are knit by various kinds of cross-reference. For instance, pronouns and their antecedents found in the preceding parts of the passage co-refer. Our Language Model has special rules for identifying such cases of co-reference.

Among other means of co-reference we take into account the following:
a) the repetition of the same noun with different articles or other determiners;
b) the use of synonyms or other semantically close words (company - firm, parallelizer - parallelization tool);
c) the use of generic terms instead of more specific ones in the same context (vessel - ship, warship, freighter);
d) the use of common and proper nouns for denoting the same referent (Washington - the capital of the USA);
e) the use of semantically vague terms instead of more concrete denominations (company - Cray Research);
f) the occurrence of words with relative meanings (sister, murderer, friend), the full understanding of which is possible only if discourse analysis helps to find their actual relationships (say, whose sister, the murderer of whom, a friend to whom).
The semantic representation of a sentence is often impossible without taking into consideration the representation of other parts of the context. In particular, it appears necessary to consider the syntactic structure and role relations of preceding sentences. Special rules help to restore ellipsis and incomplete role relations with missing obligatory or essentially important actants (key actants), and to identify semantically close syntactic structures. Proper discourse analysis is an important prerequisite for natural text comprehension and information search.

Dictionary

The main components of the Language Model are mirrored in the Dictionary entries. Our dictionary currently consists of about 20,000 items. Further expansion of the Dictionary can be automated. New lexemes can be added by analogy if their semantic and morphological characteristics coincide with those of the previously introduced entries. An appropriate mechanism for expanding the Dictionary has been elaborated and tested.

Every entry contains exhaustive lexicographical information about the word: its semantic, morphological, syntactic, collocational, phraseological and paradigmatic characteristics. Each entry has 20 fields. Here is part of the entry "sell":

f1. The stem: sell
f2. The code of the word-class: 204
f3. The code of the word within the word-class: 7
f4. The code of the typical government model: 4.3.1
f5. Morphological class: 42
f7. Syntactic features: G1, G9, G18
f8. Semantic features: V6, V86, V19, V53
f9. Grammatical features: G12; Tv3
f15. Lexical functions: Lf60 = "sellS"

The verb "sell" has two stems: "sell" and "sold"; both of them share the same fields 2 and 3 and constitute one lexeme. Some other fields may differ. Thus, in the entry "sold" we see:

f5. 48
f9. G12; Tv3; Pass1

In the entry "inform" we see:

f10. Paradigmatic connections: Qv13; Lv23

In the entry "abolish" we see:

f13. Style: St3, St4

For the verb "delete":

f14. Domain of usage: Cf45

Some comments on the meaning of the symbols in the fields are presented below:

G1: possible to use absolutely, without any objects;
G18: possible to use with a direct object;
G12: used with a prepositionless direct object;
G9: used only with adverbials of place, and not those of direction;
Tv3: verb of a dual character (can be either resultative or durative);
Lf60 = "sellS": the verb forms a Nest of type 1;
Pass1: the stem forms the Passive of type 1, when actant 2 becomes the subject (the left-hand dependent);
Qv13: the lexeme belongs to quasi-synonymic group No. 13 (alongside "say", "report", etc.);
Lv23: the verb can govern a second-level model of a certain type;
St3: literary style;
St4: style of official documents;
Cf45: the domain of usage "Computers".

Lack of space does not permit us to dwell on many other subtle word characteristics contained in the entry.

There are some more points concerning dictionary entries. Special entries are compiled for set expressions. Fixed and changeable set expressions are processed differently (compare "of course", "by bus", on the one hand, and "vanity bag", "bring up", on the other).

The dictionary entry also contains full information about typical and local government models. Some entries are formed not for concrete words, but for representatives of groups of words: e.g. conceptual word classes or quasi-synonymic groups.

Knowledge Base

The System's Knowledge Base (KB) serves for representing the meaning of natural language texts - both documents and queries. In our System the term Knowledge Base is used in a slightly different sense than in the theory of expert systems. The KB structure is specially oriented towards extracting from the text a maximum of the knowledge that is necessary for further search of documents on the basis of the query.

Simple Role Relation. The basic construction of the KB is a so-called simple Role Relation (simple RR). A simple RR is a KB representation of a simple sentence in the form of a tree.
The root of the tree is the predicate of the sentence. All nodes of the tree are classified into two types - 0-rank nodes which represent concept instances (objects, actions, states) and 1st-rank nodes which represent the values of the properties of these concept instances. An arc from a 0-rank parent node to a 0-rank child node represents a role the latter performs in relation to the former. An arc from a 0-rank parent node to a 1st-rank child node represents an instance-property link which is not treated as a role.

To illustrate a simple RR, let us take a natural text sentence:

S1: The Company provides customers with the newest technology.

For describing Role Relations, we use our own metalanguage in which the RR of S1 looks as follows:

provide => Company < 1act, #35.1 >, customers < 2act, #2 >, technology(newest) < 3act, #60.1 >

This is interpreted in the following way: the predicate "provide" - Master - has the following Servants: "Company" as the 1st actant linked by role #35.1 ("Agent"), "customer" as the 2nd actant linked by role #2 ("Beneficiary"), "technology" as the 3rd actant linked by role #60.1 ("Object of Action"); "technology" is specified by a property having the value "new".

Characteristics of the Elements of a Simple Role Relation. Each node - an element of the RR - is represented in the KB by a set of characteristics derived from the text in the course of processing. Thus, for each concept instance, there is a KB correspondence - a 0-rank node which is an RR element having about 20 characteristics, e.g.:
• the class and the ordinal number,
• the morpho-syntactic characteristics,
• the reference to the properties of the element,
• the role of the given element in reference to the master.
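The tree for S1 can be mimicked with two small record types, one for 0-rank nodes and one for role-labelled arcs. This is a minimal sketch, assuming names of our own choosing (`Node`, `Arc`, `render`); it is not the System's actual C data layout, and it keeps only a handful of the roughly 20 characteristics mentioned above. Property values (1st-rank nodes) are reduced to plain strings.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """A 0-rank node: a concept instance with its properties and servants."""
    concept: str
    properties: List[str] = field(default_factory=list)  # 1st-rank values
    servants: List["Arc"] = field(default_factory=list)


@dataclass
class Arc:
    """A role-labelled link from a master to one of its 0-rank servants."""
    actant: int   # actant number in the master's government model
    role: str     # semantic role code, e.g. "#35.1"
    servant: Node


def render(master: Node) -> str:
    """Print one master and its direct servants in the paper's metalanguage."""
    parts = []
    for arc in master.servants:
        label = arc.servant.concept
        if arc.servant.properties:
            label += "(" + ", ".join(arc.servant.properties) + ")"
        parts.append(f"{label} < {arc.actant}act, {arc.role} >")
    return f"{master.concept} => " + ", ".join(parts)


# S1: The Company provides customers with the newest technology.
S1 = Node("provide", servants=[
    Arc(1, "#35.1", Node("Company")),
    Arc(2, "#2", Node("customers")),
    Arc(3, "#60.1", Node("technology", properties=["newest"])),
])

if __name__ == "__main__":
    print(render(S1))
```

`render` deliberately handles a single level only; a fuller version would recurse into servants that are themselves masters.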
Likewise, for each property of the concept instance, there is a KB correspondence - a 1st-rank node which is an RR element having about 30 characteristics, e.g.:
• the value of the property (can be expressed by a number, a word from the Dictionary or a word unknown to the Dictionary),
• the name of the property ("price") and the measurement units associated with the property (as, for example, "dollars" for "price"),
• the intensity and degree of intensity for the property value,
• the numerical coefficient of fuzziness used for processing fuzzy values.

Complex Role Relation. Simple RRs serve as building material for three more complicated types of knowledge structures which represent any natural language sentence in the KB, that is, for System of Facts, Task, and Rule. These three types reflect the following communicative types of utterances.

A System of Facts corresponds to narrative sentences that denote facts, events, situations, etc. and cannot be defined as Tasks or Rules.

Tasks refer to sentences with hortatory meaning (e.g. requests, orders, commands) that impel man or machine to perform a certain action (Directive) under certain Starting Conditions. The Directive is expressed by means of the Imperative mood, different forms of modality, questions, and so on (cf. "Help me!", "I'd like you to help me", "Could you help me, please?"; "What did you see at the exhibition?" - "Tell me what you saw at the exhibition").

Rules reflect various types of relationships, dependencies, regularities, objective laws, etc. that do not impel man or system to action. Rules are mainly expressed by "if - then", "in order to" and similar constructions.

For formal differentiation between Systems of Facts, Tasks and Rules, a number of linguistic means are involved, such as mood, tense, modality, evaluative and emotional expressions, interrogative forms, and others. Specific combinations of these factors also predetermine quite definite semantic roles characteristic of each type.
The following sentences are examples of the three types of knowledge structures respectively:

S2: Convex announced the total investment program which preserves customer investments. (System of Facts)
S3: As soon as a new issue of the "Computer Magazine" appears, find me articles on supercomputers. (Task)
S4: If a company produces any product, it evidently receives orders for this product. (Rule)

Each structure is presented in the form of a complex RR which is a tree of simple RRs.

A System of Facts has the following KB characteristics: the moment of registration; the author or source of information; the reliability coefficient of the source of information; the references to the simple RRs, each of them presenting one separate fact of the system of facts.

In sentence S2, for example, the simple RR1 represents the main clause of the complex sentence, while the simple RR2 represents its subordinate clause manifesting inter-predicate (2nd-level) role ##80.1.1 ("Defining Relative Clause"): RR1 => RR2 < ##80.1.1 >.

Natural language documents to be searched are, as a rule, systems of facts.

Note: A simple sentence corresponds to a system of facts containing only one fact.

A Task includes a Directive and Starting Conditions. The System's operation, on the whole, consists in monitoring the starting conditions and, on their fulfillment, in executing the corresponding directives.

A Task has the following KB characteristics: the moment of registration; the author or source of information; the references to the RRs, each of them presenting one separate directive or starting condition of the task.

In sentence S3, for example, the simple RR1 represents the main clause of the complex sentence, while the simple RR2 represents its subordinate clause manifesting 2nd-level role ##4.1.2.2.1.3.1.b ("Just after"): RR1 => RR2 < ##4.1.2.2.1.3.1.b >.

Natural language questions and queries are particular cases of Task, with starting conditions often omitted, which means "Now" by default (e.g.: Tell me about the supercomputer exhibition in London).
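The Task behaviour described above (monitor the starting conditions, execute the directive once they hold, and treat missing conditions as "Now") can be sketched in a few lines of Python. Everything in this sketch is an assumption made for illustration: the names `Task`, `is_due` and `due_directives`, the representation of the monitored world as a dictionary of flags, and the reduction of a Directive to a plain string instead of a Role Relation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

State = Dict[str, bool]  # hypothetical snapshot of monitored conditions


@dataclass
class Task:
    directive: str  # stands in for the Directive's Role Relation
    starting_conditions: List[Callable[[State], bool]] = field(default_factory=list)

    def is_due(self, state: State) -> bool:
        # all() over an empty list is True, which models the "Now" default
        # for queries whose starting conditions are omitted.
        return all(condition(state) for condition in self.starting_conditions)


def due_directives(tasks: List[Task], state: State) -> List[str]:
    """Return the directives whose starting conditions are fulfilled."""
    return [task.directive for task in tasks if task.is_due(state)]


# S3: a Task with one starting condition ("Just after" a new issue appears).
S3 = Task(
    "find articles on supercomputers",
    [lambda state: state.get("new_issue_of_computer_magazine", False)],
)

# A plain query: no starting conditions, so it is due immediately.
QUERY = Task("tell about the supercomputer exhibition in London")

if __name__ == "__main__":
    print(due_directives([S3, QUERY], {}))
    print(due_directives([S3, QUERY], {"new_issue_of_computer_magazine": True}))
```

In this reading a search query is simply a Task that is always due, while S3 stays dormant until the monitored flag becomes true.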
A Rule has the following KB characteristics: the moment of registration; the author or source of information; the reliability coefficient of the source of information; the type of the rule: inference rule, identity, cause-effect relationship, condition, etc.; the probability of the antecedent-consequent relation; the references to the antecedent and consequent of the rule, each of them presented by an RR.

In sentence S4, for example, the consequent is represented by the simple RR1, while the antecedent is represented by the simple RR2 with role ##30.1.a ("Condition"): RR1 => RR2 < ##30.1.a >.

Thus, any natural language sentence is presented, in a general case, by a complex RR - a tree of simple RRs, where the root represents the main clause, the other nodes represent subordinate clauses, and the arcs represent the corresponding roles.

Note: We treat coordination of clauses as a special case of subordination.

Special Search-Oriented KB Constructions. Alongside the registration of the above-mentioned knowledge constructions, the System also registers special search-oriented constructions in the KB. They are files of concepts and files of concept instances.

In the file of concepts, each concept has the following characteristics: the class of the concept; the list of the elements of RRs which include the instances of the given concept, i.e. the inverted list for the concept; the list of definitions for the concepts and the concept instances, which provides a powerful tool for the search of documents.

Main Modules of the System

The System's software has a modular structure. The Modules run asynchronously, exchanging messages, which allows their operations to be parallelized on a multiprocessor.
The System consists of the following main modules:
(a) the module of graphical user interface,
(b) the module of analysis which forms the KB from natural language texts,
(c) the module of generation (synthesis) which produces natural language texts from the KB,
(d) the KB-access module which provides access to the KB for analyzing Facts, Tasks and Rules,
(e) the module of logical inference which handles document searching on the basis of a set of equivalent transformations over the KB.

Modules (b) and (e) are the most complicated in the System and play a most important role in the document search.

Module of Analysis. The process of analysis consists of a number of steps, each of them providing knowledge for the final KB representation. The process begins with morphological analysis, after which semantic-syntactic parsing is performed. The parsing falls into two stages.

The first stage accesses the input text in its surface form. This stage consists of the steps which carry out, in the appropriate order, the processing of words and word groupings (set expressions, articles, personal pronouns, noun constructions (NN and the like), negations, etc.) and attach 0- and 1st-rank servants to their masters - verbs, predicative adjectives, nouns. The searching of 0-rank servants is conducted according to the master's Government model (for the actant servants) and by means of special Prepositional models (for the non-actant prepositional servants). There are special rules that limit the area of searching. The System also makes a canonical reduction of certain constructions on the basis of equivalent transformations of different types. The first stage results in the draft variant of parsing at the level of simple RRs.

The second stage of parsing, which deals with RRs only, produces final variants of the simple RRs, establishes 2nd-level relations (conjunctional and non-conjunctional), forms the final complex RR, computes the deep tense-aspect characteristics, and registers all the knowledge obtained in the KB.
In the process of analysis, the System detects various types of conflict situations, e.g.: a servant is linked to more than one master; a servant is ambiguous; a predicate is ambiguous, etc. To resolve the conflicts the System uses a special inventory of rules and a mechanism of penalties and awards. If a conflict cannot be resolved, the System registers more than one variant. It should be noted that word-sense disambiguation is carried out at various steps, including morphological analysis, set expression and article processing, and especially when using such powerful filters as Government and Prepositional models with their strong semantic restrictions. In addition, word-order rules and domain filters are applied.

Module of Logical Inference for Document Search. The module of logical inference, together with the KB-access module, makes all the special transformations over the KB which are necessary for query matching on the basis of the KB representations of the document and the query. The technique of keyword matching is not used at all. Thus, the one-sentence document "The developers of this supercomputer market a variety of electronic devices" will be recognized by our System as non-relevant to the query "Supercomputer market". The module possesses the full inventory of the System's search techniques, bringing them into play when necessary. These techniques may be used both sequentially and in parallel. The search process consists of two main stages:

Stage 1. The expansion of the input query (i.e. its KB representation) by use of quasi-synonyms, definitions, "General-specific" relations and conceptual Nest relations (cf. Vorhees 1994). The expansion of the query enables the System to take into account not only the explicit occurrences of the query elements in the RRs, but also the implicit, expanded ones. Due to the query expansion, the document D1, for example, will be recognized as relevant to the query Q1, given the set of definitions E1:

E1: Sale is a particular case of market. An Exemplar system is a supercomputer.
D1: The sales of high-performance Exemplar systems increased threefold in the last 3 years, to $6 billion in 1995.
Q1: Supercomputer market.

Stage 2. The use of the rules of logical inference. They are put into effect if stage 1 is not sufficient for making the final decision. The logical inference rules allow the query to be matched with separate pieces of knowledge which are often scattered over the document. Some techniques of this stage can be illustrated by the following search microsituation, where E2 presents "encyclopedic" world knowledge (definitions and other natural language statements entered into the KB beforehand), D2 is the document, and Q2 is the query.

E2: Cray produces supercomputers. Sale is a particular case of market.
D2: Cray products are widely used for high-performance computing. In the last quarter, the sales totaled $266 million.
Q2: Supercomputer market.

The elements of the query (i.e. its RR) are checked for their co-occurrence (explicit or implicit) in one of the document's sentences (i.e. in the corresponding RR). (It should be noted that here we mean a co-occurrence of the query elements that preserves the structure of the query Role Relation.) There are no such sentences in D2, so the System selects the sentence containing only the predicate of the query, "market" (rendered by its implicit occurrence "sale"). Then the previous sentences of D2 are searched for the object of "sale". For this, special object-searching rules are applied. The object is found - it is "products". "Products" is not the second element of the query ("supercomputer"), but it belongs to the group of the so-called non-terminal words, which suggest further reasoning. To the non-terminal words, conceptual Nest rules are attached which activate the conceptual Nest relations of "products". This leads to generating a new inner subquery in the form of the sentence "Cray produces X1" and, through the use of the encyclopedic fact "Cray produces supercomputers", results in recognizing the relevance of D2 to the query Q2.
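The two stages walked through above can be sketched on toy data as follows. This is a hedged illustration, not the System's algorithm: the dictionaries `IS_A`, `FACTS` and `NON_TERMINAL`, the function names, and the flat `predicate`/`object`/`owner` sentence records are all hypothetical simplifications of the RR structures, hand-built from the E2, D2 and Q2 statements.

```python
# Hypothetical toy knowledge base, hand-coded from the E2 statements.
IS_A = {"sale": "market"}                        # "Sale is a particular case of market."
FACTS = {("cray", "produce", "supercomputer")}   # "Cray produces supercomputers."
NON_TERMINAL = {"product": "produce"}            # non-terminal word -> Nest relation


def expand(concept):
    """Stage 1: the concept itself plus its registered particular cases."""
    return {concept} | {spec for spec, gen in IS_A.items() if gen == concept}


def resolve_object(obj, owner):
    """Stage 2: replace a non-terminal word by the answers to the inner
    subquery "<owner> <predicate> X"; other words stand for themselves."""
    predicate = NON_TERMINAL.get(obj)
    if predicate is None:
        return {obj}
    return {o for (s, p, o) in FACTS if s == owner and p == predicate}


def is_relevant(query, document):
    """query is (predicate, object); document is a list of sentence records."""
    q_pred, q_obj = query
    accepted = expand(q_pred)
    for index, sentence in enumerate(document):
        if sentence["predicate"] not in accepted:
            continue
        # Object search: this sentence first, then earlier ones, nearest first.
        for candidate in reversed(document[: index + 1]):
            obj = candidate.get("object")
            if obj is None:
                continue
            if q_obj in resolve_object(obj, candidate.get("owner")):
                return True
            break  # the nearest object was found but does not match
    return False


# D2, reduced by hand to one record per sentence.
D2 = [
    {"predicate": "use", "object": "product", "owner": "cray"},
    {"predicate": "sale", "object": None, "owner": None},
]
print(is_relevant(("market", "supercomputer"), D2))  # True
```

In the same toy representation, the one-sentence document about developers who "market a variety of electronic devices" becomes a record with predicate "market" and object "device", and the query ("market", "supercomputer") does not match it, because the object slot is compared rather than the mere presence of the words.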
Besides the rules mentioned, the System uses a wide range of knowledge-based search techniques, such as
• various inference rules based on different paradigmatic word groupings,
• mechanisms for searching antecedents, including not only antecedents for pronouns, words of vague meaning, etc., but also for text lacunas,
• local and temporal transformations,
• "cause-result" transfer, etc.
These techniques make it possible, in particular, to perform query matching for the following search microsituation:

E3: Order is a particular case of market. Cray sells supercomputers. France is in Europe.
D3: Cray Reports the Results of the Fourth Quarter and the Full Year. In France, orders totaled $66 million in the quarter compared with $47 million for the third quarter of 1995.
Q3: Supercomputer market in Europe.

The query matching process uses, among others, the inference rules "produce" → "sell" → "order" described in the Language Model section.

Conclusion

At the present stage our System handles documents containing commonly used, non-specialized vocabulary. In addition, we process documents belonging to computer science and related domains. Further expansion of the Dictionary will enable the System to process documents related to other domains. Experiments with the System show its efficiency in refining the results of searches performed by traditional search engines on the WWW. The Web-resident version of the System is currently under development. REASON has great potential for various practical applications involving man-machine communication and artificial intelligence (e.g. expert systems, machine translation, document summarization and filtering, information extraction, educational software, control of industrial processes).

References

Allen, J. 1995. Natural Language Understanding. USA/Canada: The Benjamin/Cummings Publishing Company, Inc.
Apresian, J.; Boguslavski, I.; Iomdin, L.; Lazurski, A.; Pertsov, N.; Sannikov, V.; and Zinman, L. 1989. Linguistic Support of ETAP-2. Moscow: Nauka.
Chomsky, N. 1982.
Some Concepts and Consequences of the Theory of Government and Binding. Cambridge: MIT Press.
Fillmore, Ch. J. 1969. Toward a Modern Theory of Case. Modern Studies in English. N.Y.: Prentice Hall: 361-375.
Haase, K. B. 1995. Mapping Texts for Information Extraction. Proceedings of SIGIR'95.
Lakoff, G. 1970. Irregularity in Syntax. N.Y.: Holt.
Melchuk, I.; and Pertsov, N. 1987. Surface Syntax of English. Amsterdam/Philadelphia.
Miller, G. 1990. Wordnet: An On-Line Lexical Database. International Journal of Lexicography 3(4).
Piotrowski, P. 1986. Text Processing in the Leningrad Research Group "Speech Statistics" - Theory, Results, Outlook. Literary and Linguistic Computing: Journal of ALLC 1(1).
Tesnière, L. 1959. Éléments de syntaxe structurale. Paris.
Vorhees, E. M. 1994. Query Expansion Using Lexical-Semantic Relations. Proceedings of SIGIR'94.