Inferring What a User is Not Interested In

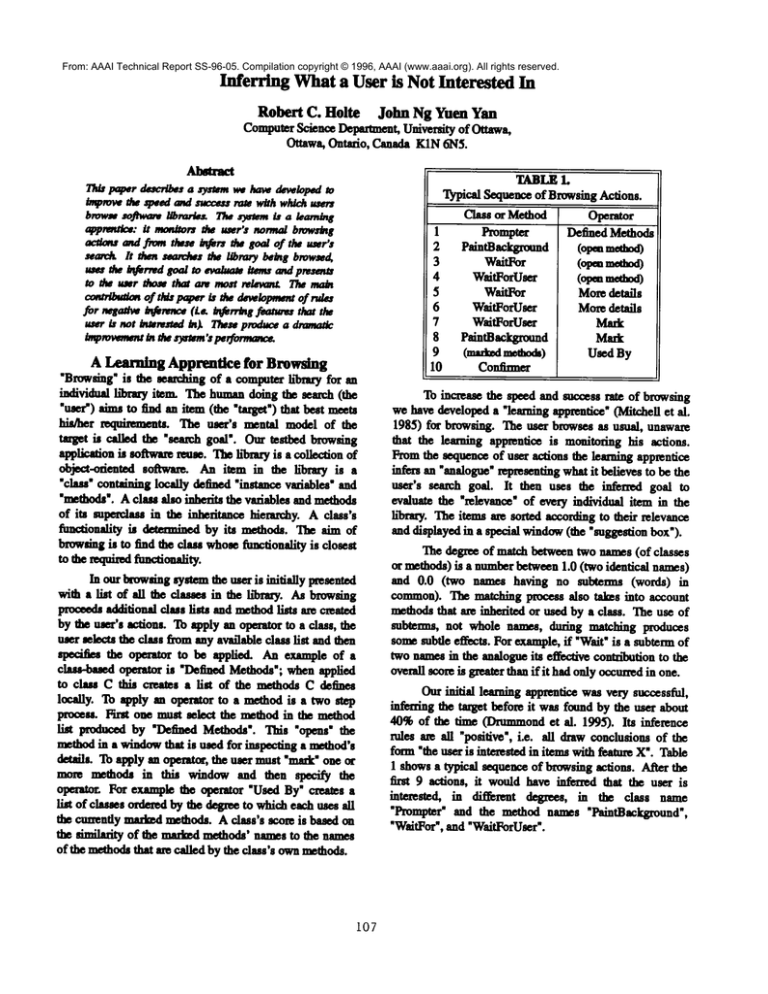

From: AAAI Technical Report SS-96-05. Compilation copyright © 1996, AAAI (www.aaai.org). All rights reserved.

Robert C. Holte, John Ng Yuen Yan
Computer Science Department, University of Ottawa, Ottawa, Ontario, Canada K1N 6N5

Abstract

This paper describes a system we have developed to improve the speed and success rate with which users browse software libraries. The system is a learning apprentice: it monitors the user's normal browsing actions and from these infers the goal of the user's search. It then searches the library being browsed, uses the inferred goal to evaluate items and presents to the user those that are most relevant. The main contribution of this paper is the development of rules for negative inference (i.e. inferring features that the user is not interested in). These produce a dramatic improvement in the system's performance.

A Learning Apprentice for Browsing

"Browsing" is the searching of a computer library for an individual library item. The human doing the search (the "user") aims to find an item (the "target") that best meets his/her requirements. The user's mental model of the target is called the "search goal". Our testbed browsing application is software reuse. The library is a collection of object-oriented software. An item in the library is a "class" containing locally defined "instance variables" and "methods". A class also inherits the variables and methods of its superclass in the inheritance hierarchy. A class's functionality is determined by its methods. The aim of browsing is to find the class whose functionality is closest to the required functionality.

In our browsing system the user is initially presented with a list of all the classes in the library. As browsing proceeds, additional class lists and method lists are created by the user's actions. To apply an operator to a class, the user selects the class from any available class list and then specifies the operator to be applied. An example of a class-based operator is "Defined Methods"; when applied to class C this creates a list of the methods C defines locally.

To apply an operator to a method is a two step process. First one must select the method in the method list produced by "Defined Methods". This "opens" the method in a window that is used for inspecting a method's details. To apply an operator, the user must "mark" one or more methods in this window and then specify the operator. For example, the operator "Used By" creates a list of classes ordered by the degree to which each uses all the currently marked methods. A class's score is based on the similarity of the marked methods' names to the names of the methods that are called by the class's own methods.

TABLE 1. Typical Sequence of Browsing Actions.

| Step | Class or Method | Operator |
|------|-----------------|----------|
| 1 | Prompter | Defined Methods |
| 2 | PaintBackground | (open method) |
| 3 | WaitFor | (open method) |
| 4 | WaitForUser | (open method) |
| 5 | WaitFor | More details |
| 6 | WaitForUser | More details |
| 7 | WaitForUser | Mark |
| 8 | PaintBackground | Mark |
| 9 | (marked methods) | Used By |
| 10 | Confirmer | |

To increase the speed and success rate of browsing we have developed a "learning apprentice" (Mitchell et al. 1985) for browsing. The user browses as usual, unaware that the learning apprentice is monitoring his actions. From the sequence of user actions the learning apprentice infers an "analogue" representing what it believes to be the user's search goal. It then uses the inferred goal to evaluate the "relevance" of every individual item in the library. The items are sorted according to their relevance and displayed in a special window (the "suggestion box").

The degree of match between two names (of classes or methods) is a number between 1.0 (two identical names) and 0.0 (two names having no subterms (words) in common). The matching process also takes into account methods that are inherited or used by a class. The use of subterms, not whole names, during matching produces some subtle effects. For example, if "Wait" is a subterm of two names in the analogue, its effective contribution to the overall score is greater than if it had only occurred in one.
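
The subterm-based matching described above can be illustrated with a small sketch. The paper states only the endpoints of the measure (1.0 for identical names, 0.0 for no shared subterm), so the Jaccard overlap used here is an assumption made for illustration, as are the function names `subterms`, `degree_of_match` and `analogue_score` and the candidate name "WaitUntil".

```python
import re


def subterms(name):
    """Split a CamelCase name into lowercase words ("subterms")."""
    return [w.lower() for w in re.findall(r"[A-Z][a-z]*|[a-z]+", name)]


def degree_of_match(name_a, name_b):
    """Assumed measure: Jaccard overlap of the two subterm sets.

    Gives 1.0 for identical names and 0.0 when no subterm is shared,
    which are the only two values the paper specifies.
    """
    a, b = set(subterms(name_a)), set(subterms(name_b))
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def analogue_score(candidate, analogue_names):
    """Sum the candidate's match against every name in the analogue.

    A subterm occurring in two analogue names contributes twice,
    mirroring the "subtle effect" noted in the text.
    """
    return sum(degree_of_match(candidate, n) for n in analogue_names)


if __name__ == "__main__":
    print(degree_of_match("WaitFor", "WaitForUser"))
    print(analogue_score("WaitUntil", ["WaitFor"]))
    print(analogue_score("WaitUntil", ["WaitFor", "WaitForUser"]))
```

With this measure, a candidate containing "Wait" scores higher against an analogue holding both "WaitFor" and "WaitForUser" than against one holding "WaitFor" alone, which is the effect the paragraph describes.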

Our initial learning apprentice was very successful, inferring the target before it was found by the user about 40% of the time (Drummond et al. 1995). Its inference rules are all "positive", i.e. all draw conclusions of the form "the user is interested in items with feature X". Table 1 shows a typical sequence of browsing actions. After the first 9 actions, it would have inferred that the user is interested, in different degrees, in the class name "Prompter" and the method names "PaintBackground", "WaitFor", and "WaitForUser".

Negative Inference

Positive inference in combination with partial matching proved very successful in our original experiments. The example in Table 1, however, illustrates a limitation of this system. The user's actions plainly indicate that he has deliberately decided method "WaitFor" is not of interest. The interest exhibited in opening and inspecting this method was tentative. Once "WaitFor" and "WaitForUser" have been compared and a decision between them made, only one ("WaitForUser") remains of interest. Merely retracting INTERESTED_IN(method name: WaitFor) would not entirely capture this information because "Wait" and "For" occur in "WaitForUser" and would therefore still produce partial matches.

To make the correct inference from this sequence of actions, two changes are necessary to the learning apprentice. First, subterms must have their own entries in the analogue. This will permit the system to assign a higher confidence factor to "User", the subterm that actually discriminates between the two method names in this example, than to the subterms "Wait" and "For". Secondly, rules are needed to do negative inference, so that features that once seemed interesting can be removed from the analogue when they prove to be uninteresting.

Browsers sometimes have actions that directly indicate that the user is not interested in an item.
In such cases, negative inference is as straightforward as positive inference. In browsers, such as ours, that only have actions that a user applies to further explore items of interest, negative inference must be based on the missing actions: actions that could have been taken but were not. For example, the user could have applied the "Mark" action to "WaitFor", but did not. The difficulty, of course, is that there are a great many actions available to the user at any given moment, of which only a few will actually be executed. It is certainly not correct to make negative inferences from all the missing actions. What is needed to reliably make negative inferences is some indication that the user consciously considered an action and rejected it. In the example, the fact that the user opened "WaitFor" is a strong indication that he consciously considered its use in the subsequent "Used By" operation. This is because with our browser the main reason to open a method is so that it can be marked and used in conjunction with "Used By" or "Implemented By". The fact that "WaitFor" was not used in the culminating operation of this sequence is best explained by its being judged of no interest.

To generalize this example, negative inference can be reliably performed when there is a definite culminating operation (in this case either "Used By" or "Implemented By") and a two step process for selecting the item(s) to which the operator is to be applied. In our system negative inference is triggered by the "Used By" or "Implemented By" operations and is applied to the names of methods that are open but not marked. For each word, W, in these names the assertion "the user is not interested in W" is added permanently to the analogue. This assertion is categorical; it overrides any previous or subsequent positive inference about W. In the above example, negative inference would produce an assertion of this form for "Wait" and for "For".

Only the positive assertions in the analogue are converted into the template. Class and method names are matched as before.
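
The negative inference rule just described can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the `Analogue` class, the `apply_operator` function, the `table1_example` helper and the confidence value 0.5 are all hypothetical names and numbers introduced here. Only the trigger (a culminating "Used By" or "Implemented By"), the scope (methods that are open but not marked) and the categorical, permanent nature of the assertion come from the text.

```python
import re

# Operators that end a two step selection, as named in the text.
CULMINATING_OPERATORS = {"Used By", "Implemented By"}


def words(name):
    """Split a CamelCase method or class name into its words."""
    return re.findall(r"[A-Z][a-z]*|[a-z]+", name)


class Analogue:
    """Hypothetical container for positive and negative assertions."""

    def __init__(self):
        self.positive = {}     # word -> confidence factor
        self.negative = set()  # words the user is not interested in

    def assert_interest(self, word, confidence):
        # Positive inference is always recorded; negatives filter it later.
        self.positive[word] = max(confidence, self.positive.get(word, 0.0))

    def assert_no_interest(self, word):
        # Categorical and permanent: never removed once added.
        self.negative.add(word)

    def template(self):
        # Only positive assertions not overridden by a negative one survive.
        return {w: c for w, c in self.positive.items()
                if w not in self.negative}


def apply_operator(analogue, operator, open_methods, marked_methods):
    """Trigger negative inference on open-but-unmarked methods."""
    if operator not in CULMINATING_OPERATORS:
        return
    for name in open_methods:
        if name not in marked_methods:
            for w in words(name):
                analogue.assert_no_interest(w)


def table1_example():
    """Replay the first nine actions of Table 1 (confidences are made up)."""
    analogue = Analogue()
    for name in ["Prompter", "PaintBackground", "WaitFor", "WaitForUser"]:
        for w in words(name):
            analogue.assert_interest(w, 0.5)
    apply_operator(analogue, "Used By",
                   open_methods=["PaintBackground", "WaitFor", "WaitForUser"],
                   marked_methods=["WaitForUser", "PaintBackground"])
    return analogue


if __name__ == "__main__":
    print(sorted(table1_example().template()))
```

In this sketch positive entries are kept and filtered when the template is built, so a later positive inference about "Wait" has no effect. That is one simple way to make the negative assertion override both previous and subsequent positive inference.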

A method subterm in the template is considered to match a class if the subterm appears in any of the class's own method names.

Experimental Evaluation

The re-implemented version of the original learning apprentice is called V1 below. V2 is V1 with negative inference added. As in (Drummond et al. 1995) the experiments were run with an automated user, i.e. a computer program (called Rover) that played the role of the user, rather than human users. This enables large-scale experiments to be carried out quickly and also guarantees that experiments are repeatable and perfectly controlled. A similar experimental method is used in (Haines & Croft 1993) to compare relevance feedback systems.

The library used in the experiment is the Smalltalk code library. Each of the 389 classes (except for the four that have no defined methods) was used as the search target in a separate run of the experiment. Rover continues searching until it finds the target or 70 steps have been taken. A "step" in the search is the creation of a new class list by the operation "Implemented By". Rover's complete set of actions is recorded, as is the rank of the target in Rover's most recent class list at each step. The resulting trace of Rover's search is then fed into each learning apprentice (V1 and V2) separately to determine the target's rank in the suggestion box at each step and the step at which the learning apprentice successfully identifies the target. The learning apprentice is considered to have identified the target when its rank in the suggestion box is 10 or better for five consecutive steps. This definition precludes the learning apprentice from succeeding if Rover finds the target in fewer than 5 steps; this happens on 69 runs. The simplest summary of the experimental results is given in Table 2.

Each run that is between 5 and 70 steps in length is categorized as a win (for the learning apprentice), a loss, or a draw, depending on whether the learning apprentice identified the target before Rover found it, did not identify the target at all, or identified it at the same time Rover found it. The row for V1 shows that 23.4% of the runs were wins for V1. This figure is considerably lower than the 40% win rate of the original learning apprentice. The difference may in part be due to differences in the re-implementation of the learning apprentice or Rover, but is probably mainly due to differences in the library. The Smalltalk library is, it seems, more difficult for the learning apprentice than the Objective-C library used originally. V2 performs very well, identifying the target before it is found by Rover over half the time. The addition of negative inference has more than doubled the number of wins.

TABLE 2. Wins-Losses-Draws

| | Wins | Losses | Draws |
|----|------|--------|-------|
| V1 | 70 (23.4%) | 207 (69.2%) | 22 (7.4%) |
| V2 | 161 (52.6%) | 110 (36.0%) | 35 (11.4%) |

Table 3 is a direct comparison of the learning apprentices to each other. The first row summarizes the runs in which V1 identified the target before V2 did. This happened only 11 times, and on these targets V1 was only 1.6 steps ahead of V2. The second row summarizes the runs in which V2 identified the target before V1. This happened 120 times and the reduction in search time on these runs was very considerable, 11.0 steps. This shows that negative inference is rarely detrimental, and never a significant impediment, and that it frequently almost doubles the speed with which the learning apprentice identifies the target.

TABLE 3. Search Length when V1 and V2 differ

| | Number of Targets | Average Search Length, V1 | Average Search Length, V2 | \|V1-V2\| |
|------------|-----|------|------|------|
| V1 beat V2 | 11 | 9.9 | 11.5 | 1.6 |
| V2 beat V1 | 120 | 23.6 | 12.6 | 11.0 |

FIGURE 1. Average Rank of the Target at each step
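
The identification criterion and the win/loss/draw categories behind Table 2 can be sketched as follows. The function names and the example rank sequences are hypothetical. The sketch also assumes that identification is timed at the fifth consecutive step with rank 10 or better, and that a run in which Rover never finds the target counts as a win if the apprentice identifies it; the paper does not spell out either point.

```python
def identification_step(ranks, threshold=10, run_length=5):
    """Return the 1-based step at which the target counts as identified.

    ranks[i] is the target's rank in the suggestion box at step i + 1.
    The target is identified once its rank has been `threshold` or better
    for `run_length` consecutive steps. Returns None if that never happens.
    """
    streak = 0
    for step, rank in enumerate(ranks, start=1):
        streak = streak + 1 if rank <= threshold else 0
        if streak == run_length:
            return step
    return None


def categorize(ranks, rover_found_step):
    """Classify one run as "win", "loss" or "draw" for the apprentice.

    rover_found_step is the step at which Rover found the target, or
    None if Rover gave up at the step limit (an assumed convention).
    """
    identified = identification_step(ranks)
    if identified is None:
        return "loss"
    if rover_found_step is None or identified < rover_found_step:
        return "win"
    return "draw"


if __name__ == "__main__":
    print(categorize([40, 9, 8, 7, 6, 5, 5, 5], rover_found_step=8))
    print(categorize([40, 9, 8, 7, 6, 5], rover_found_step=6))
    print(categorize([40, 30, 20, 15, 12, 11], rover_found_step=6))
```

Because the trace fed to the apprentice ends when Rover finds the target, identification can never occur after Rover's final step, so the last branch only has to handle the draw case.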

If the user is to benefit from a learning apprentice in practice, it must be true that throughout the search the apprentice's rank for the target class is consistently significantly higher than the user's own rank for the target. Figure 1 plots the average rank of the target on each step. The average for step N is computed using only the searches that are still active at step N. The best rank is 1; the higher the average rank, the worse the system. From this figure, V1 is much better than Rover. Except for the first 5-10 steps of a search, the target is 10-20 positions higher in V1's suggestion box than it is in Rover's most recent class list. V2 dramatically outperforms V1. After just one step it ranks the target 20 positions higher than Rover and V1; after 3 steps this difference has increased to 30. The figure also shows that the target is almost always (on average) among the first 40 in the suggestion box. In a sense the user's search space has been reduced by 90%, from a library of almost 400 classes to a list of 40.

Conclusions

This paper has presented rules for negative inference (i.e. inferring features that the user is not interested in). When added to (a re-implementation of) our original learning apprentice these produce a dramatic improvement in performance. The new system is more than twice as effective at identifying the user's search goal and it ranks the target much more accurately at all stages of search.

Acknowledgements

This research was supported in part by a grant from the Natural Sciences and Engineering Research Council of Canada. We wish to thank Chris Drummond, the developer of the first learning apprentice, for his assistance and advice.

References

Drummond, C., D. Ionescu and R.C. Holte (1995). A Learning Agent that Assists the Browsing of Software Libraries, technical report TR-95-12, Computer Science Dept., University of Ottawa.

Haines, D., and W.B. Croft (1993). "Relevance Feedback and Inference Networks", Proceedings of the 16th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 2-11.

Mitchell, T.M., S. Mahadevan and L. Steinberg (1985). LEAP: A Learning Apprentice for VLSI Design. IJCAI'85, pp. 573-580.