From: AAAI Technical Report SS-94-06. Compilation copyright © 1994, AAAI (www.aaai.org). All rights reserved.

Toward A General Dynamic Decision Modeling Language:

An Integrated Framework For Planning Under Uncertainty

Tze-Yun Leong

Clinical Decision Making Group

MIT Laboratory for Computer Science

Cambridge, MA

leong@lcs.mit.edu

Abstract

Decision making is often complicated by the dynamism and uncertainty of the information involved. This paper presents a new methodology for formulating, analyzing, and solving dynamic decision problems. The proposed formalism integrates multi-disciplinary approaches; it also generalizes current dynamic decision modeling frameworks. The ontology, graphical presentation, and mathematical representation of the decision factors are distinguished; modular extensions to the scope of the admissible decision problems and systematic improvements to the efficiency of their solutions are supported.

1 Introduction

Decision making in our daily lives is often complicated by the dynamism and uncertainty of the information involved. In medicine, for instance, a common problem is to choose a course of optimal treatments for a patient whose physical conditions may vary in time. Dynamic decision models are frameworks for modeling and solving such dynamic decision problems. These frameworks are based on structural and semantical extensions of conventional decision models, e.g., decision trees and influence diagrams, with the mathematical definitions of finite-state stochastic processes.

This paper presents a new methodology for dynamic decision modeling, called DYNAMOL (for DYNAmic decision MOdeling Language). The DYNAMOL design integrates approaches and techniques in AI, decision theory, and control theory; it supports formulation, analysis, and solution of dynamic decision models. DYNAMOL generalizes existing dynamic decision modeling frameworks; it differs from previous approaches by distinguishing the decision ontology, the graphical presentation, and the mathematical representation of dynamic decision problems. The solutions to the models formulated in DYNAMOL, called policies or decision rules, are guidelines for choosing the optimal actions over time to achieve some goals in a given environment. In the AI planning vocabulary, a dynamic decision model corresponds to a planning problem; a policy can generate many optimal courses of actions or plans, conditional on all possible evolutions of the events or states in the environment.

2 Dynamic Decision Modeling

A dynamic decision model, e.g., dynamic influence diagram [Tatman and Shachter, 1990], Markov cycle tree [Hollenberg, 1984], or stochastic tree [Hazen, 1992], is based on a graphical modeling language for visualizing the relevant variables in a dynamic decision problem. In general, such a model consists of the following six components, the first five of which constitute a conventional decision model:

• A set of decision nodes listing the alternative actions that the decision maker can take;

• A set of chance nodes outlining the possible outcomes or happenings that the decision maker has no control over;

• A single or a set of value functions capturing the desirability of each outcome or action;

• A set of probabilistic dependencies depicting how the outcomes of each chance node depend on other outcomes or actions;

• A set of informational dependencies indicating the information available when the decision maker makes a decision; and

• An underlying stochastic process governing the evolution in time for the above five components.

In existing frameworks, the mathematical properties of a dynamic decision model are defined with respect to its graphical structures; solving such a model involves directly manipulating the graphical structures.

Recently, [Leong, 1993] has identified semi-Markov decision processes as a common theoretical basis of dynamic decision modeling. DYNAMOL attempts to integrate the graphical capabilities of the existing frameworks, and the concise properties and varied solutions of the mathematical formulations.

3 The DYNAMOL Design

The DYNAMOL language has three distinct but integrated components: a dynamic decision grammar, a graphical presentation convention, and a formal mathematical representation. The decision grammar allows specification of the decision factors and constraints. The presentation convention, in the tradition of graphical decision models, enables visualization of the decision factors and constraints. The mathematical representation provides a concise formulation of the decision problem; it admits various solution methods, depending on the different properties of the formal model.

3.1 Dynamic Decision Grammar

A dynamic decision model formulated in DYNAMOL has the following components: 1) the time-horizon, denoting the time-frame for the decision problem; 2) a set of value functions, denoting the evaluation criteria of the decision problem; 3) a set of states, denoting the possible conditions that would affect the value functions; 4) a set of actions, denoting the alternative choices at each state; 5) a set of events, denoting occurrences in the environment, conditional on the actions selected, that might affect the evolution of the states; 6) a set of probabilistic parameters, denoting the transition characteristics, conditional on the actions, among the states and events; 7) a set of declaratory constraints, such as the valid duration of particular states, actions, events, and probabilistic parameters; and 8) a set of strategic constraints, such as the number of times certain actions may be applied, the valid ordering of a subset of actions, and the time span between the applications of two successive actions.

The dynamic decision grammar for DYNAMOL is an abstract grammar. Following the convention in [Meyer, 1990], this grammar contains the following components:

• A finite set of names of constructs;

• A finite set of productions, each associated with a construct.

An example of a production that defines the construct "Model" is as follows:

    Model --> name: Identifier;
              contexts: Context-list;
              definitions: Definition-list;
              constraints: Constraint-list;
              solution: Optimal-policy

Each construct describes the structure of a set of objects, called the specimens of the construct. The construct is the (syntactic) type of its specimens. In the above example, an object with the type Model has five parts: name (with type Identifier), contexts (with type Context-list), etc. The constructs/types appearing on the right-hand side of the above definition are similarly defined by different productions. A set of primitive constructs/types is assumed, e.g., String, Cumulative Distribution Function, etc.

There are some fixed ways in which the structure of a construct can be specified. The above example shows an "aggregate" production, i.e., the construct has specimens comprising a fixed number of components. Other types of productions include:

"Choice" productions, e.g., the time duration in the decision horizon can be finite or infinite:

    Time-duration --> ℜ+ ∪ {0} | ∞

"List" productions, e.g., the state-space of the decision problem consists of one or more states:

    State-space --> State+
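The three kinds of productions can be mirrored in an ordinary programming language. The following is a minimal sketch in Python, not part of DYNAMOL itself: the class names `Model`, `StateSpace`, and `State`, the helper `make_time_duration`, and the choice of Python lists for the `-list` constructs are all illustrative assumptions. It only shows how an aggregate, a choice, and a list production constrain the shape of their specimens.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class State:
    """A primitive specimen, identified here only by its name (assumption)."""
    name: str


@dataclass
class StateSpace:
    """List production: State-space --> State+ (one or more states)."""
    states: List[State]

    def __post_init__(self):
        if len(self.states) < 1:
            raise ValueError("a state-space needs at least one state")


def make_time_duration(value):
    """Choice production: a finite non-negative duration, or infinity."""
    if math.isnan(value) or value < 0:
        raise ValueError("a time duration must be non-negative or infinite")
    return value


@dataclass
class Model:
    """Aggregate production: a fixed number of named, typed parts."""
    name: str
    contexts: list
    definitions: list
    constraints: list
    solution: Optional[object] = None  # filled in once the model is solved


# Hypothetical usage: a model whose definitions hold a two-state state-space.
model = Model(
    name="example",
    contexts=[],
    definitions=[StateSpace([State("state-1"), State("state-2")])],
    constraints=[],
)
horizon = make_time_duration(math.inf)
```

Keeping the structural checks inside the constructors corresponds to the role of the grammar: it states what information a well-formed model must contain, independently of how that information is entered.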

The DYNAMOL grammar defines the structure of a dynamic decision model in terms of its components; the structures of these components are recursively defined in a similar manner. The grammar specifies the information required to build a model. On the other hand, there can be many ways to manipulate such information. In other words, the abstract grammar can support different interface implementations. For example, an object of type State-space may be specified in 3 different ways:

• Text command interface: Type "state-space state-1 state-2 state-3" to the command prompt.

• Graphical interface: Draw three state-nodes labeled "state-1," "state-2," "state-3" in the display window.

• Routine or code interface: Type "(define-statespace 'state-1 'state-2 'state-3)" to the Common Lisp prompt.

The grammar defines the syntax of the language. The semantics of the language is defined by the correspondence from the constructs to the underlying mathematical representation of a semi-Markov decision process.

3.2 Graphical Presentation Convention

The graphical presentation convention in DYNAMOL prescribes how the decision factors and constraints expressible in the grammar are displayed. It is again defined in terms of the correspondence from the grammar and graphical constructs to the underlying mathematical representation of the model. Following the convention in decision modeling, for instance, an action-variable is denoted by a rectangle, an event-variable by an oval, etc. In DYNAMOL, however, both declaratory and strategic constraints are also visualizable. The model can be presented in part or in whole as desired.

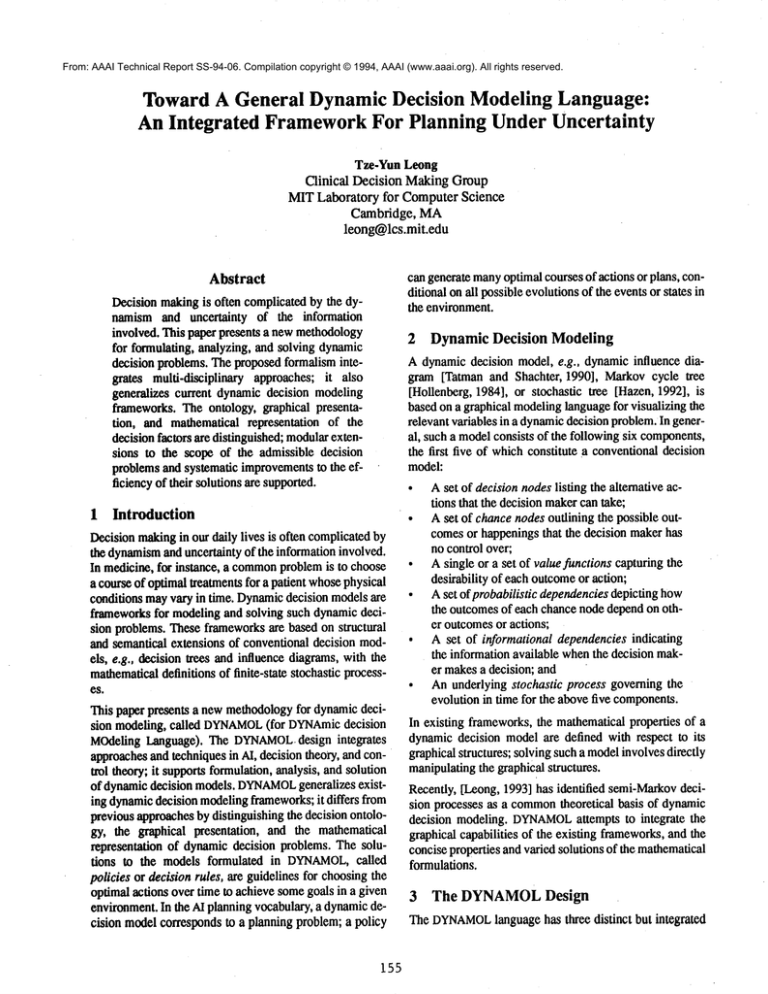

Moreover, all decision components can be presented in different perspectives. Figure 1, shown later in this paper, depicts two different perspectives of a simple dynamic decision model for a medical example.

3.3 Semi-Markov Decision Process

A dynamic decision model specified in the decision grammar in DYNAMOL is automatically compiled or interpreted into a semi-Markov decision process. The model specification may be more general than the semi-Markov decision process definition would allow. For instance, the events and their corresponding conditional probabilities are compiled into the transition probabilities in the state space of the semi-Markov process.
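One plausible reading of this compilation step is marginalization: for a fixed state and action, an event is summed out so that only a distribution over next states remains. The sketch below assumes that reading. The function name `compile_transition`, the dictionary layout, and all numbers are hypothetical and are not taken from the DYNAMOL implementation.

```python
def compile_transition(event_dist, next_given_event):
    """Sum one event out of a transition distribution.

    event_dist:       {outcome: P(outcome)} for a fixed state and action
    next_given_event: {outcome: {next_state: P(next_state | outcome)}}
    Returns {next_state: P(next_state)} for that state and action.
    """
    row = {}
    for outcome, p_outcome in event_dist.items():
        for next_state, p_next in next_given_event[outcome].items():
            row[next_state] = row.get(next_state, 0.0) + p_outcome * p_next
    return row


# Hypothetical numbers: a binary event "stress" influencing a move to "MI".
stress = {True: 0.3, False: 0.7}
next_state = {
    True: {"Well": 0.8, "MI": 0.2},
    False: {"Well": 0.95, "MI": 0.05},
}
row = compile_transition(stress, next_state)
# row now holds one row of the compiled transition matrix for state "Well".
```

The compiled row sums to one whenever the event distribution and each conditional distribution do, so the result can be used directly as a row of the transition probabilities defined below.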

Formally, a semi-Markov decision process is characterized by the following components [Howard, 1971] [Heyman and Sobel, 1984]:

• A time index set $T$;

• A decision or control process denoted by a set of random variables $\{D(t); t \in T\}$, where $D(t) \in A = \{1, 2, \ldots, k\}$ is the decision made at time $t$; and

• A semi-Markov reward process denoted by a set of random variables $\{S(t); t \in T\}$, where $S(t) \in S = \{0, 1, 2, \ldots\}$ is the state of the process at time $t$, with:

1. an embedded Markov chain denoted by a set of random variables $\{S_m; m \ge 0\}$, such that $S_m = S(T_m)$, where $T_1 < T_2 < T_3 < \ldots$ are the random variables denoting the successive epochs (i.e., instants of time) at which the process makes transitions;

2. $k$ sets of transition probabilities $\{P_{ij}^{(a)}; i \ge 0, j \ge 0, 1 \le a \le k\}$ among the states of the embedded chain, such that for any given decision $a \in A$, and states $i, j \in S$:

$$P_{ij}^{(a)} = P\{S_{m+1} = j \mid S_m = i, D_m = a\} = P\{S(T_{m+1}) = j \mid S(T_m) = i, D(T_m) = a\}$$

which also satisfies the Markovian property:

$$P_{ij}^{(a)} = P\{S_{m+1} = j \mid S_m = i, D_m = a\} = P\{S_{m+1} = j \mid S_m = i, S_{m-1} = h, \ldots, D_m = a\}$$

3. $k$ sets of holding times $\{\tau_{ij}^{(a)}; i \ge 0, j \ge 0, 1 \le a \le k\}$ among the states of the embedded chain, which are random numbers with corresponding distributions $\{H_{ij}^{(a)}(n); i \ge 0, j \ge 0, 1 \le a \le k\}$, such that for any given decision $a \in A$, and states $i, j \in S$:

$$H_{ij}^{(a)}(n) = P\{T_{m+1} - T_m \le n \mid S_m = i, S_{m+1} = j, D_m = a\}$$

4. $k$ sets of rewards or yields $\{r_i^{(a)}(l); i \ge 0, 1 \le a \le k\}$ associated with the states of the embedded chain, such that for any given decision $a \in A$, $r_i^{(a)}(l)$ is the value achievable in state $i \in S$ of the chain over time interval $(l, l+1)$.
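The holding-time distributions in item 3 are cumulative, whereas optimality equations for semi-Markov decision processes are usually written with the corresponding probability mass function. As a short worked note, assuming discrete time, integer holding times $m \ge 1$, and reading $H_{ij}^{(a)}(n)$ as the probability that the holding time is at most $n$, the mass function (written $h_{ij}^{(a)}$ here) follows by differencing:

```latex
h_{ij}^{(a)}(m) \;=\; H_{ij}^{(a)}(m) - H_{ij}^{(a)}(m-1),
\qquad m \ge 1, \qquad H_{ij}^{(a)}(0) = 0 ,
\qquad\text{so that}\qquad
H_{ij}^{(a)}(n) \;=\; \sum_{m=1}^{n} h_{ij}^{(a)}(m).
```

For a unit step distribution that jumps from 0 to 1 at $n = 1$, this gives $h_{ij}^{(a)}(1) = 1$ and $h_{ij}^{(a)}(m) = 0$ for $m > 1$; every transition then takes exactly one time unit and the process reduces to an ordinary Markov decision process.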

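3.4 Solution Methods

A dynamic decision problem can be expressed as the dynamic programming equation, or Bellman optimality equation, of a semi-Markov decision process. For a decision horizon of duration $n$ time units, with a discount factor $\beta$, the optimal value achievable in any state $i$, $V_i^*(n, \beta)$, given an initial value $V_i(0)$, is [Howard, 1971]:

$$V_i^*(n, \beta) = \max_a \left[ \sum_j P_{ij}^{(a)} \sum_{m=n+1}^{\infty} h_{ij}^{(a)}(m) \left[ \sum_{l=0}^{n-1} \beta^l r_i^{(a)}(l) + \beta^n V_i(0) \right] + \sum_j P_{ij}^{(a)} \sum_{m=1}^{n} h_{ij}^{(a)}(m) \left[ \sum_{l=0}^{m-1} \beta^l r_i^{(a)}(l) + \beta^m V_j^*(n-m, \beta) \right] \right];$$

$$n \ge 0; \quad i, j \ge 0; \quad 1 \le a \le k \qquad \text{(EQ 1)}$$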

The first addend in EQ 1 indicates the expected value achievable if the next transition out of state $i$ occurs after time duration $n$, and the second addend indicates the expected value achievable if the next transition occurs before that time duration. This formulation assumes the same value-function or reward structure $r_i^{(a)}(\cdot)$ in each state $i$ conditional on an action $a$, independent of the next state $j$.

The most direct solution method, called value iteration, is to solve the optimality equation shown in EQ 1. The solution to such an equation is an optimal policy, i.e., a sequence of decisions over time (which could be one single decision repeated indefinitely) that maximizes $V_{start}^*(N)$, the optimal expected value or reward for starting state $start$, at time $t = 0$, or for decision horizon $N$, where $n = N - t$ is the remaining duration for the decision problem.
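Value iteration over a finite horizon can be sketched as a direct recursion on the remaining duration. The Python sketch below is one reading of the two addends described above, under stated assumptions: time is discrete, holding times are integers $m \ge 1$ supplied as probability mass functions, and a process that has not left state $i$ when the horizon ends collects the initial value of state $i$. The function name `value_iteration`, its argument layout, and the two-state numbers are hypothetical.

```python
from math import inf


def value_iteration(P, h, r, V0, beta, N):
    """Finite-horizon value iteration for a discrete-time semi-Markov decision process.

    P[a][i][j] : probability of moving from state i to state j under action a
    h[a][i][j] : dict mapping a holding time m >= 1 to its probability
    r[a][i]    : function l -> reward earned in state i over the interval (l, l+1)
    V0[i]      : initial value of state i
    beta       : discount factor
    N          : decision horizon
    Returns (V, policy): V[n][i] is the optimal value with n time units remaining,
    policy[n][i] the maximizing action (None for n = 0).
    """
    n_actions, n_states = len(P), len(V0)
    V = [list(V0)]
    policy = [[None] * n_states]
    for n in range(1, N + 1):
        V_n, pi_n = [], []
        for i in range(n_states):
            best_value, best_action = -inf, None
            for a in range(n_actions):
                # acc[m] = discounted reward accumulated in state i over m time units
                acc = [0.0]
                for l in range(n):
                    acc.append(acc[-1] + beta ** l * r[a][i](l))
                total = 0.0
                for j in range(n_states):
                    p_within = 0.0  # probability that the holding time is <= n
                    within = 0.0    # second addend: transition occurs by time n
                    for m, p_m in h[a][i][j].items():
                        if m <= n:
                            p_within += p_m
                            within += p_m * (acc[m] + beta ** m * V[n - m][j])
                    # first addend: no transition before the horizon ends
                    beyond = (1.0 - p_within) * (acc[n] + beta ** n * V0[i])
                    total += P[a][i][j] * (beyond + within)
                if total > best_value:
                    best_value, best_action = total, a
            V_n.append(best_value)
            pi_n.append(best_action)
        V.append(V_n)
        policy.append(pi_n)
    return V, policy


# Hypothetical two-state, two-action example (state 0 = alive, state 1 = dead),
# with every holding time equal to one time unit.
unit = {1: 1.0}
P = [[[0.8, 0.2], [0.0, 1.0]], [[0.9, 0.1], [0.0, 1.0]]]
h = [[[unit, unit], [unit, unit]] for _ in range(2)]
r = [[lambda l: 1.0, lambda l: 0.0], [lambda l: 0.95, lambda l: 0.0]]
V, policy = value_iteration(P, h, r, V0=[0.0, 0.0], beta=1.0, N=2)
```

In this toy example the preferred action in state 0 changes with the remaining duration: with one time unit left the higher immediate reward wins, while with two units left the action with the better survival probability is chosen. This illustrates why a policy is a sequence of decisions over time rather than a single choice.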

For infinite horizon problems, EQ 1 simplifies into:

$$V_i^*(\beta) = \max_a \left[ \sum_j P_{ij}^{(a)} \sum_{m=1}^{\infty} h_{ij}^{(a)}(m) \left[ \sum_{l=0}^{m-1} \beta^l r_i^{(a)}(l) + \beta^m V_j^*(\beta) \right] \right]; \quad i, j \ge 0; \quad 1 \le a \le k \qquad \text{(EQ 2)}$$

Most solution techniques for existing dynamic decision modeling frameworks are based on the value-iteration method. [Shachter and Peot, 1992] has also employed probabilistic inference techniques for solving dynamic influence diagrams. DYNAMOL, however, would admit other more efficient solution methods for semi-Markov decision processes. For instance, policy iteration, adaptive aggregation, or linear programming may be applicable in the DYNAMOL framework if certain assumptions or conditions are met. These conditions include stationary policies, constant discount factors, homogeneous state-space, etc.

By separating the modeling (with the decision grammar) and the solution (with the mathematical representation) tasks, therefore, different solution techniques can be employed. Moreover, employing a new solution technique does not involve any change to the language itself; all solution techniques reference only the mathematical representation of a model.

4 Supporting Language Extension

The initial version of DYNAMOL contains only a basic set of language constructs; many of the constraints mentioned in Section 3.1 are not included in the initial design. DYNAMOL supports modular extensions to the language constructs. This section sketches the approaches to addressing some of these issues; some of these possible extensions have already been incorporated into the current version of DYNAMOL.

4.1 Static vs. Dynamic Spaces

Only static state-space and static action-space are currently allowed in the DYNAMOL decision grammar. Static state-space indicates that the same states are valid throughout the decision horizon; static action-space indicates that the same set of actions is applicable in each state, over time. Theoretically, we can model changes in the state-space or action-space by providing some "dummy" states and "no-op" actions. In practice, however, such extraneous entities may compromise model clarity and solution efficiency.

The DYNAMOL decision grammar can be easily extended to incorporate dynamic state-space and action-space. New productions for the corresponding constructs can be written to incorporate the valid time or duration for each state and action. The graphical presentation rules can remain mostly unchanged; only the valid entities are displayed for particular time instances or durations.

These new constructs directly correspond to a general class of semi-Markov decision processes with dynamic state-space and action-space. The value-iteration solution methods as described in EQ 1 and EQ 2 remain applicable to these problems.

4.2 Non-Homogeneous Transition Probabilities

In many real-life examples, the transition probability may be time-dependent. For example, the morbidity rate of a patient may depend on his age; a 70 year-old person is more likely to have a heart attack than a 40 year-old one. Such time-dependent or non-homogeneous transition probabilities have been incorporated into DYNAMOL. Again, extending the decision grammar and graphical presentation is straightforward. Extending the underlying mathematical representation leads to redefining the transition probability in Section 3.3 as a function of time. The value-iteration method in EQ 1 has to be modified accordingly.

4.3 Limited Memory

Transition probabilities govern the destinations of transitions. As compared to a Markov process, a semi-Markov process supports a second dimension of uncertainty: duration in a state. A semi-Markov process is only "semi-" Markov because there is a limited memory about the time since entry into any particular state. In some cases, limited memory about previous states or actions is important in a dynamic decision problem. For example, having had a heart attack before would render having a second heart attack more likely during surgery. Such limited memory can be incorporated into a semi-Markov or Markov process by techniques such as state-augmentation.

In DYNAMOL, a new set of syntactic constructs can be introduced to specify such limited memory. A new set of correspondence rules will then be incorporated to perform automatic state-augmentation and calculate the corresponding probabilistic parameters. We do not yet know if this process can be fully automated. The resulting mathematical representation, however, should be a well-formed semi-Markov decision process; the solution methods will remain applicable.

4.4 Strategic Constraints

Strategic constraints are closely related to the limited memory capabilities. Some examples of these constraints are: action-number constraints, where one or more actions cannot be applied for more than a specific number of times; and action-order constraints, where one action must always or never follow another action. There are two methods to incorporate such constraints into a dynamic decision model. The first method is by augmenting the state-space to keep track of the number and/or order of the actions applied; this would usually result in a very complex state-space, compromising the clarity of the model. The second method is to let the solution module worry about keeping track of the constraints.

In either case, new constructs can be introduced in DYNAMOL to express the constraints.
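State augmentation for an action-number constraint can be sketched as pairing every state with a counter of how often the constrained action has been applied. The Python sketch below is illustrative only: the function names are hypothetical, and the state and action labels are borrowed from the medical example in Section 6. It shows both the bookkeeping involved and how quickly the state-space grows.

```python
from itertools import product


def augment_states(states, max_uses):
    """Pair each state with the number of times the limited action was used."""
    return [(s, used) for s, used in product(states, range(max_uses + 1))]


def admissible_actions(aug_state, actions, limited_action, max_uses):
    """The limited action is only available while its counter is below the cap."""
    _, used = aug_state
    return [a for a in actions if a != limited_action or used < max_uses]


def next_aug_state(aug_state, action, next_state, limited_action):
    """Advance the counter whenever the limited action is applied."""
    _, used = aug_state
    return (next_state, used + (1 if action == limited_action else 0))


# Labels taken from the example in Section 6; the cap of 3 mirrors an
# action-number constraint on CABG.
states = ["Well", "Restenosis", "MI", "MI+Restenosis", "Dead"]
actions = ["MedRx", "PTCA", "CABG"]
augmented = augment_states(states, max_uses=3)
allowed = admissible_actions(("Well", 3), actions, "CABG", max_uses=3)
```

Here five states become twenty augmented states for a single constraint; several constraints multiply this further, which is the loss of clarity noted above and the motivation for the alternative of letting the solution module track the constraints.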

If the state-augmentation method is adopted, a new set of correspondence rules needs to be added as described earlier. If the solution method is responsible for the constraints, these factors only need to be accessible.

5 A Prototype Implementation

A prototype implementation of DYNAMOL is currently underway. The system is implemented in Lucid Common Lisp on a Sun SPARCstation, with the GARNET graphics package. It includes a graphical user interface that allows interactive model specification. The specification can be strictly text-based, or aided by the graphical presentations of the available decision variables or constraints. Only a subset of the dynamic decision grammar is included in the first version of this implementation, and the solution method supported is value iteration. The future agenda for the project includes improving the implementation, extending and refining the dynamic decision grammar, identifying meta-level dynamic decision problem types, searching for more effective solution methods, and investigating relevant issues in supporting automated generation of dynamic decision models. The current domain for examining all these issues is medical decision making in general.

6 An Example

The problem is to determine the relative efficacies of different treatments for chronic stable angina (chest pain), the major manifestation of chronic ischemic heart disease (CIHD). The alternatives considered are medical treatments, percutaneous transluminal coronary angioplasty (PTCA), and coronary artery bypass graft (CABG). The manifestations of CIHD are progressive; if the angina worsens after a treatment, for instance, subsequent actions will be considered. Even after successful treatment, restenosis, i.e., renewed occlusion of the coronary arteries, may occur. Hence the decisions must be made in sequence. The treatment efficacies in lowering mortality decline as time progresses; the treatment complications worsen as time progresses. A major complication for PTCA is perioperative myocardial infarction (MI), or heart attack, which would render emergency CABG necessary. The effectiveness of the different treatments is evaluated with respect to quality-adjusted life expectancy (QALE).

In this problem, assume that the states S = {"Well", "Restenosis", "MI", "MI+Restenosis", "Dead"} represent the possible physical conditions or health outcomes of a patient, given any particular treatment a ∈ A = {MedRx, PTCA, CABG}. For ease of exposition, assume that each state s ∈ S is a function of a set of binary state attribute or health outcome variables Θ = {Status, MI, Restenosis}, e.g., "Well" := (Status = alive, MI = absent, Restenosis = absent), "MI" := (Status = alive, MI = present, Restenosis = absent), etc.

A partial model specification of the example problem in the dynamic decision grammar is as follows†:

    ($time-horizon :nature discrete :duration 60 :unit month)
    ($action medical-treatment :duration all)
    ($state well :duration all)
    ($state restenosis :duration all)
    ...
    ($transition :from well :to dead :transition-probability 0.1 :duration 30)
    ($transition :from well :to dead :transition-probability 0.2 :duration (31 60))
    ($transition :from well :to restenosis)
    ($event high-fat-diet :outcomes (true false) :pred well :succ restenosis :cond-probability <distribution>)
    ($event stress :outcomes (true false) :pred well :succ
    ($influence :from stress :to MI :cond-probability <distribution> :duration all)
    ($value-function <function> :state all)
    ($discount-factor 0.8 :duration all)
    ($action-number-constraint CABG :number 3)

†. These are approximations to the graphical interface commands; the plain text command interface is not complete.

Figure 1 shows two graphical perspectives of some of the decision factors in this model.

In terms of the mathematical representation, the set of actions is A = {MedRx, PTCA, CABG}. The semi-Markov, or in this case, Markov reward process, with time index set $T \subseteq \{0, 1, 2, \ldots\}$, is defined by: 1) the embedded Markov chain with state-space S = {"Well", "Restenosis", "MI", "MI+Restenosis", "Dead"} as illustrated in Figure 1(a); 2) three sets of transition probabilities among the states in S, corresponding to the actions in A; 3) constant holding times with distributions $H_{ij}^{(a)}(n) = 1(n - 1)$, where $1(n - 1)$ is a step function at time $n = 1$ (in any unit); and 4) three sets of rewards, corresponding to the amount of QALE achievable per unit time in each state in S, with respect to the actions in A.

7 Related Work

This work is based on and extends existing dynamic decision modeling frameworks such as dynamic influence diagrams, Markov cycle trees, and stochastic trees. It also integrates many ideas in control theory for the mathematical representation and solution of semi-Markov decision

processes. The notion of abstraction that lies in the core of the methodology design is a fundamental concept in Computer Science, and perhaps many other disciplines.

[Egar and Musen, 1993] has examined the grammar approach to specifying decision model variables and constraints. This grammar is based on the graphical structure of the decision model, and has not been extended to handle dynamic decision models. [Dean et al., 1993a], [Dean et al., 1993b], and other related efforts have devised methods based on Markov decision processes for planning in stochastic domains; they apply the mathematical formulation directly, and do not address the ontology of general dynamic decision problems. Much can be learned from this line of work, however, both in navigating through large state spaces for finding optimal policies, and in identifying meta-level problem types for devising solution strategies.

The graphical representation of semi-Markov processes has been explored in [Berzuini et al., 1989] and [Dean et al., 1992]; they focus only on the single-perspective presentation of relevant decision variables in a belief network or influence diagram formalism.

[Figure 1: two panels, (a) and (b); the display settings shown are ENTITIES-LIFESPAN = All and RELATIONS-LIFESPAN = All.]

Figure 1. Two graphical perspectives of a dynamic decision model. Figure 1(a) depicts the Markov state transition diagram. The links represent possible transitions from one state to another, given any action; a transition-function is usually associated with each link. Figure 1(b) depicts probabilistic and informational dependencies, in the convention of influence diagrams, among the decision factors at time unit n.

8 Discussion

Model specification in DYNAMOL is expressed in a higher level language than that in existing dynamic decision modeling frameworks. In frameworks such as dynamic influence diagrams or Markov cycle trees, most parameters of the model need to be explicitly specified in detail. In particular, the declaratory and strategic constraints are explicitly incorporated into the graphical structure of the model; the probabilistic and temporal parameters are explicitly encoded for each time slice or period considered. The dynamic decision grammar in DYNAMOL, on the other hand, supports abstract statements about the decision situation, e.g., statements about the validity duration of particular states, statements about the ordering constraints on different subsets of actions, etc. These abstract statements are analogous to the macro constructs in conventional programming languages. By focusing on the decision problem ontology instead of the decision model components, DYNAMOL would provide a more concise and yet more expressive platform for supporting model construction.

The advantages of the graphical nature of existing dynamic decision modeling languages are preserved and extended in DYNAMOL. The graphical presentation convention renders every model component and constraint examinable graphically. The different perspectives in the presentation would further contribute to the visualization ease and clarity.

Theoretically, semi-Markov decision processes can approximate most stochastic processes by state augmentation or other mechanisms. The resulting state-space, however, may be too complex for direct manipulation or visualization. On the other hand, efficient solution methods may not exist for more general stochastic models. By distinguishing the specification grammar and the underlying mathematical

model, DYNAMOL aims to preserve the clarity and expressiveness of the model structure, while minimizing the loss of information. This would contribute toward the ease and effectiveness of model analysis.

Acknowledgments

I would like to thank Peter Szolovits for advising this project, and Mike Wellman and Steve Pauker for many helpful discussions. This research was supported by the National Institutes of Health Grant No. R01 LM04493 from the National Library of Medicine, and by the USAF Rome Laboratory and DARPA under contract F30602-91-C-0018.

References

Berzuini, C., Bellazzi, R., and Quaglini, S. (1989). Temporal reasoning with probabilities. In Proceedings of the Fifth Workshop on Uncertainty in Artificial Intelligence, pages 14-21. Association for Uncertainty in Artificial Intelligence.

Dean, T., Kaelbling, L. P., Kirman, J., and Nicholson, A. (1993a). Deliberation scheduling for time-critical sequential decision making. In Heckerman, D. and Mamdani, A., editors, Uncertainty in Artificial Intelligence: Proceedings of the Ninth Conference, pages 309-316, San Mateo, CA. Morgan Kaufmann.

Dean, T., Kaelbling, L. P., Kirman, J., and Nicholson, A. (1993b). Planning with deadlines in stochastic domains. In Proceedings of the Eleventh National Conference on Artificial Intelligence, pages 574-579.

Dean, T., Kirman, J., and Kanazawa, K. (1992). Probabilistic network representations of continuous-time stochastic processes for applications in planning and control. In Proceedings of the First International Conference on AI Planning Systems.

Egar, J. W. and Musen, M. A. (1993). Graph-grammar assistance for automated generation of influence diagrams. In Heckerman, D. and Mamdani, A., editors, Uncertainty in Artificial Intelligence: Proceedings of the Ninth Conference, pages 235-242, San Mateo, CA. Morgan Kaufmann.

Hazen, G. B. (1992). Stochastic trees: A new technique for temporal medical decision modeling. Medical Decision Making, 12:163-178.

Heyman, D. P. and Sobel, M. J. (1984). Stochastic Models in Operations Research: Stochastic Optimization, volume 2. McGraw-Hill.

Hollenberg, J. P. (1984). Markov cycle trees: A new representation for complex Markov processes. Medical Decision Making, 4(4). Abstract from the Sixth Annual Meeting of the Society for Medical Decision Making.

Howard, R. A. (1971). Dynamic Probabilistic Systems, volume 1 & 2. John Wiley and Sons, New York.

Leong, T.-Y. (1993). Dynamic decision modeling in medicine: A critique of existing formalisms. In Proceedings of the Seventeenth Annual Symposium on Computer Applications in Medical Care, pages 478-484. IEEE.

Meyer, B. (1990). Introduction to the Theory of Programming Languages. Prentice Hall.

Shachter, R. D. and Peot, M. A. (1992). Decision making using probabilistic inference methods. In Dubois, D., Wellman, M. P., D'Ambrosio, B. D., and Smets, P., editors, Uncertainty in Artificial Intelligence: Proceedings of the Eighth Conference, pages 276-283. Morgan Kaufmann.

Tatman, J. A. and Shachter, R. D. (1990). Dynamic programming and influence diagrams. IEEE Transactions on Systems, Man, and Cybernetics, 20(2):365-379.