From: AAAI Technical Report SS-94-02. Compilation copyright © 1994, AAAI (www.aaai.org). All rights reserved.

Deciding Whenand Howto Learn

Jonathan Gratcha, Gerald DeJonga, b

and Steve A. Chien

aBeckman

Institute

Universityof Illinois

405 N. MathewsAv., Urbana,IL 61801

{gratch, dejong}@cs.uiuc.edu

bJet PropulsionLaboratory,

CaliforniaInstitute of Technology,

4800 Oak GroveDrive, M/S525-3660

Pasadena, CA91109-8099

sources1 andmayforce an agentto redirect its behaviortemporarilyawayfromsatisfyingits goalsandtowardsexploratory behavior.Thus,morerecentworkhas begunto considerthe

problemof developingtechniquesthat can makea reasoned

compromise

betweenlearning andacting, andmoregenerally,

to considerthe relationshipbetweenlearningandthe overall

goals andresourceconstraints of a rational agent[des Jardins92, Gratch93b,Provost92].

Abstract

Learning

is an importantaspectof intelligent behavior.

Unfortunately,learningrarely comesfor free. Techniques developedby machinelearning can improve

the abilities of an agentbut theyoftenentail considerable computational

expense.Furthermore,there is an

inherenttradeoff betweenthe powerandefficiencyof

learning. Morepowerfullearning approachesrequire

greater computational

resources. This poses a dilemmatoa learningagentthat mustact in the worldunder

a variety of resourceconstraints. This paperinvestigates the issues involvedin constructinga rational

learning agent. Drawingon workin decision-theory

wedescribea framework

for a rational agentthat embodieslearningactionsthatcanmodify

its ownbehavior. Theagentmustpossesdeliberativecapabilitiesto

assessthe relativemeritsof theseactionsin the larger

context of its overall behaviorand resource constraints. Wethen sketchseveral algorithmsthat have

beendevelopedwithin this framework.

1.

INTRODUCTION

Aprincipalcomponent

of intelligent behavioris an ability to

learn. In this article weinvestigatehowmachine

learningapproachesrelate to the designof a rationallearning

agent.This

is an entitythat mustact in the worldin the serviceof goalsand

inetudesamong

its actionsthe ability to changeits behavior

bylearningaboutthe world.Thereis a longtraditionof studying goaldirectedactionundervariableresourceconstraintsin

economics[Good71

] andthe decision sciences [Howard70],

andthis formsthe foundations

for artificial intelligenceapproaches to resonrce-bounded computational agents

[Doyle90,Horvitz89, Russell89]. However,these AI approacheshavenot addressedmanyof the issues involvedin

constructinga learningagent. Whenlearning has beenconsidered, it has involvedsimple,unobtrusiveprocedureslike

passivelygatheringstatistics [Wefald89]

or off-line analysis,

wherethe cost of learningis safely discounted[Horvitz89].

Manymachinelearning approachesrequire extensive re36

Decidingwhenandhowto learn is madedifficult bythe realization that there is no "universal"learning approach.Machine learning approachesstrike somebalance betweentwo

conflicting objectives. First is the goal to create powerful

learningapproachesto facilitate large performance

improvements.Secondis the needto restrict approaches

for pragmatic

considerationssuchas efficiency. Learningalgorithmsimplementsomebias whichembodiesa particular compromise

betweenthese objectives. Unformately,in whatis called the

static bias problem,

thereis nosinglebias that appliesequally

well acrossall domains,tasks, andsituations [Provost92].As

a consequence

there is a bewilderingarray of learning approacheswith differing tradeoffs betweenpowerand pragmarieconsiderations.

Thistradeoff posesa dilemma

to anyagentthat mustlearn in

complexenvironmentsunder a variety of resource constraints. Practical learningsituations place varyingdemands

on learning techniques. Onecase mayallow several CPU

monthsto devoteto learning; anotherrequires an immediate

answer.For onecasetraining examples

are cheap;in another,

expensiveproceduresate required to obtain data. Anagent

wishing to use a machinelearning approachmust decide

whichof manycompetingapproachesto apply, if any, given

its currentcircumstances.

This evaluationis complicated

because techniques do not provide feedbackon howa given

tradeoffplays out in specific domains.Thissameissue arises

t. GeraldTesauronoted that his reinforcementlearning

approachto learning strategies in backgammon

required a

monthof learning time on an IBMRS/6000[Tesanro92].

Environment

~ Agent-<~

I

ala2

t

Leamer-~

Environment

Learning ,,,,~

Agent

~\

al

a2.

learning ~

actions

11 12

Ii

12

Figurela" Standarddecision-theoreticview.

l..eamingis consideredexternalto the agent.

Figurelb: Alearning agent. Learningis

underthe direct controlof the agent.

in a number

of different learningproblems.

In statistical modeling the issue appearsin howto choosea modelthat balances

the tradeoffbetween

the fit to the data andthe number

of examplesrequiredto reacha givenlevel of predictiveaccuracy

[AI&STAT93].

In neural networksthe issue arises in the

choice of networkarchitecture (e.g. the numberof hidden

units). Thetradeoffisatthe coreof the field of decisionanalysis whereformalismshavebeendevelopedto determinethe

value of experimentation

undera variety of conditions[Howard70].

for a speed-uplearningsystemor a spaceof possibleconcepts

for a classification learningsystem.Eachof the transformations impartssomebehaviorto the computational

agenton the

tasks it performs.Thequality of a behavioris measured

by a

utility functionthat indicateshowwellthe behaviorperforms

ona giventask. Theexpectedutility of a behavioris the averageutility overthefixed

distributionof tasks. Ideally, aleaming systemshouldchoosethe transformationthat maximizes

the expectedutility of the agent. Tomakethis decision, the

learning systemmayrequire manyexamplesfromthe environment

to estimateempiricallythe expectedutility of different transformations.

Thisarticle

outlines

a decision-theoretic

formalism

with

which

tovicw

theproblem

offlexibly

biasing

learning

behavior.A learning

algorithm

isassumed

tohave

somechoice

in

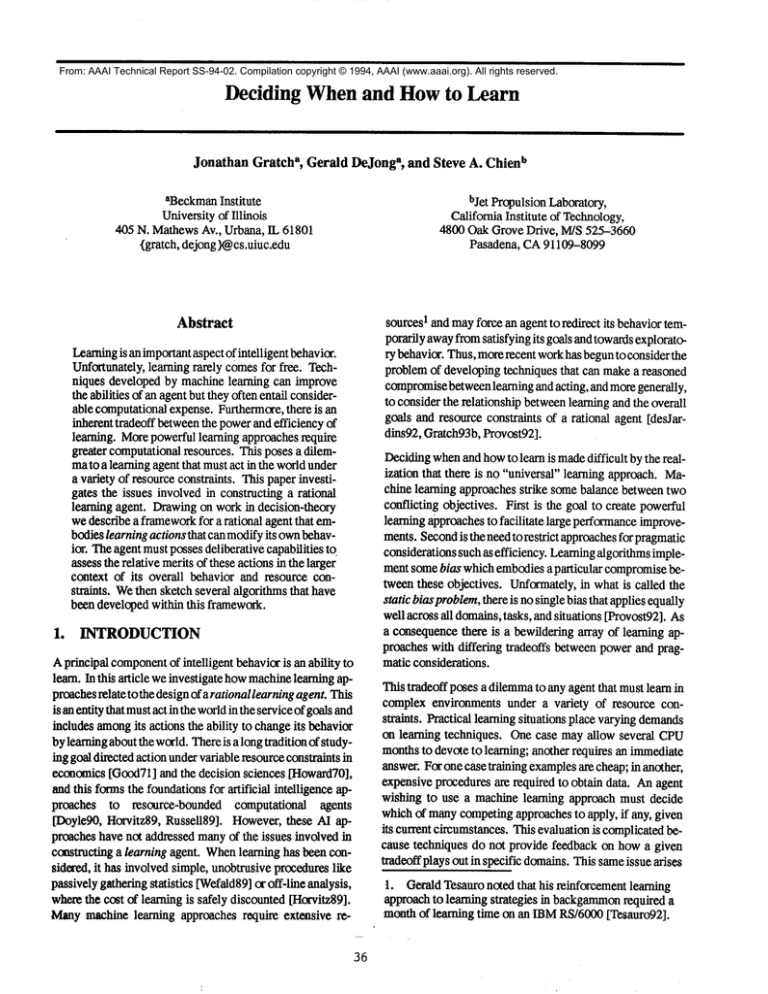

1.1 Learning an Agent vs. a Learning Agent

howitcanproceed

andbehavior

ina given

situation

isdeterFigurela illustrates this viewof learningsystems.Thereis a

mined

bya rational

cost-benefit

analysis

ofthese

alternative

computational

agentthat mustinteract with an environment.

"learning

actions."

A chief

complication

inthis

approach

is

The

agent

exhibits

somebehaviorthat is characterizedby the

assessing

theconsequences

ofdifferent

actions

inthecontext

expected

utility

of

its

actionswithrespectto the particularenofthecurrent

situation.

Often

these

consequences

cannot

be

vironment.

For

example

the agentmaybe an automatedplaninferred

simply

onthebasis

ofpriorinformation.

Thesolution

ning

system,

the

environment

a distributionof planningprobweoutline

inthis

article

uses

theconcept

ofadaptive

bias.

In

this

approach

a learning

agent

uses

intermediate

informationlems, and the behaviorcharacterized by the averagetime

acquired

inearlier

stages

oflearning

toguide

later

behavior.required to producea plan. A learning algorithmdecides,

froma spaceof transformations,howbest to changethe beWeinstantiate these notionsin the area of speed-uplearning

haviorof the agent. This viewclarifies the purposeof learnthroughthe implementation

of a "speed-uplearning agent."

ing: the learningalgorithmshouldadopta transformation

that

Theapproachautomaticallyassessesthe relative benefitsand

maximizes

the

expected

utility

of

the

agent’s

behavior.

This

costs of learningto improvea problem

solverandflexibly adviewdoes not, however,say anythingabout howto balance

justs its tradeoffdepending

on the resourceconstraintsplaced

pragmaticconsiderations.Theutility of the agentis defined

uponit.

withoutregardto the learningcost requiredto achievethat lev2. RATIONALITY IN LEARNING

el of performance.

Pragmaticissues are addressedby

an external entity throughthe choiceof a learningalgorithm.

Decisiontheoryprovidesa formalframework

for rational deFigurelb illustrates the viewweexplorein this article. Again

cision makingunderuncertainty(see [Berger80]).Recently,

therehas beena trend to providea decision-theoretic

interprethere is a computational

agentthat mustinteract withan envitation for learning algorithms[Brand91,Gratch92,Greinronment.Againthe agentexhibits behaviorthat is characterer92, Subramanian92].

The"goal" of a learning systemis to

izedbythe expected

utility of its actions.Thedifference

is that

improvethe capabilities of somecomputational

agent(e.g.

the decisionof whatandhowto learn is undercontrol of the

planneror classifier). Thedecision-theoreticperspective

agent, and consequently,pragmaticconsiderationsmustalso

viewsa learningsystemas a decisionmaker.Thelearningsysbe assessedby the agent, and cannotbe handedover to some

temmustdecidehowto improvethe computational

agentwith

externalentity. In additionto normalactions,it can perform

respecttoafuted,but possiblyunknown,

distributionof tasks.

learningactionsthat changeits behaviortowardsthe environTiaelearuingsystemregresentsa spaceof possibletransform~nt.A learning action mightinvolve running a machine

mat/onsto the agent:a spaceof alteraativecontrolstrategies

learningprogramas a black box, or it mightinvolvedirect

37

control over low-levellearningoperations- therebygiving

the agentmoreflexibility over the learningprocess.Theexpenseof learningmaywellnegativelyimpactexpectedutility.

Therefore,the learningagentmustbe reflective [Horvitz89].

This meansit mustpossessthe meanstoreasonaboutboth the

benefitsandcosts of possiblelearningactions.Asan example,

such a learning agentcould be a automatedplanningsystem

withlearningcapabilities.Aftersolvingeachplanthis system

has the opportunityto expendresourcesanalyzingits behavior

for possible improvements.

Thedecision of whatand howto

learn mustinvolve considerationofhowtheimprovement

and

the computational

expenseof learningimpactthe averageutility exhibitedbythe agent.

Notethat the senseof utility differs in the twoviews.In the

first case,utility is definedindependent

of learningcost. In the

secondease,utility mustexplicitlyencodethe relationshipbetweenthe potential benefitsandcosts of learningactions.For

example,the averagetime to producea plan maybe a reasonable wayto characterizethe behaviorof a non-learningagent

butit doesnotsufficeto characterize

the behaviorof a learning

agent. Thereis never infinite time to amortizethe cost of

learning.Anagentoperatesunderfinite resourcesandlearning actions are only reasonableif the improvement

theyprovide outweighs

the investmentin learningcost, giventhe resourceconstraintsof the presentsituation. Thesetwosenses

of utility are discussedin the rational decisionmaking

literature. Following

the terminology

of Goodwerefer to a utility

functionthat doesnot reflectthe costof learningas TypeI utility function[Good71

]. Autility functionthat doesreflect

learningcost is a TypeII utility function.TheTypeI vs. Type

II distinctionis alsoreferredto as substantivevs. procedural

utility [Simon76]

or object-level vs. comprehensive

utility

[Horvitz89].Weare interested in agentsthat assess TypelI

utility.

1.2 Examples of Type II Utility

natural goal is to evaluatethe behaviorof the learningagent

by howmanyproblemsit can solve withinthe resourcelimit.

Alearningactionis onlyworthwhile

if leads to an increasein

this number.In this case the expectedTypeII utility correspondsto the expectednumber

of problemsit can solve given

the resourcelimit. If weare givena finite number

of problems

to solve, an alternativerelationshipmightbe to solvethemin

as fewresourcesas possible. In this case expected"I~peII

wouldbe the expected resources (including learning resources) required to solve the fixed numberof problems.

Learning

actionsshouldbe chosenonlyif theywill reducethis

value.

Anothersituation arises in the caseof learninga classifier

wherethere is great expensein obtainingtraining examples.

Thepresumed

benefit of a learnedclassifier is that it enables

an agent to makeclassifications relevant to achievingits

goals.Obviously,

the moreaccuratethe classifier, the greater

the benefit. It is also generallythe casethat a moreaccurate

classifier will result byincreasingthe richnessof the hypothesis space- increasingthe number

of attributes in a decision

tree, increasingthe numberof hiddenunits in a neural network,or increasingthe number

of parametersin a statistical

modelingprocedure.Whilethis improvesthe potential benefit, addingflexibility will increasethe number

of examples

requiredto achievea stable classifier. ATypeII utility function

shouldrelate the expectedaccuracyto the cost of obtaining

training examples.Alearning systemshouldthen adjust the

flexibility (attributes, hiddenunits, etc.) in sucha waytomaximizethis TypeII function.

1.3 Adaptive Bias

In our modelan agent mustbe able to assess the costs and

benefitsof alternativelearningactionsas theyrelate to the current learningtask. Occasionally

it maybe possibteto provide

the learning agent with enoughinformationin advanceto

makesuchdeterminations

(e.g. uniformitiesare onesourceof

statistical knowledge

that allowsan agentto infer the benefits

of usinga givenbias [desJardins92]).Unfortunately,

suchinformationis frequentlyunavailable.Weintroducethe notion

of adaptivebias to enableflexible control of behaviorin the

presenceof limited informationon the cost and benefit of

learning actions. Withadaptivebias a learning agentestimatesthe factors influencescost andbenefit as the learning

processunfolds. Informationgained in the early stages of

learningis usedto adaptsubsequent

learningbehaviorto the

characteristicsof the givenlearningcontext.

TypeI funetiomcan be usedin realistic learningsituations

¢xdyaftar somehumanexpert has assessed the current resourceconstraints, andchosena learningapproachto reflect

the appropriatetradeoff. Thedecision-theoreticviewis that

the decision makingof the humanexpert can be modeledby

a TypeII utility function. Thus,to developa learningagent

wemustcapturethe relationshipbetween

benefit andcost that

webelieve the humanexpert is using. This, of course, will

vary withdifferentsituations. Wediscussa fewlikely situations andshowhowthese can be modeledwith TypeII functions.

3.

Speed-uplearningis generallycast as reducingthe average

amountof resources neededby an agent to solve problems.

Theprocessof learning also consumes

resourcesthat might

otherwisebe spent on problemssolving. ATypeII function

mustrelatethe expenseof this learninginvestment

andits poteatialreturn.If weare restrictedto a finite resourcelimi’t, a

Thenotionof a rational learningagent outlinedin Section

NOTAG

is quite general. Manyinteresting, and moremanageablelearning problemsarise fromimposingassumptions

on the general framework.

Weconsiderone specialization of

these ideas in somedetail in this section,whilethe following

sectionrelates severalalteraative approaches.

38

RATIONAL SPEED-UP

LEARNING

Oneofourprimary

interests is in area of speed-uplearningand

thereforewepresentan approachmimed

to this class of learning problems.Weadoptthe followingfive assumptions

to the

generalmodel.(1) Anagentis viewedas a problemsolverthat

mustsolve a sequenceof tasks providedby the environment

accordingto a fixed distribution. (2) Theagent possesses

learningactionsthat changethe averageresourcesrequiredto

solve problems.(3) Learningactions mustprecedeanyother

actions.Thisfollowsthe typicalcharacterization

of the speeduplearningparadigm:

there is an initial learningphasewhere

the learning systemconsumes

training examples

and produces

an improved

problemsolver;, this is followedby a utilization

phasewherethe problemsolver is used repeatedlyto solve

problems.Oncestopped,learningmaynot be returnedto. (4)

Theagentlearns by choosinga sequenceof learning actions

and each action must (probabilisticaUy) improveexpected

utility. Asa consequence,

the agentcannotchooseto makeits

behaviorworsein the hopethat it mayproducelarger subsequent improvements.

(5) Learningactions haveaccess to

unbounded

numberof training examples.For any given run

of the system,this number

will be finite becausethe agent

mustact underlimitedresources.Thisis reasonablefor speedup learning techniquesare unsupervisedlearning techniques

so the examples

do not needto be classified. Together,these

assumptions

define a sub-classof rational learningagentswe

call one.stagespeed-uplearningagents.

Giventhis class of learningagents,their behavioris determinedbythe choiceof a TypeII utility function.Wehavedevelopedalgorithmsfor two alternative characterizationsof

Typen utility that result fromdifferentviewsonhowto relate

benefit andcost. Thesecharacterizationsessentially amount

to TypeII utility schemas- theycombine

somearbitrary Type

I utility functionandan arbitrarycharacterization

of learning

cost into a particular TypeII function. Ourfirst approach,

RASL,

implementsa characterization whichleads the system

to maximizethe numberof tasks it can solve with a fixed

amountof resources. A second approach,RIBS,encodesa

different characterizationthat leads the systemto achievea

fixed level of benefit with a minimum

of resources.Bothapproaches

are conceptually

similar in their use of adaptivebias,

so wedescribe RASL

in somedetail to give a flavor of the

techniques.

the COMPOSER

system, RASLincorporates decision making procedures

that provideflexible controlof learningin responseto varyingresourceconstraints. RASL

acts as a learnhag agentthat, givena problemsolver anda fixed resource

constraint, balanceslearning and problemsolving with the

goalof maximizing

expectedTypeII utility. In this contextof

speed-uplearningresourcesare definedin termsof computational time, however

the statistical modelappliesto anynumericmeasureof resourceuse (e.g., space, monetary

cost).

1.4 Overview

RASL

is designedin the serviceof the followingTypeII goal:

Givena problemsolver and a known,fixed amountof resources, solve as manyproblemsas possible within the resources. RASL

can start solving problemsimmediately.Alternatively, the agent can devote someresources towards

learningin the hopethat this expenseis morethanmadeup by

improvements

to the problemsolver. TypeII utility correspondsto the number

of problemsthat can be solvedwith the

availableresources.Theagentshouldonly invokelearningif

it increases the expectednumberofproblems(ENP)that can

be solved. RASL

embodiesdecision makingcapabilities to

performthis evaluation.

Theexpectednumberof problemsis governedby the following relationship:

availableresourcesafter learning

ENP =

avg. resourcesto solvea problemafter learning

Thetop term reflects the resourcesavailable for problems

solving.If nolearningis perforlned,this is simplythe total

availableresources.Thebottomtermreflects the averagecost

to solve a problemwith the learnedproblemsolver. RASL

is

reflectivein that it guidesits behaviorbyexplicitlyestimating

ENP.To evaluate this expression, RASL

mustdevelopestimatersfor the resourcesthat will be spentonlearningandthe

performance

of the learned problemsolver.

Like COMPOSER,

RASLadopts a kill-climbing approachto

maximizing

utility. Thesystemincrementallylearns transformationsto an initial problem

solver. Ateachstep inthesearch,

a transformation

generatorproposesa set of possiblechanges

to the current problemsolver.2 Thesystemthen evaluates

these changeswith training examplesandchoosesonetransRASL

(for RAtionalSpeed-upLearner)builds uponour preexpectedutility. At eachstep in the

vious work on the COMPOSER

learning system. COMPOS- formationthat improves

search,RASL

has twooptions:(1) stop learningandstart solvERis a general statistical framework

for probabilistically

ing problems,or (2) investigate newtransformations,choosidentifyingtransformationsthat improveTypeI utility of a

ing onewith the largest expectedincreaseinENP.Inthis way,

problem solver [Gratch91, Gratch92]. COMPOSER

has

demonstrated

its effectivenessin artificial planningdomains

2. Theapproachprovidesa frameworkthat can incorpo[Grateh92] and in a real-world scheduling domain

rate varying mechanisms

to proposingchanges. Wehave

[Gratch93a].Thesystemwasnot, however,developedto supdevelopedinstantiations of the frameworkusing PRODIportrationallearningagents.It hasa fixedtradex~-ffor balancGY/EBL’s

proposedcontrol rules [Minton88],STATIC

ing learnedimprovements

withthe cost to attain themandproproposedcontrol rules [Etziom’91]

anda Hbraryof expert

proposedschedulingheuristics [Gratch93a].

videsnofeedback.U,sing the low-levellearningopecationsof

39

the behaviorof RASL

is driven by its ownestimate of how

learninginfluencesthe expectednumber

of problemsthat may

be solved. Thesystemonly continuesthe hill-climbingsearch

as longas the nextstep is expected

to improve

the TypeII utility. RASL

proceedsthrougha series of decisionswhereeach

action is chosento producethe greatest increase in ENP.

However,

as it is a hill-climbingsystem,the ENPexhibitedby

RASL

will be locally, andnot necessarilyglobally, maximal.

1.5 Algorithm Assumptions

n observationsof X.~’n is a goodestimatorfor EX.Variance

is a measure

of the dispersionor spreadof a distribution. We

use the samplevariance,S2" ffi 1n Zn(Xi - Z~)2asan estima/-1

tor for the varianceof a distribution.

x

Thefunction~)(x) ffi 1(1/f-~-)exp{-0.5y2}dy

is the cumula-aa

five distributionfunctionof the standardnormal(also called

standardgaussian)distribution,tlKx)is the probabilitythat

RASL

relies on several assumptions.Themostimportantaspoint

drawnrandomlyfroma standard normaldistribution

sumption

relates to its reflectiveabilities. Thecostof learning

will

be

less thanor equaltox. Thisfunctionplaysa important

actionsconsistsof three components:

the cost of deliberating

role in statistical estimationandinference.TheCentralLimit

on the effectivenessof learning,the cost of proposing

transTheorem

showsthat the difference betweenthe samplemean

formations,andthe cost of evaluatingproposedtransformaandtrue meanof an arbitrary distribution can be accurately

tions. When

RASL

decideson the utility of a learningaction,

representedby a standardnormaldistribution, given suffiit shouldaccountfor all of these potential costs. Ourimpleciently large sample.In practicewecan performaccuratestamentation,however,relies on an assumption

that the cost of

tistical inferenceusinga "normalapproximation."

evaluationdominatesother costs. This assumption

is reasonable in the domainsCOMPOSER

has been applied. Thepre1.6.1 COMPOSER’s

Statistical

Evaluation

lxmderant

learningcost lies in the statistical evaluationof

transformations,

throughthe large cost of processingeachexLet T be the set of hypodaesized

transformations.Let PEdeampleproblemm this involves invokinga problemsolver to

note

the

current

problem

solver.

WhenRASL

processes a

solve the exampleproblem.Thedecision makingprocedures

training

example

it

determines,

for

each

transformation,

the

that implementthe Type1/deliberations involveevaluating

change

in

resource

cost

that

the

transformation

would

have

relatively simple mathematicalfunctions. This assumption

providedif it wereincorporated

intoPE.Thisis the difference

has the consequencethat RASL

does not account for some

in cost between

solvingthe problemwith andwithoutincorpooverhead

in the cost of learning,andundertight resourceconrating

the

transformation

intoPE.This is denotedAr,(tlPE)

straints the systemmayperformpoorly. RASL

also embodies

the same statistical assumptions adopted by COMPOSER. for the/th training example.Trainm"g examplesareprocessed

incrementally.After eachexample

the systemevaluatesa staThestatistical routinesmustestimatethe meanof variousdististic called a stoppingrule. Theparticular rule weuse was

tribution. Whileno assumption

is madeaboutthe formof the

proposedby Arthur Nhdasand is called the N~idasstopping

distributions, wemakea standardassumption

that the sample

rule [Nadas69].This rule determineswhenenoughexamples

meanof these distributionsis normallydistributed.

havebeenprocessedto state with confidencethat a transformarionwill help or hurt the averageproblemsolve speedof

1.6 Estimating ENP

PE.

Thecoreof RASL’s

rational analysisis a statistical estimator

After processinga training example,RASL

evaluatesthe folfor the ENPthat results frompossiblelearningactions. This

lowing

rule

for

each

transformation:

sectiondescribesthe derivationof this estimator.Ata given

decision point, RASLmust choose between terminating

learningor investigatinga set of transformations

tothecurrent

n

t~ (1)

n > no AND

[j~_(tlPE)]2 < ~-, s.t. ~(a) ffi 2rll

problemsolver. RASL

uses COMPOSER’s

statistical procedureto identify beneficialtransformations.

Thus,the cost of

learningis basedonthe cost of this procedure.Afterreviewwherenois a smallfinite integerindicatingan initial sample

ing somestatistical notationwedescribethis statistical procesize, nis the number

of examples

takenso far, is the transfordure. Wethen developfromthis statistic a estimatorfor ENP.

mation’saverageimprovement,

is the observedvariance in

the transformation’simprovement,

and5 is a confidencepaLet Xbe a random

variable. Anobservationof a random

varirameter.

If

the

expression

holds,

the transformationwill

ablecanyield oneofasetof possible numericoutcomeswhere

speed-up

(slow

down)PEif

is

positive

(negative) with confithe likelihoodof eachoutcome

is determinedby an associated

probabilitydistribution. Xlis the RhobservationofX.EXdedence1--~. Thenumberof examplestaken at the point when

Equation1 holdsis called the stoppingtime associatedwith

notesthe expeoted

valueOf X,also called the meanof the distributioa. ~nis the s~aplemeanandrefers to the averageof

.t~ tr~msfom~ation

andis denotedST(tlPE).

4O

1.6.2

Estimating Stopping Times

Giventhat weare usingthe stoppingrule in Equation1, the

cost of learninga transformation

is the stoppingtimefor that

transformation,

timesthe averagecost to processan example.

Thus,oneelementof an estimatefor learningcost is an estimatefor stoppingtimes. In Equation1, the stoppingtimeassociatedwith

atransformation

is a functionof the variance,the

squareof the mean,and the samplesize n. Wecan estimate

thesefirst twoparameters

usinga smallinitial sample

of noexamples: and ~r,(tlPE) ,~ ~:,0(tlPE). Wecan estimate

stoppingtimeby usingtheseestimates,treating the inequality

in Equation1 as an equality, andsolvingfor n. Thestopping

timecannot

be less thanthe noinitial examples

so the resulting

estimatoris:

" {.r "-<,,o1)

ST,0(tlPE)

" max , a: [~:o(tlPE)}

,

whereis the averageimprovement

in TypeI utility that resuits if transformation

t is adopted,is the variancein this randornvariable,andO(a)ffi 8/(21TI).

1.6.3

vided by the transformation:r~o(PE

) - ~:~o(tlPE).Combining these estimatorsyields the followingestimatorfor the expectednumber

of problemsthat can be solvedafter learning

transformation

t:.

^

^

R-A~o(t,T, PE)× ST~o(tlPE

)

ENP~(R,

tlPe) ffi r,o(PE) _ 2~:o(ttpg

)

In the generalcase, the cost of processingthejth problem

dependson several factors. It can dependon the particulars of

the problem.In can also dependon the currentlytransformed

performanceelement, PEt. For example,manylearning approachesderiveutility statistics byexecuting(or simulating

the execution)of the performance

elementon eachproblem.

Finally, as potential transformations

mustbe reasonedabout,

learningcostcandepend

onthe size of the currentset of transformations,T.

Let kj(T, PE)denotethe learningcost associatedwiththe jth

problemunderthe transformationset T andthe performance

elementPE.Thetotal learningcost associatedwitha transformation,t, is the sumof the per problem

learningcostsoverthe

numberof examplesneededto apply the transformation:

ENP Estimator

Wecan nowdescribe howto computethis estimate for individualtransformations

basedca aainitial sampleof n~ exampies. Let R be the available resources. Theresources ex9endedtv~’~ting a transformation caa :be estimated by

41

(3)

Spaceprecludesa descriptionof howthis estimatoris usedbut

the RASL

algorithm is listed in the Appendix.Giventhe

availableresources,RASL

estimates,basedon a smallinitial

sample,ff a transformation

shouldbe learned.If not, is uses

the remainingresourcesto solve problems.If learningis expectedto help, RASL

statistically evaluatescompeting

transformations,choosingthe onewith the greatest increase in

ENP.It then determines,basedon a smallinitial sample,if a

newtransformationshouldbe learned, and so on.

4.

Learning Cost

Eachtransformation

can alter the expectedutility of a perrefinanceelement.Toaccuratelyevaluate potential changes

wemustallocate sonaeof the available resources towards

learning. Underour statistical formalizationof the problem,

learningcost is a functionof the stoppingtimefor a transformation,andthe cost of processingeachexampleproblem.

1.6.4

multiplyingthe averagecost to process an exampleby the

stoppingtime associatedwith that transformation:. Theaverageresourceuse of the learnedperformance

elementcan be

estimatedby combining

estimatesof the resourceuse of the

current performance

elementand the changein this use pro-

EMPIRICAL

EVALUATION

RASL’s

mathematicalframework

predicts that the systemcan

appropriatelycontrollearningundera variety of timepressures. Givenan initial problem

solver anda fixed amountof resources,the systemshouldallocate resourcesbetweenlearning and problem solving to solve as manyproblems as

possible. Thetheorythat underliesRASL

makesseveral predictions that wecanevaluateempirically.(1) Givenan arbiWarylevel of initial resources,RASL

shouldsolve a greater

than or equalto number

of problems

thanff weusedall available resourcesonproblemsolving3. Asweincreasethe available resources,RASL

should(2) spendmoreresourceslearning, (3) acquireproblemsolvers with loweraveragesolution

cost, (4) exhibit a greater increasein the number

of problems

that can be solved.

Weempiricallytest these claimsin the real-worlddomainof

spacecraftcommunication

scheduling.Thetask is to allocate

communication

requests betweenearth-orbitingsatellites and

the three 26-meterantennasat Goldstone,Canberra,and Madrid. These antennas makeup part of NASA’s

DeepSpace

Network.Schedulingis currently performedby a humanexpert, but the Jet PropulsionLaboratory(JPL)is developing

heuristicscheduling

techniquefor this task. In a previousarticle, wedescribe an application of COMPOSER

to improving this schedulerovera distributionof scheduling

problems.

3. RASL

pays an overheadof taking no initial examples

to makeits rational deliberations.If learningprovidesno

benefit, this can result in RASL

solvingless examples

than a non-learningagent.

COMPOSER

acquired search control heuristics that improvedthe averagetime to producea schedule[Gratch93a].

Weapply RASL

to this domainto evaluate our claims.

COMPOSER

exploreda large spaceof heuristic search strategies for the schedulerusingits statistical hill-climbingtechnique.In the courseof the experiments

weconstructeda large

databaseof statistics onhowthe schedulerperformed

with different search strategies over manyschedulingproblems.We

use this databaseto reducethe computational

cost of the current set of experiments.Normally,whenRASL

processes a

training example

it mustevokethe schedulerto performan expensivecomputation

to extract the necessarystatistics. For

these experimentswedrawproblemsrandomlyfromthe databaseandextract the statistics alreadyacquiredfromthe previous experiments.RASL

is "told" that the cost to acquire

these statistics is the sameas the cost to acquirethemin the

original COMPOSER

experiments.

Alearning trial consists of fixing an amountof resources,

evokingRASL,and then solving problemswith the learned

problemsolver until resources are exhausted.This is comparedwitha non-learning

approachthat simplyusesall available resourcesto solve problems.Learningtrials are repeated

multipletimesto achievestatistical significance.Thebehavior of RASL

underdifferent timepressuresis evaluatedbyvarying the amountof availableresourees.Resultsare averaged

overfifty learningtrials to showstatistical significance.We

measureseveral aspects of RASL’s

behaviorto evaluate our

claims. Theprinciple component

of behavioris the number

of

problems

that are solved. In additionwetrack the timespent

learning,the TypeI utility of the learnedscheduler,andthe

numberof hill-climbing steps adoptedby the algorithm.

RASL

has two parameters.Thestatistical error of the stopping rule is controlled by ~, whichweset at 5%.RASL

requiresan initial sampleof size no to estimateENP.Forthese

experimentswechose a value of thirty. RASL

is compared

againsta non-learning

agentthat usesthe originalexpertcontrol strategy to solve problems.Thenon-learningagent devotesall availableresourcesto problemsolving.

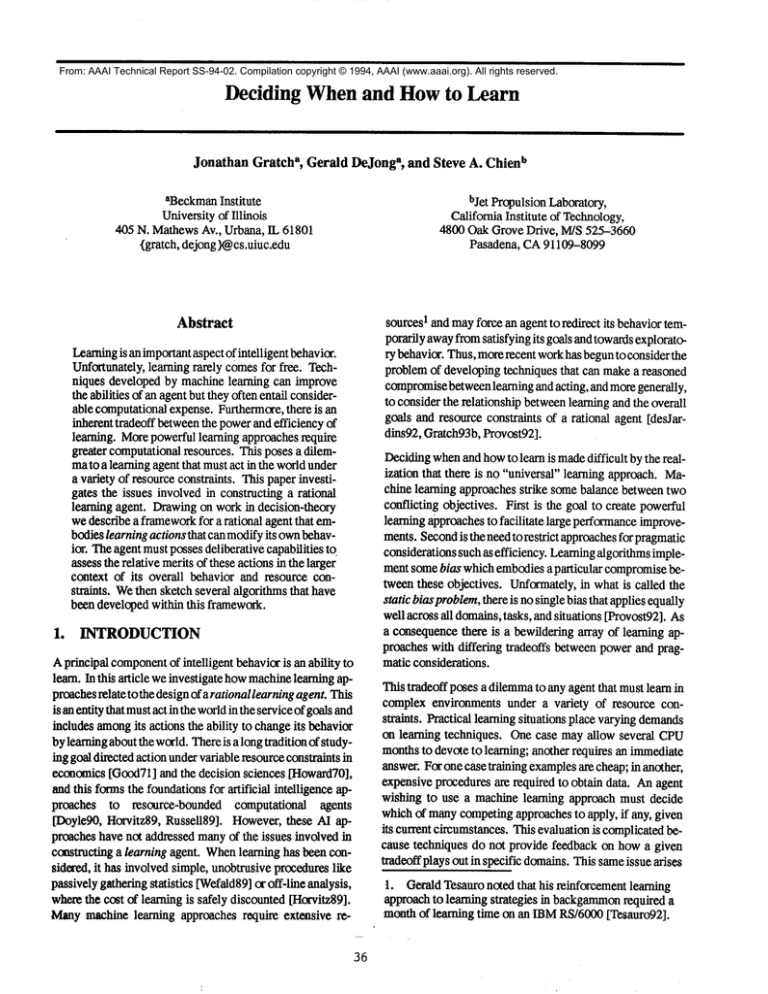

Theresults are summarized

in Figure2. RASL

yields an increasein the number

of problems

that are solvedcompared

to a

n0n-lenmingagent, and the improvement

accelerates as the

resources are increased. As the resources are increased,

RASL

spendsmoretime in the learning phase, taking more

steps in the hill-climbingsearch, andacquiringschedulers

with better averageproblemsolving performance.

experiments [Gratch93a]. In this domain, COMPOSER

learnedstrategies that take aboutthirty secondsto solve a

schedulingproblem.This suggeststhat wemustconsiderably

increasethe availableresourcesbeforethe cost to attain this

better strategy becomesworthwhile.Weare somewhat

surprisedbythe strict linear relationshipbetween

the available

resourcesandthe learningtime. At somepoint learningtime

shouldlevel off as learning producesdiminishingreturns.

However,as suggestedby the difference in the schedulers

learned by COMPOSER

and RASL,wecan greatly increase

the availableresourcesbeforeRASL

reachesthis limit.

It is an openissuehowbest to set the initial sampleparameter,

no. Thesize of the initial sampleinfluencesthe accuracyof the

estimateOf ENP,whichin turn influencesthe behaviorof the

system. Poorestimates can degradeRASL’s

decision making

capabilities. Making

no verylarge will increasethe accuracy

of the estimates,but increasesthe overheadof the technique.

It appearsthat the best settingfor nodepends

oncharacteristics

of the data, suchas the varianceof the distribution.Furtherexperienceonreal domains

is neededto assessthe impactof this

sensitivity. Thereare waysto addressthe issue in a principled

way(e.g. usingcross-validationto evaluatedifferentsetting)

but these wouldincreasethe overheadof the technique.

5.

RELATED WORK

RASL

illustrates just one of manypossible waysto reason

aboutthe relationshipbetween

the cost andbenefit of learning

actions. Alternativesaeise whenweconsiderdifferent definitions for TypeII utility or whenthere exists prior knowledge

aboutthe learningactions.

In [Chien94]wedescribethe RIBSalgorithm(RationalInterval-BasedSelection)for a different relationshipbetween

cost

andbenefit. In this situationthe TypeII utility is definedin

termsof the ratio of TypeI utility andcost. Weintroducea

generalstatistical approach

for choosing,withsomefixed level of confidence,the best of a set of k hypotheses,wherethe

hypothesesare characterizedin termsof someTypeI utility

function.Theapproachattemptsto identify the best hypothesis by investigatingalternativesovervariablypricedtraining

examples,wherethere is differing cost in evaluatingeachhypothesis.This problem

arises, forexample,

in learningheuristics to improvethe quality of schedulesproduced

by an automatedschedulingsystem.

Thisissue of rational learninghas frequentlybeencast as a

problem

of bias selection [desJardins92,Provost92].Alearning

algorithm

is providedwitha set of biasesandsomemetaTheimprovement

in ENPis statistically significant. The

graphof problemssolvedshows95%confidenceintervals on

proceduremustchooseonebias that appropriatelyaddresses

the performance

of RASL

over the learning trials. Intervals

the pragmaticfactors. Provost[Provost92]showsthat under

are not showfor the non-learningsystemas the variancewas

very weakprior informationabout bias space, a technique

negligibleoverthe fifty learningtrials. Looking

at TypeI utilcalled iterative weakening

canachievea fixed level of benefit

ity, the searchstrategies learnedby RASL

wereconsiderably with near minimum

of cost. Theapproachrequires ~at biases

worsethan the average strategy learned in our COMI~SER :be orderedin terms of ~creasmg

cost andexi~essiv~ess.

42

10000.

8000-

Agent with RASL

.,+~

o

Agent with RASL

.~40-

000-

30-

4000-

~20-

~’~- Non-learning

20000

0

20

40

60

80

20’

100

Available Resources(hours)

~)

60

’

’ 80 100

Available Resources(hours)

.

.

1-

I°

0.

0

0

20

40

60

80

100

0

Available

Resources

(hours)

20....

40

60

80

100

Available Resources (hours)

Figure 2: RSLresults. Results are averagedover thirty trials.

desJardins [desJardins92] introduces a class of prior knowledge that can be exploited for bias selection. She introduces

the statistical knowledgecalled uniformities from whichone

can deducethe predictive accuracythat will result fromlearning a concept with a given inductive bias. By combiningthis

with a simple model of inductive cost, here PAGODA

algorithm can choosea bias appropriateto the givenlearning situation.

es. Thechallengeis to developwaysof estimating the cost and

benefit of this type of computation.In classification learning,

for another example,a rational classification algorithmmust

developestimates on the cost and the resulting benefit of improvedaccuracy that result from growinga decision tree.

This work focused on a one learning tradeoff but there are

moreissues we can consider. Wefocused on the computational cost to process training examples. Weassume an unRASL

focuseson one type of learning cost that wasfairly easy

boundedsupply of training examplesto draw upon, however

to quantify: the cost of statistical inference. It remainstoapunderrealistic situations weoften havelimited data and it can

be expensiveto obtain more. Our statistical evaluation techply these conceptsto other learning approaches.For example,

STATICis a speed-up learning approach that generates

niquestry to limit beth the probability of adoptinga transforknowledgethrough an extensive analysis of a problemsolvmationwith negativeutility and the probability of rejecting a

transformation with positive utility (the difference between

er’s domaintheory [Etzioni91 ]. Wemight imaginea similar

rational

control

forSTATIC

where

thesystem

performs

differ- type I and type II error). Undersomecircumstance,one factor

entlevels

ofanalysis

whenfaced

withdifferent

timepressatr- maybe more important than the other. These issues must be

43

faced by any rational agent that includes learning as one of its

courses of action. Unfortunately, learning techniques embody

arbitrary and often unarticulated commitmentsto balancing

these characteristics. This research takes just one of many

needed steps towards makinglearning techniques amenableto

rational learning agents.

6.

CONCLUSIONS

Traditionally, machinelearning techniques have focused on

improvingthe utility of a performanceelement without much

regard to the cost of learning, except, perhaps, requiring that

algorithms be polynomial.While polynomialrun time is necessary, it says nothing about howto chooseamongsta variety

of polynomialtechniques whenunder a variety of time pressures. Yet different polynomialtechniquescan result in vast

practical differences. Mostlearning techniquesare inflexible

in the face of different learning situations as they embody

a

fixed tradeoff between the expressiveness of the technique

and its computational efficiency. Even whentechniques are

flexible, a rational agent is faced with a complexand often unknownrelationship betweenthe configumbleparameters of a

techniqueand its learning behavior. Wehavepresented an initial foray into rational learning agents. Wepresented the

RASLalgorithm, and extension of the COMPOSER

system,

that dynamicallybalances the costs and benefits of learning.

Acknowledgements

Portions of this work were performedat the BeckmanInstitute, Universityof Illinois underNational ScienceFoundation

Grant 1RI-92--09394,and at the Jet Propulsion Laboratory,

California Institute of Technology

undercontract with the National Aeronautics and Space Administration.

References

[AI&STAT93]AI&STAT,Proceedings of the Fourth International Workshopon Artificial Intelligence andStatistics, Ft. Lauderdale,January1993.

[Berger80]

J.O. Berger, Statistical Decision Theory

and Bayesian Analysis, Springer Verlag, 1980.

[Brand91]

M. Brand, "Decision-Theoretic Learning

in an Action System,"Proceedingsof the Eighth International Workshopon MachineLearning, Evanston, IL,

June 1991, pp. 283-287.

[Chien94]

S.A. Chien, J. M. Gratch and M. C. Burl,

"Onthe Efficient Allocation of Resourcesfor Hypothesis

Evaluation in Machine Learning: A Statistical Approach,"submittedto Institute ofElectrical andElectronics Engineers Transactions on Pattern Analysis and Machine Intelligence, 1994.

[desJardins92] M.E. desJardins, "PAGODA:

A Model for

Autonomous Learning in Probabilistic Domains,"Ph.D.

The~is, ComputerScience Division, University of California, Berkeley, CA,April 1992. (Also appears as UCB/

ComputerScience Department 92/678)

44

[Doyle90]

J. Doyle, "Rationality and its Roles in Reasoning (extended version)," Proceedingsof the National

Conferenceon Artificial lntelligence,Boston, MA,1990,

pp. 1093-1100.

[Etzioni91]

O. Etzioni, "STATICa Problem--Space

Compiler for PRODIGY,"

Proceedings of the National

Conferenceon Arttficial Intelligence, Anaheim,CA,July

1991, pp. 533-540.

[Good71]

I.J. Good, "The Probabilistic Explication

of Information, Evidence, Surprise, Causality, Explanation, and Utility,"in Foundations

of Statistical Inference,

V. P. Godambe,D. A. Sprott (ed.), Hold, Rienhart and

Winston, Toronto, 1971, pp. 108-127.

[Gratch91]

J. Gratch and G. DeJong, "A Hybrid Approach to GuaranteedEffective Control Strategies," Proceedings of the Eighth International Workshopon Machine Learning, Evanston, IL, June 1991.

[Gratch92]

J. Gratch and G. DeJong, "COMPOSER:

A

Probabilistic Solution to the Utility Problemin Speed-up

Learning," Proceedings of the National Conference on

Artificial Intelligence, San Jose, CA,July 1992, pp.

235-240.

[Gratch93a]

J. Gratch, S. Chien and G. DeJong, "Learning Search Control Knowledgefor Deep Space Network

Scheduling," Proceedings of the Ninth International

Conference on Machine Learning, Amherst, MA,June

1993.

[Gratch93b]

J. Gratch and G. DeJong, Rational Learning: Finding a Balance BetweenUtility and Efficiency,,

January 1993.

[Greiner92]

R. Greiner and I. Jurisica, "A Statistical

Approachto Solving the EBLUtility Problem," Proceedings ofthe National ConferenceonArtificial lntelligence,

San Jose, CA, July 1992, pp. 241-248.

[Horvitz89]

E.J. Horvitz, G. F. Cooperand D. E. Heckerman, "Reflection and Action UnderScarce Resources:

Theoretical Principles and Empirical Study," Proceedings of the Eleventh International Joint Conferenceon

Artificial Intelligence, Detroit, MI, August1989, pp.

1121-1127.

[Howard70]

R.A. Howard, "Decision Analysis: Perspectives on Inference, Decision, and Experimentation,"

Proceedingsof the Institute of Electrical andElectronics

Engineers 58, 5 (1970), pp. 823-834.

[Minton88]

S. Minton,in Learning Search Control

Knowledge: An Explanation-Based Approach, Kluwer

AcademicPublishers, Norwell, MA,1988.

[Nadas69]

A. Nadas, "An extension of a theorem of

Chowand Robbinson sequential confidence intervals for

the mean," The Annals of MathematicalStatistics 40, 2

(1969), pp. 667-671.

[provost92] F.J. Provost, "Policies for the Selection of

Bias in Inductive Machine Learning," Ph.D. Thesis,

ComputerScience Department,University of Pittsburgh,

Pittsburgh, 1992. (Also appears as Report 92-34)

[Russell89]

S. Russell and E. Wcfald, ,Principles of

Metareasoning,"Proceedingsof the First Internationa~

Conference on Principles of KnowledgeRepresentation

andReasoning,Toronto, Ontario, Canada, May1989, pp.

400-411.

[Simon76]

H.A. Simon, "From Substantive to Procedural Rationality,"in Methodand Appraisal in Economics, S. J. Latsis (ed.), Cambridge

UniversityPress, 1976,

pp. 129-148.

[Subramanian92]D. Subramanianand S. Hunter, "Measuring

Utility and the Design of Provably Good EBLAlgorithms," Proceedingsof the Ninth International Conference on Machine Learning, Aberdeen, Scotland, July

1992, pp. 426-435.

[Tesauro92]

G. Tesauro, "Temporal Difference Learning of Backgammon

Strategy," Proceedingsof the Ninth

International Conference on MachineLearning, Aberdeen, Scotland, July 1992, pp. 451-457.

[Wefald89]

E.H. Wefald and S. J. Russell, "Adaptive

Learning of Decision-Theoretic Search Control Knowledge," Proceedingsof the Sixth International Workshop

on Machine Learning, Ithaca, NY, June 1989, pp.

408-411.

Appendix

Function RASL(PEo, R0, 8)

[1] i :- 0; Learn:- True; T :-- Transforms(PEi);8" := 8/(21TI);

[2] WHILE

T ~ O ANDLearning-worthwhile (T, PEi)

[3] ( continue :- True; j := no; good-rules :--- ~;

[4] WHILET ~ O ANDcontinue DO

[5] ( j :-j + 1; Get Xj(T, PEi); Vt~T: GetA~)(tIPE);

significant :- N6das-stopping-rule(T, PEi, 8", j);

[6]

T :-- T - significant;

[7]

[8]

[9]

WHEN

3t e significant :

~(tlPEi) > 0

/* should learning continue? */

/* find a goodtransformation */

/* beneficial

transformation

found */

( good-rules:.~ good-rulesI..J [t ~ significant2~TA/tPE,)

> 0]

[10]

WHEN

maxIegP,<R,,t,Pe,)]

IE~IPj(R,,tIPEI)}

/*nobettertransformation*/

tETI.

J maxt~sisn/~ca,

ut

[11]

best := t ~ significant : Vw~ significant, ~7~(tteE3 > ~(wlPE3

[12]

PEi+I:-- Apply(best, PEi); T "= Transforms(PEi+l);

8* "= ~/(21TI); /*adopt it

[13]

Ri+l := Ri -j × ~j(T, PEi); i := i + 1; continue "= False; }

[14] Solve-problems-with-remaining-resources (PEi

Function Learning-worthwhile (T, PE)

[1] FORj-1 TOno DO

[2] Get *j(T, PE); VteT: Get rj(PE), Arj(t[PE);

[3] ReturnmaxlEl~e~o(R.tlPE,)

} <R-----2----t

t~rt t

r~o(PEj)

FunctionNddas-stopping-rule(T, PE, 8", j)

[11 Return t E T: (~7/tlpE)) 2 a2

-®

Figure 3: Rational COMPOSER

Algorithm

45