The Evolution and Evaluation of an

Internet Search Tool for Information Analysts

Elizabeth T. Whitaker and Robert L. Simpson, Jr.

Georgia Tech Research Institute and Applied Systems Intelligence, Inc.

Atlanta, GA. 30332 and Roswell, GA. 30076

Betty.Whitaker@gtri.gatech.edu

BSimpson@asinc.com

web (Whitaker and Simpson, 2004). A number of search

strategies have been devised to illustrate the assistance to

the analyst in the performance of knowledge discovery

activities. A software prototype that applies case-based

reasoning, combined with other reasoning techniques, has

been developed for use by information analysts to help

discover novel information from documents on the Internet.

The search strategies have been developed based on

feedback from analysts and information gleaned from

literature and has been stored as cases in a case library.

The cases, in combination with the prototype software, are

being used to illustrate and investigate improvements in the

knowledge discovered and the time required for analysis.

Abstract

We are working on a project aimed at building next

generation analyst support tools that focus analysts’

attention on the most critical and novel information found

within the data,, thus helping analysts deal with the

information overload problem. .This paper discusses the

Case-based Reasoning for Knowledge Discovery (CBR for

KD) which is designed to support the Internet-based search

and information gathering activities of information analysts.

An information analyst gathers, organizes and analyzes

information and based on that analysis, makes predictions

that can be used for decision making. Because of the huge

volumes of data that information analysts must search,

effective information gathering on the web is a complex

activity requiring planning, text processing, and

interpretation of extracted data to find information relevant

to a major analysis task or subtask (Etzioni and Weld,

1994), (Knoblock, 1995), (Lesser, 1998) and (Nodine,

Fowler et al., 2000). We have identified knowledge

discovery plan categories that correspond to different

contextual domains which provide analysts with indications

of activities of potential interest, opportunity or threat.

Using a case-based reasoning engine, a plan is selected from

one of these categories and it is adapted to the current

knowledge discovery problem. The resulting search plan is

executed, relevant information is extracted from

unstructured documents, and the extracted information is

used to make further inferences and launch additional

searches. This paper discusses the evolution and evaluation

of the system presented in the FLAIRS 2004 Paper “CaseBased Reasoning in Support of Intelligence Analysis.”

The Users and Their Tasks

The envisioned users are information analysts who are

given an assignment to research a topic and produce a

finished information product. Information analysts may

work for business or government. Examples of areas in

which they provide analysis are business intelligence,

technology tracking, financial information, crime analysis,

counter-terrorist information or military defense. The

analytic process includes searching, reading, organizing,

integrating and drawing inferences from many sources of

information, both proprietary and open. The users are

typically very methodical, and analysts attempt to be

thorough, but occasionally time constraints prevent them

from doing as much research as they would like (Patterson,

Roth and Woods, 1999). They may receive a task outside

of their areas of expertise (Heuer, 2001), (Krizen, 1999),

(Bodnar, 2003). The tasking can be either long term or

short term, that is, the analyst may be given a few hours or

a few days to provide the finished analysis, or may be

monitoring a situation over a long sustained period.

Background

We previously introduced our project called Case-Based

Reasoning for Knowledge Discovery (CBR for KD) in

which the Georgia Tech Research Institute team is

investigating information analysts’ strategies for

discovering new knowledge in support of a variety of

assignments called “taskings,” and analyzing the data

collected as they conduct searches for information on the

The variety of approaches to information analysis derives

from differences in the analysts’ domains of expertise, the

agency or company they work for, the sources of

Copyright © 2007, American Association for Artificial Intelligence

(www.aaai.org). All rights reserved.

435

•

information they can access, their experience and the stage

of analytic process on which they focus. The basic analytic

process is as follows:

• Understand the customer’s need

• Decompose the task into component questions

• For each question, gather relevant information

• Analyze available information to form hypotheses and

test for conclusions

• Produce the finished analytic product

The focus of our project is the third step in the analytical

process: gather relevant information.

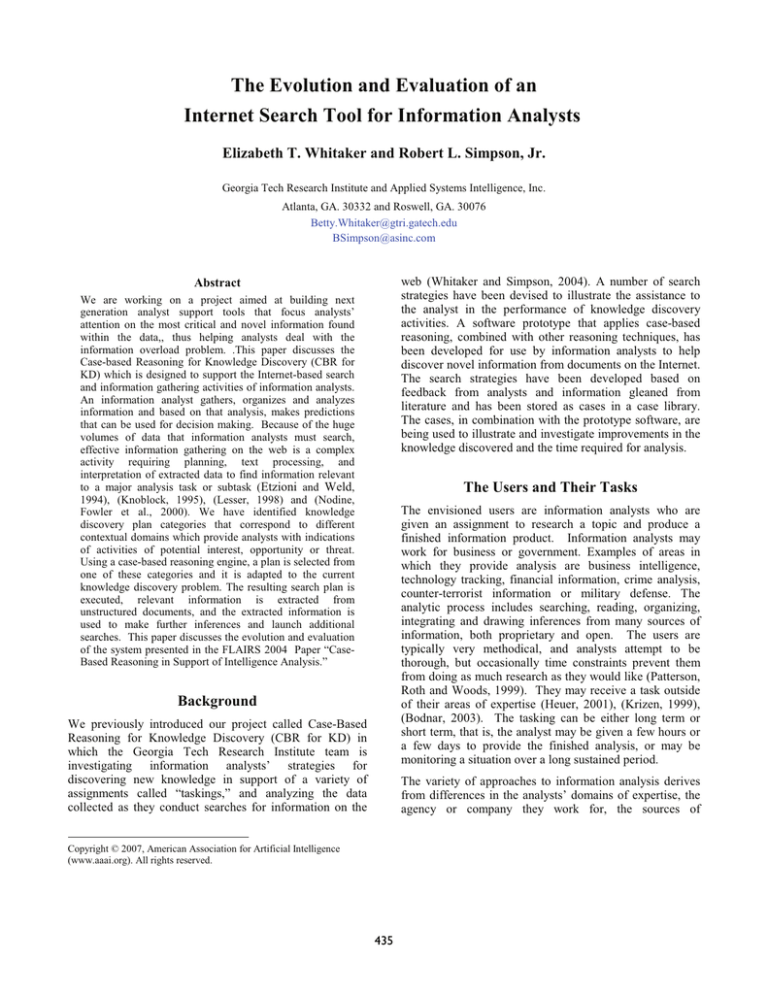

Figure 1 is a simplified illustration of our approach of

capturing the analyst’s implicit search strategies in the form

of explicit knowledge discovery plans which are used via

our software to accelerate and improve derived knowledge

needed to support an improved overall analytic process.

An Analyst’s Knowledge Discovery Problems

Examples of knowledge discovery problems that an analyst

might use to fulfill some of the knowledge requirements of

a tasking include the following which will act as goals in

the context of case-based planning:

• Find researchers and market needs for cutting edge

knowledge discovery research

• Find events connected to people who are

mathematicians from Country X

• Describe (or explain) the computer capabilities of the

Offshore Gambling Casinos

• Find clusters of related neural network experts

• Discover organizations with illegal gambling activity

• Discover bioterrorist experts who might be associated

with a given set of organizations

• Who are the leaders of the Terrorist Organization Y

Research Approach

Our approach is to represent analytic strategies as domain

specific search plans such that a future analyst support

system could reuse a successful analytic strategy on

massive data by interacting with the analyst. Significant

portions of the search and analytic strategy can be

automated, but we have come to understand the importance

of allowing interaction between the analysts and the

automated process. Analysts want to understand the search

strategies as well as the results and to be able to interact

with and tailor these strategies based on their experience

and background knowledge. We have added the ability for

the analyst to add or delete terms to the search plans to

enhance the chosen plan.

Implicit

Search Strategies

Case: Goal: Find clusters of restaurant owners in city X,

who are associated with suspect organizations

KD Plan:

1. Extract names of people with the particular

characteristic “restaurant owner,” “City X”

2. Find organizations that each person is associated with

3. Compare these organizations against suspect

organizations and store resulting organizations in a

database used to accumulate intermediate values and

results that the analyst may wish to reference later

4. Find links between people (the above selected

restaurant owners in City X) through organizations,

with the result being links between people with a

particular characteristic (restaurant owners in City X)

who are associated with suspect organizations

Present

Transform

Tasking

Analyst

A set of prior cases will help the system in managing the

computationally expensive task of search planning. For

example, a notional case would contain the following:

Discovered

Knowledge

Analyst

Case-Based

Reasoning

For Knowledge

Discovery

Knowledge

Discovery

Plans

Determining what assumptions drive the analysts’

search and making those explicit in the search plans

Discovered

Knowledge

Future

Figure 1: CBR for KD Research Approach

Capabilities we have explored include:

• Identifying and capturing the often implicit search

plans (analytic strategies) used by successful

information analysts

• Providing an infrastructure for reusing search plans

among a community of analysts for the purpose of

enabling collaborative investigation of hypotheses and

respective assemblies of supporting evidence, which

ultimately constitute the discovery of new knowledge;

• Determining the types of queries analysts issue to a

particular source and which sources they query for a

particular type of problem so that the queries may be

made explicit in a case’s search plan;

Steps 1 through 4 are the sequence of actions used to solve

the knowledge discovery problem. This same case could

be instantiated differently and reused by the analyst to

solve the following related problems: “find clusters of

microbiology experts,” “find clusters of explosives

experts,” and “find clusters of drug dealers.”

The CBR for KD project is based on the assumption that

analysts have many assignments that cause them to search

for and make inferences about information, reusing

techniques that have worked for them before. Often this

436

documents are unstructured and are not marked-up. In

natural language there are many different words to express

related concepts, and in some situations we are searching

for terms which include two or more concepts, e.g.

biological chemistry expert. We must not only be able to

recognize terms related to biological chemistry and terms

related to expert, but in order to increase the recall, we

must be able to deal with a variety of grammatical

constructs and alternative phrases:

• biochem expert

• Bio-chemistry expert

• Authority on bio-chem

• Former director of biological chemistry laboratory

• Researcher in biological chemistry

• Bio-chem specialist

• Expert in the production of bio-chem products

• Professor of bio-chemistry

involves extracting pieces of information from many open

source websites, sorting them, organizing and linking them

in ways that are very time consuming. Tools to automate a

significant subset of that work, will allow analysts to focus

on the difficult aspects of their assignments, allowing them

to examine more sources, discover more pieces of evidence

and find associations among those pieces of evidence.

Case-Based Planning

Our technical approach leverages research in cased-based

planning. Case-based planning (also CBR Planning)

(Hammond, 1989) is the reuse of past plans to solve new

planning problems.

The system retrieves previously

generated solutions from a case library and adapts or

repairs one of them (the closest match) to suit the current

problem. A plan in this context consists of

• A goal: a knowledge discovery problem that the

information analyst wishes to solve,

• An initial state: in analysts’ knowledge discovery plans

the initial state is described by a set of pieces of

information including the analyst’s task goal and task

decomposition, as well as explicit assumptions such as

background about the world context, e.g., history,

recent events and geographic conditions

• A sequence of actions that when executed starting in the

initial state results in a goal state

For concepts that analysts use routinely in searches,

building elaboration files, that is, files that contain many

phrases related to the concept(s) that an analyst is searching

for, will magnify the utility of the knowledge discovery

plans. The elaboration files contain knowledge that can be

used to adapt plans to different domains according to

analysts’ specializations. This allows the construction of

knowledge discovery plans that when executed spawn

searches for many alternative wordings, saving analysts

from this tedious set of activities. A simple analysis,

yielding an elaboration file which contains only a handful

of terms, makes for much more effective searches.

The goal is to have a set of pieces of information with their

appropriate connections and inferences to address the

knowledge discovery problem. Each action has a set of

preconditions which, in information space, consist of the

state of having the information necessary to perform the

next step. Each action also has a set of post-conditions

consisting of the knowledge and the representation of

partial solutions that exist after the step is performed.

Many case-based reasoning systems retrieve and adapt

problem solutions given as advice or for execution by a

human. The CBR for KD work has the added complexity of

providing and adapting solutions that must be automatically

executed by the software. The approach is to represent

knowledge discovery plans as scripts and to map them to

the modules being used to execute the individual steps of

knowledge discovery plans. This will also allow module

reuse for better support of plan actions.

Plan actions are chosen from the following set:

Create String

Search

Extract

Extract Named Entities

Filter

Create Table

Write Elaboration

Write Table

Display Results

Our analysis has led to the following set of components for

building a knowledge discovery plan:

• Actions allowed by the knowledge discovery plan and

executable by the system

• Preconditions and sequences to define the allowable

execution sequences of plan actions

• Parameters for the adaptation of plans to plans that

address the current problem

• Elaboration files for mapping search concepts to a set

of specific search engine query terms

• Similarity metric for retrieving the appropriate cases

• Parser file for recognition of word constructions in

information extraction from documents

There are over 50 cases in our current case library. A case

consists of a Knowledge Discovery Plan with its associated

features, i.e., a set of attributes that describe and

characterize the plan, a plan for solving the knowledge

discovery problem, and a description of the expected

results of executing the plan.

One of the actions that our plans include is an elaboration

action. Many of the knowledge discovery plans stored in

the case library and reused in support of a new problem,

include plan steps which use search engines to search the

Internet for particular topics. They also include steps

which scan the documents retrieved for particular terms.

One of the things that make this difficult is that the

Based on the preconditions for a given action, there are

constraints on the order in which the plan actions can be

executed. Each plan action has a set of parameters which

will include the conceptual object to be acted upon and

potentially an object to be produced or transformed by the

437

action. Elaboration files are necessary to map from a

domain concept being sought to all of the terms and

phrases that can be used to express it in retrieved

documents. This technique makes our searches more

powerful and allows the elaborations to be customized by

the user to the types of wordings that are most likely to be

fruitful. The similarity metric is used to describe the most

important characteristics of a case and to help retrieve the

most relevant cases to the knowledge discovery problem

being solved by a user. Parser files are used by the system

to extract potentially relevant information. They include

wording permutations and patterns that can be recognized

by the Extraction modules.

The following features of the knowledge discovery cases

were chosen for their utility in defining the similarities to a

current knowledge discovery problem for the purpose of

retrieving a case to adapt and reuse:

1)_Structure: One of the primary attributes of knowledge

discovery problems is the “structure” of the information

that the analyst is looking for. Because the information

being searched for by the information analysts goes beyond

looking for simple facts or processes, the structure can be

very complex, having primary influence on the

characteristics of the search plan. Examples of structures

commonly used in a knowledge discovery plan are:

Associations or relationships:

One technique that

analysts and other information workers use when looking

for relationships between two entities, is to look for

common relationships to a third entity

Clusters: A more complex kind of association is a cluster

of related entities such as:

People (e.g., counterfeiting experts or gang members)

Organizations (suspect businesses and organizations that

do business with criminal organizations)

Events (e.g., bombings, attacks, or criminal events)

Time Sequences: When tracking an event such as a

criminal event or trying to identify a potential criminal

event, there are sequences of subevents or activities that

take place as part of training and preparation. We have

created search plans to search Internet web pages and

documents, extract information and create a representation

that allows the analyst to see the time sequence of events.

Many knowledge workers, such as historians or

epidemiologists, search for information and relate it to a

time sequence in order to explain, prevent, influence or

predict future events.

Spatial Associations: An important structure that analysts

use is a representation relating the movements of entities

through space and time. In trying to predict or influence

terrorist events, analysts might look for components that

can be tied together in a space-time representation.

Sample KD Plan: “Find Clusters of Biochemical

Experts”

1. CreateString (Parameters) Creates search string on

biochemical experts using elaboration file

2. Search (Parameters) Uses search string for web

searches

3. Extract (Parameters) From URLs found, extracts

phrases with the requested content

4. ExtractNamedEntities (Parameters) Marks up or tags

names, organizations, dates, etc

5. CreateString (Parameters) Names found from extract

are put in a string

6. WriteElab (Parameters) Elaborates organization words

7. Search (Parameters) Searches data sources for names

found above

8. CreateTable (Parameters) Creates a database table to

store information found

9. Extract (Parameters) For each URL found, extracts

phrases with name and organization

10. ExtractNamedEntities (Parameters) From the above

phrases, extracts organization names

11. WriteTable (Parameters) Information is written to a

database table

12. Display (Parameters) Displayed for the user

2) Type of knowledge: Information analysts have taskings

that require searches or knowledge discovery techniques

that are specialized to the types of knowledge that they are

looking for. Some examples of types of knowledge that

analysts find useful are:

• capabilities, such as software development processes etc.

• expertise and knowledge

• beliefs and intents

• communication patterns

• financial transactions

• organizational e.g., people filling leadership positions

Knowledge Discovery Cases

Since we want to be able to retrieve and adapt the most

useful plan for solving the analyst’s current problem the

important features of the knowledge discovery plan must be

identified, and stored as a feature vector. The feature

choices are driven by the goal of the knowledge discovery

plan and the characteristics that distinguish one case from

another.

Through requirements analysis, knowledge acquisition,

experimentation with knowledge discovery, and analysis of

published analysts’ processes, we have identified and

classified some of the types of information that information

analysts look for and attached some initial attributes that

have proven useful in case retrieval. There are eight types

of knowledge in our feature set which is used to index the

cases. This will continue to evolve as we learn more about

the knowledge discovery problems needed by different

information analysts.

3) Focus of Information: The Focus of Information is the

specific information domain of the knowledge search being

performed. Examples are “neural network,” “weapons of

mass destruction,” “terrorism,” and “gambling.” The Focus

of Information is included as one of the attributes used to

index into the case library to identify the most similar case.

There may be sources, search approaches, and inferences

that analysts reuse and share in a particular domain.

438

Reid, 2002 ) was administered as an on-line questionnaire.

The metrics collected for each scenario analysis were:

Timing information: overall time, time in applications and

time in CBR for KD windows

System performance: time to formulate case selection

criteria, # cases run, # cases abandoned, time to process

cases, time to process each search of a multi-search case,

number of nodes in results tree, including instances where

the set is empty , number of leaves (snippets) in the results

tree and number of documents represented in results set

Document measures: # relevant documents, percentage of

viewed documents that were considered relevant, fraction

of documents that were represented in final report and #

keystrokes in report vs. # cut/paste events.

Other measures: Cognitive Workload; Product ratings and

rankings;

Questionnaire

ratings

and

comments;

Observation notes and Scenario complexity rating.

4) Geographic Area: Analysts often search for particular

types of information related to a particular geographic area.

There are specialized websites, search and inference

techniques related to specific geographic areas that are

represented in a case. Searching for information about a

geographic area may require references to distances,

cultures, geography and climate, for example.

For case retrieval we are experimenting with similarity

metrics so that the retrieved cases are those that most

closely match the target case. One approach is to supply

different weightings on the above features. Our analysis of

the current system suggests that the highest weight is for

“Structure” and the lowest weights are the “Focus of

Information” and “Geographic Area.” Our adaptation

process does not combine actions from multiple plans to

create a unique, domain-specific plan. Instead the nearest

plan is adapted by search-and-replace of the corresponding

terms from the elaboration files. Because it is easy for us to

adapt a case by changing its Focus of Information or

Geographic area by substituting the concepts and related

elaboration files in the plan script, it is unnecessary to

match those features closely. The Structure has much more

influence on the steps of the knowledge discovery plan,

making it harder to adapt a plan to a different structure. As

we continue to grow the case library and increase the

richness of the case representation, we expect our similarity

metric to require more sophisticated matching. For

example, in the current implementation, features either

match completely or not at all (1 or 0), but over time we

may find it useful to define partial matches.

Some of the summary results of the study were:

• CBR for KD was easy to learn to use. Subjects

exhibited proficiency after training and also indicated

this on post-scenario questionnaires.

• CBR for KD was used for an average of about 20

minutes during the 2-hour session; this came at the

expense of both using Word and using the IE browser.

Most of the time, subjects used the Case Selector panel

to express their information need and to select a

matching case and the Results panel to browse the

information returned by the system.

• Seven of the twenty cases in the Case Library (at the

time of this experiment) matched the needs of the

analysts.

• CBR for KD use was accompanied by a decreased

temporal pressure compared to Google use. This

finding is based on the TLX data and questionnaire

responses. When subjects were debriefed, most

subjects indicated that CBR for KD saved them time.

• Although there were no appreciable differences in the

quality of the reports that subjects wrote when they

used CBR for KD, most subjects indicated in their

debrief that they believed that they wrote better reports

with the tool than without it.

• The information that CBR for KD returned to the

subjects was perceived to be of better quality. One

subject cautioned that filtering data can be a bad thing

since it tends to weed out alternative explanations and

divergent opinions. Other subjects noted that the tool

would be a good tool to use at the beginning of an

analysis when they are working at the broad picture;

other tools could be used later to fill in gaps.

Results from User Evaluation

The CBR for KD system was evaluated during early 2005

in a study performed by National Institute of Standards and

Technology (NIST). Six Naval reservists, most of whom

had experience as analysts, participated in the study. They

had a variety of backgrounds mostly professional. After

receiving training, they each performed two analysis tasks,

one using Google for search as the baseline for comparison,

and one using CBR for KD for search. The task was to

find the information to use in a report and to provide the

outline of that report. The subjects were given two hours to

search for the information and provide the outline for the

requested report. Each task was based on a scenario that

described the subject of the report that the analyst was to

provide. One of the tasks was to provide a report on the

“Status of Russia’s Chemical Weapons Program” and the

other was to “Identify the Science and Technology Interests

of Iran by Analyzing the Organizational Structure of the

Universities.” The primary goal of the study was to

determine the utility of CBR for KD in terms of time

savings, lower cognitive workload, improved quality of

product, and increased amount of information. No subject

finished before the 2-hour limit. After the two hours, the

NASA TLX a cognitive workload instrument, (Dufort and

Other findings from the study identified user interface

aspects that need improvements. This is an area that is not

part of our current research. The case library associated

with the current prototype CBR for KD system is small and

may not cover the space necessary for a particular analyst’s

area of interest. For this evaluation we seeded the case

library with a few special cases. However the evaluation

identified needed extensions to functionality that should be

439

addressed by expanding the collection of cases. Later

evaluations have provided us suggestions for the redesign

of the user interface to align more closely with the thought

processes of the analysts.

Strategic Intelligence Research. Joint Military Intelligence

College. Washington, DC.

Conclusions

Etzioni, O. and Weld, D. A softbot-based interface to the

internet. CACM, 37(7):72--76, July 1994.

Dufort, P. and Reid, L.D., 2002, "NASA TLX Task Load

Index Evaluation Program User Guide", Prepared for

DCIEM.

We have presented some detail about the application of

case-based reasoning to a challenging new task: knowledge

discovery for information analysts. We have shown the

results of our research of the information analysts’ needs

and processes, the initial case representation, the overall

system design and the current state of implementation. In

conducting this research, we have been challenged on

numerous occasions as we applied case-based reasoning to

the broad task of knowledge discovery, and the even

broader domain of information analysis. This required

experimenting with approaches to apply case-based

reasoning to an area where there are not explicitly

represented preexisting cases. The project required making

explicit a conceptual model of search planning, an area that

the domain practitioners rarely think about.

We

conceptualized hypothetical user scenarios to guide the

exploration of system design in the context of different

layers of knowledge and likely types of questions that

analysts might ask both within and between layers. Cases

were created by mapping current user processes into

envisioned future technologies. We hope that our research

can ultimately become part of an analyst’s standard toolkit

to assist in effective knowledge discovery about threats to

U.S. strategic interests.

Hammond, K.. 1989. Case-Based Planning: Viewing

Planning as a Memory Task. San Diego: Academic Press.

Heuer, Richards J.. 2001. Psychology of Intelligence

Analysis, Center for the Study of Intelligence.

Jones, Morgan D. 1995. The Thinkers Toolkit, Three

Rivers Press, New York.

Kolodner, Janet L. 1993. Case-Based Reasoning, Morgan

Kaufmann.

Kolodner, J.; Simpson, R. 1989. The MEDIATOR:

Analysis of an Early Case-Based Problem Solver,

Cognitive Science, Vol. 13, Number 4: 507-549.

Knoblock, C. 1985 Planning, Executing, Sensing, and

Replanning for Information Gathering. In Proceedings of

the Fourteenth International Joint Conference on Artificial

Intelligence, Montreal, Canada.

Krizan, L. 1999. Intelligence Essentials for Everyone,

Joint Military Intelligence College, Washington, DC.

Lesser, V., et al. 1999. BIG: A Resource-Bounded

Information Gathering Decision Support Agent, UMass

Computer Science Technical Report 1998-52, Multi-Agent

Systems Laboratory, Computer Science Department,

University of Massachusetts.

Several areas are natural for further development of this

system.

One of these is the integration of more

opportunities for the user to prune the retrieved document

set. We have also done a small experiment with multilingual searching and have had some success. Design and

development of the maintenance tools to support this

system in an operational environment is needed. These are

three directions for further development of this tool.

Nodine, M., Fowler, J, et al. 2000. Active Information

Gathering in Infosleuth. International Journal of

Cooperative Information Systems Vol. 9, Nos. 1 & 2: 3-27.

World Scientific Publishing Company.

Patterson, E., Roth, E. and Woods, D. 1999. Aiding the

Intelligence Analyst in Situations of Data Overload: A

Simulation Study of Computer-Supported Inferential

Analysis Under Data Overload. The Ohio State University

Institute for Ergonomics/Cognitive Systems Engineering

Laboratory, Report ERGO-CSEL-99-02.

Acknowledgements

This paper is based upon work funded by the U.S.

Government and any opinions, findings, conclusions or

recommendations expressed in this material are those of the

authors and do not necessarily reflect the views of the U.S.

Government. The authors gratefully acknowledge the

evaluation work of Emile Morse and Jean Scholtz at NIST,

the assistance and contributions of our Georgia Tech

Research Institute Case-Based Reasoning for Knowledge

Discovery research team members: Laura Burkhart, Reid

MacTavish, Collin Lobb, and especially our Government

Contracting Officer's Technical Representative.

Whitaker, E. T. and Bonnell, R.D. 1992. Plan Recognition

in Intelligent Tutoring Systems, Intelligent Tutoring Media.

Whitaker, E. and Simpson, R. 2003. Case-Based Reasoning for

Knowledge Discovery. In Proceedings of Human Factors and

Ergonomics Society (HFES) Conference, Denver, CO.

Whitaker, E. and Simpson, R.2004. “Case-Based Reasoning in

Support of Intelligence Analysis,” In Proceedings of 17th Annual

FLAIRS Conference, Miami, FL.

References

Bodnar, J. 2003. Warning Analysis for the Information

Age: Rethinking the Intelligence Process. Center for

440