From: AAAI Technical Report WS-99-09. Compilation copyright © 1999, AAAI (www.aaai.org). All rights reserved.

Intelligent

Testing can be Very Lazy

TimMenzies1, 2BojanCukic

1NASA/WVU

Software Research Lab, 100 University Drive, Fairmont, WVUSA

2Departmentof ComputingScience and Electrical Engineering, West Virginia University, Morgantown,WVUSA

< tim@menzies,com, cukic@csee,wvu. edu>

Abstract

Testingis a searchprocessand a test suite is completewhen

the search has examined

all the comersof the program.Standardmodelsof test suite sizes are grossover-estimatessince

they are unawareof the nature of that search space.For example,onlya smallpart of the possiblesearch spaceis ever

exercisedin practice. Further,a repeatedresult is that a few

randomsearches often yields as muchinformation as more

thoroughsearchstrategies. Hence,onlya fewtests are needed

to samplethe range of behavioursof a program.

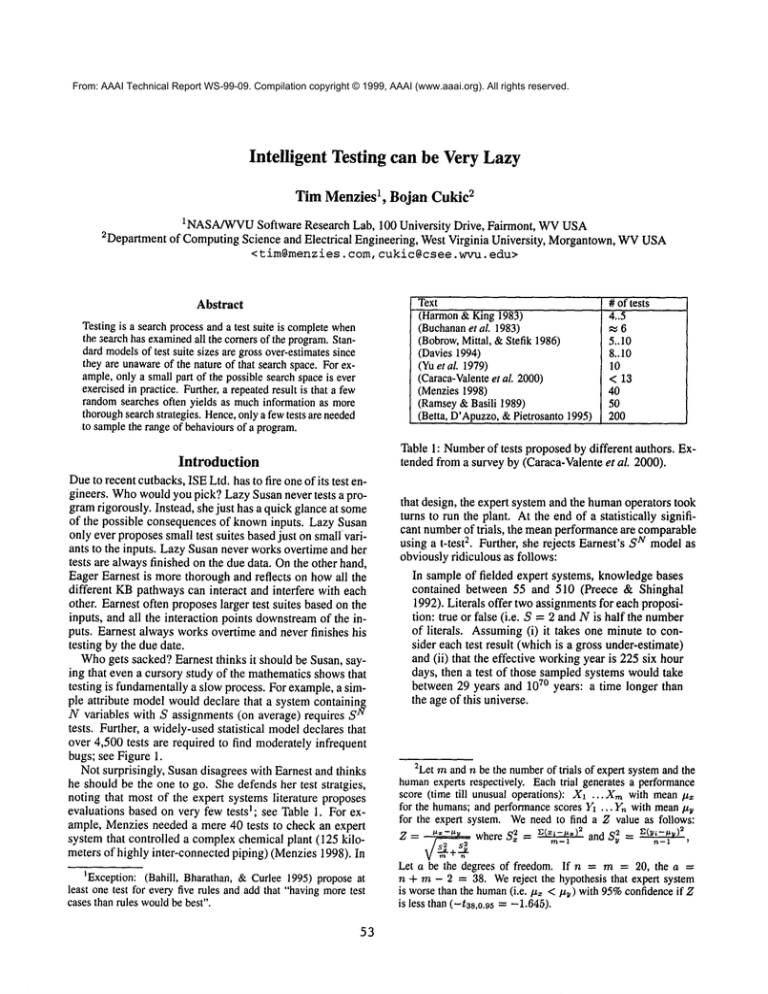

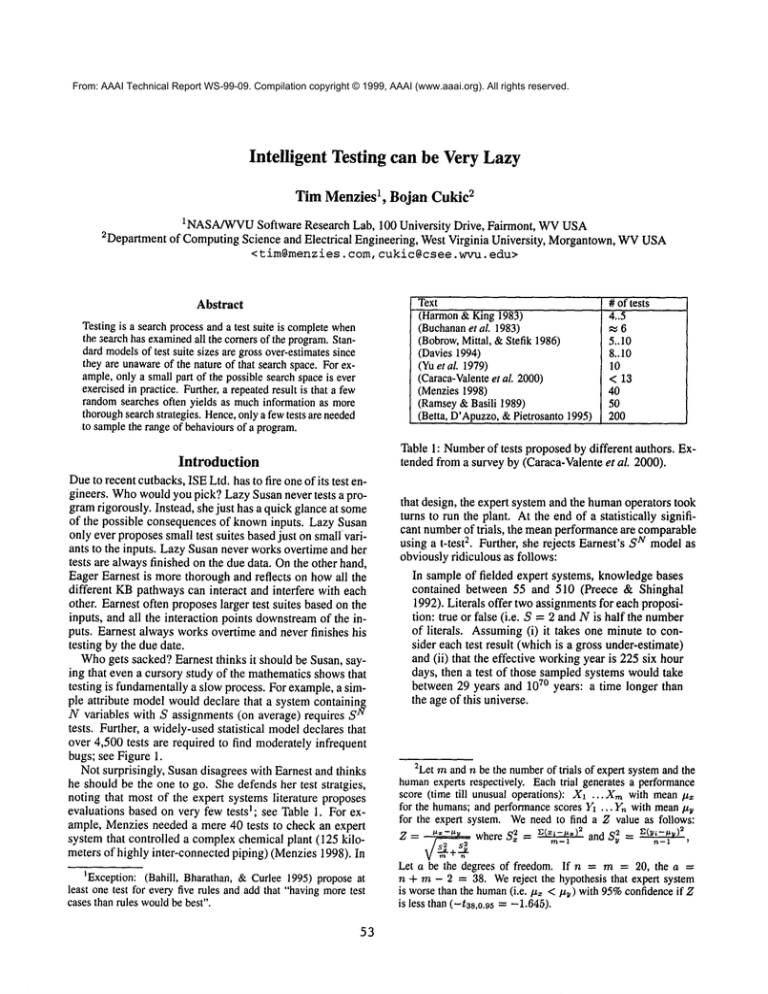

Text

(Harmon& King 1983)

(Buchanan

et al. 1983)

(Bobrow,Mittal, &Stefik 1986)

(Davies1994)

(Yuet al. 1979)

(Caraca-Valente

et al. 2000)

(Menzies1998)

(Ramsey&Basili 1989)

(Betta, D’Apuzzo,

&Pietrosanto1995)

# of tests

4..5

~-,6

5..10

8..10

10

< 13

40

50

200

Table 1: Numberof tests proposedby different authors. Extended from a survey by (Caraca-Valenteet al. 2000).

Introduction

Dueto recent cutbacks, ISELtd. has to fire one of its test engineers. Whowouldyou pick? LazySusan never tests a programrigorously. Instead, she just has a quick glance at some

of the possible consequences of knowninputs. Lazy Susan

only ever proposessmall test suites basedjust on small variants to the inputs. LazySusan never works overtime and her

tests are alwaysfinished on the due data. Onthe other hand,

Eager Earnest is morethorough and reflects on howall the

different KBpathwayscan interact and interfere with each

other. Earnest often proposeslarger test suites based on the

inputs, and all the interaction points downstreamof the inputs. Earnest always works overtime and never finishes his

testing by the due date.

Whogets sacked? Earnest thinks it should be Susan, saying that even a cursory study of the mathematicsshowsthat

testing is fundamentallya slow process. For example,a simple attribute modelwoulddeclare that a system containing

N variables with S assignments (on average) requires Iv

tests. Further, a widely-usedstatistical modeldeclares that

over 4,500 tests are required to find moderatelyinfrequent

bugs; see Figure 1.

Not surprisingly, Susan disagrees with Earnest and thinks

he should be the one to go. She defends her test stratgies,

noting that most of the expert systems literature proposes

evaluations based on very few testsl; see Table 1. For example, Menziesneeded a mere 40 tests to check an expert

system that controlled a complexchemical plant (125 kilometers of highly inter-connected piping) (Menzies1998).

1Exception:(Bahill, Bharathan, &Curlee 1995) propose

least one test for everyfive rules and addthat "havingmoretest

cases than rules wouldbe best".

53

that design, the expert systemand the humanoperators took

turns to run the plant. At the end of a statistically significant numberof trials, the meanperformanceare comparable

using a t-test 2. Further, she rejects Earnest’s SN modelas

obviouslyridiculous as follows:

In sample of fielded expert systems, knowledgebases

contained between 55 and 510 (Preece & Shinghal

1992). Literals offer two assignmentsfor each proposition: true or false (i.e. S = 2 and N is half the number

of literals. Assuming(i) it takes one minute to consider each test result (whichis a gross under-estimate)

and (ii) that the effective workingyear is 225six hour

days, then a test of those sampledsystems wouldtake

between 29 years and 1070 years: a time longer than

the age of this universe.

2Letmand n be the numberof trials of expert systemand the

humanexperts respectively. Eachtrial generatesa performance

score (time till unusual operations): XI ...Xm with mean#x

for the humans;and performancescores Y1 ... Yn with mean/~

for the expert system. Weneed to find a Z value as follows:

Z = ~ whereS~ = c<~i-~’)2

m-,and S~ = .~(~i-"v}~

n-i ,

j~+~

v,,,

~

Leta be the degrees of freedom. Ifn = rn = 20, thea =

n + ra - 2 = 38. Wereject the hypothesisthat expert system

is worsethan the human(i.e. #~ </z~) with 95%confidenceifZ

is less than (-ts8,o.95 = -1.645).

4603

w

1

0.9

0.8

0.7

"6 0.6

=Z" 0.5

"~

0.4

,~

0.3

~o 0.2

"

0.1

0

’-

../

7"

~10

100

1000

/ 1 failureIn 100..e--.

fl 1 failure

In1,000

--*--.

./ 1 failurein 101000

~ 1 failureIn 1001000

i" 1 failureIn 1,000,000

--=,-"

10000 100000 le+06

# oftests

le+07

le+08

Figure 1: Chanceof finding an error = 1 - (1 - failure rate) tests. Theoretically, 4603 tests are required to achieve a 99%

chance of detecting moderately infrequent bugs; i.e. those which occur at a frequency of 1 in a thousand cases. (Hamlet

Taylor 1990).

The research division of ISE Inc. has been hired to resolve this dispute. Their core insight is that testing samples

a search space. Someparts of the space contain desired goals

and someparts contain undesirable behaviour. Regardless of

howusers interpret different parts of the space, the testingas-search process is the same: a test suite is completewhen

it has lookedin all the corners of that search space.

This notion of testing=search covers numeroustesting

schemes:

¯ Oracle testing uses someexternal source of information

to specify distributions for the inputs and outputs

¯ Model-checkingfor temporal properties (Clarke, Emerson, &Sistla 1986) can be reduced to search as follows.

First, the modelis expressed as the dependencygraph betweenliterals. Secondly,the constraints are groundedand

negated. The generation of counter-examples by model

checkers then becomesa search for pathwaysfrom inputs

to undesirable outputs.

¯ A test suite that satisfies the "all-uses" data-flow coverage criteria can generateproof trees for all portions of the

search space that connectsomeliteral fromwhereit is assigned to whereverit is used (Frankl &Weiss 1993).

¯ Partition testing (Hamlet&Taylor 1990)is the generation

of inputs via a reflection over the search space looking for

key literals that fork the program’s conclusions into N

different partitions.

¯ The multiple-worlds reasoners (described below) divide

the accessible areas of a search space into their consistent

subsets.

Based on this insight of testing=search, ISE Research

madethe following conclusion. Table 1 proposes far fewer

tests than Figure 1 since Figure 1 has limited insight into the

devices being testing. Figure l’s estimates are grossly overinflated since it is unawareof certain regularities within the

software it is testing. ISE research bases this conclusionon

a literature review whichshows:

¯ Whilea static analysis of a programsuggests SN states,

in practice only a small percentage of those are exercised

by the systems inputs.

¯ Further, a review of inference engines showsthat a few

randomstabs into the search space often yields as much

information as morethoroughsearch strategies.

Theliterature reviewis presentedbelow.Notethat this article is framedin terms of knowledgeengineering. However,

there is nothing stopping these results from being applied to

software engineering (Menzies & Cukic 1999).

Studies with Data Sets

The sections discusses two studies with data sets showing

that the attribute space size of the data presented to an expert systemis far less than the potential upper boundof the

attribute space size of the KB(BN). This is consistent with

the used portions of search spaces being non-complex.Test

suites grown beyond some small size would yield no new

information since the smaller test suite wouldhave already

exploredthe interesting parts of the KB’ssearch space.

Avritzer et.aL studied the inputs given to an expert system. Eachrow of a state matrix stored one unique case presented to the expert system(and each literal in the inputs

were generate one columnin the matrix). After examining

355 days of input data, they could only find 857 different

inputs. There was massiveoverlap betweenthose input sets.

On average, the overlap between two randomlyselected inputs was 52.9%. Further, a simple algorithm found that

26 carefully selected inputs covered 99%of the other inputs while 53 carefully selected inputs covered 99.9%of the

other inputs (Avritzer, Ros, &Weyuker1996). If most naturally occuring test suites have such an overlap, then only a

small numberof tests will be required.

Avritzer et.al, looked at the inputs to a system. Coiomb

comparedthe inputs presented to an expert systemwith its

internal structure. Recalling the introduction, the internals

of a KBcan very large: S states per N variables implies

SN combinations. Each such combination could be represented as one row in a state matrix. Colombargues that

the estimate of SN is a gross over-inflation of effective KB

size. He argues that KBsrecord the very small regions of

experience of humanexperts. For example, one medical

expert system studied by Colombhad ,5 ’~v = 10z4. However, after one year’s operation, the inputs to that expert sys54

Isamp(theory)

for i := 1 to MAX-TRIES{

set all variablesto unassigned;

loop {

if all variablesare valued

return(currentassignment);

v := random unvaluedvariable;

assign v a randomlychosen value;

unit_propagate();

if contradictionexit loop;

}

} return failure

Figure 2: The ISAMPrandmoised-search theorem prover.

(Crawford & Baker 1994).

tern could be represented in a state matrix with only 4000

rows. That is, the region of experience exercised after one

N

year within the expert systemwas only a tiny fraction ofS

(4000 << 1014) (Colomb1999). If most systems have

feature, then only a few tests will be required to exercise a

KB’sregion of expertise.

Studies with Inference Engines

This section reviews two studies suggesting the extra effort

of the thorough engineer wouldbe wasted since the larger

thoroughtest suite wouldyield little moreinformation that

the smaller lazy test suite.

Recall the behaviourof our two test engineers:

¯ Lazy Susan explored a couple of randomly chosen paths

while Earnest considered all inputs and their downstream

interactions.

¯ Earnest is acting like an ATMS

device (DeKleer 1986).

The ATMS

takes the justification for every conclusions

and weaves it into an assumption network. At anytime,

the ATMS

can report the key assumptions that drive the

KBinto very different conclusions. Such a search, it was

thoaght, was a useful way to explore all the competing

options within a search space.

¯ LazySusanis acting like a locally-guidedbest-first search

across the KB. Whena contradiction is detected, Susan

(or the search engine) could look at the contradiction for

hints on howto resolve that problem.Susan(or the search

engine) could then instantiated those hints and search on.

Note that, as a results, the earnest ATMS

wouldfind many

options and the lazy searcher wouldonly ever find one.

Williams and Nayakcomparedthe results of their locallyguides search to the ATMS

and found that this locally-guide

contradiction resolution mechanismwas comparable to the

very best ATMS

implementations. That is, the information

gained from an exploration of all options was nearly equivalent to the informationgained from the exploration of a single option (Williams & Nayak1996).

In other work, Crawford and Baker compared TABLEAU,

a depth-first search backtracking algorithm, to ISAMP,a

randomised-search theorem prover (see Figure 2). ISAMP

A

B

C

D

E

F

TABLEAU:

full search

o~ Success Time(sec)

90

255.4

100

104.8

70

79.2

10o

90.6

80

66.3

81.7

100

ISAMP:

partial, random

search

%Success Time(sec) "rries

l0

7

100

100

13

15

100

11

13

21

45

100

19

52"

lO0

100

68

252"

Table 2: Average performance of TABLEAU

vs ISAMPon

6 scheduling problems(A..F) with different levels of constraints and bottlenecks. From(Crawford &Baker 1994).

randomlyassigns a value to one variable, then infers some

consequences using unit propagation. Unit propagation is

not a very thoroughinference procedure:it only infers those

conclusions whichcan be found using a special linear-time

case of resolution; i.e.

(,T,) A (~,T, or Yl ... Yn)[- (Yl or.." Yn)

(’~x) A (X or Yl Or... Yn) (Ylor.. . Yn)

After unit propagation, if a contradiction was detected,

ISAMP

re-assigns all the variables and tries again (giving

up after MAX-TR’IES

number of times). Otherwise, ISAMP

continues looping till all variables are assigned. LazySusan

approves of ISAMPwhile Earnest likes TABLEAU

since it

explores more options moresystematically.

Table 2 shows the relative performance of the two algorithms on a suite of scheduling problems based on realworld parameters. Surprisingly, ISAMP

took less time than

TABLEAU

to reach more scheduling solutions using, usually, just a small numberof TRIES.That is, a couple of randomexplorations of the easy parts of a search space yielded

the best results. Crawfordand Bakeroffer a speculation why

ISAMPwas so successful: their systems contained mostly

"dependent" variables which are set by a small numberof

"control" variables (Crawford &Baker 1994). If most systemshavethis feature, then large test suites are not required

since a few key tests are sufficient to set the control variables.

Experiments

with HTx

The results in the previous section support the argument

that search spaces are less complexthan Earnest thinks.

Hence,the test suites required to sample that search space

maybe very small. However,the sample size of the above

studies is very small. This section addresses the sample

size issue. Based on the HTxabductive model of testing (Menzies 1995; Menzies & Compton1997), a suite

mutators can generate any numberof sample testing problems3. Three HTx algorithms are HT4 (Menzies 1995),

3Informally,abductionis inferenceto the best explanation.

Moreprecisely, abductionmakewhateverassumptionsA whichare

required to reach output goals Outacross a theory T U A F- Out

withoutcausingcontradictionTUA~.L. Eachconsistentset of assumptionsrepresentsan explanation.If morethan one explanation

55

HT4-dumb(Menzies & Waugh1998), and HT0 (Menzies

& Michael 1999). HTxalgorithms were designed as automatic hypothesistesters for under-specified theories and are

a generalization and optimization of QMOD,

a validation

tool for neuroendocrinological theories (Feldman, Compton, & Smythe 1989). HTx and QMOD

assumes that the

definitive test for a theory is that it can reproduce(or cover)

knownbehaviour of the entity being modeled. Theory T1 is

a better theory than theory T2iff

cover(T1)

>>cover(T2)

Below, we will showexperiments where HTxis run millions

of times over tens of thousands of models. The results endorse the widespread existence of simple search spaces. A

small number of randomsearches (by HT0)covered nearly

as muchoutput as extensive searches by HT4.Further, the

sample size is muchlarger than that offered by Williams,

Nayak, Crawford, and Baker.

An Informal Model of Testing

This section gives a quick overviewof the intuitions of HTx.

Thenext section details these intuitions.

At runtime, an inference engine explores the search space

of a program. A given test applies someinputs (In) to the

programto reach someoutputs (Out). The inference engine

maybe indeterminate or chosen randomly (e.g. by ISAMP)

and so the pathwaytaken from inputs to outputs mayvary.

Hence,we call the intermediaries assumptions(A). That is:

< Out, A >= f(ln,

HTx: An More Formal Model of Testing

This section describes the details of HTx.The next section

describes an example.

Werepresent a theory as a directed-cyclic graph T = G

containing vertices {t~,~,...} with roots roots(G) and

leaves leaves(G). A vertex is one of two types:

¯ Andscan be believed if all their parents are believed.

¯ Ors can be believed if any their parents are believed

or it has been labeled an In vertex (see below). Each

or-node contradicts 0 or more other or-nodes, denoted

no(Vi) = {Vj, Vt~, ...}. The average size of the no

sets is called eontraints(G). For propositional systems,

contraints(G) = 1; e.g. no(a) = {~a}.

In and Out are sets of vertices from ors(G). A proof

P _C G is a tree containing the vertices uses(Px)

{ V~,~,... }.Theproof tree has:

¯ Exactly one leaf whichis an output; i.e.

Ileaves(uses( P~) )l =

KB)

where f is the inference engine and KBare the literals

{a..z} which we can divide as follows:

In

Our testing intuition is that a test case beamsa searchlight from In across A to Out. Sometimes,the light reveals

somethinginteresting. Wecan stop testing whenour searchlight stops finding anything new. Whatwill be shownbelow

is that a few quick flashes showas muchas lots of poking

aroundin corners with the flashlight.

Vi 6 leaves(uses(P,))

¯ 1 or more roots roots(uses(Pc)) C_

Notwo proof vertices can contradict each other; i.e.

Out

< Vi, Vj >6 uses(ex) A Vj ~. no(Vi)

a, b, c, ..... , l, m,n, ..., ...x, y, z

A

That is, fromthe inputs a, b, c, .... wecan reach the outputs

...x, y, z via the assumptions...l, m, n, .... Someof a..z are

positive goals that we are trying to achieve while someare

negative goals reflecting situations we are trying to avoid.

In the general case, only a subset of Out will be reachable

using somesubset of the In, A since:

¯ The search space may not approve of connections between all of In and Out.

* Someof the assumptions maybe contradictory. Multiple

worlds of beliefs maybe generated when contradictory

assumptionsare sorted into their maximalconsistent subsets,

, In the case of randomizedsearch, only parts of the search

space may be explored. For example, we could run

ISAMPfor a finite numberof TRIESand return the TRY

wb~chthe maximumnumber of assignments to Out.

is found,someassessment

criteria is appliedto select the preferred

explanation(s).

A Vi 6

Aproof’s assumptionsare the vertices that are not inputs or

outputs;i.e.

assumes( P, ) = uses( Px )-roots(uses( Px ) )-leaves(uses(

pairs

A

test

suite

is

N

We assumed

{< lnx,Outa >,... < lnn,Outn >.

that each Ini, Outi contains only or-vertices. Each output Outi can generated 0 or more proofs {P*,Pu, ".}.

A world is a maximal consistent subsets of the proofs

(maximal w.r.t, size and consistent w.r.t, the no sets)

denoted proofs(Wi) = {P,, P~, ...}. Worlds to proofs is

many-to-many.The cover of a world is howmanyoutputs it

contains;i.e.

lUv,

cover(W/)

proo/s(W,)

^ Zeaves(P I)}

[Outl

Wemakeno other commenton the nature of Outi. It may

be someundesirable state or somedesired goal. In either

case, the aimof our testing is to find the worldsthat cover

the largest percentageof Out.

56

Ahypothetical economicstheory

foreign

sales

domestic

sales

public

confidence

company

profits

inflation

wages

restraint

corporate

spending

account

balance

world W.2

worldW.1

foreign [

] sales=up]

company

profits=up

trade

deficit

corporate

spending=up

public

confidence=up

domestic

sales=down I

f°reign

I sales=up]

company

profits=down

public

confidence=up

corporate

¢"~.n~t ," .,._-~,~.-’n’~

~ ....

spending=down

investor

confidence

Figure 3: Twoworldsfor outputs (ellipses) from inputs (squares). Assumptions

are worldvertices that are not inputs or outputs.

Note that contradictory assumptions are managedin separate worlds.

Pt foreignSales=up, companyProflts=upt,corporateSpending=upt,investorConfldence=up

domesticSales=down,companyProflts=down~,

corporateSpending=downT,

wageRestraint=up

domesticSales=down,companyProfits=downT,

infation=down

P4 domesticSales=down,companyProflts=down~,

inflation=down, wagesRestraint=up

P5 foreignSales=up,publicConfidence=up,inflation=down

foreignSales=up,publicConfidence=up,

inflation=down,wageRestraint=up

Table 3: Proofs connectinginputs to outputs. Xt, and X~’denotethe assumptionsand the controversial assumptionsrespectively.

An Example

Figure 3 (left) showsa hypothetical economicstheory written in the QCMlanguage (Menzies & Compton1997). All

theory variables have three mutually exclusive states: up,

downor steady; i.e. T~o(Va=up) ---- {Va=down, Va=steady)

and constraints(G) = 2. These values model the sign of

the first derivative of these variables (i.e. the rate of change

in each value). In QCM,x ~ y denotes that y being up

or downcould be explained by x being up or down respectively. Also, x ~ y denotes that y being up or downcould

be explained by x being downor up respectively. Somelinks

are conditional on other factors; for example/f transport

then education ~-~ literacy. To process such a link, QCM

adds a conjunction betweeneducation and literacy. The preconditions of that connection are noweducation and transport. QCM

also adds conjunctions to offer explanations of

steadies: the conjunction to competingupstream influences

can explain a steady.

Consider the case where the inputs In is (foreignSales=up, domesticSales=down)and the goals Out are (investorConfidence=up, inflation=down, wageRestraint=up).

There are six proofs that connect In to Out (see Table 3).

These proofs comprise only or-nodes. These proofs contain

assumptions (variable assignments not found in In tJ Out).

Someof these proofs make contradictory assumptions A;

e.g. corporateSpending=up in P1 and corporateSpending=downin/°2. That is, we cannot believe in P1 and P2 at

the sametime. If we sort these proofs into the subsets which

we can believe at one time, we get worlds W1(Figure 3,

middle) and W2(Figure 3, right). W1is a maximalconsistent subset of pathwaysthat can be believed at the same

time; i.e. {P1, Ps, P~}. W~is another maximalconsistent

subset: {P2,Pa,P4,P6}. The cover of W1is 100%while

the cover of W2is 67%.

Recall that HTxscores a theory by the maximum

cover of

the worlds it generates. Hence, our economicstheory gets

full marks: 100%. HT4(and QMOD

before it) found errors in a published theory of neuroendocrinological(Smythe

1989) when its maximum

cover was found to be 42%. That

is, after makingevery assumption possible to explain as

manyof the goals as possible, only 42%of certain published observations of humanglucose regulation could be

explained. Interestingly, these faults were found using the

data published to support those theories. This suggests that

HT4-styleabductivevalidation is practical for assessing existing scientific publications. HTxis practical for many

other real-world theories. For example, one implementation

of HT4,was fast enoughto process at least one published

sample of real-world expert systems (Menzies1996).

HT4, HT4-dumb, HTx

The difference between the HTxalgorithms is how they

searchedfor their worlds:

¯ HT4found all proofs for all outputs, then generated all

the worlds from those proofs. Next, the worlds(s) with

57

1.

2.

3.

4.

Figure 4: An expansion of the left-hand-side QCM

theory

into IEDGEand XNODE.

Note that for the XNODE

expansion, the "~t variable wasA.

largest cover was then returned.

¯ HT4-dumb

was an a crippled version of liT4 that returned

any world at all, chosen at random.It was meant to be a

straw-mansystem but its results were so promising (see

below) that it lead to the developmentof HT0.

¯ HT0 is a randomised each engine that ~zx.X-TRIES

times, finds 1 proof for each memberof Outi. Out is

explored in a randomorder and whenthe proof is being

generated, if morethan one option is found, one is picked

at random.If the proof for Outj is consistent with the

proof found previously for Outi, (i < j), it is kept. Otherwise, HT0moveson to Out~ (j < k) and declares Outj

unsolvable. After each TRY, HT0compares the "best"

world found in previous TRIESto the world found in the

try and discards the one with the lowest cover.

Addedinfluencesbetweenvariables.

Corruptedthe influence betweentwovariables; e.g. proportionality++wasflippedto inverseproportionality-- or

visa versa.

Experimented

with different meaningstimewithin the system. In the explicit node (XNODE)

interpretation

time, all ax variables implied a link i)

(x at Time

(z at Tiraei+l). In the implicit edge lEDGE

interpretation of time, all edges z -~ y also implied a link

(z at Time~) ~ (V at Time’+l); (a 6 { + +,--}).

XNODE

and lEDGE

are contrasted in Figure 4. Whythese

twointerpretations of time?Thesewererandomlyselected

froma muchlarger set of timeinterpretations studied by

(Waugh,Menzies,&Goss1997).

Forcedthe algorithm to generate different numbersof

worlds. Experiments showedthat the maximum

number

of worldsweregeneratedwhenbetweena fifth to threefifths of the variablesin the theorywereunmeasured;

i.e.

U=20..60whereU = 100- (I;"l+l°"t[/*l°°

and

IVl

is

the

Ivl

numberof variables in the theory. Themutatorsbuilt In

and Outsets withdifferent U settings. Valuesfor In and

Outwerecollected using a mathematical

simulationof the

fisheries.

Table 4" Problem mutators used in the HT4vs HT4-dumb

study

fish

growth

rate

/

~++ /++ ~

changein fish

population

|

[’"

\

Susan approves of HT0since, like her, it uses a lazy

methodto study a program. Earnest likes HT4since it explores more of the program. For example, says Earnest, if

Lazy Susan had stumbled randomly on W2of Figure 3, and

did not look any further to find W1,then she wouldhave inappropriately declared that the economicsmodelcould only

explain 67%of the outputs. Susanreplies that, on average,

the cost of such extra searchingis not justified by its benefits. The following studies comparingHTxalgorithms support Susan’s case.

\

~ "

\÷÷1

fish

density

/++

fish

catch

catch

proceeds

/ ++

net

income

y

investment

fraction

++

~+÷

HT4 vs HT4-dumb

Menzies and Waugh compared HT4 and HT4-dumbusing

tens of thousands of theories (Menzies & Waugh1998).

Starting with a seed theory (fish growingin a fishery, see

Figure 5), automatic mutators were built to generate a wide

range of problems(see Table 4). Whenthese problems were

run with HT4 and HT4-dumb,the maximumdifference in

the cover was 5.6%;i.e. very similar, see Figure 6. That is,

in a large number(1,512,000) of world generation experiments, (l) manydifferent searches contain the same goals,

(2) there was little observedutility in using morethan one

world.

boat

purchases

~÷÷

change in

boat numbers

/ ÷+

boat

maintenance

boat

decomissions

catch

potential

Figure 5: The QCMfisheries model contains two dx variables: change in fish population and change in boat numbers.

58

10000.

A

i

HT4

..o

....

HT0

"-*

....

y=O(XA2)

1000

100

Q)

E

10

2II

>-

1

0,1

J ,

I

0

3000

6000

I

I

9000

12000

X=number

of clauses

I

I

15000

18000

Figure 7: RuntimesHT4(top left) vs HT0(bottom). HT0was observed to be 2) (R~= 0. 98).

XNODE,U%unmeasured

100

90 ~

l~

~’~

~

8O ~t~

meny;U-0 -c-one:U=o -*-.

many;U=20

-*--one;U-20-+-.

many;U=40

-m-one;U=40

.o.many;U=60

he;U=60-N-.

70

100

90

80

lEDGE,U%unmeasured

i ,

many;U= 0 ~

one;U=O- -.

meny;U=20

one;U=20

¯ -meny;U-40

one;U-40- "-

\\

~,~

~

me,y;u.6o

7O

In other experiments, the maximum

cover found for different values of MAX-TRIES

was explored. Surprisingly,

the maximumcover found for MAX-TRTES=

5 0 was found

with MAX-TRIES

as low as 6.

In summary,a small number of quick random searches

(HT0)foundnearly as muchof the interesting portions of the

KBas a careful, slower, larger numberof searchers (HT4).

6O

!oo

50

Conclusion

5O

40

4O

30

3O

~

20 .,,

i

i

I t

5

10

1517

Number

of corrupted

edges;max=l7

2O

5

10

1517

Number

of corrupted

edges;

rnax=l 7

Figure 6: Comparing coverage of Out seen in 1,512,000

runs of the HT4(solid line) or HT4-dumb

(dashed line)

gorithms with different percentages of unmeasuredU variables. X-axis refers to howmanyedges of Figure 5 were

corrupted (++, -- flipped to --, ++ respectively.)

HT4 vs HT0

HT4-dumbwas implemented as a back-end to HT4. HT4

would propose lots of worlds and HT4-dumbwould pick

one at random. That is, HT4-dumb

was at least as slow as

HT4.However, it workedso astonishingly well, that HT0

was developed. Real world and artificially generated theories were used to test HT0.A real-world theory of neuroendocrinology with 558 clauses containing 91 variables with

3 values each (273 literals) was copied X times. Next,

of the variables in in one copy were connected at randomto

variables in other copies. In this way, theories between30

and 20000 Prolog clauses were built using Y=40, average

sub-goals

elattses

clause ~ 1.7, literals = 1..5.5. Whenexecuted with

MAX-TRIES=50

the O(N2) curve of Figure 7 was generated. HT4did not terminate for theories with more than

1000 clauses. Hence, we can only compare the cover of

HT0to HT4for part of this experiment. In the comparable

region, HT0found 98%of the outputs found by HT4.

On average, exploring a theory is not as complex as one

mightthink. Testing is a search process and a test suite is

complete whenthe search has examinedall the corners of

the program.Often a few lazy explorations of a search space

yields as muchinformation as more thorough searches.

Philosophically, this must be true. Our impressions are

that humanresources are limited and most explorations of

theories are cost-bounded. Hence, manytheories are not

explored rigourously. Yet, to a useful degree, this limited

reasoning about our ideas works. This can only be true

if our theories yield their key insights early to our limited

tests. Otherwise, we doubt that the humanintellectual process could have achieved so much.

Earnest strongly disagrees with the last paragraph, saying

that such an argumentrepresents the height of humanarrogance; i.e. once again humanityis claiming to have some

special authority over the randomuniverse around us. Lazy

Susan does not have the heart to disagree. She’d just been

promotedwhile Earnest had been sacked. Her view is that

this arrogance seemsjustified, at least based on the above

evidence.

She had lunch with Earnest on his last day. As he cried

into his soup, he warnedthat the aboveanalysis is overly optimistic. The results of this article refer to the averagecase

behaviourof a test rig. In safety-critical situations, such an

average case analysis is inappropriate due to the disastrous

implications of the non-average case. Secondly, these resuits only refer to the numberof tests that can detect anomalous behaviour. Oncean error is found, it must be localized and removed.Strategies for fault localization and repair are exploredelsewherein the literature (Shapiro 1983;

Hamscher, Console, & DeKleer 1992).

Susanpaid for that last lunch using her pay rise: it seemed

the decent thing to do.

59

Acknowledgements

SEKE ’99, June 17-19, Kaiserslautern,

Germany. Available from hCtp://research.ivv.nasa.gov/docs/

Cechreports/1999~NASAIW- 99- 007. pdf.

Menzies, T., and Waugh, S. 1998. On the practicality

of

viewpoint-based requirements engineering. In Proceedings,

Pacific Rim Conference on Artificial Intelligence, Singapore.

Springer-Verlag.

Menzies, T. 1995. Principles for Generalised Testing of Knowledge Bases. Ph.D. Dissertation, University of NewSouth Wales.

Avaliable

from http: //www.cse. unsw.edu.au/~timm/

pub/docs/ 95 thesis.ps .gz.

Menzies, T. 1996. Onthe practicality of abductive validation.

In ECAI’96. Available from http: //www. cse. unsw. edu.

au/~timm/pub/docs/96abvalid.ps.gz.

Menzies,T. 1998. Evaluationissues with critical success metrics.

In BanffKA ’98 workshop. Available from ht:tp: //www. cse.

unsw. EDU. AU/~ timm/pub/docs

/ 97evalcsm.

Preece, A., and Shinghal, R. 1992. Verifying knowledgebases by

anomalydetection: Anexperience report. In ECA!’92.

Ramsey,C. L., and Basili, V. 1989. Anevaluation for expert systems for software engineering management.IEEE Transactions

on Software Engineering 15:747-759.

Shapiro, E.Y. 1983. Algorithmic program debugging. Cambridge, Massachusetts: MITPress.

Smythe, G. 1989. Brain-hypothalmus, Pituitary and the Endocrine Pancreas. The Endocrine Pancreas.

Waugh,S.; Menzies, T.; and Goss, S. 1997. Evaluating a qualitative reasoner. In Sattar, A., ed., AdvancedTopics in Artificial

Intelligence: lOth Australian Joint Conferenceon AI. SpringerVerlag.

Williams, B., and Nayak,P. 1996. A model-basedapproach to reactive self-configuring systems. In Proceedings, AAAI’96, 971978.

Yu, V.; Fagan, L.; Wraith, S.; C!ancey, W.; Scott, A.; Hanigan,

J.; Blum, R.; Buchanan, B.; and Cohen, S. 1979. Antimicrobial Selection by a Computer: a Blinded Evaluation by Infectious Disease Experts. Journal of AmericanMedical Association

242:1279-1282.

This work was partially

supported by NASAthrough cooperative agreement #NCC2-979.

References

Avritzer, A.; Ros, J.; and Weyuker,E. 1996. Reliability of rulebased systems. IEEESoftware 7(>--82.

Bahill, A.; Bharathan, K.; and Cudee, R. 1995. Howthe testing techniques for a decision support systems changedover nine

years. 1EEETransactions on Systems, Man, and Cybernetics

25(12):1535-1542.

Betta, G.; D’Apuzzo, M.; and Pietrosanto,

A. 1995. A

knowledge-basedapproachto instrument fault detection and isolation. IEEETransactions of Instrumentation and Measurement

44(6):1109-1016.

Bobrow,D.; Mittal, S.; and Stefik, M, 1986. Expert systems:

Perils and promise. Communicationsof the ACM29:880-894.

Buchanan,B.; Barstow, D.; Bechtel, R.; Bennet, J.; Clancey, W.;

Kulikowski, C.; Mitchell, T.; and Waterman,D. 1983. Building

Expert Systems, E Hayes-Roth and D. Watermanand D. Lenat

(eds). Addison-Wesley.chapter Constructing an Expert Sytem,

127-168.

Caraca-Valente,J.; Gonzalez,L.; Morant,J.; and Pozas, J. 2000.

Knowledge-basedsystems validation: Whento stop running test

cases. International Journal of Human-ComputerStudies. To

appear.

Clarke, E.; Emerson, E.; and Sistla, A. 1986. Automatic verification of finite-state concurrent systems using temporallogic

specifications.

ACMTransactions on ProgrammingLanguages

and Systems 8(2):244--263.

Colomb, R. 1999. Representation of propositional expert

systems as partial functions. Artificial Intelligence (to appear). Available

from http://www.csee.uq.edu.au/

~ colomb/PartialFunct ions.html.

Crawford, J., and Baker, A. 1994. Experimental results on the

application of satisfiability algorithms to schedulingproblems.In

AAAI ’94.

Davies, P. 1994. Planning and expert systems. In ECA1’94.

DeKleer, J. 1986. An Assumption-BasedTMS.Artificial Intelligence 28:163-196.

Feldman, B.; Compton, P.; and Smythe, G. 1989. Hypothesis Testing: an Appropriate Task for Knowledge-BasedSystems.

In 4th AAAl-SponsoredKnowledgeAcquisition for Knowledge.

based Systems WorkshopBanff, Canada.

Frankl, P., and Weiss, S. 1993. Anexperimental comparisonof

the effectiveness of branch testing and data flow testing. IEEE

Transactions on Software Engineering 19(8):774-787.

Hamlet, D., and Taylor, R. 1990. Partition testing does not inspire confidence. IEEE Transactions on Software Engineering

16(12):1402-1411.

Hamscher,W.; Console, L.; and DeKleer, J. 1992. Readings in

Model-Based Diagnosis. Morgan Kaufmann.

Harmon,D., and King, D. 1983. Expert Systems: Artificial Intelligence in Business. John Wiley & Sons.

Menzies, T., and Compton,P. 1997. Applications of abduction:

Hypothesistesting of neuroendocrinologicalqualitative compartmental models. Artificial Intelligence in Medicine 10:145-175.

Available from http : //www. cse. unsw. edu. au/~ tim.m/

pub/docs/ 96aim.ps. gz.

Menzies, T., and Cukic, B. 1999. Whenyou don’t need to re-test

the system. In Submittedto ISRE-99.In preperation.

Menzies, T., and Michael, C. 1999. Fewer slices of

pie: Optimising mutation testing via abduction.

In

60