EFFECTIVENESS OF SAMPLING BASED SOFTWARE PROFILERS IC. J. INDIA

advertisement

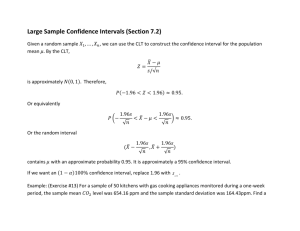

EFFECTIVENESS OF SAMPLING BASED SOFTWARE PROFILERS IC. V. Subramaniam and Matthew J. Thazliuthaveetil Computer Science and Automation, Inclian Institute of Science, Bangalore 560012, INDIA Email: (l;vs,mjt}Ocsa.iisc.ernet.in been compiled for profiling. Tlie counters are later used to estimate how the total execution time of the program was spent in its various subprograms [3]. Weinberger [3] suggests the following sources of inaccuracies in the profiles generated by prof: (i) One or more of the counters corresponding to (8 byte) chudis of code may straddle two subprograms, in which case the counts may get attributed to the wrong subprogram. (ii) If event lifetimes are much smaller than the sampling period, a short lived subprogram may never be sampled. (iii) A resonance Abstract Profilers are a class of program monitoring tools which aid in tuning performance. Profiling tools which employ PC (Program Counter) sampling to monitor program dynamics are popular owing to their low overhead. However, the effectiveness of such profilers depends on the accuracy and coinpletenes of the measurements made. We study the methodology aiid effectiveness of PC sampling based software profilCl3 I. INTRODUCTION or beating effect could occur when the sampling period and the time taken to execute a loop are I lentical. Ponder and Fateman [4]also identify the resonance effect as a potential source of inaccuracies in prof in their analysis of inaccuracies in gprof, but argue that such instances of resonance are uncommon in real programs. The other two problems cannot be ignored as easily. In the presence of these and other possible sources of error, a profile generated by prof only a rough estimate of program behaviour. T racy of the measurement could possibly be increased by doing more profiling runs. This prompts the following questions: (i) flow approximate are the measurements made b y p r o f ? ( z i ) Can these approximate measurements also be inaccurate? (iii) How many profiling runs are required to arrive at an accurate estimate of program behaviour? To illustrate the degree of inaccuracy, we conducted the following experiment: Five profiling runs of dhrystone’ were done on the same machine under identical workload conditions. The results of the experiments are shown in Table I. Observe that even after 5 profiling runs, tkemost frequently executed subprograms cannot be positively identified. We conclude that measurements made by prof can be quite inaccurate, and question the rationale of performing multiple profiling runs - with each run contributing a 100% profiling overhead: just to arrive at a “rough” estimate of program locality. There is clearly a need to better understand the causes of these inaccuracies. Profilers arc. software tools that provide the programmer wit.li estimates of how much execution time is spent in the various components of his program. Commonly used profilers are known to suffer from deficiencies. We evaluate the effectiveness of profilers based on their Accuracy (reflecting the c1osenc:ss of the measurement made by the profiler to the actual case), and Variation (across profiling runs; a good profiler should show little or no variation in measurements) [l]. In this paper we study profilers based on PC (Program Counter) sampling, examples of which include lJnix prof and Borland Turbo Profiler [2] operating in the passive mode. In the remainder of this paper, the term prof is used for any profiler that uses this profiling technique. In t.ltis paper, we study prof inaccuracy and its causes, and suggest a teclii?iqrieto enhance accuracy. 11. MOTIVATION The accuracy of the profile generated by a profiler is often important. To a programmer using a profiler as a decision making tool i n optimizing portions of a program, wasted effort could result from such inaccuracies. Execution profilers such as prof are expected to provide accurate measurements at a low time overhead. prof associates an array of countcm with the program to be profiled. The program itself is vic-i:cd as a sequence of equal sized chunks (typically 8 bytes of profiled rode), and one counter is associated with each such chunk. O n every clock tick Jiiiiiig the execution of the program being profiled. the counter associated with thr. sampled PC value is incremented. This activity is initiated by the timer interrupt handler, for programs that have 0-7803-2608-3 __ ----- ‘?O,OOO iterations of the synthetic berichmark Dhrystone 1 [5] E TABLE I prof Proc main I I I 1:%i results of top 6 procedures on 5 successive runs of dhrystone - 20,000 iterations I %t Proc I I I %t Proc %t Proc I 16.6 I strcpy I 14.0 Procl I 21.1 I main 7.5 12.8 11.0 9.9 8.7 7.6 15.4 11.4 10.3 F u n d 7.4 strcmp 6.9 main ProcJ strcpy I %t I 16.6 main Procl strcpy strcpy strcmp 11.8 strcmp Proc-8 -write 6.5 111. CAUSES O F INACCURACY A N D VARIATION We differentiate between profile inaccuracy and variation The inaccuracy of any one profiling run is the difference between the profile it generates and the actual distribution of cycles among the subprograms of the program. For a given input data set, a program should follow the same execution path in different runs. Ideally, we would expect neither the total number of cycles taken by the program, nor the distribution of these cycles across subprograms, to vary across these runs. Therefore, given that the the prof sampling interrupt occurs at fixed, periodic intervals during the execution of a profiled program, profiles should not vary across profiling runs. We identify the following as causes of profile inaccuracy: process is rescheduled, its cache context must be reconstructed. The time taken for the profiled process to rebuild it's cache context will depend on (a) how much of the cache is occupied by a process during one quantum, and (b) the background load, which characterizes the number of quanta after which the profiled process is scheduled again. Having identified the possible causes of error and variation in results reported by prof, we studied the effect of each through trace driven simulation. acmss profiing runs. r t t t t t t t Beating Effect:- As mentioned earlier, it is possible that the sampling interval and the time taken to execute a loop are identical. As a result, an inordinate proportion of the PC samples taken might be to a particular subprogram. procedure 1(PI) Gmnularity of Sampling:. Consider a system operating at 12 Mhz, with the clock tick (timer interrupt) occurring every 10 ms. For a processor that can execute one instruction per cycle, about 120,000 instructions would be executed between two PC samples. Many subprograms might have single invocation instruction path lengths that are much shorter than this. Such short lived events occurring between sampling interrupts might escape capture altogether. Figure 1 illustrates this problem. Profile variations across multiple runs can be attributed to external events -events caused by other activities executing on the system, resulting in changes to the effective sampling interval. This background load on the system can affect the sampling interval in the following ways: t Fig. 1. Processor Pre-emption:. 1/0 activity on the system gener- #samples actual time profiling interrupt Error in prof results due to large sampling interval t t ates interrupts that are serviced at high processor priority, pre-empting the execution of the profiled process. From the perspective of the profiled process, this has the effect of changing the sampling interval, as illustrated in Figure 2. The ftuctuation in sampling interval is a function of the interrupt frequency' which is related to the load on the system. Quantum Size = 24 Interrupt Service = 6 Cache Effect:. At the end of a CPU quantum, when another process gets scheduled instead of the profiled process, the cache context built up by tlie profiled process is replaced by that of newly scheduled process. Thus, when the profiled Effective Quantum Size = 18 interrupt Service t Timer tick Reduction in the sampling interval due to interrupts generated by background load Fig. 2. 2 ing load as follows: The number of lines lost by the profiled process on a context switch is assumed to increase exponentially with the increase in background load. As both the Instruction Cache and the Data Cache are assumed to be small, we assume that the entire cache context is lost on a fully loaded machine [9]- However, on a system with no load, the profiled process is the only process on the system, and so the loss in cache context is only due to the execution of the profile interrupt handler and the context switching code. We consider only the effects of the profile interrupt handler. The loss in cache context due to the execution of thekterrupt handler is viewed .as a loss of a fixed set of lines in the I Cache (due to execution of the handler code) and a loss of a set of lines from tbe D Cache. The address of the lost lines in the D Cache depends on the address of the currently executing instruction and the loss can be attributed to the incrementing of the profile countefs by the handler. All of our simulation assumptions were validated through experiments performed under controlled conditions [lo]. IV. SIMULATION In our simulations, we assumed a similar computing environment to that on which the experirent described earlier was conducted - a Unix workstation based on the htIPS 2000 processor. The simulations were driven by traces generated on that system using p i x i e [SIwith the -idtrace option. y e used the traces of 20,000 iterations of the synthetic benchmark dhrystone2 (referred to as dhry), the SPICE benchmxk from the Perfect benchmark suite (referred to as as) [7],and the Monte Carlo simulation benchmark QCD2 from the Perfect benchmark suite (referr-4 to as lgs). Each trace file was approximately 40h4B in size, correspondingto 15-20 million inskructions executed. Simulation Model System modelling:. We assume that the processor operates with a 12MHz clock, with an SKB direct mapped data cache and a 16KB direct mapped instruction cache. The line size of the data cache is 4 bytes, and there is a cache miss penalty of 5 cycles. We assume that the same parameters hold for the instruction cache a.. well. There is a 4 cycle stall between successive memory store instructions. A context switch is associated with every profiling interrupt. This assumption was made after we obsecved that the getrusage0 report for the number of context switches during a profiling run is identical to the number of samples collected by prof. The prof sampling period is 10 ms. We assume an overhead of 4500 cycles for every sampling period. This overhead can be attributed to the execution of the profiling interrupt handler and the context switch that occurs at the end of the sampling period. v. RESULTS We quantify the accuracy of a profile relative to the distribution of cycles among the subprograms of the program under certain ideal con'ditions, which we refer to as the true histogram. The true histogram is defined as the normalized number of cycles per subprograms of the profiled program executing under no load. Under taese conditions, the profiled process is the only process in zxecution. The true histogram is estimated in the absence of operating system overheads due to context switching and profiling. We computed the true histograms for each of our benchmark program traces through simulation. The inaccuracy of a measured profile was then quantified as its deviation from the true histogram. We use the chi square measure to quantify the difference between two histograms. Simulations of profiled execution were carried out under no l,oad, half load and full load conditions, with load characterized by interrupt activity and/or loss in cache context. Figure 3 compares the true histogram of css and the simulation results under no load conditions. The chi square values showing the error in the results reported by prof under no load conditions for each of the application traces is shown in column 1 of Table 111. Table I1 compares the measurements made by prof under varying load conditions using 4he chi square measure. From Figure 3, it is clear that the error is not due to sampling errors in just one or two subprograms, but across many subprograms, eliminating the resonance phenomenon as source of error in this case. It appears that the likely cause of the errors is the fact that the sampling interval is too large to capture all events. To study this hypothesis, we reran the simulations with an initial skew of a few cycles, i.e., by shifting all the samples by a few cycles. W e would expect this shift to have little effect on the measurements made, but the simulation results indicate otherwise. Table IV shows the chi-square measure between the skewed System workload modelling:. The number of processes contending for the CPU has a significant impact on the measurements made by prof. Two factors that are effected by increases in load are (i) increased loss of cache context, and (ii) increased interrupt handling overheads. Other events, such as page fault handling, are assumed not to affect the effective sampling period; we assume that they are not accounted against the profiled process even if they occur in its CPU quanta. *Systemworkload is therefore modelled using two parameters: 1. Amount of interrupt activity: The number of interrupts occurring in a quantum is assumed to increase linearly with background load, reducing the effective length of the CPU quantum proportionately. We assume a loss of 10% of quantum size on a fully loaded system due to the interrupt activity caused by the background load. We assume that the load conditions are maintained at a constant level through the profiling run. 2. Loss in Cache context: The effett of context switching on cache context has been studied by Mogul et a1 [SI and others. We quantify the loss in cache context with increas'AU printf 0 statements are removed from the dhrystone code to eliminate terminal 110 3 0.4 TABLE I1 chi square measure showing variation on the measurements made by prof under varying loads I only cache effects only i n t e r r u p t s effects b o t h cache a n d interrupt effects I 1 I half 1.79 e6 full 8183 e6 half 5.48 e6 full 1.30 e8 half 2.21 e6 full 16.90 e6 I I 7.81 5.02 4.93 18.14 I 6.27 7.39 I css ks e6 e6 e6 e6 e6 e6 6.13 e5 8.85 e5 7.63 e5 1.61 e6 16.81 e5 1.92 e6 1 I 0.3 U 0 0.2 I TABLE 111 Chi square measure between the true histogram and simulation results for the prof interval of 10ms and the program dependent profiling frequency I I Application dhry css Igs Benchmark Name dhry CSS Igs Skew (in cycles) 1 Interval lams I CModified 4.50 e06 9.01 e05 5.00 e06 2.61 e05 2.30 e06 I 5.72 e06 1 No Load 0 0.0 10 11.2 e06 0.0 I 01 10 I 3.1 e06 I 0.0 1 0 ) 10 I 0.2 e06 I 0.1 I a Fig . 3 . Error in prof measurements with a sampling interval of 120,000 cycles 10% Loss Full Load 10% Loss 2.2 e06 3.1 e06 6.2 e06 6.7 e06 0.6 e06 0.6 e06 6.9 e06 7.3 e06 7.3 e06 7.3 e06 1.9 e06 1.9 e06 Half Load I I 1 I Profiling at this frequency should guarantee accurate results. However, for all three of our traces, the minimum number of cycles between subprogram changes is Zcycles, yielding an ideal sampling interval of 1 cycle, which is equivalent to single stepping through the program! If this is caused by a routine with a low execution count, we could use a larger profiling interval without much loss in accuracy. We arrive at such a practical sampling interval using the average number of cycles between subprogram changes, calculated as c A 4 o d i f zed = cAvg/'s where: C h f o d t f t e d is the modified profiling interval and is the Average number of cycles between subprogram changes. The modified sampling intervals were found to be 12 cycles for dhry, 53 cycles for css and 7s cycles for lgs. Simulations of profiling under these sampling intervals were then conducted. Since the new sampling interval is orders of magnitude smaller than the default value used earlier, we modified our simulation assumptions as follows: (i) We no longer assume that a context switch will occur at the end of every sampling interval. Under such an assumption, with a small sampling interval, many cycles will be wasted in undoing the effects of the context switch. We therefore assume that a contest switch occurs every 30 ms, the default CPU quantum for a process on our Unix system. (ii) We assume that the overhead associated with profiling is 50 cycles. Earlier, both the profiling handler and the context switch code were executed simultaneously, so it is possible that the profile interrupt handler was executing some extra code. Based on an analysis of the activity involved in profiling,'we estimate that a carefully hand coded profiling interrupt handler should be able execute in 50 cycles. trace under different load characterizations and prof measurements under no load conditions. We conclude that to improve the accuracy of measurements made by prof, it is necessary to use a sampling interval that is smaller than the default interval of 10ms. Further, the choice of profiling interval should be program dependent. CA, VI. MODIFICATION TO prof In this section we study the efficacy of of a program dependent profiling interval as a means of improving the accuracy of the measurements made by prof. Let us view the program in execution in terms of the variation in PC value with time. We assume that the program unit of interest is the subprogram, and represent each subprogram by it entry PC value. Using considerations similar to the Sampling Theorem, the ideal sampling interval would then be : Cldeal = cM,n/2 where C I d c a l is the ideal profiling interval and C A ~ ,is, the minimum number of cycles between whprogram changes. 4 Results with modified sampling interval Figure 4 compares the true histogram of css with the measurements made by prof under no load with the modified sampling interval. Unfortunately, the behaviour illustrated in this figures was shown only by the css benchmark. A comparison of the chi square measure for modified sampling interval against the fixed i n t e r d under no load conditions is shown in Table 111. While there is a significant reduction in the error for css, and a marginal reduction for dhry, the error for the lgs prograni actually increased with higher sampling frequency. A similar comparison of the variation in prof results with load (see Table V) indicates a drop in the variation in the case of lgs, but wide variation for css and dhry. We also studied the affect of initial skew in the variation in measurements, under different load conditions The chisquare measures for the three benchmarks are tabulated in Table VI. We conclude that the modified sampling interval is capable of capturing most events, but does not guarantee niore accurate measurements than those obtained with the default sampling interval. Hence, to guarantee accurate measurements using p r o f , we have no choice but to use the ideal sampling interval - even if it implies interrupting program execution every cycle, which amounts to single stepping through the execution of the program. 0.3 U . I 0 0.2 0.1 Zig. 4. True histogram VIS prof a t modified profiling interval Rb load results for css with TABLE VI Chi square measure showing variation in prof with load and skew using a program dependent profiling interval VII. CONCLUSION While program profilers like Unix prof are widely used as program tuning tools, their effectiveness in capturing actual program behaviour is not well understood. We have studied the interaction between the profiling process and the processor state for a typical RISC based Unix system. We conclude that PC sampling profilers which use a fixed sampling interval, like Unix p r o f , can not be relied on to produce accurate measurements. Although this makes them unsuitable as architecture analysis tools, if used with multiple profiling runs, they can provide useful information to programmers interested in identifying program bottlenecks. PC sample based profiling using program dependent sampling intervals maybe capable of providing accurate measurements, provided the ideal sampling interval is used. This carries a high time overhead with it; in the cases studied, accurate profiling would involve single stepping through program execution. A less restrictive sampling interval was also studied, but could not guarantee accurate measurements. REFERENCES [l] C.B. Stunkel, 3. Janssens, and W.K. Fuchs. Address Tracing for Parallel Machines. IEEE Computer, pp 31-38, Jan 1991. [2] Borland International Inc. Turbo Profirer Version 1.0 User's Guide, 1990. [3] P.J. Weinberger. Cheap Dynamic Instruction Counting. ATBT Bell Lab Tach J"a1, 63(8):1815-1826, Oct 1984. 141 C. Ponder and R. J. Eateman. Inaccuracies in Program ProNers. Softzoane - P w t i c e and Experience, 18(5):459[5] R.P. Weidker. An Overview of Common Benchmarks. ZEEE Computer, 1990. [GI M.D. Smith. Tracing with pixie. Tech Report CSL-TR91-497, Computer Systems Lab, Stanford University, Nov 1991. [7] G. Cybenko, L. Kipp, L. Pointer, and D. Kuck. Supercom- TABLE V chi square measure showing variation in results reported by prof with load using a program dependent sampling interval load characterization only cache effects load noload half load dhry . 0.0 227.5 uter Performance Evaluation and the Perfect Benchmarks. fn Proc Inntl Conf on Supercomputing, pp 254-266, 1990. [8] J.C. Mogul and A. Borg. The Effect of Context Switches on Cache Performaace. In PTOC4th ACM/IEEE Conf on Arch Su port fur Prog Lung and Operating Systems, pp 75-84, 19JL [9] S. Laha, J.H. Patel, and R.K. Iyer. Accurate Low Cost css Igs 0.0 0.0 301.0 440 0 Methods for Performance Evaluation of Cache Memory SysIEEE Trans Fomp, pp 1325-1336, Nov 1986. [lo] K. Y. Subramdam. Momtoring Program Dynamics: An Evaluation of Software ProNer Effectiveness. Master's thesis, Com uter Sdence and Automation, Indian Institute of Science, 1993. tems. interrupt effects I full load I 640.2 I 462.0 I 348.2 kov 5